
2. Up and down the level of abstraction

In this chapter, we'll travel up and down the level of abstraction, look at some impossibility results (CAP and FLP), and then travel back down for the sake of performance.

If you've done any programming, the idea of levels of abstraction is probably familiar to you. You'll always work at some level of abstraction, interface with a lower-level layer through some API, and probably provide some higher-level API or user interface to your users. The seven-layer OSI model of computer networking is a good example of this.

Distributed programming is, I'd assert, in large part dealing with the consequences of distribution (duh!). That is, there is a tension between the reality that there are many nodes and our desire for systems that "work like a single system". That means finding a good abstraction that balances what is possible with what is understandable and performant.

What do we mean when we say X is more abstract than Y? First, that X does not introduce anything new or fundamentally different from Y. In fact, X may remove some aspects of Y or present them in a way that makes them more manageable.

Second, that X is in some sense easier to grasp than Y, assuming that the things that X removed from Y are not important to the matter at hand.

As Nietzsche wrote:

Every concept originates through our equating what is unequal. No leaf ever wholly equals another, and the concept "leaf" is formed through an arbitrary abstraction from these individual differences, through forgetting the distinctions; and now it gives rise to the idea that in nature there might be something besides the leaves which would be "leaf" - some kind of original form after which all leaves have been woven, marked, copied, colored, curled, and painted, but by unskilled hands, so that no copy turned out to be a correct, reliable, and faithful image of the original form.

Abstractions, fundamentally, are fake. Every situation is unique, as is every node. But abstractions make the world manageable: simpler problem statements - free of reality - are much more analytically tractable, and provided that we did not ignore anything essential, the solutions are widely applicable.

Indeed, if the things that we kept around are essential, then the results we can derive will be widely applicable. This is why impossibility results are so important: they take the simplest possible formulation of a problem, and demonstrate that it is impossible to solve within some set of constraints or assumptions.

All abstractions ignore something in favor of equating things that are in reality unique. The trick is to get rid of everything that is not essential. How do you know what is essential? Well, you probably won't know a priori.

Every time we exclude some aspect of a system from our specification of the system, we risk introducing a source of error and/or a performance issue. That's why sometimes we need to go in the other direction, and selectively introduce some aspects of real hardware and the real-world problem back. It may be sufficient to reintroduce some specific hardware characteristics (e.g. physical sequentiality) or other physical characteristics to get a system that performs well enough.

With this in mind, what is the least amount of reality we can keep around while still working with something that is recognizable as a distributed system? A system model is a specification of the characteristics we consider important; having specified one, we can then take a look at some impossibility results and challenges.

A system model

A key property of distributed systems is distribution. More specifically, programs in a distributed system:

- run concurrently on independent nodes ...

- are connected by a network that may introduce nondeterminism and message loss ...

- and have no shared memory or shared clock.

There are many implications:

- each node executes a program concurrently

- knowledge is local: nodes have fast access only to their local state, and any information about global state is potentially out of date

- nodes can fail and recover from failure independently

- messages can be delayed or lost (independent of node failure; it is not easy to distinguish network failure and node failure)

- and clocks are not synchronized across nodes (local timestamps do not correspond to the global real time order, which cannot be easily observed)

A system model enumerates the many assumptions associated with a particular system design.

System model

a set of assumptions about the environment and facilities on which a distributed system is implemented

System models vary in their assumptions about the environment and facilities. These assumptions include:

- what capabilities the nodes have and how they may fail

- how communication links operate and how they may fail and

- properties of the overall system, such as assumptions about time and order

A robust system model is one that makes the weakest assumptions: any algorithm written for such a system is very tolerant of different environments, since it makes very few and very weak assumptions.

On the other hand, we can create a system model that is easy to reason about by making strong assumptions. For example, assuming that nodes do not fail means that our algorithm does not need to handle node failures. However, such a system model is unrealistic and hence hard to apply in practice.

Let's look at the properties of nodes, links and time and order in more detail.

Nodes in our system model

Nodes serve as hosts for computation and storage. They have:

- the ability to execute a program

- the ability to store data into volatile memory (which can be lost upon failure) and into stable state (which can be read after a failure)

- a clock (which may or may not be assumed to be accurate)

Nodes execute deterministic algorithms: the local computation, the local state after the computation, and the messages sent are determined uniquely by the message received and local state when the message was received.
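As a minimal sketch of what "deterministic" means here, a node can be modelled as a pure transition function from (local state, received message) to (new state, messages sent). The `State` type, the `step` function and the "ack" message format are hypothetical names chosen for illustration only; they are not part of any particular system.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class State:
    """Hypothetical local state: a running total of received integers."""
    counter: int = 0


def step(state: State, message: int) -> Tuple[State, List[str]]:
    """Pure transition: the new state and the outgoing messages depend
    only on the current local state and the message just received."""
    new_state = State(counter=state.counter + message)
    outgoing = [f"ack:{new_state.counter}"]
    return new_state, outgoing


def run(messages: List[int]) -> Tuple[State, List[str]]:
    """Feed a sequence of messages to a fresh node and collect what it sends."""
    state, sent = State(), []
    for message in messages:
        state, out = step(state, message)
        sent.extend(out)
    return state, sent


# Replaying the same messages in the same order yields the same result.
first = run([1, 2, 3])
second = run([1, 2, 3])
```

Because `step` has no hidden inputs (no clock reads, no randomness), two nodes that receive the same messages in the same order end up in the same state, which is the property that replication techniques discussed later rely on.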

There are many possible failure models which describe the ways in which nodes can fail. In practice, most systems assume a crash-recovery failure model: that is, nodes can only fail by crashing, and can (possibly) recover after crashing at some later point.

Another alternative is to assume that nodes can fail by misbehaving in any arbitrary way. This is known as Byzantine fault tolerance. Byzantine faults are rarely handled in real world commercial systems, because algorithms resilient to arbitrary faults are more expensive to run and more complex to implement. I will not discuss them here.

Communication links in our system model

Communication links connect individual nodes to each other, and allow messages to be sent in either direction. Many books that discuss distributed algorithms assume that there are individual links between each pair of nodes, that the links provide FIFO (first in, first out) order for messages, that they can only deliver messages that were sent, and that sent messages can be lost.
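The link assumptions above (FIFO order, only messages that were sent are delivered, sent messages can be lost) can be sketched as a small in-memory simulation. `LossyFifoLink`, its `loss_probability` parameter and the seeded random generator are assumptions made for this illustration, not a real networking API.

```python
import random
from collections import deque


class LossyFifoLink:
    """Hypothetical one-directional link: may drop messages, never
    reorders them, and never delivers something that was not sent."""

    def __init__(self, loss_probability: float, seed: int = 0):
        self._queue = deque()
        self._rng = random.Random(seed)  # seeded so runs are repeatable
        self._loss_probability = loss_probability

    def send(self, message):
        # A message is either dropped silently or appended in send order.
        if self._rng.random() >= self._loss_probability:
            self._queue.append(message)

    def receive(self):
        # Deliver the oldest surviving message, or None if nothing is pending.
        return self._queue.popleft() if self._queue else None


sent = list(range(10))
link = LossyFifoLink(loss_probability=0.3, seed=42)
for message in sent:
    link.send(message)

received = []
while (message := link.receive()) is not None:
    received.append(message)
```

Whatever subset survives, `received` is a subsequence of `sent`: some messages may be missing, but the order of the rest is preserved. Setting `loss_probability` to 0 gives the reliable network that some algorithms assume.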

Some algorithms assume that the network is reliable: that messages are never lost and never delayed indefinitely. This may be a reasonable assumption for some real-world settings, but in general it is preferable to consider the network to be unreliable and subject to message loss and delays.

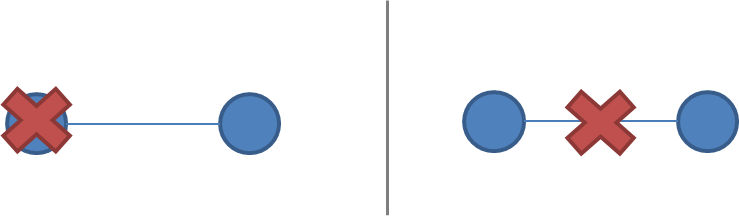

A network partition occurs when the network fails while the nodes themselves remain operational. When this occurs, messages may be lost or delayed until the network partition is repaired. Partitioned nodes may be accessible by some clients, and so must be treated differently from crashed nodes. The diagram below illustrates a node failure vs. a network partition:

It is rare to make further assumptions about communication links. We could assume that links only work in one direction, or we could introduce different communication costs (e.g. latency due to physical distance) for different links. However, these are rarely concerns in commercial environments except for long-distance links (WAN latency) and so I will not discuss them here; a more detailed model of costs and topology allows for better optimization at the cost of complexity.

Timing / ordering assumptions

One of the consequences of physical distribution is that each node experiences the world in a unique manner. This is inescapable, because information can travel no faster than the speed of light. If nodes are at different distances from each other, then any messages sent from one node to the others will arrive at a different time and potentially in a different order at the other nodes.

Timing assumptions are a convenient shorthand for capturing assumptions about the extent to which we take this reality into account. The two main alternatives are:

Synchronous system model

Processes execute in lock-step; there is a known upper bound on message transmission delay; each process has an accurate clock

Asynchronous system model

No timing assumptions - e.g. processes execute at independent rates; there is no bound on message transmission delay; useful clocks do not exist

The synchronous system model imposes many constraints on time and order. It essentially assumes that the nodes have the same experience: that messages that are sent are always received within a particular maximum transmission delay, and that processes execute in lock-step. This is convenient, because it allows you as the system designer to make assumptions about time and order, while the asynchronous system model doesn't.

Asynchronicity is a non-assumption: it just assumes that you can't rely on timing (or a "time sensor").

It is easier to solve problems in the synchronous system model: assumptions about execution speeds, maximum message transmission delays and clock accuracy let you make inferences and rule out inconvenient failure scenarios by assuming they never occur.
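One such inference is failure detection by timeout. The sketch below assumes a request/reply exchange, crash-only failures and, in the synchronous case, a known bound on one-way message delay and on processing time; `classify_silence` and its parameters are hypothetical names used only for illustration.

```python
from typing import Optional


def classify_silence(elapsed: float,
                     max_delay: Optional[float],
                     max_processing: float = 0.0) -> str:
    """Interpret the absence of a reply `elapsed` seconds after a request.

    max_delay is the assumed upper bound on one-way message delay;
    None models the asynchronous system model, where no bound exists.
    """
    if max_delay is None:
        # No bound: a slow node and a crashed node look identical.
        return "unknown"
    # Request delay + processing time + reply delay.
    deadline = 2 * max_delay + max_processing
    return "crashed" if elapsed > deadline else "waiting"


sync_verdict = classify_silence(elapsed=5.0, max_delay=1.0, max_processing=0.5)
async_verdict = classify_silence(elapsed=5.0, max_delay=None)
```

With the bounds in place, five seconds of silence is proof of a crash; without them, the same silence proves nothing, however long we wait.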

Of course, assuming the synchronous system model is not particularly realistic. Real-world networks are subject to failures and there are no hard bounds on message delay. Real world systems are at best partially synchronous: they may occasionally work correctly and provide some upper bounds, but there will be times where messages are delayed indefinitely and clocks are out of sync. I won't really discuss algorithms for synchronous systems here, but you will probably run into them in many other introductory books because they are analytically easier (but unrealistic).

The consensus problem

During the rest of this text, we'll vary the parameters of the system model. Next, we'll look at how varying two system properties:

- whether or not network partitions are included in the failure model, and

- synchronous vs. asynchronous timing assumptions

influences the system design choices by discussing two impossibility results (FLP and CAP).

Of course, in order to have a discussion, we also need to introduce a problem to solve. The problem I'm going to discuss is the consensus problem.

Several computers (or nodes) achieve consensus if they all agree on some value. More formally:

- Agreement: Every correct process must agree on the same value.

- Integrity: Every correct process decides at most one value, and if it decides some value, then it must have been proposed by some process.

- Termination: All processes eventually reach a decision.

- Validity: If all correct processes propose the same value V, then all correct processes decide V.
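To make the four properties concrete, here is a minimal sketch that checks them against the outcome of a single run. The representation is an assumption made for this example: `proposals` maps every process to the value it proposed, and `decisions` maps each correct (non-crashed) process to the value it decided, or `None` if it has not decided.

```python
from typing import Dict, Optional


def check_consensus(proposals: Dict[str, int],
                    decisions: Dict[str, Optional[int]]) -> Dict[str, bool]:
    """Check one finished run against the four consensus properties.

    The keys of `decisions` are taken to be the correct processes.
    """
    decided = [v for v in decisions.values() if v is not None]
    correct_proposals = {proposals[p] for p in decisions}
    return {
        # All correct processes that decided chose the same value.
        "agreement": len(set(decided)) <= 1,
        # Every decided value was proposed by some process ("at most one
        # decision per process" holds by construction of the dict).
        "integrity": all(v in proposals.values() for v in decided),
        # Every correct process has reached a decision.
        "termination": len(decided) == len(decisions),
        # If all correct processes proposed the same V, only V is decided.
        "validity": len(correct_proposals) != 1
                    or all(v in correct_proposals for v in decided),
    }


proposals = {"a": 1, "b": 1, "c": 2}
good_run = check_consensus(proposals, {"a": 1, "b": 1})  # "c" crashed
bad_run = check_consensus(proposals, {"a": 1, "b": 2})   # "a" and "b" disagree
```

In `bad_run`, agreement and validity fail while integrity and termination still hold, which shows that the properties are independent constraints rather than restatements of one another.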

The consensus problem is at the core of many commercial distributed systems. After all, we want the reliability and performance of a distributed system without having to deal with the consequences of distribution (e.g. disagreements / divergence between nodes), and solving the consensus problem makes it possible to solve several related, more advanced problems such as atomic broadcast and atomic commit.

Two impossibility results

The first impossibility result, known as the FLP impossibility result, is particularly relevant to people who design distributed algorithms. The second - the CAP theorem - is a related result that is more relevant to practitioners: people who need to choose between different system designs but who are not directly concerned with the design of algorithms.

The FLP impossibility result

I will only briefly summarize the FLP impossibility result, though it is considered to be more important in academic circles. The FLP impossibility result (named after the authors, Fischer, Lynch and Paterson) examines the consensus problem under the asynchronous system model (technically, the agreement problem, which is a very weak form of the consensus problem). It is assumed that nodes can only fail by crashing, that the network is reliable, and that the typical timing assumptions of the asynchronous system model hold: e.g. there are no bounds on message delay.

Under these assumptions, the FLP result states that "there does not exist a (deterministic) algorithm for the consensus problem in an asynchronous system subject to failures, even if messages can never be lost, at most one process may fail, and it can only fail by crashing (stopping executing)".

This result means that there is no way to solve the consensus problem under a very minimal system model in a way that cannot be delayed forever. The argument is that if such an algorithm existed, then one could devise an execution of that algorithm in which it would remain undecided ("bivalent") for an arbitrary amount of time by delaying message delivery - which is allowed in the asynchronous system model. Thus, such an algorithm cannot exist.

This impossibility result is important because it highlights that assuming the asynchronous system model leads to a tradeoff: algorithms that solve the consensus problem must either give up safety or liveness when the guarantees regarding bounds on message delivery do not hold.

This insight is particularly relevant to people who design algorithms, because it imposes a hard constraint on the problems that we know are solvable in the asynchronous system model. The CAP theorem is a related theorem that is more relevant to practitioners: it makes slightly different assumptions (network failures rather than node failures), and has clearer implications for practitioners choosing between system designs.

The CAP theorem

The CAP theorem was initially a conjecture made by computer scientist Eric Brewer. It's a popular and fairly useful way to think about tradeoffs in the guarantees that a system design makes. It even has a formal proof by Gilbert and Lynch and no, Nathan Marz didn't debunk it, in spite of what a particular discussion site thinks.

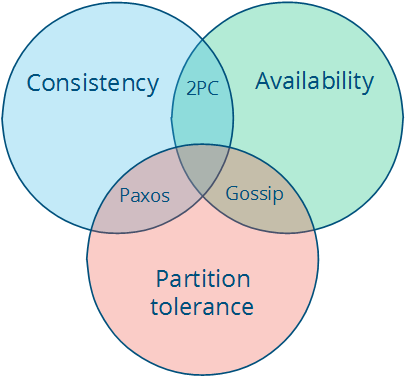

The theorem states that of these three properties:

- Consistency: all nodes see the same data at the same time.

- Availability: node failures do not prevent survivors from continuing to operate.

- Partition tolerance: the system continues to operate despite message loss due to network and/or node failure.

only two can be satisfied simultaneously. We can even draw this as a pretty diagram, in which picking two properties out of three gives us three types of systems that correspond to different intersections:

Note that the theorem states that the middle piece (having all three properties) is not achievable. Then we get three different system types:

- CA (consistency + availability). Examples include full strict quorum protocols, such as two-phase commit.

- CP (consistency + partition tolerance). Examples include majority quorum protocols in which minority partitions are unavailable, such as Paxos.

- AP (availability + partition tolerance). Examples include protocols using conflict resolution, such as Dynamo.

The CA and CP system designs both offer the same consistency model: strong consistency. The only difference is that a CA system cannot tolerate any node failures; a CP system can tolerate up to f faults given 2f+1 nodes in a non-Byzantine failure model (in other words, it can tolerate the failure of a minority f of the nodes as long as majority f+1 stays up). The reason is simple:

- A CA system does not distinguish between node failures and network failures, and hence must stop accepting writes everywhere to avoid introducing divergence (multiple copies). It cannot tell whether a remote node is down, or whether just the network connection is down: so the only safe thing is to stop accepting writes.

- A CP system prevents divergence (e.g. maintains single-copy consistency) by forcing asymmetric behavior on the two sides of the partition. It only keeps the majority partition around, and requires the minority partition to become unavailable (e.g. stop accepting writes), which retains a degree of availability (the majority partition) and still ensures single-copy consistency.
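The arithmetic behind "f faults given 2f+1 nodes" and the majority/minority asymmetry can be sketched in a few lines. The function names are hypothetical, and the model is deliberately simplified: a side of a partition may accept writes only if it can reach a strict majority of the cluster.

```python
def majority(n: int) -> int:
    """Smallest number of nodes that forms a strict majority of n."""
    return n // 2 + 1


def tolerated_faults(n: int) -> int:
    """Largest f such that n >= 2f + 1, i.e. a majority survives f failures."""
    return (n - 1) // 2


def can_accept_writes(reachable: int, n: int) -> bool:
    """A partition side stays available only if it holds a majority."""
    return reachable >= majority(n)


# Five nodes split 3 / 2: only the three-node side keeps accepting writes.
five_node_split = (can_accept_writes(3, 5), can_accept_writes(2, 5))

# Four nodes split 2 / 2: neither side has a majority, so both stop.
four_node_split = (can_accept_writes(2, 4), can_accept_writes(2, 4))
```

Since two disjoint sets of nodes cannot both be majorities, at most one side of any partition can remain writable, which is what prevents divergence. The even split also shows why adding a fourth node to a three-node cluster does not increase the number of tolerated faults.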

I'll discuss this in more detail in the chapter on replication when I discuss Paxos. The important thing is that CP systems incorporate network partitions into their failure model and distinguish between a majority partition and a minority partition using an algorithm like Paxos, Raft or viewstamped replication. CA systems are not partition-aware, and are historically more common: they often use the two-phase commit algorithm and are common in traditional distributed relational databases.

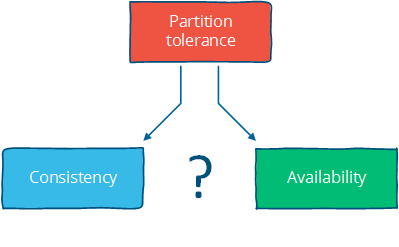

Assuming that a partition occurs, the theorem reduces to a binary choice between availability and consistency.

I think there are four conclusions that should be drawn from the CAP theorem:

First, that many system designs used in early distributed relational database systems did not take into account partition tolerance (e.g. they were CA designs). Partition tolerance is an important property for modern systems, since network partitions become much more likely if the system is geographically distributed (as many large systems are).

Second, that there is a tension between strong consistency and high availability during network partitions. The CAP theorem is an illustration of the tradeoffs that occur between strong guarantees and distributed computation.

In some sense, it is quite crazy to promise that a distributed system consisting of independent nodes connected by an unpredictable network "behaves in a way that is indistinguishable from a non-distributed system".

Strong consistency guarantees require us to give up availability during a partition. This is because one cannot prevent divergence between two replicas that cannot communicate with each other while continuing to accept writes on both sides of the partition.

How can we work around this? By strengthening the assumptions (assume no partitions) or by weakening the guarantees. Consistency can be traded off against availability (and the related capabilities of offline accessibility and low latency). If "consistency" is defined as something less than "all nodes see the same data at the same time" then we can have both availability and some (weaker) consistency guarantee.

Third, that there is a tension between strong consistency and performance in normal operation.

Strong consistency / single-copy consistency requires that nodes communicate and agree on every operation. This results in high latency during normal operation.

If you can live with a consistency model other than the classic one, a consistency model that allows replicas to lag or to diverge, then you can reduce latency during normal operation and maintain availability in the presence of partitions.

When fewer messages and fewer nodes are involved, an operation can complete faster. But the only way to accomplish that is to relax the guarantees: let some of the nodes be contacted less frequently, which means that nodes can contain old data.

This also makes it possible for anomalies to occur. You are no longer guaranteed to get the most recent value. Depending on what kinds of guarantees are made, you might read a value that is older than expected, or even lose some updates.

Fourth - and somewhat indirectly - that if we do not want to give up availability during a network partition, then we need to explore whether consistency models other than strong consistency are workable for our purposes.

For example, even if user data is georeplicated to multiple datacenters, and the link between those datacenters is temporarily out of order, in many cases we'll still want to allow the user to use the website / service. This means reconciling two divergent sets of data later on, which is both a technical challenge and a business risk. But often both the technical challenge and the business risk are manageable, and so it is preferable to provide high availability.

Consistency and availability are not really binary choices, unless you limit yourself to strong consistency. But strong consistency is just one consistency model: the one where you, by necessity, need to give up availability in order to prevent more than a single copy of the data from being active. As Brewer himself points out, the "2 out of 3" interpretation is misleading.

If you take away just one idea from this discussion, let it be this: "consistency" is not a singular, unambiguous property.

Instead, a consistency model is a guarantee - any guarantee - that a data store gives to programs that use it.

Consistency model

a contract between programmer and system, wherein the system guarantees that if the programmer follows some specific rules, the results of operations on the data store will be predictable

The "C" in CAP is "strong consistency", but "consistency" is not a synonym for "strong consistency".

Let's take a look at some alternative consistency models.

Strong consistency vs. other consistency models

Consistency models can be categorized into two types, strong and weak consistency models:

- Strong consistency models (capable of maintaining a single copy)

  - Linearizable consistency

  - Sequential consistency

- Weak consistency models (not strong)

  - Client-centric consistency models

  - Causal consistency: strongest model available

  - Eventual consistency models

Strong consistency models guarantee that the apparent order and visibility of updates is equivalent to a non-replicated system. Weak consistency models, on the other hand, do not make such guarantees.

Note that this is by no means an exhaustive list. Again, consistency models are just arbitrary contracts between the programmer and system, so they can be almost anything.

Strong consistency models

Strong consistency models can further be divided into two similar, but slightly different consistency models:

- Linearizable consistency: Under linearizable consistency, all operations appear to have executed atomically in an order that is consistent with the global real-time ordering of operations. (Herlihy & Wing, 1991)

- Sequential consistency: Under sequential consistency, all operations appear to have executed atomically in some order that is consistent with the order seen at individual nodes and that is equal at all nodes. (Lamport, 1979)

The key difference is that linearizable consistency requires that the order in which operations take effect is equal to the actual real-time ordering of operations. Sequential consistency allows for operations to be reordered as long as the order observed on each node remains consistent. The only way someone can distinguish between the two is if they can observe all the inputs and timings going into the system; from the perspective of a client interacting with a node, the two are equivalent.

The difference seems immaterial, but it is worth noting that sequential consistency does not compose.

Strong consistency models allow you as a programmer to replace a single server with a cluster of distributed nodes and not run into any problems.

All the other consistency models have anomalies (compared to a system that guarantees strong consistency), because they behave in a way that is distinguishable from a non-replicated system. But often these anomalies are acceptable, either because we don't care about occasional issues or because we've written code that deals with inconsistencies after they have occurred in some way.

Note that there really aren't any universal typologies for weak consistency models, because "not a strong consistency model" (e.g. "is distinguishable from a non-replicated system in some way") can be almost anything.

Client-centric consistency models

Client-centric consistency models are consistency models that involve the notion of a client or session in some way. For example, a client-centric consistency model might guarantee that a client will never see older versions of a data item. This is often implemented by building additional caching into the client library, so that if a client moves to a replica node that contains old data, then the client library returns its cached value rather than the old value from the replica.

Clients may still see older versions of the data, if the replica node they are on does not contain the latest version, but they will never see anomalies where an older version of a value resurfaces (e.g. because they connected to a different replica). Note that there are many kinds of consistency models that are client-centric.
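The caching approach described above can be sketched as follows. This is a hypothetical client library, not a real one: `Replica` and `SessionClient` are illustrative names, and the sketch assumes every value carries a version number that only ever increases.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Replica:
    """Hypothetical replica holding one data item at some version."""
    version: int
    value: str


class SessionClient:
    """Client library that never lets a session observe a value older
    than one it has already seen."""

    def __init__(self) -> None:
        self._cached: Optional[Tuple[int, str]] = None  # (version, value)

    def read(self, replica: Replica) -> str:
        fresh = (replica.version, replica.value)
        # Adopt the replica's copy only if it is at least as new as the
        # newest version this session has observed so far.
        if self._cached is None or fresh[0] >= self._cached[0]:
            self._cached = fresh
        return self._cached[1]


up_to_date = Replica(version=2, value="new")
stale = Replica(version=1, value="old")

client = SessionClient()
first_read = client.read(up_to_date)   # session observes version 2
second_read = client.read(stale)       # stale replica: cached value is returned

other_read = SessionClient().read(stale)  # a fresh session may still see "old"
```

The guarantee is per session: `other_read` shows that a different client starting on the stale replica still reads the old value, but no single session ever goes backwards.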

Eventual consistency

The eventual consistency model says that if you stop changing values, then after some undefined amount of time all replicas will agree on the same value. It is implied that before that time results between replicas are inconsistent in some undefined manner. Since it is trivially satisfiable (liveness property only), it is useless without supplemental information.

Saying something is merely eventually consistent is like saying "people are eventually dead". It's a very weak constraint, and we'd probably want to have at least some more specific characterization of two things:

First, how long is "eventually"? It would be useful to have a strict upper bound, or at least some idea of how long it typically takes for the system to converge to the same value.

Second, how do the replicas agree on a value? A system that always returns "42" is eventually consistent: all replicas agree on the same value. It just doesn't converge to a useful value since it just keeps returning the same fixed value. Instead, we'd like to have a better idea of the method. For example, one way to decide is to have the value with the largest timestamp always win.

So when vendors say "eventual consistency", what they mean is some more precise term, such as "eventually last-writer-wins, and read-the-latest-observed-value in the meantime" consistency. The "how?" matters, because a bad method can lead to writes being lost - for example, if the clock on one node is set incorrectly and timestamps are used.
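A minimal sketch of the "largest timestamp wins" rule shows how a misconfigured clock loses a write. The `lww_merge` function and the timestamps are hypothetical; each write is modelled as a `(timestamp, value)` pair, and ties are broken by comparing values so that the merge gives the same answer regardless of argument order.

```python
from typing import Tuple

Write = Tuple[int, str]  # (timestamp from the writer's local clock, value)


def lww_merge(a: Write, b: Write) -> Write:
    """Last-writer-wins: keep the write with the larger timestamp
    (tuple comparison falls back to the value on a timestamp tie)."""
    return max(a, b)


# Real time 1000: node A writes, but its clock runs one hour fast.
write_a: Write = (1000 + 3600, "from A")

# Real time 1010: node B writes later, with a correct clock.
write_b: Write = (1010, "from B")

winner = lww_merge(write_a, write_b)
```

Here `winner` is node A's write: the later update from node B is silently discarded because A's skewed clock produced the larger timestamp. Every replica converges to the same value, so the system is still eventually consistent, but it converged by losing a write.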

I will look into these two questions in more detail in the chapter on replication methods for weak consistency models.

Further reading

- Brewer's Conjecture and the Feasibility of Consistent, Available, Partition-Tolerant Web Services - Gilbert & Lynch, 2002

- Impossibility of distributed consensus with one faulty process - Fischer, Lynch and Paterson, 1985

- Perspectives on the CAP Theorem - Gilbert & Lynch, 2012

- CAP Twelve Years Later: How the "Rules" Have Changed - Brewer, 2012

- Uniform consensus is harder than consensus - Charron-Bost & Schiper, 2000

- Replicated Data Consistency Explained Through Baseball - Terry, 2011

- Life Beyond Distributed Transactions: an Apostate's Opinion - Helland, 2007

- If you have too much data, then 'good enough' is good enough - Helland, 2011

- Building on Quicksand - Helland & Campbell, 2009
