Reducing and Profiling GPU Memory Usage in Keras with TensorFlow Backend

Adaptive GPU memory allocation in keras & freeing GPU memory by cleaning up unused variables

Intro

Are you running out of GPU memory when using keras or tensorflow deep learning models, but only some of the time?

Are you curious about exactly how much GPU memory your tensorflow model uses during training?

Are you wondering if you can run two or more keras models on your GPU at the same time?

Background

By default, tensorflow pre-allocates nearly all of the available GPU memory, which is bad for a variety of use cases, especially production and memory profiling.

When keras uses tensorflow for its back-end, it inherits this behavior.

Setting tensorflow GPU memory options

For new models

Thankfully, tensorflow allows you to change how it allocates GPU memory, and to set a limit on how much GPU memory it is allowed to allocate.

Let’s set GPU options on keras’s example "Sequence classification with LSTM" network:

## keras example imports
from keras.models import Sequential
from keras.layers import Dense, Dropout
from keras.layers import Embedding
from keras.layers import LSTM

## extra imports to set GPU options
import tensorflow as tf
from keras import backend as k

###################################
# TensorFlow wizardry
# (TensorFlow 1.x API; under TensorFlow 2.x, ConfigProto and Session live in tf.compat.v1)
config = tf.ConfigProto()

# Don't pre-allocate memory; allocate as-needed
config.gpu_options.allow_growth = True

# Only allow a total of half the GPU memory to be allocated
config.gpu_options.per_process_gpu_memory_fraction = 0.5

# Create a session with the above options specified.
k.tensorflow_backend.set_session(tf.Session(config=config))
###################################

# max_features, x_train, y_train, x_test and y_test come from the keras example
# and must be defined before this point
model = Sequential()
model.add(Embedding(max_features, output_dim=256))
model.add(LSTM(128))
model.add(Dropout(0.5))
model.add(Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy',
              optimizer='rmsprop',
              metrics=['accuracy'])

model.fit(x_train, y_train, batch_size=16, epochs=10)
score = model.evaluate(x_test, y_test, batch_size=16)

After the above, when we create the sequence classification model, it won’t use half the GPU memory automatically, but rather will allocate GPU memory as-needed during the calls to model.fit() and model.evaluate().

Additionally, with the per_process_gpu_memory_fraction = 0.5, tensorflow will only allocate a total of half the available GPU memory.

If it tries to allocate more than half of the total GPU memory, tensorflow will throw a ResourceExhaustedError, and you’ll get a lengthy stack trace.
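One way to cope with that error is to retry with a smaller batch. The following is only a minimal sketch: `fit_with_backoff`, `fake_fit` and `FakeOOM` are hypothetical names invented for illustration, and the exception class is passed in as a parameter so the sketch runs without a GPU. In real use you would presumably pass `tf.errors.ResourceExhaustedError` and a function that calls model.fit().

```python
def fit_with_backoff(fit_fn, batch_size, oom_error, min_batch_size=1):
    """Call fit_fn(batch_size), halving batch_size each time oom_error is raised.

    fit_fn and this helper are hypothetical names used for illustration.
    Returns the batch size that finally succeeded.
    """
    while True:
        try:
            fit_fn(batch_size)
            return batch_size
        except oom_error:
            if batch_size <= min_batch_size:
                raise  # nothing smaller left to try
            batch_size = max(min_batch_size, batch_size // 2)


class FakeOOM(Exception):
    """Stand-in for tf.errors.ResourceExhaustedError so the demo needs no GPU."""


def fake_fit(batch_size):
    # Pretend that anything above 16 samples per batch exhausts GPU memory.
    if batch_size > 16:
        raise FakeOOM("out of memory")


print(fit_with_backoff(fake_fit, batch_size=64, oom_error=FakeOOM))
```

With keras, fit_fn could be something like `lambda bs: model.fit(x_train, y_train, batch_size=bs, epochs=10)`. Whether a retry in the same process is reliable after a real out-of-memory error depends on your setup, so treat this as a starting point.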

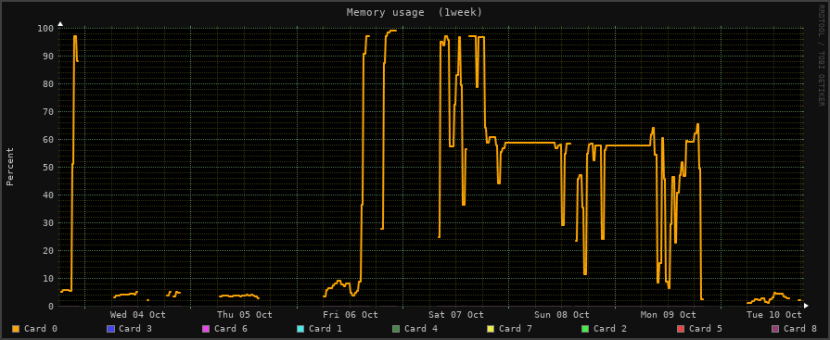

If you have a Linux machine and an nvidia card, you can watch nvidia-smi to see how much GPU memory is in use, or can configure a monitoring tool like monitorix to generate graphs for you.

GPU memory usage, as shown in Monitorix for Linux
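If you would rather log the numbers than watch them, nvidia-smi can print them as CSV through its `--query-gpu` and `--format` options. Below is a rough sketch of reading that output from Python; `parse_gpu_memory` and `query_gpu_memory` are hypothetical helper names, the sample values are made up, and the figures are in MiB.

```python
import subprocess

QUERY = ["nvidia-smi",
         "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"]


def parse_gpu_memory(csv_text):
    """Turn lines like '1523, 11178' into [(used_mib, total_mib), ...], one per GPU."""
    readings = []
    for line in csv_text.strip().splitlines():
        used, total = (int(field) for field in line.split(","))
        readings.append((used, total))
    return readings


def query_gpu_memory():
    """Run nvidia-smi and parse its output (requires an nvidia driver install)."""
    output = subprocess.check_output(QUERY).decode("utf-8")
    return parse_gpu_memory(output)


# Parsing a made-up two-GPU sample, so this part runs without a GPU:
sample = "1523, 11178\n0, 11178\n"
print(parse_gpu_memory(sample))
```

Calling `query_gpu_memory()` in a loop while model.fit() runs in another process gives a simple usage log.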

For a model that you’re loading

We can even set GPU memory management options for a model that’s already created and trained, and that we’re loading from disk for deployment or for further training.

For that, let’s tweak keras’s load_model example:

# keras example imports
from keras.models import load_model

## extra imports to set GPU options
import tensorflow as tf
from keras import backend as k

###################################
# TensorFlow wizardry
config = tf.ConfigProto()

# Don't pre-allocate memory; allocate as-needed
config.gpu_options.allow_growth = True

# Only allow a total of half the GPU memory to be allocated
config.gpu_options.per_process_gpu_memory_fraction = 0.5

# Create a session with the above options specified.
k.tensorflow_backend.set_session(tf.Session(config=config))
###################################

# returns a compiled model
# identical to the previous one
model = load_model('my_model.h5')

# TODO: classify all the things

Now, with your loaded model, you can open your favorite GPU monitoring tool and watch how the GPU memory usage changes under different loads.
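Once you have a measured peak, you can turn it into a value for per_process_gpu_memory_fraction and estimate how many copies of the model would fit on one card. This is a back-of-the-envelope sketch: `memory_budget` is a hypothetical helper, the 25% headroom is an arbitrary assumption, and it assumes the fraction is taken relative to the card's total memory.

```python
import math


def memory_budget(peak_mib, total_mib, headroom=0.25):
    """Return (fraction, copies) for a model whose measured peak is peak_mib.

    fraction: candidate value for per_process_gpu_memory_fraction
    copies:   how many such processes fit on a GPU with total_mib of memory
    """
    budget_mib = math.ceil(peak_mib * (1.0 + headroom))
    fraction = min(1.0, budget_mib / total_mib)
    copies = total_mib // budget_mib
    return fraction, copies


# A model that peaks at 3000 MiB on a 12000 MiB card:
fraction, copies = memory_budget(peak_mib=3000, total_mib=12000)
print(fraction, copies)
```

The returned fraction is what you would assign to `config.gpu_options.per_process_gpu_memory_fraction` in each process.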

Conclusion

Good news everyone! That sweet deep learning model you just made doesn’t actually need all that memory it usually claims!

And, now that you can tell tensorflow not to pre-allocate memory, you can get a much better idea of what kind of rig(s) you need in order to deploy your model into production.

Is this how you’re handling GPU memory management issues with tensorflow or keras?

Did I miss a better, cleaner way of handling GPU memory allocation with tensorflow and keras?

Let me know in the comments!

How to remove stale models from GPU memory

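import gc
from keras import backend as K
from keras.models import Model, load_model

# tmp_model_name: path of a temporary file that carries the model across the reset
m = Model(.....)
m.save(tmp_model_name)

# Drop the Python reference, reset the keras/TensorFlow session, then collect garbage
del m
K.clear_session()
gc.collect()

# Reload the saved model into the fresh session
m = load_model(tmp_model_name)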
