Course 4 (Convolutional Neural Networks), Week 4 (Special applications: Face recognition & Neural style transfer) - 3. Programming assignments: Face Recognition for the Happy House

Face Recognition for the Happy House

Welcome to the first assignment of week 4! Here you will build a face recognition system. Many of the ideas presented here are from FaceNet. In lecture, we also talked about DeepFace.

Face recognition problems commonly fall into two categories:

- Face Verification - "is this the claimed person?". For example, at some airports, you can pass through customs by letting a system scan your passport and then verifying that you (the person carrying the passport) are the correct person. A mobile phone that unlocks using your face is also using face verification. This is a 1:1 matching problem.

- Face Recognition - "who is this person?". For example, the video lecture showed a face recognition video (https://www.youtube.com/watch?v=wr4rx0Spihs) of Baidu employees entering the office without needing to otherwise identify themselves. This is a 1:K matching problem.

FaceNet learns a neural network that encodes a face image into a vector of 128 numbers. By comparing two such vectors, you can then determine if two pictures are of the same person.

In this assignment, you will:

- Implement the triplet loss function

- Use a pretrained model to map face images into 128-dimensional encodings

- Use these encodings to perform face verification and face recognition

In this exercise, we will be using a pre-trained model which represents ConvNet activations using a "channels first" convention, as opposed to the "channels last" convention used in lecture and previous programming assignments. In other words, a batch of images will be of shape $(m, n_C, n_H, n_W)$ instead of $(m, n_H, n_W, n_C)$. Both of these conventions have a reasonable amount of traction among open-source implementations; there isn't a uniform standard yet within the deep learning community.
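Moving between the two layouts only permutes axes. Here is a minimal sketch that assumes the images are held in a NumPy array. The batch is random data, not real faces, and the helper name to_channels_first is hypothetical. It converts a channels-last batch into the channels-first layout this model expects:

```python
import numpy as np

def to_channels_first(batch):
    """Convert a batch from (m, n_H, n_W, n_C) to (m, n_C, n_H, n_W)."""
    # Keep the batch axis first and move the channel axis (last) to position 1.
    return np.transpose(batch, (0, 3, 1, 2))

# Hypothetical batch of 2 RGB images, 96x96 pixels, channels last.
batch_last = np.random.rand(2, 96, 96, 3)
batch_first = to_channels_first(batch_last)
print(batch_first.shape)  # (2, 3, 96, 96)
```

np.moveaxis(batch, -1, 1) gives the same result and may read more clearly when only one axis moves.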


Let's load the required packages.

【code】

# Written for Keras 2.x on a TensorFlow 1.x backend (the code below uses tf.Session).
from keras.models import Sequential
from keras.layers import Conv2D, ZeroPadding2D, Activation, Input, concatenate
from keras.models import Model
from keras.layers.normalization import BatchNormalization
from keras.layers.pooling import MaxPooling2D, AveragePooling2D
from keras.layers.merge import Concatenate
from keras.layers.core import Lambda, Flatten, Dense
from keras.initializers import glorot_uniform
from keras.engine.topology import Layer
from keras import backend as K
K.set_image_data_format('channels_first')
import cv2
import os
import sys
import numpy as np
from numpy import genfromtxt
import pandas as pd
import tensorflow as tf
from fr_utils import *
from inception_blocks_v2 import *

%matplotlib inline
%load_ext autoreload
%autoreload 2

# Print arrays in full; newer NumPy versions raise an error for threshold=np.nan.
np.set_printoptions(threshold=sys.maxsize)

0 - Naive Face Verification

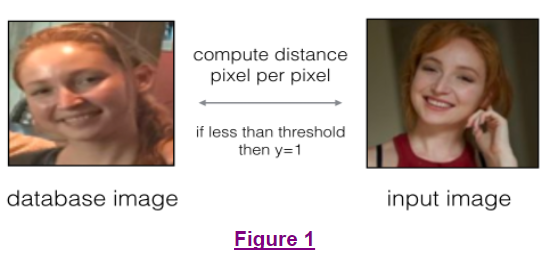

In Face Verification, you're given two images and you have to tell if they are of the same person. The simplest way to do this is to compare the two images pixel-by-pixel. If the distance between the raw images is less than a chosen threshold, it may be the same person!

Of course, this algorithm performs really poorly, since the pixel values change dramatically due to variations in lighting, orientation of the person's face, even minor changes in head position, and so on.
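The sketch below shows the naive pixel-by-pixel comparison and how it breaks down. The "photo" is random data, the helper naive_verify is hypothetical, and the threshold of 1000 is arbitrary. Brightening the same image by a constant 40 is enough to produce a large raw distance, so the check rejects what is really the same face:

```python
import numpy as np

def naive_verify(img1, img2, threshold):
    """Pixel-by-pixel check: same person if the raw L2 distance is below threshold."""
    # Cast to float so uint8 subtraction cannot wrap around.
    dist = np.linalg.norm(img1.astype(np.float64) - img2.astype(np.float64))
    return dist, dist < threshold

rng = np.random.default_rng(0)
face = rng.integers(0, 200, size=(96, 96, 3), dtype=np.uint8)  # stand-in "photo"
brighter = face + 40  # same "face" under brighter lighting (max 239, no overflow)

dist, same = naive_verify(face, brighter, threshold=1000.0)
print(dist, same)  # distance is 40 * sqrt(96 * 96 * 3), about 6651, so same is False
```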

You'll see that rather than using the raw image, you can learn an encoding f(img) so that element-wise comparisons of this encoding give more accurate judgements as to whether two pictures are of the same person.


1 - Encoding face images into a 128-dimensional vector

1.1 - Using a ConvNet to compute encodings

The FaceNet model takes a lot of data and a long time to train. So, following common practice in applied deep learning, let's just load weights that someone else has already trained. The network architecture follows the Inception model from Szegedy et al. We have provided an inception network implementation. You can look in the file inception_blocks_v2.py to see how it is implemented (do so by going to "File->Open..." at the top of the Jupyter notebook).

The key things you need to know are:

- This network uses 96x96 RGB images as its input. Specifically, it takes a face image (or a batch of m face images) as a tensor of shape $(m, n_C, n_H, n_W) = (m, 3, 96, 96)$.

- It outputs a matrix of shape (m,128) that encodes each input face image into a 128-dimensional vector
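Here is a sketch of how one image could be turned into the (1, 3, 96, 96) input described above. It rests on stated assumptions, not on the contents of fr_utils. The image is assumed to be already cropped to 96x96. It is also assumed to have been read with OpenCV (BGR channel order). Finally, the model is assumed to expect RGB values scaled to [0, 1]. The helper name preprocess_face is hypothetical:

```python
import numpy as np

def preprocess_face(img_bgr):
    """Turn one 96x96x3 BGR uint8 image into a (1, 3, 96, 96) float32 batch.

    Assumptions (not taken from fr_utils): the image is already 96x96,
    was read with OpenCV (BGR channel order), and the model wants RGB in [0, 1].
    """
    if img_bgr.shape != (96, 96, 3):
        raise ValueError("expected a 96x96x3 image; resize or crop first")
    img_rgb = img_bgr[..., ::-1]                  # BGR -> RGB (reverse the channel axis)
    img_chw = np.transpose(img_rgb, (2, 0, 1))    # (H, W, C) -> (C, H, W)
    img_chw = img_chw.astype(np.float32) / 255.0  # scale pixel values to [0, 1]
    return img_chw[np.newaxis, ...]               # add batch axis -> (1, 3, 96, 96)

x = preprocess_face(np.zeros((96, 96, 3), dtype=np.uint8))
print(x.shape)  # (1, 3, 96, 96)
```

An array like x could then be passed to FRmodel.predict(x), which, per the description above, would return a (1, 128) encoding.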


Run the cell below to create the model for face images.

【code】

FRmodel = faceRecoModel(input_shape=(3, 96, 96))

print("Total Params:", FRmodel.count_params())

【result】

Total Params: 3743280

Expected Output

Total Params: 3743280

By using a 128-neuron fully connected layer as its last layer, the model ensures that the output is an encoding vector of size 128. You then use the encodings to compare two face images as follows:

By computing a distance between two encodings and thresholding, you can determine if the two pictures represent the same person.

So, an encoding is a good one if:

- The encodings of two images of the same person are quite similar to each other

- The encodings of two images of different persons are very different

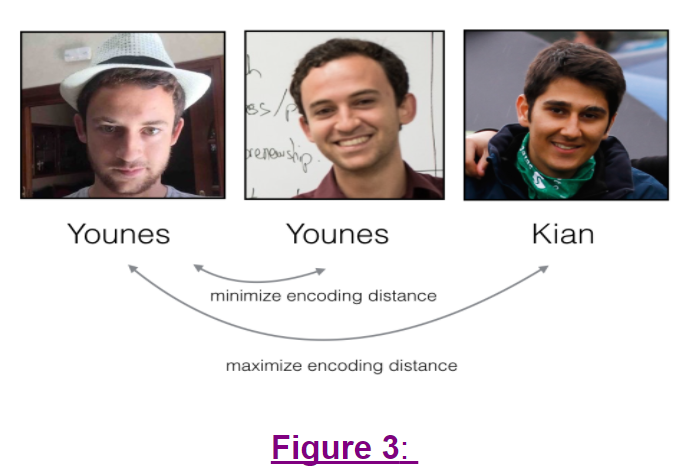

The triplet loss function formalizes this, and tries to "push" the encodings of two images of the same person (Anchor and Positive) closer together, while "pulling" the encodings of two images of different persons (Anchor, Negative) further apart.

In the next part, we will call the pictures from left to right: Anchor (A), Positive (P), Negative (N).

1.2 - The Triplet Loss

For an image x, we denote its encoding $f(x)$, where $f$ is the function computed by the neural network.

Training will use triplets of images (A,P,N):

- A is an "Anchor" image--a picture of a person.

- P is a "Positive" image--a picture of the same person as the Anchor image.

- N is a "Negative" image--a picture of a different person than the Anchor image.

These triplets are picked from our training dataset. We will write $(A^{(i)}, P^{(i)}, N^{(i)})$ to denote the i-th training example.

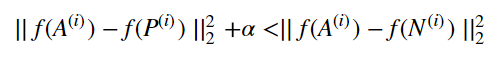

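You'd like to make sure that an image $A^{(i)}$ of an individual is closer to the Positive $P^{(i)}$ than to the Negative image $N^{(i)}$ by at least a margin $\alpha$:

$$\| f(A^{(i)}) - f(P^{(i)}) \|_2^2 + \alpha < \| f(A^{(i)}) - f(N^{(i)}) \|_2^2$$

You would thus like to minimize the following "triplet cost":

$$\mathcal{J} = \sum_{i=1}^{m} \Big[ \underbrace{\| f(A^{(i)}) - f(P^{(i)}) \|_2^2}_{(1)} - \underbrace{\| f(A^{(i)}) - f(N^{(i)}) \|_2^2}_{(2)} + \alpha \Big]_+ \tag{3}$$

Here, we are using the notation $[z]_+$ to denote $\max(z, 0)$.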

Notes:

- The term (1) is the squared distance between the anchor "A" and the positive "P" for a given triplet; you want this to be small.

- The term (2) is the squared distance between the anchor "A" and the negative "N" for a given triplet; you want this to be relatively large, so it makes sense to have a minus sign preceding it.

- α is called the margin. It is a hyperparameter that you should pick manually. We will use α=0.2.
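Before writing the TensorFlow version, it can help to compute the triplet cost described above on a few numbers. The following NumPy sketch is an illustration only. The helper name triplet_cost and the 2-D toy "encodings" are made up:

```python
import numpy as np

def triplet_cost(anchor, positive, negative, alpha=0.2):
    """Sum over examples of max(||A - P||^2 - ||A - N||^2 + alpha, 0).

    anchor, positive, negative: arrays of shape (m, d), one encoding per row.
    """
    pos_dist = np.sum((anchor - positive) ** 2, axis=-1)  # term (1), shape (m,)
    neg_dist = np.sum((anchor - negative) ** 2, axis=-1)  # term (2), shape (m,)
    return np.sum(np.maximum(pos_dist - neg_dist + alpha, 0.0))

# Toy 2-D "encodings" for m = 2 triplets.
A = np.array([[0.0, 0.0], [0.0, 0.0]])
P = np.array([[0.1, 0.0], [0.5, 0.0]])
N = np.array([[1.0, 0.0], [0.6, 0.0]])
print(triplet_cost(A, P, N))
```

The first triplet already satisfies the margin (0.01 + 0.2 < 1.0), so it contributes 0. The second triplet violates it (0.25 + 0.2 > 0.36) and contributes about 0.09. Only triplets that violate the margin produce loss.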

Most implementations also normalize the encoding vectors to have norm equal to one (i.e., $\| f(img) \|_2 = 1$); you won't have to worry about that here.
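For reference, normalizing encodings to unit norm is a one-line rescaling. Here is a NumPy sketch. The helper name l2_normalize and the small eps guard are illustrative choices:

```python
import numpy as np

def l2_normalize(encodings, eps=1e-10):
    """Scale each row of an (m, d) array to unit L2 norm."""
    norms = np.linalg.norm(encodings, axis=-1, keepdims=True)
    # eps avoids dividing by zero for an all-zero row (which stays all zero).
    return encodings / np.maximum(norms, eps)

enc = np.array([[3.0, 4.0], [0.0, 2.0]])
out = l2_normalize(enc)  # rows become [0.6, 0.8] and [0.0, 1.0]
```

With unit-norm encodings, the squared L2 distance between two encodings equals 2 minus twice their dot product. This keeps distances within [0, 4]. Inside a Keras model, the same step is often written as a Lambda layer around K.l2_normalize.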

Useful functions: tf.reduce_sum(), tf.square(), tf.subtract(), tf.add(), tf.maximum(). For steps 1 and 2, you will need to sum over the entries of $\| f(A^{(i)}) - f(P^{(i)}) \|_2^2$ and $\| f(A^{(i)}) - f(N^{(i)}) \|_2^2$, while for step 4 you will need to sum over the training examples.

# GRADED FUNCTION: triplet_loss

def triplet_loss(y_true, y_pred, alpha = 0.2):
    """
    Implementation of the triplet loss as defined by formula (3)

    Arguments:
    y_true -- true labels, required when you define a loss in Keras, you don't need it in this function.
    y_pred -- python list containing three objects:
            anchor -- the encodings for the anchor images, of shape (None, 128)
            positive -- the encodings for the positive images, of shape (None, 128)
            negative -- the encodings for the negative images, of shape (None, 128)

    Returns:
    loss -- real number, value of the loss
    """

    anchor, positive, negative = y_pred[0], y_pred[1], y_pred[2]

    ### START CODE HERE ### (≈ 4 lines)
    # Step 1: Compute the (encoding) distance between the anchor and the positive, you will need to sum over axis=-1
    pos_dist = tf.reduce_sum(tf.square(tf.subtract(anchor, positive)), axis=-1)
    # Step 2: Compute the (encoding) distance between the anchor and the negative, you will need to sum over axis=-1
    neg_dist = tf.reduce_sum(tf.square(tf.subtract(anchor, negative)), axis=-1)
    # Step 3: subtract the two previous distances and add alpha.
    basic_loss = tf.add(tf.subtract(pos_dist, neg_dist), alpha)
    # Step 4: Take the maximum of basic_loss and 0.0. Sum over the training examples.
    loss = tf.reduce_sum(tf.maximum(basic_loss, 0.0))
    ### END CODE HERE ###

    return loss

with tf.Session() as test:
    tf.set_random_seed(1)
    y_true = (None, None, None)
    y_pred = (tf.random_normal([3, 128], mean=6, stddev=0.1, seed = 1),
              tf.random_normal([3, 128], mean=1, stddev=1, seed = 1),
              tf.random_normal([3, 128], mean=3, stddev=4, seed = 1))
    loss = triplet_loss(y_true, y_pred)

    print("loss = " + str(loss.eval()))

【result】

loss = 528.143

Expected Output:

| loss | 528.143 |

2 - Loading the trained model

FaceNet is trained by minimizing the triplet loss. But since training requires a lot of data and a lot of computation, we won't train it from scratch here. Instead, we load a previously trained model. Load a model using the following cell; this might take a couple of minutes to run.

【code】

FRmodel.compile(optimizer = 'adam', loss = triplet_loss, metrics = ['accuracy'])

load_weights_from_FaceNet(FRmodel)

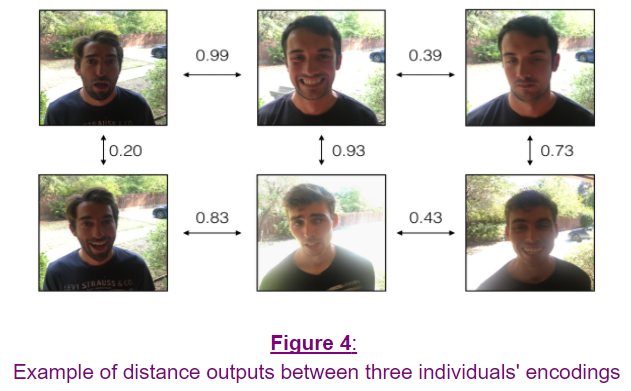

Here're some examples of distances between the encodings between three individuals:

3 - Applying the model

Back to the Happy House! Residents are living blissfully since you implemented happiness recognition for the house in an earlier assignment.

However, several issues keep coming up: The Happy House became so happy that every happy person in the neighborhood is coming to hang out in your living room. It is getting really crowded, which is having a negative impact on the residents of the house. All these random happy people are also eating all your food.

So, you decide to change the door entry policy, and not just let random happy people enter anymore, even if they are happy! Instead, you'd like to build a Face verification system so as to only let people from a specified list come in. To get admitted, each person has to swipe an ID card (identification card) to identify themselves at the door. The face recognition system then checks that they are who they claim to be.

3.1 - Face Verification

Let's build a database containing one encoding vector for each person allowed to enter the Happy House. To generate the encoding, we use img_to_encoding(image_path, model), which runs the forward propagation of the model on the specified image.

Run the following code to build the database (represented as a python dictionary). This database maps each person's name to a 128-dimensional encoding of their face.

【code】

database = {}

database["danielle"] = img_to_encoding("images/danielle.png", FRmodel)

database["younes"] = img_to_encoding("images/younes.jpg", FRmodel)

database["tian"] = img_to_encoding("images/tian.jpg", FRmodel)

database["andrew"] = img_to_encoding("images/andrew.jpg", FRmodel)

database["kian"] = img_to_encoding("images/kian.jpg", FRmodel)

database["dan"] = img_to_encoding("images/dan.jpg", FRmodel)

database["sebastiano"] = img_to_encoding("images/sebastiano.jpg", FRmodel)

database["bertrand"] = img_to_encoding("images/bertrand.jpg", FRmodel)

database["kevin"] = img_to_encoding("images/kevin.jpg", FRmodel)

database["felix"] = img_to_encoding("images/felix.jpg", FRmodel)

database["benoit"] = img_to_encoding("images/benoit.jpg", FRmodel)

database["arnaud"] = img_to_encoding("images/arnaud.jpg", FRmodel)
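The twelve img_to_encoding calls above all follow one pattern. A loop can build the same kind of dictionary from a list of paths. Here is a sketch, assuming each person's name is the file name without its extension. The helpers build_database and fake_encode are hypothetical. fake_encode is a stand-in encoder that returns a random (1, 128) array, so the example runs without the model:

```python
import os
import numpy as np

def build_database(image_paths, encode):
    """Map each person's name (file name without extension) to an encoding.

    encode is any callable path -> encoding; in the notebook it could be
    lambda p: img_to_encoding(p, FRmodel).
    """
    database = {}
    for path in image_paths:
        name = os.path.splitext(os.path.basename(path))[0]  # "images/tian.jpg" -> "tian"
        database[name] = encode(path)
    return database

def fake_encode(path):
    """Stand-in encoder for illustration only: a random (1, 128) vector per path."""
    return np.random.default_rng(len(path)).standard_normal((1, 128))

db = build_database(["images/danielle.png", "images/tian.jpg"], fake_encode)
print(sorted(db))  # ['danielle', 'tian']
```

Passing the encoder in as a parameter keeps the loop independent of the model, so it can be tested without loading any weights.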

Now, when someone shows up at your front door and swipes their ID card (thus giving you their name), you can look up their encoding in the database, and use it to check if the person standing at the front door matches the name on the ID.


Exercise: Implement the verify() function which checks if the front-door camera picture (image_path) is actually the person called "identity". You will have to go through the following steps:

- Compute the encoding of the image from image_path

- Compute the distance between this encoding and the encoding of the identity image stored in the database

- Open the door if the distance is less than 0.7, else do not open.

As presented above, you should use the L2 distance (np.linalg.norm). (Note: In this implementation, compare the L2 distance, not the square of the L2 distance, to the threshold 0.7.)


【code】

# GRADED FUNCTION: verify

def verify(image_path, identity, database, model):
    """
    Function that verifies if the person on the "image_path" image is "identity".

    Arguments:
    image_path -- path to an image
    identity -- string, name of the person whose identity you'd like to verify. Has to be a resident of the Happy House.
    database -- python dictionary mapping allowed people's names (strings) to their encodings (vectors).
    model -- your Inception model instance in Keras

    Returns:
    dist -- distance between the image_path and the image of "identity" in the database.
    door_open -- True, if the door should open. False otherwise.
    """

    ### START CODE HERE ###
    # Step 1: Compute the encoding for the image. Use img_to_encoding() see example above. (≈ 1 line)
    encoding = img_to_encoding(image_path, model)

    # Step 2: Compute distance with identity's image (≈ 1 line)
    dist = np.linalg.norm(encoding - database[identity])

    # Step 3: Open the door if dist < 0.7, else don't open (≈ 3 lines)
    if dist < 0.7:
        print("It's " + str(identity) + ", welcome home!")
        door_open = True
    else:
        print("It's not " + str(identity) + ", please go away")
        door_open = False
    ### END CODE HERE ###

    return dist, door_open


Younes is trying to enter the Happy House and the camera takes a picture of him ("images/camera_0.jpg"). Let's run your verification algorithm on this picture:

【code】

verify("images/camera_0.jpg", "younes", database, FRmodel)

【result】

It's younes, welcome home!

(0.65939283, True)

Expected Output:

| It's younes, welcome home! | (0.65939283, True) |

Benoit, who broke the aquarium last weekend, has been banned from the house and removed from the database. He stole Kian's ID card and came back to the house to try to present himself as Kian. The front-door camera took a picture of Benoit ("images/camera_2.jpg"). Let's run the verification algorithm to check if Benoit can enter.

【code】

verify("images/camera_2.jpg", "kian", database, FRmodel)

【result】

It's not kian, please go away


(0.86224014, False)

Expected Output:

| It's not kian, please go away | (0.86224014, False) |

3.2 - Face Recognition

Your face verification system is mostly working well. But since Kian got his ID card stolen, when he came back to the house that evening he couldn't get in!

To reduce such shenanigans, you'd like to change your face verification system to a face recognition system. This way, no one has to carry an ID card anymore. An authorized person can just walk up to the house, and the front door will unlock for them!

You'll implement a face recognition system that takes as input an image, and figures out if it is one of the authorized persons (and if so, who). Unlike the previous face verification system, we will no longer get a person's name as another input.

Exercise: Implement who_is_it(). You will have to go through the following steps:

- Compute the target encoding of the image from image_path.

- Find the encoding from the database that has the smallest distance to the target encoding:

  - Initialize the min_dist variable to a large enough number (100). It will help you keep track of which encoding is closest to the input's encoding.

  - Loop over the database dictionary's names and encodings. To loop, use for (name, db_enc) in database.items().

  - Compute the L2 distance between the target "encoding" and the current "encoding" from the database.

  - If this distance is less than min_dist, then set min_dist to dist, and identity to name.


【code】

# GRADED FUNCTION: who_is_it

def who_is_it(image_path, database, model):
    """
    Implements face recognition for the Happy House by finding who is the person on the image_path image.

    Arguments:
    image_path -- path to an image
    database -- database containing image encodings along with the name of the person on the image
    model -- your Inception model instance in Keras

    Returns:
    min_dist -- the minimum distance between image_path encoding and the encodings from the database
    identity -- string, the name prediction for the person on image_path
    """

    ### START CODE HERE ###

    ## Step 1: Compute the target "encoding" for the image. Use img_to_encoding() see example above. ## (≈ 1 line)
    encoding = img_to_encoding(image_path, model)

    ## Step 2: Find the closest encoding ##

    # Initialize "min_dist" to a large value, say 100 (≈1 line)
    min_dist = 100
    identity = None  # stays None if no database entry is closer than min_dist

    # Loop over the database dictionary's names and encodings.
    for (name, db_enc) in database.items():

        # Compute L2 distance between the target "encoding" and the current "db_enc" from the database. (≈ 1 line)
        dist = np.linalg.norm(encoding - db_enc)

        # If this distance is less than the min_dist, then set min_dist to dist, and identity to name. (≈ 3 lines)
        if dist < min_dist:
            min_dist = dist
            identity = name

    ### END CODE HERE ###

    if min_dist > 0.7:
        print("Not in the database.")
    else:
        print("it's " + str(identity) + ", the distance is " + str(min_dist))

    return min_dist, identity
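The loop in who_is_it() is easy to follow but visits the database one entry at a time. A possible alternative stacks every stored encoding into a matrix and computes all distances in a single NumPy call. This sketch assumes every stored encoding has the same number of values (128 here). The function nearest_identity and the entries "alice" and "bob" are hypothetical. Unlike the graded function, it returns None as the name when the best distance exceeds the 0.7 threshold:

```python
import numpy as np

def nearest_identity(encoding, database, threshold=0.7):
    """Vectorized nearest-neighbour lookup over all database encodings.

    encoding: array with 128 values (e.g. shape (1, 128)).
    database: dict name -> encoding of the same size.
    Returns (min_dist, name); name is None if min_dist > threshold.
    """
    names = list(database)
    # Stack the database into an (n, 128) matrix, one row per person.
    matrix = np.stack([np.ravel(database[n]) for n in names])
    # L2 distance from the query to every row at once, shape (n,).
    dists = np.linalg.norm(matrix - np.ravel(encoding), axis=1)
    best = int(np.argmin(dists))
    min_dist = float(dists[best])
    return min_dist, (names[best] if min_dist <= threshold else None)

db = {"alice": np.zeros((1, 128)), "bob": np.ones((1, 128))}
print(nearest_identity(np.full((1, 128), 0.01), db))  # closest is "alice", distance about 0.113
```

For a dozen residents the difference is negligible. The matrix form mainly pays off when the database grows large.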

Younes is at the front door and the camera takes a picture of him ("images/camera_0.jpg"). Let's see if your who_is_it() algorithm identifies Younes.

【code】

who_is_it("images/camera_0.jpg", database, FRmodel)

【result】

it's younes, the distance is 0.659393

(0.65939283, 'younes')

Expected Output:

| it's younes, the distance is 0.659393 | (0.65939283, 'younes') |

You can change "camera_0.jpg" (picture of younes) to "camera_1.jpg" (picture of bertrand) and see the result.

Your Happy House is running well. It only lets in authorized persons, and people don't need to carry an ID card around anymore!

You've now seen how a state-of-the-art face recognition system works.

Although we won't implement it here, here are some ways to further improve the algorithm:

- Put more images of each person (under different lighting conditions, taken on different days, etc.) into the database. Then, given a new image, compare the new face to multiple pictures of the person. This would increase accuracy.

- Crop the images to just contain the face, and less of the "border" region around the face. This preprocessing removes some of the irrelevant pixels around the face, and also makes the algorithm more robust.
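The first suggestion above (several stored images per person) could look roughly like the following sketch. It assumes the database maps each name to a list of encodings and takes the smallest distance to any of them. The minimum is one possible aggregation; averaging the distances would be another. The function verify_multi is hypothetical:

```python
import numpy as np

def verify_multi(encoding, person_encodings, threshold=0.7):
    """Compare one encoding against several stored encodings of the same person.

    Uses the smallest L2 distance; returns (min_dist, door_open).
    """
    dists = [np.linalg.norm(np.ravel(encoding) - np.ravel(e)) for e in person_encodings]
    min_dist = min(dists)
    return min_dist, min_dist < threshold

# Hypothetical stored encodings of one person, e.g. under two lighting conditions.
stored = [np.zeros(128), np.full(128, 1.0)]
print(verify_multi(np.full(128, 0.95), stored))  # close to the second image, so the door opens
```

Taking the minimum means a new photo only has to resemble one stored condition. This is why adding varied images tends to help.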

What you should remember:

- Face verification solves an easier 1:1 matching problem; face recognition addresses a harder 1:K matching problem.

- The triplet loss is an effective loss function for training a neural network to learn an encoding of a face image.

- The same encoding can be used for verification and recognition. Measuring distances between two images' encodings allows you to determine whether they are pictures of the same person.

Congrats on finishing this assignment!

References:

- Florian Schroff, Dmitry Kalenichenko, James Philbin (2015). FaceNet: A Unified Embedding for Face Recognition and Clustering

- Yaniv Taigman, Ming Yang, Marc'Aurelio Ranzato, Lior Wolf (2014). DeepFace: Closing the gap to human-level performance in face verification

- The pretrained model we use is inspired by Victor Sy Wang's implementation and was loaded using his code: https://github.com/iwantooxxoox/Keras-OpenFace.

- Our implementation also took a lot of inspiration from the official FaceNet github repository: https://github.com/davidsandberg/facenet
