Code walkthrough of "T-GCN: A Temporal Graph Convolutional Network for Traffic Prediction"

Paper link: https://arxiv.org/abs/1811.05320

Original author of this blog post: Missouter. Blog: https://www.cnblogs.com/missouter/. Discussion is welcome.

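This post walks through the T-GCN code in the paper's GitHub repository. It covers two files, main.py and tgcn.py. When time allows, I will add walkthroughs of the remaining files, which implement the baselines (no promises).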

1. main.py

# -*- coding: utf-8 -*-
import pickle as pkl
import tensorflow as tf
import pandas as pd
import numpy as np
import math
import os
import numpy.linalg as la
from input_data import preprocess_data,load_sz_data,load_los_data
from tgcn import tgcnCell
#from gru import GRUCell

from visualization import plot_result,plot_error
from sklearn.metrics import mean_squared_error,mean_absolute_error
#import matplotlib.pyplot as plt
import time

time_start = time.time()

###### Settings ######
flags = tf.app.flags
FLAGS = flags.FLAGS
flags.DEFINE_float('learning_rate', 0.001, 'Initial learning rate.')
flags.DEFINE_integer('training_epoch', 1, 'Number of epochs to train.')
flags.DEFINE_integer('gru_units', 64, 'hidden units of gru.')
flags.DEFINE_integer('seq_len',12 , ' time length of inputs.')
flags.DEFINE_integer('pre_len', 3, 'time length of prediction.')
flags.DEFINE_float('train_rate', 0.8, 'rate of training set.')
flags.DEFINE_integer('batch_size', 32, 'batch size.')
flags.DEFINE_string('dataset', 'los', 'sz or los.')
flags.DEFINE_string('model_name', 'tgcn', 'tgcn')

model_name = FLAGS.model_name
data_name = FLAGS.dataset
train_rate = FLAGS.train_rate
seq_len = FLAGS.seq_len
output_dim = pre_len = FLAGS.pre_len
batch_size = FLAGS.batch_size
lr = FLAGS.learning_rate
training_epoch = FLAGS.training_epoch
gru_units = FLAGS.gru_units

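The opening section sets the basic training parameters. tf.app.flags is used to define each parameter together with its default value and a help string.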
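tf.app.flags belongs to the TensorFlow 1.x API. For readers who want to experiment with the same settings outside TensorFlow 1.x, the block above could be mirrored with the standard-library argparse module. This is only a sketch: the function name parse_settings is hypothetical, and the parameter names and defaults are copied from the flags above.

```python
import argparse

def parse_settings(argv=None):
    """Hypothetical argparse stand-in for the tf.app.flags block in main.py."""
    parser = argparse.ArgumentParser(description="T-GCN training settings")
    parser.add_argument("--learning_rate", type=float, default=0.001, help="Initial learning rate.")
    parser.add_argument("--training_epoch", type=int, default=1, help="Number of epochs to train.")
    parser.add_argument("--gru_units", type=int, default=64, help="Hidden units of the GRU.")
    parser.add_argument("--seq_len", type=int, default=12, help="Time length of inputs.")
    parser.add_argument("--pre_len", type=int, default=3, help="Time length of prediction.")
    parser.add_argument("--train_rate", type=float, default=0.8, help="Rate of training set.")
    parser.add_argument("--batch_size", type=int, default=32, help="Batch size.")
    parser.add_argument("--dataset", type=str, default="los", help="sz or los.")
    parser.add_argument("--model_name", type=str, default="tgcn", help="tgcn")
    return parser.parse_args(argv)

# An empty argument list yields the defaults; a list of strings overrides them.
settings = parse_settings([])
custom = parse_settings(["--dataset", "sz", "--pre_len", "6"])
```

Passing an explicit list instead of reading sys.argv keeps the sketch usable from a notebook or a test.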

if data_name == 'sz':
    data, adj = load_sz_data('sz')
if data_name == 'los':
    data, adj = load_los_data('los')

time_len = data.shape[0]
num_nodes = data.shape[1]
data1 =np.mat(data,dtype=np.float32)

#### normalization
max_value = np.max(data1)
data1 = data1/max_value
trainX, trainY, testX, testY = preprocess_data(data1, time_len, train_rate, seq_len, pre_len)

totalbatch = int(trainX.shape[0]/batch_size)
training_data_count = len(trainX)
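The normalization step divides the whole matrix by one global maximum, and the same max_value is used later to map metrics back to the original scale. A minimal NumPy sketch on made-up speed values (the numbers are purely illustrative):

```python
import numpy as np

# Toy speed matrix: 4 time steps x 3 road segments (made-up values).
data = np.array([[60.0, 30.0, 45.0],
                 [55.0, 20.0, 40.0],
                 [50.0, 25.0, 35.0],
                 [65.0, 15.0, 30.0]], dtype=np.float32)

time_len, num_nodes = data.shape

max_value = np.max(data)            # one global maximum, as in main.py
data_norm = data / max_value        # non-negative data ends up in [0, 1]
data_back = data_norm * max_value   # denormalization, as done for the test metrics
```

Because a single scalar is used, the relative differences between road segments are preserved after scaling.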

This part loads the dataset and normalizes it by dividing by its maximum value. The loading functions in input_data.py are as follows:

def load_sz_data(dataset):
    sz_adj = pd.read_csv(r'data/sz_adj.csv',header=None)
    adj = np.mat(sz_adj)
    sz_tf = pd.read_csv(r'data/sz_speed.csv')
    return sz_tf, adj

def load_los_data(dataset):
    los_adj = pd.read_csv(r'data/los_adj.csv',header=None)
    adj = np.mat(los_adj)
    los_tf = pd.read_csv(r'data/los_speed.csv')
    return los_tf, adj

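The preprocess_data function splits the dataset into a training set and a test set using the ratio set at the top of main.py, then cuts each part into sliding windows of seq_len input steps followed by pre_len target steps: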

def preprocess_data(data, time_len, rate, seq_len, pre_len):
    train_size = int(time_len * rate)
    train_data = data[0:train_size]
    test_data = data[train_size:time_len]

    trainX, trainY, testX, testY = [], [], [], []
    for i in range(len(train_data) - seq_len - pre_len):
        a = train_data[i: i + seq_len + pre_len]
        trainX.append(a[0 : seq_len])
        trainY.append(a[seq_len : seq_len + pre_len])
    for i in range(len(test_data) - seq_len -pre_len):
        b = test_data[i: i + seq_len + pre_len]
        testX.append(b[0 : seq_len])
        testY.append(b[seq_len : seq_len + pre_len])

    trainX1 = np.array(trainX)
    trainY1 = np.array(trainY)
    testX1 = np.array(testX)
    testY1 = np.array(testY)
    return trainX1, trainY1, testX1, testY1
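To see what shapes the sliding-window loops produce, here is a small sketch that applies the same slicing to a toy series. The helper name make_windows is hypothetical; it only repeats the loop body of preprocess_data for a single split.

```python
import numpy as np

def make_windows(series, seq_len, pre_len):
    """Same slicing as the loops in preprocess_data, applied to one split."""
    xs, ys = [], []
    for i in range(len(series) - seq_len - pre_len):
        window = series[i: i + seq_len + pre_len]
        xs.append(window[:seq_len])    # seq_len input steps
        ys.append(window[seq_len:])    # the pre_len steps that follow
    return np.array(xs), np.array(ys)

# 10 time steps, 2 nodes; row i holds the values [2*i, 2*i + 1].
series = np.arange(20).reshape(10, 2)
X, Y = make_windows(series, seq_len=4, pre_len=2)
```

Here X has shape (4, 4, 2) and Y has shape (4, 2, 2), that is [samples, time steps, nodes]. With this loop bound the last possible window (starting at index 4 in the toy series) is not generated, which costs one sample per split.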

Next, the TGCN function is defined:

def TGCN(_X, _weights, _biases):
    ###
    cell_1 = tgcnCell(gru_units, adj, num_nodes=num_nodes)
    cell = tf.nn.rnn_cell.MultiRNNCell([cell_1], state_is_tuple=True)
    _X = tf.unstack(_X, axis=1)
    outputs, states = tf.nn.static_rnn(cell, _X, dtype=tf.float32)
    m = []
    for i in outputs:
        o = tf.reshape(i,shape=[-1,num_nodes,gru_units])
        o = tf.reshape(o,shape=[-1,gru_units])
        m.append(o)
    last_output = m[-1]
    output = tf.matmul(last_output, _weights['out']) + _biases['out']
    output = tf.reshape(output,shape=[-1,num_nodes,pre_len])
    output = tf.transpose(output, perm=[0,2,1])
    output = tf.reshape(output, shape=[-1,num_nodes])
    return output, m, states

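The function first instantiates the T-GCN cell (tgcnCell is explained later in this post) and wraps it in tf.nn.rnn_cell.MultiRNNCell, the API for stacking recurrent layers; since only cell_1 is passed in, the network here has a single layer. tf.unstack splits the input along the time axis into seq_len tensors of shape [batch, num_nodes], and tf.nn.static_rnn unrolls the cell over them.

The output of each time step is then reshaped twice: first to [-1, num_nodes, gru_units], where -1 tells tf.reshape to infer that dimension so that the total size stays unchanged, and then to [-1, gru_units], so that each row holds the hidden vector of one node in one sample. Only the last time step is used: tf.matmul multiplies it by the weight matrix and the bias is added, which gives pre_len predictions per node. The result is reshaped to [-1, num_nodes, pre_len], transposed with perm=[0,2,1] to [-1, pre_len, num_nodes], and flattened to [-1, num_nodes], so each row of the final output is the prediction for all nodes at one future time step.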
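The reshape and transpose chain at the end of TGCN is easier to follow with concrete shapes. The NumPy sketch below replays it on random data; the sizes and the random values are arbitrary assumptions, and last_output stands in for m[-1].

```python
import numpy as np

batch, num_nodes, gru_units, pre_len = 2, 3, 4, 5
rng = np.random.default_rng(0)

last_output = rng.normal(size=(batch * num_nodes, gru_units))  # stands in for m[-1]
W = rng.normal(size=(gru_units, pre_len))                      # weights['out']
b = rng.normal(size=(pre_len,))                                # biases['out']

out = last_output @ W + b                   # (batch * num_nodes, pre_len)
out = out.reshape(-1, num_nodes, pre_len)   # (batch, num_nodes, pre_len)
out = out.transpose(0, 2, 1)                # (batch, pre_len, num_nodes)
out = out.reshape(-1, num_nodes)            # (batch * pre_len, num_nodes)

# Row 1 is sample 0, future step 1; column 2 is node 2.
expected = (last_output[2] @ W + b)[1]
```

The final layout matches the labels, which main.py reshapes from [batch, pre_len, num_nodes] to [-1, num_nodes] before computing the loss.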

The next section defines the inputs and labels as placeholders, randomly initializes the weights and biases, and builds the loss:

inputs = tf.placeholder(tf.float32, shape=[None, seq_len, num_nodes])
labels = tf.placeholder(tf.float32, shape=[None, pre_len, num_nodes])

weights = {
    'out': tf.Variable(tf.random_normal([gru_units, pre_len], mean=1.0), name='weight_o')}
biases = {
    'out': tf.Variable(tf.random_normal([pre_len]),name='bias_o')}

if model_name == 'tgcn':
    pred,ttts,ttto = TGCN(inputs, weights, biases)

y_pred = pred

lambda_loss = 0.0015
Lreg = lambda_loss * sum(tf.nn.l2_loss(tf_var) for tf_var in tf.trainable_variables())
label = tf.reshape(labels, [-1,num_nodes])
loss = tf.reduce_mean(tf.nn.l2_loss(y_pred-label) + Lreg)

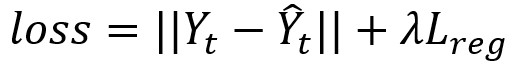

对应论文公式(详见上篇博客):
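The loss expression in main.py can be replayed with NumPy on toy values. tf.nn.l2_loss(t) computes sum(t ** 2) / 2 and returns a scalar, so the surrounding tf.reduce_mean leaves the value unchanged. The arrays below are made up, and the variables list is only a stand-in for tf.trainable_variables().

```python
import numpy as np

def l2_loss(t):
    """NumPy equivalent of tf.nn.l2_loss: sum(t ** 2) / 2."""
    return np.sum(np.square(t)) / 2.0

y_pred = np.array([[0.5, 0.2], [0.1, 0.4]])
label = np.array([[0.4, 0.2], [0.3, 0.1]])

# Stand-ins for the trainable variables (made-up values).
variables = [np.array([[1.0, -2.0]]), np.array([0.5])]

lambda_loss = 0.0015
l_reg = lambda_loss * sum(l2_loss(v) for v in variables)   # L2 regularization term
loss = l2_loss(y_pred - label) + l_reg                     # prediction error + regularization
```

The regularization term sums over every trainable variable, so the biases are penalized together with the weights.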

The root mean squared error (RMSE) is defined, and an Adam optimizer is set up to minimize the loss:

error = tf.sqrt(tf.reduce_mean(tf.square(y_pred-label)))

optimizer = tf.train.AdamOptimizer(lr).minimize(loss)

The training session is then initialized:

variables = tf.global_variables()
saver = tf.train.Saver(tf.global_variables())
#sess = tf.Session()
gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=0.333)
sess = tf.Session(config=tf.ConfigProto(gpu_options=gpu_options))
sess.run(tf.global_variables_initializer())

out = 'out/%s'%(model_name)
#out = 'out/%s_%s'%(model_name,'perturbation')
path1 = '%s_%s_lr%r_batch%r_unit%r_seq%r_pre%r_epoch%r'%(model_name,data_name,lr,batch_size,gru_units,seq_len,pre_len,training_epoch)
path = os.path.join(out,path1)
if not os.path.exists(path):
    os.makedirs(path)

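Here tf.global_variables returns the global variables of the graph; passing them to tf.train.Saver lets the trained model parameters be saved for later validation or testing. tf.GPUOptions limits GPU usage: per_process_gpu_memory_fraction=0.333 caps this process at roughly one third of the GPU memory. I am not sure why one third was chosen; it may simply be a practical habit, for example so that several jobs can share one GPU. After the model parameters are initialized, the output path and file name are set, which needs no further discussion.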

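The evaluation function in the file defines the metrics used in the experimental section of the paper: root mean squared error (RMSE), mean absolute error (MAE), accuracy, coefficient of determination (R2), and explained variance score (var).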

def evaluation(a,b):
    rmse = math.sqrt(mean_squared_error(a,b))
    mae = mean_absolute_error(a, b)
    F_norm = la.norm(a-b,'fro')/la.norm(a,'fro')
    r2 = 1-((a-b)**2).sum()/((a-a.mean())**2).sum()
    var = 1-(np.var(a-b))/np.var(a)
    return rmse, mae, 1-F_norm, r2, var
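To get a feel for how the five metrics behave, the sketch below re-implements evaluation with NumPy only (the name evaluation_np is hypothetical, and scikit-learn is replaced by the equivalent array expressions) and applies it to a perfect prediction and to one with a constant offset. The toy values are made up.

```python
import numpy as np

def evaluation_np(a, b):
    """NumPy-only sketch of evaluation(a, b); a is the ground truth, b the prediction."""
    rmse = np.sqrt(np.mean((a - b) ** 2))
    mae = np.mean(np.abs(a - b))
    f_norm = np.linalg.norm(a - b, 'fro') / np.linalg.norm(a, 'fro')
    r2 = 1 - ((a - b) ** 2).sum() / ((a - a.mean()) ** 2).sum()
    var = 1 - np.var(a - b) / np.var(a)
    return rmse, mae, 1 - f_norm, r2, var

truth = np.array([[1.0, 2.0], [3.0, 4.0]])
perfect = evaluation_np(truth, truth.copy())   # identical prediction
shifted = evaluation_np(truth, truth + 0.5)    # constant offset of 0.5
```

For the shifted prediction RMSE and MAE are both 0.5 and R2 drops to 0.8, while the explained variance stays at 1: it measures how well the fluctuations are captured and ignores a constant bias.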

Next comes the usual training loop:

# Metric accumulators (not shown in this excerpt; the appends below need them)
batch_loss, batch_rmse = [], []
test_loss, test_rmse, test_mae, test_acc, test_r2, test_var, test_pred = [], [], [], [], [], [], []

for epoch in range(training_epoch):
    for m in range(totalbatch):
        mini_batch = trainX[m * batch_size : (m+1) * batch_size]
        mini_label = trainY[m * batch_size : (m+1) * batch_size]
        _, loss1, rmse1, train_output = sess.run([optimizer, loss, error, y_pred],
                                                 feed_dict = {inputs:mini_batch, labels:mini_label})
        batch_loss.append(loss1)
        batch_rmse.append(rmse1 * max_value)

    # Test completely at every epoch
    loss2, rmse2, test_output = sess.run([loss, error, y_pred],
                                         feed_dict = {inputs:testX, labels:testY})
    test_label = np.reshape(testY,[-1,num_nodes])
    rmse, mae, acc, r2_score, var_score = evaluation(test_label, test_output)
    test_label1 = test_label * max_value  # denormalize
    test_output1 = test_output * max_value
    test_loss.append(loss2)
    test_rmse.append(rmse * max_value)
    test_mae.append(mae * max_value)
    test_acc.append(acc)
    test_r2.append(r2_score)
    test_var.append(var_score)
    test_pred.append(test_output1)

    print('Iter:{}'.format(epoch),
          'train_rmse:{:.4}'.format(batch_rmse[-1]),
          'test_loss:{:.4}'.format(loss2),
          'test_rmse:{:.4}'.format(rmse),
          'test_acc:{:.4}'.format(acc))

    if (epoch % 500 == 0):
        saver.save(sess, path+'/model_100/TGCN_pre_%r'%epoch, global_step = epoch)

time_end = time.time()
print(time_end-time_start,'s')

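Each epoch ends with an evaluation on the full test set, and the results are denormalized by multiplying by max_value. A checkpoint is saved every 500 epochs, and the metrics are printed and stored. At the end of the script, the per-batch training metrics are averaged per epoch, the predictions of the epoch with the lowest test RMSE are written to a csv file, and the best metrics are printed; the plotting calls are commented out: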

b = int(len(batch_rmse)/totalbatch)
batch_rmse1 = [i for i in batch_rmse]
train_rmse = [(sum(batch_rmse1[i*totalbatch:(i+1)*totalbatch])/totalbatch) for i in range(b)]
batch_loss1 = [i for i in batch_loss]
train_loss = [(sum(batch_loss1[i*totalbatch:(i+1)*totalbatch])/totalbatch) for i in range(b)]

index = test_rmse.index(np.min(test_rmse))
test_result = test_pred[index]
var = pd.DataFrame(test_result)
var.to_csv(path+'/test_result.csv',index = False,header = False)
#plot_result(test_result,test_label1,path)
#plot_error(train_rmse,train_loss,test_rmse,test_acc,test_mae,path)

print('min_rmse:%r'%(np.min(test_rmse)),
      'min_mae:%r'%(test_mae[index]),
      'max_acc:%r'%(test_acc[index]),
      'r2:%r'%(test_r2[index]),
      'var:%r'%test_var[index])
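The bookkeeping at the end of main.py (average the per-batch values within each epoch, then pick the epoch with the lowest test RMSE) can be traced on a few made-up numbers:

```python
totalbatch = 3
batch_rmse = [4.0, 5.0, 6.0, 3.0, 3.0, 3.0]   # 2 epochs x 3 batches (made-up values)
test_rmse = [4.8, 3.2]                        # one value per epoch (made-up values)
test_mae = [3.9, 2.5]

epochs = len(batch_rmse) // totalbatch
# Mean training RMSE of each epoch.
train_rmse = [sum(batch_rmse[i * totalbatch:(i + 1) * totalbatch]) / totalbatch
              for i in range(epochs)]

# Index of the epoch with the lowest test RMSE; the other metrics are read at that index.
index = test_rmse.index(min(test_rmse))
best_mae = test_mae[index]
```

Note that the labels min_mae and max_acc in the print statement refer to the values at the epoch with the lowest RMSE, which are not necessarily the best MAE or accuracy over all epochs.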

That concludes the walkthrough of main.py.

2. tgcn.py

This file defines a single class, the T-GCN cell. The initialization part is not discussed in detail:

# -*- coding: utf-8 -*-

#import numpy as np
import tensorflow as tf
from tensorflow.contrib.rnn import RNNCell
from utils import calculate_laplacian

class tgcnCell(RNNCell):
    """Temporal Graph Convolutional Network """

    def call(self, inputs, **kwargs):
        pass

    def __init__(self, num_units, adj, num_nodes, input_size=None,
                 act=tf.nn.tanh, reuse=None):

        super(tgcnCell, self).__init__(_reuse=reuse)
        self._act = act
        self._nodes = num_nodes
        self._units = num_units
        self._adj = []
        self._adj.append(calculate_laplacian(adj))

    @property
    def state_size(self):
        return self._nodes * self._units

    @property
    def output_size(self):
        return self._units
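utils.py is not shown in this post, so what calculate_laplacian computes is an assumption here. A common choice in GCN code is the symmetrically normalized adjacency matrix with self-loops, D^(-1/2) (A + I) D^(-1/2). The dense NumPy sketch below illustrates that normalization only; the function name normalized_adjacency is hypothetical, and the repository's function must return a sparse tensor, since _gc passes it to tf.sparse_tensor_dense_matmul.

```python
import numpy as np

def normalized_adjacency(adj):
    """Hypothetical dense sketch of D^-1/2 (A + I) D^-1/2 (assumed normalization)."""
    a = np.asarray(adj, dtype=np.float64)
    a_tilde = a + np.eye(a.shape[0])        # add self-loops
    degree = a_tilde.sum(axis=1)            # >= 1 for non-negative edge weights
    d_inv_sqrt = 1.0 / np.sqrt(degree)
    # Scale row i by d_i^-1/2 and column j by d_j^-1/2.
    return a_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

# Path graph with three nodes: 0 - 1 - 2.
adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]])
a_hat = normalized_adjacency(adj)
```

With self-loops every node keeps part of its own feature when neighbor features are aggregated, and the symmetric scaling keeps the result from growing with the node degree.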

One key part is the definition of the GRU computation:

    def __call__(self, inputs, state, scope=None):
        with tf.variable_scope(scope or "tgcn"):
            with tf.variable_scope("gates"):
                value = tf.nn.sigmoid(
                    self._gc(inputs, state, 2 * self._units, bias=1.0, scope=scope))
                r, u = tf.split(value=value, num_or_size_splits=2, axis=1)
            with tf.variable_scope("candidate"):
                r_state = r * state
                c = self._act(self._gc(inputs, r_state, self._units, scope=scope))
            new_h = u * state + (1 - u) * c
        return new_h, new_h

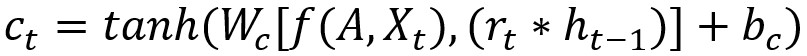

代码还原论文中tgcn单元的计算过程(详见上一篇博客):

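The state argument corresponds to the hidden state of the previous time step in the paper, h_{t-1}. tf.variable_scope puts the variables created inside it under a name prefix, so the weights and biases of the gates and those of the candidate state can use the same names without clashing. In the gates scope, self._gc performs the graph convolution GC on the input and the state, and tf.nn.sigmoid is the activation applied to its result; tf.split then splits the activated tensor into two equal parts along axis 1, the reset gate r and the update gate u. In the candidate scope, the state is multiplied element-wise by r, passed through a second graph convolution together with the input, and activated with tanh to give the candidate state c.

The function finally returns the new state h_t = u * h_{t-1} + (1 - u) * c, once as the output and once as the state.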
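The gating logic of __call__ can be isolated from the graph convolution. In the NumPy sketch below, gc_gates and gc_candidate are hypothetical callables that stand in for self._gc under the two variable scopes; the toy stand-ins return constants so that the effect of the update gate is easy to see.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h_prev, gc_gates, gc_candidate):
    """One cell update with the same gating as tgcnCell.__call__ (sketch)."""
    value = sigmoid(gc_gates(x, h_prev))        # (batch, 2 * nodes * units)
    r, u = np.split(value, 2, axis=1)           # reset gate, update gate
    c = np.tanh(gc_candidate(x, r * h_prev))    # candidate state
    return u * h_prev + (1.0 - u) * c           # new hidden state h_t

batch, size = 2, 6                              # size = num_nodes * gru_units
rng = np.random.default_rng(0)
x = rng.normal(size=(batch, 3))
h_prev = rng.normal(size=(batch, size))

# Very large gate pre-activations push u to 1: the old state is kept.
h_keep = gru_step(x, h_prev,
                  gc_gates=lambda x, h: np.full((batch, 2 * size), 50.0),
                  gc_candidate=lambda x, h: np.zeros((batch, size)))

# Very negative gate pre-activations push u to 0: the candidate replaces the state.
h_replace = gru_step(x, h_prev,
                     gc_gates=lambda x, h: np.full((batch, 2 * size), -50.0),
                     gc_candidate=lambda x, h: np.full((batch, size), 0.5))
```

In this code u close to 1 preserves the previous state, which is why the gate biases are initialized to 1.0 in the gates scope: the cell starts out biased towards remembering.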

Finally, the graph convolution itself is defined:

    def _gc(self, inputs, state, output_size, bias=0.0, scope=None):
        ## inputs:(-1,num_nodes)
        inputs = tf.expand_dims(inputs, 2)
        ## state:(batch,num_node,gru_units)
        state = tf.reshape(state, (-1, self._nodes, self._units))
        ## concat
        x_s = tf.concat([inputs, state], axis=2)
        input_size = x_s.get_shape()[2].value
        ## (num_node,input_size,-1)
        x0 = tf.transpose(x_s, perm=[1, 2, 0])
        x0 = tf.reshape(x0, shape=[self._nodes, -1])

        scope = tf.get_variable_scope()
        with tf.variable_scope(scope):
            for m in self._adj:
                x1 = tf.sparse_tensor_dense_matmul(m, x0)
#                print(x1)
            x = tf.reshape(x1, shape=[self._nodes, input_size,-1])
            x = tf.transpose(x,perm=[2,0,1])
            x = tf.reshape(x, shape=[-1, input_size])
            weights = tf.get_variable(
                'weights', [input_size, output_size], initializer=tf.contrib.layers.xavier_initializer())
            x = tf.matmul(x, weights)  # (batch_size * self._nodes, output_size)
            biases = tf.get_variable(
                "biases", [output_size], initializer=tf.constant_initializer(bias, dtype=tf.float32))
            x = tf.nn.bias_add(x, biases)
            x = tf.reshape(x, shape=[-1, self._nodes, output_size])
            x = tf.reshape(x, shape=[-1, self._nodes * output_size])
        return x

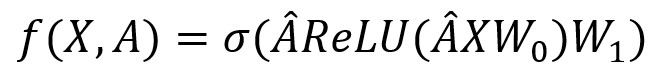

函数开头对特征矩阵进行构建:使用expand_dims增加输入维度,再使用将当前状态转化为第二维为数据点数量,第三维为gru单元数量的列表,使用concat在第二个维度拼接张量,最后得到一个长度为数据点数量的列表。get_variable_scope获取变量后,将得到的特征矩阵与邻接矩阵相乘。在tf.nn.bias_add处激活得到两层GCN,对应公式:

最终返回输出值x。此函数经历了很多张量的形式转换,对应论文空间关系建模过程。
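The chain of transposes and reshapes in _gc can be checked against a direct formulation. The NumPy sketch below follows the same steps with a dense matrix product and compares the result with a per-sample A_hat @ [X, H] @ W + b written as an einsum. All sizes and values are arbitrary, and a_hat is a random stand-in for the matrix returned by calculate_laplacian.

```python
import numpy as np

batch, num_nodes, units, output_size = 2, 3, 4, 5
rng = np.random.default_rng(0)

inputs = rng.normal(size=(batch, num_nodes))
state = rng.normal(size=(batch, num_nodes * units))
a_hat = rng.normal(size=(num_nodes, num_nodes))   # stand-in for the normalized adjacency
input_size = 1 + units
weights = rng.normal(size=(input_size, output_size))
biases = np.ones(output_size)

# Same sequence of shape changes as _gc, with a dense matrix product.
x_s = np.concatenate([inputs[:, :, None],
                      state.reshape(-1, num_nodes, units)], axis=2)  # (batch, nodes, input_size)
x0 = x_s.transpose(1, 2, 0).reshape(num_nodes, -1)                   # (nodes, input_size * batch)
x1 = a_hat @ x0                                                      # aggregate over neighbors
x = x1.reshape(num_nodes, input_size, -1).transpose(2, 0, 1)         # (batch, nodes, input_size)
x = x.reshape(-1, input_size) @ weights + biases                     # (batch * nodes, output_size)
x = x.reshape(-1, num_nodes * output_size)                           # (batch, nodes * output_size)

# Direct formulation: for every sample, A_hat @ [X, H] @ W + b.
direct = np.einsum('ij,bjf,fo->bio', a_hat, x_s, weights) + biases
```

Moving the node axis to the front lets one matrix product handle the whole batch, which is the reason for the transposes before and after the multiplication by the adjacency matrix.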

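That concludes the walkthrough of the T-GCN code from the paper. The modular structure of the code offers a lot to learn about how to organize experiments, and the implementation of T-GCN itself, in particular its tensor manipulations, is also worth borrowing from.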
