Advanced Docker Usage: CPU and Memory Resource Limits (Repost)

Tags: docker resource limits, docker cpu limits, docker memory limits

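I previously described how to use OVS QoS to limit network resources for Docker containers. This post covers how to use Docker's own parameters to limit CPU and memory.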

Docker lets you set memory, swap, and CPU limits when you create a container, but there are a few subtleties to watch for, described below.

1. Memory limits

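Memory is limited with the -m flag. If you pass only -m and nothing else, however, the limit is not a hard cap: with -m 256m, a program in the container can grow to 256m*2=512m of memory plus swap before the OOM killer terminates it.

The reason is that the source code sets memory.memsw.limit_in_bytes (the combined memory and swap limit) to twice the memory value we specify.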

The source is at https://github.com/docker/libcontainer/blob/v1.2.0/cgroups/fs/memory.go#L39

It reads:

    // By default, MemorySwap is set to twice the size of RAM.
    // If you want to omit MemorySwap, set it to `-1'.
    if d.c.MemorySwap != -1 {
        if err := writeFile(dir, "memory.memsw.limit_in_bytes", strconv.FormatInt(d.c.Memory*2, 10)); err != nil {
            return err
        }
    }

None of the above is original to me; it was published earlier at https://goldmann.pl/blog/2014/09/11/resource-management-in-docker/
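To make the arithmetic concrete, here is a minimal Python sketch of the default rule quoted from libcontainer v1.2.0. The helper names `parse_size` and `memsw_limit` are hypothetical and exist only for illustration; the sketch models the quoted snippet alone, not every Docker version.

```python
import re

# Multipliers for Docker-style size suffixes (b, k, m, g).
UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_size(spec):
    """Convert a size such as '100m' into bytes."""
    match = re.fullmatch(r"(\d+)([bkmg]?)", spec.strip().lower())
    if match is None:
        raise ValueError("unrecognised size: %r" % spec)
    number, unit = match.groups()
    return int(number) * UNITS[unit or "b"]


def memsw_limit(memory_bytes, memory_swap=None):
    """Value the quoted snippet writes to memory.memsw.limit_in_bytes.

    Returns None when memory_swap is -1, because the snippet then skips
    the write entirely.
    """
    if memory_swap == -1:
        return None
    return memory_bytes * 2


limit = memsw_limit(parse_size("100m"))
print(limit // 1024, "kB")
```

With -m 100m the combined limit works out to 204800 kB, which is the memory+swap limit that shows up in the kernel log of the test below.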

Below is my own test procedure.

First, limit memory to 100m:

docker run --restart always -d -m 100m --name='11' docker.ops-chukong.com:5000/centos6-base:5.0 /usr/bin/supervisord

Then log in to the container and run a memory test with stress.

PS: in case you do not know how to install stress, here is a quick note:

rpm -Uvh http://pkgs.repoforge.org/stress/stress-1.0.2-1.el7.rf.x86_64.rpm

That single command completes the installation.

Run the test with stress:

stress --vm 1 --vm-bytes 190M --vm-hang 0

This command allocates 190 MB of memory.

Here is the result:

10:11:35 # stress --vm 1 --vm-bytes 190M --vm-hang 0

stress: info: [282] dispatching hogs: 0 cpu, 0 io, 1 vm, 0 hdd

stress: FAIL: [282] (420) <-- worker 283 got signal 9

stress: WARN: [282] (422) now reaping child worker processes

stress: FAIL: [282] (456) failed run completed in 1s

The process was killed. To find out why, look in /var/log/messages:

May 22 10:11:06 ip-10-10-125-11 docker: 2015/05/22 10:11:06 http: multiple response.WriteHeader calls

May 22 10:11:45 ip-10-10-125-11 kernel: stress invoked oom-killer: gfp_mask=0xd0, order=0, oom_score_adj=0

May 22 10:11:45 ip-10-10-125-11 kernel: stress cpuset=docker-6ae152aa189f2596249a56a2e9c414ae70addc55a2fe495b93e7420fc64758d5.scope mems_allowed=0-1

May 22 10:11:45 ip-10-10-125-11 kernel: CPU: 7 PID: 37242 Comm: stress Not tainted 3.10.0-229.el7.x86_64 #1

May 22 10:11:45 ip-10-10-125-11 kernel: Hardware name: Dell Inc. PowerEdge R720/01XT2D, BIOS 2.0.19 08/29/2013

May 22 10:11:45 ip-10-10-125-11 kernel: ffff88041780a220 0000000019d4767f ffff8807c189b808 ffffffff81604b0a

May 22 10:11:45 ip-10-10-125-11 kernel: ffff8807c189b898 ffffffff815ffaaf 0000000000000046 ffff880418462d80

May 22 10:11:45 ip-10-10-125-11 kernel: ffff8807c189b870 ffff8807c189b868 ffffffff81159fb6 ffff8803f55fc9a8

May 22 10:11:45 ip-10-10-125-11 kernel: Call Trace:

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff81604b0a>] dump_stack+0x19/0x1b

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff815ffaaf>] dump_header+0x8e/0x214

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff81159fb6>] ? find_lock_task_mm+0x56/0xc0

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff8115a44e>] oom_kill_process+0x24e/0x3b0

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff8107bdde>] ? has_capability_noaudit+0x1e/0x30

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff811bd5ae>] __mem_cgroup_try_charge+0xb9e/0xbe0

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff811bdd80>] ? mem_cgroup_charge_common+0xc0/0xc0

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff811bdd19>] mem_cgroup_charge_common+0x59/0xc0

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff811bf96a>] mem_cgroup_cache_charge+0x8a/0xb0

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff811571a2>] __add_to_page_cache_locked+0x52/0x260

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff81157407>] add_to_page_cache_lru+0x37/0xb0

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff812070f5>] mpage_readpages+0xb5/0x160

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffffa020b310>] ? _ext4_get_block+0x1b0/0x1b0 [ext4]

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffffa020b310>] ? _ext4_get_block+0x1b0/0x1b0 [ext4]

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffffa0208f0c>] ext4_readpages+0x3c/0x40 [ext4]

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff8116333c>] __do_page_cache_readahead+0x1cc/0x250

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff81163981>] ra_submit+0x21/0x30

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff81158f1d>] filemap_fault+0x11d/0x430

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff8117e63e>] __do_fault+0x7e/0x520

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff81182995>] handle_mm_fault+0x3d5/0xd70

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff81189396>] ? mmap_region+0x186/0x610

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff8160fe06>] __do_page_fault+0x156/0x540

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff81189b25>] ? do_mmap_pgoff+0x305/0x3c0

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff81173f89>] ? vm_mmap_pgoff+0xb9/0xe0

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff8161020a>] do_page_fault+0x1a/0x70

May 22 10:11:45 ip-10-10-125-11 kernel: [<ffffffff8160c408>] page_fault+0x28/0x30

May 22 10:11:45 ip-10-10-125-11 kernel: Task in /system.slice/docker-6ae152aa189f2596249a56a2e9c414ae70addc55a2fe495b93e7420fc64758d5.scope killed as a result of limit of /system.slice/docker-6ae152aa189f2596249a56a2e9c414ae70addc55a2fe495b93e7420fc64758d5.scope

May 22 10:11:45 ip-10-10-125-11 kernel: memory: usage 101144kB, limit 102400kB, failcnt 10934

May 22 10:11:45 ip-10-10-125-11 kernel: memory+swap: usage 204800kB, limit 204800kB, failcnt 37

May 22 10:11:45 ip-10-10-125-11 kernel: kmem: usage 0kB, limit 9007199254740991kB, failcnt 0

May 22 10:11:45 ip-10-10-125-11 kernel: Memory cgroup stats for /system.slice/docker-6ae152aa189f2596249a56a2e9c414ae70addc55a2fe495b93e7420fc64758d5.scope: cache:80KB rss:101064KB rss_huge:90112KB mapped_file:0KB swap:103656KB inactive_anon:72136KB active_anon:28928KB inactive_file:56KB active_file:24KB unevictable:0KB

May 22 10:11:45 ip-10-10-125-11 kernel: [ pid ] uid tgid total_vm rss nr_ptes swapents oom_score_adj name

May 22 10:11:45 ip-10-10-125-11 kernel: [34515] 0 34515 24879 302 54 1947 0 supervisord

May 22 10:11:45 ip-10-10-125-11 kernel: [34531] 0 34531 16674 0 38 179 0 sshd

May 22 10:11:45 ip-10-10-125-11 kernel: [34542] 0 34542 5115 9 14 141 0 crond

May 22 10:11:45 ip-10-10-125-11 kernel: [34544] 0 34544 42841 0 20 101 0 rsyslogd

May 22 10:11:45 ip-10-10-125-11 kernel: [35344] 0 35344 4359 0 11 52 0 anacron

May 22 10:11:45 ip-10-10-125-11 kernel: [36682] 0 36682 2873 38 10 58 0 bash

May 22 10:11:45 ip-10-10-125-11 kernel: [37241] 0 37241 1643 1 8 22 0 stress

May 22 10:11:45 ip-10-10-125-11 kernel: [37242] 0 37242 50284 23693 103 24637 0 stress

May 22 10:11:45 ip-10-10-125-11 kernel: Memory cgroup out of memory: Kill process 37242 (stress) score 945 or sacrifice child

May 22 10:11:45 ip-10-10-125-11 kernel: Killed process 37242 (stress) total-vm:201136kB, anon-rss:94772kB, file-rss:0kB

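The log shows that memory+swap usage reached the limit of twice the configured memory (204800kB), so the OOM killer killed the stress process. The container itself does not die and keeps running normally.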
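When reading a long OOM report like this one, it can help to pull out just the three counter lines and see which limit was actually hit. The following is a small sketch, not part of the original post; the function names are made up for illustration and the regular expression assumes the exact line format shown in the log above.

```python
import re

# Matches the cgroup counter lines of the kernel OOM report shown above.
LIMIT_LINE = re.compile(
    r"kernel: (memory|memory\+swap|kmem): usage (\d+)kB, limit (\d+)kB, failcnt (\d+)"
)


def parse_cgroup_limits(log_text):
    """Return {counter: (usage_kb, limit_kb, failcnt)} from an OOM report."""
    counters = {}
    for name, usage, limit, failcnt in LIMIT_LINE.findall(log_text):
        counters[name] = (int(usage), int(limit), int(failcnt))
    return counters


def exhausted(counters):
    """Names of the counters whose usage has reached the configured limit."""
    return [name for name, (usage, limit, _) in counters.items() if usage >= limit]


sample = """\
May 22 10:11:45 ip-10-10-125-11 kernel: memory: usage 101144kB, limit 102400kB, failcnt 10934
May 22 10:11:45 ip-10-10-125-11 kernel: memory+swap: usage 204800kB, limit 204800kB, failcnt 37
May 22 10:11:45 ip-10-10-125-11 kernel: kmem: usage 0kB, limit 9007199254740991kB, failcnt 0
"""
counters = parse_cgroup_limits(sample)
print(exhausted(counters))  # ['memory+swap']
```

Only the memory+swap counter is at its limit, which is consistent with the 2x rule described earlier: RAM usage alone stayed just under 100m.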

If you want a hard memory cap, use

-m 100m --memory-swap=100m

This enforces a hard cap: as soon as a program's memory use exceeds 100m, it is OOM-killed.

2. CPU limits

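At present, CPU can be limited either by pinning a container to specific CPU threads, or by assigning relative weights on top of that pinning.

Pinning uses the flag --cpuset-cpus=7 (note that the flag is --cpuset-cpus only from version 1.6 onward; earlier versions use --cpuset).

Relative CPU weight is assigned with the -c flag.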
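The flags discussed so far can be combined into one command line. The sketch below builds the argument list for `docker run` and picks the pinning flag from the Docker version, comparing version numbers as tuples so that a version such as 1.10 sorts after 1.6. The function names and the image name in the example call are illustrative assumptions, not anything defined by Docker.

```python
import re


def version_tuple(version):
    """Turn '1.10.3' or '1.6.0-rc1' into a tuple of ints for comparison."""
    return tuple(int(n) for n in re.findall(r"\d+", version.split("-")[0]))


def cpuset_flag(docker_version):
    """--cpuset-cpus from Docker 1.6 onward, --cpuset before that."""
    return "--cpuset-cpus" if version_tuple(docker_version) >= (1, 6) else "--cpuset"


def run_args(image, command, memory, cpu, docker_version, cpu_shares=None):
    """Build a docker run argv with a hard memory cap and CPU pinning."""
    args = [
        "docker", "run", "-d",
        "-m", memory,
        "--memory-swap=%s" % memory,  # same value as -m: hard cap
        "%s=%s" % (cpuset_flag(docker_version), cpu),
    ]
    if cpu_shares is not None:
        args += ["-c", str(cpu_shares)]  # relative CPU weight
    return args + [image] + command.split()


print(" ".join(run_args("centos6-base:5.0", "/usr/bin/supervisord", "100m", 7, "1.6.0")))
```

Returning a list rather than a formatted string means the result can be passed to `subprocess` without going through a shell.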

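For details on using and testing the CPU options, see the article linked above; it is very thorough.

Here I mainly want to describe how CPU is allocated to containers on my platform.

My platform uses Docker directly as the IaaS layer and runs PaaS on top of it; most other Docker clouds run Docker as PaaS inside OpenStack or another IaaS.

Memory is limited to 256m, 512m, or 1g, and CPU defaults to 0.2c for every container.

Here c means a CPU thread. In top, for example, pressing 1 shows how many threads the system has. Taking 16 threads as an example, I reserve 2 c for the host system and give the other 14 c to Docker. Five containers share one c, so each container gets at least 20% of it (0.2c) and at most 100% of it (1c).

The benefit is that a container can use 100% of its thread when the thread is otherwise idle, and is still guaranteed 20% when resources are tight. In addition, even if one container on the host uses a lot of CPU, it has little effect on containers pinned to other c.

In my test environment, one project ran a load test without any resource limits on its containers. The test brought down the host, and every container on that host became unreachable.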
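The allocation scheme above is simple arithmetic, sketched here in Python. The function names are hypothetical, and filling threads from the highest-numbered one downward is an assumption made for the example; the point is only the capacity calculation and the mapping from container number to thread.

```python
def container_capacity(total_threads, reserved, per_thread):
    """How many containers fit when `reserved` threads stay with the host."""
    return (total_threads - reserved) * per_thread


def assign_slot(index, total_threads=16, reserved=2, per_thread=5):
    """Map the index-th container (0-based) to (thread, slot on that thread).

    Assumption for this sketch: threads are filled from the highest-numbered
    one downward, and threads 0..reserved-1 are never handed out.
    """
    if not 0 <= index < container_capacity(total_threads, reserved, per_thread):
        raise ValueError("no CPU thread left outside the reserved set")
    thread = total_threads - 1 - index // per_thread
    return thread, index % per_thread


print(container_capacity(16, 2, 5))   # 70 containers on a 16-thread host
print(assign_slot(0), assign_slot(5))  # (15, 0) (14, 0)
print(1.0 / 5)                         # 0.2: guaranteed share of one thread
```

With 16 threads, 2 reserved, and 5 containers per thread, the host holds 70 containers, each guaranteed 0.2c under contention.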

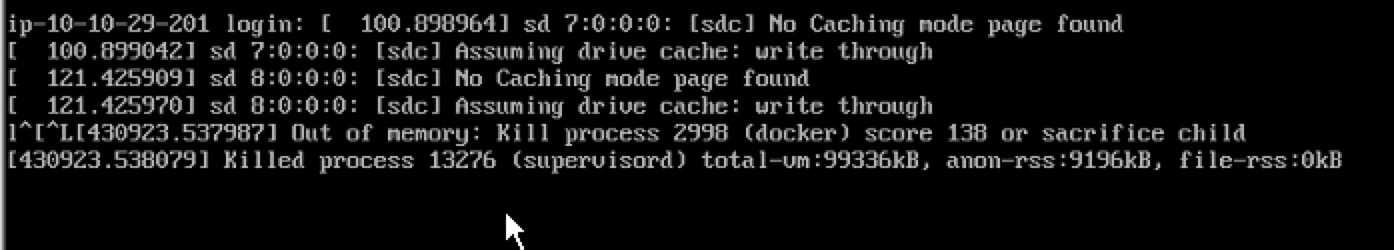

错误如下图

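That is a lot about memory and CPU limits, most of which others have already covered to some degree, so next I will introduce something of my own.

3. Modular CPU, memory, and network resource limits

A script can provision a container with the following features in one step:

1. a persistent, dedicated IP;

2. a configurable file descriptor limit;

3. a memory limit;

4. a network bandwidth limit;

5. automatic pinning to a CPU thread;

6. automatic restart of the container on failure;

7. container login by password or by key.

The container creation process is shown below.

1. Creating a container with password authentication

Memory is 256m, network bandwidth is 1 Mbit/s, login is by password, and the file descriptor limit is 65535.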

[root@ip-10-10-125-8 docker_code]# python create_docker_container_multi.py test1 docker.ops-chukong.com:5000/centos6-base:5.0 /usr/bin/supervisord 256m 1 passwd "123123" 65535

{'Container_id': '522b5a91838375657c5ebba6f8b43d737dd7290cfad46803ba663a70ef789555', 'Container_gateway_ip': u'172.16.0.14', 'Memory_limit': '256m', 'Cpu_share': '3', 'Container_status': 'running', 'Physics_ip': '10.10.125.8', 'Container_create': '2015.06.09-10:47:41', 'Container_name': 'test1', 'Cpu_core': '14', 'Network_limit': '1', 'Container_gateway_bridge': 'ovs2', 'Container_ip': u'172.16.2.164/16'}
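The call above passes eight positional arguments: container name, image, command, memory limit, network limit in Mbit/s, SSH method, SSH secret, and an optional file descriptor limit. As a hedged alternative to reading `sys.argv` by index, the same interface could be described with `argparse`. This is a sketch of that idea only; it is not how the author's script parses its arguments.

```python
import argparse


def build_parser():
    """Positional interface matching the example call above."""
    parser = argparse.ArgumentParser(prog="create_docker_container_multi.py")
    parser.add_argument("container", help="container name")
    parser.add_argument("image", help="image to run")
    parser.add_argument("command", help="command started inside the container")
    parser.add_argument("memory_limit", help="memory limit, unit b, k, m or g")
    parser.add_argument("network_limit", type=int, help="bandwidth in Mbit/s")
    parser.add_argument("ssh_method", choices=["key", "passwd"])
    parser.add_argument("ssh_auth", help="password or public key text")
    parser.add_argument("ulimit", nargs="?", type=int, default=65535,
                        help="open file limit (default: 65535)")
    return parser


args = build_parser().parse_args([
    "test1", "docker.ops-chukong.com:5000/centos6-base:5.0",
    "/usr/bin/supervisord", "256m", "1", "passwd", "123123",
])
print(args.memory_limit, args.network_limit, args.ulimit)
```

One advantage of this form is that a missing or misspelled argument produces a usage message automatically instead of requiring one try/except block per argument.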

Test

[root@ip-10-10-125-8 docker_code]# ssh 172.16.2.164

root@172.16.2.164's password:

Last login: Fri Mar 13 13:53:37 2015 from 172.17.42.1

root@522b5a918383:~

10:48:56 # ifconfig eth1

eth1 Link encap:Ethernet HWaddr 86:44:D4:F5:5C:01

inet addr:172.16.2.164 Bcast:0.0.0.0 Mask:255.255.0.0

inet6 addr: fe80::8444:d4ff:fef5:5c01/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:96 errors:0 dropped:0 overruns:0 frame:0

TX packets:75 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:12261 (11.9 KiB) TX bytes:10464 (10.2 KiB)

root@522b5a918383:~

10:48:59 # ulimit -a

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 128596

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 65535

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 1048576

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

For memory, check with docker stats:

[root@ip-10-10-125-8 docker_code]# docker stats test1

    CONTAINER    CPU %    MEM USAGE/LIMIT     MEM %     NET I/O
    test1        0.00%    30.7 MiB/256 MiB    11.99%    0 B/0 B

The memory limit is indeed 256m.

For the network, I test by downloading an ISO file with wget:

root@522b5a918383:~

10:50:20 # wget -S -O /dev/null http://mirror.neu.edu.cn/centos/7/isos/x86_64/CentOS-7-x86_64-DVD-1503-01.iso

--2015-06-09 10:51:04-- http://mirror.neu.edu.cn/centos/7/isos/x86_64/CentOS-7-x86_64-DVD-1503-01.iso

Resolving mirror.neu.edu.cn... 202.118.1.64, 2001:da8:9000::64

Connecting to mirror.neu.edu.cn|202.118.1.64|:80... connected.

HTTP request sent, awaiting response...

HTTP/1.1 200 OK

Server: nginx/1.0.4

Date: Tue, 09 Jun 2015 02:44:48 GMT

Content-Type: application/octet-stream

Content-Length: 4310695936

Last-Modified: Wed, 01 Apr 2015 00:05:50 GMT

Connection: keep-alive

Accept-Ranges: bytes

Length: 4310695936 (4.0G) [application/octet-stream]

Saving to: "/dev/null"

0% [ ] 884,760 117K/s eta 9h 54m

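The observed download speed is about 117 KB/s, in line with the 1 Mbit/s limit (1 Mbit/s ÷ 8 = 125 KB/s).

Next, test the persistent fixed IP by restarting the container: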
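The conversion between the configured bit rate and the byte rate that wget reports is easy to get wrong, so here is a short worked sketch. It assumes that wget's "K/s" means 1024 bytes per second; the function names are only illustrative.

```python
def mbit_to_bytes_per_s(mbit):
    """Convert a rate in Mbit/s (decimal megabits) to bytes per second."""
    return mbit * 1000 * 1000 / 8


def fraction_of_limit(observed_kib_per_s, limit_mbit):
    """Share of the configured limit that an observed KiB/s rate represents."""
    return observed_kib_per_s * 1024 / mbit_to_bytes_per_s(limit_mbit)


ceiling = mbit_to_bytes_per_s(1)
print("ceiling: %.1f KB/s (%.1f KiB/s)" % (ceiling / 1000, ceiling / 1024))
print("observed 117 KiB/s is %.0f%% of the limit" % (100 * fraction_of_limit(117, 1)))
```

Under that assumption the measured rate sits a few percent below the theoretical ceiling, which is what one would expect once protocol overhead is taken into account.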

[root@ip-10-10-125-8 docker_code]# docker restart test1

test1

[root@ip-10-10-125-8 docker_code]# docker exec test1 ifconfig

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

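After the restart the container's IP is gone, but I have another script, run from crontab every minute, that detects a container without an IP and reapplies the IP and the rest of its previous configuration.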

[root@ip-10-10-125-8 docker_code]# docker exec test1 ifconfig

eth1 Link encap:Ethernet HWaddr B6:6D:BC:CC:CD:69

inet addr:172.16.2.164 Bcast:0.0.0.0 Mask:255.255.0.0

inet6 addr: fe80::b46d:bcff:fecc:cd69/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:9 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:648 (648.0 b) TX bytes:690 (690.0 b)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

[root@ip-10-10-125-8 docker_code]#

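The IP has been reassigned, which gives us a persistent fixed IP.

Below is the script used in the creation process. Treat it as a reference rather than copying it as-is, since everyone's requirements differ.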
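The post does not show the crontab script that restores the IP, so the following is only a guess at its core check, written as a sketch. It looks for an `inet addr:` line under a given interface in `ifconfig` output and, if none is found, builds the pipework command in the same `bridge container ip@gateway` form that the creation script uses. Both function names are hypothetical.

```python
def has_interface_ip(ifconfig_output, iface="eth1"):
    """True if the ifconfig text lists `iface` with an 'inet addr:' line."""
    in_block = False
    for line in ifconfig_output.splitlines():
        if line and not line[0].isspace():
            # A non-indented line starts a new interface block.
            in_block = line.split()[0] == iface
        elif in_block and "inet addr:" in line:
            return True
    return False


def restore_command(bridge, container, ip_cidr, gateway):
    """pipework argv that reattaches the stored address to the container."""
    return ["pipework", bridge, container, "%s@%s" % (ip_cidr, gateway)]


after_restart = "lo        Link encap:Local Loopback\n          inet addr:127.0.0.1  Mask:255.0.0.0\n"
if not has_interface_ip(after_restart):
    print(" ".join(restore_command("ovs2", "test1", "172.16.2.164/16", "172.16.0.14")))
```

A real watchdog would read the stored address from etcd and run `docker exec <container> ifconfig` to get the text; those steps are left out here because the original script is not available.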

[root@ip-10-10-125-8 docker_code]# cat create_docker_container_multi.py

    #!/usr/bin/env python
    #-*- coding: utf-8 -*-
    #author:Deng Lei
    #email: dl528888@gmail.com
    # Note: this script appears to target Python 2 with the python-etcd and
    # legacy docker-py (docker.Client) libraries.
    import sys
    import etcd
    import time
    import socket, struct, fcntl
    from docker import Client
    import subprocess
    from multiprocessing import cpu_count

    #get local host ip
    def get_local_ip(iface = 'em1'):
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        sockfd = sock.fileno()
        SIOCGIFADDR = 0x8915
        ifreq = struct.pack('16sH14s', iface, socket.AF_INET, '\x00'*14)
        try:
            res = fcntl.ioctl(sockfd, SIOCGIFADDR, ifreq)
        except:
            return None
        ip = struct.unpack('16sH2x4s8x', res)[2]
        return socket.inet_ntoa(ip)

    #list the names of all containers, running or stopped
    def docker_container_all():
        docker_container=docker_client.containers(all=True)
        container_name=[]
        container_stop_name=[]
        for i in docker_container:
            container_name.append(i['Names'])
        for b in container_name:
            for c in b:
                #strip the leading '/' from each name
                container_stop_name.append(c[1:])
        return container_stop_name

    #pick the cpu thread (and slot on that thread) for the next container
    def get_now_cpu_core():
        #get recycle cpu use in etcd
        recycle_cpu_key='%s%s/resource_limit/cpu_limit/now_cpu_core_avaiable'%(docker_etcd_key,local_ip)
        try:
            all_recycle_cpu_result=eval(etcd_client.read(recycle_cpu_key).value)
        except etcd.EtcdKeyNotFound:
            all_recycle_cpu_result=''
        if len(all_recycle_cpu_result) > 0:
            #reuse a 'core-share' slot released by a deleted container
            now_core={}
            now_core['now_core']=all_recycle_cpu_result[0].split('-')[0]
            now_core['now_core_share']=all_recycle_cpu_result[0].split('-')[1]
            all_recycle_cpu_result.remove(all_recycle_cpu_result[0])
            etcd_client.write(recycle_cpu_key,all_recycle_cpu_result)
            return now_core
        else:
            #get local core use in etcd
            now_core={'count':cpu_core,'now_core':cpu_core-1,'core_share_limit':cpu_core_share_limit,'now_core_share':-1}
            now_core_key='%s%s/resource_limit/cpu_limit/now_cpu_core'%(docker_etcd_key,local_ip)
            try:
                now_core_use=eval(etcd_client.read(now_core_key).value)
            except (KeyError, etcd.EtcdKeyNotFound):
                now_core_use=now_core
                etcd_client.write(now_core_key,now_core)
                time.sleep(1)
            if int(now_core_use['now_core']) == int((cpu_core_retain - 1)):
                msg={'status':1,'resource':'cpu','result':'the avaiable cpu core is less than retain:%s,i can not create new container!'%cpu_core_retain}
                print(msg)
                sys.exit(1)
            else:
                if int(now_core_use['now_core_share']+1) >= int(now_core_use['core_share_limit']):
                    #current thread is full, move down to the next one
                    now_core_use['now_core']=now_core_use['now_core']-1
                    if int(now_core_use['now_core']) == int((cpu_core_retain - 1)):
                        msg={'status':1,'resource':'cpu','result':'the avaiable cpu core is less than retain:%s,i can not create new container!'%cpu_core_retain}
                        print(msg)
                        sys.exit(1)
                    else:
                        now_core_use['now_core_share']=0
                else:
                    now_core_use['now_core_share']=now_core_use['now_core_share']+1
                etcd_client.write(now_core_key,now_core_use)
                return now_core_use

    def get_container_ip():
        #get avaiable container ip
        recycle_ip_key='%scontainer/now_ip_avaiable'%docker_etcd_key
        try:
            all_recycle_ip_result=eval(etcd_client.read(recycle_ip_key).value)
        except etcd.EtcdKeyNotFound:
            all_recycle_ip_result=''
        if len(all_recycle_ip_result) > 0:
            now_recycle_ip=all_recycle_ip_result[0]
            all_recycle_ip_result.remove(all_recycle_ip_result[0])
            etcd_client.write(recycle_ip_key,all_recycle_ip_result)
            return now_recycle_ip
        else:
            #get new container ip
            try:
                now_container_ip=etcd_client.read('%scontainer/now_ip'%(docker_etcd_key)).value
            except (KeyError, etcd.EtcdKeyNotFound):
                now_container_ip=default_container_ip
            ip=now_container_ip.split('/')[0]
            netmask=now_container_ip.split('/')[1]
            if int(ip.split('.')[-1]) <254:
                new_container_ip='.'.join(ip.split('.')[0:3])+'.'+str(int(ip.split('.')[-1])+1)+'/'+netmask
            elif int(ip.split('.')[-1]) >=254 and int(ip.split('.')[-2]) <254:
                if int(ip.split('.')[-1]) ==254:
                    last_ip='1'
                else:
                    #str() added: the original concatenated an int to a string here
                    last_ip=str(int(ip.split('.')[-1])+1)
                new_container_ip='.'.join(ip.split('.')[0:2])+'.'+str(int(ip.split('.')[-2])+1)+'.'+last_ip+'/'+netmask
            elif int(ip.split('.')[-2]) <=254 and int(ip.split('.')[-3])<254:
                if int(ip.split('.')[-1]) == 254:
                    last_ip='1'
                else:
                    last_ip=str(int(ip.split('.')[-1])+1)
                new_container_ip='.'.join(ip.split('.')[0:2])+'.'+str(int(ip.split('.')[2])+1)+'.'+last_ip+'/'+netmask
            elif int(ip.split('.')[-2]) ==255 and int(ip.split('.')[-1])==254:
                print('now ip had more than ip pool!')
                sys.exit(1)
            etcd_client.write('%scontainer/now_ip'%docker_etcd_key,new_container_ip)
            return new_container_ip

    if __name__ == "__main__":
        bridge='ovs2'
        default_physics_ip='172.16.0.1/16'
        default_container_ip='172.16.1.1/16'
        email='244979152@qq.com'
        local_ip=get_local_ip('ovs1')
        if local_ip is None:
            local_ip=get_local_ip('em1')
        docker_etcd_key='/app/docker/'
        #read the positional arguments
        try:
            container=sys.argv[1]
            if container == "-h" or container == "-help":
                print('Usage: container_name container_image command')
                sys.exit(1)
        except IndexError:
            print('please input container name!')
            sys.exit(1)
        try:
            images=sys.argv[2]
        except IndexError:
            print('please input images!')
            sys.exit(1)
        try:
            daemon_program=sys.argv[3]
        except IndexError:
            print('please input run image command!')
            sys.exit(1)
        try:
            memory_limit=sys.argv[4]
        except IndexError:
            print('please input memory limit where unit = b, k, m or g!')
            sys.exit(1)
        try:
            network_limit=sys.argv[5]
        except IndexError:
            print('please input network limit where unit = M!')
            sys.exit(1)
        try:
            ssh_method=sys.argv[6]
        except IndexError:
            print('please input container ssh auth method by key or passwd!')
            sys.exit(1)
        try:
            ssh_auth=sys.argv[7]
        except IndexError:
            print('please input container ssh auth passwd or key_context!')
            sys.exit(1)
        try:
            ulimit=sys.argv[8]
        except IndexError:
            ulimit=65535
        etcd_client=etcd.Client(host='127.0.0.1', port=4001)
        cpu_core=cpu_count()
        cpu_core_retain=2
        cpu_core_share_limit=5
        local_dir=sys.path[0]
        #get local core use in etcd
        now_core=get_now_cpu_core()
        docker_client = Client(base_url='unix://var/run/docker.sock', version='1.15', timeout=10)
        docker_container_all_name=docker_container_all()
        #check input container exist
        if container in docker_container_all_name:
            print('The container %s is exist!'%container)
            sys.exit(1)
        #check pipework software exist
        check_pipework_status=((subprocess.Popen("which pipework &>>/dev/null && echo 0 || echo 1",shell=True,stdout=subprocess.PIPE)).stdout.readlines()[0]).strip('\n')
        if check_pipework_status != "0":
            print('I can not find pipework in this host!')
            sys.exit(1)
        #check ovs bridge
        check_bridge_status=((subprocess.Popen("ovs-vsctl list-br |grep %s &>>/dev/null && echo 0 || echo 1"%bridge,shell=True,stdout=subprocess.PIPE)).stdout.readlines()[0]).strip('\n')
        if check_bridge_status !="0":
            subprocess.Popen("ovs-vsctl add-br %s"%bridge,shell=True,stdout=subprocess.PIPE)
        #check default physics ip
        try:
            default_physics_ip=etcd_client.read('%sphysics/now_ip'%(docker_etcd_key)).value
        except (KeyError, etcd.EtcdKeyNotFound):
            etcd_client.write('%sphysics/now_ip'%docker_etcd_key,default_physics_ip)
            time.sleep(1)
        #check physics ip
        try:
            new_physics_ip=etcd_client.read('%sphysics/%s'%(docker_etcd_key,local_ip)).value
        except (KeyError, etcd.EtcdKeyNotFound):
            #this host has no gateway ip yet: take the next one from the pool
            now_physics_ip=etcd_client.read('%sphysics/now_ip'%(docker_etcd_key)).value
            ip=now_physics_ip.split('/')[0]
            netmask=now_physics_ip.split('/')[1]
            if int(ip.split('.')[-1]) <254:
                new_physics_ip='.'.join(ip.split('.')[0:3])+'.'+str(int(ip.split('.')[-1])+1)+'/'+netmask
            elif int(ip.split('.')[-1]) >=254:
                print('physics gateway ip more than set up ip pool!')
                sys.exit(1)
            etcd_client.write('%sphysics/now_ip'%docker_etcd_key,new_physics_ip)
            etcd_client.write('%sphysics/%s'%(docker_etcd_key,local_ip),new_physics_ip)
        #set physics ip
        #run_command="awk -v value=`ifconfig %s|grep inet|grep -v inet6|awk '{print $2}'|wc -l` 'BEGIN{if(value<1) {system('/sbin/ifconfig %s %s')}}'"%(bridge,bridge,new_physics_ip)
        set_physics_status=((subprocess.Popen("/sbin/ifconfig %s %s && echo 0 || echo 1"%(bridge,new_physics_ip),shell=True,stdout=subprocess.PIPE)).stdout.readlines()[0]).strip('\n')
        if set_physics_status !="0":
            print('config local physics ip:%s is fail!'%new_physics_ip)
            sys.exit(1)
        #compare (major, minor) as integers; float('1.1') would misread 1.10 as older than 1.6
        docker_version=tuple(int(x) for x in docker_client.version()['Version'].split('.')[:2])
        if docker_version >= (1, 6):
            cpu_tag='--cpuset-cpus'
            container_ulimit="--ulimit nofile=%s:%s"%(ulimit,ulimit)
        else:
            cpu_tag='--cpuset'
            container_ulimit=''
        #create new container
        get_container_id=((subprocess.Popen("docker run --restart always %s -d -m %s --memory-swap=%s %s=%s --net='none' --name='%s' %s %s"%(container_ulimit,memory_limit,memory_limit,cpu_tag,now_core['now_core'],container,images,daemon_program),shell=True,stdout=subprocess.PIPE)).stdout.readlines()[0]).strip('\n')
        check_container_status=((subprocess.Popen("docker inspect %s|grep Id &>>/dev/null && echo 0 || echo 1"%(container),shell=True,stdout=subprocess.PIPE)).stdout.readlines()[0]).strip('\n')
        if check_container_status !="0":
            print('Create container %s is fail!'%container)
            sys.exit(1)
        #get container ip
        new_container_ip=get_container_ip()
        #check container network
        new_physics_ip=new_physics_ip.split('/')[0]
        set_container_status=((subprocess.Popen("`which pipework` %s %s %s@%s &>>/dev/null && echo 0 || echo 1"%(bridge,container,new_container_ip,new_physics_ip),shell=True,stdout=subprocess.PIPE)).stdout.readlines()[0]).strip('\n')
        if set_container_status != "0":
            print('config container:%s network is fail!'%container)
            sys.exit(1)
        #set container network limit
        set_container_network_limit=((subprocess.Popen("/bin/bash %s/modify_docker_container_network_limit.sh limit %s %s &>>/dev/null && echo 0 || echo 1"%(local_dir,container,network_limit),shell=True,stdout=subprocess.PIPE)).stdout.readlines()[0]).strip('\n')
        if set_container_network_limit != "0":
            print('config container:%s network limit:%s is fail!'%(container,network_limit))
            sys.exit(1)
        #write container network limit to etcd
        etcd_client.write('%s%s/resource_limit/network_limit/%s'%(docker_etcd_key,local_ip,container),network_limit)
        #modify container ssh auth
        if ssh_method == 'key':
            cmd='docker exec %s sh -c "echo \'%s\' >>/root/.ssh/authorized_keys && echo 0 || echo 1"'%(container,ssh_auth)
        elif ssh_method == 'passwd':
            cmd='docker exec %s sh -c "echo "%s" | passwd --stdin root &>>/dev/null && echo 0 || echo 1"'%(container,ssh_auth)
        check_ssh_status=((subprocess.Popen(cmd,shell=True,stdout=subprocess.PIPE)).stdout.readlines()[0]).strip('\n')
        if check_ssh_status != "0":
            print('config container:%s ssh auth method:%s is fail!'%(container,ssh_method))
            #roll back: stop and remove the half-configured container
            delete_cmd='docker rm $(docker stop %s)'%container
            delete_ssh_status=((subprocess.Popen(delete_cmd,shell=True,stdout=subprocess.PIPE)).stdout.readlines()[0]).strip('\n')
            sys.exit(1)
        #write container info to etcd
        key='%s%s/container/%s'%(docker_etcd_key,local_ip,container)
        value={'Physics_ip':'%s'%local_ip,'Container_id':get_container_id,'Container_name':container,'Container_ip':'%s'%new_container_ip,'Container_gateway_bridge':bridge,'Container_gateway_ip':new_physics_ip,'Container_create':time.strftime('%Y.%m.%d-%T'),'Container_status':'running','Cpu_core':now_core['now_core'],'Cpu_share':now_core['now_core_share'],'Memory_limit':memory_limit,'Network_limit':network_limit}
        etcd_client.write(key,value)
        #print 'create container:%s is success.follow is container info.'%container
        print(value)

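The script above, create_docker_container_multi.py, creates a container and applies its resource limits.

The following script, modify_docker_container_network_limit.sh, applies the network limit; create_docker_container_multi.py calls it.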
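The address arithmetic in get_container_ip is done by splitting strings on dots. As a hedged alternative, and not the author's method, the same "next free address" step can be sketched with Python 3's standard `ipaddress` module. The function name is hypothetical; like the original, the sketch skips addresses whose last octet is 0 or 255.

```python
import ipaddress


def next_container_ip(current_cidr):
    """Return the address after `current_cidr`, keeping the prefix length.

    Addresses ending in .0 or .255 are skipped, and a ValueError is raised
    once the next candidate falls outside the network.
    """
    iface = ipaddress.ip_interface(current_cidr)
    candidate = iface.ip + 1
    while int(candidate) & 0xFF in (0, 255):
        candidate += 1
    if candidate not in iface.network:
        raise ValueError("address pool %s is exhausted" % iface.network)
    return "%s/%d" % (candidate, iface.network.prefixlen)


print(next_container_ip("172.16.1.1/16"))    # 172.16.1.2/16
print(next_container_ip("172.16.1.254/16"))  # 172.16.2.1/16
```

Letting the library carry the octet roll-over removes the need for separate branches per octet and makes the pool-exhausted case explicit.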

[root@ip-10-10-125-8 docker_code]# cat modify_docker_container_network_limit.sh

    #!/bin/bash
    #filename:modify_docker_container_network_limit.sh
    #author:Deng Lei
    #email:dl528888@gmail.com
    op=$1
    container=$2
    limit=$3 # Mbits/s
    if [ -z "$1" ] || [ -z "$2" ]; then
        echo "Usage: operation container_name limit(default:5m)"
        echo "Example1: I want limit 5m in the container:test"
        echo "The command is: bash `basename $0` limit test 5"
        echo "Example2: I want delete network limit in the container:test"
        echo "The command is: bash `basename $0` ulimit test"
        exit 1
    fi
    if [ -z "$3" ];then
        # plain number (Mbit/s): the value is used in $(( )) arithmetic below,
        # where the original default of '5m' would fail
        limit=5
    fi
    if [ `docker inspect --format "{{.State.Pid}}" $container &>>/dev/null && echo 0 || echo 1` -eq 1 ];then
        echo "no this container:$container"
        exit 1
    fi
    ovs_prefix='veth1pl'
    # despite its name, container_id holds the PID of the container's main process
    container_id=`docker inspect --format "{{.State.Pid}}" $container`
    device_name=`echo ${ovs_prefix}${container_id}`
    docker_etcd_key='/app/docker/'
    local_ip=`ifconfig ovs1|grep inet|awk '{print $2}'|head -n1`
    if [ "$op" == 'limit' ];then
        for v in $device_name; do
            ovs-vsctl set interface $v ingress_policing_rate=$((limit*1000))
            ovs-vsctl set interface $v ingress_policing_burst=$((limit*100))
            ovs-vsctl set port $v qos=@newqos -- --id=@newqos create qos type=linux-htb queues=0=@q0 other-config:max-rate=$((limit*1000000)) -- --id=@q0 create queue other-config:min-rate=$((limit*1000000)) other-config:max-rate=$((limit*1000000)) &>>/dev/null && echo '0' || echo '1'
        done
        #save result to etcd
        `which etcdctl` set ${docker_etcd_key}${local_ip}/resource_limit/network_limit/${container} $limit &>>/dev/null
    elif [ "$op" == 'ulimit' ];then
        for v in $device_name; do
            ovs-vsctl set interface $v ingress_policing_rate=0
            ovs-vsctl set interface $v ingress_policing_burst=0
            ovs-vsctl clear Port $v qos &>>/dev/null && echo '0' || echo '1'
        done
        `which etcdctl` rm ${docker_etcd_key}${local_ip}/resource_limit/network_limit/${container} &>>/dev/null
    fi
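To show how the single Mbit/s figure fans out into the three values the shell script passes to ovs-vsctl, here is a small Python sketch that builds the same commands as argument lists. The function name and the device name in the example are illustrative assumptions; the ovs-vsctl arguments themselves are copied from the script above.

```python
def ovs_limit_commands(device, mbit):
    """ovs-vsctl argvs that cap `device` at `mbit` Mbit/s, as in the script."""
    rate_kbps = mbit * 1000      # ingress_policing_rate, as limit*1000
    burst_kb = mbit * 100        # ingress_policing_burst, as limit*100
    rate_bps = mbit * 1000000    # HTB min-rate/max-rate, as limit*1000000
    return [
        ["ovs-vsctl", "set", "interface", device,
         "ingress_policing_rate=%d" % rate_kbps],
        ["ovs-vsctl", "set", "interface", device,
         "ingress_policing_burst=%d" % burst_kb],
        ["ovs-vsctl", "set", "port", device, "qos=@newqos", "--",
         "--id=@newqos", "create", "qos", "type=linux-htb", "queues=0=@q0",
         "other-config:max-rate=%d" % rate_bps, "--",
         "--id=@q0", "create", "queue",
         "other-config:min-rate=%d" % rate_bps,
         "other-config:max-rate=%d" % rate_bps],
    ]


# "veth1pl37242" is a made-up device name: the prefix plus a container PID.
for argv in ovs_limit_commands("veth1pl37242", 1):
    print(" ".join(argv))
```

Ingress policing limits traffic that the container sends, while the HTB queue shapes traffic going to it, which is why the script sets both.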
