【转载】 拒绝遗忘:高效的动态规划算法 —— “到底什么是动态规划”—— An intro to Algorithms: Dynamic Programming

原文地址(英文):

https://medium.freecodecamp.org/an-intro-to-algorithms-dynamic-programming-dd00873362bb

PS: 本文讲的很通俗易懂,搞计算机十多年了,头一次遇到能把这个问题讲的如此清楚的文章。

-----------------------------------------------------------------------------------------------

英文原版:

Suppose you are doing some calculation using an appropriate series of input. There is some computation done at every instance to derive some result. You don’t know that you had encountered the same output when you had supplied the same input. So it’s like you are doing re-computation of a result that was previously achieved by specific input for its respective output.

But what’s the problem here? Thing is that your precious time is wasted. You can easily solve the problem here by keeping records that map previously computed results. Such as using the appropriate data structure. For example, you could store input as key and output as a value (part of mapping).

Those who cannot remember the past are condemned to repeat it.

~Dynamic Programming

Now by analyzing the problem, store its input if it’s new (or not in the data structure) with its respective output. Else check that input key and get the resultant output from its value. That way when you do some computation and check if that input existed in that data structure, you can directly get the result. Thus we can relate this approach to dynamic programming techniques.

Diving into dynamic programming

In a nutshell, we can say that dynamic programming is used primarily for optimizing problems, where we wish to find the “best” way of doing something.

A certain scenario is like there are re-occurring subproblems which in turn have their own smaller subproblems. Instead of trying to solve those re-appearing subproblems, again and again, dynamic programming suggests solving each of the smaller subproblems only once. Then you record the results in a table from which a solution to the original problem can be obtained.

For instance, the Fibonacci numbers 0,1,1,2,3,5,8,13,… have a simple description where each term is related to the two terms before it. If F(n) is the nth term of this series then we have F(n) = F(n-1) + F(n-2). This is called a recursive formula or a recurrence relation. It needs earlier terms to have been computed in order to compute a later term.

The majority of Dynamic Programming problems can be categorized into two types:

- Optimization problems.

- Combinatorial problems.

The optimization problems expect you to select a feasible solution so that the value of the required function is minimized or maximized. Combinatorial problems expect you to figure out the number of ways to do something or the probability of some event happening.

An approach to solve: top-down vs bottom-up

There are the following two main different ways to solve the problem:

Top-down: You start from the top, solving the problem by breaking it down. If you see that the problem has been solved already, then just return the saved answer. This is referred to as Memoization.

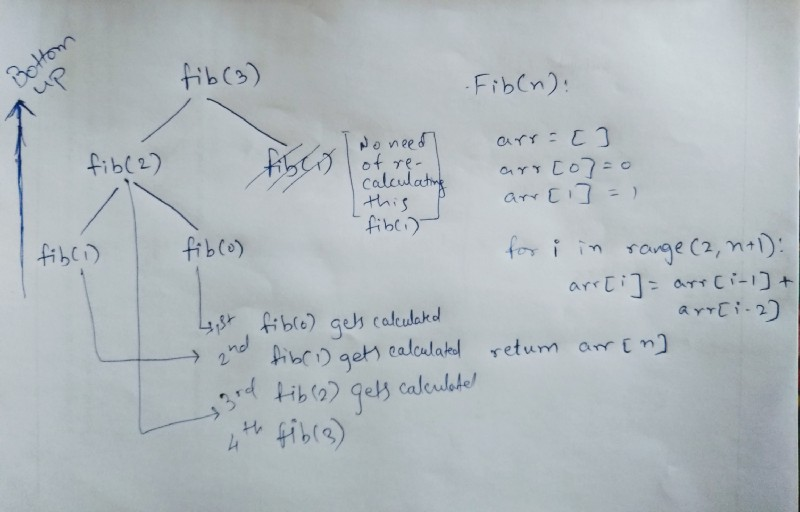

Bottom-up: You directly start solving the smaller subproblems making your way to the top to derive the final solution of that one big problem. In this process, it is guaranteed that the subproblems are solved before solving the problem. This can be called Tabulation (table-filling algorithm).

In reference to iteration vs recursion, bottom-up uses iteration and the top-down uses recursion.

The visualization displayed in the image is not correct acc. to theoretical knowledge, but I have displayed in an understandable manner

Here there is a comparison between a naive approach vs a DP approach. You can see the difference by the time complexity of both.

Memoization: Don’t forget

Jeff Erickson describes in his notes, for Fibonacci numbers:

The obvious reason for the recursive algorithm’s lack of speed is that it computes the same Fibonacci numbers over and over and over.

From Jeff Erickson’s notes CC: http://jeffe.cs.illinois.edu/

We can speed up our recursive algorithm considerably just by writing down the results of our recursive calls. Then we can look them up again if we need them later.

Memoization refers to the technique of caching and reusing previously computed results.

If you use memoization to solve the problem, you do it by maintaining a map of already solved subproblems (as we earlier talked about the mapping of key and value). You do it “top-down” in the sense that you solve the “top” problem first (which typically recurses down to solve the sub-problems).

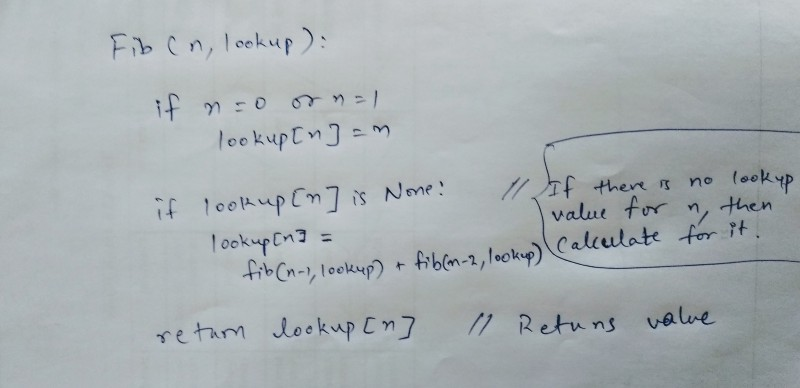

Pseudocode for memoization:

So using recursion, we perform this with extra overhead memory (i.e. here lookup) to store results. If there is a value stored in the lookup, we return it directly or we add it to lookup for that specific index.

Remember that there is a tradeoff of extra overhead with respect to the tabulation method.

However, if you want more visualizations for memoization, then I suggest looking into this video.

In a top-down manner.

Tabulation: Filling up in tabular form

But once we see how the array (memoized solution) is filled, we can replace the recursion with a simple loop that intentionally fills the array in order, instead of relying on the complicated recursion to do it for us ‘accidentally’.

From Jeff Erickson’s notes CC: http://jeffe.cs.illinois.edu/

Tabulation does it in “bottom-up” fashion. It’s more straight forward, it does compute all values. It requires less overhead as it does not have to maintain mapping and stores data in tabular form for each value. It may also compute unnecessary values. This can be used if all you want is to compute all values for your problem.

Pseudocode for tabulation:

Pseudocode with Fibonacci tree

Looking back in history

Richard bellman was the man behind this concept. He came up with this when he was working for RAND Corporation in the mid-1950s. The reason he chose this name “dynamic programming” was to hide the mathematics work he did for this research. He was afraid his bosses would oppose or dislike any kind of mathematical research.

Okay, so the word ‘programming’ is just a reference to clarify that this was an old-fashioned way of planning or scheduling, typically by filling in a table (in a dynamic manner rather than in a linear way) over the time rather than all at once.

Wrapping up

That’s it. This is part 2 of the algorithm series I started last year. In my previous post, we discussed about what are searching and sorting algorithms. Apologies that I couldn’t deliver this in a shorter time. But I am willing to make things faster in the coming months.

Hope you liked it and I’ll be soon looking to add a third one in the series soon. Happy coding!

Resources:

Introduction to Dynamic Programming 1 Tutorials & Notes | Algorithms | HackerEarth

The image above says a lot about Dynamic Programming. So, is repeating the things for which you already have the…www.hackerearth.comCommunity — Competitive Programming — Competitive Programming Tutorials — Dynamic Programming: From…

Community — Competitive Programming — Competitive Programming Tutorials — Dynamic Programming: From Novice to Advancedwww.topcoder.com

https://www.geeksforgeeks.org/overlapping-subproblems-property-in-dynamic-programming-dp-1/

Special props to Jeff Erickson and his notes for algorithm — http://jeffe.cs.illinois.edu/

---------------------------------------------------------------------------------------------

*tabulation*的伪代码:

-----------------------------------------------------------------------------------------------

【转载】 拒绝遗忘:高效的动态规划算法 —— “到底什么是动态规划”—— An intro to Algorithms: Dynamic Programming的更多相关文章

- 动态规划(Dynamic Programming)算法与LC实例的理解

动态规划(Dynamic Programming)算法与LC实例的理解 希望通过写下来自己学习历程的方式帮助自己加深对知识的理解,也帮助其他人更好地学习,少走弯路.也欢迎大家来给我的Github的Le ...

- (转载)LCA问题的Tarjan算法

转载自:Click Here LCA问题(Lowest Common Ancestors,最近公共祖先问题),是指给定一棵有根树T,给出若干个查询LCA(u, v)(通常查询数量较大),每次求树T中两 ...

- 动态规划 算法(DP)

多阶段决策过程(multistep decision process)是指这样一类特殊的活动过程,过程可以按时间顺序分解成若干个相互联系的阶段,在每一个阶段都需要做出决策,全部过程的决策是一个决策序列 ...

- [算法]动态规划(Dynamic programming)

转载请注明原创:http://www.cnblogs.com/StartoverX/p/4603173.html Dynamic Programming的Programming指的不是程序而是一种表格 ...

- 算法导论——lec 11 动态规划及应用

和分治法一样,动态规划也是通过组合子问题的解而解决整个问题的.分治法是指将问题划分为一个一个独立的子问题,递归地求解各个子问题然后合并子问题的解而得到原问题的解.与此不同,动态规划适用于子问题不是相互 ...

- 以计算斐波那契数列为例说说动态规划算法(Dynamic Programming Algorithm Overlapping subproblems Optimal substructure Memoization Tabulation)

动态规划(Dynamic Programming)是求解决策过程(decision process)最优化的数学方法.它的名字和动态没有关系,是Richard Bellman为了唬人而取的. 动态规划 ...

- 动态规划算法(Dynamic Programming,简称 DP)

动态规划算法(Dynamic Programming,简称 DP) 浅谈动态规划 动态规划算法(Dynamic Programming,简称 DP)似乎是一种很高深莫测的算法,你会在一些面试或算法书籍 ...

- 动态规划算法详解 Dynamic Programming

博客出处: https://blog.csdn.net/u013309870/article/details/75193592 前言 最近在牛客网上做了几套公司的真题,发现有关动态规划(Dynamic ...

- Python算法之动态规划(Dynamic Programming)解析:二维矩阵中的醉汉(魔改版leetcode出界的路径数)

原文转载自「刘悦的技术博客」https://v3u.cn/a_id_168 现在很多互联网企业学聪明了,知道应聘者有目的性的刷Leetcode原题,用来应付算法题面试,所以开始对这些题进行" ...

- 一个快速、高效的Levenshtein算法实现——代码实现

在网上看到一篇博客讲解Levenshtein的计算,大部分内容都挺好的,只是在一些细节上不够好,看了很长时间才明白.我对其中的算法描述做了一个简单的修改.原文的链接是:一个快速.高效的Levensht ...

随机推荐

- ETL工具-nifi干货系列 第十四讲 nifi处理器PublishKafka实战教程

1.kettle的kafka生产者叫kafka producer,nifi中的相应处理器为PublishKafka,如下图所示: 可以很清楚的看到PublishKafka处理器支持多个版本的kafka ...

- nginx rewrite实践

nginx rewrite跳转(高级) 官网 https://nginx.org/en/docs/http/ngx_http_rewrite_module.html 该ngx_http_rewrite ...

- AlexNet论文解读

前言 作为深度学习的开山之作AlexNet,确实给后来的研究者们很大的启发,使用神经网络来做具体的任务,如分类任务.回归(预测)任务等,尽管AlexNet在今天看来已经有很多神经网络超越了它,但是 ...

- 手把手教你搭建Docker私有仓库Harbor

1.什么是Docker私有仓库 Docker私有仓库是用于存储和管理Docker镜像的私有存储库.Docker默认会有一个公共的仓库Docker Hub,而与Docker Hub不同,私有仓库是受限访 ...

- 《Android开发卷——实时监听文本框输入》

在实际开发中,有时候会让用户发布一些类似微博.说说的东西,但是这个是有限制长度的,除了在文本输入框限制长度外,还要在旁边有一条提示还能输入多少个字的"友好提示". 1.文本框 ...

- Python3.7+Robot Framework+RIDE1.7.4.1安装使用教程

一.解惑:Robot Framewprk今天我们聊一聊,Robot Framework被众多测试工程师误会多年的秘密.今天我们一起来揭秘一下,最近经常在各大群里听到许多同行,在拿Robot Frame ...

- C#.NET与JAVA互通之DES加密V2024

C#.NET与JAVA互通之DES加密V2024 配置视频: 环境: .NET Framework 4.6 控制台程序 JAVA这边:JDK8 (1.8) 控制台程序 注意点: 1.由 ...

- [AGC030C] Coloring Torus

非常巧妙的一道构造题,发现对于所构造的 \(n\) 有上限,那么对于 \(K<=500\) 的情况,很好构造,每行全是一个数就行了,对于 \(K>500\) 的情况,显然每行都是 \(1, ...

- Go 使用原始套接字捕获网卡流量

Go 使用原始套接字捕获网卡流量 Go 捕获网卡流量使用最多的库为 github.com/google/gopacket,需要依赖 libpcap 导致必须开启 CGO 才能够进行编译. 为了减少对环 ...

- 全网最适合入门的面向对象编程教程:08 类和对象的Python实现-@property装饰器:把方法包装成属性

全网最适合入门的面向对象编程教程:08 类和对象的 Python 实现-@property 装饰器:把方法包装成属性 摘要: 本文主要对@property 装饰器的基本定义.使用场景和使用方法进行了介 ...