Paper Reading - Convolutional Sequence to Sequence Learning ( CoRR 2017 ) ★

Link of the Paper: https://arxiv.org/abs/1705.03122

Motivation:

- Compared to recurrent layers, convolutions create representations for fixed-size contexts. However, the effective context size of the network can easily be made larger by stacking several layers on top of each other, which allows precise control over the maximum length of the dependencies to be modeled. Convolutional networks do not depend on the computations of the previous time step and therefore allow parallelization over every element in a sequence. This contrasts with RNNs, which maintain a hidden state of the entire past that prevents parallel computation within a sequence.

- Multi-layer convolutional neural networks create hierarchical representations over the input sequence in which nearby input elements interact at lower layers while distant elements interact at higher layers. This hierarchical structure provides a shorter path for capturing long-range dependencies than the chain structure modeled by recurrent networks. Inputs to a convolutional network are fed through a constant number of kernels and non-linearities, whereas recurrent networks apply up to n operations and non-linearities to the first word and only a single set of operations to the last word. Fixing the number of non-linearities applied to the inputs also eases learning.
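
The growth of the effective context with depth can be made concrete with a small sketch. It assumes stride-1, undilated convolutions that all share one kernel width; the function names `receptive_field` and `layers_to_cover` are hypothetical helpers for illustration, not part of the paper's code.

```python
import math


def receptive_field(num_layers: int, kernel_width: int) -> int:
    """Input elements visible to one state after stacking `num_layers`
    stride-1, undilated convolutions of width `kernel_width`."""
    if kernel_width < 2:
        raise ValueError("kernel_width must be at least 2")
    # Every additional layer widens the context by (kernel_width - 1).
    return num_layers * (kernel_width - 1) + 1


def layers_to_cover(span: int, kernel_width: int) -> int:
    """Smallest number of stacked layers whose receptive field covers
    `span` input elements."""
    if kernel_width < 2:
        raise ValueError("kernel_width must be at least 2")
    if span <= 1:
        return 0
    return math.ceil((span - 1) / (kernel_width - 1))


print(receptive_field(num_layers=6, kernel_width=5))  # 25
print(layers_to_cover(span=25, kernel_width=5))       # 6
```

Under these assumptions, the number of layers needed to relate two elements grows roughly as n/k, whereas a recurrent chain needs on the order of n steps.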

Innovation:

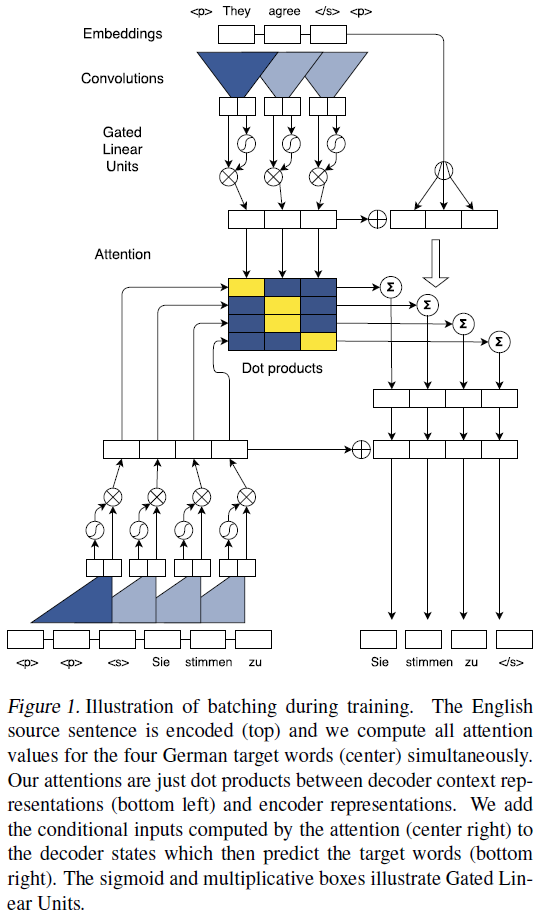

- An architecture for Seq2Seq modeling based entirely on convolutional neural networks. Both encoder and decoder networks share a simple block structure that computes intermediate states based on a fixed number of input elements. Each block contains a one-dimensional convolution followed by a non-linearity. For a decoder network with a single block and kernel width $k$, each resulting state $h_i^1$ contains information over $k$ input elements. Stacking several blocks on top of each other increases the number of input elements represented in a state. (In that it widens the context each state covers, stacking plays a role loosely similar to pooling.)

- Position Embeddings: Input elements $x = (x_1, \dots, x_m)$ are embedded in distributional space as $w = (w_1, \dots, w_m)$, where $w_j \in \mathbb{R}^f$ is a column in an embedding matrix $D \in \mathbb{R}^{V \times f}$. The authors also equip the model with a sense of order by embedding the absolute position of input elements, $p = (p_1, \dots, p_m)$ where $p_j \in \mathbb{R}^f$. Both are combined to obtain input element representations $e = (w_1 + p_1, \dots, w_m + p_m)$. Position embeddings are useful in the architecture since they give the model a sense of which portion of the input or output sequence it is currently dealing with.

- The authors introduce a separate attention mechanism for each decoder layer.
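
The position-embedding step described above can be sketched in a few lines of NumPy. The sizes `V`, `max_len`, and `f`, the random initialization, and the helper name `embed` are assumptions made for illustration; in a trained model both tables would be learned parameters.

```python
import numpy as np

rng = np.random.default_rng(0)
V, max_len, f = 1000, 50, 8  # hypothetical vocabulary size, max length, embedding size

D = rng.normal(scale=0.1, size=(V, f))        # word embeddings, one vector per vocabulary entry
P = rng.normal(scale=0.1, size=(max_len, f))  # absolute position embeddings, one vector per position


def embed(token_ids):
    """Return e = (w_1 + p_1, ..., w_m + p_m) as an array of shape (m, f)."""
    token_ids = np.asarray(token_ids)
    positions = np.arange(len(token_ids))
    return D[token_ids] + P[positions]


e = embed([5, 42, 5])
print(e.shape)  # (3, 8)
```

The same token id at positions 0 and 2 receives different representations, which is how the otherwise order-agnostic convolutional input gains a sense of position.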

Improvement:

- The model is equipped with gated linear units ( Language Modeling with Gated Convolutional Networks - Dauphin et al., arXiv 2016 ) and residual connections ( Deep Residual Learning for Image Recognition - He et al., CVPR 2016 ).

- The authors choose gated linear units (GLUs) as the non-linearity. GLUs implement a simple gating mechanism over the output of the convolution $Y = [A\ B] \in \mathbb{R}^{2d}$: $v([A\ B]) = A \otimes \sigma(B)$, where $A, B \in \mathbb{R}^d$ are the inputs to the non-linearity, $\otimes$ is point-wise multiplication, and the output $v([A\ B]) \in \mathbb{R}^d$ is half the size of $Y$. The gates $\sigma(B)$ control which inputs $A$ of the current context are relevant. GLUs have also been reported (Dauphin et al.) to perform better than tanh-based gating in the context of language modelling.

- To enable deep convolutional networks, the authors add residual connections from the input of each convolution to the output of the block: $h_i^l = v\left( W^l \left[ h_{i-k/2}^{l-1}, \dots, h_{i+k/2}^{l-1} \right] + b_w^l \right) + h_i^{l-1}$

- For encoder networks, the authors ensure that the output of the convolutional layers matches the input length by padding the input at each layer. For decoder networks, however, they have to take care that no future information is available to the decoder. Specifically, they pad the input by $k − 1$ zero vectors on both the left and right side, and then remove $k$ elements from the end of the convolution output.
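
The three ingredients above (GLU, residual connection, and padding) can be combined into one minimal NumPy sketch of a single block. It is an illustration under stated assumptions, not the authors' implementation: the kernel layout `(k, d, 2d)`, the function names, and the random test values are hypothetical. For the decoder case the sketch pads `k - 1` zeros on the left only, a common way to keep the output the same length as the input while ensuring no state sees future positions; the paper's exact trimming count is not reproduced here.

```python
import numpy as np


def glu(y):
    """v([A B]) = A * sigmoid(B); halves the size of the last dimension."""
    a, b = np.split(y, 2, axis=-1)
    return a * (1.0 / (1.0 + np.exp(-b)))


def conv_block(h, W, b, causal=False):
    """One block: 1-D convolution -> GLU -> residual connection.

    h: (n, d) states of the previous layer
    W: (k, d, 2d) convolution kernel (layout is an assumption)
    b: (2d,) bias
    """
    n, d = h.shape
    k = W.shape[0]
    if causal:
        # Decoder: only current and past positions fall inside each window.
        left, right = k - 1, 0
    else:
        # Encoder: symmetric padding so the output length equals n.
        left = (k - 1) // 2
        right = k - 1 - left
    padded = np.concatenate([np.zeros((left, d)), h, np.zeros((right, d))], axis=0)
    # Each window of k consecutive states is mapped to a 2d-dimensional vector.
    y = np.stack([np.einsum("kd,kde->e", padded[i:i + k], W) for i in range(n)]) + b
    # The GLU halves 2d back to d, then the block input is added (residual).
    return glu(y) + h


rng = np.random.default_rng(0)
n, d, k = 6, 4, 3  # hypothetical sequence length, state size, kernel width
h = rng.normal(size=(n, d))
W = rng.normal(size=(k, d, 2 * d))
b = np.zeros(2 * d)

out = conv_block(h, W, b, causal=True)
print(out.shape)  # (6, 4)
```

Changing the last input state leaves all earlier decoder outputs untouched when `causal=True`, which is the property the decoder padding is meant to guarantee.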

General Points:

- Sequence to sequence modeling has been synonymous with recurrent neural network based encoder-decoder architectures. The encoder RNN processes an input sequence $x = (x_1, \dots, x_m)$ of $m$ elements and returns state representations $z = (z_1, \dots, z_m)$. The decoder RNN takes $z$ and generates the output sequence $y = (y_1, \dots, y_n)$ left to right, one element at a time. To generate output $y_{i+1}$, the decoder computes a new hidden state $h_{i+1}$ based on the previous state $h_i$, an embedding $g_i$ of the previous target language word $y_i$, as well as a conditional input $c_i$ derived from the encoder output $z$. Models without attention consider only the final encoder state $z_m$ by setting $c_i = z_m$ for all $i$, or simply initialize the first decoder state with $z_m$, in which case $c_i$ is not used. Architectures with attention compute $c_i$ as a weighted sum of $(z_1, \dots, z_m)$ at each time step. The weights of the sum are referred to as attention scores and allow the network to focus on different parts of the input sequence as it generates the output sequence. Attention scores are computed by essentially comparing each encoder state $z_j$ to a combination of the previous decoder state $h_i$ and the last prediction $y_i$; the result is normalized to be a distribution over input elements.
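
The attention computation described above can be sketched as follows. Two choices here are assumptions made for illustration and are not stated in the notes: the comparison is a plain dot product, and the "combination" of decoder state and last prediction is a simple sum of the state and the prediction's embedding. The names `softmax`, `attend`, `h_i`, and `g_i` are hypothetical.

```python
import numpy as np


def softmax(x):
    """Normalize scores into a distribution (shifted for numerical stability)."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def attend(query, z):
    """Compare `query` (d,) to every encoder state in `z` (m, d).

    Returns the conditional input c_i (d,) and the attention weights (m,).
    """
    scores = z @ query            # one score per encoder state z_j
    weights = softmax(scores)     # distribution over input elements
    context = weights @ z         # weighted sum of (z_1, ..., z_m)
    return context, weights


rng = np.random.default_rng(0)
m, d = 5, 4                        # hypothetical source length and state size
z = rng.normal(size=(m, d))        # encoder states
h_i = rng.normal(size=d)           # previous decoder state
g_i = rng.normal(size=d)           # embedding of the last prediction y_i

c_i, a_i = attend(h_i + g_i, z)    # the sum is an assumed way to combine the two
print(c_i.shape, a_i.shape)  # (4,) (5,)
```

Setting all weights to zero except the last one recovers the attention-free case $c_i = z_m$.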
