Starting spark-shell locally

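Spark 1.3 is a milestone release that adds many new features, so it is worth a close look. Spark 1.3 is built against Scala 2.10.x, while the default Scala on this system is 2.9, so Scala has to be upgraded first; see the guide on installing Scala 2.10.x on Ubuntu. Once the Scala environment is set up, download the CDH build of Spark.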

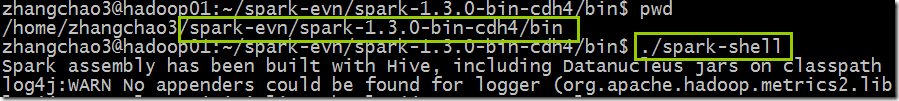

After the download finishes, extract the archive and run ./spark-shell from the bin directory:
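The extract-and-launch step can be sketched roughly as follows. The directory name is taken from the prompt in the log; the archive file name (`spark-1.3.0-bin-cdh4.tgz`) is an assumption, so adjust it to match the file you actually downloaded.

```shell
#!/bin/sh
# Directory name as it appears in the shell prompt of the startup log.
SPARK_NAME="spark-1.3.0-bin-cdh4"
# Assumed archive file name; the original download link is not preserved.
SPARK_ARCHIVE="${SPARK_NAME}.tgz"

if [ -f "$SPARK_ARCHIVE" ]; then
    # Unpack the archive, then start the interactive shell from bin/.
    tar -xzf "$SPARK_ARCHIVE"
    cd "$SPARK_NAME/bin" && ./spark-shell
else
    echo "Archive $SPARK_ARCHIVE not found; download it first."
fi
```

The guard around `tar` only keeps the script from failing when the archive is missing; it does not change how Spark is started.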

The startup log looks like this:

zhangchao3@hadoop01:~/spark-evn/spark-1.3.0-bin-cdh4/bin$ ./spark-shell

Spark assembly has been built with Hive, including Datanucleus jars on classpath

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

15/04/14 00:03:30 INFO SecurityManager: Changing view acls to: zhangchao3

15/04/14 00:03:30 INFO SecurityManager: Changing modify acls to: zhangchao3

15/04/14 00:03:30 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(zhangchao3); users with modify permissions: Set(zhangchao3)

15/04/14 00:03:30 INFO HttpServer: Starting HTTP Server

15/04/14 00:03:30 INFO Server: jetty-8.y.z-SNAPSHOT

15/04/14 00:03:30 INFO AbstractConnector: Started SocketConnector@0.0.0.0:45918

15/04/14 00:03:30 INFO Utils: Successfully started service 'HTTP class server' on port 45918.

Welcome to

          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /___/ .__/\_,_/_/ /_/\_\   version 1.3.0
          /_/

Using Scala version 2.10.4 (OpenJDK 64-Bit Server VM, Java 1.7.0_75)

Type in expressions to have them evaluated.

Type :help for more information.

15/04/14 00:03:33 WARN Utils: Your hostname, hadoop01 resolves to a loopback address: 127.0.1.1; using 172.18.147.71 instead (on interface em1)

15/04/14 00:03:33 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

15/04/14 00:03:33 INFO SparkContext: Running Spark version 1.3.0

15/04/14 00:03:33 INFO SecurityManager: Changing view acls to: zhangchao3

15/04/14 00:03:33 INFO SecurityManager: Changing modify acls to: zhangchao3

15/04/14 00:03:33 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(zhangchao3); users with modify permissions: Set(zhangchao3)

15/04/14 00:03:33 INFO Slf4jLogger: Slf4jLogger started

15/04/14 00:03:33 INFO Remoting: Starting remoting

15/04/14 00:03:33 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@172.18.147.71:51629]

15/04/14 00:03:33 INFO Utils: Successfully started service 'sparkDriver' on port 51629.

15/04/14 00:03:33 INFO SparkEnv: Registering MapOutputTracker

15/04/14 00:03:33 INFO SparkEnv: Registering BlockManagerMaster

15/04/14 00:03:33 INFO DiskBlockManager: Created local directory at /tmp/spark-d398c8f3-6345-41f9-a712-36cad4a45e67/blockmgr-255070a6-19a9-49a5-a117-e4e8733c250a

15/04/14 00:03:33 INFO MemoryStore: MemoryStore started with capacity 265.4 MB

15/04/14 00:03:33 INFO HttpFileServer: HTTP File server directory is /tmp/spark-296eb142-92fc-46e9-bea8-f6065aa8f49d/httpd-4d6e4295-dd96-48bc-84b8-c26815a9364f

15/04/14 00:03:33 INFO HttpServer: Starting HTTP Server

15/04/14 00:03:33 INFO Server: jetty-8.y.z-SNAPSHOT

15/04/14 00:03:33 INFO AbstractConnector: Started SocketConnector@0.0.0.0:56529

15/04/14 00:03:33 INFO Utils: Successfully started service 'HTTP file server' on port 56529.

15/04/14 00:03:33 INFO SparkEnv: Registering OutputCommitCoordinator

15/04/14 00:03:33 INFO Server: jetty-8.y.z-SNAPSHOT

15/04/14 00:03:33 INFO AbstractConnector: Started SelectChannelConnector@0.0.0.0:4040

15/04/14 00:03:33 INFO Utils: Successfully started service 'SparkUI' on port 4040.

15/04/14 00:03:33 INFO SparkUI: Started SparkUI at http://172.18.147.71:4040

15/04/14 00:03:33 INFO Executor: Starting executor ID <driver> on host localhost

15/04/14 00:03:33 INFO Executor: Using REPL class URI: http://172.18.147.71:45918

15/04/14 00:03:33 INFO AkkaUtils: Connecting to HeartbeatReceiver: akka.tcp://sparkDriver@172.18.147.71:51629/user/HeartbeatReceiver

15/04/14 00:03:33 INFO NettyBlockTransferService: Server created on 55429

15/04/14 00:03:33 INFO BlockManagerMaster: Trying to register BlockManager

15/04/14 00:03:33 INFO BlockManagerMasterActor: Registering block manager localhost:55429 with 265.4 MB RAM, BlockManagerId(<driver>, localhost, 55429)

15/04/14 00:03:33 INFO BlockManagerMaster: Registered BlockManager

15/04/14 00:03:34 INFO SparkILoop: Created spark context..

Spark context available as sc.

15/04/14 00:03:34 INFO SparkILoop: Created sql context (with Hive support)..

SQL context available as sqlContext.

scala>

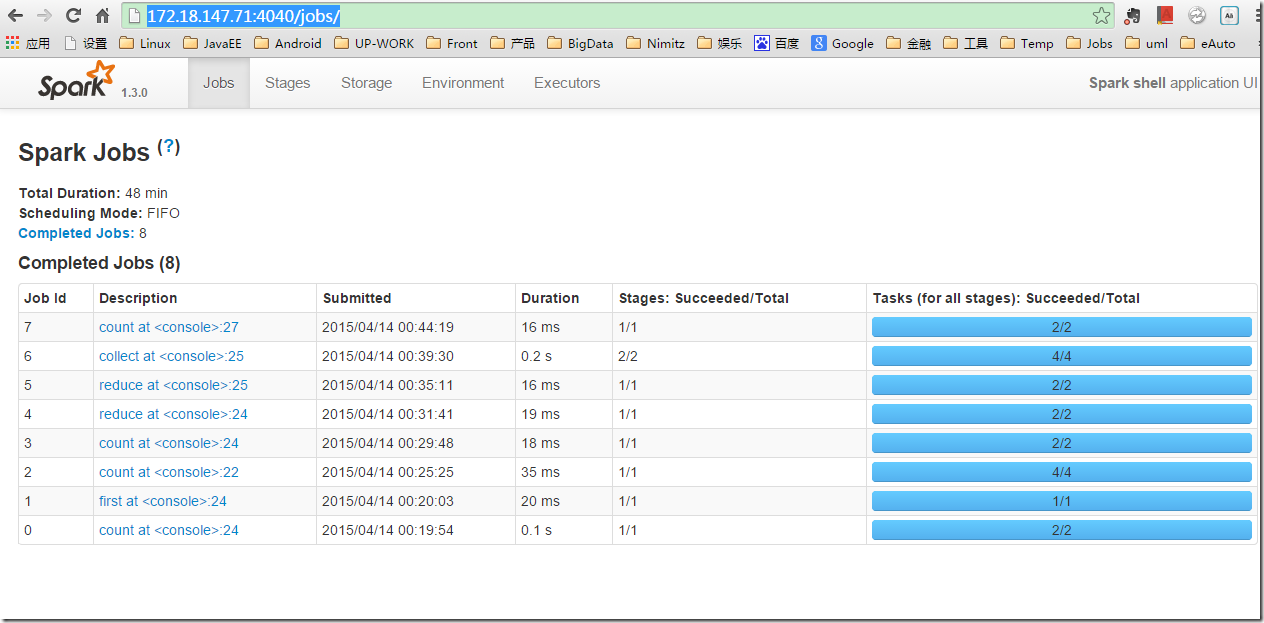

The Spark web UI at http://172.18.147.71:4040/jobs/ shows the status of the running Spark application.
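The startup log above contains two kinds of warnings: log4j reports that it has no configuration, and Spark notes that the hostname resolves to a loopback address and suggests setting `SPARK_LOCAL_IP`. A minimal sketch of one way to address both before launching, assuming the script is run from the root of the extracted Spark directory and that the distribution ships `conf/log4j.properties.template`; the IP address is the one printed in the log and will differ on other machines:

```shell
#!/bin/sh
# Bind Spark to a specific address, as the warning in the log suggests.
# 172.18.147.71 is the address reported in the log; replace it with your own.
export SPARK_LOCAL_IP="172.18.147.71"

# Assumption: conf/log4j.properties.template exists in this distribution.
# Copying it gives log4j an explicit configuration that can then be edited.
if [ -f conf/log4j.properties.template ] && [ ! -f conf/log4j.properties ]; then
    cp conf/log4j.properties.template conf/log4j.properties
fi

# Start the shell only if the launcher is actually present.
if [ -x bin/spark-shell ]; then
    bin/spark-shell
fi
```

Whether the log4j warning disappears entirely depends on the build, so treat this as a starting point and not a guaranteed fix.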
