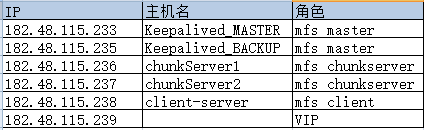

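MFS + Keepalived Dual-Node High-Availability Hot Standby: Operation Notes

A single MFS master is a single point of failure, and backing it up by hand is unreliable, so MFS is combined here with Keepalived to improve availability. Starting from the environment built in the article "Deploying a MooseFS (MFS) Distributed Shared Storage Environment on CentOS", only the following changes are needed:

1) Use master-server as Keepalived_MASTER (running mfsmaster and mfscgiserv)

2) Use the metalogger server as Keepalived_BACKUP (running mfsmaster and mfscgiserv)

3) Change the MASTER_HOST parameter on the ChunkServer servers to the VIP address

4) Change the master IP address used in the client mount to the VIP address

After these adjustments, also update the hosts bindings on Keepalived_MASTER and Keepalived_BACKUP accordingly.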

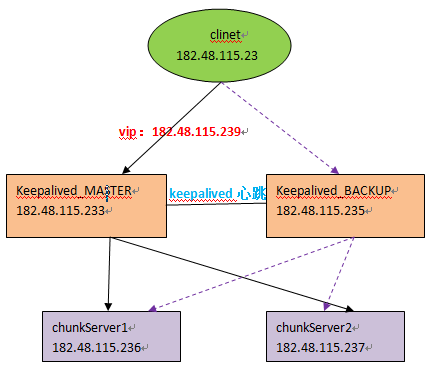

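How the solution works

1) Since version 1.6.5, a failed mfsmaster can be recovered with the mfsmetarestore command, using the changelog_ml.*.mfs log files and the metadata.mfs.back file produced by mfsmetalogger

2) The metadata.mfs.back file is fetched from mfsmaster periodically so that it can be used to recover the master

3) When Keepalived MASTER detects that the mfsmaster process has stopped, it runs the monitoring script, which starts mfsmaster automatically. If the start fails, the script force-kills the keepalived and mfscgiserv processes, which moves the VIP to the BACKUP.

4) When Keepalived MASTER recovers, it preempts the VIP back from the BACKUP and resumes serving

5) The whole switchover completes within 2 to 5 seconds, depending on the check interval.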
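Points 3 to 5 hinge on two numbers that can be worked out from the keepalived.conf shown below (priority 100/99, `weight 2`, `advert_int 1`). The sketch computes both, assuming keepalived's documented `vrrp_script` weight rules and the VRRP master-down formula from RFC 3768 (RFC 5798 scales the skew by the advertisement interval, which this sketch does; with `advert_int 1` the two agree). The function names are hypothetical helpers, not part of keepalived.

```bash
#!/bin/bash
# Sketch of the two VRRP numbers behind the failover described above.
# Inputs mirror this article's keepalived.conf (priority 100/99, weight 2, advert_int 1).

# Effective priority after a vrrp_script check (keepalived semantics):
#   weight > 0 -> weight is added while the script exits 0
#   weight < 0 -> weight is added (priority drops) while the script fails
effective_priority() {
  local base=$1 weight=$2 script_rc=$3
  if [ "$weight" -gt 0 ] && [ "$script_rc" -eq 0 ]; then
    echo $((base + weight))
  elif [ "$weight" -lt 0 ] && [ "$script_rc" -ne 0 ]; then
    echo $((base + weight))
  else
    echo "$base"
  fi
}

# How long a BACKUP waits without adverts before taking over, in integer ms:
#   Master_Down_Interval = 3 * Advert_Interval + Skew_Time
#   Skew_Time            = (256 - Priority) * Advert_Interval / 256
master_down_ms() {
  local priority=$1 advert_int=$2
  echo $(( 3 * advert_int * 1000 + (256 - priority) * advert_int * 1000 / 256 ))
}
```

With both checks passing, Keepalived_MASTER advertises 102 and Keepalived_BACKUP 101, so the MASTER keeps the VIP. `master_down_ms 99 1` gives 3613, so if keepalived on the MASTER is killed outright, the BACKUP should take over roughly 3.6 s after the last advertisement; per the RFCs, a graceful stop sends a priority-0 advertisement and the BACKUP only waits the skew time. Exact keepalived timing may vary slightly between versions.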

The architecture topology is as follows:

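1) Operations on Keepalived_MASTER (mfs master)

The installation and configuration of the mfs master server are documented in detail in another article and are not repeated here. The Keepalived installation and configuration follow: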

-----------------------------------------------------------------------------------------------------------------------

Install Keepalived

[root@Keepalived_MASTER ~]# yum install -y openssl-devel popt-devel

[root@Keepalived_MASTER ~]# cd /usr/local/src/

[root@Keepalived_MASTER src]# wget http://www.keepalived.org/software/keepalived-1.3.5.tar.gz

[root@Keepalived_MASTER src]# tar -zvxf keepalived-1.3.5.tar.gz

[root@Keepalived_MASTER src]# cd keepalived-1.3.5

[root@Keepalived_MASTER keepalived-1.3.5]# ./configure --prefix=/usr/local/keepalived

[root@Keepalived_MASTER keepalived-1.3.5]# make && make install

[root@Keepalived_MASTER keepalived-1.3.5]# cp /usr/local/src/keepalived-1.3.5/keepalived/etc/init.d/keepalived /etc/rc.d/init.d/

[root@Keepalived_MASTER keepalived-1.3.5]# cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

[root@Keepalived_MASTER keepalived-1.3.5]# mkdir /etc/keepalived/

[root@Keepalived_MASTER keepalived-1.3.5]# cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

[root@Keepalived_MASTER keepalived-1.3.5]# cp /usr/local/keepalived/sbin/keepalived /usr/sbin/

[root@Keepalived_MASTER keepalived-1.3.5]# echo "/etc/init.d/keepalived start" >> /etc/rc.local

[root@Keepalived_MASTER keepalived-1.3.5]# chmod +x /etc/rc.d/init.d/keepalived # add execute permission

[root@Keepalived_MASTER keepalived-1.3.5]# chkconfig keepalived on # enable at boot

[root@Keepalived_MASTER keepalived-1.3.5]# service keepalived start # start

[root@Keepalived_MASTER keepalived-1.3.5]# service keepalived stop # stop

[root@Keepalived_MASTER keepalived-1.3.5]# service keepalived restart # restart

Configure Keepalived

[root@Keepalived_MASTER ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf-bak

[root@Keepalived_MASTER ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id MFS_HA_MASTER

}

vrrp_script chk_mfs {

script "/usr/local/mfs/keepalived_check_mfsmaster.sh"

interval 2

weight 2

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_mfs

}

virtual_ipaddress {

182.48.115.239

}

notify_master "/etc/keepalived/clean_arp.sh 182.48.115.239"

}

Next, write the monitoring script:

[root@Keepalived_MASTER ~]# vim /usr/local/mfs/keepalived_check_mfsmaster.sh

#!/bin/bash

A=`ps -C mfsmaster --no-header | wc -l`

if [ $A -eq 0 ];then

/etc/init.d/mfsmaster start

sleep 3

if [ `ps -C mfsmaster --no-header | wc -l ` -eq 0 ];then

/usr/bin/killall -9 mfscgiserv

/usr/bin/killall -9 keepalived

fi

fi

[root@Keepalived_MASTER ~]# chmod 755 /usr/local/mfs/keepalived_check_mfsmaster.sh

Create the script that refreshes the gateway's ARP record for the virtual server address (VIP):

[root@Keepalived_MASTER ~]# vim /etc/keepalived/clean_arp.sh

#!/bin/sh

VIP=$1

GATEWAY=182.48.115.254 # gateway address

/sbin/arping -I eth0 -c 5 -s $VIP $GATEWAY &>/dev/null

[root@Keepalived_MASTER ~]# chmod 755 /etc/keepalived/clean_arp.sh

Start keepalived (make sure both the mfs master service and the Keepalived service are running on Keepalived_MASTER):

[root@Keepalived_MASTER ~]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@Keepalived_MASTER ~]# ps -ef|grep keepalived

root 28718 1 0 13:09 ? 00:00:00 keepalived -D

root 28720 28718 0 13:09 ? 00:00:00 keepalived -D

root 28721 28718 0 13:09 ? 00:00:00 keepalived -D

root 28763 27466 0 13:09 pts/0 00:00:00 grep keepalived

Check the VIP:

[root@Keepalived_MASTER ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:09:21:60 brd ff:ff:ff:ff:ff:ff

inet 182.48.115.233/27 brd 182.48.115.255 scope global eth0

inet 182.48.115.239/32 scope global eth0

inet6 fe80::5054:ff:fe09:2160/64 scope link

valid_lft forever preferred_lft forever
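The clean_arp.sh helper called from `notify_master` takes the VIP as its only argument. A slightly more defensive sketch of the same idea follows; the argument check, the optional gateway and interface arguments, and the `DRY_RUN` switch are additions for illustration, while the `arping` flags (`-I`, `-c`, `-s`) are exactly the ones the original script uses.

```bash
#!/bin/bash
# Hypothetical hardened variant of /etc/keepalived/clean_arp.sh.
# Defaults (gateway 182.48.115.254, interface eth0) come from this article.
clean_arp() {
  local vip=$1
  local gateway=${2:-182.48.115.254}
  local dev=${3:-eth0}
  if [ -z "$vip" ]; then
    echo "usage: clean_arp VIP [GATEWAY] [DEVICE]" >&2
    return 2
  fi
  # Same invocation as the original: 5 ARP requests sent from the VIP
  # to the gateway so that it refreshes its ARP entry for the VIP.
  local cmd=(/sbin/arping -I "$dev" -c 5 -s "$vip" "$gateway")
  if [ -n "$DRY_RUN" ]; then
    # Print the command instead of running it.
    echo "${cmd[*]}"
    return 0
  fi
  "${cmd[@]}" >/dev/null 2>&1
}

# When saved as a script, forward the argument keepalived passes in
# notify_master "/etc/keepalived/clean_arp.sh 182.48.115.239".
if [ "${BASH_SOURCE[0]}" = "$0" ] && [ $# -gt 0 ]; then
  clean_arp "$@"
fi
```

`DRY_RUN=1 clean_arp 182.48.115.239` prints the command it would run, which is a quick way to check the gateway address before wiring the script into keepalived.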

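2) Operations on Keepalived_BACKUP (mfs master)

In the other article this machine was the metalogger (metadata log server); in this HA setup it becomes Keepalived_BACKUP.

That is, remove the metalogger deployment and deploy mfs master on it directly (see the other article for the procedure). The Keepalived configuration on Keepalived_BACKUP follows.

Install Keepalived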

[root@Keepalived_BACKUP ~]# yum install -y openssl-devel popt-devel

[root@Keepalived_BACKUP ~]# cd /usr/local/src/

[root@Keepalived_BACKUP src]# wget http://www.keepalived.org/software/keepalived-1.3.5.tar.gz

[root@Keepalived_BACKUP src]# tar -zvxf keepalived-1.3.5.tar.gz

[root@Keepalived_BACKUP src]# cd keepalived-1.3.5

[root@Keepalived_BACKUP keepalived-1.3.5]# ./configure --prefix=/usr/local/keepalived

[root@Keepalived_BACKUP keepalived-1.3.5]# make && make install

[root@Keepalived_BACKUP keepalived-1.3.5]# cp /usr/local/src/keepalived-1.3.5/keepalived/etc/init.d/keepalived /etc/rc.d/init.d/

[root@Keepalived_BACKUP keepalived-1.3.5]# cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

[root@Keepalived_BACKUP keepalived-1.3.5]# mkdir /etc/keepalived/

[root@Keepalived_BACKUP keepalived-1.3.5]# cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

[root@Keepalived_BACKUP keepalived-1.3.5]# cp /usr/local/keepalived/sbin/keepalived /usr/sbin/

[root@Keepalived_BACKUP keepalived-1.3.5]# echo "/etc/init.d/keepalived start" >> /etc/rc.local

[root@Keepalived_BACKUP keepalived-1.3.5]# chmod +x /etc/rc.d/init.d/keepalived # add execute permission

[root@Keepalived_BACKUP keepalived-1.3.5]# chkconfig keepalived on # enable at boot

[root@Keepalived_BACKUP keepalived-1.3.5]# service keepalived start # start

[root@Keepalived_BACKUP keepalived-1.3.5]# service keepalived stop # stop

[root@Keepalived_BACKUP keepalived-1.3.5]# service keepalived restart # restart

Configure Keepalived

[root@Keepalived_BACKUP ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf-bak

[root@Keepalived_BACKUP ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id MFS_HA_BACKUP

}

vrrp_script chk_mfs {

script "/usr/local/mfs/keepalived_check_mfsmaster.sh"

interval 2

weight 2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_mfs

}

virtual_ipaddress {

182.48.115.239

}

notify_master "/etc/keepalived/clean_arp.sh 182.48.115.239"

}

Next, write the monitoring script:

[root@Keepalived_BACKUP ~]# vim /usr/local/mfs/keepalived_check_mfsmaster.sh

#!/bin/bash

A=`ps -C mfsmaster --no-header | wc -l`

if [ $A -eq 0 ];then

/etc/init.d/mfsmaster start

sleep 3

if [ `ps -C mfsmaster --no-header | wc -l ` -eq 0 ];then

/usr/bin/killall -9 mfscgiserv

/usr/bin/killall -9 keepalived

fi

fi

[root@Keepalived_BACKUP ~]# chmod 755 /usr/local/mfs/keepalived_check_mfsmaster.sh

Create the script that refreshes the gateway's ARP record for the VIP (required on both Keepalived_MASTER and Keepalived_BACKUP):

[root@Keepalived_BACKUP ~]# vim /etc/keepalived/clean_arp.sh

#!/bin/sh

VIP=$1

GATEWAY=182.48.115.254

/sbin/arping -I eth0 -c 5 -s $VIP $GATEWAY &>/dev/null

[root@Keepalived_BACKUP ~]# chmod 755 /etc/keepalived/clean_arp.sh

Start keepalived:

[root@Keepalived_BACKUP ~]# /etc/init.d/keepalived start

Starting keepalived:

[root@Keepalived_BACKUP ~]# ps -ef|grep keepalived

root 17565 1 0 11:06 ? 00:00:00 keepalived -D

root 17567 17565 0 11:06 ? 00:00:00 keepalived -D

root 17568 17565 0 11:06 ? 00:00:00 keepalived -D

root 17778 17718 0 13:47 pts/1 00:00:00 grep keepalived

Make sure both the mfs master service and the keepalived service are running on Keepalived_BACKUP!
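Both nodes run the same keepalived_check_mfsmaster.sh logic. To reason about (or test) that logic without stopping a real mfsmaster, the decision flow can be separated from its side effects, as in this sketch. The function name, the `CHECK_WAIT` variable and passing the commands in as strings are assumptions for illustration. One deliberate difference: it returns non-zero when it triggers failover, whereas the original script normally exits 0; with `weight 2` in `chk_mfs`, a non-zero exit would also withdraw the +2 priority bonus.

```bash
#!/bin/bash
# Decision logic of keepalived_check_mfsmaster.sh with side effects injected.
# In production the commands would be (assumption):
#   count:    ps -C mfsmaster --no-header | wc -l
#   start:    /etc/init.d/mfsmaster start
#   failover: /usr/bin/killall -9 mfscgiserv; /usr/bin/killall -9 keepalived
check_mfsmaster() {
  local count_cmd=$1 start_cmd=$2 failover_cmd=$3
  # mfsmaster is running: nothing to do.
  if [ "$(eval "$count_cmd")" -ne 0 ]; then
    echo ok
    return 0
  fi
  # Not running: try to start it and give it time to come up.
  eval "$start_cmd"
  sleep "${CHECK_WAIT:-3}"
  if [ "$(eval "$count_cmd")" -ne 0 ]; then
    echo restarted
    return 0
  fi
  # Still down: kill keepalived (and mfscgiserv) so the VIP moves away.
  eval "$failover_cmd"
  echo failover
  return 1
}
```

The three outcomes (`ok`, `restarted`, `failover`) correspond to the three paths through the original script.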

3) ChunkServer configuration

Just set the MASTER_HOST parameter in mfschunkserver.cfg to 182.48.115.239, the VIP address.

No other settings need to change. Then restart the mfschunkserver service.
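The MASTER_HOST change can be scripted so that it is applied identically on every chunkserver. A minimal sketch, assuming the usual `KEY = value` format where the default MASTER_HOST line may be commented out; the function name is hypothetical, and the config path depends on the install prefix, which this article does not give.

```bash
#!/bin/bash
# Point a chunkserver at the VIP by rewriting MASTER_HOST in mfschunkserver.cfg.
set_master_host() {
  local cfg=$1 vip=$2
  if grep -Eq '^[[:space:]]*#?[[:space:]]*MASTER_HOST[[:space:]]*=' "$cfg"; then
    # Replace the active or commented-out MASTER_HOST line in place,
    # keeping a copy of the original file as "$cfg.bak".
    sed -E -i.bak "s|^[[:space:]]*#?[[:space:]]*MASTER_HOST[[:space:]]*=.*|MASTER_HOST = ${vip}|" "$cfg"
  else
    # No MASTER_HOST line at all: append one.
    echo "MASTER_HOST = ${vip}" >> "$cfg"
  fi
}
```

For example `set_master_host /usr/local/mfs/etc/mfs/mfschunkserver.cfg 182.48.115.239` (path assumed), followed by restarting mfschunkserver.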

4) Client configuration

Just change the metadata server IP in the mount command to 182.48.115.239 (the VIP)!

[root@clinet-server ~]# mkdir /mnt/mfs

[root@clinet-server ~]# mkdir /mnt/mfsmeta

[root@clinet-server ~]# /usr/local/mfs/bin/mfsmount /mnt/mfs -H 182.48.115.239

mfsmaster accepted connection with parameters: read-write,restricted_ip,admin ; root mapped to root:root

[root@clinet-server ~]# /usr/local/mfs/bin/mfsmount -m /mnt/mfsmeta -H 182.48.115.239

mfsmaster accepted connection with parameters: read-write,restricted_ip

[root@clinet-server ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root

8.3G 3.8G 4.1G 49% /

tmpfs 499M 228K 498M 1% /dev/shm

/dev/vda1 477M 35M 418M 8% /boot

/dev/sr0 3.7G 3.7G 0 100% /media/CentOS_6.8_Final

182.48.115.239:9421 107G 42G 66G 39% /mnt/mfs

[root@clinet-server ~]# mount

/dev/mapper/VolGroup-lv_root on / type ext4 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

tmpfs on /dev/shm type tmpfs (rw,rootcontext="system_u:object_r:tmpfs_t:s0")

/dev/vda1 on /boot type ext4 (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

gvfs-fuse-daemon on /root/.gvfs type fuse.gvfs-fuse-daemon (rw,nosuid,nodev)

/dev/sr0 on /media/CentOS_6.8_Final type iso9660 (ro,nosuid,nodev,uhelper=udisks,uid=0,gid=0,iocharset=utf8,mode=0400,dmode=0500)

182.48.115.239:9421 on /mnt/mfs type fuse.mfs (rw,nosuid,nodev,allow_other)

182.48.115.239:9421 on /mnt/mfsmeta type fuse.mfsmeta (rw,nosuid,nodev,allow_other)

Verify that data can be read and written normally after the client mounts the MFS file system:

[root@clinet-server ~]# cd /mnt/mfs

[root@clinet-server mfs]# echo "12312313" > test.txt

[root@clinet-server mfs]# cat test.txt

12312313

[root@clinet-server mfs]# rm -f test.txt

[root@clinet-server mfs]# cd ../mfsmeta/trash/

[root@clinet-server trash]# find . -name "*test*"

./003/00000003|test.txt

[root@clinet-server trash]# cd ./003/

[root@clinet-server 003]# ls

00000003|test.txt undel

[root@clinet-server 003]# mv 00000003\|test.txt undel/

[root@clinet-server 003]# ls /mnt/mfs

test.txt

[root@clinet-server 003]# cat /mnt/mfs/test.txt

12312313

This shows that the mounted MFS shared storage works normally.
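Besides reading `df -h` by eye, the mount check can be automated. This sketch parses `mount` output in the format shown above and succeeds only if the given mount point is a `fuse.mfs` mount served from the VIP on port 9421; the function name is hypothetical.

```bash
#!/bin/bash
# Succeed only if MOUNTPOINT is a fuse.mfs mount from VIP:9421.
# Reads mount(8) output on stdin, lines like:
#   182.48.115.239:9421 on /mnt/mfs type fuse.mfs (rw,nosuid,nodev,allow_other)
mfs_mounted_via() {
  local vip=$1 mnt=$2
  awk -v src="${vip}:9421" -v mnt="$mnt" '
    # Fields: 1=source 2="on" 3=mount point 4="type" 5=fs type
    $1 == src && $2 == "on" && $3 == mnt && $5 == "fuse.mfs" { found = 1 }
    END { exit !found }'
}
```

Usage: `mount | mfs_mounted_via 182.48.115.239 /mnt/mfs && echo "mounted via VIP"`.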

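5) iptables firewall settings on Keepalived_MASTER and Keepalived_BACKUP

The iptables firewall on Keepalived_MASTER and Keepalived_BACKUP was turned off in this experiment.

If iptables is enabled, add the following to iptables on both machines. The "ss -l" command shows the ports the machine is listening on: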

[root@Keepalived_MASTER ~]# ss -l

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 100 *:9419 *:*

LISTEN 0 100 *:9420 *:*

LISTEN 0 100 *:9421 *:*

LISTEN 0 50 *:9425 *:*

LISTEN 0 128 :::ssh :::*

LISTEN 0 128 *:ssh *:*

LISTEN 0 100 ::1:smtp :::*

LISTEN 0 100 127.0.0.1:smtp *:*

[root@Keepalived_MASTER ~]# vim /etc/sysconfig/iptables

........

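# Allow multicast traffic. Note: these two rules are what let Keepalived_MASTER take the VIP back from Keepalived_BACKUP after it recovers

-A INPUT -s 182.48.115.224/27 -d 224.0.0.18 -j ACCEPT

# Allow VRRP (Virtual Router Redundancy Protocol) traffic

-A INPUT -s 182.48.115.224/27 -p vrrp -j ACCEPT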

-A INPUT -m state --state NEW -m tcp -p tcp --dport 9419 -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 9420 -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 9421 -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 9425 -j ACCEPT

[root@Keepalived_MASTER ~]# /etc/init.d/iptables start
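Since the same rules are needed on both nodes, they can be generated rather than typed twice. This sketch prints the rule lines from this section; the function name is hypothetical, and the default source subnet 182.48.115.224/27 is derived from the `182.48.115.233/27` address in the `ip addr` output, so adjust it for your network.

```bash
#!/bin/bash
# Print the iptables rules needed by Keepalived + MooseFS on each node.
mfs_iptables_rules() {
  local subnet=${1:-182.48.115.224/27}
  local port
  # VRRP advertisements are sent to multicast 224.0.0.18 as IP protocol 112 ("vrrp").
  printf '%s\n' "-A INPUT -s ${subnet} -d 224.0.0.18 -j ACCEPT"
  printf '%s\n' "-A INPUT -s ${subnet} -p vrrp -j ACCEPT"
  # MooseFS defaults: 9419 metalogger, 9420 chunkservers, 9421 clients, 9425 mfscgiserv.
  for port in 9419 9420 9421 9425; do
    printf '%s\n' "-A INPUT -m state --state NEW -m tcp -p tcp --dport ${port} -j ACCEPT"
  done
}
```

Paste the output into /etc/sysconfig/iptables on both machines. On a stock CentOS 6 file the rules must go above the final `-A INPUT -j REJECT --reject-with icmp-host-prohibited` line, otherwise they are never reached.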

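6) Data synchronization script after failover

With the configuration above, when Keepalived_MASTER fails (its keepalived service stops), the VIP moves to Keepalived_BACKUP;

when Keepalived_MASTER recovers (its keepalived service starts again), it takes the VIP back. But this only moves the VIP; how is the data of the MFS file system synchronized?

The following data sync script is written on each of the two machines (passwordless SSH trust between Keepalived_MASTER and Keepalived_BACKUP must be set up beforehand).

On Keepalived_MASTER: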

[root@Keepalived_MASTER ~]# vim /usr/local/mfs/MFS_DATA_Sync.sh

#!/bin/bash

A=`ip addr|grep 182.48.115.239|awk -F" " '{print $2}'|cut -d"/" -f1`

if [ "$A" = "182.48.115.239" ];then

/etc/init.d/mfsmaster stop

/bin/rm -f /usr/local/mfs/var/mfs/*

/usr/bin/rsync -e "ssh -p22" -avpgolr 182.48.115.235:/usr/local/mfs/var/mfs/* /usr/local/mfs/var/mfs/

/usr/local/mfs/sbin/mfsmetarestore -m

/usr/local/mfs/sbin/mfsmaster -a

sleep 3

echo "this server has become the master of MFS"

else

echo "this server is still MFS's slave"

fi

On Keepalived_BACKUP:

[root@Keepalived_BACKUP ~]# vim /usr/local/mfs/MFS_DATA_Sync.sh

#!/bin/bash

A=`ip addr|grep 182.48.115.239|awk -F" " '{print $2}'|cut -d"/" -f1`

if [ "$A" = "182.48.115.239" ];then

/etc/init.d/mfsmaster stop

/bin/rm -f /usr/local/mfs/var/mfs/*

/usr/bin/rsync -e "ssh -p22" -avpgolr 182.48.115.233:/usr/local/mfs/var/mfs/* /usr/local/mfs/var/mfs/

/usr/local/mfs/sbin/mfsmetarestore -m

/usr/local/mfs/sbin/mfsmaster -a

sleep 3

echo "this server has become the master of MFS"

else

echo "this server is still MFS's slave"

fi

That is, when the VIP moves to this node, running this sync script pulls the data over from the other node.
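Because the sync deletes the local metadata before copying, it is worth rehearsing. This sketch reproduces the script's structure with a hypothetical `DRY_RUN` switch that prints each command instead of executing it, and matches the VIP with `grep -qwF` rather than the `awk`/`cut` pipeline. It assumes MooseFS 2.x or later, where `mfsmaster -a` replays the changelogs itself, so the separate `mfsmetarestore` step is left out; `PEER` defaults to the Keepalived_BACKUP address and should be 182.48.115.233 when run on Keepalived_BACKUP.

```bash
#!/bin/bash
# Dry-run-able sketch of MFS_DATA_Sync.sh. Pipe "ip addr" into mfs_sync.
VIP=${VIP:-182.48.115.239}
PEER=${PEER:-182.48.115.235}
DATA_DIR=${DATA_DIR:-/usr/local/mfs/var/mfs}

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "$DRY_RUN" ]; then echo "+ $*"; else "$@"; fi
}

mfs_sync() {
  # -F: match the VIP literally (dots are not wildcards);
  # -w: do not match a longer address such as 182.48.115.2391.
  if grep -qwF "$VIP"; then
    run /etc/init.d/mfsmaster stop
    run /bin/rm -f "${DATA_DIR}"/*
    # Quoted so the remote side expands the glob.
    run /usr/bin/rsync -e "ssh -p22" -avpgolr "${PEER}:${DATA_DIR}/*" "${DATA_DIR}/"
    run /usr/local/mfs/sbin/mfsmaster -a
    echo "this server has become the master of MFS"
  else
    echo "this server is still MFS's slave"
  fi
}
```

`ip addr | DRY_RUN=1 mfs_sync` shows what would happen; drop `DRY_RUN` to perform the sync.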

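7) Failover testing

1) Stop the mfsmaster service on Keepalived_MASTER

Because of the monitoring script defined in keepalived.conf, a missing mfsmaster process is started again automatically. Only if mfsmaster fails to start are the keepalived and mfscgiserv processes force-killed with killall.

[root@Keepalived_MASTER ~]# /etc/init.d/mfsmaster stop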

sending SIGTERM to lock owner (pid:29266)

waiting for termination terminated

After mfsmaster stops, it is restarted automatically:

[root@Keepalived_MASTER ~]# ps -ef|grep mfs

root 26579 1 0 16:00 ? 00:00:00 /usr/bin/python /usr/local/mfs/sbin/mfscgiserv start

root 30389 30388 0 17:18 ? 00:00:00 /bin/bash /usr/local/mfs/keepalived_check_mfsmaster.sh

mfs 30395 1 71 17:18 ? 00:00:00 /etc/init.d/mfsmaster start

By default, the VIP is on Keepalived_MASTER:

[root@Keepalived_MASTER ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:09:21:60 brd ff:ff:ff:ff:ff:ff

inet 182.48.115.233/27 brd 182.48.115.255 scope global eth0

inet 182.48.115.239/32 scope global eth0

inet6 fe80::5054:ff:fe09:2160/64 scope link

valid_lft forever preferred_lft forever

On the client (mounted via the VIP address), check the data:

[root@clinet-server ~]# cd /mnt/mfs

[root@clinet-server mfs]# ll

total 4

-rw-r--r-- 1 root root 9 May 24 17:11 grace

-rw-r--r-- 1 root root 9 May 24 17:11 grace1

-rw-r--r-- 1 root root 9 May 24 17:11 grace2

-rw-r--r-- 1 root root 9 May 24 17:11 grace3

-rw-r--r-- 1 root root 9 May 24 17:10 kevin

-rw-r--r-- 1 root root 9 May 24 17:10 kevin1

-rw-r--r-- 1 root root 9 May 24 17:10 kevin2

-rw-r--r-- 1 root root 9 May 24 17:10 kevin3

Now stop keepalived (after this, mfsmaster is no longer restarted automatically when it stops, because the monitoring script only runs while keepalived is running):

[root@Keepalived_MASTER ~]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

[root@Keepalived_MASTER ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:09:21:60 brd ff:ff:ff:ff:ff:ff

inet 182.48.115.233/27 brd 182.48.115.255 scope global eth0

inet6 fe80::5054:ff:fe09:2160/64 scope link

valid_lft forever preferred_lft forever

Once keepalived on Keepalived_MASTER is stopped, the VIP is no longer on it. The system log shows the VIP has moved:

[root@Keepalived_MASTER ~]# tail -1000 /var/log/messages

.......

May 24 17:11:19 centos6-node1 Keepalived_vrrp[29184]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 182.48.115.239

May 24 17:11:19 centos6-node1 Keepalived_vrrp[29184]: Sending gratuitous ARP on eth0 for 182.48.115.239

May 24 17:11:19 centos6-node1 Keepalived_vrrp[29184]: Sending gratuitous ARP on eth0 for 182.48.115.239

May 24 17:11:19 centos6-node1 Keepalived_vrrp[29184]: Sending gratuitous ARP on eth0 for 182.48.115.239

May 24 17:11:19 centos6-node1 Keepalived_vrrp[29184]: Sending gratuitous ARP on eth0 for 182.48.115.239

On Keepalived_BACKUP, the VIP has arrived:

[root@Keepalived_BACKUP ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:82:69:69 brd ff:ff:ff:ff:ff:ff

inet 182.48.115.235/27 brd 182.48.115.255 scope global eth0

inet 182.48.115.239/32 scope global eth0

inet6 fe80::5054:ff:fe82:6969/64 scope link

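valid_lft forever preferred_lft forever

The Keepalived_BACKUP log also records the VIP changing hands (the excerpt below, at 17:27:57, is from the later failback, when Keepalived_BACKUP receives Keepalived_MASTER's higher-priority advertisement and releases the VIP):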

[root@Keepalived_BACKUP ~]# tail -1000 /var/log/messages

.......

May 24 17:27:57 centos6-node2 Keepalived_vrrp[5254]: VRRP_Instance(VI_1) Received advert with higher priority 100, ours 99

May 24 17:27:57 centos6-node2 Keepalived_vrrp[5254]: VRRP_Instance(VI_1) Entering BACKUP STATE

May 24 17:27:57 centos6-node2 Keepalived_vrrp[5254]: VRRP_Instance(VI_1) removing protocol VIPs.

Mount on the client again and check the data:

[root@clinet-server mfs]# ll

[root@clinet-server mfs]#

There is no data, so the sync script above has to be run:

[root@Keepalived_BACKUP ~]# sh -x /usr/local/mfs/MFS_DATA_Sync.sh

Mount on the client again and check the data:

[root@clinet-server mfs]# ll

total 4

-rw-r--r--. 1 root root 9 May 24 17:11 grace

-rw-r--r--. 1 root root 9 May 24 17:11 grace1

-rw-r--r--. 1 root root 9 May 24 17:11 grace2

-rw-r--r--. 1 root root 9 May 24 17:11 grace3

-rw-r--r--. 1 root root 9 May 24 17:10 kevin

-rw-r--r--. 1 root root 9 May 24 17:10 kevin1

-rw-r--r--. 1 root root 9 May 24 17:10 kevin2

-rw-r--r--. 1 root root 9 May 24 17:10 kevin3

The data has been synchronized. Next, update the data:

[root@clinet-server mfs]# rm -f ./*

[root@clinet-server mfs]# echo "123123" > wangshibo

[root@clinet-server mfs]# echo "123123" > wangshibo1

[root@clinet-server mfs]# echo "123123" > wangshibo2

[root@clinet-server mfs]# echo "123123" > wangshibo3

[root@clinet-server mfs]# echo "123123" > wangshibo4

[root@clinet-server mfs]# ll

total 3

-rw-r--r--. 1 root root 7 May 24 17:26 wangshibo

-rw-r--r--. 1 root root 7 May 24 17:26 wangshibo1

-rw-r--r--. 1 root root 7 May 24 17:26 wangshibo2

-rw-r--r--. 1 root root 7 May 24 17:26 wangshibo3

-rw-r--r--. 1 root root 7 May 24 17:26 wangshibo4

2) Restore the keepalived process on Keepalived_MASTER

[root@Keepalived_MASTER ~]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@Keepalived_MASTER ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:09:21:60 brd ff:ff:ff:ff:ff:ff

inet 182.48.115.233/27 brd 182.48.115.255 scope global eth0

inet 182.48.115.239/32 scope global eth0

inet6 fe80::5054:ff:fe09:2160/64 scope link

valid_lft forever preferred_lft forever

Once keepalived starts on Keepalived_MASTER, it takes the VIP back, which /var/log/messages also shows. Checking Keepalived_BACKUP again, the VIP is gone:

[root@Keepalived_BACKUP ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:82:69:69 brd ff:ff:ff:ff:ff:ff

inet 182.48.115.235/27 brd 182.48.115.255 scope global eth0

inet6 fe80::5054:ff:fe82:6969/64 scope link

valid_lft forever preferred_lft forever

On the client (mounted via the VIP address), check the data:

[root@clinet-server mfs]# ll

total 4

-rw-r--r--. 1 root root 9 May 24 17:11 grace

-rw-r--r--. 1 root root 9 May 24 17:11 grace1

-rw-r--r--. 1 root root 9 May 24 17:11 grace2

-rw-r--r--. 1 root root 9 May 24 17:11 grace3

-rw-r--r--. 1 root root 9 May 24 17:10 kevin

-rw-r--r--. 1 root root 9 May 24 17:10 kevin1

-rw-r--r--. 1 root root 9 May 24 17:10 kevin2

-rw-r--r--. 1 root root 9 May 24 17:10 kevin3

The data is still the old version, so run the sync on Keepalived_MASTER:

[root@Keepalived_MASTER ~]# sh -x /usr/local/mfs/MFS_DATA_Sync.sh

Mount on the client again and check the data; it has been synchronized:

[root@xqsj_web3 mfs]# ll

total 3

-rw-r--r-- 1 root root 7 May 24 17:26 wangshibo

-rw-r--r-- 1 root root 7 May 24 17:26 wangshibo1

-rw-r--r-- 1 root root 7 May 24 17:26 wangshibo2

-rw-r--r-- 1 root root 7 May 24 17:26 wangshibo3

-rw-r--r-- 1 root root 7 May 24 17:26 wangshibo4
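To check the 2 to 5 second switchover claim during tests like these, the time until the VIP appears can be measured on the node taking over. A minimal sketch; the function name is hypothetical, and `IP_CMD` is a hook used only so the function can be tried without a real VIP (by default it runs `ip -4 addr show`).

```bash
#!/bin/bash
# Poll once per second until this node holds the VIP, then report the delay.
wait_for_vip() {
  local vip=$1 timeout=${2:-10}
  local start=$SECONDS
  while [ $((SECONDS - start)) -lt "$timeout" ]; do
    # Unquoted on purpose so "ip -4 addr show" splits into command + args.
    if ${IP_CMD:-ip -4 addr show} | grep -qwF "$vip"; then
      echo "VIP $vip present after $((SECONDS - start))s"
      return 0
    fi
    sleep 1
  done
  echo "VIP $vip not present after ${timeout}s" >&2
  return 1
}
```

Run `wait_for_vip 182.48.115.239 10` on Keepalived_BACKUP right after stopping keepalived on Keepalived_MASTER. Since it polls once per second, the reported delay has one-second resolution.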
