Deep Learning Study Notes (5): Deep Networks, Continued

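The previous post got as far as the deep networks chapter. I assumed the exercise would be simple and quick, but the training time turned out to be really long... Partway through debugging I also carelessly ran clear, so the whole thing had to run again from scratch. It did work in the end, though. Sigh~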

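The previous post covered the basics of deep networks. The exercise in the lecture notes again uses the MNIST handwritten digit database. The main steps are: train two autoencoders, train a softmax regression on top of them, and finally fine-tune the whole network once.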
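To make the data flow of these steps concrete, here is a minimal shape-only sketch of the stacked network. The layer sizes are the ones used in the exercise script; the zero-valued weights and the 5-example batch are placeholders I made up purely to show the dimensions, not trained values.

```matlab
% Shape-only sketch of the stacked network; all weights are zero placeholders.
inputSize    = 28 * 28;  % 784 pixels per MNIST image
hiddenSizeL1 = 200;      % hidden units of the first autoencoder
hiddenSizeL2 = 200;      % hidden units of the second autoencoder
numClasses   = 10;
numExamples  = 5;        % hypothetical batch size, only for illustration

sigmoid = @(x) 1 ./ (1 + exp(-x));

X  = zeros(inputSize, numExamples);      % one example per column
W1 = zeros(hiddenSizeL1, inputSize);     b1 = zeros(hiddenSizeL1, 1);
W2 = zeros(hiddenSizeL2, hiddenSizeL1);  b2 = zeros(hiddenSizeL2, 1);
softmaxTheta = zeros(numClasses, hiddenSizeL2);

features1 = sigmoid(bsxfun(@plus, W1 * X, b1));          % 200 x 5
features2 = sigmoid(bsxfun(@plus, W2 * features1, b2));  % 200 x 5
scores    = softmaxTheta * features2;                    % 10 x 5

disp(size(features1));
disp(size(features2));
disp(size(scores));
```

The first autoencoder is trained on X, the second on features1, and the softmax classifier on features2; fine-tuning then adjusts all three sets of parameters together.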

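Training the autoencoders and the softmax regression both reuse code written in earlier exercises. The fine-tuning code is essentially one backpropagation pass: a forward pass through the stacked layers, followed by a backward pass starting from the softmax layer.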
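For reference, the quantities computed in one fine-tuning pass can be written out as below. This is my reading of the code in stackedAECost.m rather than a formula copied from the lecture notes. The symbols are notation chosen here: $G$ is the one-hot label matrix (groundTruth in the code), $P$ the matrix of softmax probabilities (softmax applied column-wise), $\theta$ is softmaxTheta, $K$ the number of classes, $M$ the number of examples, and $n$ the index of the last hidden layer. Note that, as in the code, weight decay is applied only to $\theta$.

```latex
\begin{aligned}
P &= \operatorname{softmax}\!\big(\theta\, a^{(n)}\big), \\
J &= -\frac{1}{M}\sum_{i=1}^{M}\sum_{c=1}^{K} G_{ci}\,\log P_{ci}
     + \frac{\lambda}{2}\,\lVert\theta\rVert_F^{2}, \\
\nabla_{\theta} J &= -\frac{1}{M}\,(G - P)\,\big(a^{(n)}\big)^{\top} + \lambda\,\theta, \\
\delta^{(n)} &= -\big(\theta^{\top}(G - P)\big)\odot a^{(n)}\odot\big(1 - a^{(n)}\big), \\
\delta^{(l)} &= \big(W^{(l)\top}\,\delta^{(l+1)}\big)\odot a^{(l)}\odot\big(1 - a^{(l)}\big),
     \qquad l = n-1,\dots,2, \\
\nabla_{W^{(l)}} J &= \frac{1}{M}\,\delta^{(l+1)}\big(a^{(l)}\big)^{\top}, \qquad
\nabla_{b^{(l)}} J = \frac{1}{M}\sum_{i=1}^{M}\delta^{(l+1)}_{:,i},
     \qquad l = 1,\dots,n-1.
\end{aligned}
```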

Here is the code.

Main script:

stackedAEExercise.m

% For the purpose of completing the assignment, you do not need to
% change the code in this file.
%
%%======================================================================
%% STEP 0: Here we provide the relevant parameters values that will
% allow your sparse autoencoder to get good filters; you do not need to
% change the parameters below.

DISPLAY = true;
inputSize = 28 * 28;
numClasses = 10;
hiddenSizeL1 = 200;   % Layer 1 Hidden Size
hiddenSizeL2 = 200;   % Layer 2 Hidden Size
sparsityParam = 0.1;  % desired average activation of the hidden units.
                      % (This was denoted by the Greek alphabet rho, which looks like a lower-case "p",
                      % in the lecture notes).
lambda = 3e-3;        % weight decay parameter
beta = 3;             % weight of sparsity penalty term

%%======================================================================
%% STEP 1: Load data from the MNIST database
%
% This loads our training data from the MNIST database files.

% Load MNIST database files
trainData = loadMNISTImages('mnist/train-images-idx3-ubyte');
trainLabels = loadMNISTLabels('mnist/train-labels-idx1-ubyte');

trainLabels(trainLabels == 0) = 10; % Remap 0 to 10 since our labels need to start from 1

%%======================================================================

%% STEP 2: Train the first sparse autoencoder
% This trains the first sparse autoencoder on the unlabelled MNIST training
% images.
% If you've correctly implemented sparseAutoencoderCost.m, you don't need
% to change anything here.

% Randomly initialize the parameters
sae1Theta = initializeParameters(hiddenSizeL1, inputSize);

%% ---------------------- YOUR CODE HERE ---------------------------------
% Instructions: Train the first layer sparse autoencoder, this layer has
% an hidden size of "hiddenSizeL1"
% You should store the optimal parameters in sae1OptTheta
% (this script names the variable sae1optTheta)

% Use minFunc to minimize the function
addpath minFunc/
options.Method = 'lbfgs'; % Here, we use L-BFGS to optimize our cost
                          % function. Generally, for minFunc to work, you
                          % need a function pointer with two outputs: the
                          % function value and the gradient. In our problem,
                          % sparseAutoencoderCost.m satisfies this.
options.maxIter = 400;    % Maximum number of iterations of L-BFGS to run
options.display = 'on';

[sae1optTheta, cost] = minFunc( @(p) sparseAutoencoderCost(p, ...
    inputSize, hiddenSizeL1, ...
    lambda, sparsityParam, ...
    beta, trainData), ...
    sae1Theta, options);

%-------------------------------------------------------------------------
%======================================================================

%% STEP 2: Train the second sparse autoencoder
% This trains the second sparse autoencoder on the first autoencoder
% features.
% If you've correctly implemented sparseAutoencoderCost.m, you don't need
% to change anything here.

[sae1Features] = feedForwardAutoencoder(sae1optTheta, hiddenSizeL1, ...
    inputSize, trainData);

% Randomly initialize the parameters
sae2Theta = initializeParameters(hiddenSizeL2, hiddenSizeL1);

%% ---------------------- YOUR CODE HERE ---------------------------------
% Instructions: Train the second layer sparse autoencoder, this layer has
% an hidden size of "hiddenSizeL2" and an inputsize of
% "hiddenSizeL1"
%
% You should store the optimal parameters in sae2OptTheta
% (this script names the variable sae2opttheta)

[sae2opttheta, cost] = minFunc( @(p) sparseAutoencoderCost(p, ...
    hiddenSizeL1, hiddenSizeL2, ...
    lambda, sparsityParam, ...
    beta, sae1Features), ...
    sae2Theta, options);

%-------------------------------------------------------------------------
%======================================================================

%% STEP 3: Train the softmax classifier
% This trains the softmax classifier on the second autoencoder features.
% If you've correctly implemented softmaxCost.m, you don't need
% to change anything here.

[sae2Features] = feedForwardAutoencoder(sae2opttheta, hiddenSizeL2, ...
    hiddenSizeL1, sae1Features);

% Randomly initialize the parameters
saeSoftmaxTheta = 0.005 * randn(hiddenSizeL2 * numClasses, 1);

%% ---------------------- YOUR CODE HERE ---------------------------------
% Instructions: Train the softmax classifier, the classifier takes in
% input of dimension "hiddenSizeL2" corresponding to the
% hidden layer size of the 2nd layer.
%
% You should store the optimal parameters in saeSoftmaxOptTheta
%
% NOTE: If you used softmaxTrain to complete this part of the exercise,
% set saeSoftmaxOptTheta = softmaxModel.optTheta(:);

options.maxIter = 100; % note: options is reused by minFunc in STEP 5, so this cap applies to fine-tuning too
softmax_lambda = 1e-4;
numLabels = 10;
softmaxModel = softmaxTrain(hiddenSizeL2, numLabels, softmax_lambda, ...
    sae2Features, trainLabels, options);
saeSoftmaxOptTheta = softmaxModel.optTheta(:);

%-------------------------------------------------------------------------
%======================================================================

%% STEP 5: Finetune softmax model

% Implement the stackedAECost to give the combined cost of the whole model
% then run this cell.

% Initialize the stack using the parameters learned
inputSize = 28*28;
stack = cell(2,1);
stack{1}.w = reshape(sae1optTheta(1:hiddenSizeL1*inputSize), ...
    hiddenSizeL1, inputSize);
stack{1}.b = sae1optTheta(2*hiddenSizeL1*inputSize+1:2*hiddenSizeL1*inputSize+hiddenSizeL1);
stack{2}.w = reshape(sae2opttheta(1:hiddenSizeL2*hiddenSizeL1), ...
    hiddenSizeL2, hiddenSizeL1);
stack{2}.b = sae2opttheta(2*hiddenSizeL2*hiddenSizeL1+1:2*hiddenSizeL2*hiddenSizeL1+hiddenSizeL2);

% Initialize the parameters for the deep model
[stackparams, netconfig] = stack2params(stack);
stackedAETheta = [ saeSoftmaxOptTheta ; stackparams ];

%% ---------------------- YOUR CODE HERE ---------------------------------
% Instructions: Train the deep network, hidden size here refers to the
% dimension of the input to the classifier, which corresponds
% to "hiddenSizeL2".
%
%
[stackedAEOptTheta, cost] = minFunc( @(p) stackedAECost(p, inputSize, hiddenSizeL2, ...
    numClasses, netconfig, ...
    lambda, trainData, trainLabels), ...
    stackedAETheta, options);

% -------------------------------------------------------------------------
%%======================================================================

%% STEP 6: Test
% Instructions: You will need to complete the code in stackedAEPredict.m
% before running this part of the code
%

% Get labelled test images
% Note that we apply the same kind of preprocessing as the training set
testData = loadMNISTImages('mnist/t10k-images-idx3-ubyte');
testLabels = loadMNISTLabels('mnist/t10k-labels-idx1-ubyte');

testLabels(testLabels == 0) = 10; % Remap 0 to 10

[pred] = stackedAEPredict(stackedAETheta, inputSize, hiddenSizeL2, ...
    numClasses, netconfig, testData);

acc = mean(testLabels(:) == pred(:));
fprintf('Before Finetuning Test Accuracy: %0.3f%%\n', acc * 100);

[pred] = stackedAEPredict(stackedAEOptTheta, inputSize, hiddenSizeL2, ...
    numClasses, netconfig, testData);

acc = mean(testLabels(:) == pred(:));
fprintf('After Finetuning Test Accuracy: %0.3f%%\n', acc * 100);

% Accuracy is the proportion of correctly classified images
% The results for our implementation were:
%
% Before Finetuning Test Accuracy: 87.7%
% After Finetuning Test Accuracy: 97.6%
%
% If your values are too low (accuracy less than 95%), you should check
% your code for errors, and make sure you are training on the
% entire data set of 60000 28x28 training images
% (unless you modified the loading code, this should be the case)
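The index arithmetic in STEP 5 (for example 2*hiddenSizeL1*inputSize+1 as the start of the bias) only makes sense if each autoencoder's flat parameter vector is laid out as [W1(:); W2(:); b1; b2]. The small sketch below checks that slicing under exactly that assumption; the tiny sizes and the integer-valued weights are made up so the result is easy to inspect by eye.

```matlab
% Assumed layout of a sparse autoencoder parameter vector:
%   theta = [W1(:); W2(:); b1; b2]
% with W1 of size hiddenSize x visibleSize and W2 of size visibleSize x hiddenSize.
visibleSize = 4;   % hypothetical toy sizes
hiddenSize  = 3;

W1 = reshape(1:12, hiddenSize, visibleSize);    % encoder weights
W2 = -reshape(1:12, visibleSize, hiddenSize);   % decoder weights (not used by the stack)
b1 = [101; 102; 103];                           % encoder bias
b2 = [201; 202; 203; 204];                      % decoder bias (not used by the stack)

theta = [W1(:); W2(:); b1(:); b2(:)];

% Same slicing pattern as stack{1}.w and stack{1}.b in the main script.
nW    = hiddenSize * visibleSize;
W1rec = reshape(theta(1:nW), hiddenSize, visibleSize);
b1rec = theta(2*nW + 1 : 2*nW + hiddenSize);

disp(isequal(W1rec, W1));
disp(isequal(b1rec, b1));
```

Only the encoder half (W1, b1) of each autoencoder goes into the stack; the decoder half is discarded once the features have been learned.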

Cost function for the fine-tuning step:

stackedAECost.m

function [ cost, grad ] = stackedAECost(theta, inputSize, hiddenSize, ...
    numClasses, netconfig, ...
    lambda, data, labels)

% stackedAECost: Takes a trained softmaxTheta and a training data set with labels,
% and returns cost and gradient using a stacked autoencoder model. Used for
% finetuning.

% theta: trained weights from the autoencoder
% inputSize: the number of input units
% hiddenSize: the number of hidden units *at the 2nd layer*
% numClasses: the number of categories
% netconfig: the network configuration of the stack
% lambda: the weight regularization penalty
% data: Our matrix containing the training data as columns. So, data(:,i) is the i-th training example.
% labels: A vector containing labels, where labels(i) is the label for the
% i-th training example

%% Unroll softmaxTheta parameter

% We first extract the part which compute the softmax gradient
softmaxTheta = reshape(theta(1:hiddenSize*numClasses), numClasses, hiddenSize);

% Extract out the "stack"
stack = params2stack(theta(hiddenSize*numClasses+1:end), netconfig);

% You will need to compute the following gradients
softmaxThetaGrad = zeros(size(softmaxTheta));
stackgrad = cell(size(stack));
for d = 1:numel(stack)
    stackgrad{d}.w = zeros(size(stack{d}.w));
    stackgrad{d}.b = zeros(size(stack{d}.b));
end

cost = 0; % You need to compute this

% You might find these variables useful
M = size(data, 2);
groundTruth = full(sparse(labels, 1:M, 1));

%% --------------------------- YOUR CODE HERE -----------------------------

% Instructions: Compute the cost function and gradient vector for
% the stacked autoencoder.
%
% You are given a stack variable which is a cell-array of
% the weights and biases for every layer. In particular, you
% can refer to the weights of Layer d, using stack{d}.w and
% the biases using stack{d}.b . To get the total number of
% layers, you can use numel(stack).
%
% The last layer of the network is connected to the softmax
% classification layer, softmaxTheta.
%
% You should compute the gradients for the softmaxTheta,
% storing that in softmaxThetaGrad. Similarly, you should
% compute the gradients for each layer in the stack, storing
% the gradients in stackgrad{d}.w and stackgrad{d}.b
% Note that the size of the matrices in stackgrad should
% match exactly that of the size of the matrices in stack.
%
%---------- Forward pass: compute a and z ----------------
d = numel(stack);  % depth of the stack
n = d+1;           % number of network layers, counting the input layer
a = cell(n,1);
z = cell(n,1);
a{1} = data;       % a{1} is the input data
for l = 2:n        % fill in a{2..n} and z{2..n}
    z{l} = stack{l-1}.w * a{l-1} + repmat(stack{l-1}.b,[1,size(a{l-1},2)]);
    a{l} = sigmoid(z{l});
end

%------------------------------------

%------------- Softmax cost and gradient -------------
Ma = softmaxTheta * a{n};
NorM = bsxfun(@minus, Ma, max(Ma, [], 1)); % subtract each column's maximum so that exp() does not overflow
ExpM = exp(NorM);
P = bsxfun(@rdivide, ExpM, sum(ExpM, 1));  % class probabilities, one column per example
cost = -1/M*(groundTruth(:)'*log(P(:))) + lambda/2*(softmaxTheta(:)'*softmaxTheta(:)); % cost
softmaxThetaGrad = -1/M*((groundTruth-P)*a{n}') + lambda*softmaxTheta;                 % gradient
%--------------------------------------------------------

%-------------- Compute delta for each layer ---------------------
delta = cell(n,1);
delta{n} = -softmaxTheta'*(groundTruth-P).*(a{n}).*(1-a{n}); % see the backpropagation implementation in the earlier notes
for l = n-1:-1:2 % delta{1} belongs to the input layer and is never used
    delta{l} = stack{l}.w' * delta{l+1}.*(a{l}).*(1-a{l});
end
%----------------------------------------------------

%-------------- Gradients of w and b for each layer -----------------
for l = n-1:-1:1
    stackgrad{l}.w = (1/M)*delta{l+1}*a{l}';
    stackgrad{l}.b = (1/M)*sum(delta{l+1},2);
end
%----------------------------------------------------

% -------------------------------------------------------------------------

%% Roll gradient vector
grad = [softmaxThetaGrad(:) ; stack2params(stackgrad)];

end

% You might find this useful
function sigm = sigmoid(x)
    sigm = 1 ./ (1 + exp(-x));
end
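Since most of my debugging time went into this gradient, a numerical check is worth having before starting a long training run. The sketch below is not part of the exercise code: it applies a central-difference check to only the softmax part of the cost, on a tiny made-up problem, using anonymous functions so that it runs as a plain script. All names and sizes in it (K, D, M, costFn, gradFn, colNormalize and so on) are hypothetical.

```matlab
% Toy softmax regression problem: K classes, D features, M examples.
K = 3; D = 4; M = 5;
X = reshape(sin(1:D*M), D, M);       % deterministic fake features, one example per column
labels = [1 2 3 1 2];
G = full(sparse(labels, 1:M, 1));    % one-hot labels, K x M
lambda = 1e-2;
t0 = 0.1 * cos(1:K*D)';              % flat parameter vector to check at

% Numerically stable column-wise softmax, built from small helpers.
shift        = @(Z) bsxfun(@minus, Z, max(Z, [], 1));
colNormalize = @(E) bsxfun(@rdivide, E, sum(E, 1));
probs        = @(t) colNormalize(exp(shift(reshape(t, K, D) * X)));

% Same cost and gradient formulas as the softmax part of stackedAECost.
costFn = @(t) -1/M * sum(sum(G .* log(probs(t)))) + lambda/2 * (t' * t);
gradFn = @(t) reshape(-1/M * (G - probs(t)) * X' + lambda * reshape(t, K, D), [], 1);

% Central-difference approximation of the gradient, one coordinate at a time.
epsilon = 1e-4;
numGrad = zeros(size(t0));
for i = 1:numel(t0)
    e = zeros(size(t0));
    e(i) = epsilon;
    numGrad(i) = (costFn(t0 + e) - costFn(t0 - e)) / (2 * epsilon);
end

analyticGrad = gradFn(t0);
relErr = norm(numGrad - analyticGrad) / norm(numGrad + analyticGrad);
fprintf('relative error: %g\n', relErr);
```

A very small relative error suggests the analytic gradient matches the cost. The same loop can be pointed at stackedAECost itself with a small network and a handful of examples, which is much cheaper than discovering a bug after a full training run.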

Prediction function:

stackedAEPredict.m

function [pred] = stackedAEPredict(theta, inputSize, hiddenSize, numClasses, netconfig, data)

% stackedAEPredict: Takes a trained theta and a test data set,
% and returns the predicted labels for each example.

% theta: trained weights from the autoencoder
% inputSize: the number of input units
% hiddenSize: the number of hidden units *at the 2nd layer*
% numClasses: the number of categories
% data: Our matrix containing the training data as columns. So, data(:,i) is the i-th training example.

% Your code should produce the prediction matrix
% pred, where pred(i) is argmax_c P(y(c) | x(i)).

%% Unroll theta parameter

% We first extract the part which compute the softmax gradient
softmaxTheta = reshape(theta(1:hiddenSize*numClasses), numClasses, hiddenSize);

% Extract out the "stack"
stack = params2stack(theta(hiddenSize*numClasses+1:end), netconfig);

%% ---------- YOUR CODE HERE --------------------------------------
% Instructions: Compute pred using theta assuming that the labels start
% from 1.
%
%---------- Forward pass: compute a and z ----------------
d = numel(stack);  % depth of the stack
n = d+1;           % number of network layers, counting the input layer
a = cell(n,1);
z = cell(n,1);
a{1} = data;       % a{1} is the input data
for l = 2:n        % fill in a{2..n} and z{2..n}
    z{l} = stack{l-1}.w * a{l-1} + repmat(stack{l-1}.b,[1,size(a{l-1},2)]);
    a{l} = sigmoid(z{l});
end
%-------------------------------------
M = softmaxTheta * a{n};   % unnormalized class scores, one column per example
[Y,pred] = max(M,[],1);    % the row index of the largest score is the predicted label

% -----------------------------------------------------------

end

% You might find this useful
function sigm = sigmoid(x)
    sigm = 1 ./ (1 + exp(-x));
end
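The prediction code takes the maximum over the raw scores softmaxTheta * a{n} without ever computing probabilities. That works because softmax is monotonic within each column, so the largest score is also the largest probability. A minimal sketch with made-up scores (3 classes, 3 examples) to illustrate:

```matlab
% Hypothetical raw scores: rows are classes, columns are examples.
scores = [ 2.0  -1.0   0.5;
           0.1   3.0   0.4;
          -0.5   0.2   0.6];

% Column-wise softmax with the usual max-subtraction for numerical stability.
expScores = exp(bsxfun(@minus, scores, max(scores, [], 1)));
P = bsxfun(@rdivide, expScores, sum(expScores, 1));

[~, predFromScores] = max(scores, [], 1);  % argmax over raw scores
[~, predFromProbs]  = max(P, [], 1);       % argmax over probabilities

disp(predFromScores);
disp(predFromProbs);
```

Both calls pick class 1 for the first column, class 2 for the second, and class 3 for the third, so skipping the normalization at prediction time changes nothing except the running time.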

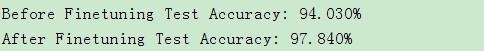

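Final results:

These differ a little from the lecture notes and from the comments in the script, especially the result without fine-tuning: the notes report just under 90%, whereas my run came out at about 94%.

After fine-tuning, though, the result is essentially the same as theirs.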

PS: the lecture notes for this exercise are at http://deeplearning.stanford.edu/wiki/index.php/Exercise:_Implement_deep_networks_for_digit_classification
