android NDK 神经网络API——是给tensorflow lite调用的底层API,应用开发者使用tensorflow lite即可

eural Networks API

In this document show more

- Understanding the Neural Networks API Runtime

- Neural Networks API Programming Model

- More About Operands

Related API reference

Related sample

Note: The Neural Networks API is available in Android 8.1 and higher system images. The header file is available in the latest version of the NDK. We encourage you to send us your feedback via the Android 8.1 Preview issue tracker.

The Android Neural Networks API (NNAPI) is an Android C API designed for running computationally intensive operations for machine learning on mobile devices. NNAPI is designed to provide a base layer of functionality for higher-level machine learning frameworks (such as TensorFlow Lite, Caffe2, or others) that build and train neural networks. The API is available on all devices running Android 8.1 (API level 27) or higher.

NNAPI supports inferencing by applying data from Android devices to previously trained, developer-defined models. Examples of inferencing include classifying images, predicting user behavior, and selecting appropriate responses to a search query.

On-device inferencing has many benefits:

- Latency: You don’t need to send a request over a network connection and wait for a response. This can be critical for video applications that process successive frames coming from a camera.

- Availability: The application runs even when outside of network coverage.

- Speed: New hardware specific to neural networks processing provide significantly faster computation than with general-use CPU alone.

- Privacy: The data does not leave the device.

- Cost: No server farm is needed when all the computations are performed on the device.

There are also trade-offs that a developer should keep in mind:

- System utilization: Evaluating neural networks involve a lot of computation, which could increase battery power usage. You should consider monitoring the battery health if this is a concern for your app, especially for long-running computations.

- Application size: Pay attention to the size of your models. Models may take up multiple megabytes of space. If bundling large models in your APK would unduly impact your users, you may want to consider downloading the models after app installation, using smaller models, or running your computations in the cloud. NNAPI does not provide functionality for running models in the cloud.

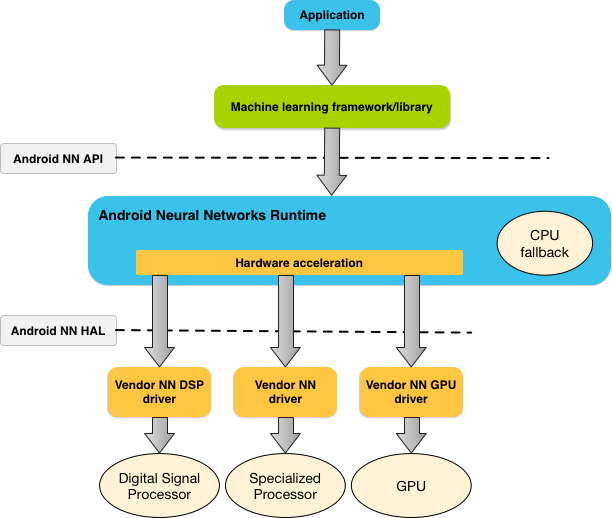

Understanding the Neural Networks API runtime

NNAPI is meant to be called by machine learning libraries, frameworks, and tools that let developers train their models off-device and deploy them on Android devices. Apps typically would not use NNAPI directly, but would instead directly use higher-level machine learning frameworks. These frameworks in turn could use NNAPI to perform hardware-accelerated inference operations on supported devices.

Based on the app’s requirements and the hardware capabilities on a device, Android’s neural networks runtime can efficiently distribute the computation workload across available on-device processors, including dedicated neural network hardware, graphics processing units (GPUs), and digital signal processors (DSPs).

For devices that lack a specialized vendor driver, the NNAPI runtime relies on optimized code to execute requests on the CPU.

The diagram below shows a high-level system architecture for NNAPI.

Figure 1. System architecture for Android Neural Networks API

Figure 1. System architecture for Android Neural Networks API

eural Networks API

In this document show more

- Understanding the Neural Networks API Runtime

- Neural Networks API Programming Model

- More About Operands

Related API reference

Related sample

Note: The Neural Networks API is available in Android 8.1 and higher system images. The header file is available in the latest version of the NDK. We encourage you to send us your feedback via the Android 8.1 Preview issue tracker.

The Android Neural Networks API (NNAPI) is an Android C API designed for running computationally intensive operations for machine learning on mobile devices. NNAPI is designed to provide a base layer of functionality for higher-level machine learning frameworks (such as TensorFlow Lite, Caffe2, or others) that build and train neural networks. The API is available on all devices running Android 8.1 (API level 27) or higher.

NNAPI supports inferencing by applying data from Android devices to previously trained, developer-defined models. Examples of inferencing include classifying images, predicting user behavior, and selecting appropriate responses to a search query.

On-device inferencing has many benefits:

- Latency: You don’t need to send a request over a network connection and wait for a response. This can be critical for video applications that process successive frames coming from a camera.

- Availability: The application runs even when outside of network coverage.

- Speed: New hardware specific to neural networks processing provide significantly faster computation than with general-use CPU alone.

- Privacy: The data does not leave the device.

- Cost: No server farm is needed when all the computations are performed on the device.

There are also trade-offs that a developer should keep in mind:

- System utilization: Evaluating neural networks involve a lot of computation, which could increase battery power usage. You should consider monitoring the battery health if this is a concern for your app, especially for long-running computations.

- Application size: Pay attention to the size of your models. Models may take up multiple megabytes of space. If bundling large models in your APK would unduly impact your users, you may want to consider downloading the models after app installation, using smaller models, or running your computations in the cloud. NNAPI does not provide functionality for running models in the cloud.

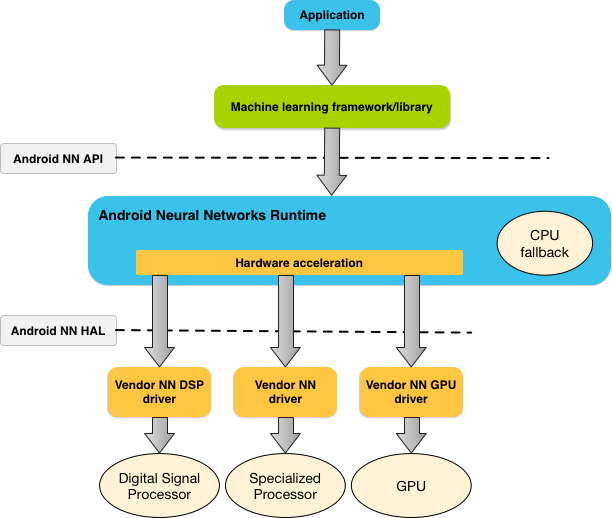

Understanding the Neural Networks API runtime

NNAPI is meant to be called by machine learning libraries, frameworks, and tools that let developers train their models off-device and deploy them on Android devices. Apps typically would not use NNAPI directly, but would instead directly use higher-level machine learning frameworks. These frameworks in turn could use NNAPI to perform hardware-accelerated inference operations on supported devices.

Based on the app’s requirements and the hardware capabilities on a device, Android’s neural networks runtime can efficiently distribute the computation workload across available on-device processors, including dedicated neural network hardware, graphics processing units (GPUs), and digital signal processors (DSPs).

For devices that lack a specialized vendor driver, the NNAPI runtime relies on optimized code to execute requests on the CPU.

The diagram below shows a high-level system architecture for NNAPI.

Figure 1. System architecture for Android Neural Networks API

Figure 1. System architecture for Android Neural Networks API

参考:https://developer.android.com/ndk/guides/neuralnetworks/index.html

android NDK 神经网络API——是给tensorflow lite调用的底层API,应用开发者使用tensorflow lite即可的更多相关文章

- Android NDK 初探,生成so文件以及调用so文件方法

因为最近业务上涉及安全的问题 然后有一些加密解密的方法和key的存储问题 本来想存储到手机里面,但是网上说一般敏感信息不要存储到手机Sdcard上 而且我这个如果从网络建立通信获取的话,又太耗时,所以 ...

- Android NDK学习(五):Java调用Native代码流程总结

编写一个Java类,并且在某个方法签名的修饰符中加上native修饰符. 使用Javac命令编译第一步中的Java类,使之成为一个class文件. 使用Javah -jni 包名.类名 生成Jni接口 ...

- TensorFlow Lite demo——就是为嵌入式设备而存在的,底层调用NDK神经网络API,注意其使用的tf model需要转换下,同时提供java和C++ API,无法使用tflite的见后

Introduction to TensorFlow Lite TensorFlow Lite is TensorFlow’s lightweight solution for mobile and ...

- 【译】Android NDK API 规范

[译]Android NDK API 规范 译者按: 修改R代码遇到Lint tool的报错,搜到了这篇文档,aosp仓库地址:Android NDK API Guidelines. 975a589 ...

- Android NDK开发初识

神秘的Android NDK开发往往众多程序员感到兴奋,但又不知它为何物,由于近期开发应用时,为了是开发的.apk文件不被他人解读(反编译),查阅了很多资料,其中有提到使用NDK开发,怀着好奇的心理, ...

- 初识Android NDK

本文介绍Windows环境下搭建Android NDK开发环境,并创建一个简单的使用Native代码的Android Application. 一.环境搭建 二.JNI函数绑定 三.例子 一.环境搭建 ...

- Android NDK开发入门实例

AndroidNDK是能使Android应用开发者把从c/c++编译而来的本地代码嵌入到应用包中的一系列工具的组合. 注意: AndroidNDK只能用于Android1.5及以上版本中. I. An ...

- [原]如何用Android NDK编译FFmpeg

我们知道在Ubuntu下直接编译FFmpeg是很简单的,主要是先执行./configure,接着执行make命令来编译,完了紧接着执行make install执行安装.那么如何使用Android的ND ...

- android ndk环境配置(转)

转载自:http://jingyan.baidu.com/article/3ea51489e7a9bd52e61bbac7.html android sdk 更新到 r23 时,eclipse 自带 ...

随机推荐

- 图解TCP/IP笔记(1)——TCP/IP协议群

转载请注明:https://www.cnblogs.com/igoslly/p/9167916.html TCP/IP制定 制定:IETF 记录:RFC - Request for comment ...

- html5——多媒体(一)

<audio> 1.autoplay 自动播放 2.controls 是否显不默认播放控件 3.loop 循环播放 4.preload 预加载 同时设置autoplay时些属性失效 5.由 ...

- MySQL主从备份配置

MySQL主从热备配置 两台服务器的MySQL版本都是5.5.41master:192.168.3.119slave:192.168.3.120 MySQL主服务器配置:1.创建用于备份的用户 gra ...

- PowerDesigner16逆向工程生成PDM列注释(My Sql5.0模版)

一.编辑当前DataBase 选择DataBase——>edit Current DBMS...弹出如下对话框: 如上图,先解释一下: 根据红颜色框从上往下解释一下. 第一个红框是对应的修改的 ...

- centOS安装python3 以及解决 导入ssl包出错的问题

参考: https://www.cnblogs.com/mqxs/p/9103031.html https://www.cnblogs.com/cerutodog/p/9908574.html 确认环 ...

- vino-server服务是啥服务

近期接手一个项目,开始梳理服务器,突然发现有个进程是开启5900远程桌面端口的, 在不知情的情况下怕被人给利用了,啥也不说,先干掉再说. server端开启vino-server,允许别人查看自己的桌 ...

- 创建pod索引库(Specs)

专门用来存放xxx.podspec 的索引文件的库就叫做索引库.我们需要将这些索引文件上传到远程索引库才能保证其他的人能够拿来用. 创建一个远程索引库和本地索引库对应起来,步骤如下: 1.登录开源中国 ...

- form:input 标签使用

<form:input path="suplier" htmlEscape="false" maxlength="50" id=&qu ...

- homework week 1

第一周的作业 首先来完成第二个作业, 编写登录接口, 因为视频上并没有相关的教程, 就在网上搜了一下读写文件的语句, 粗略了解. f1 = open("data.txt",&quo ...

- JavaSE 学习笔记之Object对象(八)

Object:所有类的直接或者间接父类,Java认为所有的对象都具备一些基本的共性内容,这些内容可以不断的向上抽取,最终就抽取到了一个最顶层的类中的,该类中定义的就是所有对象都具备的功能. 具体方法: ...