Deploying Multi-Node OpenStack Pike with kolla-ansible

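1. Preparation:

OS: CentOS 7.5 on all nodes.

The lab environment is a single laptop running VMware Workstation.

Store each VM's virtual disk as a single file. With a split disk, uploading and using large Windows images tends to make the VM hang and the operation fail.

Architecture:

3 control nodes, 2 network nodes, 2 compute nodes, 1 storage node, 1 monitoring node and 1 deploy node. Each node has 2 CPU cores, 4 GB of RAM and one 100 GB disk; the storage node has an additional 600 GB disk.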

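Network:

Each machine has 3 NICs:

First NIC (ens33): NAT mode, used for downloading packages. Configure an IP address so that the node can reach the Internet.

Second NIC (ens37): host-only mode, used for the API network and the VM (tenant) network. VMnet1 (host-only) is chosen so that the laptop can reach the Horizon UI directly. An IP address must be configured.

Third NIC (ens38): NAT mode, used as the External network through which VMs reach outside networks. No IP address is configured.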

| Node | ens33 (NAT, gateway 192.168.163.2) | ens37 (host-only) | ens38 (external) |
|---|---|---|---|
| control01 | 192.168.163.21/24 | 192.168.41.21/24 | no IP |
| control02 | 192.168.163.22/24 | 192.168.41.22/24 | no IP |
| control03 | 192.168.163.30/24 | 192.168.41.30/24 | no IP |
| network01 | 192.168.163.23/24 | 192.168.41.23/24 | no IP |
| network02 | 192.168.163.27/24 | 192.168.41.27/24 | no IP |
| compute01 | 192.168.163.24/24 | 192.168.41.24/24 | no IP |
| compute02 | 192.168.163.28/24 | 192.168.41.28/24 | no IP |
| monitoring01 | 192.168.163.26/24 | 192.168.41.26/24 | no IP |
| storage01 | 192.168.163.25/24 | 192.168.41.25/24 | no IP |
| deploy | 192.168.163.29/24 | 192.168.41.29/24 | no IP |
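The per-node plan maps onto CentOS 7 `network-scripts` files. The bash helper below is a minimal sketch under that assumption: `gen_ifcfg` is a hypothetical name, and it prints only the common keys (`BOOTPROTO`, `IPADDR`, `PREFIX`, `GATEWAY`, `ONBOOT`), so review its output before writing it to `/etc/sysconfig/network-scripts/`.

```shell
# gen_ifcfg DEVICE [ADDRESS/PREFIX] [GATEWAY]   (hypothetical helper)
# Prints a CentOS 7 ifcfg file to stdout. Without an address it only brings
# the link up, which is what ens38 (the external NIC without an IP) needs.
gen_ifcfg() {
    local dev="$1" cidr="${2:-}" gw="${3:-}"
    printf 'TYPE=Ethernet\nNAME=%s\nDEVICE=%s\nONBOOT=yes\n' "$dev" "$dev"
    if [ -z "$cidr" ]; then
        printf 'BOOTPROTO=none\n'
        return 0
    fi
    # Split "192.168.41.21/24" into the address and the prefix length.
    printf 'BOOTPROTO=static\nIPADDR=%s\nPREFIX=%s\n' "${cidr%/*}" "${cidr#*/}"
    if [ -n "$gw" ]; then
        printf 'GATEWAY=%s\n' "$gw"
    fi
}
```

For example, on control01: `gen_ifcfg ens37 192.168.41.21/24 > /etc/sysconfig/network-scripts/ifcfg-ens37` and `gen_ifcfg ens38 > /etc/sysconfig/network-scripts/ifcfg-ens38`, followed by `systemctl restart network`.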

Add the following host entries to /etc/hosts on every machine:

```
192.168.41.21 control01
192.168.41.22 control02
192.168.41.30 control03
192.168.41.23 network01
192.168.41.27 network02
192.168.41.24 compute01
192.168.41.28 compute02
192.168.41.26 monitoring01
192.168.41.25 storage01
192.168.41.29 deploy
```
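To apply the host list on every node without creating duplicate lines when the step is repeated, a small idempotent helper can append only the names that are missing. This is a bash sketch: `add_cluster_hosts` is a hypothetical function, and its addresses follow the per-node IP plan (where monitoring01 is .26 and storage01 is .25).

```shell
# add_cluster_hosts [HOSTS_FILE]   (hypothetical helper, default /etc/hosts)
# Appends each cluster entry unless its hostname already appears on a
# non-comment line, so running it twice changes nothing.
add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}" ip name
    while read -r ip name; do
        [ -z "$ip" ] && continue
        if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done <<'EOF'
192.168.41.21 control01
192.168.41.22 control02
192.168.41.30 control03
192.168.41.23 network01
192.168.41.27 network02
192.168.41.24 compute01
192.168.41.28 compute02
192.168.41.26 monitoring01
192.168.41.25 storage01
192.168.41.29 deploy
EOF
}
```

Run `add_cluster_hosts` on each node (it defaults to `/etc/hosts`), or build the file once and copy it out with `scp`.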

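Storage node:

To run the Cinder storage service, first add a new disk, then create a physical volume (PV) and a volume group (VG) on it:

```shell
pvcreate /dev/sdb
vgcreate cinder-volumes /dev/sdb   # name the VG cinder-volumes so that it matches the VG name in the kolla configuration
```

Only VM instances should access the block storage volumes. Because the host's LVM would otherwise scan (and may misbehave on) LVM volumes created inside those instances, restrict which devices LVM scans:

```shell
vi /etc/lvm/lvm.conf
```

In the `devices` section, add one accept entry (`a|...|`) per disk that LVM should use. Include `a|sda|` only if the operating-system disk itself uses LVM (the default CentOS layout does); otherwise leave it out:

```
devices {
...
filter = [ "a|sda|", "a|sdb|", "r|.*|" ]
}
```

Restart the LVM service:

```shell
systemctl restart lvm2-lvmetad.service
```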
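The `filter` line follows a fixed pattern: one accept rule per disk, then a final reject-all rule. A minimal bash sketch that builds it from a list of disk names; `make_lvm_filter` is hypothetical, and its output still has to be pasted into the `devices { }` section by hand.

```shell
# make_lvm_filter DISK...   (hypothetical helper)
# Prints an lvm.conf filter that accepts the given disks and rejects the rest.
make_lvm_filter() {
    local out="filter = [" disk
    for disk in "$@"; do
        out+=" \"a|${disk}|\","
    done
    out+=' "r|.*|" ]'
    printf '%s\n' "$out"
}
```

`make_lvm_filter sda sdb` prints the same line as the example above; after editing the file, `vgs cinder-volumes` should still list the volume group.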

All nodes:

Configure a PyPI mirror in China:

```shell
mkdir -p ~/.pip
cat << EOF > ~/.pip/pip.conf
[global]
index-url = https://pypi.tuna.tsinghua.edu.cn/simple/
[install]
trusted-host=pypi.tuna.tsinghua.edu.cn
EOF
```

Install pip:

```shell
yum -y install epel-release
yum -y install python-pip
```

If `pip install` runs into problems, try:

```shell
pip install setuptools==33.1.1
```

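2. Install Docker on all nodes

Make sure the EPEL repository is enabled first, then install the dependencies:

```shell
yum -y install python-devel libffi-devel gcc openssl-devel git python-pip qemu-kvm qemu-img
```

Install Docker:

1) Download the RPM packages.

2) Install Docker 1.12.6 (recommended because 1.12.6 is relatively stable):

```shell
yum localinstall -y docker-engine-1.12.6-1.el7.centos.x86_64.rpm docker-engine-selinux-1.12.6-1.el7.centos.noarch.rpm
```

Alternatively, install it following the official documentation:

https://docs.docker.com/engine/installation/linux/centos/

```shell
yum install -y yum-utils \
  device-mapper-persistent-data \
  lvm2
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum install -y docker-ce
```

The docker-ce repository starts at version 17.03, so it does not provide 1.12.6; use the docker-engine RPMs above if you need that exact version.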

Configure Docker shared mounts:

```shell
mkdir -p /etc/systemd/system/docker.service.d
tee /etc/systemd/system/docker.service.d/kolla.conf << 'EOF'
[Service]
MountFlags=shared
EOF
```

Allow access to the private Docker registry.

The public registry is https://hub.docker.com/u/kolla/, but downloading from it is slow.

Edit /usr/lib/systemd/system/docker.service (for example with vim) and set:

```
ExecStart=/usr/bin/dockerd --insecure-registry 192.168.41.29:4000
```

Restart the service:

```shell
systemctl daemon-reload && systemctl enable docker && systemctl restart docker
```
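Editing `/usr/lib/systemd/system/docker.service` directly works, but a package upgrade can overwrite that file. As an alternative sketch (not the method used in the steps above), both settings can live in the single drop-in file `kolla.conf`; in systemd an empty `ExecStart=` line clears the inherited command before the new one is set. `write_docker_dropin` is a hypothetical bash helper, and if the packaged unit passes extra options to `dockerd`, they would need to be copied into the new `ExecStart` line.

```shell
# write_docker_dropin [DROPIN_DIR] [REGISTRY]   (hypothetical helper)
# Writes MountFlags=shared plus an ExecStart override that trusts the
# private registry, instead of editing the packaged unit file.
write_docker_dropin() {
    local dir="${1:-/etc/systemd/system/docker.service.d}"
    local registry="${2:-192.168.41.29:4000}"
    mkdir -p "$dir"
    cat > "$dir/kolla.conf" <<EOF
[Service]
MountFlags=shared
# An empty ExecStart= clears the command inherited from the packaged unit.
ExecStart=
ExecStart=/usr/bin/dockerd --insecure-registry ${registry}
EOF
}
```

Usage: `write_docker_dropin && systemctl daemon-reload && systemctl restart docker`; `systemctl cat docker` then shows the merged unit.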

3. On the deploy node: install the private image registry, kolla and OpenStack

Download the images provided by kolla.

The original official download URL (fetched with wget), http://tarballs.openstack.org/kolla/images/centos-source-registry-pike.tar.gz, has moved to https://hub.docker.com/u/kolla/. The tarball is about 4 GB.

Baidu Netdisk mirror: https://pan.baidu.com/s/4oAdAJjk

```shell
mkdir -p /data/registry
tar -zxvf centos-source-registry-pike-5.0.1.tar.gz -C /data/registry
```

This places the kolla Docker image files on the registry server.

Registry server

Docker's registry listens on port 5000 by default, which conflicts with OpenStack (Keystone also uses port 5000), so the registry is published on port 4000 instead:

```shell
docker run -d -v /data/registry:/var/lib/registry -p 4000:5000 --restart=always --name registry registry
```

Test whether it works:

```shell
curl -k localhost:4000/v2/_catalog
curl -k localhost:4000/v2/lokolla/centos-source-fluentd/tags/list
{"name":"lokolla/centos-source-fluentd","tags":["5.0.1"]}
```
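The two `curl` checks can be extended to every image in the registry: read the repository names from `/v2/_catalog` and query each `tags/list` endpoint. A minimal bash sketch, assuming the catalog comes back as one line of JSON and fits in a single response (a registry may paginate large catalogs); `catalog_tag_urls` is a hypothetical helper that avoids needing `jq`.

```shell
# catalog_tag_urls [REGISTRY]   (hypothetical helper)
# Reads /v2/_catalog JSON on stdin and prints one tags/list URL per repository.
catalog_tag_urls() {
    local registry="${1:-localhost:4000}" repo
    # Keep only the text inside [...], split on commas, drop quotes and spaces.
    sed -e 's/.*\[//' -e 's/].*//' | tr ',' '\n' | tr -d '" ' |
    while read -r repo; do
        if [ -n "$repo" ]; then
            printf 'http://%s/v2/%s/tags/list\n' "$registry" "$repo"
        fi
    done
}
```

For example, `curl -s localhost:4000/v2/_catalog | catalog_tag_urls | xargs -n1 curl -s` prints the tags of each image; with the Pike tarball each image is expected to report `5.0.1`.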

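Ansible

The Mitaka release of Kolla requires Ansible older than 2.0; from Newton onwards, only Ansible 2.x and later are supported.

```shell
yum install -y ansible
```

Set up passwordless SSH login:

```shell
ssh-keygen
ssh-copy-id control01
ssh-copy-id control02
ssh-copy-id control03
ssh-copy-id network01
...
```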
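With nine target nodes, the `ssh-copy-id` calls are easier to run in a loop that reports which hosts failed. `copy_keys_to_nodes` is a hypothetical bash helper; setting `SSH_COPY_ID=echo` turns it into a dry run.

```shell
# copy_keys_to_nodes HOST...   (hypothetical helper)
# Runs ssh-copy-id for every host and returns non-zero if any of them failed.
copy_keys_to_nodes() {
    local cmd="${SSH_COPY_ID:-ssh-copy-id}" host failed=0
    for host in "$@"; do
        if ! "$cmd" "$host"; then
            echo "failed: ${host}" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

Usage: `copy_keys_to_nodes control01 control02 control03 network01 network02 compute01 compute02 storage01 monitoring01`.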

Install kolla

Upgrade pip:

```shell
pip install -U pip -i https://pypi.tuna.tsinghua.edu.cn/simple
```

Install the docker Python module:

```shell
pip install docker
```

Install kolla-ansible:

```shell
cd /home
git clone -b stable/pike https://github.com/openstack/kolla-ansible.git
cd kolla-ansible
pip install . -i https://pypi.tuna.tsinghua.edu.cn/simple
```

Copy the related files:

```shell
cp -r /usr/share/kolla-ansible/etc_examples/kolla /etc/kolla/
cp /usr/share/kolla-ansible/ansible/inventory/* /home/
```

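If the OpenStack instances will themselves run inside a virtual machine (nested virtualization), set virt_type=qemu; the default is kvm:

```shell
mkdir -p /etc/kolla/config/nova
cat << EOF > /etc/kolla/config/nova/nova-compute.conf
[libvirt]
virt_type=qemu
cpu_mode = none
EOF
```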
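Whether `qemu` is needed can be checked on each compute node: KVM requires the CPU flag `vmx` (Intel VT-x) or `svm` (AMD-V) to be visible inside the VM. A minimal bash sketch; `pick_virt_type` is a hypothetical helper.

```shell
# pick_virt_type [CPUINFO]   (hypothetical helper, default /proc/cpuinfo)
# Prints "kvm" when hardware virtualization flags are present, else "qemu".
pick_virt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    if grep -Ewq '(vmx|svm)' "$cpuinfo"; then
        echo kvm
    else
        echo qemu
    fi
}
```

If `pick_virt_type` prints `kvm` on every compute node, the override above can likely be dropped. In VMware Workstation the flags usually appear in the guest only when the VM's "Virtualize Intel VT-x/EPT or AMD-V/RVI" option is enabled.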

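Generate the password file:

```shell
kolla-genpwd
```

Edit /etc/kolla/passwords.yml (for example with vim):

```
keystone_admin_password: admin123
```

This is the password the admin user uses to log in to the Dashboard; change it as needed.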
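Instead of editing by hand, a single `key: value` entry can be changed with `sed`. A minimal bash sketch: `set_kolla_value` is a hypothetical helper that also uncomments a `#key:` line, keeps a `.bak` copy, and assumes the value contains no `|`, `&` or `\` characters (they are special in the `sed` expression).

```shell
# set_kolla_value FILE KEY VALUE   (hypothetical helper)
set_kolla_value() {
    local file="$1" key="$2" value="$3"
    # The key must already exist, either active or commented out as "#key:".
    if ! grep -Eq "^#?${key}:" "$file"; then
        echo "key not found: ${key}" >&2
        return 1
    fi
    # Replace the whole line; -i.bak keeps the original as FILE.bak (GNU sed).
    sed -E -i.bak "s|^#?${key}:.*|${key}: ${value}|" "$file"
}
```

Usage: `set_kolla_value /etc/kolla/passwords.yml keystone_admin_password admin123`. For globals.yml, pass the quotes as part of the value, e.g. `set_kolla_value /etc/kolla/globals.yml network_interface '"ens37"'`.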

Edit the /etc/kolla/globals.yml file. The settings in effect (with blank lines and comments filtered out) are:

```shell
grep -Ev "^$|^[#;]" /etc/kolla/globals.yml
```

```yaml
---
kolla_install_type: "source"
openstack_release: "5.0.1"
kolla_internal_vip_address: "192.168.41.20"
docker_registry: "192.168.41.29:4000"
docker_namespace: "lokolla"
network_interface: "ens37"
api_interface: "{{ network_interface }}"
neutron_external_interface: "ens38"
enable_cinder: "yes"
enable_cinder_backend_iscsi: "yes"
enable_cinder_backend_lvm: "yes"
tempest_image_id:
tempest_flavor_ref_id:
tempest_public_network_id:
tempest_floating_network_name:
```

Because there are multiple control nodes, HAProxy must be enabled; enable_haproxy: "yes" and enable_heat: "yes" are already the defaults.
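Before running the prechecks, it can help to confirm that the settings listed above are really active and not still commented out with `#`. A minimal bash sketch; `check_globals` is a hypothetical helper, and its key list is simply the settings used in this deployment.

```shell
# check_globals [FILE]   (hypothetical helper, default /etc/kolla/globals.yml)
# Prints "missing: KEY" for each required key without an active, non-empty
# value and returns non-zero if any are missing.
check_globals() {
    local file="${1:-/etc/kolla/globals.yml}" key missing=0
    for key in kolla_install_type openstack_release kolla_internal_vip_address \
               docker_registry docker_namespace network_interface \
               neutron_external_interface enable_cinder enable_cinder_backend_lvm; do
        if ! grep -Eq "^${key}:[[:space:]]*[^[:space:]]" "$file"; then
            echo "missing: ${key}"
            missing=1
        fi
    done
    return "$missing"
}
```

`check_globals` prints nothing and returns 0 when every listed key has a value.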

Edit the /home/multinode file:

```ini
[control]
# These hostname must be resolvable from your deployment host
control01
control02
control03

# The above can also be specified as follows:
#control[01:03] ansible_user=kolla

# The network nodes are where your l3-agent and loadbalancers will run
# This can be the same as a host in the control group
[network]
network01
network02

[compute]
compute01
compute02

[monitoring]
monitoring01

# When compute nodes and control nodes use different interfaces,
# you can specify "api_interface" and other interfaces like below:
#compute01 neutron_external_interface=eth0 api_interface=em1 storage_interface=em1 tunnel_interface=em1

[storage]
storage01

[deployment]
localhost ansible_connection=local
```
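The inventory comment says every host must be resolvable from the deployment host. A minimal bash sketch that lists inventory hosts with no entry in a hosts file (it does not query DNS); `unresolved_inventory_hosts` is hypothetical, it skips `[group:children]` and `[group:vars]` sections as well as `localhost`, and it does not expand range patterns such as `control[01:03]`.

```shell
# unresolved_inventory_hosts INVENTORY [HOSTS_FILE]   (hypothetical helper)
unresolved_inventory_hosts() {
    local inventory="$1" hosts_file="${2:-/etc/hosts}" host
    awk '
        /^[[:space:]]*(#|$)/ { next }                         # comments, blank lines
        /^\[/ { plain = ($0 !~ /:(children|vars)]/); next }   # which kind of section
        plain && $1 != "localhost" { print $1 }               # first field = host
    ' "$inventory" | sort -u | while read -r host; do
        # Look for the name among the aliases of any non-comment hosts line.
        if ! awk -v h="$host" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
            END { exit !found }
        ' "$hosts_file"; then
            echo "$host"
        fi
    done
}
```

`unresolved_inventory_hosts /home/multinode` should print nothing before `kolla-ansible prechecks` is run.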

Gateway configuration:

`/home/kolla-ansible/ansible/roles/neutron/templates/ml2_conf.ini.j2`

Install OpenStack

```shell
# Pull the images in advance
kolla-ansible -i /home/multinode pull
# Run the prechecks
kolla-ansible prechecks -i /home/multinode
# Deploy
kolla-ansible deploy -i /home/multinode
```
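The three commands can be chained so that a failed stage stops the run before the next one starts. `kolla_deploy_all` is a hypothetical bash wrapper; `KOLLA_ANSIBLE` can point at another command (for example `echo`) for a dry run.

```shell
# kolla_deploy_all [INVENTORY]   (hypothetical wrapper)
# Runs pull, prechecks and deploy in order, stopping at the first failure.
kolla_deploy_all() {
    local inventory="${1:-/home/multinode}" kolla="${KOLLA_ANSIBLE:-kolla-ansible}" stage
    for stage in pull prechecks deploy; do
        echo "==> kolla-ansible ${stage}"
        if ! "$kolla" -i "$inventory" "$stage"; then
            echo "stage failed: ${stage}" >&2
            return 1
        fi
    done
}
```

Usage: `kolla_deploy_all /home/multinode`.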

Verify the deployment

```shell
kolla-ansible post-deploy
```

This creates the /etc/kolla/admin-openrc.sh file. Load it:

```shell
. /etc/kolla/admin-openrc.sh
```

Install the OpenStack client:

```shell
pip install --ignore-installed python-openstackclient
```
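Before using the client, the generated openrc file can be sanity-checked in a subshell so that the current shell is not modified. A minimal bash sketch; `check_openrc` is hypothetical and checks only four of the variables the file is expected to export.

```shell
# check_openrc [RC_FILE]   (hypothetical helper, default /etc/kolla/admin-openrc.sh)
check_openrc() {
    local rc="${1:-/etc/kolla/admin-openrc.sh}"
    (
        # Clear inherited values so that only the file itself is checked.
        unset OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME
        . "$rc" || exit 1
        missing=0
        for v in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME; do
            # ${!v} is bash indirect expansion: the value of the variable named by v.
            if [ -z "${!v:-}" ]; then
                echo "unset: $v"
                missing=1
            fi
        done
        exit "$missing"
    )
}
```

Once it passes, `openstack service list`, `openstack compute service list` and `openstack network agent list` give a quick view of the deployed services.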

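Summary:

In the multi-node OpenStack cluster:

- the haproxy containers run on the network nodes;
- the private network gateway, external gateway and router also run on the network nodes.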

Containers running on each node:

- control01: horizon, heat_engine, heat_api_cfn, heat_api, neutron_server, nova_novncproxy, nova_consoleauth, nova_conductor, nova_scheduler, nova_api, placement_api, cinder_scheduler, cinder_api, glance_registry, glance_api, keystone, rabbitmq, mariadb, cron, kolla_toolbox, fluentd, memcached
- control02: horizon, heat_engine, heat_api_cfn, heat_api, neutron_server, nova_novncproxy, nova_consoleauth, nova_conductor, nova_scheduler, nova_api, placement_api, cinder_scheduler, cinder_api, glance_registry, keystone, rabbitmq, mariadb, cron, kolla_toolbox, fluentd, memcached
- network01: neutron_metadata_agent, neutron_l3_agent, neutron_dhcp_agent, neutron_openvswitch_agent, openvswitch_vswitchd, openvswitch_db, keepalived, haproxy, cron, kolla_toolbox, fluentd
- network02: neutron_metadata_agent, neutron_l3_agent, neutron_dhcp_agent, neutron_openvswitch_agent, openvswitch_vswitchd, openvswitch_db, keepalived, haproxy, cron, kolla_toolbox, fluentd
- compute01, compute02: neutron_openvswitch_agent, openvswitch_vswitchd, openvswitch_db, nova_compute, nova_libvirt, nova_ssh, iscsid, cron, kolla_toolbox, fluentd
- storage01: cinder_backup, cinder_volume, tgtd, iscsid, cron, kolla_toolbox, fluentd
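The lists above can serve as a per-node checklist. A minimal bash sketch that compares an expected list with the running containers; `missing_containers` is hypothetical and reads the running names from standard input, for example from `docker ps --format '{{.Names}}'`.

```shell
# missing_containers EXPECTED_FILE   (hypothetical helper)
# EXPECTED_FILE holds one container name per line; running names come on stdin.
missing_containers() {
    local expected="$1" running name
    running="$(cat)"
    while read -r name; do
        [ -z "$name" ] && continue
        # Exact, fixed-string match against the running names.
        if ! printf '%s\n' "$running" | grep -Fqx -- "$name"; then
            echo "$name"
        fi
    done < "$expected"
}
```

Save a node's list one name per line (e.g. a hypothetical `expected-storage01.txt`) and run `docker ps --format '{{.Names}}' | missing_containers expected-storage01.txt` on that node; no output means nothing is missing.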

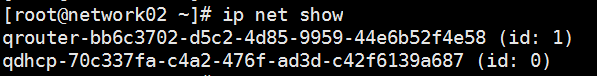

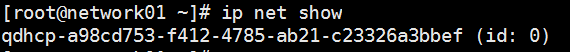

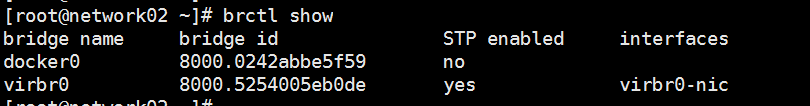

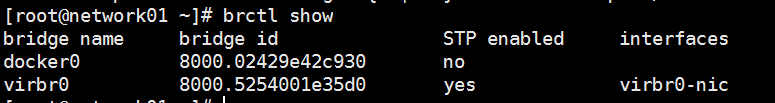

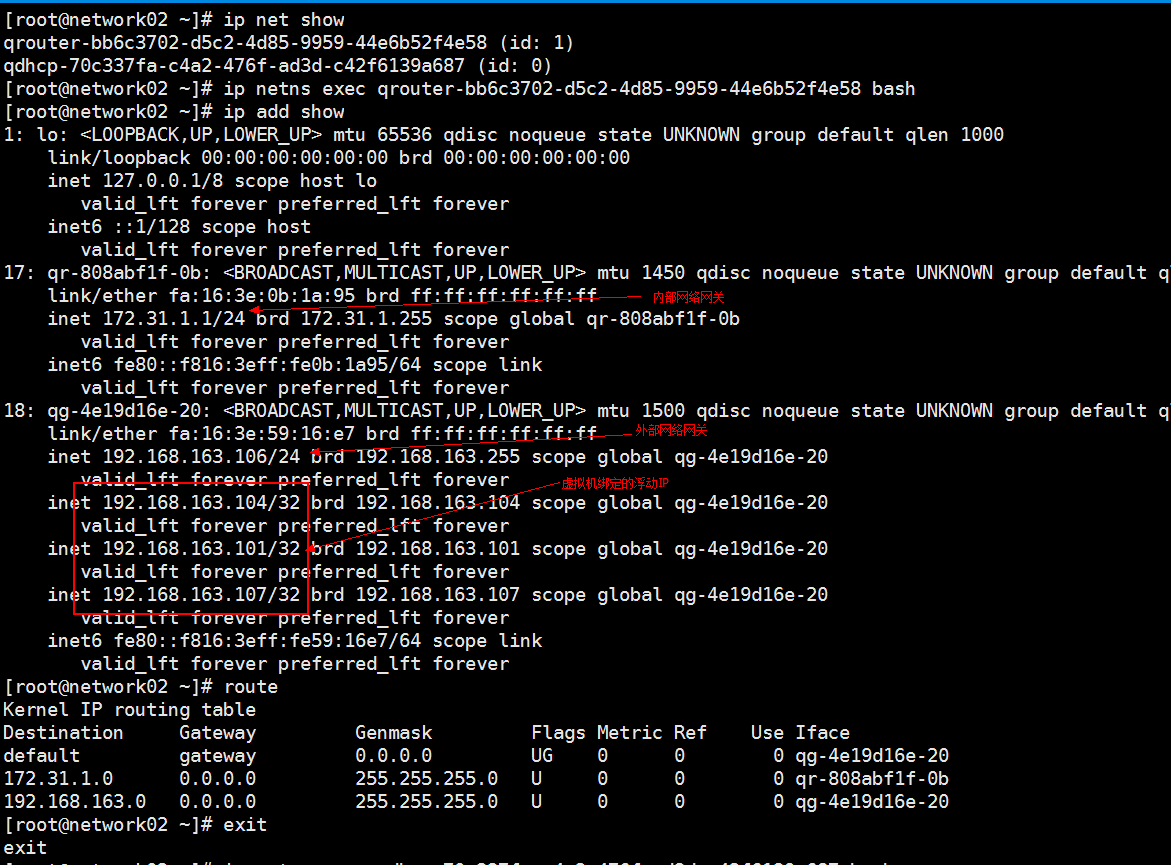

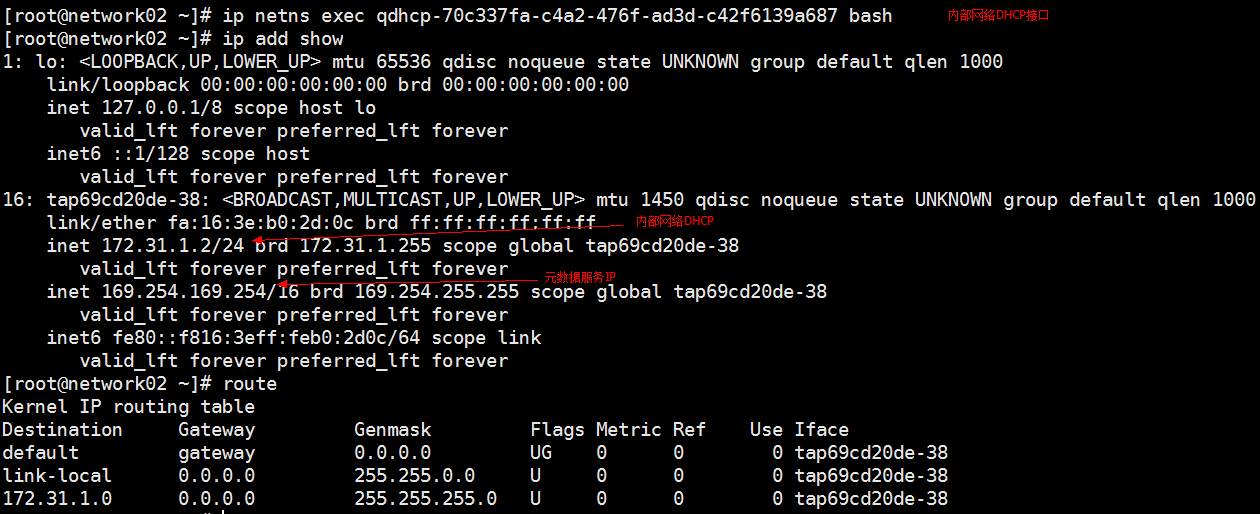

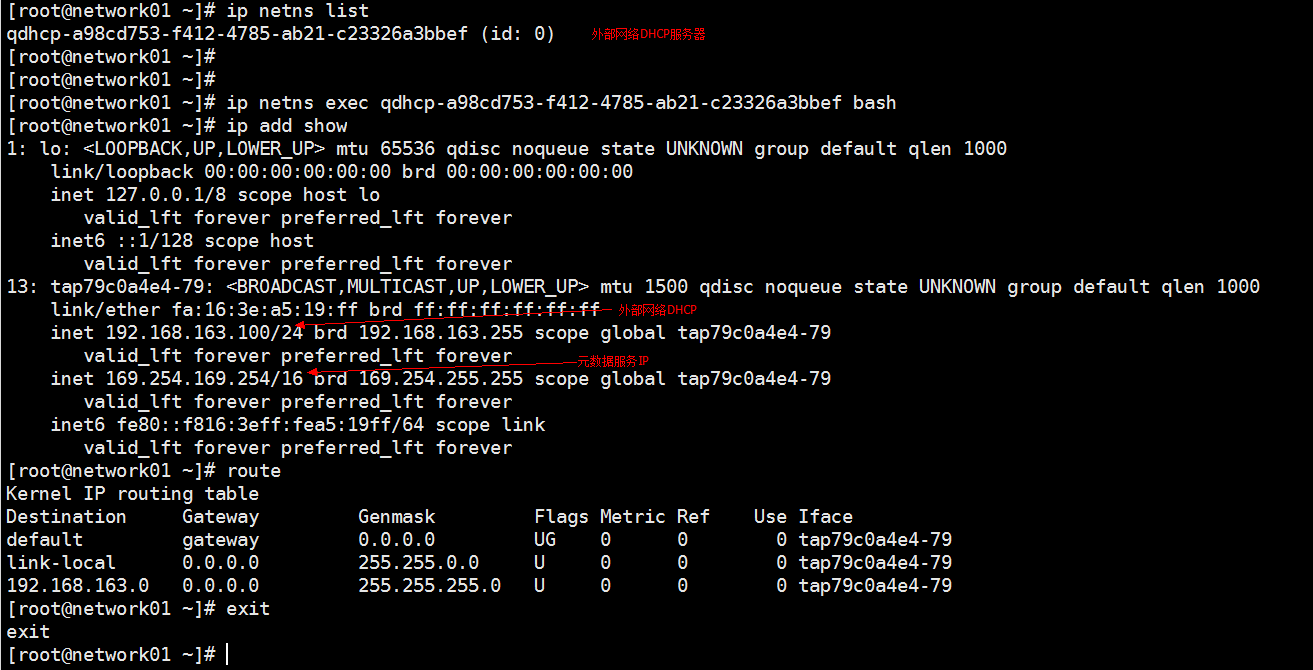

Private network gateway, external gateway and router:

First install bridge-utils:

```shell
yum install bridge-utils -y
```

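Common service directories on each node:

- /etc/kolla: service configuration directory
- /var/lib/docker/volumes/kolla_logs/_data: service log directory
- /var/lib/docker/volumes: directory where service data volumes are mapped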
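When a service misbehaves, the log directory is the first place to look. A minimal bash sketch that counts lines containing ` ERROR ` (the log-level field in OpenStack service logs) per file; `log_error_summary` is hypothetical.

```shell
# log_error_summary [LOG_DIR]   (hypothetical helper)
log_error_summary() {
    local dir="${1:-/var/lib/docker/volumes/kolla_logs/_data}"
    # grep -rc prints "path:count" for every file; keep non-zero counts and
    # sort by count, highest first.
    grep -rc ' ERROR ' "$dir" 2>/dev/null | awk -F: '$NF > 0' | sort -t: -k2,2nr
}
```

`log_error_summary | head` on a node lists the log files with the most errors.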

HAProxy configuration (numeric values such as ports, `maxconn`, `retries` and the `inter`/`rise`/`fall` check parameters are missing from this listing):

`grep -v "^$" /etc/kolla/haproxy/haproxy.cfg`

```
global
    chroot /var/lib/haproxy
    user haproxy
    group haproxy
    daemon
    log 192.168.41.23: local1
    maxconn
    stats socket /var/lib/kolla/haproxy/haproxy.sock

defaults
    log global
    mode http
    option redispatch
    option httplog
    option forwardfor
    retries
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout check 10s

listen stats
    bind 192.168.41.23:
    mode http
    stats enable
    stats uri /
    stats refresh 15s
    stats realm Haproxy\ Stats
    stats auth openstack:oa8hvXNwWT3h33auKwn2RcMdt0Q0IWxljLgz97i1

listen rabbitmq_management
    bind 192.168.41.20:
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen keystone_internal
    bind 192.168.41.20:
    http-request del-header X-Forwarded-Proto if { ssl_fc }
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen keystone_admin
    bind 192.168.41.20:
    http-request del-header X-Forwarded-Proto if { ssl_fc }
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen glance_registry
    bind 192.168.41.20:
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen glance_api
    bind 192.168.41.20:
    timeout client 6h
    timeout server 6h
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen nova_api
    bind 192.168.41.20:
    http-request del-header X-Forwarded-Proto if { ssl_fc }
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen nova_metadata
    bind 192.168.41.20:
    http-request del-header X-Forwarded-Proto if { ssl_fc }
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen placement_api
    bind 192.168.41.20:
    http-request del-header X-Forwarded-Proto
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen nova_novncproxy
    bind 192.168.41.20:
    http-request del-header X-Forwarded-Proto if { ssl_fc }
    http-request set-header X-Forwarded-Proto https if { ssl_fc }
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen neutron_server
    bind 192.168.41.20:
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen horizon
    bind 192.168.41.20:
    balance source
    http-request del-header X-Forwarded-Proto if { ssl_fc }
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen cinder_api
    bind 192.168.41.20:
    http-request del-header X-Forwarded-Proto if { ssl_fc }
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen heat_api
    bind 192.168.41.20:
    http-request del-header X-Forwarded-Proto if { ssl_fc }
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

listen heat_api_cfn
    bind 192.168.41.20:
    http-request del-header X-Forwarded-Proto if { ssl_fc }
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall
    server control03 192.168.41.30: check inter rise fall

# (NOTE): This defaults section deletes forwardfor as recommended by:
# https://marc.info/?l=haproxy&m=141684110710132&w=1
defaults
    log global
    mode http
    option redispatch
    option httplog
    retries
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout check 10s

listen mariadb
    mode tcp
    timeout client 3600s
    timeout server 3600s
    option tcplog
    option tcpka
    option mysql-check user haproxy post-
    bind 192.168.41.20:
    server control01 192.168.41.21: check inter rise fall
    server control02 192.168.41.22: check inter rise fall backup
    server control03 192.168.41.30: check inter rise fall backup
```
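The `stats socket` line also lets backend health be read from the command line: HAProxy's `show stat` command returns CSV in which field 1 is the proxy name, field 2 the server name and field 18 the status. A minimal bash sketch that prints every server not reported as `UP`; `down_servers` is hypothetical, and reaching the socket through the `keepalived` container assumes `socat` is available there, as implied by the `/check_alive.sh` script that keepalived runs against the same socket.

```shell
# down_servers   (hypothetical helper)
# Reads HAProxy "show stat" CSV on stdin and prints "proxy/server: STATUS"
# for every backend server whose status is not UP.
down_servers() {
    awk -F, '
        /^#/ { next }                                   # CSV header line
        $2 == "FRONTEND" || $2 == "BACKEND" { next }    # aggregate rows
        $18 != "UP" { print $1 "/" $2 ": " $18 }
    '
}
```

On a network node: `docker exec keepalived sh -c 'echo "show stat" | socat unix-connect:/var/lib/kolla/haproxy/haproxy.sock stdio' | down_servers`.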

`cat /etc/kolla/haproxy/config.json`

```json
{
    "command": "/usr/sbin/haproxy-systemd-wrapper -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid",
    "config_files": [
        {
            "source": "/var/lib/kolla/config_files/haproxy.cfg",
            "dest": "/etc/haproxy/haproxy.cfg",
            "owner": "root",
            "perm": ""
        },
        {
            "source": "/var/lib/kolla/config_files/haproxy.pem",
            "dest": "/etc/haproxy/haproxy.pem",
            "owner": "root",
            "perm": "",
            "optional": true
        }
    ]
}
```

`[root@network01 ~]# cat /etc/kolla/keepalived/keepalived.conf`

```
vrrp_script check_alive {
    script "/check_alive.sh"
    interval
    fall
    rise
}

vrrp_instance kolla_internal_vip_51 {
    state BACKUP
    nopreempt
    interface ens37
    virtual_router_id
    priority
    advert_int
    virtual_ipaddress {
        192.168.41.20 dev ens37
    }
    authentication {
        auth_type PASS
        auth_pass jPYBCht9ne37XTvQdeUxgh5xAdpG9vQp0gsB0jTk
    }
    track_script {
        check_alive
    }
}
```

`[root@network02 ~]# cat /etc/kolla/keepalived/keepalived.conf`

```
vrrp_script check_alive {
    script "/check_alive.sh"
    interval
    fall
    rise
}

vrrp_instance kolla_internal_vip_51 {
    state BACKUP
    nopreempt
    interface ens37
    virtual_router_id
    priority
    advert_int
    virtual_ipaddress {
        192.168.41.20 dev ens37
    }
    authentication {
        auth_type PASS
        auth_pass jPYBCht9ne37XTvQdeUxgh5xAdpG9vQp0gsB0jTk
    }
    track_script {
        check_alive
    }
}
```

`cat /check_alive.sh`

```shell
#!/bin/bash

# This will return 0 when it successfully talks to the haproxy daemon via the socket
# Failures return 1

echo "show info" | socat unix-connect:/var/lib/kolla/haproxy/haproxy.sock stdio > /dev/null
```
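Both network nodes start as `BACKUP` with `nopreempt`, so keepalived elects one of them as master and that node holds the VIP 192.168.41.20. To see which node currently owns it, a minimal bash sketch; `holds_vip` is hypothetical and parses `ip -o -4 addr show` output from standard input.

```shell
# holds_vip [VIP]   (hypothetical helper, default 192.168.41.20)
# Exits 0 if the VIP appears in the `ip -o -4 addr show` output on stdin.
holds_vip() {
    local vip="${1:-192.168.41.20}"
    # Field 4 of each "inet" line is ADDRESS/PREFIX; compare the address part.
    awk -v vip="$vip" '
        $3 == "inet" { split($4, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }
    '
}
```

Run on each network node: `ip -o -4 addr show dev ens37 | holds_vip 192.168.41.20 && echo "VIP is on $(hostname)"`.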
