CNN for NLP

卷积神经网络在自然语言处理任务中的应用。参考链接:Understanding Convolutional Neural Networks for NLP(2015.11)

Instead of image pixels, the input to most NLP tasks are sentences or documents represented as a matrix. Each row of the matrix corresponds to one token, typically a word, but it could be a character. That is, each row is vector that represents a word. Typically, these vectors are word embeddings (low-dimensional representations) like word2vec or GloVe, but they could also be one-hot vectors that index the word into a vocabulary. For a 10 word sentence using a 100-dimensional embedding we would have a 10×100 matrix as our input. That’s our “image”.

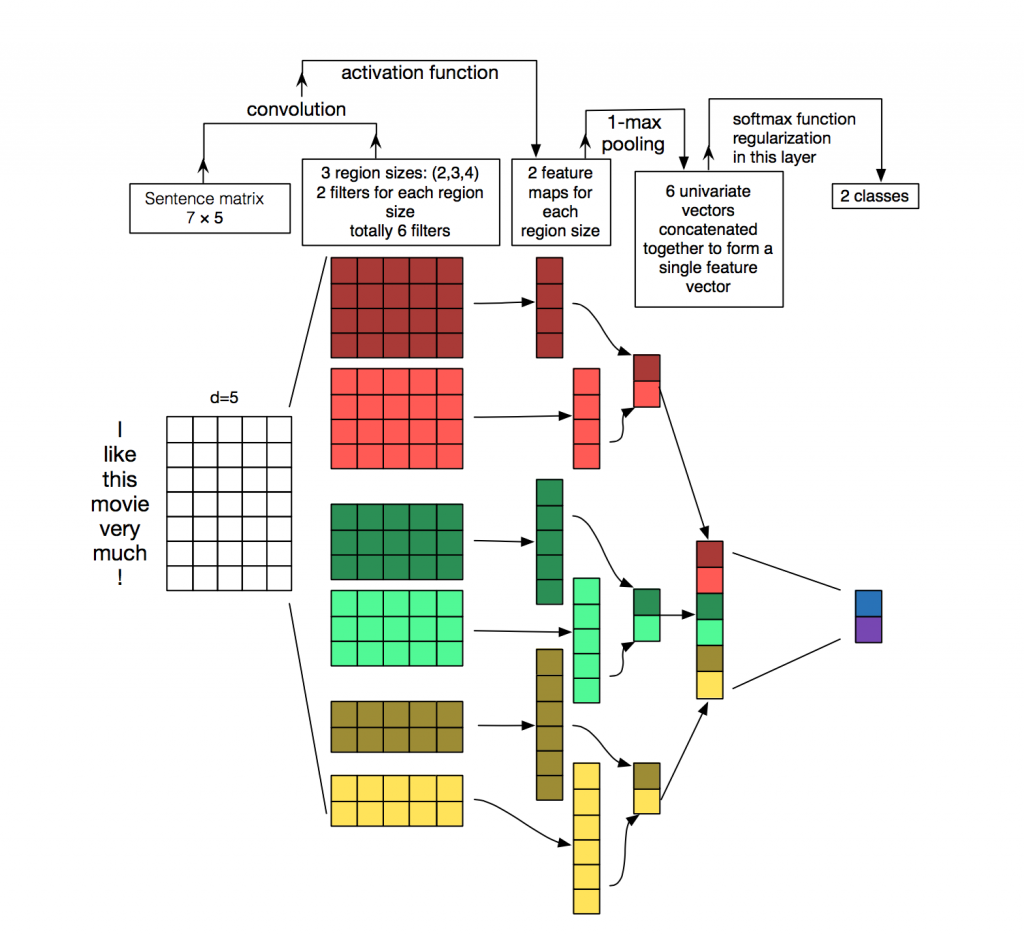

In vision, our filters slide over local patches of an image, but in NLP we typically use filters that slide over full rows of the matrix (words). Thus, the “width” of our filters is usually the same as the width of the input matrix. The height, or region size, may vary, but sliding windows over 2-5 words at a time is typical. Putting all the above together, a Convolutional Neural Network for NLP may look like this:

What about the nice intuitions we had for Computer Vision? Location Invariance and local Compositionality made intuitive sense for images, but not so much for NLP. You probably do care a lot where in the sentence a word appears. Pixels close to each other are likely to be semantically related (part of the same object), but the same isn’t always true for words. In many languages, parts of phrases could be separated by several other words. The compositional aspect isn’t obvious either. Clearly, words compose in some ways, like an adjective modifying a noun, but how exactly this works what higher level representations actually “mean” isn’t as obvious as in the Computer Vision case.

Given all this, it seems like CNNs wouldn’t be a good fit for NLP tasks. Recurrent Neural Networks make more intuitive sense. They resemble how we process language (or at least how we think we process language): Reading sequentially from left to right. Fortunately, this doesn’t mean that CNNs don’t work. All models are wrong, but some are useful. It turns out that CNNs applied to NLP problems perform quite well. The simple Bag of Words model is an obvious oversimplification with incorrect assumptions, but has nonetheless been the standard approach for years and lead to pretty good results.

A big argument for CNNs is that they are fast. Very fast. Convolutions are a central part of computer graphics and implemented on a hardware level on GPUs. Compared to something liken-grams, CNNs are also efficient in terms of representation. With a large vocabulary, computing anything more than 3-grams can quickly become expensive. Even Google doesn’t provide anything beyond 5-grams. Convolutional Filters learn good representations automatically, without needing to represent the whole vocabulary. It’s completely reasonable to have filters of size larger than 5. I like to think that many of the learned filters in the first layer are capturing features quite similar (but not limited) to n-grams, but represent them in a more compact way.

CNN Hyperparameters

Before explaining at how CNNs are applied to NLP tasks, let’s look at some of the choices you need to make when building a CNN. Hopefully this will help you better understand the literature in the field.

Narrow vs. Wide convolution

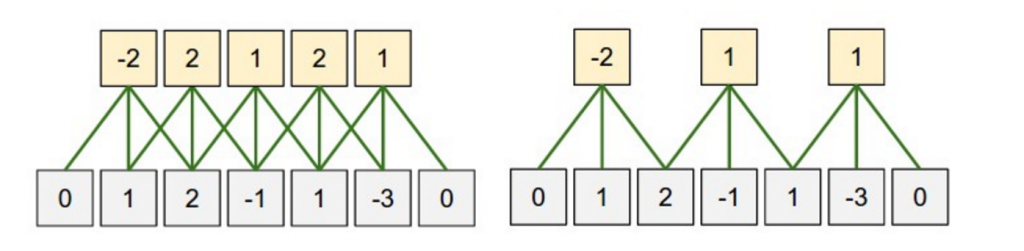

When I explained convolutions above I neglected a little detail of how we apply the filter. Applying a 3×3 filter at the center of the matrix works fine, but what about the edges? How would you apply the filter to the first element of a matrix that doesn’t have any neighboring elements to the top and left? You can use zero-padding. All elements that would fall outside of the matrix are taken to be zero. By doing this you can apply the filter to every element of your input matrix, and get a larger or equally sized output. Adding zero-padding is also called wide convolution, and not using zero-padding would be a narrow convolution. An example in 1D looks like this:

You can see how wide convolution is useful, or even necessary, when you have a large filter relative to the input size. In the above, the narrow convolution yields an output of size , and a wide convolution an output of size

. More generally, the formula for the output size is

.

Stride Size

Another hyperparameter for your convolutions is the stride size, defining by how much you want to shift your filter at each step. In all the examples above the stride size was 1, and consecutive applications of the filter overlapped. A larger stride size leads to fewer applications of the filter and a smaller output size. The following from the Stanford cs231 website shows stride sizes of 1 and 2 applied to a one-dimensional input:

In the literature we typically see stride sizes of 1, but a larger stride size may allow you to build a model that behaves somewhat similarly to a Recursive Neural Network, i.e. looks like a tree.

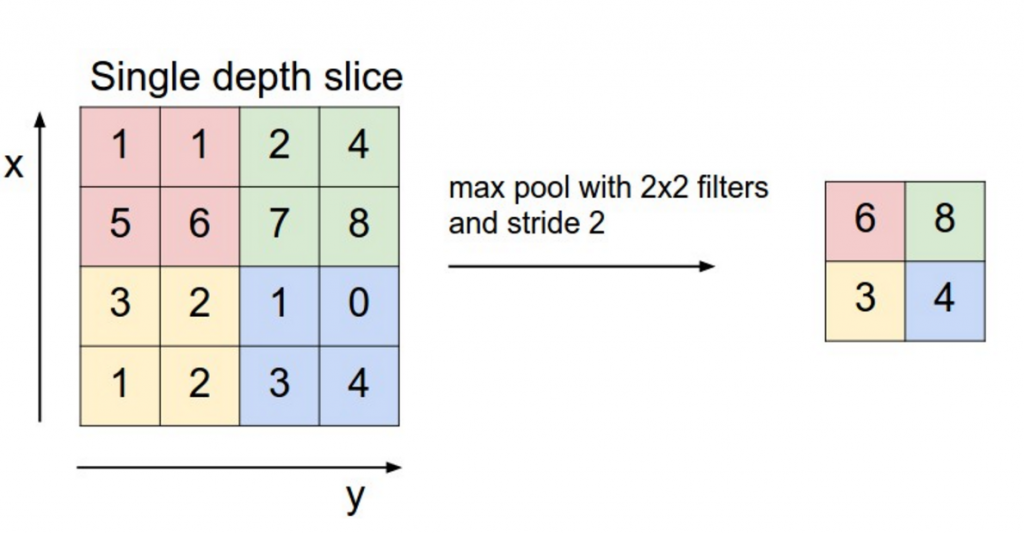

Pooling Layers

A key aspect of Convolutional Neural Networks are pooling layers, typically applied after the convolutional layers. Pooling layers subsample their input. The most common way to do pooling is to apply a operation to the result of each filter. You don’t necessarily need to pool over the complete matrix, you could also pool over a window. For example, the following shows max pooling for a 2×2 window (in NLP we typically are apply pooling over the complete output, yielding just a single number for each filter):

Why pooling? There are a couple of reasons. One property of pooling is that it provides a fixed size output matrix, which typically is required for classification. For example, if you have 1,000 filters and you apply max pooling to each, you will get a 1000-dimensional output, regardless of the size of your filters, or the size of your input. This allows you to use variable size sentences, and variable size filters, but always get the same output dimensions to feed into a classifier.

Pooling also reduces the output dimensionality but (hopefully) keeps the most salient information. You can think of each filter as detecting a specific feature, such as detecting if the sentence contains a negation like “not amazing” for example. If this phrase occurs somewhere in the sentence, the result of applying the filter to that region will yield a large value, but a small value in other regions. By performing the max operation you are keeping information about whether or not the feature appeared in the sentence, but you are losing information about where exactly it appeared. But isn’t this information about locality really useful? Yes, it is and it’s a bit similar to what a bag of n-grams model is doing. You are losing global information about locality (where in a sentence something happens), but you are keeping local information captured by your filters, like “not amazing” being very different from “amazing not”.

In imagine recognition, pooling also provides basic invariance to translating (shifting) and rotation. When you are pooling over a region, the output will stay approximately the same even if you shift/rotate the image by a few pixels, because the max operations will pick out the same value regardless.

Channels

The last concept we need to understand are channels. Channels are different “views” of your input data. For example, in image recognition you typically have RGB (red, green, blue) channels. You can apply convolutions across channels, either with different or equal weights. In NLP you could imagine having various channels as well: You could have a separate channels for different word embeddings (word2vec and GloVe for example), or you could have a channel for the same sentence represented in different languages, or phrased in different ways.

Convolutional Neural Networks applied to NLP

Let’s now look at some of the applications of CNNs to Natural Language Processing. I’ll try it to summarize some of the research results. Invariably I’ll miss many interesting applications (do let me know in the comments), but I hope to cover at least some of the more popular results.

The most natural fit for CNNs seem to be classifications tasks, such as Sentiment Analysis, Spam Detection or Topic Categorization. Convolutions and pooling operations lose information about the local order of words, so that sequence tagging as in PoS Tagging or Entity Extraction is a bit harder to fit into a pure CNN architecture (though not impossible, you can add positional features to the input).

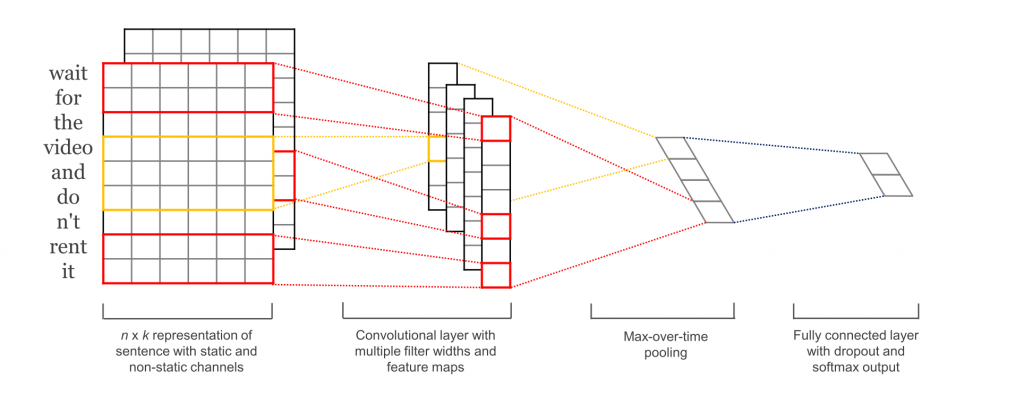

[1] Evaluates a CNN architecture on various classification datasets, mostly comprised of Sentiment Analysis and Topic Categorization tasks. The CNN architecture achieves very good performance across datasets, and new state-of-the-art on a few. Surprisingly, the network used in this paper is quite simple, and that’s what makes it powerful.The input layer is a sentence comprised of concatenated word2vec word embeddings. That’s followed by a convolutional layer with multiple filters, then a max-pooling layer, and finally a softmax classifier. The paper also experiments with two different channels in the form of static and dynamic word embeddings, where one channel is adjusted during training and the other isn’t. A similar, but somewhat more complex, architecture was previously proposed in [2]. [6] Adds an additional layer that performs “semantic clustering” to this network architecture.

Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification

[4] Trains a CNN from scratch, without the need for for pre-trained word vectors like word2vec or GloVe. It applies convolutions directly to one-hot vectors. The author also proposes a space-efficient bag-of-words-like representation for the input data, reducing the number of parameters the network needs to learn. In [5] the author extends the model with an additional unsupervised “region embedding” that is learned using a CNN predicting the context of text regions. The approach in these papers seems to work well for long-form texts (like movie reviews), but their performance on short texts (like tweets) isn’t clear. Intuitively, it makes sense that using pre-trained word embeddings for short texts would yield larger gains than using them for long texts.

Building a CNN architecture means that there are many hyperparameters to choose from, some of which I presented above: Input represenations (word2vec, GloVe, one-hot), number and sizes of convolution filters, pooling strategies (max, average), and activation functions (ReLU, tanh). [7] performs an empirical evaluation on the effect of varying hyperparameters in CNN architectures, investigating their impact on performance and variance over multiple runs. If you are looking to implement your own CNN for text classification, using the results of this paper as a starting point would be an excellent idea. A few results that stand out are that max-pooling always beat average pooling, that the ideal filter sizes are important but task-dependent, and that regularization doesn’t seem to make a big different in the NLP tasks that were considered. A caveat of this research is that all the datasets were quite similar in terms of their document length, so the same guidelines may not apply to data that looks considerably different.

[8] explores CNNs for Relation Extraction and Relation Classification tasks. In addition to the word vectors, the authors use the relative positions of words to the entities of interest as an input to the convolutional layer. This models assumes that the positions of the entities are given, and that each example input contains one relation. [9] and [10] have explored similar models.

Another interesting use case of CNNs in NLP can be found in [11] and [12], coming out of Microsoft Research. These papers describe how to learn semantically meaningful representations of sentences that can be used for Information Retrieval. The example given in the papers includes recommending potentially interesting documents to users based on what they are currently reading. The sentence representations are trained based on search engine log data.

Most CNN architectures learn embeddings (low-dimensional representations) for words and sentences in one way or another as part of their training procedure. Not all papers though focus on this aspect of training or investigate how meaningful the learned embeddings are. [13] presents a CNN architecture to predict hashtags for Facebook posts, while at the same time generating meaningful embeddings for words and sentences. These learned embeddings are then successfully applied to another task – recommending potentially interesting documents to users, trained based on clickstream data.

Character-Level CNNs

So far, all of the models presented were based on words. But there has also been research in applying CNNs directly to characters. [14] learns character-level embeddings, joins them with pre-trained word embeddings, and uses a CNN for Part of Speech tagging. [15][16] explores the use of CNNs to learn directly from characters, without the need for any pre-trained embeddings. Notably, the authors use a relatively deep network with a total of 9 layers, and apply it to Sentiment Analysis and Text Categorization tasks. Results show that learning directly from character-level input works very well on large datasets (millions of examples), but underperforms simpler models on smaller datasets (hundreds of thousands of examples). [17] explores to application of character-level convolutions to Language Modeling, using the output of the character-level CNN as the input to an LSTM at each time step. The same model is applied to various languages.

What’s amazing is that essentially all of the papers above were published in the past 1-2 years. Obviously there has been excellent work with CNNs on NLP before, as inNatural Language Processing (almost) from Scratch, but the pace of new results and state of the art systems being published is clearly accelerating.

Paper References

- [1] Kim, Y. (2014). Convolutional Neural Networks for Sentence Classification. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP 2014), 1746–1751.

- [2] Kalchbrenner, N., Grefenstette, E., & Blunsom, P. (2014). A Convolutional Neural Network for Modelling Sentences. Acl, 655–665.

- [3] Santos, C. N. dos, & Gatti, M. (2014). Deep Convolutional Neural Networks for Sentiment Analysis of Short Texts. In COLING-2014 (pp. 69–78).

- [4] Johnson, R., & Zhang, T. (2015). Effective Use of Word Order for Text Categorization with Convolutional Neural Networks. To Appear: NAACL-2015, (2011).

- [5] Johnson, R., & Zhang, T. (2015). Semi-supervised Convolutional Neural Networks for Text Categorization via Region Embedding.

- [6] Wang, P., Xu, J., Xu, B., Liu, C., Zhang, H., Wang, F., & Hao, H. (2015). Semantic Clustering and Convolutional Neural Network for Short Text Categorization. Proceedings ACL 2015, 352–357.

- [7] Zhang, Y., & Wallace, B. (2015). A Sensitivity Analysis of (and Practitioners’ Guide to) Convolutional Neural Networks for Sentence Classification,

- [8] Nguyen, T. H., & Grishman, R. (2015). Relation Extraction: Perspective from Convolutional Neural Networks. Workshop on Vector Modeling for NLP, 39–48.

- [9] Sun, Y., Lin, L., Tang, D., Yang, N., Ji, Z., & Wang, X. (2015). Modeling Mention , Context and Entity with Neural Networks for Entity Disambiguation, (Ijcai), 1333–1339.

- [10] Zeng, D., Liu, K., Lai, S., Zhou, G., & Zhao, J. (2014). Relation Classification via Convolutional Deep Neural Network. Coling, (2011), 2335–2344.

- [11] Gao, J., Pantel, P., Gamon, M., He, X., & Deng, L. (2014). Modeling Interestingness with Deep Neural Networks.

- [12] Shen, Y., He, X., Gao, J., Deng, L., & Mesnil, G. (2014). A Latent Semantic Model with Convolutional-Pooling Structure for Information Retrieval. Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management – CIKM ’14, 101–110.

- [13] Weston, J., & Adams, K. (2014). # T AG S PACE : Semantic Embeddings from Hashtags, 1822–1827.

- [14] Santos, C., & Zadrozny, B. (2014). Learning Character-level Representations for Part-of-Speech Tagging. Proceedings of the 31st International Conference on Machine Learning, ICML-14(2011), 1818–1826.

- [15] Zhang, X., Zhao, J., & LeCun, Y. (2015). Character-level Convolutional Networks for Text Classification, 1–9.

- [16] Zhang, X., & LeCun, Y. (2015). Text Understanding from Scratch. arXiv E-Prints, 3, 011102.

- [17] Kim, Y., Jernite, Y., Sontag, D., & Rush, A. M. (2015). Character-Aware Neural Language Models.

CNN for NLP的更多相关文章

- CNN for NLP (CS224D)

斯坦福课程CS224d: Deep Learning for Natural Language Processing lecture13:Convolutional neural networks - ...

- CNN for NLP(2)

参考链接: 卷积神经网络(CNN)在句子建模上的应用, 卷积神经网络CNN在自然语言处理中的应用, CNN在NLP中的应用.

- 【论文笔记】CNN for NLP

什么是Convolutional Neural Network(卷积神经网络)? 最早应该是LeCun(1998)年论文提出,其结果如下:运用于手写数字识别.详细就不介绍,可参考zouxy09的专栏, ...

- 用于NLP的CNN架构搬运:from keras0.x to keras2.x

本文亮点: 将用于自然语言处理的CNN架构,从keras0.3.3搬运到了keras2.x,强行练习了Sequential+Model的混合使用,具体来说,是Model里嵌套了Sequential. ...

- 理解NLP中的卷积神经网络(CNN)

此篇文章是Denny Britz关于CNN在NLP中应用的理解,他本人也曾在Google Brain项目中参与多项关于NLP的项目. · 翻译不周到的地方请大家见谅. 阅读完本文大概需要7分钟左右的时 ...

- 卷积神经网络(CNN)在句子建模上的应用

之前的博文已经介绍了CNN的基本原理,本文将大概总结一下最近CNN在NLP中的句子建模(或者句子表示)方面的应用情况,主要阅读了以下的文献: Kim Y. Convolutional neural n ...

- NLP十大里程碑

NLP十大里程碑 2.1 里程碑一:1985复杂特征集 复杂特征集(complex feature set)又叫做多重属性(multiple features)描写.语言学里,这种描写方法最早出现在语 ...

- 注意力机制(Attention Mechanism)应用——自然语言处理(NLP)

近年来,深度学习的研究越来越深入,在各个领域也都获得了不少突破性的进展.基于注意力(attention)机制的神经网络成为了最近神经网络研究的一个热点,下面是一些基于attention机制的神经网络在 ...

- 卷积神经网络CNN在自然语言处理中的应用

卷积神经网络(Convolution Neural Network, CNN)在数字图像处理领域取得了巨大的成功,从而掀起了深度学习在自然语言处理领域(Natural Language Process ...

随机推荐

- CAN总线多节点通信异常分析及解决

一.CAN物理层特征 CAN收发器的作用是负责逻辑电平和信号电平之间的转换.即从CAN控制芯片输出逻辑电平到CAN收发器,然后经过CAN收发器内部转换将逻辑电平转换为差分信号输出到CAN总线上,CAN ...

- vue的特点 关键字

1.对mvvm模式的理解 Model-view-viewmodel Model数据模型 View代表ui组件 Viewmodel监听模型数据的改变和控制视图行为.处理用户交互,简单理解就是一个同步vi ...

- kubeadm安装集群系列-3.添加工作节点

添加工作节点 worker通过kubeadm join加入集群,加入所需的集群的token默认24小时过期 查看Token kubeadm token list # 如果失效创建一个新的 kubead ...

- Redis的大白话解释

Redis的官方解释可以百度,这里讲redis缓存为啥速度非常快! 这么说吧,别人问你什么是“redis”,如果你知道,你可以直接吧啦吧啦一大堆,其实这个时候你的大脑就类似redis缓存,别人问的“r ...

- Mysql安装后在服务里找不到和服务启动不起来的解决方法

一,在安装完Mysql数据库后,发现在控制面板->管理->服务中找不到Mysql的服务启动 解决方法如下:开启命令行,按照如下步骤即可: 1.进入到mysql的安装包,在bin里执行:my ...

- vue加scoped后无法修改样式(无法修改element UI 样式)

有的时候element提供的默认的样式不能满足项目的需要,就需要我们队标签的样式进行修改,但是发现修改的样式不起作用 第一种方法 原因:scoped 解决方法:去掉scoped 注意:此时该样式会污染 ...

- Android MVC MVP MVVM (三)

MVVM Model-View-ViewModel的简写 在MVP基础上实现数据视图的DataBinding,数据变化,视图自动变化,反之也成立. DataBinding 启用DataBinding ...

- [转帖] ./demoCA/newcerts: No such file or directory openssl 生成证书时问题的解决.

接上面一篇blog 发现openssl 生成server.crt 时有问题. 找了一个网站处理了一下: http://blog.sina.com.cn/s/blog_49f8dc400100tznt. ...

- SQLYOG如何将数据导出excel格式

方法/步骤 如图,笔者的数据库中有一张student表,想把这张表中的数据导出成excel 在这张表上右击,选择“Export”,再选择“Export Table Data as CSV, ...

- 【2018】Python面试题【web框架】

1.谈谈你对http协议的认识. HTTP协议(HyperText Transfer Protocol,超文本传输协议)是用于从WWW服务器传输超文本到本地浏览器的传送协议.它可以使浏览器更加高效,使 ...