Machine Learning Week 7 Programming Assignment: SVM

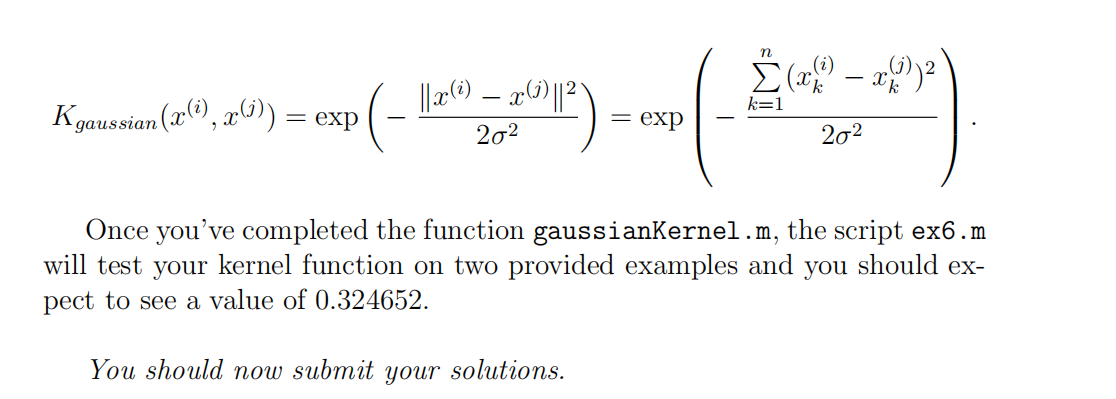

1. Gaussian Kernel

function sim = gaussianKernel(x1, x2, sigma)

%RBFKERNEL returns a radial basis function kernel between x1 and x2

% sim = gaussianKernel(x1, x2) returns a gaussian kernel between x1 and x2

% and returns the value in sim

% Ensure that x1 and x2 are column vectors

x1 = x1(:); x2 = x2(:);

% You need to return the following variables correctly.

sim = 0;

% ====================== YOUR CODE HERE ======================

% Instructions: Fill in this function to return the similarity between x1

% and x2 computed using a Gaussian kernel with bandwidth

% sigma

%

m = length(x1);

% Accumulate the negated squared Euclidean distance between x1 and x2

total = 0;

for i = 1:m

total = total - (x1(i) - x2(i))^2;

endfor

sim = exp(total / (2 * sigma^2));

% =============================================================

end
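The loop above computes exp(-||x1 - x2||^2 / (2 * sigma^2)). As a minimal sketch, the same value can be obtained without a loop. The name `gaussianKernelVec` is hypothetical and not part of the assignment files; the test vectors are the ones I recall from the exercise script, so treat them as an assumption.

```octave
% Vectorized sketch of the Gaussian (RBF) kernel.
% gaussianKernelVec is a hypothetical name used only for this illustration.
gaussianKernelVec = @(x1, x2, sigma) ...
    exp(-sum((x1(:) - x2(:)).^2) / (2 * sigma^2));

% Squared distance is 1 + 4 + 4 = 9, so sim = exp(-9 / 8)
sim = gaussianKernelVec([1 2 1], [0 4 -1], 2);
fprintf('sim = %f\n', sim);   % prints sim = 0.324652
```

Forcing both inputs to columns with `(:)` mirrors what the loop version does before iterating, so row and column vectors give the same result.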

2. Example Dataset 3

function [C, sigma] = dataset3Params(X, y, Xval, yval)

%DATASET3PARAMS returns your choice of C and sigma for Part 3 of the exercise

%where you select the optimal (C, sigma) learning parameters to use for SVM

%with RBF kernel

% [C, sigma] = DATASET3PARAMS(X, y, Xval, yval) returns your choice of C and

% sigma. You should complete this function to return the optimal C and

% sigma based on a cross-validation set.

%

% You need to return the following variables correctly.

C = 1;

sigma = 0.3;

% ====================== YOUR CODE HERE ======================

% Instructions: Fill in this function to return the optimal C and sigma

% learning parameters found using the cross validation set.

% You can use svmPredict to predict the labels on the cross

% validation set. For example,

% predictions = svmPredict(model, Xval);

% will return the predictions on the cross validation set.

%

% Note: You can compute the prediction error using

% mean(double(predictions ~= yval))

%

steps=[0.01,0.03,0.1,0.3,1,3,10,30];

minerror=Inf;

minC=Inf;

minsigma=Inf;

for i=1:length(steps),

for j=1:length(steps),

curc=steps(i);

cursigma=steps(j);

model=svmTrain(X,y,curc,@(x1,x2)gaussianKernel(x1,x2,cursigma));

predictions=svmPredict(model,Xval);

% Named err rather than error to avoid shadowing the built-in error()

err=mean(double(predictions~=yval));

if(err<minerror)

minerror=err;

minC=curc;

minsigma=cursigma;

end

endfor

endfor

C=minC;

sigma=minsigma;

% =========================================================================

end
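The nested loops above are a plain grid search over every (C, sigma) pair. A rough sketch of the same idea using `ndgrid` and `arrayfun` follows. Here `cv_error` is a made-up stand-in for "train with svmTrain, predict on Xval, measure the error", chosen so the snippet runs without the course files; it is not the real validation error.

```octave
% Grid-search sketch. cv_error is a hypothetical stand-in for the
% cross-validation error, with its minimum at C = 1, sigma = 0.1.
steps = [0.01 0.03 0.1 0.3 1 3 10 30];
cv_error = @(C, sigma) log10(C).^2 + (log10(sigma) + 1).^2;

% Every combination of candidate values: rows vary C, columns vary sigma
[Cgrid, Sgrid] = ndgrid(steps, steps);
errs = arrayfun(cv_error, Cgrid, Sgrid);

% Pick the pair with the smallest error
[minerror, k] = min(errs(:));
C = Cgrid(k);
sigma = Sgrid(k);
fprintf('C = %g, sigma = %g\n', C, sigma);
```

With the real assignment, the body of `cv_error` would train and evaluate a model, which is much slower, so the explicit loops are just as reasonable a choice.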

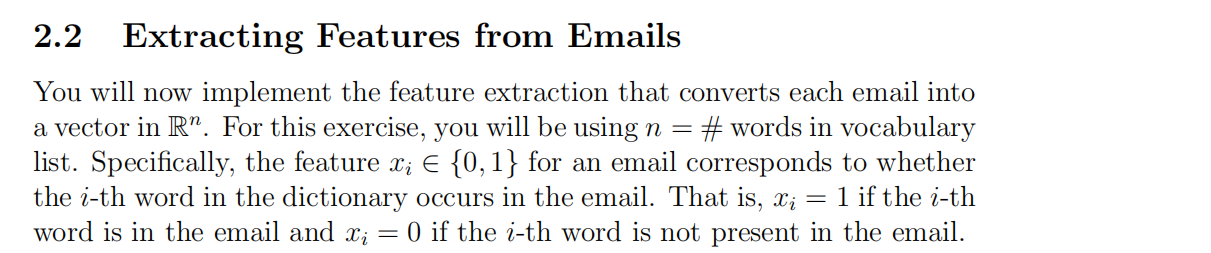

3. Vocabulary List

function word_indices = processEmail(email_contents)

%PROCESSEMAIL preprocesses the body of an email and

%returns a list of word_indices

% word_indices = PROCESSEMAIL(email_contents) preprocesses

% the body of an email and returns a list of indices of the

% words contained in the email.

%

% Load Vocabulary

vocabList = getVocabList();

% Init return value

word_indices = [];

% ========================== Preprocess Email ===========================

% Find the Headers ( \n\n and remove )

% Uncomment the following lines if you are working with raw emails with the

% full headers

% hdrstart = strfind(email_contents, ([char(10) char(10)]));

% email_contents = email_contents(hdrstart(1):end);

% Lower case

email_contents = lower(email_contents);

% Strip all HTML

% Looks for any expression that starts with < and ends with >, and does

% not have any < or > inside the tag, and replaces it with a space

email_contents = regexprep(email_contents, '<[^<>]+>', ' ');

% Handle Numbers

% Look for one or more characters between 0-9

email_contents = regexprep(email_contents, '[0-9]+', 'number');

% Handle URLS

% Look for strings starting with http:// or https://

email_contents = regexprep(email_contents, '(http|https)://[^\s]*', 'httpaddr');

% Handle Email Addresses

% Look for strings with @ in the middle

email_contents = regexprep(email_contents, '[^\s]+@[^\s]+', 'emailaddr');

% Handle $ sign

email_contents = regexprep(email_contents, '[$]+', 'dollar');

% ========================== Tokenize Email ===========================

% Output the email to screen as well

fprintf('\n==== Processed Email ====\n\n');

% Process file

l = 0;

while ~isempty(email_contents)

% Tokenize and also get rid of any punctuation

[str, email_contents] = strtok(email_contents, [' @$/#.-:&*+=[]?!(){},''">_<;%' char(10) char(13)]);

% Remove any non alphanumeric characters

str = regexprep(str, '[^a-zA-Z0-9]', '');

% Stem the word

% (the porterStemmer sometimes has issues, so we use a try catch block)

try

str = porterStemmer(strtrim(str));

catch

str = '';

continue;

end

% Skip the word if it is too short

if length(str) < 1

continue;

end

% Look up the word in the dictionary and add to word_indices if

% found

% ====================== YOUR CODE HERE ======================

% Instructions: Fill in this function to add the index of str to

% word_indices if it is in the vocabulary. At this point

% of the code, you have a stemmed word from the email in

% the variable str. You should look up str in the

% vocabulary list (vocabList). If a match exists, you

% should add the index of the word to the word_indices

% vector. Concretely, if str = 'action', then you should

% look up the vocabulary list to find where in vocabList

% 'action' appears. For example, if vocabList{18} =

% 'action', then, you should add 18 to the word_indices

% vector (e.g., word_indices = [word_indices ; 18]; ).

%

% Note: vocabList{idx} returns the word with index idx in the

% vocabulary list.

%

% Note: You can use strcmp(str1, str2) to compare two strings (str1 and

% str2). It will return 1 only if the two strings are equivalent.

%

for idx=1:length(vocabList),

if(strcmp(vocabList{idx},str)==1)

word_indices=[word_indices;idx];

end

endfor

% =============================================================

% Print to screen, ensuring that the output lines are not too long

if (l + length(str) + 1) > 78

fprintf('\n');

l = 0;

end

fprintf('%s ', str);

l = l + length(str) + 1;

end

% Print footer

fprintf('\n\n=========================\n');

end
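The vocabulary lookup inside the loop compares `str` against each entry one at a time. Since `strcmp` also accepts a cell array and returns a logical mask, the inner loop can be replaced by a single `find` call. The sketch below uses a tiny made-up vocabulary and word list in place of `getVocabList()` and the tokenized email, so the indices are illustrative only.

```octave
% Hypothetical four-word vocabulary standing in for getVocabList()
vocabList = {'action'; 'anyon'; 'email'; 'know'};

% Hypothetical stemmed tokens; 'zzz' is not in the vocabulary
words = {'anyon', 'know', 'zzz', 'email'};

word_indices = [];
for k = 1:numel(words)
  % strcmp on a cell array returns a logical mask; find gives the index,
  % or an empty result when the word is not in the vocabulary
  idx = find(strcmp(vocabList, words{k}));
  word_indices = [word_indices; idx];
end

disp(word_indices');   % displays 2 4 3
```

Appending an empty `idx` leaves `word_indices` unchanged, so no explicit "not found" branch is needed.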

4. emailFeatures

function x = emailFeatures(word_indices)

%EMAILFEATURES takes in a word_indices vector and produces a feature vector

%from the word indices

% x = EMAILFEATURES(word_indices) takes in a word_indices vector and

% produces a feature vector from the word indices.

% Total number of words in the dictionary

n = 1899;

% You need to return the following variables correctly.

x = zeros(n, 1);

% ====================== YOUR CODE HERE ======================

% Instructions: Fill in this function to return a feature vector for the

% given email (word_indices). To help make it easier to

% process the emails, we have already pre-processed each

% email and converted each word in the email into an index in

% a fixed dictionary (of 1899 words). The variable

% word_indices contains the list of indices of the words

% which occur in one email.

%

% Concretely, if an email has the text:

%

% The quick brown fox jumped over the lazy dog.

%

% Then, the word_indices vector for this text might look

% like:

%

% 60 100 33 44 10 53 60 58 5

%

% where, we have mapped each word onto a number, for example:

%

% the -- 60

% quick -- 100

% ...

%

% (note: the above numbers are just an example and are not the

% actual mappings).

%

% Your task is to take one such word_indices vector and construct

% a binary feature vector that indicates whether a particular

% word occurs in the email. That is, x(i) = 1 when word i

% is present in the email. Concretely, if the word 'the' (say,

% index 60) appears in the email, then x(60) = 1. The feature

% vector should look like:

%

% x = [ 0 0 0 0 1 0 0 0 ... 0 0 0 0 1 ... 0 0 0 1 0 ..];

%

%

for i=1:length(word_indices),

x(word_indices(i))=1;

endfor

% =========================================================================

end
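Because the feature vector is binary, the loop can also be written as one indexed assignment. A small sketch, reusing the example indices from the comment above (which, as that comment notes, are not the real mappings):

```octave
n = 1899;                                   % dictionary size from the exercise
word_indices = [60; 100; 33; 44; 10; 53; 60; 58; 5];   % example indices only

x = zeros(n, 1);
x(word_indices) = 1;    % repeated indices (60 appears twice) still set x(60) once

fprintf('%d distinct words present\n', sum(x));   % prints 8 distinct words present
```

Indexed assignment ignores duplicates naturally, which matches the requirement that x(i) records presence rather than a count.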
