Whitening

The goal of whitening is to make the input less redundant; more formally, our desiderata are that our learning algorithms sees a training input where (i) the features are less correlated with each other, and (ii) the features all have the same variance.

example

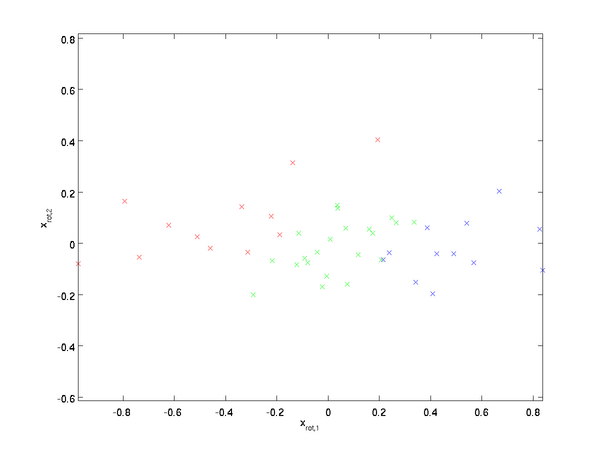

How can we make our input features uncorrelated with each other? We had already done this when computing

. Repeating our previous figure, our plot for

was:

The covariance matrix of this data is given by:

It is no accident that the diagonal values are

and

. Further, the off-diagonal entries are zero; thus,

and

are uncorrelated, satisfying one of our desiderata for whitened data (that the features be less correlated).

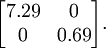

To make each of our input features have unit variance, we can simply rescale each feature

by

. Concretely, we define our whitened data

as follows:

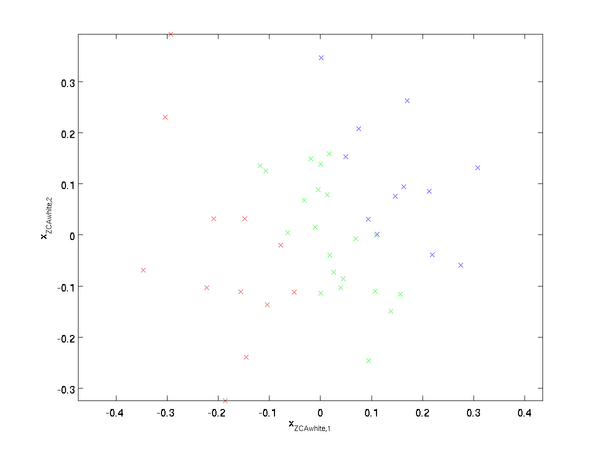

Plotting

, we get:

This data now has covariance equal to the identity matrix

. We say that

is our PCA whitened version of the data: The different components of

are uncorrelated and have unit variance.

ZCA Whitening

Finally, it turns out that this way of getting the data to have covariance identity

isn't unique. Concretely, if

is any orthogonal matrix, so that it satisfies

(less formally, if

is a rotation/reflection matrix), then

will also have identity covariance. In ZCA whitening, we choose

. We define

Plotting

, we get:

It can be shown that out of all possible choices for

, this choice of rotation causes

to be as close as possible to the original input data

.

When using ZCA whitening (unlike PCA whitening), we usually keep all

dimensions of the data, and do not try to reduce its dimension.

Regularizaton

When implementing PCA whitening or ZCA whitening in practice, sometimes some of the eigenvalues

will be numerically close to 0, and thus the scaling step where we divide by

would involve dividing by a value close to zero; this may cause the data to blow up (take on large values) or otherwise be numerically unstable. In practice, we therefore implement this scaling step using a small amount of regularization, and add a small constant

to the eigenvalues before taking their square root and inverse:

When

takes values around

, a value of

might be typical.

For the case of images, adding

here also has the effect of slightly smoothing (or low-pass filtering) the input image. This also has a desirable effect of removing aliasing artifacts caused by the way pixels are laid out in an image, and can improve the features learned (details are beyond the scope of these notes).

ZCA whitening is a form of pre-processing of the data that maps it from

to

. It turns out that this is also a rough model of how the biological eye (the retina) processes images. Specifically, as your eye perceives images, most adjacent "pixels" in your eye will perceive very similar values, since adjacent parts of an image tend to be highly correlated in intensity. It is thus wasteful for your eye to have to transmit every pixel separately (via your optic nerve) to your brain. Instead, your retina performs a decorrelation operation (this is done via retinal neurons that compute a function called "on center, off surround/off center, on surround") which is similar to that performed by ZCA. This results in a less redundant representation of the input image, which is then transmitted to your brain.

Whitening的更多相关文章

- (六)6.8 Neurons Networks implements of PCA ZCA and whitening

PCA 给定一组二维数据,每列十一组样本,共45个样本点 -6.7644914e-01 -6.3089308e-01 -4.8915202e-01 ... -4.4722050e-01 -7.4 ...

- (六)6.7 Neurons Networks whitening

PCA的过程结束后,还有一个与之相关的预处理步骤,白化(whitening) 对于输入数据之间有很强的相关性,所以用于训练数据是有很大冗余的,白化的作用就是降低输入数据的冗余,通过白化可以达到(1)降 ...

- UFLDL教程之(三)PCA and Whitening exercise

Exercise:PCA and Whitening 第0步:数据准备 UFLDL下载的文件中,包含数据集IMAGES_RAW,它是一个512*512*10的矩阵,也就是10幅512*512的图像 ( ...

- Deep Learning学习随记(二)Vectorized、PCA和Whitening

接着上次的记,前面看了稀疏自编码.按照讲义,接下来是Vectorized, 翻译成向量化?暂且这么认为吧. Vectorized: 这节是老师教我们编程技巧了,这个向量化的意思说白了就是利用已经被优化 ...

- Modeling Filters and Whitening Filters

Colored and White Process White Process White Process,又称为White Noise(白噪声),其中white来源于白光,寓意着PSD的平坦分布,w ...

- 白化(Whitening): PCA 与 ZCA (转)

转自:findbill 本文讨论白化(Whitening),以及白化与 PCA(Principal Component Analysis) 和 ZCA(Zero-phase Component Ana ...

- CS229 6.8 Neurons Networks implements of PCA ZCA and whitening

PCA 给定一组二维数据,每列十一组样本,共45个样本点 -6.7644914e-01 -6.3089308e-01 -4.8915202e-01 ... -4.4722050e-01 -7.4 ...

- CS229 6.7 Neurons Networks whitening

PCA的过程结束后,还有一个与之相关的预处理步骤,白化(whitening) 对于输入数据之间有很强的相关性,所以用于训练数据是有很大冗余的,白化的作用就是降低输入数据的冗余,通过白化可以达到(1)降 ...

- PCA和Whitening

PCA: PCA的具有2个功能,一是维数约简(可以加快算法的训练速度,减小内存消耗等),一是数据的可视化. PCA并不是线性回归,因为线性回归是保证得到的函数是y值方面误差最小,而PCA是保证得到的函 ...

- 【DeepLearning】Exercise:PCA and Whitening

Exercise:PCA and Whitening 习题链接:Exercise:PCA and Whitening pca_gen.m %%============================= ...

随机推荐

- 51Nod 天堂里的游戏

多年后,每当Noder看到吉普赛人,就会想起那个遥远的下午. Noder躺在草地上漫无目的的张望,二楼的咖啡馆在日光下闪着亮,像是要进化成一颗巨大的咖啡豆.天气稍有些冷,但草还算暖和.不远的地方坐着一 ...

- 关于zxing生成二维码,在微信长按识别不了问题

在做校园学生到校情况签到系统时,我采用了zxing作为二维码生成工具.在测试的时候使用微信打开连接发现.我长按我的二维码之后,总是不会出现以下这种识别二维码的选项. 这就大大的降低了用户的体验,只能大 ...

- appium 模拟实现物理按键点击

appium自动化测试中,当确认,搜索,返回等按键通过定位点击不好实现的时候,可以借助物理按键来实现.appium支持以下物理按键模拟: 电话键 KEYCODE_CALL 拨号键 5 KEYCODE_ ...

- blongsTo 用法

当存在这样两张表的时候: one{ , 'name':"name" 'sex':"sex" } two{ , 'type':json } 当我们需要在调用到 o ...

- [POI2011]MET-Meteors(整体二分+树状数组)

题意 给定一个环,每个节点有一个所属国家,k次事件,每次对[l,r]区间上的每个点点权加上一个值,求每个国家最早多少次操作之后所有点的点权和能达到一个值 题解 一个一个国家算会T.这题要用整体二分.我 ...

- python supper()函数

参考链接:https://www.runoob.com/python/python-func-super.html super() 函数是用于调用父类(超类)的一个方法. class Field(ob ...

- 【模板】2-SAT 问题(2-SAT)

[模板]2-SAT 问题 题目背景 2-SAT 问题 模板 题目描述 有n个布尔变量 \(x_1\) ~ \(x_n\) ,另有m个需要满足的条件,每个条件的形式都是" \(x_i\) ...

- HDU 4941 Magical Forest (Hash)

这个题比赛的时候是乱搞的,比赛结束之后学长说是映射+hash才恍然大悟.因此决定好好学一下hash. 题意: M*N的格子,里面有一些格子里面有一个值. 有三种操作: 1.交换两行的值. 2.交换两列 ...

- PKU 3281 Dining 网络流 (抄模板)

题意: 农夫约翰为他的牛准备了F种食物和D种饮料.每头牛都有各自喜欢的食物和饮料,而每种食物或饮料只能分配给一头牛.最多能有多少头牛可以同时得到各自喜欢的食物和饮料? 思路: 用 s -> 食物 ...

- Spring Cloud学习笔记【九】配置中心Spring Cloud Config

Spring Cloud Config 是 Spring Cloud 团队创建的一个全新项目,用来为分布式系统中的基础设施和微服务应用提供集中化的外部配置支持,它分为服务端与客户端两个部分.其中服务端 ...