Data - Hadoop Pseudo-Distributed Configuration - Using Hadoop 2.8.0 and Ubuntu 16.04

System version

anliven@Ubuntu1604:~$ uname -a

Linux Ubuntu1604 4.8.0-36-generic #36~16.04.1-Ubuntu SMP Sun Feb 5 09:39:57 UTC 2017 x86_64 x86_64 x86_64 GNU/Linux

anliven@Ubuntu1604:~$

anliven@Ubuntu1604:~$ cat /proc/version

Linux version 4.8.0-36-generic (buildd@lgw01-18) (gcc version 5.4.0 20160609 (Ubuntu 5.4.0-6ubuntu1~16.04.4) ) #36~16.04.1-Ubuntu SMP Sun Feb 5 09:39:57 UTC 2017

anliven@Ubuntu1604:~$

anliven@Ubuntu1604:~$ lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 16.04.2 LTS

Release: 16.04

Codename: xenial

anliven@Ubuntu1604:~$

Create the hadoop user

anliven@Ubuntu1604:~$ sudo useradd -m hadoop -s /bin/bash

anliven@Ubuntu1604:~$ sudo passwd hadoop

Enter new UNIX password:

Retype new UNIX password:

passwd: password updated successfully

anliven@Ubuntu1604:~$

anliven@Ubuntu1604:~$ sudo adduser hadoop sudo

Adding user `hadoop' to group `sudo' ...

Adding user hadoop to group sudo

Done.

anliven@Ubuntu1604:~$
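Run a second time, `useradd` fails because the user already exists. Below is a minimal, idempotent sketch of the two steps above written as POSIX sh functions. It assumes an Ubuntu system where `useradd`, `passwd`, `adduser` and `sudo` are available. `ensure_user` and `ensure_in_group` are hypothetical helper names and are not part of any tool.

```shell
# Hypothetical helpers: create a login user only if it does not exist yet,
# and add it to a group only if it is not already a member.

ensure_user() {
    # $1: user name
    if id -u "$1" >/dev/null 2>&1; then
        echo "user $1 already exists"
        return 0
    fi
    # Same commands as in the transcript above.
    sudo useradd -m "$1" -s /bin/bash && sudo passwd "$1"
}

ensure_in_group() {
    # $1: user name, $2: group name
    # `id -nG` lists the user's group names separated by spaces.
    if id -nG "$1" | tr ' ' '\n' | grep -qx "$2"; then
        echo "user $1 is already in group $2"
        return 0
    fi
    sudo adduser "$1" "$2"
}

# Usage:
#   ensure_user hadoop && ensure_in_group hadoop sudo
```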

Update apt and install vim

hadoop@Ubuntu1604:~$ sudo apt-get update

Hit:1 http://mirrors.aliyun.com/ubuntu xenial InRelease

Hit:2 http://mirrors.aliyun.com/ubuntu xenial-updates InRelease

Hit:3 http://mirrors.aliyun.com/ubuntu xenial-backports InRelease

Hit:4 http://mirrors.aliyun.com/ubuntu xenial-security InRelease

Reading package lists... Done

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ sudo apt-get install vim

Reading package lists... Done

Building dependency tree

Reading state information... Done

vim is already the newest version (2:7.4.1689-3ubuntu1.2).

0 upgraded, 0 newly installed, 0 to remove and 50 not upgraded.

hadoop@Ubuntu1604:~$

Configure passwordless SSH login

hadoop@Ubuntu1604:~$ sudo apt-get install openssh-server

Reading package lists... Done

Building dependency tree

Reading state information... Done

openssh-server is already the newest version (1:7.2p2-4ubuntu2.1).

0 upgraded, 0 newly installed, 0 to remove and 50 not upgraded.

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ cd ~

hadoop@Ubuntu1604:~$ mkdir .ssh

hadoop@Ubuntu1604:~$ cd .ssh

hadoop@Ubuntu1604:~/.ssh$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:DzjVWgTQB5I1JGRBmWi6gVHJ03V4WnJZEdojtbou0DM hadoop@Ubuntu1604

The key's randomart image is:

+---[RSA 2048]----+

| o.o =X@B=*o |

|. + +.*+*B.. |

| o + *+.* |

|. o .o = . |

| o .o S |

| . . E. + |

| . o. . |

| .. |

| .. |

+----[SHA256]-----+

hadoop@Ubuntu1604:~/.ssh$

hadoop@Ubuntu1604:~/.ssh$ cat id_rsa.pub >> authorized_keys

hadoop@Ubuntu1604:~/.ssh$ ls -l

total 12

-rw-rw-r-- 1 hadoop hadoop 399 Apr 27 07:33 authorized_keys

-rw------- 1 hadoop hadoop 1679 Apr 27 07:32 id_rsa

-rw-r--r-- 1 hadoop hadoop 399 Apr 27 07:32 id_rsa.pub

hadoop@Ubuntu1604:~/.ssh$

hadoop@Ubuntu1604:~/.ssh$ cd

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ ssh localhost

The authenticity of host 'localhost (127.0.0.1)' can't be established.

ECDSA key fingerprint is SHA256:fZ7fAvnnFk0/Imkn0YPdc2Gzxnfr0IJGSRb1swbm7oU.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

Welcome to Ubuntu 16.04.2 LTS (GNU/Linux 4.8.0-36-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

44 packages can be updated.

0 updates are security updates.

*** System restart required ***

Last login: Thu Apr 27 07:25:26 2017 from 192.168.16.1

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ exit

logout

Connection to localhost closed.

hadoop@Ubuntu1604:~$
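The SSH steps above can also be scripted so that running them again does not append a duplicate key. This sketch assumes OpenSSH's `ssh-keygen`, where `-N ''` sets an empty passphrase and `-f` sets the key file. It also sets `authorized_keys` to mode 600, a conservative choice because sshd can refuse keys stored in files with loose permissions. `append_key_once`, `setup_local_ssh` and the `SSH_DIR` variable are hypothetical names introduced here.

```shell
# Hypothetical helpers: non-interactive, idempotent version of the SSH steps above.

append_key_once() {
    # $1: public key file, $2: authorized_keys file
    touch "$2"
    key=$(cat "$1")
    # Append the key only if the exact line is not already present.
    if ! grep -qxF "$key" "$2"; then
        cat "$1" >> "$2"
    fi
    chmod 600 "$2"
}

setup_local_ssh() {
    ssh_dir="${SSH_DIR:-$HOME/.ssh}"
    mkdir -p "$ssh_dir"
    chmod 700 "$ssh_dir"
    # Generate an RSA key pair with an empty passphrase only if none exists yet.
    if [ ! -f "$ssh_dir/id_rsa" ]; then
        ssh-keygen -t rsa -N '' -f "$ssh_dir/id_rsa"
    fi
    append_key_once "$ssh_dir/id_rsa.pub" "$ssh_dir/authorized_keys"
}
```

After `setup_local_ssh`, the command `ssh -o BatchMode=yes localhost true` should succeed without a password prompt. BatchMode makes ssh fail instead of prompting, so the check also fails if the localhost host key has not been accepted yet. Connect once interactively first, as shown above.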

Install Java

hadoop@Ubuntu1604:~$ dpkg -l |grep jdk

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ sudo apt-get install openjdk-8-jre openjdk-8-jdk

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

......

......

......

done.

Processing triggers for libc-bin (2.23-0ubuntu7) ...

Processing triggers for ca-certificates (20160104ubuntu1) ...

Updating certificates in /etc/ssl/certs...

0 added, 0 removed; done.

Running hooks in /etc/ca-certificates/update.d...

done.

done.

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ dpkg -l |grep jdk

ii openjdk-8-jdk:amd64 8u121-b13-0ubuntu1.16.04.2 amd64 OpenJDK Development Kit (JDK)

ii openjdk-8-jdk-headless:amd64 8u121-b13-0ubuntu1.16.04.2 amd64 OpenJDK Development Kit (JDK) (headless)

ii openjdk-8-jre:amd64 8u121-b13-0ubuntu1.16.04.2 amd64 OpenJDK Java runtime, using Hotspot JIT

ii openjdk-8-jre-headless:amd64 8u121-b13-0ubuntu1.16.04.2 amd64 OpenJDK Java runtime, using Hotspot JIT (headless)

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ dpkg -L openjdk-8-jdk | grep '/bin$'

/usr/lib/jvm/java-8-openjdk-amd64/bin

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ vim ~/.bashrc

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ head ~/.bashrc |grep java

export JAVA_HOME="/usr/lib/jvm/java-8-openjdk-amd64"

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ source ~/.bashrc

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ echo $JAVA_HOME

/usr/lib/jvm/java-8-openjdk-amd64

hadoop@Ubuntu1604:~$

hadoop@Ubuntu1604:~$ java -version

openjdk version "1.8.0_121"

OpenJDK Runtime Environment (build 1.8.0_121-8u121-b13-0ubuntu1.16.04.2-b13)

OpenJDK 64-Bit Server VM (build 25.121-b13, mixed mode)

hadoop@Ubuntu1604:~$
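On Ubuntu, `/usr/bin/javac` is a symlink managed by update-alternatives. JAVA_HOME can therefore also be derived from its resolved path instead of from `dpkg -L`. This sketch uses hypothetical helper names and assumes GNU `readlink -f` and a `javac` (from openjdk-8-jdk) on PATH. If `start-dfs.sh` later reports that JAVA_HOME is not set, a common fix is to set it explicitly in etc/hadoop/hadoop-env.sh.

```shell
# Hypothetical helpers: derive and sanity-check JAVA_HOME.

java_home_from_javac() {
    # $1: resolved path of the javac binary, e.g. .../java-8-openjdk-amd64/bin/javac
    # Strip the trailing "bin/javac" to get the JDK root directory.
    dirname "$(dirname "$1")"
}

check_java_home() {
    # Succeeds only if JAVA_HOME points at a directory with an executable bin/java.
    if [ -n "$JAVA_HOME" ] && [ -x "$JAVA_HOME/bin/java" ]; then
        echo "JAVA_HOME ok: $JAVA_HOME"
    else
        echo "JAVA_HOME is unset or invalid: '$JAVA_HOME'" >&2
        return 1
    fi
}

# Usage:
#   export JAVA_HOME="$(java_home_from_javac "$(readlink -f "$(command -v javac)")")"
#   check_java_home
```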

Install Hadoop

hadoop@Ubuntu1604:~$ sudo tar -zxf ~/hadoop-2.8.0.tar.gz -C /usr/local

[sudo] password for hadoop:

hadoop@Ubuntu1604:~$ cd /usr/local

hadoop@Ubuntu1604:/usr/local$ sudo mv ./hadoop-2.8.0/ ./hadoop

hadoop@Ubuntu1604:/usr/local$ sudo chown -R hadoop ./hadoop

hadoop@Ubuntu1604:/usr/local$ ls -l |grep hadoop

drwxr-xr-x 9 hadoop dialout 4096 Mar 17 13:31 hadoop

hadoop@Ubuntu1604:/usr/local$ cd ./hadoop

hadoop@Ubuntu1604:/usr/local/hadoop$ ls -l

total 148

drwxr-xr-x 2 hadoop dialout 4096 Mar 17 13:31 bin

drwxr-xr-x 3 hadoop dialout 4096 Mar 17 13:31 etc

drwxr-xr-x 2 hadoop dialout 4096 Mar 17 13:31 include

drwxr-xr-x 3 hadoop dialout 4096 Mar 17 13:31 lib

drwxr-xr-x 2 hadoop dialout 4096 Mar 17 13:31 libexec

-rw-r--r-- 1 hadoop dialout 99253 Mar 17 13:31 LICENSE.txt

-rw-r--r-- 1 hadoop dialout 15915 Mar 17 13:31 NOTICE.txt

-rw-r--r-- 1 hadoop dialout 1366 Mar 17 13:31 README.txt

drwxr-xr-x 2 hadoop dialout 4096 Mar 17 13:31 sbin

drwxr-xr-x 4 hadoop dialout 4096 Mar 17 13:31 share

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hadoop version

Hadoop 2.8.0

Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 91f2b7a13d1e97be65db92ddabc627cc29ac0009

Compiled by jdu on 2017-03-17T04:12Z

Compiled with protoc 2.5.0

From source with checksum 60125541c2b3e266cbf3becc5bda666

This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-2.8.0.jar

hadoop@Ubuntu1604:/usr/local/hadoop$

Hadoop pseudo-distributed configuration

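Hadoop can run on a single node in pseudo-distributed mode. Each Hadoop daemon runs as a separate Java process, and jobs read their input from files in HDFS. The single node acts as both the NameNode and the DataNode.

Once the pseudo-distributed configuration is in place, deleting the properties from core-site.xml switches Hadoop from pseudo-distributed mode back to non-distributed (standalone) mode.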
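Following the note above, here is a sketch of switching back to standalone mode. When core-site.xml contains no properties, fs.defaultFS falls back to its default, the local file system (file:///). The function name and the `.pseudo.bak` backup suffix are hypothetical. Restoring the backup switches back to pseudo-distributed mode.

```shell
# Hypothetical helper: back up core-site.xml and replace it with an empty
# <configuration>, so every core property uses its default value.
# $1: Hadoop configuration directory (default: /usr/local/hadoop/etc/hadoop)

use_standalone_core_site() {
    conf_dir="${1:-/usr/local/hadoop/etc/hadoop}"
    # Back up only once, so a second call does not overwrite the saved settings.
    if [ -f "$conf_dir/core-site.xml" ] && [ ! -f "$conf_dir/core-site.xml.pseudo.bak" ]; then
        cp "$conf_dir/core-site.xml" "$conf_dir/core-site.xml.pseudo.bak"
    fi
    cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
</configuration>
EOF
}

# Restore pseudo-distributed mode later with:
#   cp /usr/local/hadoop/etc/hadoop/core-site.xml.pseudo.bak /usr/local/hadoop/etc/hadoop/core-site.xml
```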

Edit the configuration files

hadoop@Ubuntu1604:~$ cd /usr/local/hadoop/etc/hadoop

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$ vim core-site.xml

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$ cat core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$ vim hdfs-site.xml

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$ cat hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/data</value>

</property>

</configuration>

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$
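Instead of editing the two files in vim, you can write them non-interactively. This sketch reproduces the properties shown above and omits the Apache license comment. The function name and its two arguments are hypothetical, and the defaults match the paths used in this article. It overwrites any existing files.

```shell
# Hypothetical helper: write core-site.xml and hdfs-site.xml for pseudo-distributed mode.
# $1: configuration directory, $2: base directory used for hadoop.tmp.dir

write_pseudo_distributed_conf() {
    conf_dir="${1:-/usr/local/hadoop/etc/hadoop}"
    tmp_dir="${2:-/usr/local/hadoop/tmp}"

    # Unquoted EOF so that $tmp_dir is expanded inside the here-documents.
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:$tmp_dir</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF

    cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:$tmp_dir/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:$tmp_dir/dfs/data</value>
  </property>
</configuration>
EOF
}
```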

Format the NameNode

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs namenode -format

17/04/27 23:39:01 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: user = hadoop

STARTUP_MSG: host = Ubuntu1604/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.8.0

......

......

......

17/04/27 23:39:02 INFO namenode.FSImage: Allocated new BlockPoolId: BP-806199003-127.0.1.1-1493307542086

17/04/27 23:39:02 INFO common.Storage: Storage directory /usr/local/hadoop/tmp/dfs/name has been successfully formatted.

17/04/27 23:39:02 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

17/04/27 23:39:02 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 323 bytes saved in 0 seconds.

17/04/27 23:39:02 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

17/04/27 23:39:02 INFO util.ExitUtil: Exiting with status 0

17/04/27 23:39:02 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at Ubuntu1604/127.0.1.1

************************************************************/

hadoop@Ubuntu1604:/usr/local/hadoop$
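Formatting should normally happen only once. Re-formatting discards the existing HDFS metadata and assigns a new clusterID. A DataNode whose data directory still carries the old clusterID then refuses to start. This sketch guards the format step, assuming that a formatted storage directory contains `current/VERSION`, as Hadoop 2.x storage directories do. The function name is hypothetical.

```shell
# Hypothetical helper: run `hdfs namenode -format` only if the NameNode
# storage directory has not been formatted yet.
# $1: dfs.namenode.name.dir as a local path, $2: Hadoop install directory

format_namenode_once() {
    name_dir="${1:-/usr/local/hadoop/tmp/dfs/name}"
    hadoop_home="${2:-/usr/local/hadoop}"
    if [ -f "$name_dir/current/VERSION" ]; then
        echo "already formatted: $name_dir"
        return 0
    fi
    "$hadoop_home/bin/hdfs" namenode -format
}

# Usage:
#   format_namenode_once /usr/local/hadoop/tmp/dfs/name /usr/local/hadoop
```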

Start the NameNode and DataNode daemons

hadoop@Ubuntu1604:/usr/local/hadoop$ ./sbin/start-dfs.sh

Starting namenodes on [localhost]

localhost: starting namenode, logging to /usr/local/hadoop/logs/hadoop-hadoop-namenode-Ubuntu1604.out

localhost: starting datanode, logging to /usr/local/hadoop/logs/hadoop-hadoop-datanode-Ubuntu1604.out

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

ECDSA key fingerprint is SHA256:fZ7fAvnnFk0/Imkn0YPdc2Gzxnfr0IJGSRb1swbm7oU.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.

0.0.0.0: starting secondarynamenode, logging to /usr/local/hadoop/logs/hadoop-hadoop-secondarynamenode-Ubuntu1604.out

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ jps

1908 Jps

1576 DataNode

1467 NameNode

1791 SecondaryNameNode

hadoop@Ubuntu1604:/usr/local/hadoop$
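Checking the `jps` listing by eye works, but the same check can be scripted. This sketch defines a hypothetical helper that reads `jps` output on stdin and reports which expected daemon names are absent. It relies only on jps printing one `<pid> <name>` pair per line, as in the output above.

```shell
# Hypothetical helper: report which expected Java daemons are missing from
# `jps` output. Reads jps output on stdin; expected names are the arguments.

missing_daemons() {
    out=$(cat)
    missing=""
    for name in "$@"; do
        # The daemon name is the second field of each jps line.
        if ! printf '%s\n' "$out" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            missing="$missing $name"
        fi
    done
    if [ -n "$missing" ]; then
        echo "missing:$missing"
        return 1
    fi
    echo "all running"
}

# Usage:
#   jps | missing_daemons NameNode DataNode SecondaryNameNode
# After starting YARN and the history server, also check:
#   jps | missing_daemons ResourceManager NodeManager JobHistoryServer
```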

Access the web UI

hadoop@Ubuntu1604:/usr/local/hadoop$ ip addr show enp0s3

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:02:49:c1 brd ff:ff:ff:ff:ff:ff

inet 192.168.16.100/24 brd 192.168.16.255 scope global enp0s3

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe02:49c1/64 scope link

valid_lft forever preferred_lft forever

hadoop@Ubuntu1604:/usr/local/hadoop$

Open the web UI at http://192.168.16.100:50070 to view NameNode/DataNode information and browse the files in HDFS.
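The address used for the web UI comes from the `inet` line of the `ip addr` output above. This sketch defines a hypothetical helper that extracts the first IPv4 address from that output and builds the NameNode web UI URL. It assumes the Hadoop 2.x default port 50070 used here.

```shell
# Hypothetical helper: read `ip addr show <iface>` output on stdin and print
# the NameNode web UI URL for the first IPv4 address found.

namenode_ui_url() {
    # "inet 192.168.16.100/24 ..." -> take field 2 and drop the "/24" prefix length.
    ip4=$(awk '$1 == "inet" { split($2, a, "/"); print a[1]; exit }')
    [ -n "$ip4" ] || return 1
    echo "http://$ip4:50070"
}

# Usage:
#   ip addr show enp0s3 | namenode_ui_url
```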

Run a Hadoop pseudo-distributed example

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -mkdir -p /user/hadoop

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -mkdir input

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -put ./etc/hadoop/*.xml input

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -ls

Found 1 items

drwxr-xr-x - hadoop supergroup 0 2017-04-29 07:42 input

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -ls input

Found 8 items

-rw-r--r-- 1 hadoop supergroup 4942 2017-04-29 07:42 input/capacity-scheduler.xml

-rw-r--r-- 1 hadoop supergroup 1111 2017-04-29 07:42 input/core-site.xml

-rw-r--r-- 1 hadoop supergroup 9683 2017-04-29 07:42 input/hadoop-policy.xml

-rw-r--r-- 1 hadoop supergroup 1181 2017-04-29 07:42 input/hdfs-site.xml

-rw-r--r-- 1 hadoop supergroup 620 2017-04-29 07:42 input/httpfs-site.xml

-rw-r--r-- 1 hadoop supergroup 3518 2017-04-29 07:42 input/kms-acls.xml

-rw-r--r-- 1 hadoop supergroup 5546 2017-04-29 07:42 input/kms-site.xml

-rw-r--r-- 1 hadoop supergroup 690 2017-04-29 07:42 input/yarn-site.xml

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.8.0.jar grep input output 'dfs[a-z.]+'

17/04/29 07:43:54 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

......

......

......

17/04/29 07:43:58 INFO mapreduce.Job: map 100% reduce 100%

17/04/29 07:43:58 INFO mapreduce.Job: Job job_local329465708_0002 completed successfully

17/04/29 07:43:58 INFO mapreduce.Job: Counters: 35

File System Counters

FILE: Number of bytes read=1222362

FILE: Number of bytes written=2503241

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=55020

HDFS: Number of bytes written=515

HDFS: Number of read operations=67

HDFS: Number of large read operations=0

HDFS: Number of write operations=16

Map-Reduce Framework

Map input records=4

Map output records=4

Map output bytes=101

Map output materialized bytes=115

Input split bytes=132

Combine input records=0

Combine output records=0

Reduce input groups=1

Reduce shuffle bytes=115

Reduce input records=4

Reduce output records=4

Spilled Records=8

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=0

Total committed heap usage (bytes)=1054867456

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=219

File Output Format Counters

Bytes Written=77

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -ls

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2017-04-29 07:42 input

drwxr-xr-x - hadoop supergroup 0 2017-04-29 07:43 output

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -ls output

Found 2 items

-rw-r--r-- 1 hadoop supergroup 0 2017-04-29 07:43 output/_SUCCESS

-rw-r--r-- 1 hadoop supergroup 77 2017-04-29 07:43 output/part-r-00000

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -cat output/*

1 dfsadmin

1 dfs.replication

1 dfs.namenode.name.dir

1 dfs.datanode.data.dir

hadoop@Ubuntu1604:/usr/local/hadoop$
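The `grep` example counts every match of the regular expression `dfs[a-z.]+` in the input files. It writes each match with its count, most frequent first. For small inputs, roughly the same result can be reproduced locally with standard text tools, which is a handy way to check the job's output. In this sketch `local_grep_count` is a hypothetical name. It assumes a `grep` that supports `-o` and `-h` (GNU and BusyBox do), and the order among equal counts may differ from Hadoop's. It only illustrates the result, not how Hadoop executes the job.

```shell
# Hypothetical helper: local approximation of the grep example's output.
# $1: extended regular expression, remaining arguments: input files

local_grep_count() {
    pattern="$1"
    shift
    # -o: print each match on its own line, -h: omit file names.
    # Count identical matches, sort by count (descending), print "count<TAB>match".
    grep -ohE "$pattern" "$@" | sort | uniq -c | sort -k1,1nr -k2,2 | awk '{ print $1 "\t" $2 }'
}

# Usage (from /usr/local/hadoop):
#   local_grep_count 'dfs[a-z.]+' etc/hadoop/*.xml
```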

hadoop@Ubuntu1604:/usr/local/hadoop$ ls -l ./output

ls: cannot access './output': No such file or directory

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -get output ./output

hadoop@Ubuntu1604:/usr/local/hadoop$ cat ./output/*

1 dfsadmin

1 dfs.replication

1 dfs.namenode.name.dir

1 dfs.datanode.data.dir

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -rm -r output

Deleted output

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -ls

Found 1 items

drwxr-xr-x - hadoop supergroup 0 2017-04-29 07:42 input

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.8.0.jar grep input output 'dfs[a-z.]+'

17/04/29 07:48:40 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

......

......

......

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -ls

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2017-04-29 07:42 input

drwxr-xr-x - hadoop supergroup 0 2017-04-29 07:48 output

hadoop@Ubuntu1604:/usr/local/hadoop$ ./bin/hdfs dfs -cat output/*

1 dfsadmin

1 dfs.replication

1 dfs.namenode.name.dir

1 dfs.datanode.data.dir

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ ./sbin/stop-dfs.sh

Stopping namenodes on [localhost]

localhost: stopping namenode

localhost: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ jps

3807 Jps

hadoop@Ubuntu1604:/usr/local/hadoop$

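Note: when Hadoop runs a job, the output directory must not already exist, otherwise the job fails with an error. Before running the job again, delete the output directory: ./bin/hdfs dfs -rm -r output.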
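To make re-runs safe, you can remove the output directory only when it exists. This sketch uses `hdfs dfs -test -d`, which exits with status 0 when the path is an existing directory. The helper name and the `HDFS_CMD` override (useful for testing) are hypothetical.

```shell
# Hypothetical helper: delete an HDFS output directory only if it exists.
# $1: HDFS path (default: output); HDFS_CMD defaults to ./bin/hdfs,
# assuming the current directory is /usr/local/hadoop as above.

remove_output_if_exists() {
    dir="${1:-output}"
    hdfs_cmd="${HDFS_CMD:-./bin/hdfs}"
    if $hdfs_cmd dfs -test -d "$dir"; then
        $hdfs_cmd dfs -rm -r "$dir"
    else
        echo "$dir does not exist, nothing to remove"
    fi
}

# Usage:
#   remove_output_if_exists output &&
#     ./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.8.0.jar grep input output 'dfs[a-z.]+'
```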

YARN

Edit the configuration files mapred-site.xml and yarn-site.xml

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$ pwd

/usr/local/hadoop/etc/hadoop

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$ mv mapred-site.xml.template mapred-site.xml

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$ vim mapred-site.xml

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$ cat mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$ vim yarn-site.xml

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$ cat yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

hadoop@Ubuntu1604:/usr/local/hadoop/etc/hadoop$
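As with the HDFS files, you can write the two YARN-related files non-interactively. This sketch reproduces only the properties shown above and omits the license header. The function name is hypothetical. It overwrites existing files, so the template rename is not needed when it is used.

```shell
# Hypothetical helper: write mapred-site.xml and yarn-site.xml as shown above.
# $1: configuration directory (default: /usr/local/hadoop/etc/hadoop)

write_yarn_conf() {
    conf_dir="${1:-/usr/local/hadoop/etc/hadoop}"

    # Run MapReduce jobs on YARN.
    cat > "$conf_dir/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF

    # Enable the shuffle auxiliary service on the NodeManager.
    cat > "$conf_dir/yarn-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
EOF
}
```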

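If you do not want to start YARN, be sure to rename mapred-site.xml back to its original name, mapred-site.xml.template. Otherwise, MapReduce jobs will try to run on a YARN ResourceManager that is not running, and programs are likely to fail.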
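The rename described above can be wrapped in a pair of hypothetical helpers, so that switching MapReduce between YARN and the local job runner takes one command. Note that `disable_yarn_mapreduce` overwrites any existing `mapred-site.xml.template` with the edited file, exactly as the manual rename would.

```shell
# Hypothetical helpers: turn YARN-based MapReduce off or on by renaming mapred-site.xml.
# $1: configuration directory (default: /usr/local/hadoop/etc/hadoop)

disable_yarn_mapreduce() {
    conf_dir="${1:-/usr/local/hadoop/etc/hadoop}"
    if [ -f "$conf_dir/mapred-site.xml" ]; then
        mv "$conf_dir/mapred-site.xml" "$conf_dir/mapred-site.xml.template"
    fi
}

enable_yarn_mapreduce() {
    conf_dir="${1:-/usr/local/hadoop/etc/hadoop}"
    if [ -f "$conf_dir/mapred-site.xml.template" ]; then
        mv "$conf_dir/mapred-site.xml.template" "$conf_dir/mapred-site.xml"
    fi
}
```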

Start YARN

hadoop@Ubuntu1604:/usr/local/hadoop$ pwd

/usr/local/hadoop

hadoop@Ubuntu1604:/usr/local/hadoop$ jps

5774 Jps

hadoop@Ubuntu1604:/usr/local/hadoop$ ./sbin/start-dfs.sh

Starting namenodes on [localhost]

localhost: starting namenode, logging to /usr/local/hadoop/logs/hadoop-hadoop-namenode-Ubuntu1604.out

localhost: starting datanode, logging to /usr/local/hadoop/logs/hadoop-hadoop-datanode-Ubuntu1604.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/hadoop/logs/hadoop-hadoop-secondarynamenode-Ubuntu1604.out

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ jps

6034 DataNode

6373 Jps

5915 NameNode

6221 SecondaryNameNode

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ ./sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop/logs/yarn-hadoop-resourcemanager-Ubuntu1604.out

localhost: starting nodemanager, logging to /usr/local/hadoop/logs/yarn-hadoop-nodemanager-Ubuntu1604.out

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ jps

6034 DataNode

6644 Jps

6422 ResourceManager

6536 NodeManager

5915 NameNode

6221 SecondaryNameNode

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ ./sbin/mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /usr/local/hadoop/logs/mapred-hadoop-historyserver-Ubuntu1604.out

hadoop@Ubuntu1604:/usr/local/hadoop$

hadoop@Ubuntu1604:/usr/local/hadoop$ jps

6816 JobHistoryServer

6034 DataNode

6917 Jps

6422 ResourceManager

6536 NodeManager

5915 NameNode

6221 SecondaryNameNode

hadoop@Ubuntu1604:/usr/local/hadoop$

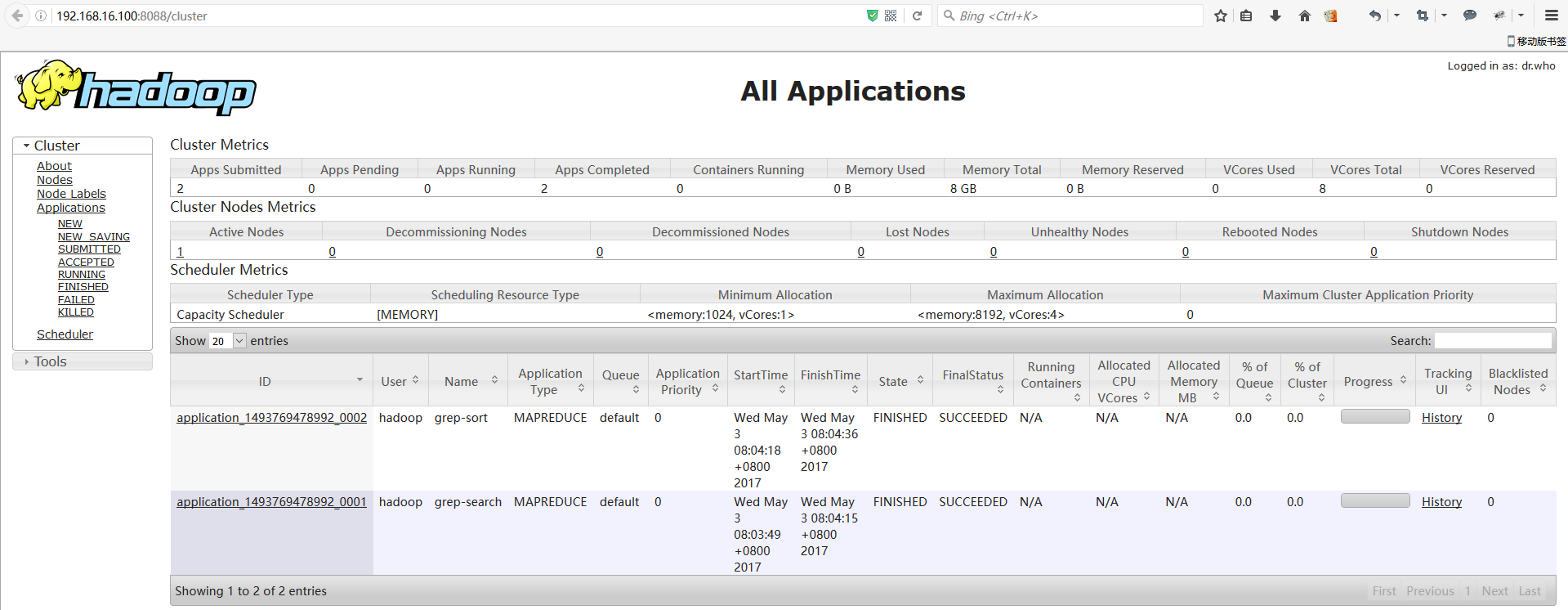

Access the web UI

With YARN running, you can check the status of jobs in the web UI: http://192.168.16.100:8088/cluster

Stop YARN and Hadoop

hadoop@Ubuntu1604:/usr/local/hadoop$ ./sbin/mr-jobhistory-daemon.sh stop historyserver

hadoop@Ubuntu1604:/usr/local/hadoop$ ./sbin/stop-yarn.sh

hadoop@Ubuntu1604:/usr/local/hadoop$ ./sbin/stop-dfs.sh
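The start and stop sequences in this article can be collected into two functions. Daemons start in the order HDFS, YARN, JobHistory server and stop in the reverse order. The function names are hypothetical, and `HADOOP_HOME` is assumed to be /usr/local/hadoop when it is unset.

```shell
# Hypothetical helpers: start all daemons in the order used above, stop in reverse.

hadoop_start_all() {
    h="${HADOOP_HOME:-/usr/local/hadoop}"
    # Each step runs only if the previous one succeeded.
    "$h/sbin/start-dfs.sh" &&
    "$h/sbin/start-yarn.sh" &&
    "$h/sbin/mr-jobhistory-daemon.sh" start historyserver
}

hadoop_stop_all() {
    h="${HADOOP_HOME:-/usr/local/hadoop}"
    # Stop in reverse order; later steps still run if an earlier one fails.
    "$h/sbin/mr-jobhistory-daemon.sh" stop historyserver
    "$h/sbin/stop-yarn.sh"
    "$h/sbin/stop-dfs.sh"
}
```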
