sqoop导入数据

来源https://www.cnblogs.com/qingyunzong/p/8807252.html

一、概述

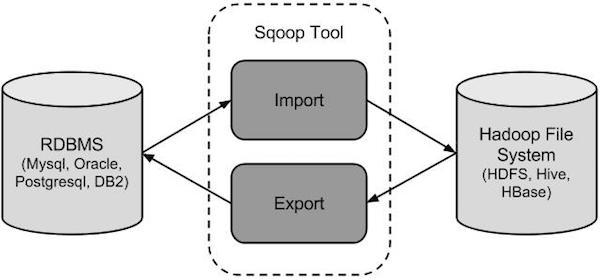

sqoop 是 apache 旗下一款“Hadoop 和关系数据库服务器之间传送数据”的工具。

核心的功能有两个:

导入、迁入

导出、迁出

导入数据:MySQL,Oracle 导入数据到 Hadoop 的 HDFS、HIVE、HBASE 等数据存储系统

导出数据:从 Hadoop 的文件系统中导出数据到关系数据库 mysql 等 Sqoop 的本质还是一个命令行工具,和 HDFS,Hive 相比,并没有什么高深的理论。

sqoop:

工具:本质就是迁移数据, 迁移的方式:就是把sqoop的迁移命令转换成MR程序

hive

工具:本质就是执行计算,依赖于HDFS存储数据,把SQL转换成MR程序

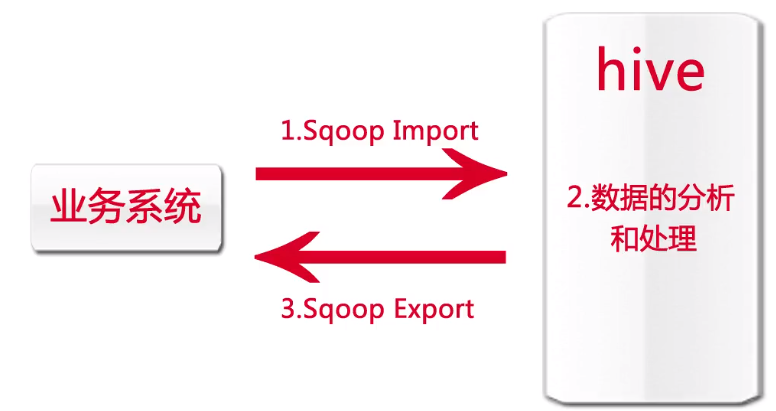

生产环境中sqoop的使用

二、工作机制

将导入或导出命令翻译成 MapReduce 程序来实现 在翻译出的 MapReduce 中主要是对 InputFormat 和 OutputFormat 进行定制

三、安装

1、前提概述

将来sqoop在使用的时候有可能会跟那些系统或者组件打交道?

HDFS, MapReduce, YARN, ZooKeeper, Hive, HBase, MySQL

sqoop就是一个工具, 只需要在一个节点上进行安装即可。

补充一点: 如果你的sqoop工具将来要进行hive或者hbase等等的系统和MySQL之间的交互

你安装的SQOOP软件的节点一定要包含以上你要使用的集群或者软件系统的安装包

补充一点: 将来要使用的azakban这个软件 除了会调度 hadoop的任务或者hbase或者hive的任务之外, 还会调度sqoop的任务

azkaban这个软件的安装节点也必须包含以上这些软件系统的客户端/2、

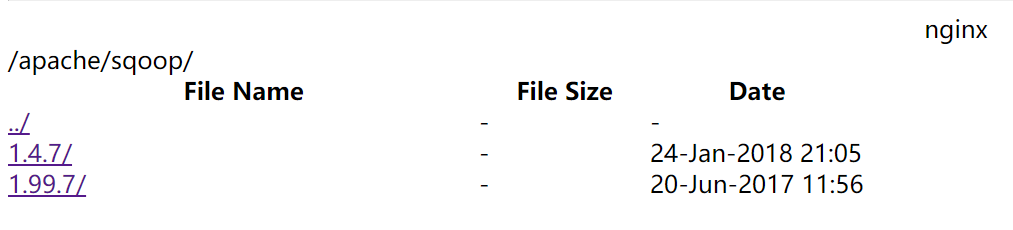

2、软件下载

下载地址http://mirrors.hust.edu.cn/apache/

sqoop版本说明

绝大部分企业所使用的sqoop的版本都是 sqoop1

sqoop-1.4.6 或者 sqoop-1.4.7 它是 sqoop1

sqoop-1.99.4----都是 sqoop2

此处使用sqoop-1.4.6版本sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

(1)上传解压缩安装包到指定目录

因为之前hive只是安装在hadoop3机器上,所以sqoop也同样安装在hadoop3机器上

[hadoop@hadoop3 ~]$ tar -zxvf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz -C apps/

2)进入到 conf 文件夹,找到 sqoop-env-template.sh,修改其名称为 sqoop-env.sh

[hadoop@hadoop3 ~]$ cd apps/

[hadoop@hadoop3 apps]$ ls

apache-hive-2.3.-bin hadoop-2.7. hbase-1.2. sqoop-1.4..bin__hadoop-2.0.-alpha zookeeper-3.4.

[hadoop@hadoop3 apps]$ mv sqoop-1.4..bin__hadoop-2.0.-alpha/ sqoop-1.4.

[hadoop@hadoop3 apps]$ cd sqoop-1.4./conf/

[hadoop@hadoop3 conf]$ ls

oraoop-site-template.xml sqoop-env-template.sh sqoop-site.xml

sqoop-env-template.cmd sqoop-site-template.xml

[hadoop@hadoop3 conf]$ mv sqoop-env-template.sh sqoop-env.sh

(3)修改 sqoop-env.sh

hadoop@hadoop3 conf]$ vi sqoop-env.sh

export HADOOP_COMMON_HOME=/home/hadoop/apps/hadoop-2.7. #Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/home/hadoop/apps/hadoop-2.7. #set the path to where bin/hbase is available

export HBASE_HOME=/home/hadoop/apps/hbase-1.2. #Set the path to where bin/hive is available

export HIVE_HOME=/home/hadoop/apps/apache-hive-2.3.-bin #Set the path for where zookeper config dir is

export ZOOCFGDIR=/home/hadoop/apps/zookeeper-3.4./conf

为什么在sqoop-env.sh 文件中会要求分别进行 common和mapreduce的配置呢???

在apache的hadoop的安装中;四大组件都是安装在同一个hadoop_home中的 但是在CDH, HDP中, 这些组件都是可选的。 在安装hadoop的时候,可以选择性的只安装HDFS或者YARN, CDH,HDP在安装hadoop的时候,会把HDFS和MapReduce有可能分别安装在不同的地方。

4)加入 mysql 驱动包到 sqoop1.4.6/lib 目录下

hadoop@hadoop3 ~]$ cp mysql-connector-java-5.1.40-bin.jar apps/sqoop-1.4.6/lib/

(5)配置系统环境变量

[hadoop@hadoop3 ~]$ vi .bashrc

#Sqoop

export SQOOP_HOME=/home/hadoop/apps/sqoop-1.4.

export PATH=$PATH:$SQOOP_HOME/bin

保存退出使其立即生效

[hadoop@hadoop3 ~]$ source .bashrc

(6)验证安装是否成功

sqoop-version 或者 sqoop version

Sqoop的基本命令

基本操作

首先,我们可以使用 sqoop help 来查看,sqoop 支持哪些命令

[hadoop@hadoop3 ~]$ sqoop help

Warning: /home/hadoop/apps/sqoop-1.4./../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop-1.4./../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

usage: sqoop COMMAND [ARGS] Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information See 'sqoop help COMMAND' for information on a specific command.

[hadoop@hadoop3 ~]$

然后得到这些支持了的命令之后,如果不知道使用方式,可以使用 sqoop command 的方式 来查看某条具体命令的使用方式,比如:

[hadoop@hadoop3 ~]$ sqoop help import

Warning: /home/hadoop/apps/sqoop-1.4./../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop-1.4./../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

usage: sqoop import [GENERIC-ARGS] [TOOL-ARGS] Common arguments:

--connect <jdbc-uri> Specify JDBC connect

string

--connection-manager <class-name> Specify connection manager

class name

--connection-param-file <properties-file> Specify connection

parameters file

--driver <class-name> Manually specify JDBC

driver class to use

--hadoop-home <hdir> Override

$HADOOP_MAPRED_HOME_ARG

--hadoop-mapred-home <dir> Override

$HADOOP_MAPRED_HOME_ARG

--help Print usage instructions

-P Read password from console

--password <password> Set authentication

password

--password-alias <password-alias> Credential provider

password alias

--password-file <password-file> Set authentication

password file path

--relaxed-isolation Use read-uncommitted

isolation for imports

--skip-dist-cache Skip copying jars to

distributed cache

--username <username> Set authentication

username

--verbose Print more information

while working Import control arguments:

--append Imports data

in append

mode

--as-avrodatafile Imports data

to Avro data

files

--as-parquetfile Imports data

to Parquet

files

--as-sequencefile Imports data

to

SequenceFile

s

--as-textfile Imports data

as plain

text

(default)

--autoreset-to-one-mapper Reset the

number of

mappers to

one mapper

if no split

key

available

--boundary-query <statement> Set boundary

query for

retrieving

max and min

value of the

primary key

--columns <col,col,col...> Columns to

import from

table

--compression-codec <codec> Compression

codec to use

for import

--delete-target-dir Imports data

in delete

mode

--direct Use direct

import fast

path

--direct-split-size <n> Split the

input stream

every 'n'

bytes when

importing in

direct mode

-e,--query <statement> Import

results of

SQL

'statement'

--fetch-size <n> Set number

'n' of rows

to fetch

from the

database

when more

rows are

needed

--inline-lob-limit <n> Set the

maximum size

for an

inline LOB

-m,--num-mappers <n> Use 'n' map

tasks to

import in

parallel

--mapreduce-job-name <name> Set name for

generated

mapreduce

job

--merge-key <column> Key column

to use to

join results

--split-by <column-name> Column of

the table

used to

split work

units

--table <table-name> Table to

read

--target-dir <dir> HDFS plain

table

destination

--validate Validate the

copy using

the

configured

validator

--validation-failurehandler <validation-failurehandler> Fully

qualified

class name

for

ValidationFa

ilureHandler

--validation-threshold <validation-threshold> Fully

qualified

class name

for

ValidationTh

reshold

--validator <validator> Fully

qualified

class name

for the

Validator

--warehouse-dir <dir> HDFS parent

for table

destination

--where <where clause> WHERE clause

to use

during

import

-z,--compress Enable

compression Incremental import arguments:

--check-column <column> Source column to check for incremental

change

--incremental <import-type> Define an incremental import of type

'append' or 'lastmodified'

--last-value <value> Last imported value in the incremental

check column Output line formatting arguments:

--enclosed-by <char> Sets a required field enclosing

character

--escaped-by <char> Sets the escape character

--fields-terminated-by <char> Sets the field separator character

--lines-terminated-by <char> Sets the end-of-line character

--mysql-delimiters Uses MySQL's default delimiter set:

fields: , lines: \n escaped-by: \

optionally-enclosed-by: '

--optionally-enclosed-by <char> Sets a field enclosing character Input parsing arguments:

--input-enclosed-by <char> Sets a required field encloser

--input-escaped-by <char> Sets the input escape

character

--input-fields-terminated-by <char> Sets the input field separator

--input-lines-terminated-by <char> Sets the input end-of-line

char

--input-optionally-enclosed-by <char> Sets a field enclosing

character Hive arguments:

--create-hive-table Fail if the target hive

table exists

--hive-database <database-name> Sets the database name to

use when importing to hive

--hive-delims-replacement <arg> Replace Hive record \0x01

and row delimiters (\n\r)

from imported string fields

with user-defined string

--hive-drop-import-delims Drop Hive record \0x01 and

row delimiters (\n\r) from

imported string fields

--hive-home <dir> Override $HIVE_HOME

--hive-import Import tables into Hive

(Uses Hive's default

delimiters if none are

set.)

--hive-overwrite Overwrite existing data in

the Hive table

--hive-partition-key <partition-key> Sets the partition key to

use when importing to hive

--hive-partition-value <partition-value> Sets the partition value to

use when importing to hive

--hive-table <table-name> Sets the table name to use

when importing to hive

--map-column-hive <arg> Override mapping for

specific column to hive

types. HBase arguments:

--column-family <family> Sets the target column family for the

import

--hbase-bulkload Enables HBase bulk loading

--hbase-create-table If specified, create missing HBase tables

--hbase-row-key <col> Specifies which input column to use as the

row key

--hbase-table <table> Import to <table> in HBase HCatalog arguments:

--hcatalog-database <arg> HCatalog database name

--hcatalog-home <hdir> Override $HCAT_HOME

--hcatalog-partition-keys <partition-key> Sets the partition

keys to use when

importing to hive

--hcatalog-partition-values <partition-value> Sets the partition

values to use when

importing to hive

--hcatalog-table <arg> HCatalog table name

--hive-home <dir> Override $HIVE_HOME

--hive-partition-key <partition-key> Sets the partition key

to use when importing

to hive

--hive-partition-value <partition-value> Sets the partition

value to use when

importing to hive

--map-column-hive <arg> Override mapping for

specific column to

hive types. HCatalog import specific options:

--create-hcatalog-table Create HCatalog before import

--hcatalog-storage-stanza <arg> HCatalog storage stanza for table

creation Accumulo arguments:

--accumulo-batch-size <size> Batch size in bytes

--accumulo-column-family <family> Sets the target column family for

the import

--accumulo-create-table If specified, create missing

Accumulo tables

--accumulo-instance <instance> Accumulo instance name.

--accumulo-max-latency <latency> Max write latency in milliseconds

--accumulo-password <password> Accumulo password.

--accumulo-row-key <col> Specifies which input column to

use as the row key

--accumulo-table <table> Import to <table> in Accumulo

--accumulo-user <user> Accumulo user name.

--accumulo-visibility <vis> Visibility token to be applied to

all rows imported

--accumulo-zookeepers <zookeepers> Comma-separated list of

zookeepers (host:port) Code generation arguments:

--bindir <dir> Output directory for compiled

objects

--class-name <name> Sets the generated class name.

This overrides --package-name.

When combined with --jar-file,

sets the input class.

--input-null-non-string <null-str> Input null non-string

representation

--input-null-string <null-str> Input null string representation

--jar-file <file> Disable code generation; use

specified jar

--map-column-java <arg> Override mapping for specific

columns to java types

--null-non-string <null-str> Null non-string representation

--null-string <null-str> Null string representation

--outdir <dir> Output directory for generated

code

--package-name <name> Put auto-generated classes in

this package Generic Hadoop command-line arguments:

(must preceed any tool-specific arguments)

Generic options supported are

-conf <configuration file> specify an application configuration file

-D <property=value> use value for given property

-fs <local|namenode:port> specify a namenode

-jt <local|resourcemanager:port> specify a ResourceManager

-files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster

-libjars <comma separated list of jars> specify comma separated jar files to include in the classpath.

-archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines. The general command line syntax is

bin/hadoop command [genericOptions] [commandOptions] At minimum, you must specify --connect and --table

Arguments to mysqldump and other subprograms may be supplied

after a '--' on the command line.

[hadoop@hadoop3 ~]$

示例

列出MySQL数据有哪些数据库

[hadoop@hadoop3 ~]$ sqoop list-databases \

> --connect jdbc:mysql://hadoop1:3306/ \

> --username root \

> --password root

Warning: /home/hadoop/apps/sqoop-1.4./../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop-1.4./../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

// :: WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

// :: INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

hivedb

mysql

performance_schema

test

[hadoop@hadoop3 ~]$

列出MySQL中的某个数据库有哪些数据表:

[hadoop@hadoop3 ~]$ sqoop list-tables \

> --connect jdbc:mysql://hadoop1:3306/mysql \

> --username root \

> --password root

Warning: /home/hadoop/apps/sqoop-1.4.6/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop-1.4.6/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/12 13:46:21 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/04/12 13:46:21 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/12 13:46:21 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

columns_priv

db

event

func

general_log

help_category

help_keyword

help_relation

help_topic

innodb_index_stats

innodb_table_stats

ndb_binlog_index

plugin

proc

procs_priv

proxies_priv

servers

slave_master_info

slave_relay_log_info

slave_worker_info

slow_log

tables_priv

time_zone

time_zone_leap_second

time_zone_name

time_zone_transition

time_zone_transition_type

user

[hadoop@hadoop3 ~]$

创建一张跟mysql中的help_keyword表一样的hive表hk:

[hadoop@hadoop3 ~]$ sqoop create-hive-table \

> --connect jdbc:mysql://hadoop1:3306/mysql \

> --username root \

> --password root \

> --table help_keyword \

> --hive-table hk

Warning: /home/hadoop/apps/sqoop-1.4./../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop-1.4./../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

// :: WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

// :: INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override

// :: INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.

// :: INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `help_keyword` AS t LIMIT

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `help_keyword` AS t LIMIT

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/apps/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/apps/hbase-1.2./lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

// :: INFO hive.HiveImport: Loading uploaded data into Hive

// :: INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings.

// :: INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/home/hadoop/apps/apache-hive-2.3.-bin/lib/log4j-slf4j-impl-2.6..jar!/org/slf4j/impl/StaticLoggerBinder.class]

// :: INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/home/hadoop/apps/hbase-1.2./lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

// :: INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/home/hadoop/apps/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

// :: INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

// :: INFO hive.HiveImport: SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

// :: INFO hive.HiveImport:

// :: INFO hive.HiveImport: Logging initialized using configuration in jar:file:/home/hadoop/apps/apache-hive-2.3.-bin/lib/hive-common-2.3..jar!/hive-log4j2.properties Async: true

// :: INFO hive.HiveImport: OK

// :: INFO hive.HiveImport: Time taken: 11.651 seconds

// :: INFO hive.HiveImport: Hive import complete.

[hadoop@hadoop3 ~]$

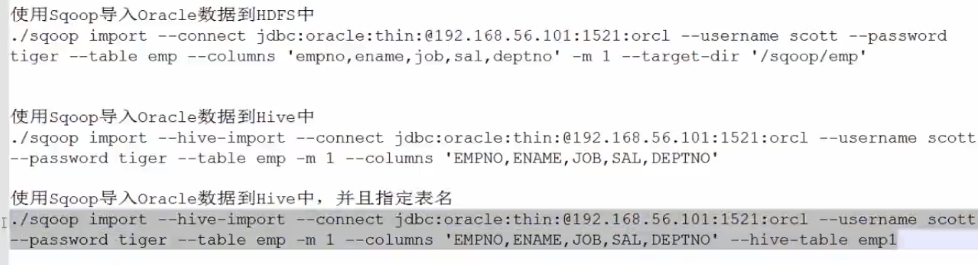

Sqoop的数据导入

、从RDBMS导入到HDFS中

、把MySQL数据库中的表数据导入到Hive中

3、把MySQL数据库中的表数据导入到hbase

“导入工具”导入单个表从 RDBMS 到 HDFS。表中的每一行被视为 HDFS 的记录。所有记录 都存储为文本文件的文本数据(或者 Avro、sequence 文件等二进制数据)

https://www.jianshu.com/p/be33f4b5c62e 详细说明和参数设置

Sqoop并行化是启多个map task实现的,-m(或--num-mappers)参数指定map task数,默认是四个。并行度不是设置的越大越好,map task的启动和销毁都会消耗资源,

而且过多的数据库连接对数据库本身也会造成压力。在并行操作里,首先要解决输入数据是以什么方式负债均衡到多个map的,即怎么保证每个map处理的数据量大致相同且数据不重复。

--split-by指定了split column,在执行并行操作时(多个map task),Sqoop需要知道以什么列split数据,其思想是: 1、先查出split column的最小值和最大值 2、然后根据map task数对(max-min)之间的数据进行均匀的范围切分 例如id作为split column,其最小值是0、最大值1000,如果设置4个map数,每个map task执行的查询语句类似于:

SELECT * FROM sometable WHERE id >= lo AND id < hi

每个task里(lo,hi)的值分别是 (0, 250), (250, 500), (500, 750), and (750, 1001)。

1、从RDBMS导入到HDFS中

语法格式

sqoop import (generic-args) (import-args)

常用参数

--connect <jdbc-uri> jdbc 连接地址

--connection-manager <class-name> 连接管理者

--driver <class-name> 驱动类

--hadoop-mapred-home <dir> $HADOOP_MAPRED_HOME

--help help 信息

-P 从命令行输入密码

--password <password> 密码

--username <username> 账号

--verbose 打印流程信息

--connection-param-file <filename> 可选参数

示例

普通导入:导入mysql库中的help_keyword的数据到HDFS上

导入的默认路径:/user/hadoop/help_keyword

sqoop import \

--connect jdbc:mysql://hadoop1:3306/mysql \

--username root \

--password root \

--table help_keyword \

-m

[hadoop@hadoop3 ~]$ sqoop import \

> --connect jdbc:mysql://hadoop1:3306/mysql \

> --username root \

> --password root \

> --table help_keyword \

> -m 1

Warning: /home/hadoop/apps/sqoop-1.4.6/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop-1.4.6/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/12 13:53:48 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/04/12 13:53:48 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/12 13:53:48 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/04/12 13:53:48 INFO tool.CodeGenTool: Beginning code generation

18/04/12 13:53:49 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `help_keyword` AS t LIMIT 1

18/04/12 13:53:49 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `help_keyword` AS t LIMIT 1

18/04/12 13:53:49 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/apps/hadoop-2.7.5

注: /tmp/sqoop-hadoop/compile/979d87b9521d0a09ee6620060a112d60/help_keyword.java使用或覆盖了已过时的 API。

注: 有关详细信息, 请使用 -Xlint:deprecation 重新编译。

18/04/12 13:53:51 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/979d87b9521d0a09ee6620060a112d60/help_keyword.jar

18/04/12 13:53:51 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/04/12 13:53:51 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/04/12 13:53:51 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/04/12 13:53:51 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/04/12 13:53:51 INFO mapreduce.ImportJobBase: Beginning import of help_keyword

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/apps/hadoop-2.7.5/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/apps/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

18/04/12 13:53:52 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/04/12 13:53:53 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/04/12 13:53:58 INFO db.DBInputFormat: Using read commited transaction isolation

18/04/12 13:53:58 INFO mapreduce.JobSubmitter: number of splits:1

18/04/12 13:53:59 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1523510178850_0001

18/04/12 13:54:00 INFO impl.YarnClientImpl: Submitted application application_1523510178850_0001

18/04/12 13:54:00 INFO mapreduce.Job: The url to track the job: http://hadoop3:8088/proxy/application_1523510178850_0001/

18/04/12 13:54:00 INFO mapreduce.Job: Running job: job_1523510178850_0001

18/04/12 13:54:17 INFO mapreduce.Job: Job job_1523510178850_0001 running in uber mode : false

18/04/12 13:54:17 INFO mapreduce.Job: map 0% reduce 0%

18/04/12 13:54:33 INFO mapreduce.Job: map 100% reduce 0%

18/04/12 13:54:34 INFO mapreduce.Job: Job job_1523510178850_0001 completed successfully

18/04/12 13:54:35 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=142965

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=8264

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=12142

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=12142

Total vcore-milliseconds taken by all map tasks=12142

Total megabyte-milliseconds taken by all map tasks=12433408

Map-Reduce Framework

Map input records=619

Map output records=619

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=123

CPU time spent (ms)=1310

Physical memory (bytes) snapshot=93212672

Virtual memory (bytes) snapshot=2068234240

Total committed heap usage (bytes)=17567744

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=8264

18/04/12 13:54:35 INFO mapreduce.ImportJobBase: Transferred 8.0703 KB in 41.8111 seconds (197.6507 bytes/sec)

18/04/12 13:54:35 INFO mapreduce.ImportJobBase: Retrieved 619 records.

[hadoop@hadoop3 ~]$

查看导入的文件

[hadoop@hadoop4 ~]$ hadoop fs -cat /user/hadoop/help_keyword/part-m-

导入: 指定分隔符和导入路径

sqoop import \

--connect jdbc:mysql://hadoop1:3306/mysql \

--username root \

--password root \

--table help_keyword \

--target-dir /user/hadoop11/my_help_keyword1 \

--fields-terminated-by '\t' \

-m

导入数据:带where条件

sqoop import \

--connect jdbc:mysql://hadoop1:3306/mysql \

--username root \

--password root \

--where "name='STRING' " \

--table help_keyword \

--target-dir /sqoop/hadoop11/myoutport1 \

-m

查询指定列

sqoop import \

--connect jdbc:mysql://hadoop1:3306/mysql \

--username root \

--password root \

--columns "name" \

--where "name='STRING' " \

--table help_keyword \

--target-dir /sqoop/hadoop11/myoutport22 \

-m

selct name from help_keyword where name = "string"

导入:指定自定义查询SQL

sqoop import \

--connect jdbc:mysql://hadoop1:3306/ \

--username root \

--password root \

--target-dir /user/hadoop/myimport33_1 \

--query 'select help_keyword_id,name from mysql.help_keyword where $CONDITIONS and name = "STRING"' \

--split-by help_keyword_id \

--fields-terminated-by '\t' \

-m

在以上需要按照自定义SQL语句导出数据到HDFS的情况下:

1、引号问题,要么外层使用单引号,内层使用双引号,$CONDITIONS的$符号不用转义, 要么外层使用双引号,那么内层使用单引号,然后$CONDITIONS的$符号需要转义

2、自定义的SQL语句中必须带有WHERE \$CONDITIONS

2、把MySQL数据库中的表数据导入到Hive中

Sqoop 导入关系型数据到 hive 的过程是先导入到 hdfs,然后再 load 进入 hive

普通导入:数据存储在默认的default hive库中,表名就是对应的mysql的表名:

sqoop import \

--connect jdbc:mysql://hadoop1:3306/mysql \

--username root \

--password root \

--table help_keyword \

--hive-import \

-m

导入过程

第一步:导入mysql.help_keyword的数据到hdfs的默认路径

第二步:自动仿造mysql.help_keyword去创建一张hive表, 创建在默认的default库中

第三步:把临时目录中的数据导入到hive表中

查看数据

[hadoop@hadoop3 ~]$ hadoop fs -cat /user/hive/warehouse/help_keyword/part-m-

指定行分隔符和列分隔符,指定hive-import,指定覆盖导入,指定自动创建hive表,指定表名,指定删除中间结果数据目录

手动创建mydb_test数据块

create database mydb_test;

sqoop import \

--connect jdbc:mysql://hadoop1:3306/mysql \

--username root \

--password root \

--table help_keyword \

--fields-terminated-by "\t" \

--lines-terminated-by "\n" \

--hive-import \

--hive-overwrite \

--create-hive-table \

--delete-target-dir \

--hive-database mydb_test \

--hive-table new_help_keyword

查询验证

select * from new_help_keyword limit 10; 上面的导入语句等价于

sqoop import \

--connect jdbc:mysql://hadoop1:3306/mysql \

--username root \

--password root \

--table help_keyword \

--fields-terminated-by "\t" \

--lines-terminated-by "\n" \

--hive-import \

--hive-overwrite \

--create-hive-table \

--hive-table mydb_test.new_help_keyword \

--delete-target-dir

增量导入

执行增量导入之前,先清空hive数据库中的help_keyword表中的数据

truncate table help_keyword;

sqoop import \

--connect jdbc:mysql://hadoop1:3306/mysql \

--username root \

--password root \

--table help_keyword \

--target-dir /user/hadoop/myimport_add \

--incremental append \

--check-column help_keyword_id \

--last-value \

-m

语句执行成功

[hadoop@hadoop3 ~]$ sqoop import \

> --connect jdbc:mysql://hadoop1:3306/mysql \

> --username root \

> --password root \

> --table help_keyword \

> --target-dir /user/hadoop/myimport_add \

> --incremental append \

> --check-column help_keyword_id \

> --last-value 500 \

> -m 1

Warning: /home/hadoop/apps/sqoop-1.4.6/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop-1.4.6/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/12 22:01:07 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/04/12 22:01:08 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/12 22:01:08 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/04/12 22:01:08 INFO tool.CodeGenTool: Beginning code generation

18/04/12 22:01:08 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `help_keyword` AS t LIMIT 1

18/04/12 22:01:08 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `help_keyword` AS t LIMIT 1

18/04/12 22:01:08 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/apps/hadoop-2.7.5

注: /tmp/sqoop-hadoop/compile/a51619d1ef8c6e4b112a209326ed9e0f/help_keyword.java使用或覆盖了已过时的 API。

注: 有关详细信息, 请使用 -Xlint:deprecation 重新编译。

18/04/12 22:01:11 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/a51619d1ef8c6e4b112a209326ed9e0f/help_keyword.jar

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/apps/hadoop-2.7.5/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/apps/hbase-1.2.6/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

18/04/12 22:01:12 INFO tool.ImportTool: Maximal id query for free form incremental import: SELECT MAX(`help_keyword_id`) FROM `help_keyword`

18/04/12 22:01:12 INFO tool.ImportTool: Incremental import based on column `help_keyword_id`

18/04/12 22:01:12 INFO tool.ImportTool: Lower bound value: 500

18/04/12 22:01:12 INFO tool.ImportTool: Upper bound value: 618

18/04/12 22:01:12 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/04/12 22:01:12 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/04/12 22:01:12 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/04/12 22:01:12 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/04/12 22:01:12 INFO mapreduce.ImportJobBase: Beginning import of help_keyword

18/04/12 22:01:12 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/04/12 22:01:12 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/04/12 22:01:17 INFO db.DBInputFormat: Using read commited transaction isolation

18/04/12 22:01:17 INFO mapreduce.JobSubmitter: number of splits:1

18/04/12 22:01:17 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1523510178850_0010

18/04/12 22:01:19 INFO impl.YarnClientImpl: Submitted application application_1523510178850_0010

18/04/12 22:01:19 INFO mapreduce.Job: The url to track the job: http://hadoop3:8088/proxy/application_1523510178850_0010/

18/04/12 22:01:19 INFO mapreduce.Job: Running job: job_1523510178850_0010

18/04/12 22:01:30 INFO mapreduce.Job: Job job_1523510178850_0010 running in uber mode : false

18/04/12 22:01:30 INFO mapreduce.Job: map 0% reduce 0%

18/04/12 22:01:40 INFO mapreduce.Job: map 100% reduce 0%

18/04/12 22:01:40 INFO mapreduce.Job: Job job_1523510178850_0010 completed successfully

18/04/12 22:01:41 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=143200

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=1576

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=7188

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=7188

Total vcore-milliseconds taken by all map tasks=7188

Total megabyte-milliseconds taken by all map tasks=7360512

Map-Reduce Framework

Map input records=118

Map output records=118

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=86

CPU time spent (ms)=870

Physical memory (bytes) snapshot=95576064

Virtual memory (bytes) snapshot=2068234240

Total committed heap usage (bytes)=18608128

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=1576

18/04/12 22:01:41 INFO mapreduce.ImportJobBase: Transferred 1.5391 KB in 28.3008 seconds (55.6875 bytes/sec)

18/04/12 22:01:41 INFO mapreduce.ImportJobBase: Retrieved 118 records.

18/04/12 22:01:41 INFO util.AppendUtils: Creating missing output directory - myimport_add

18/04/12 22:01:41 INFO tool.ImportTool: Incremental import complete! To run another incremental import of all data following this import, supply the following arguments:

18/04/12 22:01:41 INFO tool.ImportTool: --incremental append

18/04/12 22:01:41 INFO tool.ImportTool: --check-column help_keyword_id

18/04/12 22:01:41 INFO tool.ImportTool: --last-value 618

18/04/12 22:01:41 INFO tool.ImportTool: (Consider saving this with 'sqoop job --create')

[hadoop@hadoop3 ~]$

3、把MySQL数据库中的表数据导入到hbase

普通导入:先创建Hbase里面的表,再执行导入的语句

hbase(main):001:0> create 'new_help_keyword', 'base_info'

sqoop import \

--connect jdbc:mysql://hadoop1:3306/mysql \

--username root \

--password root \

--table help_keyword \

--hbase-table new_help_keyword \

--column-family person \

--hbase-row-key help_keyword_id

实验案例:

sqoop导入数据的更多相关文章

- Sqoop导入数据到mysql数据库报错:ERROR tool.ExportTool: Error during export: Export job failed!(已解决)

问题描述: Container killed by the ApplicationMaster. Container killed on request. Exit code is 143 Conta ...

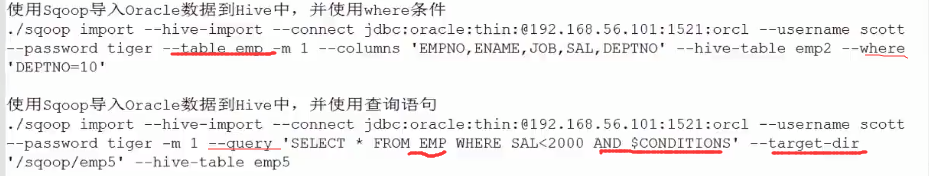

- sqoop导入数据到hive---2

1.hive-table 从mysql导入数据到hive表中,可以使用--hive-table来指定hive的表名,不指定hive表名,则hive表名与mysql表名保持一致. sqoop impor ...

- sqoop导入数据到hive

1.1hive-import参数 使用--hive-import就可以将数据导入到hive中,但是下面这个命令执行后会报错,报错信息如下: sqoop import --connect jdbc:my ...

- sqoop导入数据到hive中元数据问题

简单配置了sqoop之后开始使用,之前用的时候很好用,也不记得有没有启动hivemetastore,今天用的时候没有启动,结果导入数据时,如果使用了db.tablename,就会出现找不到数据库的错, ...

- 1.6-1.10 使用Sqoop导入数据到HDFS及一些设置

一.导数据 1.import和export Sqoop可以在HDFS/Hive和关系型数据库之间进行数据的导入导出,其中主要使用了import和export这两个工具.这两个工具非常强大, 提供了很多 ...

- 大数据学习——sqoop导入数据

把数据从关系型数据库导入到hadoop 启动sqoop 导入表表数据到HDFS 下面的命令用于从MySQL数据库服务器中的emp表导入HDFS. sqoop import \ --connect jd ...

- sqoop导入数据到hive表中的相关操作

1.使用sqoop创建表并且指定对应的hive表中的字段的数据类型,同时指定该表的分区字段名称 sqoop create-hive-table --connect "jdbc:oracle: ...

- 1.11-1.12 Sqoop导入数据时两种增量方式导入及direct

一.增量数据的导入 1.两种方式 ## query 有一个唯一标识符,通常这个表都有一个字段,类似于插入时间createtime where createtime => 201509240000 ...

- 使用sqoop1.4.4从oracle导入数据到hive中错误记录及解决方案

在使用命令导数据过程中,出现如下错误 sqoop import --hive-import --connect jdbc:oracle:thin:@192.168.29.16:1521/testdb ...

随机推荐

- Luogu 2322 [HNOI2006]最短母串问题

唔,太菜了,弄了好几个小时. 状压dp,设$f_{s, i}$表示选了集合$s$,以$i$结尾的最短长度,设$g_{i, j}$表示串$i$的后缀和串$j$的前缀的最长匹配长度. $f_{s, i} ...

- redis配置文件常用配置介绍

博客内容首发地址 参数说明 redis.conf 配置项说明如下: Redis默认不是以守护进程的方式运行,可以通过该配置项修改,使用yes启用守护进程 daemonize no 当Redis以守护进 ...

- java 学习第三篇if判断

JAVA 判断 单词: if 如果 else 否则 单分支: If(条件) { 代码块 } If是一个判断语句.代码格式如上. If括号的内是表达式.如果表达式值是成立的便执行代码块.之后在执行IF语 ...

- 51nod 1350 斐波那契表示(递推+找规律)

传送门 题意 分析 我们发现该数列遵循下列规律: 1 1,2 1,2,2 1,2,2,2,3 1,2,2,2,3,2,3,3 我们令A[i]表示f[i]开始长为f[i-1]的i的最短表示和 那么得到A ...

- loj #2044. 「CQOI2016」手机号码

#2044. 「CQOI2016」手机号码 内存限制:256 MiB时间限制:1000 ms标准输入输出 题目类型:传统评测方式:文本比较 上传者: 匿名 提交提交记录统计讨论测试数据 题目描述 ...

- CI框架源码学习笔记4——Benchmark.php

我们回到Codeigniter.php上继续往下看,第一个引入的类文件是Benchmark.php,这个文件主要是提供基准测试,具体使用方法参考手册http://codeigniter.org.cn/ ...

- CSS之引入样式

CSS引入样式 内部样式 内嵌式是将CSS代码集中写在HTML文档的head头部标签中,并且用style标签定义,其基本语法格式如下: <head> <style type=&quo ...

- P1891 疯狂LCM

\(\color{#0066ff}{ 题目描述 }\) 众所周知,czmppppp是数学大神犇.一天,他给众蒟蒻们出了一道数论题,蒟蒻们都惊呆了... 给定正整数N,求LCM(1,N)+LCM(2,N ...

- 【模板】普通平衡树 Splay

题目描述 您需要写一种数据结构(可参考题目标题),来维护一些数,其中需要提供以下操作: 插入xxx数 删除xxx数(若有多个相同的数,因只删除一个) 查询xxx数的排名(排名定义为比当前数小的数的个数 ...

- 牛客寒假算法基础集训营4 I Applese 的回文串

链接:https://ac.nowcoder.com/acm/contest/330/I来源:牛客网 自从 Applese 学会了字符串之后,精通各种字符串算法,比如……判断一个字符串是不是回文串. ...