Docker学习-Kubernetes - 集群部署

Docker学习

Docker学习-VMware Workstation 本地多台虚拟机互通,主机网络互通搭建

Docker学习-Spring Boot on Docker

Docker学习-Kubernetes - Spring Boot 应用

简介

kubernetes,简称K8s,是用8代替8个字符“ubernete”而成的缩写。是一个开源的,用于管理云平台中多个主机上的容器化的应用,Kubernetes的目标是让部署容器化的应用简单并且高效(powerful),Kubernetes提供了应用部署,规划,更新,维护的一种机制。

基本概念

Kubernetes 中的绝大部分概念都抽象成 Kubernetes 管理的一种资源对象

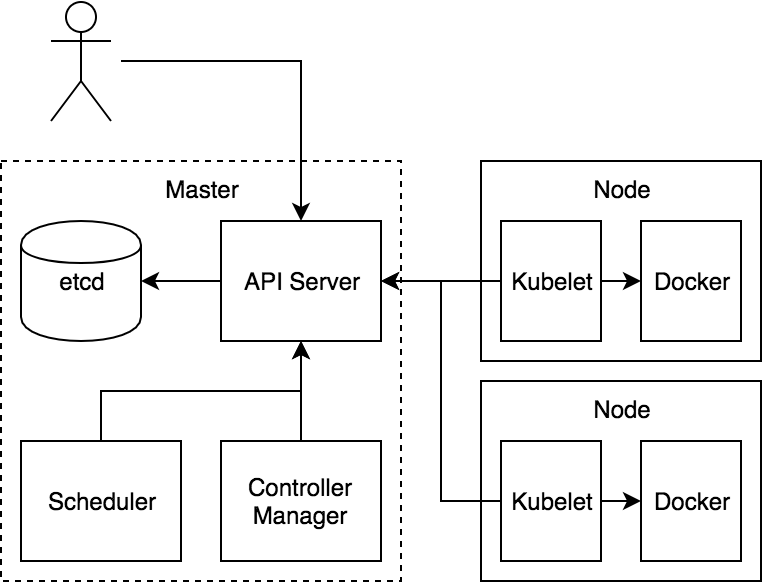

- Master:Master 节点是 Kubernetes 集群的控制节点,负责整个集群的管理和控制。Master 节点上包含以下组件:

- kube-apiserver:集群控制的入口,提供 HTTP REST 服务

- kube-controller-manager:Kubernetes 集群中所有资源对象的自动化控制中心

- kube-scheduler:负责 Pod 的调度

Node:Node 节点是 Kubernetes 集群中的工作节点,Node 上的工作负载由 Master 节点分配,工作负载主要是运行容器应用。Node 节点上包含以下组件:

- kubelet:负责 Pod 的创建、启动、监控、重启、销毁等工作,同时与 Master 节点协作,实现集群管理的基本功能。

- kube-proxy:实现 Kubernetes Service 的通信和负载均衡

- 运行容器化(Pod)应用

Pod: Pod 是 Kubernetes 最基本的部署调度单元。每个 Pod 可以由一个或多个业务容器和一个根容器(Pause 容器)组成。一个 Pod 表示某个应用的一个实例

- ReplicaSet:是 Pod 副本的抽象,用于解决 Pod 的扩容和伸缩

- Deployment:Deployment 表示部署,在内部使用ReplicaSet 来实现。可以通过 Deployment 来生成相应的 ReplicaSet 完成 Pod 副本的创建

- Service:Service 是 Kubernetes 最重要的资源对象。Kubernetes 中的 Service 对象可以对应微服务架构中的微服务。Service 定义了服务的访问入口,服务的调用者通过这个地址访问 Service 后端的 Pod 副本实例。Service 通过 Label Selector 同后端的 Pod 副本建立关系,Deployment 保证后端Pod 副本的数量,也就是保证服务的伸缩性。

Kubernetes 主要由以下几个核心组件组成:

- etcd 保存了整个集群的状态,就是一个数据库;

- apiserver 提供了资源操作的唯一入口,并提供认证、授权、访问控制、API 注册和发现等机制;

- controller manager 负责维护集群的状态,比如故障检测、自动扩展、滚动更新等;

- scheduler 负责资源的调度,按照预定的调度策略将 Pod 调度到相应的机器上;

- kubelet 负责维护容器的生命周期,同时也负责 Volume(CSI)和网络(CNI)的管理;

- Container runtime 负责镜像管理以及 Pod 和容器的真正运行(CRI);

- kube-proxy 负责为 Service 提供 cluster 内部的服务发现和负载均衡;

当然了除了上面的这些核心组件,还有一些推荐的插件:

- kube-dns 负责为整个集群提供 DNS 服务

- Ingress Controller 为服务提供外网入口

- Heapster 提供资源监控

- Dashboard 提供 GUI

组件通信

Kubernetes 多组件之间的通信原理:

- apiserver 负责 etcd 存储的所有操作,且只有 apiserver 才直接操作 etcd 集群

apiserver 对内(集群中的其他组件)和对外(用户)提供统一的 REST API,其他组件均通过 apiserver 进行通信

- controller manager、scheduler、kube-proxy 和 kubelet 等均通过 apiserver watch API 监测资源变化情况,并对资源作相应的操作

- 所有需要更新资源状态的操作均通过 apiserver 的 REST API 进行

apiserver 也会直接调用 kubelet API(如 logs, exec, attach 等),默认不校验 kubelet 证书,但可以通过

--kubelet-certificate-authority开启(而 GKE 通过 SSH 隧道保护它们之间的通信)

比如最典型的创建 Pod 的流程:

- 用户通过 REST API 创建一个 Pod

- apiserver 将其写入 etcd

- scheduluer 检测到未绑定 Node 的 Pod,开始调度并更新 Pod 的 Node 绑定

- kubelet 检测到有新的 Pod 调度过来,通过 container runtime 运行该 Pod

- kubelet 通过 container runtime 取到 Pod 状态,并更新到 apiserver 中

集群部署

使用kubeadm工具安装

1. master和node 都用yum 安装kubelet,kubeadm,docker

2. master 上初始化:kubeadm init

3. master 上启动一个flannel的pod

4. node上加入集群:kubeadm join

准备环境

Centos7 192.168.50.21 k8s-master

Centos7 192.168.50.22 k8s-node01

Centos7 192.168.50.23 k8s-node02

修改主机名(3台机器都需要修改)

hostnamectl set-hostname k8s-master

hostnamectl set-hostname k8s-node01

hostnamectl set-hostname k8s-node02

关闭防火墙

systemctl stop firewalld.service

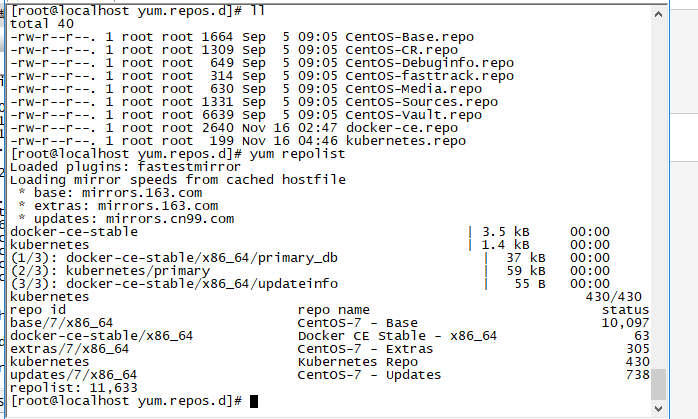

配置docker yum源

yum install -y yum-utils device-mapper-persistent-data lvm2 wget

cd /etc/yum.repos.d

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

配置kubernetes yum 源

cd /opt/

wget https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

wget https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

rpm --import yum-key.gpg

rpm --import rpm-package-key.gpg

cd /etc/yum.repos.d

vi kubernetes.repo

输入以下内容

[kubernetes]

name=Kubernetes Repo

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

enabled= yum repolist

master和node 安装kubelet,kubeadm,docker

yum install docker

yum install kubelet-1.13.1

yum install kubeadm-1.13.1

master 上安装kubectl

yum install kubectl-1.13.1

docker的配置

配置私有仓库和镜像加速地址,私有仓库配置参见 https://www.cnblogs.com/woxpp/p/11871886.html

vi /etc/docker/daemon.json

{

"registry-mirror":[

"http://hub-mirror.c.163.com"

],

"insecure-registries":[

"192.168.50.24:5000"

]

}

启动docker

systemctl daemon-reload

systemctl start docker

docker info

master 上初始化:kubeadm init

vi /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

kubeadm init \

--apiserver-advertise-address=192.168.50.21 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.13.1 \

--pod-network-cidr=10.244.0.0/

初始化命令说明:

--apiserver-advertise-address指明用 Master 的哪个 interface 与 Cluster 的其他节点通信。如果 Master 有多个 interface,建议明确指定,如果不指定,kubeadm 会自动选择有默认网关的 interface。

--pod-network-cidr指定 Pod 网络的范围。Kubernetes 支持多种网络方案,而且不同网络方案对 --pod-network-cidr 有自己的要求,这里设置为 10.244.0.0/16 是因为我们将使用 flannel 网络方案,必须设置成这个 CIDR。

--image-repositoryKubenetes默认Registries地址是 k8s.gcr.io,在国内并不能访问 gcr.io,在1.13版本中我们可以增加–image-repository参数,默认值是 k8s.gcr.io,将其指定为阿里云镜像地址:registry.aliyuncs.com/google_containers。

--kubernetes-version=v1.13.1 关闭版本探测,因为它的默认值是stable-1,会导致从https://dl.k8s.io/release/stable-1.txt下载最新的版本号,我们可以将其指定为固定版本(最新版:v1.13.1)来跳过网络请求。

初始化过程中

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull' 是在下载镜像文件,过程比较慢。

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 24.002300 seconds 这个过程也比较慢 可以忽略

[init] Using Kubernetes version: v1.13.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.50.21]

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.50.21 127.0.0.1 ::]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.50.21 127.0.0.1 ::]

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 24.002300 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master" as an annotation

[mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 7ax0k4.nxpjjifrqnbrpojv

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy Your Kubernetes master has initialized successfully! To start using your cluster, you need to run the following as a regular user: mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/ You can now join any number of machines by running the following on each node

as root: kubeadm join 192.168.50.21: --token 7ax0k4.nxpjjifrqnbrpojv --discovery-token-ca-cert-hash sha256:95942f10859a71879c316e75498de02a8b627725c37dee33f74cd040e1cd9d6b

初始化过程说明:

1) [preflight] kubeadm 执行初始化前的检查。

2) [kubelet-start] 生成kubelet的配置文件”/var/lib/kubelet/config.yaml”

3) [certificates] 生成相关的各种token和证书

4) [kubeconfig] 生成 KubeConfig 文件,kubelet 需要这个文件与 Master 通信

5) [control-plane] 安装 Master 组件,会从指定的 Registry 下载组件的 Docker 镜像。

6) [bootstraptoken] 生成token记录下来,后边使用kubeadm join往集群中添加节点时会用到

7) [addons] 安装附加组件 kube-proxy 和 kube-dns。

8) Kubernetes Master 初始化成功,提示如何配置常规用户使用kubectl访问集群。

9) 提示如何安装 Pod 网络。

10) 提示如何注册其他节点到 Cluster。

异常情况:

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING Swap]: running with swap on is not supported. Please disable swap

[WARNING Hostname]: hostname "k8s-master" could not be reached

[WARNING Hostname]: hostname "k8s-master": lookup k8s-master on 114.114.114.114:: no such host

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

运行

systemctl enable docker.service

systemctl enable kubelet.service

会提示以下错误

[WARNING Hostname]: hostname "k8s-master" could not be reached

[WARNING Hostname]: hostname "k8s-master": lookup k8s-master on 114.114.114.114:: no such host

error execution phase preflight: [preflight] Some fatal errors occurred:

配置host

cat >> /etc/hosts << EOF

192.168.50.21 k8s-master

192.168.50.22 k8s-node01

192.168.50.23 k8s-node02

EOF

再次运行初始化命令会出现

[ERROR NumCPU]: the number of available CPUs is less than the required 2 --设置虚拟机CPU个数大于2

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

echo 1 > /proc/sys/net/bridge/bridge-nf-call-ip6tables设置好虚拟机CPU个数,重启后再次运行:

kubeadm init \

--apiserver-advertise-address=192.168.50.21 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.13.1 \

--pod-network-cidr=10.244.0.0/

[init] Using Kubernetes version: v1.13.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull

解决办法:docker.io仓库对google的容器做了镜像,可以通过下列命令下拉取相关镜像

先看下需要用到哪些

kubeadm config images list

配置yum源

[root@k8s-master opt]# vi kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta1

kind: ClusterConfiguration

kubernetesVersion: v1.13.1

imageRepository: registry.aliyuncs.com/google_containers

apiServer:

certSANs:

- 192.168.50.21

controlPlaneEndpoint: "192.168.50.20:16443"

networking:

# This CIDR is a Calico default. Substitute or remove for your CNI provider.

podSubnet: "172.168.0.0/16"

kubeadm config images pull --config /opt/kubeadm-config.yaml

初始化master

kubeadm init --config=kubeadm-config.yaml --upload-certs

xecution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

[ERROR Port-]: Port is in use

kubeadm会自动检查当前环境是否有上次命令执行的“残留”。如果有,必须清理后再行执行init。我们可以通过”kubeadm reset”来清理环境,以备重来。

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

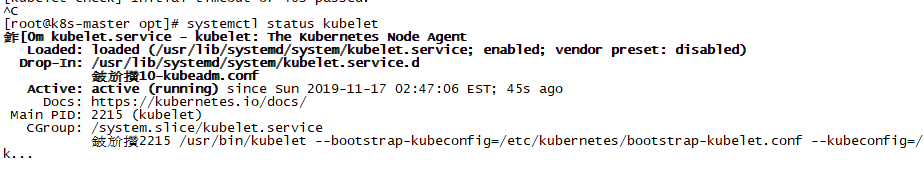

[kubelet-check] Initial timeout of 40s passed.

==原因==

这是因为kubelet没启动

==解决==

systemctl restart kubelet

如果启动不了kubelet

kubelet.service - kubelet: The Kubernetes Node Agent

则可能是swap交换分区还开启的原因

-关闭swap

swapoff -a

-配置kubelet

vi /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

再次运行

kubeadm init \

--apiserver-advertise-address=192.168.50.21 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.13.1 \

--pod-network-cidr=10.244.0.0/

配置 kubectl

kubectl 是管理 Kubernetes Cluster 的命令行工具,前面我们已经在所有的节点安装了 kubectl。Master 初始化完成后需要做一些配置工作,然后 kubectl 就能使用了。

依照 kubeadm init 输出的最后提示,推荐用 Linux 普通用户执行 kubectl。

- 创建普通用户centos

#创建普通用户并设置密码123456

useradd centos && echo "centos:123456" | chpasswd centos #追加sudo权限,并配置sudo免密

sed -i '/^root/a\centos ALL=(ALL) NOPASSWD:ALL' /etc/sudoers #保存集群安全配置文件到当前用户.kube目录

su - centos

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config #启用 kubectl 命令自动补全功能(注销重新登录生效)

echo "source <(kubectl completion bash)" >> ~/.bashrc

需要这些配置命令的原因是:Kubernetes 集群默认需要加密方式访问。所以,这几条命令,就是将刚刚部署生成的 Kubernetes 集群的安全配置文件,保存到当前用户的.kube 目录下,kubectl 默认会使用这个目录下的授权信息访问 Kubernetes 集群。

如果不这么做的话,我们每次都需要通过 export KUBECONFIG 环境变量告诉 kubectl 这个安全配置文件的位置。

配置完成后centos用户就可以使用 kubectl 命令管理集群了。

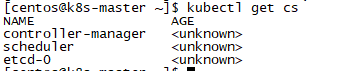

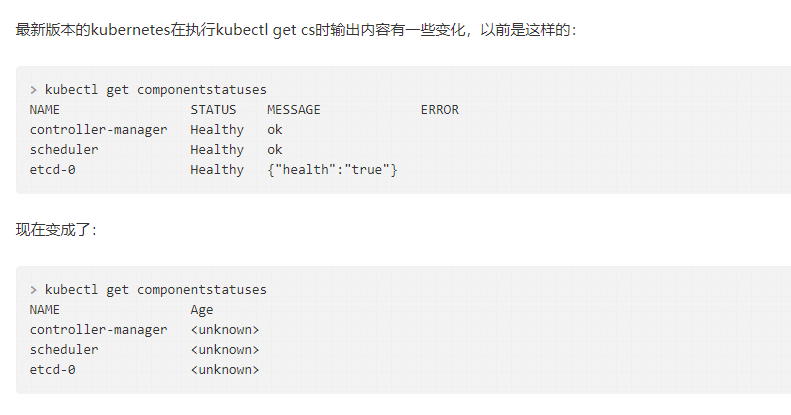

查看集群状态:

kubectl get cs

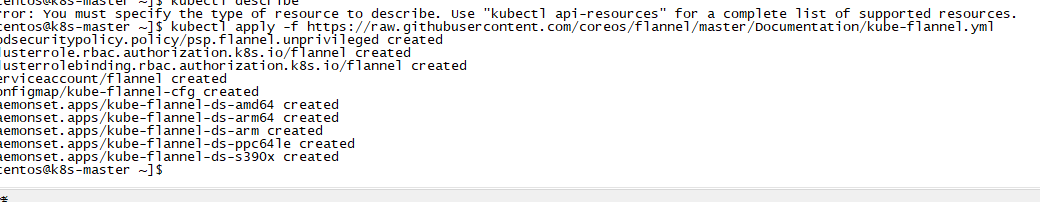

部署网络插件

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

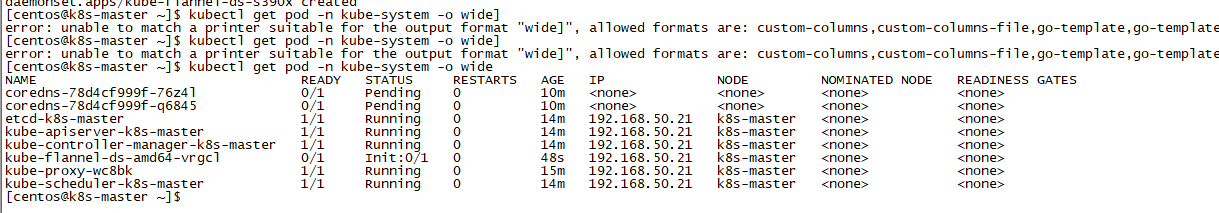

kubectl get 重新检查 Pod 的状态

部署worker节点

在master机器保存生成号的镜像文件

docker save -o master.tar registry.aliyuncs.com/google_containers/kube-proxy:v1.13.1 registry.aliyuncs.com/google_containers/kube-apiserver:v1.13.1 registry.aliyuncs.com/google_containers/kube-controller-manager:v1.13.1 registry.aliyuncs.com/google_containers/kube-scheduler:v1.13.1 registry.aliyuncs.com/google_containers/coredns:1.2. registry.aliyuncs.com/google_containers/etcd:3.2. registry.aliyuncs.com/google_containers/pause:3.1

注意对应的版本号

将master上保存的镜像同步到节点上

scp master.tar node01:/root/

scp master.tar node02:/root/

将镜像导入本地,node01,node02

docker load< master.tar

配置host,node01,node02

cat >> /etc/hosts << EOF

192.168.50.21 k8s-master

192.168.50.22 k8s-node01

192.168.50.23 k8s-node02

EOF

配置iptables,node01,node02

echo > /proc/sys/net/bridge/bridge-nf-call-iptables

echo > /proc/sys/net/bridge/bridge-nf-call-ip6tables

-关闭swap,node01,node02

swapoff -a

-配置kubelet,node01,node02

vi /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

systemctl enable docker.service

systemctl enable kubelet.service

启动docker,node01,node02

service docker strat

部署网络插件,node01,node02

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

获取join指令,master

kubeadm token create --print-join-command

kubeadm token create --print-join-command

kubeadm join 192.168.50.21: --token n9g4nq.kf8ppgpgb3biz0n5 --discovery-token-ca-cert-hash sha256:95942f10859a71879c316e75498de02a8b627725c37dee33f74cd040e1cd9d6b

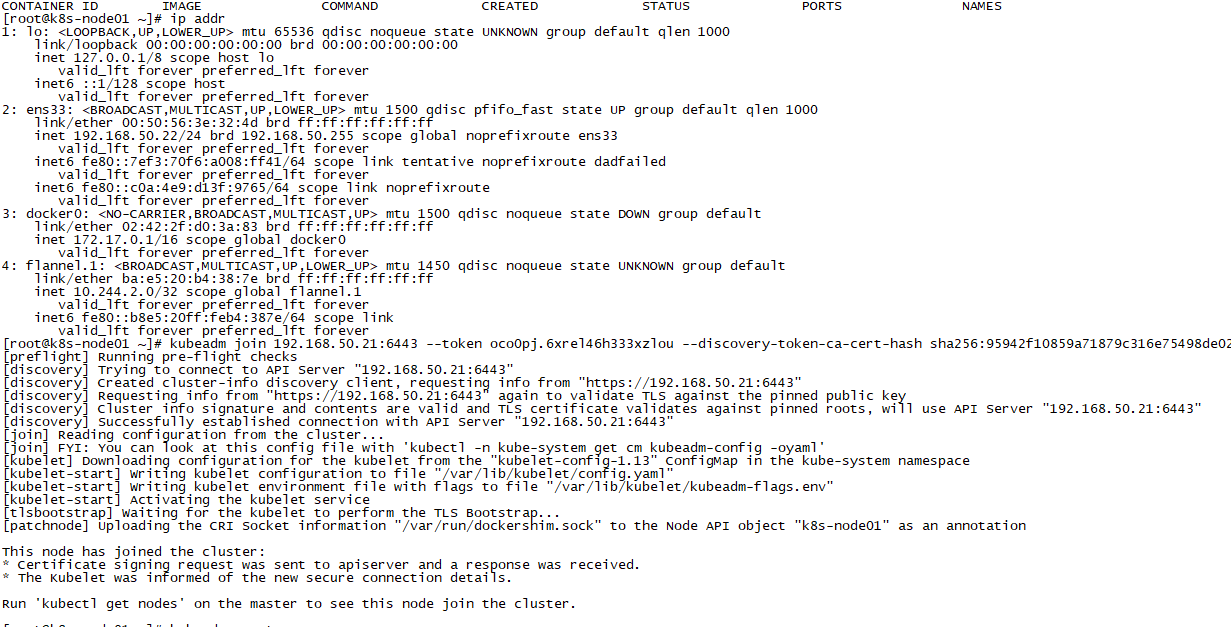

在子节点运行指令 ,node01,node02

kubeadm join 192.168.50.21: --token n9g4nq.kf8ppgpgb3biz0n5 --discovery-token-ca-cert-hash sha256:95942f10859a71879c316e75498de02a8b627725c37dee33f74cd040e1cd9d6b

[preflight] Running pre-flight checks

[discovery] Trying to connect to API Server "192.168.50.21:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.50.21:6443"

[discovery] Requesting info from "https://192.168.50.21:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.50.21:6443"

[discovery] Successfully established connection with API Server "192.168.50.21:6443"

[join] Reading configuration from the cluster...

[join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] WARNING: unable to stop the kubelet service momentarily: [exit status ]

[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.13" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-node01" as an annotation This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details. Run 'kubectl get nodes' on the master to see this node join the cluster.

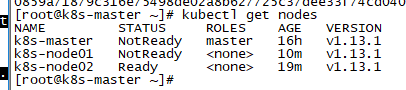

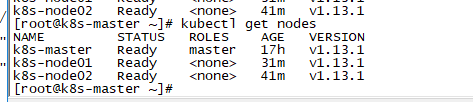

在master上查看节点状态

kubectl get nodes

这种状态是错误的 ,只有一台联机正确

查看node01,和node02发现 node01有些进程没有完全启动

删除node01所有运行的容器,node01

docker stop $(docker ps -q) & docker rm $(docker ps -aq)

重置 kubeadm ,node01

kubeadm reset

获取join指令,master

kubeadm token create --print-join-command

再次在node01上运行join

查看node01镜像运行状态

查看master状态

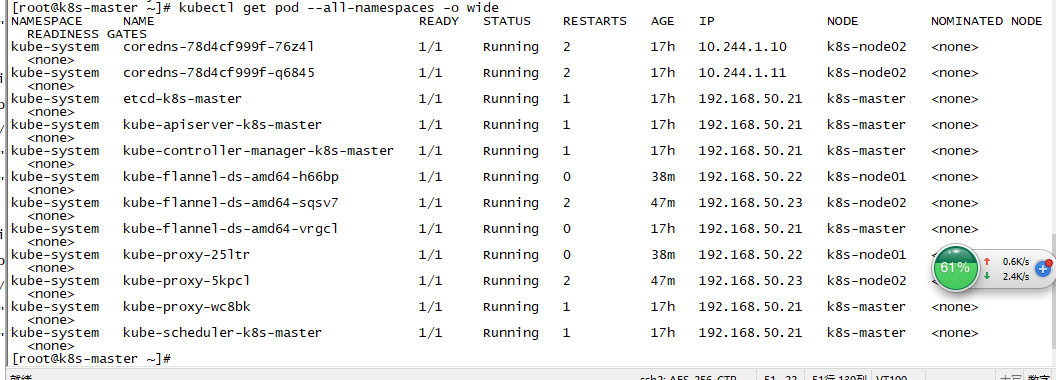

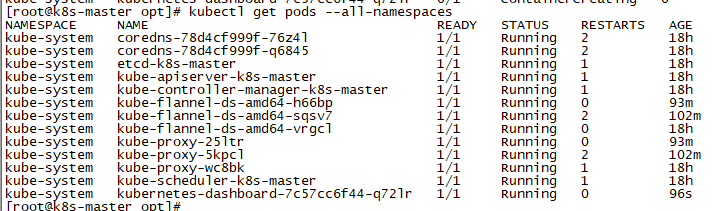

nodes状态全部为ready,由于每个节点都需要启动若干组件,如果node节点的状态是 NotReady,可以查看所有节点pod状态,确保所有pod成功拉取到镜像并处于running状态:

kubectl get pod --all-namespaces -o wide

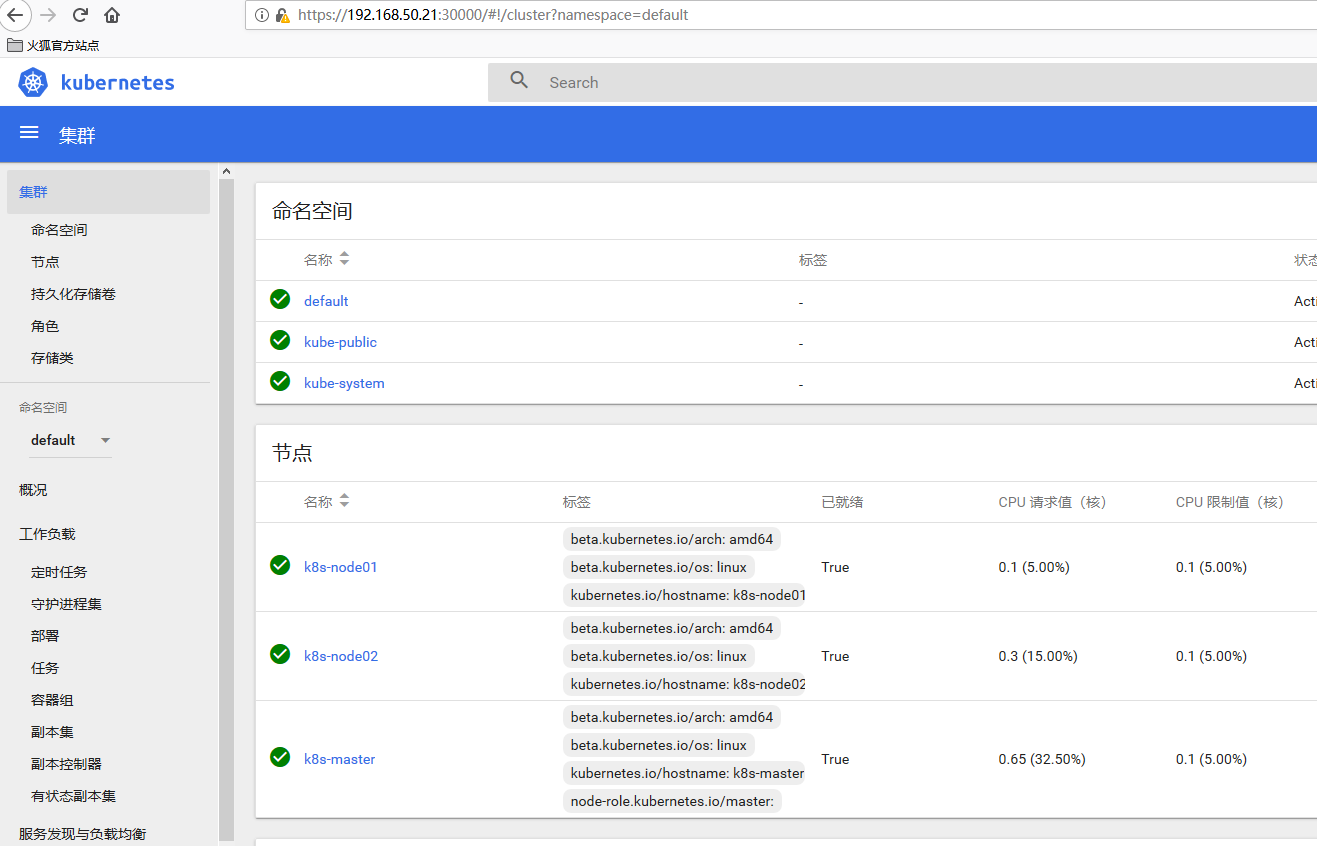

配置kubernetes UI图形化界面

创建kubernetes-dashboard.yaml

# Copyright The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License. # ------------------- Dashboard Secret ------------------- # apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kube-system

type: Opaque ---

# ------------------- Dashboard Service Account ------------------- # apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system ---

# ------------------- Dashboard Role & Role Binding ------------------- # kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: kubernetes-dashboard-minimal

namespace: kube-system

rules:

# Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.

- apiGroups: [""]

resources: ["secrets"]

verbs: ["create"]

# Allow Dashboard to create 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

verbs: ["create"]

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics from heapster.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:"]

verbs: ["get"] ---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: kubernetes-dashboard-minimal

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard-minimal

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kube-system ---

# ------------------- Dashboard Deployment ------------------- # kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

replicas:

revisionHistoryLimit:

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name: kubernetes-dashboard

image: registry.cn-hangzhou.aliyuncs.com/rsqlh/kubernetes-dashboard:v1.10.1

imagePullPolicy: IfNotPresent

ports:

- containerPort:

protocol: TCP

args:

- --auto-generate-certificates

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port:

initialDelaySeconds:

timeoutSeconds:

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule ---

# ------------------- Dashboard Service ------------------- # kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

type: NodePort

ports:

- port: 443

targetPort: 8443

nodePort: 30000

selector:

k8s-app: kubernetes-dashboard

执行以下命令创建kubernetes-dashboard:

kubectl create -f kubernetes-dashboard.yaml

如果出现

Error from server (AlreadyExists): error when creating "kubernetes-dashboard.yaml": secrets "kubernetes-dashboard-certs" already exists

Error from server (AlreadyExists): error when creating "kubernetes-dashboard.yaml": serviceaccounts "kubernetes-dashboard" already exists

Error from server (AlreadyExists): error when creating "kubernetes-dashboard.yaml": roles.rbac.authorization.k8s.io "kubernetes-dashboard-minimal" already exists

Error from server (AlreadyExists): error when creating "kubernetes-dashboard.yaml": rolebindings.rbac.authorization.k8s.io "kubernetes-dashboard-minimal" already exists

Error from server (AlreadyExists): error when creating "kubernetes-dashboard.yaml": deployments.apps "kubernetes-dashboard" already exists

运行delete清理

kubectl delete -f kubernetes-dashboard.yaml

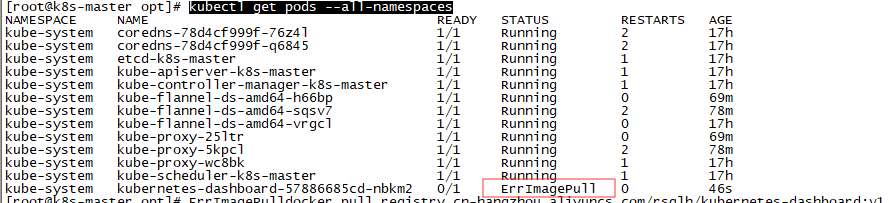

查看组件运行状态

kubectl get pods --all-namespaces

ErrImagePull 拉取镜像失败

手动拉取 并重置tag

docker pull registry.cn-hangzhou.aliyuncs.com/rsqlh/kubernetes-dashboard:v1.10.1

docker tag registry.cn-hangzhou.aliyuncs.com/rsqlh/kubernetes-dashboard:v1.10.1 k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1

重新创建

ImagePullBackOff

默认情况是会根据配置文件中的镜像地址去拉取镜像,如果设置为IfNotPresent 和Never就会使用本地镜像。

IfNotPresent :如果本地存在镜像就优先使用本地镜像。

Never:直接不再去拉取镜像了,使用本地的;如果本地不存在就报异常了。

spec:

containers:

- name: kubernetes-dashboard

image: registry.cn-hangzhou.aliyuncs.com/rsqlh/kubernetes-dashboard:v1.10.1

imagePullPolicy: IfNotPresent

查看映射状态

kubectl get service -n kube-system

创建能够访问 Dashboard 的用户

新建文件 account.yaml ,内容如下:

# Create Service Account

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kube-system

---

# Create ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kube-system

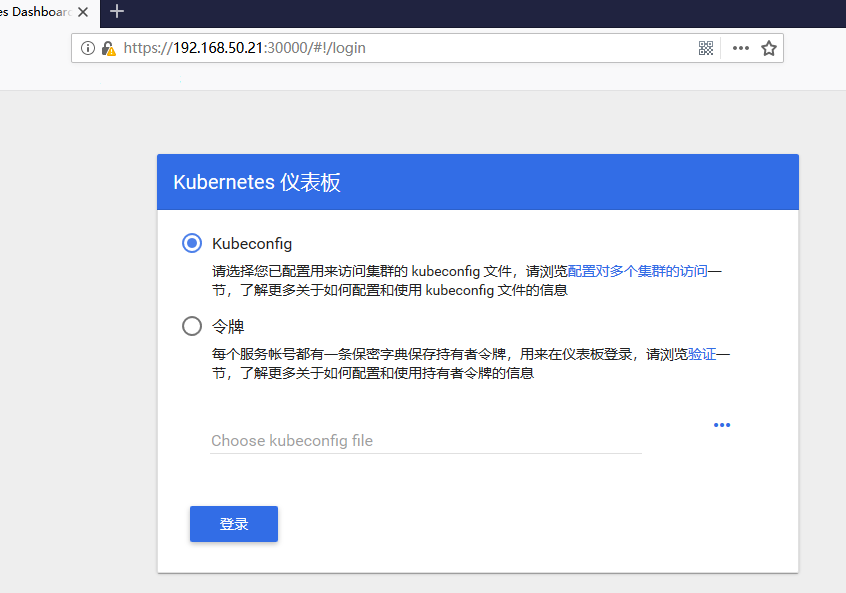

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

复制token登陆

configmaps is forbidden: User "system:serviceaccount:kube-system:admin-user" cannot list resource "configmaps" in API group "" in the namespace "default"

授权用户

kubectl create clusterrolebinding test:admin-user --clusterrole=cluster-admin --serviceaccount=kube-system:admin-user

NodePort方式,可以到任意一个节点的XXXX端口查看

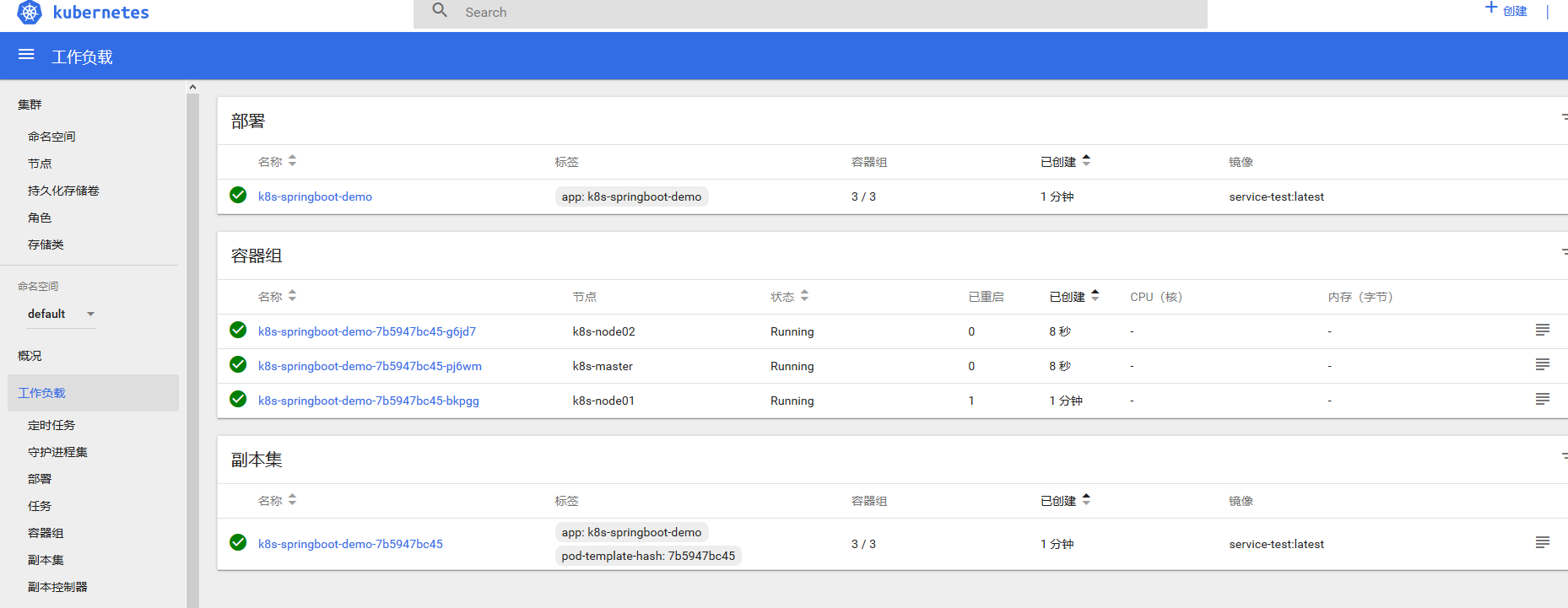

Docker学习-Kubernetes - Spring Boot 应用

本文参考:

https://www.cnblogs.com/tylerzhou/p/10971336.html

https://www.cnblogs.com/zoujiaojiao/p/10986320.html

Docker学习-Kubernetes - 集群部署的更多相关文章

- Kubernetes集群部署史上最详细(一)Kubernetes集群安装

适用部署结构以及版本 本系列中涉及的部署方式和脚本适用于1.13.x和1.14,而且采取的是二进制程序部署方式. 脚本支持的部署模式 最小部署模式 3台主机,1台为k8s的master角色,其余2台为 ...

- Kubernetes集群部署关键知识总结

Kubernetes集群部署需要安装的组件东西很多,过程复杂,对服务器环境要求很苛刻,最好是能连外网的环境下安装,有些组件还需要连google服务器下载,这一点一般很难满足,因此最好是能提前下载好准备 ...

- 基于Kubernetes集群部署skyDNS服务

目录贴:Kubernetes学习系列 在之前几篇文章的基础,(Centos7部署Kubernetes集群.基于kubernetes集群部署DashBoard.为Kubernetes集群部署本地镜像仓库 ...

- 为Kubernetes集群部署本地镜像仓库

目录贴:Kubernetes学习系列 经过之前两篇文章:Centos7部署Kubernetes集群.基于kubernetes集群部署DashBoard,我们基本上已经能够在k8s的集群上部署一个应用了 ...

- kubernetes集群部署

鉴于Docker如此火爆,Google推出kubernetes管理docker集群,不少人估计会进行尝试.kubernetes得到了很多大公司的支持,kubernetes集群部署工具也集成了gce,c ...

- Gitlab CI 集成 Kubernetes 集群部署 Spring Boot 项目

在上一篇博客中,我们成功将 Gitlab CI 部署到了 Docker 中去,成功创建了 Gitlab CI Pipline 来执行 CI/CD 任务.那么这篇文章我们更进一步,将它集成到 K8s 集 ...

- kubernetes 集群部署

kubernetes 集群部署 环境JiaoJiao_Centos7-1(152.112) 192.168.152.112JiaoJiao_Centos7-2(152.113) 192.168.152 ...

- linux运维、架构之路-Kubernetes集群部署

一.kubernetes介绍 Kubernetes简称K8s,它是一个全新的基于容器技术的分布式架构领先方案.Kubernetes(k8s)是Google开源的容器集群管理系统(谷歌内部 ...

- Kubernetes 集群部署(2) -- Etcd 集群

Kubenetes 集群部署规划: 192.168.137.81 Master 192.168.137.82 Node 192.168.137.83 Node 以下在 Master 节点操作. ...

随机推荐

- Ubuntu16.04 安装apache+mysql+php(LAMP)

记录下ubuntu环境下安装apache+mysql+php(LAMP)环境. 0x01安装apache sudo apt-get update sudo apt-get install apache ...

- CTFd平台部署

学校要办ctf了,自己一个人给学校搭建踩了好多坑啊..这里记录一下吧 心累心累 这里只记录尝试成功的过程 有些尝试失败的就没贴上来 为各位搭建的时候节省一部分时间吧. ubuntu18搭建 0x01 ...

- wireshark分析https

0x01 分析淘宝网站的https数据流 打开淘宝 wireshark抓取到如下 第一部分: 因为https是基于http协议上的,可以看到首先也是和http协议一样的常规的TCP三次握手的连接建立, ...

- POJ 1753 Flip Game(状态压缩+BFS)

题目网址:http://poj.org/problem?id=1753 题目: Flip Game Description Flip game is played on a rectangular 4 ...

- 《HelloGitHub》第 43 期

兴趣是最好的老师,HelloGitHub 就是帮你找到兴趣! 简介 分享 GitHub 上有趣.入门级的开源项目. 这是一个面向编程新手.热爱编程.对开源社区感兴趣 人群的月刊,月刊的内容包括:各种编 ...

- 徐明星系列之徐明星创办的OK资本成为RnF金融有限公司的锚定投资者

12月17日,由区块链专家徐明星创办的OK集团的投资部门OK资本宣布,它将成为RnF金融有限公司的锚定投资者.OK集团成立于2012年,创始人徐明星是前豆丁网CTO,从豆丁网离职后,徐明星创办了OK集 ...

- StreamWriter 相关知识分享

在介绍StreamWriter之前,我们首先来了解一下它的父类TextWriter. 一.TextWriter 1.TextWriter的构造函数和常用属性方法 下面是TextWriter的构造函数: ...

- 游图邦YOTUBANG是如何搭建生态系统的?

现在的我们最关心的一个问题就是任何一个行业,如果没有办法很好的落地,就算描绘的非常美好,那也只是空中楼阁.昙花一现而已,它无法实现长久的一个发展.互联网时代呢,就是一个流量为王的一个时代,谁拥有庞大的 ...

- django-表单之数据保存(七)

models.py class Student(models.Model): #字段映射,数据库中是male,female,后台显示的是男,女 choices={ ('male',"男&qu ...

- float使用0xFF

1. float f = 0xFFFFFFFF; 这一句完全是错误的用法,它不会使f变量内存变为4个0xFF,因为0xFFFFFFFF根本就不是有效的float数值,编译器无从处理,如果用printf ...