Python爬虫抓取微博评论

第一步:引入库

import time

import base64

import rsa

import binascii

import requests

import re

from PIL import Image

import random

from urllib.parse import quote_plus

import http.cookiejar as cookielib

import csv

import os

第二步:一些全局变量的设置

comment_path = 'comment'

agent = 'mozilla/5.0 (windowS NT 10.0; win64; x64) appLewEbkit/537.36 (KHTML, likE gecko) chrome/71.0.3578.98 safari/537.36'

headers = {'User-Agent': agent}

第三步:创立目录作为存放数据的

if not os.path.exists(comment_path):

os.mkdir(comment_path)

第四步:登陆类的创立

class WeiboLogin(object):

"""

通过登录 weibo.com 然后跳转到 m.weibo.cn

""" # 初始化数据

def __init__(self, user, password, cookie_path):

super(WeiboLogin, self).__init__()

self.user = user

self.password = password

self.session = requests.Session()

self.cookie_path = cookie_path

# LWPCookieJar是python中管理cookie的工具,可以将cookie保存到文件,或者在文件中读取cookie数据到程序

self.session.cookies = cookielib.LWPCookieJar(filename=self.cookie_path)

self.index_url = "http://weibo.com/login.php"

self.session.get(self.index_url, headers=headers, timeout=2)

self.postdata = dict() def get_su(self):

"""

对 email 地址和手机号码 先 javascript 中 encodeURIComponent

对应 Python 3 中的是 urllib.parse.quote_plus

然后在 base64 加密后decode

"""

username_quote = quote_plus(self.user)

username_base64 = base64.b64encode(username_quote.encode("utf-8"))

return username_base64.decode("utf-8") # 预登陆获得 servertime, nonce, pubkey, rsakv

def get_server_data(self, su):

"""与原来的相比,微博的登录从 v1.4.18 升级到了 v1.4.19

这里使用了 URL 拼接的方式,也可以用 Params 参数传递的方式

"""

pre_url = "http://login.sina.com.cn/sso/prelogin.php?entry=weibo&callback=sinaSSOController.preloginCallBack&su="

pre_url = pre_url + su + "&rsakt=mod&checkpin=1&client=ssologin.js(v1.4.19)&_="

pre_url = pre_url + str(int(time.time() * 1000))

pre_data_res = self.session.get(pre_url, headers=headers)

# print("*"*50)

# print(pre_data_res.text)

# print("*" * 50)

sever_data = eval(pre_data_res.content.decode("utf-8").replace("sinaSSOController.preloginCallBack", '')) return sever_data def get_password(self, servertime, nonce, pubkey):

"""对密码进行 RSA 的加密"""

rsaPublickey = int(pubkey, 16)

key = rsa.PublicKey(rsaPublickey, 65537) # 创建公钥

message = str(servertime) + '\t' + str(nonce) + '\n' + str(self.password) # 拼接明文js加密文件中得到

message = message.encode("utf-8")

passwd = rsa.encrypt(message, key) # 加密

passwd = binascii.b2a_hex(passwd) # 将加密信息转换为16进制。

return passwd def get_cha(self, pcid):

"""获取验证码,并且用PIL打开,

1. 如果本机安装了图片查看软件,也可以用 os.subprocess 的打开验证码

2. 可以改写此函数接入打码平台。

"""

cha_url = "https://login.sina.com.cn/cgi/pin.php?r="

cha_url = cha_url + str(int(random.random() * 100000000)) + "&s=0&p="

cha_url = cha_url + pcid

cha_page = self.session.get(cha_url, headers=headers)

with open("cha.jpg", 'wb') as f:

f.write(cha_page.content)

f.close()

try:

im = Image.open("cha.jpg")

im.show()

im.close()

except Exception as e:

print(u"请到当前目录下,找到验证码后输入") def pre_login(self):

# su 是加密后的用户名

su = self.get_su()

sever_data = self.get_server_data(su)

servertime = sever_data["servertime"]

nonce = sever_data['nonce']

rsakv = sever_data["rsakv"]

pubkey = sever_data["pubkey"]

showpin = sever_data["showpin"] # 这个参数的意义待探索

password_secret = self.get_password(servertime, nonce, pubkey) self.postdata = {

'entry': 'weibo',

'gateway': '',

'from': '',

'savestate': '',

'useticket': '',

'pagerefer': "https://passport.weibo.com",

'vsnf': '',

'su': su,

'service': 'miniblog',

'servertime': servertime,

'nonce': nonce,

'pwencode': 'rsa2',

'rsakv': rsakv,

'sp': password_secret,

'sr': '1366*768',

'encoding': 'UTF-8',

'prelt': '',

"cdult": "",

'url': 'http://weibo.com/ajaxlogin.php?framelogin=1&callback=parent.sinaSSOController.feedBackUrlCallBack',

'returntype': 'TEXT' # 这里是 TEXT 和 META 选择,具体含义待探索

}

return sever_data def login(self):

# 先不输入验证码登录测试

try:

sever_data = self.pre_login()

login_url = 'https://login.sina.com.cn/sso/login.php?client=ssologin.js(v1.4.19)&_'

login_url = login_url + str(time.time() * 1000)

login_page = self.session.post(login_url, data=self.postdata, headers=headers)

ticket_js = login_page.json()

ticket = ticket_js["ticket"]

except Exception as e:

sever_data = self.pre_login()

login_url = 'https://login.sina.com.cn/sso/login.php?client=ssologin.js(v1.4.19)&_'

login_url = login_url + str(time.time() * 1000)

pcid = sever_data["pcid"]

self.get_cha(pcid)

self.postdata['door'] = input(u"请输入验证码")

login_page = self.session.post(login_url, data=self.postdata, headers=headers)

ticket_js = login_page.json()

ticket = ticket_js["ticket"]

# 以下内容是 处理登录跳转链接

save_pa = r'==-(\d+)-'

ssosavestate = int(re.findall(save_pa, ticket)[0]) + 3600 * 7

jump_ticket_params = {

"callback": "sinaSSOController.callbackLoginStatus",

"ticket": ticket,

"ssosavestate": str(ssosavestate),

"client": "ssologin.js(v1.4.19)",

"_": str(time.time() * 1000),

}

jump_url = "https://passport.weibo.com/wbsso/login"

jump_headers = {

"Host": "passport.weibo.com",

"Referer": "https://weibo.com/",

"User-Agent": headers["User-Agent"]

}

jump_login = self.session.get(jump_url, params=jump_ticket_params, headers=jump_headers)

uuid = jump_login.text uuid_pa = r'"uniqueid":"(.*?)"'

uuid_res = re.findall(uuid_pa, uuid, re.S)[0]

web_weibo_url = "http://weibo.com/%s/profile?topnav=1&wvr=6&is_all=1" % uuid_res

weibo_page = self.session.get(web_weibo_url, headers=headers) # print(weibo_page.content.decode("utf-8") Mheaders = {

"Host": "login.sina.com.cn",

"User-Agent": agent

} # m.weibo.cn 登录的 url 拼接

_rand = str(time.time())

mParams = {

"url": "https://m.weibo.cn/",

"_rand": _rand,

"gateway": "",

"service": "sinawap",

"entry": "sinawap",

"useticket": "",

"returntype": "META",

"sudaref": "",

"_client_version": "0.6.26",

}

murl = "https://login.sina.com.cn/sso/login.php"

mhtml = self.session.get(murl, params=mParams, headers=Mheaders)

mhtml.encoding = mhtml.apparent_encoding

mpa = r'replace\((.*?)\);'

mres = re.findall(mpa, mhtml.text) # 关键的跳转步骤,这里不出问题,基本就成功了。

Mheaders["Host"] = "passport.weibo.cn"

self.session.get(eval(mres[0]), headers=Mheaders)

mlogin = self.session.get(eval(mres[0]), headers=Mheaders)

# print(mlogin.status_code)

# 进过几次 页面跳转后,m.weibo.cn 登录成功,下次测试是否登录成功

Mheaders["Host"] = "m.weibo.cn"

Set_url = "https://m.weibo.cn"

pro = self.session.get(Set_url, headers=Mheaders)

pa_login = r'isLogin":true,'

login_res = re.findall(pa_login, pro.text)

# print(login_res) # 可以通过 session.cookies 对 cookies 进行下一步相关操作

self.session.cookies.save()

# print("*"*50)

# print(self.cookie_path)

第五步:定义cookie的加载和信息的重定义

def get_cookies():

# 加载cookie

cookies = cookielib.LWPCookieJar("Cookie.txt")

cookies.load(ignore_discard=True, ignore_expires=True)

# 将cookie转换成字典

cookie_dict = requests.utils.dict_from_cookiejar(cookies)

return cookie_dict def info_parser(data):

id,time,text = data['id'],data['created_at'],data['text']

user = data['user']

uid,username,following,followed,gender = \

user['id'],user['screen_name'],user['follow_count'],user['followers_count'],user['gender']

return {

'wid':id,

'time':time,

'text':text,

'uid':uid,

'username':username,

'following':following,

'followed':followed,

'gender':gender

}

第六步:开始爬

def start_crawl(cookie_dict,id):

base_url = 'https://m.weibo.cn/comments/hotflow?id={}&mid={}&max_id_type=0'

next_url = 'https://m.weibo.cn/comments/hotflow?id={}&mid={}&max_id={}&max_id_type={}'

page = 1

id_type = 0

comment_count = 0

requests_count = 1

res = requests.get(url=base_url.format(id,id), headers=headers,cookies=cookie_dict)

while True:

print('parse page {}'.format(page))

page += 1

try:

data = res.json()['data']

wdata = []

max_id = data['max_id']

for c in data['data']:

comment_count += 1

row = info_parser(c)

wdata.append(info_parser(c))

if c.get('comments', None):

temp = []

for cc in c.get('comments'):

temp.append(info_parser(cc))

wdata.append(info_parser(cc))

comment_count += 1

row['comments'] = temp

print(row)

with open('{}/{}.csv'.format(comment_path, id), mode='a+', encoding='utf-8-sig', newline='') as f:

writer = csv.writer(f)

for d in wdata:

writer.writerow([d['wid'],d['time'],d['text'],d['uid'],d['username'],d['following'],d['followed'],d['gender']]) time.sleep(3)

except:

print(res.text)

id_type += 1

print('评论总数: {}'.format(comment_count)) res = requests.get(url=next_url.format(id, id, max_id,id_type), headers=headers,cookies=cookie_dict)

requests_count += 1

if requests_count%50==0:

print(id_type)

print(res.status_code)

第七步:主函数

if __name__ == '__main__':

username = "" # 用户名(注册的手机号)

password = "" # 密码

cookie_path = "Cookie.txt" # 保存cookie 的文件名称

id = '' # 爬取微博的 id

WeiboLogin(username, password, cookie_path).login()

with open('{}/{}.csv'.format(comment_path, id), mode='w', encoding='utf-8-sig', newline='') as f:

writer = csv.writer(f)

writer.writerow(['wid', 'time', 'text', 'uid', 'username', 'following', 'followed', 'gender'])

start_crawl(get_cookies(), id)

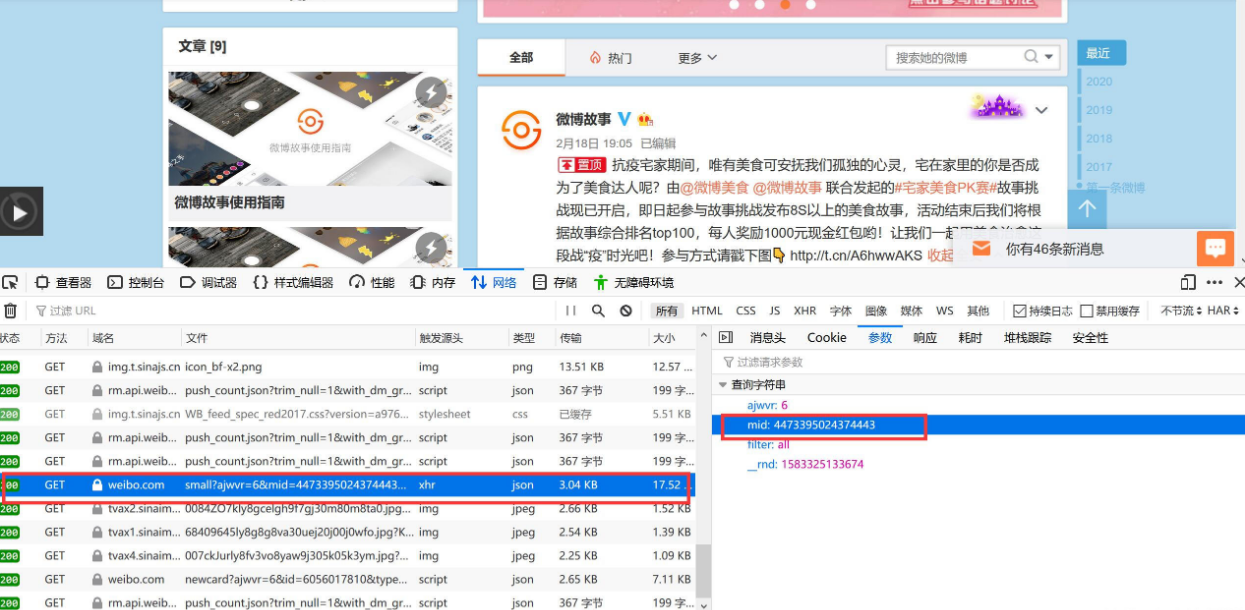

第八步:获取id

- 你需要获得想要找的微博id,那么对于小白来说怎么找id呢?

- 首先找到你想爬的微博,这里以微博故事为例,在浏览器内按下F12,并且点击评论按钮

- 点击‘网络’,找到一条像图中的get请求。查看它的参数,mid就是它的id

全文代码

为了方便大家拿去练习,以下是上文的全部代码整合!

import time

import base64

import rsa

import binascii

import requests

import re

from PIL import Image

import random

from urllib.parse import quote_plus

import http.cookiejar as cookielib

import csv

import os

comment_path = 'comment'

if not os.path.exists(comment_path):

os.mkdir(comment_path) agent = 'mozilla/5.0 (windowS NT 10.0; win64; x64) appLewEbkit/537.36 (KHTML, likE gecko) chrome/71.0.3578.98 safari/537.36'

headers = {'User-Agent': agent} class WeiboLogin(object):

"""

通过登录 weibo.com 然后跳转到 m.weibo.cn

""" # 初始化数据

def __init__(self, user, password, cookie_path):

super(WeiboLogin, self).__init__()

self.user = user

self.password = password

self.session = requests.Session()

self.cookie_path = cookie_path

# LWPCookieJar是python中管理cookie的工具,可以将cookie保存到文件,或者在文件中读取cookie数据到程序

self.session.cookies = cookielib.LWPCookieJar(filename=self.cookie_path)

self.index_url = "http://weibo.com/login.php"

self.session.get(self.index_url, headers=headers, timeout=2)

self.postdata = dict() def get_su(self):

"""

对 email 地址和手机号码 先 javascript 中 encodeURIComponent

对应 Python 3 中的是 urllib.parse.quote_plus

然后在 base64 加密后decode

"""

username_quote = quote_plus(self.user)

username_base64 = base64.b64encode(username_quote.encode("utf-8"))

return username_base64.decode("utf-8") # 预登陆获得 servertime, nonce, pubkey, rsakv

def get_server_data(self, su):

"""与原来的相比,微博的登录从 v1.4.18 升级到了 v1.4.19

这里使用了 URL 拼接的方式,也可以用 Params 参数传递的方式

"""

pre_url = "http://login.sina.com.cn/sso/prelogin.php?entry=weibo&callback=sinaSSOController.preloginCallBack&su="

pre_url = pre_url + su + "&rsakt=mod&checkpin=1&client=ssologin.js(v1.4.19)&_="

pre_url = pre_url + str(int(time.time() * 1000))

pre_data_res = self.session.get(pre_url, headers=headers)

# print("*"*50)

# print(pre_data_res.text)

# print("*" * 50)

sever_data = eval(pre_data_res.content.decode("utf-8").replace("sinaSSOController.preloginCallBack", '')) return sever_data def get_password(self, servertime, nonce, pubkey):

"""对密码进行 RSA 的加密"""

rsaPublickey = int(pubkey, 16)

key = rsa.PublicKey(rsaPublickey, 65537) # 创建公钥

message = str(servertime) + '\t' + str(nonce) + '\n' + str(self.password) # 拼接明文js加密文件中得到

message = message.encode("utf-8")

passwd = rsa.encrypt(message, key) # 加密

passwd = binascii.b2a_hex(passwd) # 将加密信息转换为16进制。

return passwd def get_cha(self, pcid):

"""获取验证码,并且用PIL打开,

1. 如果本机安装了图片查看软件,也可以用 os.subprocess 的打开验证码

2. 可以改写此函数接入打码平台。

"""

cha_url = "https://login.sina.com.cn/cgi/pin.php?r="

cha_url = cha_url + str(int(random.random() * 100000000)) + "&s=0&p="

cha_url = cha_url + pcid

cha_page = self.session.get(cha_url, headers=headers)

with open("cha.jpg", 'wb') as f:

f.write(cha_page.content)

f.close()

try:

im = Image.open("cha.jpg")

im.show()

im.close()

except Exception as e:

print(u"请到当前目录下,找到验证码后输入") def pre_login(self):

# su 是加密后的用户名

su = self.get_su()

sever_data = self.get_server_data(su)

servertime = sever_data["servertime"]

nonce = sever_data['nonce']

rsakv = sever_data["rsakv"]

pubkey = sever_data["pubkey"]

showpin = sever_data["showpin"] # 这个参数的意义待探索

password_secret = self.get_password(servertime, nonce, pubkey) self.postdata = {

'entry': 'weibo',

'gateway': '',

'from': '',

'savestate': '',

'useticket': '',

'pagerefer': "https://passport.weibo.com",

'vsnf': '',

'su': su,

'service': 'miniblog',

'servertime': servertime,

'nonce': nonce,

'pwencode': 'rsa2',

'rsakv': rsakv,

'sp': password_secret,

'sr': '1366*768',

'encoding': 'UTF-8',

'prelt': '',

"cdult": "",

'url': 'http://weibo.com/ajaxlogin.php?framelogin=1&callback=parent.sinaSSOController.feedBackUrlCallBack',

'returntype': 'TEXT' # 这里是 TEXT 和 META 选择,具体含义待探索

}

return sever_data def login(self):

# 先不输入验证码登录测试

try:

sever_data = self.pre_login()

login_url = 'https://login.sina.com.cn/sso/login.php?client=ssologin.js(v1.4.19)&_'

login_url = login_url + str(time.time() * 1000)

login_page = self.session.post(login_url, data=self.postdata, headers=headers)

ticket_js = login_page.json()

ticket = ticket_js["ticket"]

except Exception as e:

sever_data = self.pre_login()

login_url = 'https://login.sina.com.cn/sso/login.php?client=ssologin.js(v1.4.19)&_'

login_url = login_url + str(time.time() * 1000)

pcid = sever_data["pcid"]

self.get_cha(pcid)

self.postdata['door'] = input(u"请输入验证码")

login_page = self.session.post(login_url, data=self.postdata, headers=headers)

ticket_js = login_page.json()

ticket = ticket_js["ticket"]

# 以下内容是 处理登录跳转链接

save_pa = r'==-(\d+)-'

ssosavestate = int(re.findall(save_pa, ticket)[0]) + 3600 * 7

jump_ticket_params = {

"callback": "sinaSSOController.callbackLoginStatus",

"ticket": ticket,

"ssosavestate": str(ssosavestate),

"client": "ssologin.js(v1.4.19)",

"_": str(time.time() * 1000),

}

jump_url = "https://passport.weibo.com/wbsso/login"

jump_headers = {

"Host": "passport.weibo.com",

"Referer": "https://weibo.com/",

"User-Agent": headers["User-Agent"]

}

jump_login = self.session.get(jump_url, params=jump_ticket_params, headers=jump_headers)

uuid = jump_login.text uuid_pa = r'"uniqueid":"(.*?)"'

uuid_res = re.findall(uuid_pa, uuid, re.S)[0]

web_weibo_url = "http://weibo.com/%s/profile?topnav=1&wvr=6&is_all=1" % uuid_res

weibo_page = self.session.get(web_weibo_url, headers=headers) # print(weibo_page.content.decode("utf-8") Mheaders = {

"Host": "login.sina.com.cn",

"User-Agent": agent

} # m.weibo.cn 登录的 url 拼接

_rand = str(time.time())

mParams = {

"url": "https://m.weibo.cn/",

"_rand": _rand,

"gateway": "",

"service": "sinawap",

"entry": "sinawap",

"useticket": "",

"returntype": "META",

"sudaref": "",

"_client_version": "0.6.26",

}

murl = "https://login.sina.com.cn/sso/login.php"

mhtml = self.session.get(murl, params=mParams, headers=Mheaders)

mhtml.encoding = mhtml.apparent_encoding

mpa = r'replace\((.*?)\);'

mres = re.findall(mpa, mhtml.text) # 关键的跳转步骤,这里不出问题,基本就成功了。

Mheaders["Host"] = "passport.weibo.cn"

self.session.get(eval(mres[0]), headers=Mheaders)

mlogin = self.session.get(eval(mres[0]), headers=Mheaders)

# print(mlogin.status_code)

# 进过几次 页面跳转后,m.weibo.cn 登录成功,下次测试是否登录成功

Mheaders["Host"] = "m.weibo.cn"

Set_url = "https://m.weibo.cn"

pro = self.session.get(Set_url, headers=Mheaders)

pa_login = r'isLogin":true,'

login_res = re.findall(pa_login, pro.text)

# print(login_res) # 可以通过 session.cookies 对 cookies 进行下一步相关操作

self.session.cookies.save()

# print("*"*50)

# print(self.cookie_path) def get_cookies():

# 加载cookie

cookies = cookielib.LWPCookieJar("Cookie.txt")

cookies.load(ignore_discard=True, ignore_expires=True)

# 将cookie转换成字典

cookie_dict = requests.utils.dict_from_cookiejar(cookies)

return cookie_dict def info_parser(data):

id,time,text = data['id'],data['created_at'],data['text']

user = data['user']

uid,username,following,followed,gender = \

user['id'],user['screen_name'],user['follow_count'],user['followers_count'],user['gender']

return {

'wid':id,

'time':time,

'text':text,

'uid':uid,

'username':username,

'following':following,

'followed':followed,

'gender':gender

} def start_crawl(cookie_dict,id):

base_url = 'https://m.weibo.cn/comments/hotflow?id={}&mid={}&max_id_type=0'

next_url = 'https://m.weibo.cn/comments/hotflow?id={}&mid={}&max_id={}&max_id_type={}'

page = 1

id_type = 0

comment_count = 0

requests_count = 1

res = requests.get(url=base_url.format(id,id), headers=headers,cookies=cookie_dict)

while True:

print('parse page {}'.format(page))

page += 1

try:

data = res.json()['data']

wdata = []

max_id = data['max_id']

for c in data['data']:

comment_count += 1

row = info_parser(c)

wdata.append(info_parser(c))

if c.get('comments', None):

temp = []

for cc in c.get('comments'):

temp.append(info_parser(cc))

wdata.append(info_parser(cc))

comment_count += 1

row['comments'] = temp

print(row)

with open('{}/{}.csv'.format(comment_path, id), mode='a+', encoding='utf-8-sig', newline='') as f:

writer = csv.writer(f)

for d in wdata:

writer.writerow([d['wid'],d['time'],d['text'],d['uid'],d['username'],d['following'],d['followed'],d['gender']]) time.sleep(3)

except:

print(res.text)

id_type += 1

print('评论总数: {}'.format(comment_count)) res = requests.get(url=next_url.format(id, id, max_id,id_type), headers=headers,cookies=cookie_dict)

requests_count += 1

if requests_count%50==0:

print(id_type)

print(res.status_code) if __name__ == '__main__':

username ="" # 用户名(注册的手机号)

password = "" # 密码

cookie_path = "Cookie.txt" # 保存cookie 的文件名称

id = '' # 爬取微博的 id

WeiboLogin(username, password, cookie_path).login()

with open('{}/{}.csv'.format(comment_path, id), mode='w', encoding='utf-8-sig', newline='') as f:

writer = csv.writer(f)

writer.writerow(['wid', 'time', 'text', 'uid', 'username', 'following', 'followed', 'gender'])

start_crawl(get_cookies(), id)

Python爬虫抓取微博评论的更多相关文章

- 一篇文章教会你使用Python定时抓取微博评论

[Part1--理论篇] 试想一个问题,如果我们要抓取某个微博大V微博的评论数据,应该怎么实现呢?最简单的做法就是找到微博评论数据接口,然后通过改变参数来获取最新数据并保存.首先从微博api寻找抓取评 ...

- python 爬虫抓取心得

quanwei9958 转自 python 爬虫抓取心得分享 urllib.quote('要编码的字符串') 如果你要在url请求里面放入中文,对相应的中文进行编码的话,可以用: urllib.quo ...

- Python爬虫----抓取豆瓣电影Top250

有了上次利用python爬虫抓取糗事百科的经验,这次自己动手写了个爬虫抓取豆瓣电影Top250的简要信息. 1.观察url 首先观察一下网址的结构 http://movie.douban.com/to ...

- Python爬虫抓取东方财富网股票数据并实现MySQL数据库存储

Python爬虫可以说是好玩又好用了.现想利用Python爬取网页股票数据保存到本地csv数据文件中,同时想把股票数据保存到MySQL数据库中.需求有了,剩下的就是实现了. 在开始之前,保证已经安装好 ...

- python爬虫抓取哈尔滨天气信息(静态爬虫)

python 爬虫 爬取哈尔滨天气信息 - http://www.weather.com.cn/weather/101050101.shtml 环境: windows7 python3.4(pip i ...

- 用python+selenium抓取微博24小时热门话题的前15个并保存到txt中

抓取微博24小时热门话题的前15个,抓取的内容请保存至txt文件中,需要抓取排行.话题和阅读数 #coding=utf-8 from selenium import webdriver import ...

- Python抓取微博评论(二)

对于新浪微博评论的抓取,首篇做的时候有些考虑不周,然后现在改正了一些地方,因为有人问,抓取评论的时候“爬前50页的热评,或者最新评论里的前100页“,这样的数据看了看,好像每条微博的评论都只能抓取到前 ...

- Python抓取微博评论

本人是张杰的小迷妹,所以用杰哥的微博为例,之前一直看的是网页版,然后在知乎上看了一个抓取沈梦辰的微博评论的帖子,然后得到了这样的网址 然后就用m.weibo.cn进行网站的爬取,里面的微博和每一条微博 ...

- 如何科学地蹭热点:用python爬虫获取热门微博评论并进行情感分析

前言:本文主要涉及知识点包括新浪微博爬虫.python对数据库的简单读写.简单的列表数据去重.简单的自然语言处理(snowNLP模块.机器学习).适合有一定编程基础,并对python有所了解的盆友阅读 ...

随机推荐

- 吴裕雄--天生自然C语言开发:位域

struct { unsigned int widthValidated; unsigned int heightValidated; } status; struct { unsigned ; un ...

- Linux 杀死进程方法大全(kill,killall)

杀死进程最安全的方法是单纯使用kill命令,不加修饰符,不带标志. 首先使用ps -ef命令确定要杀死进程的PID,然后输入以下命令: # kill -pid 注释:标准的kill命令通常 ...

- Java面试题2-附答案

JVM的内存结构 根据 JVM 规范,JVM 内存共分为虚拟机栈.堆.方法区.程序计数器.本地方法栈五个部分. 1.Java虚拟机栈: 线程私有:每个方法在执行的时候会创建一个栈帧,存储了局部变量表, ...

- EXAM-2018-7-29

EXAM-2018-7-29 未完成 [ ] H [ ] A D 莫名TLE 不在循环里写strlen()就行了 F 相减特判 水题 J 模拟一下就可以发现规律,o(n) K 每个数加一减一不变,用m ...

- django框架中的cookie与session

cookie因为http是一个无状态协议,无法记录用户上一步的操作,所以需要状态保持.cookie和session的区别:1.cookie是保存在浏览器本地的,所以相对不安全.cookie是4k的大小 ...

- deeplearning.ai 构建机器学习项目 Week 1 机器学习策略 I

这门课是讲一些分析机器学习问题的方法,如何更快速高效的优化机器学习系统,以及NG自己的工程经验和教训. 1. 正交化(Othogonalization) 设计机器学习系统时需要面对一个问题是:可以尝试 ...

- linux centos的安装及一些相关知识的整理

相关知识点 ***网桥:主机和虚拟机之间使用"桥接"网络组网 VMware 0 ***Net适配器:把本地网中虚拟机的ip地址转换为主机的外部网络地址 ***仅主机适 ...

- python2查找匹配数据及类型转换

判断一个字符是否包含在另一个字符串中,如果包含,但是数据类型不同,需要进行数据类型转换 下面这个是针对python2

- OpenCV 输入输出XML和YAML文件

#include <opencv2/core/core.hpp> #include <iostream> #include <string> using names ...

- 深入 Java 调试体系: 第 1 部分,JPDA 体系概览

JPDA 概述 所有的程序员都会遇到 bug,对于运行态的错误,我们往往需要一些方法来观察和测试运行态中的环境.在 Java 程序中,最简单的,您是否尝试过使用 System.out.println( ...