Kubernetes 1.17.2 with Ceph 13.2.8: static and dynamic storage, with a hands-on experiment

Check the cluster status

Kubernetes cluster

# kubectl get nodes

NAME         STATUS                     ROLES    AGE   VERSION
20.0.0.201   Ready,SchedulingDisabled   master   31h   v1.17.2
20.0.0.202   Ready,SchedulingDisabled   master   31h   v1.17.2
20.0.0.203   Ready,SchedulingDisabled   master   31h   v1.17.2
20.0.0.204   Ready                      node     31h   v1.17.2
20.0.0.205   Ready                      node     31h   v1.17.2
20.0.0.206   Ready                      node     31h   v1.17.2

# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-6cf5b744d7-rxt86 / Running 25h

kube-system calico-node-25dlc / Running 30h

kube-system calico-node-49q4n / Running 30h

kube-system calico-node-4gmcp / Running 30h

kube-system calico-node-gt4bt / Running 30h

kube-system calico-node-svcdj / Running 30h

kube-system calico-node-tkrqt / Running 30h

kube-system coredns-76b74f549-dkjxd / Running 25h

kube-system dashboard-metrics-scraper-64c8c7d847-dqbx2 / Running 24h

kube-system kubernetes-dashboard-85c79db674-bnvlk / Running 24h

kube-system metrics-server-6694c7dd66-hsbzb / Running 25h

kube-system traefik-ingress-controller-m8jf9 / Running 25h

kube-system traefik-ingress-controller-r7cgl / Running 25h

Ceph cluster

# ceph -s

cluster:

id: ed4d59da-c861-4da0-bbe2-8dfdea5be796

health: HEALTH_WARN

clock skew detected on mon.bs-k8s-gitlab, mon.bs-k8s-ceph

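Cause: either the NTP service is not running on a mon node, or the clock-drift threshold that Ceph allows between mons is too small. Troubleshoot in that order: rule out the first cause before adjusting the second.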

# cp ceph.conf ceph.conf-`date +%F`

# diff ceph.conf ceph.conf-`date +%F`

,18d16

< mon clock drift allowed =

< mon clock drift warn backoff =

# ceph-deploy --overwrite-conf config push bs-k8s-ceph bs-k8s-harbor bs-k8s-gitlab

Restart the mon service

# systemctl restart ceph-mon.target

# ceph -s

cluster:

id: ed4d59da-c861-4da0-bbe2-8dfdea5be796

health: HEALTH_OK
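The triage order described above can be sketched as a small script. This is only an illustration under assumptions: it assumes chrony is the time service (with ntpd, `ntpq -p` plays the same role), and `skew_exceeds` and `check_time_sync` are hypothetical helper names. The 0.05 s figure is Ceph's default for `mon clock drift allowed`, not the value set in this cluster.

```shell
#!/bin/sh
# Sketch of the two-step triage: time sync first, then the mon drift threshold.

# Return 0 (true) when |offset| is larger than the allowed drift (both in seconds).
skew_exceeds() {
    awk -v off="$1" -v max="$2" 'BEGIN { if (off < 0) off = -off; exit !(off > max) }'
}

# Step 1: is the time service running and synchronised on this mon node?
# (Defined for reference; call it on each mon node.)
check_time_sync() {
    systemctl is-active chronyd && chronyc tracking
}

# Step 2: only when step 1 is clean, compare the measured offset with the threshold.
if skew_exceeds 0.08 0.05; then echo "0.08s: skew warning expected"; fi
if ! skew_exceeds 0.02 0.05; then echo "0.02s: within threshold"; fi
```

Raising the threshold only hides a real clock problem, which is why the time service is checked first.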

Both the Ceph cluster and the Kubernetes cluster are confirmed healthy.

Install the Ceph client on the Kubernetes cluster

bs-k8s-ceph

# scp /etc/yum.repos.d/ceph.repo 20.0.0.201:/etc/yum.repos.d/

# scp /etc/yum.repos.d/ceph.repo 20.0.0.202:/etc/yum.repos.d/

# scp /etc/yum.repos.d/ceph.repo 20.0.0.203:/etc/yum.repos.d/

# scp /etc/yum.repos.d/ceph.repo 20.0.0.204:/etc/yum.repos.d/

# scp /etc/yum.repos.d/ceph.repo 20.0.0.205:/etc/yum.repos.d/

# scp /etc/yum.repos.d/ceph.repo 20.0.0.206:/etc/yum.repos.d/
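The six copies above can also be written as one loop. A minimal sketch, assuming passwordless SSH from bs-k8s-ceph to every node; `push_repo` and the `DRY_RUN` switch are hypothetical names introduced here so the commands can be reviewed before they run.

```shell
#!/bin/sh
# Sketch: push ceph.repo to every Kubernetes node in one loop.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to run them.
NODES="20.0.0.201 20.0.0.202 20.0.0.203 20.0.0.204 20.0.0.205 20.0.0.206"

push_repo() {
    for ip in $NODES; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "scp /etc/yum.repos.d/ceph.repo ${ip}:/etc/yum.repos.d/"
        else
            scp /etc/yum.repos.d/ceph.repo "${ip}:/etc/yum.repos.d/"
        fi
    done
}

push_repo
```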

Install the Ceph client (run on the k8s cluster nodes)

# yum install -y ceph

Copy the cluster configuration and the admin keyring

[root@bs-k8s-cephlab ceph]# scp ceph.conf ceph.client.admin.keyring 20.0.0.206:/etc/ceph/

# ceph -s

cluster:

id: ed4d59da-c861-4da0-bbe2-8dfdea5be796

health: HEALTH_OK

The client deployment is complete.

Deploy a commonly used test app

bs-k8s-ceph

Create an RBD pool for the app

# ceph osd pool create webapp
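On Luminous and later releases a new pool also needs an application tag, otherwise `ceph -s` reports a health warning about it. The sketch below prints the commands in order rather than running them. `PG_NUM` is an assumed placeholder, since the pg count used in the original command is not shown, and `pool_setup_cmds` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: create the pool and tag it for RBD use.
# PG_NUM is an assumption; pick a value that suits the number of OSDs.
POOL=webapp
PG_NUM=${PG_NUM:-64}

pool_setup_cmds() {
    echo "ceph osd pool create $POOL $PG_NUM"
    echo "rbd pool init $POOL"        # tags the pool with the rbd application
    echo "ceph osd pool ls detail"    # confirm the pool and its application tag
}

pool_setup_cmds
```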

Authorize the client.webapp user that WordPress will use

# ceph auth get-or-create client.webapp mon 'allow r' osd 'allow class-read, allow rwx pool=webapp' -o ceph.client.webapp.keyring

# ceph auth get client.webapp

exported keyring for client.webapp

[client.webapp]

key = AQDDcnFeMcbnMxAA7hDGAmEAgpmrY8Z+ATFG+A==

caps mon = "allow r"

caps osd = "allow class-read, allow rwx pool=webapp"

Mon capabilities: r, w, x.

OSD capabilities: r, w, x, class-read, class-write.

Create the Ceph secrets

bs-k8s-master01

# ceph auth get-key client.admin | base64 # get the client.admin key and base64-encode it

QVFBdG1IQmVvdG5zTGhBQVFpa214WVNUZFdOcWVydGgyVVBlL0E9PQ==

# ceph auth get-key client.webapp | base64 # get the client.webapp key and base64-encode it

QVFERGNuRmVNY2JuTXhBQTdoREdBbUVBZ3Btclk4WitBVEZHK0E9PQ==
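A Secret's `data` field must hold the base64 form of the exact key bytes, so a stray newline produces a value that Ceph rejects. The sketch below reproduces the encoding of the client.webapp key shown earlier and checks the round trip; `encode_key` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: encode the key without a trailing newline and verify the round trip.
KEY='AQDDcnFeMcbnMxAA7hDGAmEAgpmrY8Z+ATFG+A=='   # client.webapp key shown above

encode_key() {
    # printf '%s' writes the key exactly, with no newline appended.
    printf '%s' "$1" | base64
}

ENCODED=$(encode_key "$KEY")
echo "$ENCODED"

# Decoding must give back the original key byte for byte.
[ "$(printf '%s' "$ENCODED" | base64 --decode)" = "$KEY" ] && echo "round trip OK"

# `echo "$KEY" | base64` would also encode a newline and yield a different string.
```

The printed value matches the string used for `ceph-myweb-secret`.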

# pwd

/data/k8s/app/wordpress

# cat namespace.yaml

##########################################################################

#Author: zisefeizhu

#QQ: ********

#Date: --

#FileName: namespace.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): All rights reserved

###########################################################################

apiVersion: v1
kind: Namespace
metadata:
  name: myweb
  labels:
    name: myweb

# kubectl apply -f namespace.yaml

namespace/myweb created

# cat ceph-wordpress-secret.yaml

##########################################################################

#Author: zisefeizhu

#QQ: ********

#Date: --

#FileName: ceph-jenkins-secret.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): All rights reserved

###########################################################################

apiVersion: v1
kind: Secret
metadata:
  name: ceph-admin-secret
  namespace: myweb
data:
  key: QVFBdG1IQmVvdG5zTGhBQVFpa214WVNUZFdOcWVydGgyVVBlL0E9PQ==
type: kubernetes.io/rbd
---
apiVersion: v1
kind: Secret
metadata:
  name: ceph-myweb-secret
  namespace: myweb
data:
  key: QVFERGNuRmVNY2JuTXhBQTdoREdBbUVBZ3Btclk4WitBVEZHK0E9PQ==
type: kubernetes.io/rbd

# kubectl apply -f ceph-wordpress-secret.yaml

secret/ceph-admin-secret created

secret/ceph-myweb-secret created

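When a StorageClass provisions a PV dynamically, the controller-manager creates the image on Ceph itself, so the rbd command must be available to it. If the cluster was deployed with kubeadm, the official controller-manager image does not ship the rbd command, so we bring in the external rbd-provisioner instead.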

# cat external-storage-rbd-provisioner.yaml

##########################################################################

#Author: zisefeizhu

#QQ: ********

#Date: --

#FileName: external-storage-rbd-provisioner.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): All rights reserved

###########################################################################

apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-provisioner
  namespace: myweb
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["kube-dns"]
    verbs: ["list", "get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: myweb
roleRef:
  kind: ClusterRole
  name: rbd-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rbd-provisioner
  namespace: myweb
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbd-provisioner
  namespace: myweb
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: myweb
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rbd-provisioner
  namespace: myweb
spec:
  replicas:
  selector:
    matchLabels:
      app: rbd-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: rbd-provisioner
    spec:
      containers:
        - name: rbd-provisioner
          image: "harbor.linux.com/rbd/rbd-provisioner:latest"
          imagePullPolicy: IfNotPresent
          env:
            - name: PROVISIONER_NAME
              value: ceph.com/rbd
      imagePullSecrets:
        - name: k8s-harbor-login
      serviceAccount: rbd-provisioner
      nodeSelector: ## node selector: start only on nodes that carry this label
        rbd: "true"

Label the node

# kubectl label nodes 20.0.0.204 rbd=true

# kubectl get nodes --show-labels

20.0.0.204 Ready node 46h v1.17.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,dashboard=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=20.0.0.204,kubernetes.io/os=linux,kubernetes.io/role=node,metricsscraper=true,metricsserver=true,rbd=true
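Because the Deployment uses a nodeSelector, the pod stays Pending until some node carries `rbd=true`. On a live cluster `kubectl get nodes -l rbd=true` answers that directly. The sketch below does the same check against a label string copied from `--show-labels` output (shortened here); `has_label` is a hypothetical helper, not a kubectl feature.

```shell
#!/bin/sh
# Sketch: check a --show-labels style label list for one key=value pair.
has_label() {
    # $1: comma-separated labels, $2: key=value to look for
    case ",$1," in
        *",$2,"*) return 0 ;;
        *)        return 1 ;;
    esac
}

LABELS='kubernetes.io/hostname=20.0.0.204,kubernetes.io/os=linux,kubernetes.io/role=node,rbd=true'
if has_label "$LABELS" 'rbd=true'; then
    echo "node can host rbd-provisioner"
else
    echo "label missing: the pod would stay Pending"
fi
```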

# kubectl apply -f external-storage-rbd-provisioner.yaml

serviceaccount/rbd-provisioner created

clusterrole.rbac.authorization.k8s.io/rbd-provisioner created

clusterrolebinding.rbac.authorization.k8s.io/rbd-provisioner created

role.rbac.authorization.k8s.io/rbd-provisioner created

rolebinding.rbac.authorization.k8s.io/rbd-provisioner created

deployment.apps/rbd-provisioner created

# kubectl get pods -n myweb -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

rbd-provisioner-9cf46c856-b9pm9 / Running 36s 172.20.46.83 20.0.0.204 <none> <none>

# cat ceph-wordpress-storageclass.yaml

##########################################################################

#Author: zisefeizhu

#QQ: ********

#Date: --

#FileName: ceph-jenkins-storageclass.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): All rights reserved

###########################################################################

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ceph-wordpress
  namespace: myweb
  annotations:
    storageclass.kubernetes.io/is-default-class: "false"
provisioner: ceph.com/rbd
reclaimPolicy: Retain
parameters:
  monitors: 20.0.0.207:,20.0.0.208:,20.0.0.209:
  adminId: admin
  adminSecretName: ceph-admin-secret
  adminSecretNamespace: myweb
  pool: webapp
  fsType: xfs
  userId: webapp
  userSecretName: ceph-myweb-secret
  imageFormat: ""
  imageFeatures: "layering"

# kubectl apply -f ceph-wordpress-storageclass.yaml

storageclass.storage.k8s.io/ceph-wordpress created

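Notes:

storageclass.kubernetes.io/is-default-class: set this annotation to "true" to mark the StorageClass as the default. Any other value, or a missing annotation, is treated as false. The default StorageClass is listed with (default) after its name.

monitors: Ceph monitors, comma-separated. Required.

adminId: Ceph client ID that is able to create images in the pool. Defaults to "admin".

adminSecretNamespace: namespace of the admin secret. Defaults to "default".

adminSecretName: name of the Secret for adminId. Required. The Secret must have type "kubernetes.io/rbd".

pool: Ceph RBD pool. Defaults to "rbd".

userId: Ceph client ID used to map the RBD image. Defaults to the same value as adminId.

userSecretName: name of the Ceph Secret that userId uses to map the RBD image. It must exist in the same namespace as the PVC. Required.

fsType: a filesystem type supported by Kubernetes. Defaults to "ext4".

imageFormat: Ceph RBD image format, "1" or "2". Older documentation lists "1" as the default; recent Kubernetes releases default to "2".

imageFeatures: optional, and only usable when imageFormat is "2". The only feature currently supported is layering. Defaults to "", with no features enabled.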
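Three of the parameters in these notes are required, and a missing one only shows up later as a PVC stuck in Pending. A minimal sketch of a pre-flight check follows. It is a plain text match rather than a YAML parser, and `check_sc_params` is a hypothetical helper, not part of kubectl or Ceph.

```shell
#!/bin/sh
# Sketch: check a StorageClass manifest for the parameters marked as required.
check_sc_params() {
    file=$1
    missing=0
    for key in monitors adminSecretName userSecretName; do
        # The key must be present and followed by a non-empty value.
        if ! grep -Eq "^[[:space:]]*${key}:[[:space:]]*[^[:space:]]" "$file"; then
            echo "missing required parameter: ${key}"
            missing=1
        fi
    done
    return "$missing"
}

# Usage (not executed here):
#   check_sc_params ceph-wordpress-storageclass.yaml && \
#       kubectl apply -f ceph-wordpress-storageclass.yaml
```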

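Create the PVCs

Dynamic volume provisioning is built on the StorageClass API object from the storage.k8s.io API group.

Users request dynamically provisioned storage by naming a storage class in their PersistentVolumeClaim.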

# cat mysql-pvc.yaml

##########################################################################

#Author: zisefeizhu

#QQ: ********

#Date: --

#FileName: mysql-pvc.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): All rights reserved

###########################################################################

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
  namespace: myweb
  labels:
    app: wordpress
spec:
  storageClassName: ceph-wordpress
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi

# cat wordpress-pvc.yaml

##########################################################################

#Author: zisefeizhu

#QQ: ********

#Date: --

#FileName: wordpress-pvc.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): All rights reserved

###########################################################################

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wp-pv-claim
  namespace: myweb
  labels:
    app: wordpress
spec:
  storageClassName: ceph-wordpress
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi

# kubectl apply -f mysql-pvc.yaml

persistentvolumeclaim/mysql-pv-claim created

# kubectl apply -f wordpress-pvc.yaml

persistentvolumeclaim/wp-pv-claim created

# kubectl get pvc,pv -n myweb

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/mysql-pv-claim Bound pvc-cc32b750-e602-4d8f-a054-a9941f971315 2Gi RWO ceph-wordpress 3m24s

persistentvolumeclaim/wp-pv-claim Bound pvc-113e27f5--4f90-80d6-b45cfcddca34 2Gi RWO ceph-wordpress 51s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-113e27f5--4f90-80d6-b45cfcddca34 2Gi RWO Retain Bound myweb/wp-pv-claim ceph-wordpress 50s

persistentvolume/pvc-cc32b750-e602-4d8f-a054-a9941f971315 2Gi RWO Retain Bound myweb/mysql-pv-claim ceph-wordpress 3m17s

As the output shows, the PVs were created dynamically.
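Binding is not instantaneous (the two claims above took a few seconds each), so a script that goes on to deploy pods may want to wait for it. A minimal polling sketch follows; `wait_for_pvc_bound` is a hypothetical helper, and the `KUBECTL` variable is an assumption added so the command can be swapped for a wrapper.

```shell
#!/bin/sh
# Sketch: poll a PVC until the provisioner has bound it, or give up.
KUBECTL=${KUBECTL:-kubectl}

wait_for_pvc_bound() {
    ns=$1; pvc=$2; tries=${3:-30}
    while [ "$tries" -gt 0 ]; do
        # .status.phase is Pending until a PV has been provisioned and bound.
        phase=$("$KUBECTL" -n "$ns" get pvc "$pvc" -o jsonpath='{.status.phase}' 2>/dev/null)
        if [ "$phase" = "Bound" ]; then
            echo "$pvc is Bound"
            return 0
        fi
        tries=$((tries - 1))
        sleep 2
    done
    echo "$pvc is still ${phase:-unknown}" >&2
    return 1
}

# Usage (not executed here):
#   wait_for_pvc_bound myweb mysql-pv-claim && wait_for_pvc_bound myweb wp-pv-claim
```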

Deploy WordPress and MySQL with persistent volumes

Create a Secret for the MySQL password; for a change, create this one from the command line.

# cat wordpress-mysql-password.sh

##########################################################################

#Author: zisefeizhu

#QQ: ********

#Date: --

#FileName: myweb-mysql-password.sh

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): All rights reserved

##########################################################################

#!/bin/bash

kubectl -n myweb create secret generic mysql-pass --from-literal=password=zisefeizhu

# sh -x wordpress-mysql-password.sh

+ kubectl -n myweb create secret generic mysql-pass --from-literal=password=zisefeizhu

secret/mysql-pass created

Prepare the resource manifests

# cat mysql-deployment.yaml

##########################################################################

#Author: zisefeizhu

#QQ: ********

#Date: --

#FileName: mysql-deployment.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): All rights reserved

###########################################################################

apiVersion: v1
kind: Service
metadata:
  name: wordpress-mysql
  namespace: myweb
  labels:
    app: wordpress
spec:
  ports:
    - port:
  selector:
    app: wordpress
    tier: mysql
  clusterIP: None
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress-mysql
  namespace: myweb
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: mysql
    spec:
      imagePullSecrets:
        - name: k8s-harbor-login
      containers:
        - image: harbor.linux.com/myweb/mysql:5.6
          name: mysql
          env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-pass
                  key: password
          ports:
            - containerPort:
              name: mysql
          volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
      volumes:
        - name: mysql-persistent-storage
          persistentVolumeClaim:
            claimName: mysql-pv-claim
      nodeSelector: ## node selector: start only on nodes that carry this label
        wordpress-mysql: "true"

Label the node. According to the plan, the label goes on bs-k8s-node03.

# kubectl label nodes 20.0.0.206 wordpress-mysql=true

node/20.0.0.206 labeled

# kubectl apply -f mysql-deployment.yaml

service/wordpress-mysql created

deployment.apps/wordpress-mysql created

# kubectl get pods -n myweb -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

rbd-provisioner-9cf46c856-b9pm9 / Running 27m 172.20.46.83 20.0.0.204 <none> <none>

wordpress-mysql-6d7bd496b4-w4wlc / Running 4m39s 172.20.208.3 20.0.0.206 <none> <none>

MySQL was deployed successfully.

# cat wordpress.yaml

##########################################################################

#Author: zisefeizhu

#QQ: ********

#Date: --

#FileName: wordpress.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): All rights reserved

###########################################################################

apiVersion: v1
kind: Service
metadata:
  name: wordpress
  namespace: myweb
  labels:
    app: wordpress
spec:
  ports:
    - port:
  selector:
    app: wordpress
    tier: frontend
  type: LoadBalancer
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  namespace: myweb
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: frontend
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: frontend
    spec:
      imagePullSecrets:
        - name: k8s-harbor-login
      containers:
        - image: harbor.linux.com/myweb/wordpress:4.8
          name: wordpress
          env:
            - name: WORDPRESS_DB_HOST
              value: wordpress-mysql
            - name: WORDPRESS_DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-pass
                  key: password
          ports:
            - containerPort:
              name: wordpress
          volumeMounts:
            - name: wordpress-persistent-storage
              mountPath: /var/www/html
      volumes:
        - name: wordpress-persistent-storage
          persistentVolumeClaim:
            claimName: wp-pv-claim
      nodeSelector: ## node selector: start only on nodes that carry this label
        wordpress-wordpress: "true"

# kubectl apply -f wordpress.yaml

service/wordpress created

deployment.apps/wordpress created

# kubectl get pods -n myweb -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

rbd-provisioner-9cf46c856-b9pm9 / Running 59m 172.20.46.83 20.0.0.204 <none> <none>

wordpress-6677ff7bd-sc45d / Running 10m 172.20.208.18 20.0.0.206 <none> <none>

wordpress-mysql-6d7bd496b4-w4wlc / Running 36m 172.20.208.10 20.0.0.206 <none> <none>

Deploy the proxy

# cat wordpress-ingressroute.yaml

##########################################################################

#Author: zisefeizhu

#QQ: ********

#Date: --

#FileName: myweb--ingressroute.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): All rights reserved

###########################################################################

apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: wordpress
  namespace: myweb
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`wordpress.linux.com`)
      kind: Rule
      services:
        - name: wordpress
          port:

# kubectl apply -f wordpress-ingressroute.yaml

ingressroute.traefik.containo.us/wordpress created

代理成功

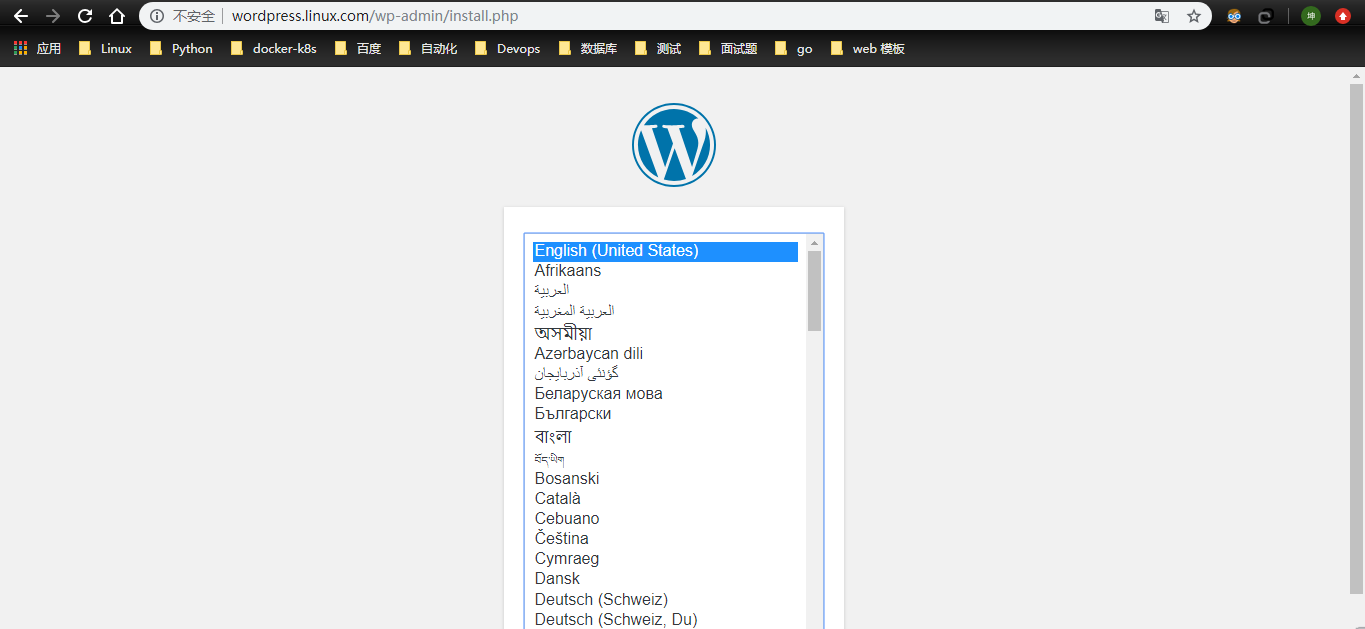

看样子是OK了,按照步骤next吧

测试

思路:删除mysql-deployment.yaml 即只保留mysql pvc 然后重启mysql-deployment.yaml,web访问看能否成功。

# kubectl delete -f mysql-deployment.yaml

service "wordpress-mysql" deleted

deployment.apps "wordpress-mysql" deleted

# kubectl get pvc -n myweb

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-cc32b750-e602-4d8f-a054-a9941f971315 2Gi RWO ceph-wordpress 63m

wp-pv-claim Bound pvc-113e27f5--4f90-80d6-b45cfcddca34 2Gi RWO ceph-wordpress 61m

# kubectl apply -f mysql-deployment.yaml

service/wordpress-mysql created

deployment.apps/wordpress-mysql created

# kubectl get pods -n myweb

NAME READY STATUS RESTARTS AGE

rbd-provisioner-9cf46c856-b9pm9 / Running 71m

wordpress-6677ff7bd-sc45d / Running 22m

wordpress-mysql-6d7bd496b4-62dps / Running 18s

OK. So when the cluster load gets too high, we can stop the pods first and keep only the PVCs.
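Deleting the manifest also removes the Service. A gentler variant of the same idea is to scale the Deployments to zero, which leaves the Services, Secrets, PVCs and PVs in place. A minimal sketch, assuming one replica per Deployment when scaling back up; `scale_all` and the `DRY_RUN` switch are hypothetical names, and the commands are only printed by default.

```shell
#!/bin/sh
# Sketch: stop and restart the workloads with `kubectl scale`.
# Prints the commands unless DRY_RUN=0.
NS=myweb
DEPLOYMENTS="wordpress wordpress-mysql"

scale_all() {
    replicas=$1
    for d in $DEPLOYMENTS; do
        cmd="kubectl -n $NS scale deployment $d --replicas=$replicas"
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "$cmd"
        else
            $cmd
        fi
    done
}

scale_all 0   # park the pods while the cluster is under load
scale_all 1   # bring them back; the same PVCs are mounted again
```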
