HiBench成长笔记——(7) 阅读《The HiBench Benchmark Suite: Characterization of the MapReduce-Based Data Analysis》

《The HiBench Benchmark Suite: Characterization of the MapReduce-Based Data Analysis》内容精选

We then evaluate and characterize the Hadoop framework using HiBench, in terms of speed (i.e., job running time), throughput (i.e., the number of tasks completed per minute), HDFS bandwidth, system resource (e.g., CPU, memory and I/O) utilizations, and data access patterns.

关键内容:speed、 throughput、HDFS bandwidth、 system resource、data access patterns

the last one is an enhanced version of the DFSIO benchmark that we have extended to evaluate the aggregated bandwidth delivered by HDFS.

关键内容:evaluate the aggregated bandwidth delivered by HDFS

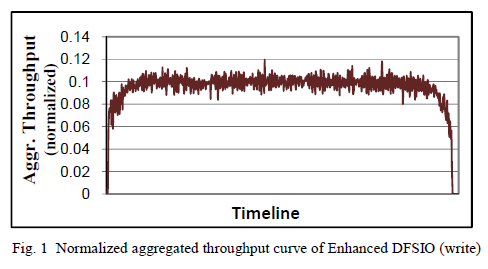

As shown in Fig. 1, the aggregated throughput curve has a warm-up period and a cool-down period where map tasks are launching up and shutting down respectively. Between these two periods, there is a steady period where the aggregated throughput values are stable across different time slots. Therefore, the Enhanced DFSIO workload computes the aggregated HDFS throughput by averaging the throughput value of each time slot in the steady period. In Enhanced DFSIO, when the number of concurrent map tasks at a time slot is above a specified percentage (e.g., 50% is used in our benchmarking) of the total map task slots in the Hadoop cluster, the slot is considered to be in the steady period.

关键内容:warm-up period、cool-down period、steady period、computes the aggregated HDFS throughput by averaging the throughput value of each time slot in the steady period

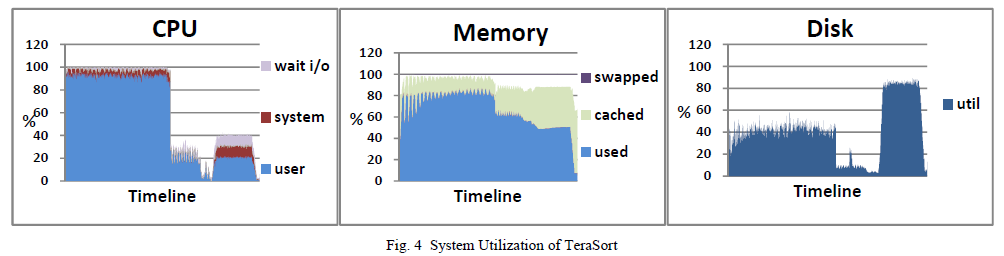

In essence, the TeraSort workload is similar to Sort and therefore is I/O bound in nature. However, we have compressed its shuffle data (i.e., map output) in the experiment so as to minimize the disk and network I/O during shuffle, as shown in Table III. Consequently, TeraSort have very high CPU utilization and moderate disk I/O during the map stage and shuffle phases, and moderate CPU utilization and heavy disk I/O during the reduce phases, as shown in Fig. 4.

关键内容:map stage、shuffle phases、reduce phases、high、moderate、CPU utilization、disk I/O

The best performance (total running time) of Hadoop workloads is usually obtained by accurately estimating the size of the map output, shuffle data and reduce input data, and properly allocating memory buffers to prevent multiple spilling (to disk) of those data.

关键内容:estimating the size of the map output、 shuffle data and reduce input data、allocating memory buffers

HiBench成长笔记——(7) 阅读《The HiBench Benchmark Suite: Characterization of the MapReduce-Based Data Analysis》的更多相关文章

- HiBench成长笔记——(2) CentOS部署安装HiBench

安装Scala 使用spark-shell命令进入shell模式,查看spark版本和Scala版本: 下载Scala2.10.5 wget https://downloads.lightbend.c ...

- HiBench成长笔记——(3) HiBench测试Spark

很多内容之前的博客已经提过,这里不再赘述,详细内容参照本系列前面的博客:https://www.cnblogs.com/ratels/p/10970905.html 创建并修改配置文件conf/spa ...

- HiBench成长笔记——(1) HiBench概述

测试分类 HiBench共计19个测试方向,可大致分为6个测试类别:分别是micro,ml(机器学习),sql,graph,websearch和streaming. 2.1 micro Benchma ...

- HiBench成长笔记——(5) HiBench-Spark-SQL-Scan源码分析

run.sh #!/bin/bash # Licensed to the Apache Software Foundation (ASF) under one or more # contributo ...

- HiBench成长笔记——(4) HiBench测试Spark SQL

很多内容之前的博客已经提过,这里不再赘述,详细内容参照本系列前面的博客:https://www.cnblogs.com/ratels/p/10970905.html 和 https://www.cnb ...

- HiBench成长笔记——(11) 分析源码run.sh

#!/bin/bash # Licensed to the Apache Software Foundation (ASF) under one or more # contributor licen ...

- HiBench成长笔记——(10) 分析源码execute_with_log.py

#!/usr/bin/env python2 # Licensed to the Apache Software Foundation (ASF) under one or more # contri ...

- HiBench成长笔记——(9) 分析源码monitor.py

monitor.py 是主监控程序,将监控数据写入日志,并统计监控数据生成HTML统计展示页面: #!/usr/bin/env python2 # Licensed to the Apache Sof ...

- HiBench成长笔记——(8) 分析源码workload_functions.sh

workload_functions.sh 是测试程序的入口,粘连了监控程序 monitor.py 和 主运行程序: #!/bin/bash # Licensed to the Apache Soft ...

随机推荐

- idea隐藏配置文件

- 前端学习 之 JavaScript DOM 与 BOM

一. DOM介绍 1. 什么是DOM? DOM:文档对象模型.DOM 为文档提供了结构化表示,并定义了如何通过脚本来访问文档结构. 目的其实就是为了能让js操作html元素而制定的一个规范. DOM就 ...

- 【快学springboot】4.接口参数校验

前言 在开发接口的时候,参数校验是必不可少的.参数的类型,长度等规则,在开发初期都应该由产品经理或者技术负责人等来约定.如果不对入参做校验,很有可能会因为一些不合法的参数而导致系统出现异常. 上一篇文 ...

- CyclicBarrier 解读

简介 字面上的意思: 可循环利用的屏障. 作用: 让所有线程都等待完成后再继续下一步行动. 举例模拟: 吃饭人没到齐不准动筷. 使用Demo package com.ronnie; import ja ...

- KALI 2017 X64安装到U盘

KALI 2017 X64安装到U盘启动(作者:黑冰) 此方法为虚拟机方法,自认为成功率很高,已经成功安装过16,32G U盘,但也不排除有些人用拷碟方法安装这里我仅介绍虚拟机安装方法. 1.准备 ...

- 更改windows系统的快捷键方法

众所周知,windows平台有很多快捷键使用非常的别扭. 现在提供windows 平台快捷键替换的绝佳软件:autohotkey 下载链接:http://ahkscript.org/ 中文帮助站点:h ...

- linux sed命令(擅长输出行)(转)

linux命令总结sed命令详解 Sed 简介 sed 是一种新型的,非交互式的编辑器.它能执行与编辑器 vi 和 ex 相同的编辑任务.sed 编辑器没有提供交互式使用方式,使用者只能在命令行输入编 ...

- Codeforces 598D:Igor In the Museum

D. Igor In the Museum time limit per test 1 second memory limit per test 256 megabytes input standar ...

- ReentrantReadWriteLock之读写锁判断

一. 读写锁是怎么实现的? 继承AQS,然后通过将AQS中的state转化为二进制,分为高16位和低16位来区分.高16位表示读状态,低16位为写状态. 二. 解析表示方式(高低16位) 假设此时st ...

- storm的JavaAPI运行报错

报错:java.lang.NoClassDefFoundError: org/apache/storm/topology/IRichSpout 原因:idea的bug:本地运行时设置scope为pro ...