064 UDF

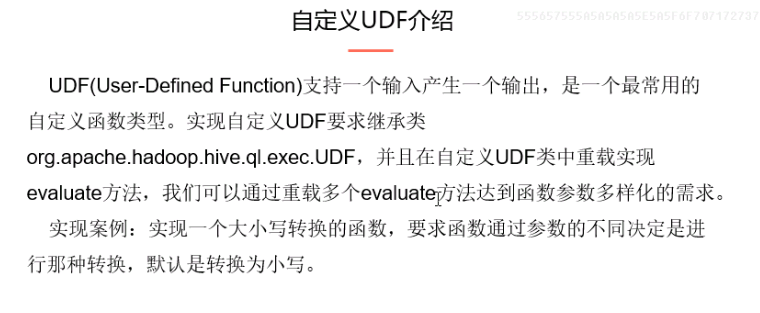

I: UDF

1. Custom UDF

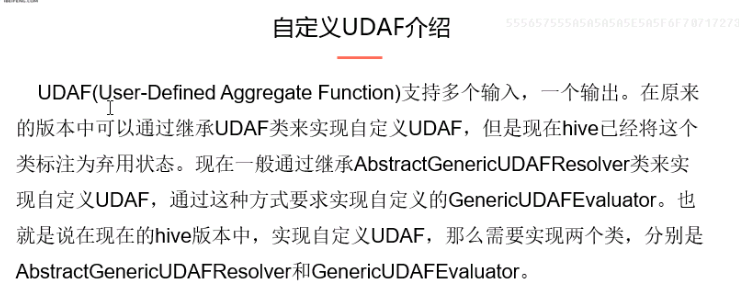

II: UDAF

2. UDAF

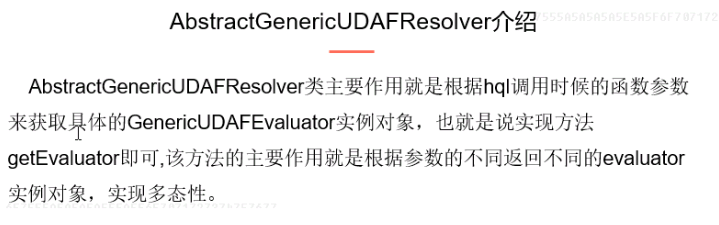

3. Introducing AbstractGenericUDAFResolver

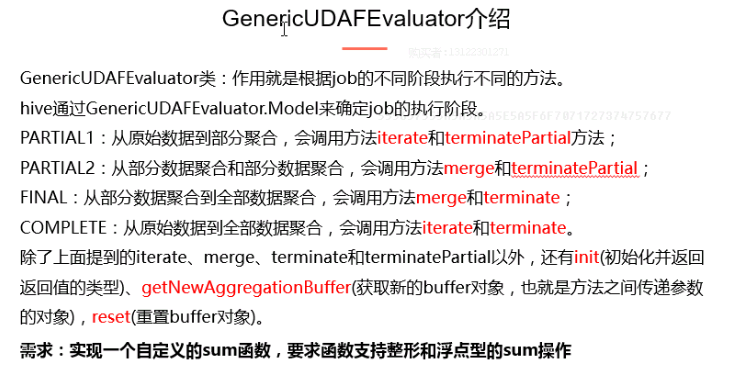

4. Introducing GenericUDAFEvaluator
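The order in which Hive drives an evaluator (iterate and terminatePartial on the map side, merge and terminate on the reduce side) can be sketched without any Hive dependency. The block below is only an illustration under that assumption: `SumLifecycleSketch`, `SumBuffer`, and `run` are hypothetical names, not part of the Hive API, and the real evaluator works on ObjectInspector-wrapped values rather than plain `Long`.

```java
import java.util.Arrays;
import java.util.List;

/** Plain-Java sketch (no Hive dependency) of how a sum evaluator is driven. */
class SumLifecycleSketch {

    /** Mirrors the aggregation buffer: a running sum plus a "no rows seen" flag. */
    static final class SumBuffer {
        long sum = 0;
        boolean empty = true;
    }

    /** Map side (iterate): fold one raw input value into the buffer; nulls are skipped. */
    static void iterate(SumBuffer buf, Long value) {
        if (value != null) {
            buf.sum += value;
            buf.empty = false;
        }
    }

    /** Map side (terminatePartial): emit the partial sum, or null if nothing was seen. */
    static Long terminatePartial(SumBuffer buf) {
        return buf.empty ? null : buf.sum;
    }

    /** Reduce side (merge): for sum, a partial result is folded in like an input value. */
    static void merge(SumBuffer buf, Long partial) {
        iterate(buf, partial);
    }

    /** Reduce side (terminate): the final result, or null for an empty input. */
    static Long terminate(SumBuffer buf) {
        return buf.empty ? null : buf.sum;
    }

    /** Runs each split through its own "mapper" buffer, then merges the partials. */
    static Long run(List<List<Long>> splits) {
        SumBuffer reducer = new SumBuffer();
        for (List<Long> split : splits) {
            SumBuffer mapper = new SumBuffer();
            for (Long v : split) {
                iterate(mapper, v);
            }
            merge(reducer, terminatePartial(mapper));
        }
        return terminate(reducer);
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList(
                Arrays.asList(1L, 2L, null),
                Arrays.asList(3L, 4L))));   // prints 10
    }
}
```

Because a partial sum has the same shape as an input value, iterate and merge can share one code path, which is why a sum evaluator can forward iterate to merge and terminatePartial to terminate.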

5. The program

package org.apache.hadoop.hive_udf;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;
import org.apache.hadoop.hive.ql.metadata.HiveException;
import org.apache.hadoop.hive.ql.parse.SemanticException;
import org.apache.hadoop.hive.ql.udf.generic.AbstractGenericUDAFResolver;
import org.apache.hadoop.hive.ql.udf.generic.GenericUDAFEvaluator;
import org.apache.hadoop.hive.ql.udf.generic.GenericUDAFParameterInfo;
import org.apache.hadoop.hive.serde2.io.DoubleWritable;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.PrimitiveObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.AbstractPrimitiveWritableObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorUtils;
import org.apache.hadoop.io.LongWritable;

/**
 * Requirement: implement a sum function that supports int and double types.
 */
public class UdafProject extends AbstractGenericUDAFResolver {

    public GenericUDAFEvaluator getEvaluator(GenericUDAFParameterInfo info)
            throws SemanticException {
        // Reject the all-columns form, i.e. af(*).
        if (info.isAllColumns()) {
            throw new SemanticException("The * argument is not supported");
        }

        // Require exactly one argument.
        ObjectInspector[] inspector = info.getParameterObjectInspectors();
        if (inspector.length != 1) {
            throw new SemanticException("Exactly one argument is required");
        }

        // The input column must be a primitive type.
        if (inspector[0].getCategory() != ObjectInspector.Category.PRIMITIVE) {
            throw new SemanticException("The argument must be a primitive type");
        }

        // Cast to the PrimitiveObjectInspector interface, which is all that
        // getPrimitiveCategory() needs, rather than to a writable-only class.
        PrimitiveObjectInspector woi = (PrimitiveObjectInspector) inspector[0];

        // Pick the evaluator that matches the primitive type.
        switch (woi.getPrimitiveCategory()) {
        case INT:
        case LONG:
        case BYTE:
        case SHORT:
            return new udafLong();
        case FLOAT:
        case DOUBLE:
            return new udafDouble();
        default:
            throw new SemanticException(
                    "The argument must be a numeric primitive type; string and similar types are not supported");
        }
    }

    /**
     * Sums integer-typed data.
     */

    public static class udafLong extends GenericUDAFEvaluator {

        // ObjectInspector for the input values (and for partial results).
        public PrimitiveObjectInspector inputor;

        // Custom aggregation buffer.
        static class sumlongagg implements AggregationBuffer {
            long sum;
            boolean empty;
        }

        // Initialization.
        @Override
        public ObjectInspector init(Mode m, ObjectInspector[] parameters)
                throws HiveException {
            super.init(m, parameters);
            if (parameters.length != 1) {
                throw new UDFArgumentException("Invalid number of arguments");
            }
            if (inputor == null) {
                this.inputor = (PrimitiveObjectInspector) parameters[0];
            }
            // The returned type must match the type of the final sum.
            return PrimitiveObjectInspectorFactory.writableLongObjectInspector;
        }

        @Override
        public AggregationBuffer getNewAggregationBuffer() throws HiveException {
            sumlongagg slg = new sumlongagg();
            this.reset(slg);
            return slg;
        }

        @Override
        public void reset(AggregationBuffer agg) throws HiveException {
            sumlongagg slg = (sumlongagg) agg;
            slg.sum = 0;
            slg.empty = true;
        }

        // Map side: fold one input row into the buffer.
        @Override
        public void iterate(AggregationBuffer agg, Object[] parameters)
                throws HiveException {
            if (parameters.length != 1) {
                throw new UDFArgumentException("Invalid arguments");
            }
            this.merge(agg, parameters[0]);
        }

        // Map side: emit the partial sum.
        @Override
        public Object terminatePartial(AggregationBuffer agg)
                throws HiveException {
            return this.terminate(agg);
        }

        // Reduce side: fold one partial sum into the buffer; nulls are skipped.
        @Override
        public void merge(AggregationBuffer agg, Object partial)
                throws HiveException {
            sumlongagg slg = (sumlongagg) agg;
            if (partial != null) {
                slg.sum += PrimitiveObjectInspectorUtils.getLong(partial, inputor);
                slg.empty = false;
            }
        }

        // Reduce side: return the final sum, or null if no non-null value was seen.
        @Override
        public Object terminate(AggregationBuffer agg) throws HiveException {
            sumlongagg slg = (sumlongagg) agg;
            if (slg.empty) {
                return null;
            }
            return new LongWritable(slg.sum);
        }
    }

    /**
     * Sums floating-point data.
     */

    public static class udafDouble extends GenericUDAFEvaluator {

        // ObjectInspector for the input values (and for partial results).
        public PrimitiveObjectInspector input;

        // Custom aggregation buffer.
        static class sumdoubleagg implements AggregationBuffer {
            double sum;
            boolean empty;
        }

        // Initialization.
        @Override
        public ObjectInspector init(Mode m, ObjectInspector[] parameters)
                throws HiveException {
            super.init(m, parameters);
            if (parameters.length != 1) {
                throw new UDFArgumentException("Invalid number of arguments");
            }
            if (input == null) {
                this.input = (PrimitiveObjectInspector) parameters[0];
            }
            // The returned type must match the type of the final sum.
            return PrimitiveObjectInspectorFactory.writableDoubleObjectInspector;
        }

        @Override
        public AggregationBuffer getNewAggregationBuffer() throws HiveException {
            sumdoubleagg sdg = new sumdoubleagg();
            this.reset(sdg);
            return sdg;
        }

        @Override
        public void reset(AggregationBuffer agg) throws HiveException {
            sumdoubleagg sdg = (sumdoubleagg) agg;
            sdg.sum = 0;
            sdg.empty = true;
        }

        // Map side: fold one input row into the buffer.
        @Override
        public void iterate(AggregationBuffer agg, Object[] parameters)
                throws HiveException {
            if (parameters.length != 1) {
                throw new UDFArgumentException("Invalid arguments");
            }
            this.merge(agg, parameters[0]);
        }

        // Map side: emit the partial sum.
        @Override
        public Object terminatePartial(AggregationBuffer agg)
                throws HiveException {
            return this.terminate(agg);
        }

        // Reduce side: fold one partial sum into the buffer; nulls are skipped.
        @Override
        public void merge(AggregationBuffer agg, Object partial)
                throws HiveException {
            sumdoubleagg sdg = (sumdoubleagg) agg;
            if (partial != null) {
                // Read the value from partial, not from the buffer itself.
                sdg.sum += PrimitiveObjectInspectorUtils.getDouble(partial, input);
                sdg.empty = false;
            }
        }

        // Reduce side: return the final sum, or null if no non-null value was seen.
        @Override
        public Object terminate(AggregationBuffer agg) throws HiveException {
            sumdoubleagg sdg = (sumdoubleagg) agg;
            if (sdg.empty) {
                return null;
            }
            return new DoubleWritable(sdg.sum);
        }
    }
}

6. Package as a jar

Place the jar under the path /etc/opt/datas/

7. Add the jar to the path

Format:

add jar linux_path;

That is:

add jar /etc/opt/datas/af.jar;

8. Create the function

create temporary function af as 'org.apache.hadoop.hive_udf.UdafProject';

9. Run in Hive

select sum(id),af(id) from stu_info;

III: UDTF

1. UDTF

2. The program

package org.apache.hadoop.hive.udf;

import java.util.ArrayList;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;
import org.apache.hadoop.hive.ql.metadata.HiveException;
import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;
import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

public class UDTFtest extends GenericUDTF {

    @Override
    public StructObjectInspector initialize(StructObjectInspector argOIs)
            throws UDFArgumentException {
        if (argOIs.getAllStructFieldRefs().size() != 1) {
            throw new UDFArgumentException("Exactly one argument is required");
        }

        // Names of the output columns.
        ArrayList<String> fieldname = new ArrayList<String>();
        fieldname.add("name");
        fieldname.add("email");

        // Types of the output columns: both are strings.
        ArrayList<ObjectInspector> fieldio = new ArrayList<ObjectInspector>();
        fieldio.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);
        fieldio.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

        return ObjectInspectorFactory.getStandardStructObjectInspector(fieldname, fieldio);
    }

    // Called once per input row: emit one (name, email) row.
    @Override
    public void process(Object[] args) throws HiveException {
        if (args.length == 1) {
            String name = args[0].toString();
            String email = name + "@ibeifneg.com";
            super.forward(new String[] {name, email});
        }
    }

    // Called once when the input is exhausted: emit a final marker row.
    @Override
    public void close() throws HiveException {
        super.forward(new String[] {"complete", "finish"});
    }
}

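3. Same steps as for the UDAF: package the jar, add it, and create a temporary function (named tf in the query below)

4. Run in Hive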

select tf(ename) as (name,email) from emp;
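The shape of the rows this query returns can be previewed with a plain-Java sketch that mimics the process and close methods without any Hive dependency. `UdtfOutputSketch` and `run` are hypothetical names, and the sample names SMITH and ALLEN are assumed values, not taken from the emp table in this post. In a real job the marker row from close would presumably appear once per task rather than once overall.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Plain-Java sketch (no Hive dependency) of the rows the UDTF forwards. */
class UdtfOutputSketch {

    /** Collects what process() forwards for each name, then what close() forwards. */
    static List<String[]> run(List<String> names) {
        List<String[]> rows = new ArrayList<>();
        for (String name : names) {
            // process(): one (name, email) row per input row
            rows.add(new String[] {name, name + "@ibeifneg.com"});
        }
        // close(): one trailing marker row
        rows.add(new String[] {"complete", "finish"});
        return rows;
    }

    public static void main(String[] args) {
        // Hypothetical input values for illustration only.
        for (String[] row : run(Arrays.asList("SMITH", "ALLEN"))) {
            System.out.println(row[0] + "\t" + row[1]);
        }
    }
}
```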
