Load Balancing with NGINX 负载均衡算法

Using nginx as HTTP load balancer

Using nginx as HTTP load balancer http://nginx.org/en/docs/http/load_balancing.html

Using nginx as HTTP load balancer

| Load balancing methods Default load balancing configuration Least connected load balancing Session persistence Weighted load balancing Health checks Further reading |

Introduction

Load balancing across multiple application instances is a commonly used technique for optimizing resource utilization, maximizing throughput, reducing latency, and ensuring fault-tolerant configurations.

It is possible to use nginx as a very efficient HTTP load balancer to distribute traffic to several application servers and to improve performance, scalability and reliability of web applications with nginx.

Load balancing methods

The following load balancing mechanisms (or methods) are supported in nginx:

- round-robin — requests to the application servers are distributed in a round-robin fashion,

- least-connected — next request is assigned to the server with the least number of active connections,

- ip-hash — a hash-function is used to determine what server should be selected for the next request (based on the client’s IP address).

Default load balancing configuration

The simplest configuration for load balancing with nginx may look like the following:

http {

upstream myapp1 {

server srv1.example.com;

server srv2.example.com;

server srv3.example.com;

}

server {

listen 80;

location / {

proxy_pass http://myapp1;

}

}

}

In the example above, there are 3 instances of the same application running on srv1-srv3. When the load balancing method is not specifically configured, it defaults to round-robin. All requests areproxied to the server group myapp1, and nginx applies HTTP load balancing to distribute the requests.

Reverse proxy implementation in nginx includes load balancing for HTTP, HTTPS, FastCGI, uwsgi, SCGI, memcached, and gRPC.

To configure load balancing for HTTPS instead of HTTP, just use “https” as the protocol.

When setting up load balancing for FastCGI, uwsgi, SCGI, memcached, or gRPC, use fastcgi_pass,uwsgi_pass, scgi_pass, memcached_pass, and grpc_pass directives respectively.

Least connected load balancing

Another load balancing discipline is least-connected. Least-connected allows controlling the load on application instances more fairly in a situation when some of the requests take longer to complete.

With the least-connected load balancing, nginx will try not to overload a busy application server with excessive requests, distributing the new requests to a less busy server instead.

Least-connected load balancing in nginx is activated when the least_conn directive is used as part of the server group configuration:

upstream myapp1 {

least_conn;

server srv1.example.com;

server srv2.example.com;

server srv3.example.com;

}

Session persistence

Please note that with round-robin or least-connected load balancing, each subsequent client’s request can be potentially distributed to a different server. There is no guarantee that the same client will be always directed to the same server.

If there is the need to tie a client to a particular application server — in other words, make the client’s session “sticky” or “persistent” in terms of always trying to select a particular server — the ip-hash load balancing mechanism can be used.

With ip-hash, the client’s IP address is used as a hashing key to determine what server in a server group should be selected for the client’s requests. This method ensures that the requests from the same client will always be directed to the same server except when this server is unavailable.

To configure ip-hash load balancing, just add the ip_hash directive to the server (upstream) group configuration:

upstream myapp1 {

ip_hash;

server srv1.example.com;

server srv2.example.com;

server srv3.example.com;

}

Weighted load balancing

It is also possible to influence nginx load balancing algorithms even further by using server weights.

In the examples above, the server weights are not configured which means that all specified servers are treated as equally qualified for a particular load balancing method.

With the round-robin in particular it also means a more or less equal distribution of requests across the servers — provided there are enough requests, and when the requests are processed in a uniform manner and completed fast enough.

When the weight parameter is specified for a server, the weight is accounted as part of the load balancing decision.

upstream myapp1 {

server srv1.example.com weight=3;

server srv2.example.com;

server srv3.example.com;

}

With this configuration, every 5 new requests will be distributed across the application instances as the following: 3 requests will be directed to srv1, one request will go to srv2, and another one — to srv3.

It is similarly possible to use weights with the least-connected and ip-hash load balancing in the recent versions of nginx.

Health checks

Reverse proxy implementation in nginx includes in-band (or passive) server health checks. If the response from a particular server fails with an error, nginx will mark this server as failed, and will try to avoid selecting this server for subsequent inbound requests for a while.

The max_fails directive sets the number of consecutive unsuccessful attempts to communicate with the server that should happen during fail_timeout. By default, max_fails is set to 1. When it is set to 0, health checks are disabled for this server. The fail_timeout parameter also defines how long the server will be marked as failed. After fail_timeout interval following the server failure, nginx will start to gracefully probe the server with the live client’s requests. If the probes have been successful, the server is marked as a live one.

Further reading

In addition, there are more directives and parameters that control server load balancing in nginx, e.g.proxy_next_upstream, backup, down, and keepalive. For more information please check our reference documentation.

Last but not least, application load balancing, application health checks, activity monitoring and on-the-fly reconfiguration of server groups are available as part of our paid NGINX Plus subscriptions.

The following articles describe load balancing with NGINX Plus in more detail:

NGINX is a capable accelerating proxy for a wide range of HTTP‑based applications. Its caching, HTTP connection processing, and offload significantly increase application performance, particularly during periods of high load.

Editor – NGINX Plus Release 5 and later can also load balance TCP-based applications. TCP load balancing was significantly extended in Release 6 by the addition of health checks, dynamic reconfiguration, SSL termination, and more. In NGINX Plus Release 7 and later, the TCP load balancer has full feature parity with the HTTP load balancer. Support for UDP load balancing was introduced in Release 9.

You configure TCP and UDP load balancing in the stream context instead of the http context. The available directives and parameters differ somewhat because of inherent differences between HTTP and TCP/UDP; for details, see the documentation for the HTTP and TCP Upstream modules.

NGINX Plus extends the capabilities of NGINX by adding further load‑balancing capabilities: health checks, session persistence, live activity monitoring, and dynamic configuration of load‑balanced server groups.

This blog post steps you through the configuring NGINX to load balance traffic to a set of web servers. It highlights some of the additional features in NGINX Plus.

For further reading, you can also take a look at the NGINX Plus Admin Guide and the follow‑up article to this one, Load Balancing with NGINX and NGINX Plus, Part 2.

Proxying Traffic with NGINX

We’ll begin by proxying traffic to a pair of upstream web servers. The following NGINX configuration is sufficient to terminate all HTTP requests to port 80, and forward them in a round‑robin fashion across the web servers in the upstream group:

http {

server {

listen 80;

location / {

proxy_pass http://backend;

}

}

upstream backend {

server web-server1:80;

server web-server2:80;

}

}With this simple configuration, NGINX forwards each request received on port 80 to web-server1 and web-server2 in turn, establishing a new HTTP connection in each case.

Setting the Load‑Balancing Method

By default, NGINX uses the Round Robin method to spread traffic evenly between servers, informed by an optional “weight” assigned to each server to indicate its relative capacity.

The IP Hash method distributes traffic based on a hash of the source IP address. Requests from the same client IP address are always sent to the same upstream server. This is a crude session persistence method that is recalculated whenever a server fails or recovers, or whenever the upstream group is modified; NGINX Plus offers better solutions if session persistence is required.

The Least Connections method routes each request to the upstream server with the fewest active connections. This method works well when handling a mixture of quick and complex requests.

All load‑balancing methods can be tuned using an optional weight parameter on the server directive. This makes sense when servers have different processing capacities. In the following example, NGINX directs four times as many requests to web-server2 than to web-server1:

upstream backend {

zone backend 64k;

least_conn;

server web-server1 weight=1;

server web-server2 weight=4;

}In NGINX, weights are managed independently by each worker process. NGINX Plus uses a shared memory segment for upstream data (configured with the zone directive), so weights are shared between workers and traffic is distributed more accurately.

Failure Detection

If there is an error or timeout when NGINX tries to connect with a server, pass a request to it, or read the response header, NGINX retries the connection request with another server. (You can include the proxy_next_upstream directive in the configuration to define other conditions for retrying the request.) In addition, NGINX can take the failed server out of the set of potential servers and occasionally try requests against it to detect when it recovers. The max_fails and fail_timeout parameters to the server directive control this behavior.

NGINX Plus adds a set of out‑of‑band health checks that perform sophisticated HTTP tests against each upstream server to determine whether it is active, and a slow‑start mechanism to gradually reintroduce recovered servers back into the load‑balanced group:

server web-server1 slow_start=30s;A Common ‘Gotcha’ – Fixing the Host Header

Quite commonly, an upstream server uses the Host header in the request to determine which content to serve. If you get unexpected 404 errors from the server, or anything else that suggests it is serving the wrong content, this is the first thing to check. Then include the proxy_set_header directive in the configuration to set the appropriate value for the header:

location / {

proxy_pass http://backend;

# Rewrite the 'Host' header to the value in the client request

# or primary server name

proxy_set_header Host $host;

# Alternatively, put the value in the config:

#proxy_set_header Host www.example.com;

}Advanced Load Balancing Using NGINX Plus

A range of advanced features in NGINX Plus make it an ideal load balancer in front of farms of upstream servers:

- Load balancing and session persistence – Better load balancing across worker processes and session persistence methods to identify and honor application sessions

- HTTP health checking and server slow start – Asynchronous ‘synthetic transactions’ to probe the correct operation of each upstream server, and graceful ‘slow start’ reintroduction of servers when they recover

- Live activity monitoring – Immediate report of activity and performance

- Dynamically configured upstream server groups – Tool to facilitate some common upstream management tasks, such as the safe and temporary removal of a server

For details about advanced load balancing and proxying, see the follow‑up post to this one, Load Balancing with NGINX and NGINX Plus, Part 2.

In Load Balancing with NGINX and NGINX Plus, Part 1, we set up a simple HTTP proxy to load balance traffic across several web servers. In this article, we’ll look at additional features, some of them available in NGINX Plus: performance optimization with keepalives, health checks, session persistence, redirects, and content rewriting.

For details of the load‑balancing features in NGINX and NGINX Plus, check out the NGINX Plus Admin Guide.

Editor – NGINX Plus Release 5 and later can also load balance TCP-based applications. TCP load balancing was significantly extended in Release 6 by the addition of health checks, dynamic reconfiguration, SSL termination, and more. In NGINX Plus Release 7 and later, the TCP load balancer has full feature parity with the HTTP load balancer. Support for UDP load balancing was introduced in Release 9.

You configure TCP and UDP load balancing in the stream context instead of the http context. The available directives and parameters differ somewhat because of inherent differences between HTTP and TCP/UDP; for details, see the documentation for the HTTP and TCP/UDP Upstream modules.

A Quick Review

To review, this is the configuration we built in the previous article:

server {

listen 80;

location / {

proxy_pass http://backend;

# Rewrite the 'Host' header to the value in the client request,

# or primary server name

proxy_set_header Host $host;

# Alternatively, put the value in the config:

# proxy_set_header Host www.example.com;

}

}

upstream backend {

zone backend 64k; # Use NGINX Plus' shared memory

least_conn;

server webserver1 weight=1;

server webserver2 weight=4;

}In this article, we’ll look at a few simple ways to configure NGINX and NGINX Plus that improve the effectiveness of load balancing.

HTTP Keepalives

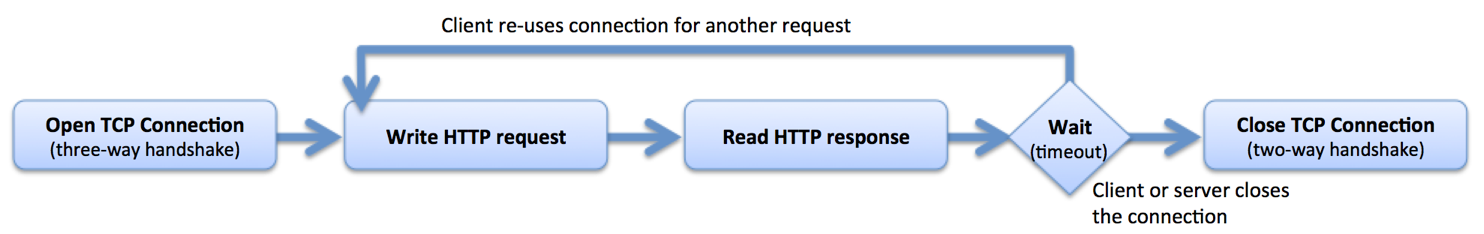

Enabling HTTP keepalives between NGINX or NGINX Plus and the upstream servers improves performance (by reducing latency) and reduces the likelihood that NGINX runs out of ephemeral ports.

The HTTP protocol uses underlying TCP connections to transmit HTTP requests and receive HTTP responses. HTTP keepalive connections allow for the reuse of these TCP connections, thus avoiding the overhead of creating and destroying a connection for each request:

NGINX is a full proxy and manages connections from clients (frontend keepalive connections) and connections to servers (upstream keepalive connections) independently:

NGINX maintains a “cache” of keepalive connections – a set of idle keepalive connections to the upstream servers – and when it needs to forward a request to an upstream, it uses an already established keepalive connection from the cache rather than creating a new TCP connection. This reduces the latency for transactions between NGINX and the upstream servers and reduces the rate at which ephemeral ports are used, so NGINX is able to absorb and load balance large volumes of traffic. With a large spike of traffic, the cache can be emptied and in that case NGINX establishes new HTTP connections to the upstream servers.

With other load‑balancing tools, this technique is sometimes called multiplexing, connection pooling, connection reuse, or OneConnect.

You configure the keepalive connection cache by including the proxy_http_version, proxy_set_header, and keepalive directives in the configuration:

server {

listen 80;

location / {

proxy_pass http://backend;

proxy_http_version 1.1;

proxy_set_header Connection "";

}

}

upstream backend {

server webserver1;

server webserver2;

# maintain a maximum of 20 idle connections to each upstream server

keepalive 20;

}Health Checks

Enabling health checks increases the reliability of your load‑balanced service, reduces the likelihood of end users seeing error messages, and can also facilitate common maintenance operations.

The health check feature in NGINX Plus can be used to detect the failure of upstream servers. NGINX Plus probes each server using “synthetic transactions,” and checks the response against the parameters you configure on the health_check directive (and, when you include the match parameter, the associated match configuration block):

server {

listen 80;

location / {

proxy_pass http://backend;

health_check interval=2s fails=1 passes=5 uri=/test.php match=statusok;

# The health checks inherit other proxy settings

proxy_set_header Host www.foo.com;

}

}

match statusok {

# Used for /test.php health check

status 200;

header Content-Type = text/html;

body ~ "Server[0-9]+ is alive";

}The health check inherits some parameters from its parent location block. This can cause problems if you use runtime variables in your configuration. For example, the following configuration works for real HTTP traffic because it extracts the value of the Host header from the client request. It probably does not work for the synthetic transactions that the health check uses because the Host header is not set for them, meaning no Host header is used in the synthetic transaction.

location / {

proxy_pass http://backend;

# This health check might not work...

health_check interval=2s fails=1 passes=5 uri=/test.php match=statusok;

# Extract the 'Host' header from the request

proxy_set_header Host $host;

}One good solution is to create a dummy location block that statically defines all of the parameters used by the health check transaction:

location /internal-health-check1 {

internal; # Prevent external requests from matching this location block

proxy_pass http://backend;

health_check interval=2s fails=1 passes=5 uri=/test.php match=statusok;

# Explicitly set request parameters; don't use run-time variables

proxy_set_header Host www.example.com;

}For more information, check out the NGINX Plus Admin Guide.

Session Persistence

With session persistence, applications that cannot be deployed in a cluster can be load balanced and scaled reliably. Applications that store and replicate session state operate more efficiently and end user performance improves.

Certain applications sometimes store state information on the upstream servers, for example when a user places an item in a virtual shopping cart or edits an uploaded image. In these cases, you might want to direct all subsequent requests from that user to the same server.

Session persistence specifies where a request must be routed to, whereas load balancing gives NGINX the freedom to select the optimal upstream server. The two processes can coexist using NGINX Plus’ session‑persistence capability:

If the request matches a session persistence rule

then use the target upstream server

else apply the load‑balancing algorithm to select the upstream server

If the session persistence decision fails because the target server is not available, then NGINX Plus makes a load‑balancing decision.

The simplest session persistence method is the “sticky cookie” approach, where NGINX Plus inserts a cookie in the first response that identifies the sticky upstream server:

sticky cookie srv_id expires=1h domain=.example.com path=/;In the alternative “sticky route” method, NGINX selects the upstream server based on request parameters such as the JSESSIONID cookie:

upstream backend {

server backend1.example.com route=a;

server backend2.example.com route=b;

# select first non-empty variable; it should contain either 'a' or 'b'

sticky route $route_cookie $route_uri;

}For more information, check out the NGINX Plus Admin Guide.

Rewriting HTTP Redirects

Rewrite HTTP redirects if some redirects are broken, and particularly if you find you are redirected from the proxy to the real upstream server.

When you proxy to an upstream server, the server publishes the application on a local address, but you access the application through a different address – the address of the proxy. These addresses typically resolve to domain names, and problems can arise if the server and the proxy have different domains.

For example, in a testing environment, you might address your proxy directly (by IP address) or as localhost. However, the upstream server might listen on a real domain name (such as www.nginx.com). When the upstream server issues a redirect message (using a 3xx status and Location header, or using a Refresh header), the message might include the server’s real domain.

NGINX tries to intercept and correct the most common instances of this problem. If you need full control to force particular rewrites, use the proxy_redirect directive as follows:

proxy_redirect http://staging.mysite.com/ http://$host/;Rewriting HTTP Responses

Sometimes you need to rewrite the content in an HTTP response. Perhaps, as in the example above, the response contains absolute links that refer to a server other than the proxy.

You can use the sub_filter directive to define the rewrite to apply:

sub_filter /blog/ /blog-staging/;

sub_filter_once off;One very common gotcha is the use of HTTP compression. If the client signals that it can accept compressed data, and the server then compresses the response, NGINX cannot inspect and modify the response. The simplest measure is to remove the Accept-Encoding header from the client’s request by setting it to the empty string (""):

proxy_set_header Accept-Encoding "";A Complete Example

Here’s a template for a load‑balancing configuration that employs all of the techniques discussed in this article. The advanced features available in NGINX Plus are highlighted in orange.

[Editor – The following configuration has been updated to use the NGINX Plus API for live activity monitoring and dynamic configuration of upstream groups, replacing the separate modules that were originally used.]

server {

listen 80;

location / {

proxy_pass http://backend;

proxy_http_version 1.1;

proxy_set_header Connection "";

proxy_set_header Accept-Encoding "";

proxy_redirect http://staging.example.com/ http://$host/;

# Rewrite the Host header to the value in the client request

proxy_set_header Host $host;

# Replace any references inline to staging.example.com

sub_filter http://staging.example.com/ /;

sub_filter_once off;

}

location /internal-health-check1 {

internal; # Prevent external requests from matching this location block

proxy_pass http://backend;

health_check interval=2s fails=1 passes=5 uri=/test.php match=statusok;

# Explicitly set request parameters; don't use runtime variables

proxy_set_header Host www.example.com;

}

upstream backend {

zone backend 64k; # Use NGINX Plus' shared memory

least_conn;

keepalive 20;

# Apply session persistence for this upstream group

sticky cookie srv_id expires=1h domain=.example.com path=/servlet;

server webserver1 weight=1;

server webserver2 weight=4;

}

match statusok {

# Used for /test.php health check

status 200;

header Content-Type = text/html;

body ~ "Server[0-9]+ is alive";

}

server {

listen 8080;

root /usr/share/nginx/html;

location = /api {

api write=on; # Live activity monitoring and

# dynamic configuration of upstream groups

allow 127.0.0.1; # permit access from localhost

deny all; # deny access from everywhere else

}

}Try out all the great load‑balancing features in NGINX Plus for yourself – start your free 30-day trial today or contact us to discuss your use cases.

nignx 负载均衡的几种算法介绍 - 那家那人那小伙 - 博客园 https://www.cnblogs.com/lvgg/p/6140584.html

nignx 负载均衡的几种算法介绍

一、Nginx负载均衡算法

1、轮询(默认)

每个请求按时间顺序逐一分配到不同的后端服务,如果后端某台服务器死机,自动剔除故障系统,使用户访问不受影响。

2、weight(轮询权值)

weight的值越大分配到的访问概率越高,主要用于后端每台服务器性能不均衡的情况下。或者仅仅为在主从的情况下设置不同的权值,达到合理有效的地利用主机资源。

3、ip_hash

每个请求按访问IP的哈希结果分配,使来自同一个IP的访客固定访问一台后端服务器,并且可以有效解决动态网页存在的session共享问题。

4、fair

比 weight、ip_hash更加智能的负载均衡算法,fair算法可以根据页面大小和加载时间长短智能地进行负载均衡,也就是根据后端服务器的响应时间 来分配请求,响应时间短的优先分配。Nginx本身不支持fair,如果需要这种调度算法,则必须安装upstream_fair模块。

5、url_hash

按访问的URL的哈希结果来分配请求,使每个URL定向到一台后端服务器,可以进一步提高后端缓存服务器的效率。Nginx本身不支持url_hash,如果需要这种调度算法,则必须安装Nginx的hash软件包。

一、轮询(默认)

每个请求按时间顺序逐一分配到不同的后端服务器,如果后端服务器down掉,能自动剔除。

二、weight

指定轮询几率,weight和访问比率成正比,用于后端服务器性能不均的情况。

例如:

server 192.168.0.14 weight=10;

server 192.168.0.15 weight=10;

}

三、ip_hash

每个请求按访问ip的hash结果分配,这样每个访客固定访问一个后端服务器,可以解决session的问题。

例如:

ip_hash;

server 192.168.0.14:88;

server 192.168.0.15:80;

}

四、fair(第三方)

按后端服务器的响应时间来分配请求,响应时间短的优先分配。

server server1;

server server2;

fair;

}

五、url_hash(第三方)

按访问url的hash结果来分配请求,使每个url定向到同一个后端服务器,后端服务器为缓存时比较有效。

例:在upstream中加入hash语句,server语句中不能写入weight等其他的参数,hash_method是使用的hash算法

server squid1:3128;

server squid2:3128;

hash $request_uri;

hash_method crc32;

二、Nginx负载均衡调度状态

在Nginx upstream模块中,可以设定每台后端服务器在负载均衡调度中的状态,常用的状态有:

1、down,表示当前的server暂时不参与负载均衡

2、backup,预留的备份机器。当其他所有的非backup机器出现故障或者忙的时候,才会请求backup机器,因此这台机器的访问压力最低

3、max_fails,允许请求失败的次数,默认为1,当超过最大次数时,返回proxy_next_upstream模块定义的错误。

4、fail_timeout,请求失败超时时间,在经历了max_fails次失败后,暂停服务的时间。max_fails和fail_timeout可以一起使用。

如果Nginx没有仅仅只能代理一台服务器的话,那它也不可能像今天这么火,Nginx可以配置代理多台服务器,当一台服务器宕机之后,仍能保持系统可用。具体配置过程如下:

1. 在http节点下,添加upstream节点。

upstream linuxidc {

server 10.0.6.108:7080;

server 10.0.0.85:8980;

}

2. 将server节点下的location节点中的proxy_pass配置为:http:// + upstream名称,即“

http://linuxidc”.

location / {

root html;

index index.html index.htm;

proxy_pass http://linuxidc;

}

3. 现在负载均衡初步完成了。upstream按照轮询(默认)方式进行负载,每个请求按时间顺序逐一分配到不同的后端服务器,如果后端服务器down掉,能自动剔除。虽然这种方式简便、成本低廉。但缺点是:可靠性低和负载分配不均衡。适用于图片服务器集群和纯静态页面服务器集群。

除此之外,upstream还有其它的分配策略,分别如下:

weight(权重)

指定轮询几率,weight和访问比率成正比,用于后端服务器性能不均的情况。如下所示,10.0.0.88的访问比率要比10.0.0.77的访问比率高一倍。

upstream linuxidc{

server 10.0.0.77 weight=5;

server 10.0.0.88 weight=10;

}

ip_hash(访问ip)

每个请求按访问ip的hash结果分配,这样每个访客固定访问一个后端服务器,可以解决session的问题。

upstream favresin{

ip_hash;

server 10.0.0.10:8080;

server 10.0.0.11:8080;

}

fair(第三方)

按后端服务器的响应时间来分配请求,响应时间短的优先分配。与weight分配策略类似。

upstream favresin{

server 10.0.0.10:8080;

server 10.0.0.11:8080;

fair;

}

url_hash(第三方)

按访问url的hash结果来分配请求,使每个url定向到同一个后端服务器,后端服务器为缓存时比较有效。

注意:在upstream中加入hash语句,server语句中不能写入weight等其他的参数,hash_method是使用的hash算法。

upstream resinserver{

server 10.0.0.10:7777;

server 10.0.0.11:8888;

hash $request_uri;

hash_method crc32;

}

upstream还可以为每个设备设置状态值,这些状态值的含义分别如下:

down 表示单前的server暂时不参与负载.

weight 默认为1.weight越大,负载的权重就越大。

max_fails :允许请求失败的次数默认为1.当超过最大次数时,返回proxy_next_upstream 模块定义的错误.

fail_timeout : max_fails次失败后,暂停的时间。

backup: 其它所有的非backup机器down或者忙的时候,请求backup机器。所以这台机器压力会最轻。

upstream bakend{ #定义负载均衡设备的Ip及设备状态

ip_hash;

server 10.0.0.11:9090 down;

server 10.0.0.11:8080 weight=2;

server 10.0.0.11:6060;

server 10.0.0.11:7070 backup;

}

Load Balancing with NGINX 负载均衡算法的更多相关文章

- Nginx 负载均衡算法

Nginx 负载均衡算法 1.轮询(默认) 每个请求按时间顺序逐一分配到不同的后端服务,如果后端某台服务器死机,自动剔除故障系统,使用户访问不受影响. upstream tomcat_server { ...

- nginx负载均衡算法

配置方式 NGINX配置负载均衡主要是在nginx.conf文件中里upstream模块 1.upstream模块应放于nginx.conf配置的http{}标签内2.upstream模块默认算法是w ...

- nginx负载均衡tomcat和配置ssl

目录 tomcat 组件功能 engine host context connector service server valve logger realm UserDatabaseRealm 工作流 ...

- Nginx几种负载均衡算法及配置实例

本文装载自: https://yq.aliyun.com/articles/114683 Nginx负载均衡(工作在七层"应用层")功能主要是通过upstream模块实现,Ngin ...

- nginx 负载均衡(默认算法)

使用 nginx 的upstream模块只需要几步就可以实现一个负载均衡: 在 nginx 配置文件中添加两个server server { listen ; server_name 192.168. ...

- nginx的常用负载均衡算法,分别是

随机分配,hash一致性分配,最小连接数分配,主备分配 随机,轮训,一致性哈希,主备,https://blog.csdn.net/liu88010988/article/details/5154741 ...

- 面试题:Nginx负载均衡的算法怎么实现的?为什么要做动静分离?

面试题 Nginx负载均衡的算法怎么实现的?Nginx 有哪些负载均衡策略?Nginx为什么要做动静分离? 面试官心理剖析 主要是看应聘人员对Nginx的基本原理是否熟悉,需要应聘人员能够根据实际业务 ...

- nginx负载均衡中常见的算法及原理有哪些?

一.nginx负载均衡常用算法 1.1 轮询 轮询,nginx默认方式.一次将请求分配给各个后台服务器. upstream backserver { server 10.0.0.7; server 1 ...

- Nginx负载均衡的4种方式 :轮询-Round Robin 、Ip地址-ip_hash、最少连接-least_conn、加权-weight=n

这里对负载均衡概念和nginx负载均衡实现方式做一个总结: 先说一下负载均衡的概念: Load Balance负载均衡是用于解决一台机器(一个进程)无法解决所有请求而产生的一种算法. 我们知道单台服务 ...

随机推荐

- 找电影资源最强攻略,知道这些你就牛B了!

找电影资源最强攻略,知道这些你就牛B了! 电影工厂 2015-07-01 · 分享 点击题目下方环球电影,关注中国顶尖电影微杂志 我们也许没有机会去走遍千山万水,却可以通过电影进入各种各样的角色来 ...

- 【C语言】23-typedef

一.typedef作用简介 * 我们可以使用typedef关键字为各种数据类型定义一个新名字(别名). 1 #include <stdio.h> 2 3 typedef int Integ ...

- CM本地Yum源的搭建

CM本地Yum源的搭建 以本地yum源安装CM5为例,解释本地yum源的安装和利用本地yum源安装CM5. Cloudera Manager 5(以下简称CM)默认采用在线安装的方式,给不能联互联网或 ...

- HDFS设计思路,HDFS使用,查看集群状态,HDFS,HDFS上传文件,HDFS下载文件,yarn web管理界面信息查看,运行一个mapreduce程序,mapreduce的demo

26 集群使用初步 HDFS的设计思路 l 设计思想 分而治之:将大文件.大批量文件,分布式存放在大量服务器上,以便于采取分而治之的方式对海量数据进行运算分析: l 在大数据系统中作用: 为各类分布式 ...

- FreeRTOS 调试方法(printf---打印任务执行情况)

以下转载自安富莱电子: http://forum.armfly.com/forum.php 本章节为大家介绍 FreeRTOS 的调试方法,这里的调试方法主要是教会大家如何获取任务的执行情况,通过获取 ...

- json中把非json格式的字符串转换成json对象再转换成json字符串

JSON.toJson(str).toString()假如key和value都是整数的时候,先转换成jsonObject对象,再转换成json字符串

- lua错误收集

这里放一些我遇到的lua错误,希望大家分享一些错误给我,统一放在这里. 1.lua表的引用传值 上面的代码运行后会发现t2[2],t2[3]表里的内容也被删除了,实际上它们 与t2[1]表里的内容都是 ...

- Entity Framework(七):Fluent API配置案例

一.配置主键 要显式将某个属性设置为主键,可使用 HasKey 方法.在以下示例中,使用了 HasKey 方法对 Product 类型配置 ProductId 主键. 1.新加Product类 usi ...

- MyBatis 本是apache的一个开源项目iBatis

MyBatis 本是apache的一个开源项目iBatis, 2010年这个项目由apache software foundation 迁移到了google code,并且改名为MyBatis .20 ...

- 关系运算符:instanceof

关系运算符:instanceof a instanceof Animal;(这个式子的结果是一个布尔表达式) a为对象变量,Animal是类名. 上面语句是判定a是否可以贴Animal标签.如果可以贴 ...