Deploying ELK 8.0.1 on CentOS 7


1 Downloads:

- CentOS 7.4 ISO download

- Huawei mirror

- Rsyslog: installed by default on CentOS 7

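2 Environment preparation:

2.1 Disable SELinux (firewalld stays enabled; the required ports are opened in later steps)

setenforce 0 # Temporarily switch SELinux to permissive mode

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config # Permanently disable SELinux (takes effect after a reboot)

2.2 Raise the Linux open-file and process limits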

cat /etc/security/limits.conf | grep -v "^#" | grep -v "^$"

* soft nproc 65536

* hard nproc 65536

* soft nofile 65536

* hard nofile 65536

cat /etc/sysctl.conf | grep -v "^#"

vm.max_map_count = 655360

# Apply the settings

[root@aclab ~]# sysctl -p

[root@aclab ~]# cat /etc/systemd/system.conf | grep -v "^#"

[Manager]

DefaultLimitNOFILE=655360

DefaultLimitNPROC=655360
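The limits above can be sanity-checked once they are in effect. The following is a minimal sketch, not part of the original setup: `report_limit` is a hypothetical helper name, and the thresholds in the usage comments (262144 for `vm.max_map_count`, 65535 for open files) are the minimums that Elasticsearch's bootstrap checks document, which the values configured above exceed.

```shell
# Hypothetical helper: print OK or LOW for one setting.
# $1 = setting name, $2 = current value, $3 = required minimum (integers only).
report_limit() {
  if [ "$2" -ge "$3" ]; then
    echo "OK $1=$2 (minimum $3)"
  else
    echo "LOW $1=$2 (minimum $3)"
  fi
}

# Example usage on the server (left commented out so nothing runs on load):
#   report_limit vm.max_map_count "$(sysctl -n vm.max_map_count)" 262144
#   report_limit nofile "$(ulimit -n)" 65535
```

Note that `ulimit -n` can print `unlimited`, which this integer comparison does not handle; in that case the limit is already high enough.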

2.3 Install the Java environment

- Download: jdk11

yum install -y jdk-11.0.15.1_linux-x64_bin.rpm

# Check the Java version

java -version

java version "11.0.15.1" 2022-04-22 LTS

Java(TM) SE Runtime Environment 18.9 (build 11.0.15.1+2-LTS-10)

Java HotSpot(TM) 64-Bit Server VM 18.9 (build 11.0.15.1+2-LTS-10, mixed mode)

# Java_Path

export JAVA_HOME=/usr/java/jdk-11.0.15.1/

export PATH=$PATH:$JAVA_HOME/bin

# No CLASSPATH export is needed: dt.jar and tools.jar were removed in JDK 9, so they do not exist in JDK 11

# LogStash_Java_Path

export LS_JAVA_HOME=/usr/java/jdk-11.0.15.1/

3 Elasticsearch

3.1 Install Elasticsearch

# Install; the installer prints an auto-generated password for the elastic superuser

yum install -y elasticsearch-8.0.1-x86_64.rpm

----- Security autoconfiguration information -----

Authentication and authorization are enabled.

TLS for the transport and HTTP layers is enabled and configured.

The generated password for the elastic built-in superuser is : 5v=1nnaJ-G3Tq=sAVy-n

If this node should join an existing cluster, you can reconfigure this with

'/usr/share/elasticsearch/bin/elasticsearch-reconfigure-node --enrollment-token <token-here>'

after creating an enrollment token on your existing cluster.

You can complete the following actions at any time:

Reset the password of the elastic built-in superuser with

'/usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic'.

Generate an enrollment token for Kibana instances with

'/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana'.

Generate an enrollment token for Elasticsearch nodes with

'/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s node'.

# List the files installed by the package

rpm -ql elasticsearch

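3.2 Configure Elasticsearch

# nano /etc/elasticsearch/elasticsearch.yml

# Memory locking keeps the JVM heap out of swap when set to true; it is left disabled (false) here

bootstrap.memory_lock: false

# Allow connections from any IP address; tighten this to suit your environment

network.host: 0.0.0.0

# HTTP listening port (default 9200)

http.port: 9200

# Security is enabled by default in 8.0.1. The rest of this guide (HTTPS, the elastic password, enrollment tokens) assumes it stays enabled.

# Only if you want to turn security off, uncomment the two lines below and comment out every other xpack setting:

# xpack.security.enabled: false

# xpack.security.enrollment.enabled: false

# Basic X-Pack security has been free since Elasticsearch 6.8 and 7.1. To protect business data, this guide keeps it on and uses password authentication.

# Reference: https://www.cnblogs.com/kaysenliang/p/15515522.html

# Open port 9200 in the firewall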

[root@aclab ~]# firewall-cmd --add-port=9200/tcp --permanent

[root@aclab ~]# firewall-cmd --reload

# Start Elasticsearch at boot

systemctl enable elasticsearch

# Start Elasticsearch

systemctl start elasticsearch

# Test Elasticsearch

Open in a browser: https://192.168.210.19:9200/

elastic:5v=1nnaJ-G3Tq=sAVy-n

curl https://192.168.210.19:9200 --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic
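The curl call above returns a JSON document describing the node. As a quick check that the expected release is answering, the version can be pulled out of that response. This is a minimal sketch: `es_version_from_json` is a hypothetical helper, and it assumes the response contains a field written as `"number" : "..."`, which is how the version appears in the root endpoint's output.

```shell
# Hypothetical helper: read the Elasticsearch root response on stdin
# and print the value of the first "number" field (the version string).
es_version_from_json() {
  sed -n 's/.*"number"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Example usage on the server (left commented out so nothing runs on load):
#   curl -s https://192.168.210.19:9200 \
#     --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic | es_version_from_json
```

A `sed` one-liner is used here only to avoid extra dependencies; a JSON-aware tool is the more robust choice if one is installed.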

4 Kibana

4.1 Install Kibana

# Install

yum install -y kibana-8.0.1-x86_64.rpm

# List the files installed by the package

rpm -ql kibana

4.2 Configure Kibana

# nano /etc/kibana/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

server.name: "aclab.kibana"

elasticsearch.hosts: ["http://localhost:9200"]

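# If server.publicBaseUrl is missing, Kibana warns that it should be set in production and that some features may not work properly.

# Set it to the address you use to reach Kibana; it must not end with /

server.publicBaseUrl: "http://192.168.210.19:5601"

# Switch the Kibana UI to Chinese

Add this line to kibana.yml:

i18n.locale: "zh-CN"

# Generate the Kibana encryption keys and add them to the Kibana configuration file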

# xpack.encryptedSavedObjects.encryptionKey: Used to encrypt stored objects such as dashboards and visualizations

# xpack.reporting.encryptionKey: Used to encrypt saved reports

# xpack.security.encryptionKey: Used to encrypt session information

[root@aclab ~]# /usr/share/kibana/bin/kibana-encryption-keys generate

xpack.encryptedSavedObjects.encryptionKey: 5bb5e37c09fd6b05958be5a3edc82cf9

xpack.reporting.encryptionKey: b2b873b52ab8ec55171bd8141095302c

xpack.security.encryptionKey: 30670e386fab78f50b012e25cb284e88
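Kibana expects each of these encryption keys to be at least 32 characters long. If you supply your own keys instead of the generated ones, a length check such as the sketch below can catch a key that is too short before Kibana is restarted. `is_valid_encryption_key` is a hypothetical helper; it checks length only and says nothing about how random the key is.

```shell
# Hypothetical check: succeed when the key passed as $1 has at least 32 characters.
is_valid_encryption_key() {
  [ "${#1}" -ge 32 ]
}

# Example usage (left commented out so nothing runs on load):
#   is_valid_encryption_key "5bb5e37c09fd6b05958be5a3edc82cf9" && echo "key length OK"
```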

# Open port 5601 in the firewall

[root@aclab ~]# firewall-cmd --add-port=5601/tcp --permanent

[root@aclab ~]# firewall-cmd --reload

# Start Kibana at boot

systemctl enable kibana

# Start Kibana

systemctl start kibana

# Generate a Kibana enrollment token

[root@aclab ~]# /usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana

eyJ2ZXIiOiI4LjAuMSIsImFkciI6WyIxOTIuMTY4LjIxMC4xOTo5MjAwIl0sImZnciI6IjMzYjUwYTkxN2VmYjIwZjhjYzFjMmM0ZjFhMDdlY2Q2MTliZGUxOTU4MzMyOGY2MTJjMzMyODFjNzI0ODQ5NDYiLCJrZXkiOiJBemgtXzRBQnBtQ3lIN2p4MG1VdDpNN0tiNTFMNlM5NnhwU1lTdGpIOUVRIn0=
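The enrollment token is Base64-encoded JSON. Decoding the token above yields the Elasticsearch version (`ver`), the node address (`adr`, here 192.168.210.19:9200), a certificate fingerprint (`fgr`) and an API key (`key`). That layout is simply what this token decodes to, not a format this guide can vouch for across versions. The sketch below uses a hypothetical helper name and assumes GNU `base64`, as shipped with CentOS 7; it is handy for confirming which address Kibana will be told to contact.

```shell
# Hypothetical helper: print the JSON carried inside an enrollment token ($1).
decode_enrollment_token() {
  printf '%s' "$1" | base64 -d
}

# Example usage (left commented out so nothing runs on load):
#   decode_enrollment_token "<paste the token here>"
```

Because the decoded output includes an API key, treat both the token and its decoded form as secrets.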

# Test Kibana; open in a browser:

http://192.168.210.19:5601/

# Paste the enrollment token generated above into the token field

# Retrieve the verification code on the server

sh /usr/share/kibana/bin/kibana-verification-code

# Log in with the Elasticsearch username and password

elastic:5v=1nnaJ-G3Tq=sAVy-n

# Once logged in, you can change the elastic password from Kibana if needed

5 Logstash

5.1 安装Logstash

# 安装

yum install -y logstash-8.0.1-x86_64.rpm

# 查看软件安装位置

rpm -ql logstash

5.2 配置Logstash

5.2.1 rsyslog配置

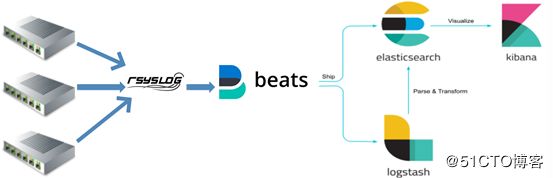

# 采集日志数据,需要有个数据源,这里我们使用 rsyslog 进行测试。

Linux 日志机制的核心是 rsyslog 守护进程,该服务负责监听 Linux下 的日志信息,并把日志信息追加到对应的日志文件中,一般在 /var/log 目录下。 它还可以把日志信息通过网络协议发送到另一台 Linux 服务器上,或者将日志存储在 MySQL 或 Oracle 等数据库中。

# 修改 rsyslog 配置:

vi /etc/rsyslog.conf

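# remote host is: name/ip:port, e.g. 192.168.0.1:514, port optional

# @@ forwards over TCP (a single @ would use UDP); the port must match the Logstash syslog input, which listens on 5044 in this guide

*.* @@192.168.210.19:5044

# Restart rsyslog

systemctl restart rsyslog

# Firewall configuration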

firewall-cmd --add-service=syslog --permanent

firewall-cmd --reload

5.2.1.1 Change the rsyslog receiving port from the default 514 to 5140

# Provides UDP syslog reception

$ModLoad imudp

$UDPServerRun 5140

# Provides TCP syslog reception

$ModLoad imtcp

$InputTCPServerRun 5140

# SELinux restricts which UDP and TCP ports syslog may use; list the allowed ones with:

semanage port -l | grep syslog

# Allow 5140 as a syslog UDP port

semanage port -a -t syslogd_port_t -p udp 5140

# Allow 5140 as a syslog TCP port

semanage port -a -t syslogd_port_t -p tcp 5140

5.2.3 Configure Logstash

# Pipeline that receives and forwards the logs

cat /etc/logstash/conf.d/syslog.conf

# syslog -> Logstash -> Elasticsearch pipeline.
input {
  syslog {
    type => "system-syslog"
    port => 5044
  }
}
output {
  elasticsearch {
    hosts => ["https://192.168.210.19:9200"]
    index => "system-syslog-%{+YYYY.MM}"
    user => "elastic"
    # Use the current password of the elastic user
    password => "Admin123"
    cacert => "/etc/logstash/certs/http_ca.crt"
  }
}

# Create a symlink so that logstash is on the PATH

[root@aclab ~]# ln -s /usr/share/logstash/bin/logstash /bin/

# Copy the Elasticsearch CA certificate; the pipeline's cacert setting points at it, so do this before testing the config

[root@aclab ~]# mkdir -p /etc/logstash/certs

[root@aclab ~]# cp /etc/elasticsearch/certs/http_ca.crt /etc/logstash/certs/

# Validate the configuration; Logstash only starts if the config file is valid

[root@aclab ~]# logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

# The Logstash API listens on the local address 127.0.0.1 by default, which is unreachable remotely, so set the listening IP in the config file:

vim /etc/logstash/logstash.yml

api.http.host: 192.168.210.19

api.http.port: 9600

5.2.2 Set permissions for Logstash

Note: When installed from the RPM package, Logstash runs as the logstash user by default, so files created as root must be handed over to that user.

chown -R logstash:logstash /var/lib/logstash /var/log/logstash /etc/logstash

# Open port 5044 in the firewall

[root@aclab ~]# firewall-cmd --add-port=5044/tcp --permanent

[root@aclab ~]# firewall-cmd --reload

# Start Logstash at boot; Logstash only starts if the config file is valid

systemctl enable logstash

# Start Logstash

systemctl start logstash

# Test Logstash

logstash -e 'input { stdin { } } output { stdout {} }'

Input: hello

{
    "host" => {
        "hostname" => "aclab.ac"
    },
    "message" => "hello",
    "event" => {
        "original" => "hello"
    },
    "@timestamp" => 2022-05-21T09:40:31.869638Z,
    "@version" => "1"
}

# Check whether the host is listening on ports 5044 and 9600

[root@aclab ~]# netstat -pantu | grep "5044\|9600"
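The same check can be scripted so that it returns an exit status instead of text to read by eye. This is a sketch under assumptions: `is_listening` is a hypothetical helper, and it expects the column layout printed by `ss -ltn` (state in the first column, local address and port in the fourth), which is the layout of the `ss` tool from the iproute package on CentOS 7. It covers TCP listeners only.

```shell
# Hypothetical helper: read `ss -ltn` output on stdin and succeed
# when some socket in LISTEN state is bound to TCP port $1.
is_listening() {
  awk -v port="$1" '
    $1 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
    END { exit !found }
  '
}

# Example usage on the server (left commented out so nothing runs on load):
#   ss -ltn | is_listening 5044 && echo "5044 is listening"
#   ss -ltn | is_listening 9600 && echo "9600 is listening"
```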

# Debug Logstash by following its service log

[root@aclab ~]# journalctl -xef -u logstash

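5.3 Fixing Logstash being unable to listen on port 514

On Linux, only root can bind ports below 1024 by default, so Logstash must run as root to listen on 514 directly.

1. Edit the Logstash startup options

vim /etc/logstash/startup.options

LS_USER=root

LS_GROUP=root

2. Reinstall the service so the new options take effect

/usr/share/logstash/bin/system-install /etc/logstash/startup.options systemd

3. Restart Logstash

systemctl restart logstash

4. Check that port 514 is being listened on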

# netstat -anlp | grep 514

6 filebeat (needed only for reading local log files)

6.1 Install filebeat

# Install

yum install -y filebeat-8.0.1-x86_64.rpm

# List the files installed by the package

rpm -ql filebeat

6.2 Configure filebeat

# nano /etc/filebeat/filebeat.yml

# ========= Filebeat input configuration =========
filebeat.inputs:
- type: log
  enabled: true
  # Check the file for new lines every 5 seconds
  backoff: "5s"
  # Whether to start reading from the end of the file
  tail_files: false
  # Location of the logs to collect (sample paths; replace with your own)
  paths:
    - D:/web/openweb/Log/2021/*.log
  # Custom field; Logstash uses it to route events to different indices in ES
  fields:
    filetype: apiweb_producelog
# Second input; add further inputs in the same way
- type: log
  enabled: true
  backoff: "5s"
  tail_files: false
  paths:
    - D:/web/openweb/Logs/Warn/*.log
  fields:
    filetype: apiweb_supplierlog
# ========= Filebeat modules =========
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
setup.template.settings:
  index.number_of_shards: 1
setup.kibana:
# ========= Filebeat output configuration =========
output.redis:
  enabled: true
  # Redis address
  hosts: ["192.168.1.103:6379"]
  # Redis password; omit this setting if there is none
  password: "123456"
  # Redis key the events are stored under
  key: apilog
  # Redis database number
  db: 1
  # Redis data type used to store the events
  datatype: list
# ========= Processors =========
processors:
  - add_host_metadata:
      when.not.contains.tags: forwarded
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - add_kubernetes_metadata: ~

7 Reading network device logs with ELK

7.1 Method 1: ELK + filebeat + rsyslog

Reference: 使用ELK收集网络设备日志的案例 (运维开发故事, CSDN blog)

7.1.1 rsyslog configuration

# Create the directories that will hold the logs

[root@aclab ~]# mkdir -p /logdata/h3c/

[root@aclab ~]# mkdir /logdata/huawei/

[root@aclab ~]# mkdir /logdata/cisco/

# Configure where received logs are written

[root@aclab ~]# egrep -v "^#|^$" /etc/rsyslog.conf

$ModLoad imudp

$UDPServerRun 514

$ModLoad imtcp

$InputTCPServerRun 514

$WorkDirectory /var/lib/rsyslog

$ActionFileDefaultTemplate RSYSLOG_TraditionalFileFormat

$IncludeConfig /etc/rsyslog.d/*.conf

$OmitLocalLogging on

$IMJournalStateFile imjournal.state

*.info;mail.none;authpriv.none;cron.none;local6.none;local5.none;local4.none /var/log/messages

$template h3c,"/logdata/h3c/%FROMHOST-IP%.log"

local6.* ?h3c

$template huawei,"/logdata/huawei/%FROMHOST-IP%.log"

local5.* ?huawei

$template cisco,"/logdata/cisco/%FROMHOST-IP%.log"

local4.* ?cisco

Note:

*.info;mail.none;authpriv.none;cron.none;local6.none;local5.none;local4.none /var/log/messages

# local6.none;local5.none;local4.none are not present by default. Without them, network device logs are written to /var/log/messages as well as to their own files. The facility used by each vendor in this configuration:

/logdata/huawei --- local5

/logdata/h3c --- local6

/logdata/cisco --- local4
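The facility a device uses is visible in the number at the start of every syslog message. In the syslog protocol the `<PRI>` value is facility × 8 + severity, and local0 is facility 16, so local4, local5 and local6 are facilities 20, 21 and 22. The sketch below uses a hypothetical helper name and splits a PRI value back into its two parts, which helps when a device's logs land in the wrong directory.

```shell
# Hypothetical helper: split a syslog PRI value ($1) into facility and severity.
# PRI = facility * 8 + severity.
decode_pri() {
  echo "facility=$(( $1 / 8 )) severity=$(( $1 % 8 ))"
}

# Example: a message starting with <165> is facility 20 (local4), severity 5 (Notice).
#   decode_pri 165
```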

7.1.2 Network device configuration

# Huawei

info-center loghost source Vlanif99

info-center loghost your_ip facility local5

# H3C

info-center loghost source Vlan-interface99

info-center loghost your_ip facility local6

# CISCO

(config)#logging on

(config)#logging your_ip

(config)#logging facility local4

(config)#logging source-interface e0

# Ruijie

logging buffered warnings

logging source interface VLAN 99

logging facility local6

logging server your_ip

7.1.3 Edit the filebeat configuration file

[root@aclab ~]# egrep -v "^#|^$" /etc/filebeat/filebeat.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /logdata/huawei/*.log
  tags: ["huawei"]
  include_lines: ['Failed','failed','error','ERROR','\bDOWN\b','\bdown\b','\bUP\b','\bup\b']
  drop_fields:
    fields: ["beat","input_type","source","offset","prospector"]
- type: log
  paths:
    - /logdata/h3c/*.log
  tags: ["h3c"]
  include_lines: ['Failed','failed','error','ERROR','\bDOWN\b','\bdown\b','\bUP\b','\bup\b']
  drop_fields:
    fields: ["beat","input_type","source","offset","prospector"]
- type: log
  paths:
    - /logdata/cisco/*.log
  tags: ["cisco"]
  include_lines: ['Failed','failed','error','ERROR','\bDOWN\b','\bdown\b','\bUP\b','\bup\b']
  drop_fields:
    fields: ["beat","input_type","source","offset","prospector"]
setup.template.settings:
  index.number_of_shards: 3
output.logstash:
  hosts: ["your_ip:5044"]

processors:

- add_host_metadata: ~

- add_cloud_metadata: ~
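Before relying on `include_lines`, it can help to preview which lines of an existing log file would survive the filter. The sketch below approximates the list used in the inputs above with a single `grep -E` expression. It is only an approximation: `preview_include_lines` is a hypothetical helper, `\b` here relies on GNU grep, and Filebeat evaluates its patterns with its own regular expression engine, so edge cases may differ.

```shell
# Hypothetical helper: read log lines on stdin and print those matching
# any of the include_lines patterns from the filebeat inputs.
preview_include_lines() {
  grep -E 'Failed|failed|error|ERROR|\bDOWN\b|\bdown\b|\bUP\b|\bup\b'
}

# Example usage on the server (left commented out so nothing runs on load):
#   cat /logdata/huawei/*.log | preview_include_lines | head
```

The word boundaries matter: a line containing only "backup" is not kept, because "up" is not a separate word there.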

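7.1.4 Logstash configuration

# Note: the beats input below also listens on port 5044. All files in /etc/logstash/conf.d are loaded into the same pipeline by default, so remove the syslog.conf pipeline from section 5.2.3 or move it to another port first.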

[root@aclab ~]# egrep -v "^#|^$" /etc/logstash/conf.d/networklog.conf

input {

beats {

port => 5044

}

}

filter {

if "cisco" in [tags] {

grok {

match => { "message" => "<%{BASE10NUM:syslog_pri}>%{NUMBER:log_sequence}: .%{SYSLOGTIMESTAMP:timestamp}: %%%{DATA:facility}-%{POSINT:severity}-%{CISCO_REASON:mnemonic}: %{GREEDYDATA:message}" }

match => { "message" => "<%{BASE10NUM:syslog_pri}>%{NUMBER:log_sequence}: %{SYSLOGTIMESTAMP:timestamp}: %%{DATA:facility}-%{POSINT:severity}-%{CISCO_REASON:mnemonic}: %{GREEDYDATA:message}" }

add_field => {"severity_code" => "%{severity}"}

overwrite => ["message"]

}

}

else if "h3c" in [tags] {

grok {

match => { "message" => "<%{BASE10NUM:syslog_pri}>%{SYSLOGTIMESTAMP:timestamp} %{YEAR:year} %{DATA:hostname} %%%{DATA:vvmodule}/%{POSINT:severity}/%{DATA:digest}: %{GREEDYDATA:message}" }

remove_field => [ "year" ]

add_field => {"severity_code" => "%{severity}"}

overwrite => ["message"]

}

}

else if "huawei" in [tags] {

grok {

match => { "message" => "<%{BASE10NUM:syslog_pri}>%{SYSLOGTIMESTAMP:timestamp} %{DATA:hostname} %%%{DATA:ddModuleName}/%{POSINT:severity}/%{DATA:Brief}:%{GREEDYDATA:message}"}

match => { "message" => "<%{BASE10NUM:syslog_pri}>%{SYSLOGTIMESTAMP:timestamp} %{DATA:hostname} %{DATA:ddModuleName}/%{POSINT:severity}/%{DATA:Brief}:%{GREEDYDATA:message}"}

remove_field => [ "timestamp" ]

add_field => {"severity_code" => "%{severity}"}

overwrite => ["message"]

}

}

mutate {

gsub => [

"severity", "0", "Emergency",

"severity", "1", "Alert",

"severity", "2", "Critical",

"severity", "3", "Error",

"severity", "4", "Warning",

"severity", "5", "Notice",

"severity", "6", "Informational",

"severity", "7", "Debug"

]

}

}

output{

elasticsearch {

hosts => ["https://192.168.210.19:9200"]

index => "system-syslog-%{+YYYY.MM}"

user => "elastic"

password => "Admin123"

cacert => "/etc/logstash/certs/http_ca.crt"

}

}
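The `mutate`/`gsub` block in the filter rewrites the numeric severity into its name, while `severity_code` keeps the original digit. Because `gsub` is a substring replacement applied pair by pair, this works only because the severity is a single digit and none of the replacement names contains a digit. The same table, written as a lookup, looks like the sketch below; `severity_name` is a hypothetical helper for checking values by hand, not something Logstash calls.

```shell
# Hypothetical helper: map a syslog severity digit ($1) to the name
# used by the gsub table in the Logstash filter.
severity_name() {
  case "$1" in
    0) echo "Emergency" ;;
    1) echo "Alert" ;;
    2) echo "Critical" ;;
    3) echo "Error" ;;
    4) echo "Warning" ;;
    5) echo "Notice" ;;
    6) echo "Informational" ;;
    7) echo "Debug" ;;
    *) echo "unknown" ;;
  esac
}

# Example: severity_name 3 prints Error
```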

7.2 Method 2: ELK

7.2.1 Configure logging on the network devices

# Cisco switch

logging host Your_ip transport udp port 5002

# H3C

info-center enable

info-center source default channel 2 trap state off

# Required; otherwise alert-level logs that do not match the configured level show up

info-center loghost Your_ip port 5003

# Huawei

info-center enable

info-center loghost Your_ip

info-center timestamp log short-date

info-center timestamp trap short-date

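7.2.2 Configure Logstash to parse network device logs

Logstash configuration:

The log port cannot be changed on Huawei devices, so the Huawei input listens on the default port 514 (see section 5.3 for binding that port).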

input{

tcp {

port => 5002

type => "cisco"

}

udp {

port => 514

type => "huawei"

}

udp {

port => 5002

type => "cisco"

}

udp {

port => 5003

type => "h3c"

}

}

filter {

if [type] == "cisco" {

grok {

match => { "message" => "<%{BASE10NUM:syslog_pri}>%{NUMBER:log_sequence}: .%{SYSLOGTIMESTAMP:timestamp}: %%%{DATA:facility}-%{POSINT:severity}-%{CISCO_REASON:mnemonic}: %{GREEDYDATA:message}" }

match => { "message" => "<%{BASE10NUM:syslog_pri}>%{NUMBER:log_sequence}: %{SYSLOGTIMESTAMP:timestamp}: %%{DATA:facility}-%{POSINT:severity}-%{CISCO_REASON:mnemonic}: %{GREEDYDATA:message}" }

add_field => {"severity_code" => "%{severity}"}

overwrite => ["message"]

}

}

else if [type] == "h3c" {

grok {

match => { "message" => "<%{BASE10NUM:syslog_pri}>%{SYSLOGTIMESTAMP:timestamp} %{YEAR:year} %{DATA:hostname} %%%{DATA:vvmodule}/%{POSINT:severity}/%{DATA:digest}: %{GREEDYDATA:message}" }

remove_field => [ "year" ]

add_field => {"severity_code" => "%{severity}"}

overwrite => ["message"]

}

}

else if [type] == "huawei" {

grok {

match => { "message" => "<%{BASE10NUM:syslog_pri}>%{SYSLOGTIMESTAMP:timestamp} %{DATA:hostname} %%%{DATA:ddModuleName}/%{POSINT:severity}/%{DATA:Brief}:%{GREEDYDATA:message}"}

match => { "message" => "<%{BASE10NUM:syslog_pri}>%{SYSLOGTIMESTAMP:timestamp} %{DATA:hostname} %{DATA:ddModuleName}/%{POSINT:severity}/%{DATA:Brief}:%{GREEDYDATA:message}"}

remove_field => [ "timestamp" ]

add_field => {"severity_code" => "%{severity}"}

overwrite => ["message"]

}

}

mutate {

gsub => [

"severity", "0", "Emergency",

"severity", "1", "Alert",

"severity", "2", "Critical",

"severity", "3", "Error",

"severity", "4", "Warning",

"severity", "5", "Notice",

"severity", "6", "Informational",

"severity", "7", "Debug"

]

}

}

output{

elasticsearch {

hosts => ["https://Your_ip:9200"]

index => "system-syslog-%{+YYYY.MM}"

user => "elastic"

password => "Admin123"

cacert => "/etc/logstash/certs/http_ca.crt"

}

}

8 Reference blogs

ELK 架构之 Logstash 和 Filebeat 安装配置 - 田园里的蟋蟀 - 博客园 (cnblogs.com)
