NEURAL NETWORKS, PART 1: BACKGROUND

NEURAL NETWORKS, PART 1: BACKGROUND

Artificial neural networks (NN for short) are practical, elegant, and mathematically fascinating models for machine learning. They are inspired by the central nervous systems of humans and animals – smaller processing units (neurons) are connected together to form a complex network that is capable of learning and adapting.

The idea of such neural networks is not new. McCulloch-Pitts (1943) described binary threshold neurons already back in 1940’s. Rosenblatt (1958) popularised the use of perceptrons, a specific type of neurons, as very flexible tools for performing a variety of tasks. The rise of neural networks was halted after Minsky and Papert (1969) published a book about the capabilities of perceptrons, and mathematically proved that they can’t really do very much. This result was quickly generalised to all neural networks, whereas it actually applied only to a specific type of perceptrons, leading to neural networks being disregarded as a viable machine learning method.

In recent years, however, the neural network has made an impressive comeback. Research in the area has become much more active, and neural networks have been found to be more than capable learners, breaking state-of-the-art results on a wide variety of tasks. This has been substantially helped by developments in computing hardware, allowing us to train very large complex networks in reasonable time. In order to learn more about neural networks, we must first understand the concept of vector space, and this is where we’ll start.

A vector space is a space where we can represent the position of a specific point or object as a vector (a sequence of numbers). You’re probably familiar with 2 or 3-dimensional coordinate systems, but we can easily extend this to much higher dimensions (think hundreds or thousands). However, it’s quite difficult to imagine a 1000-dimensional space, so we’ll stick to 2-dimensional illustations.

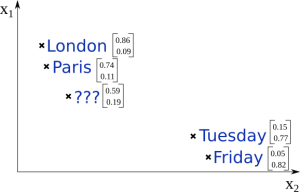

In the graph below, we have placed 4 objects in a 2-dimensional space, and each of them has a 2-dimensional vector that represents their position in this space. For machine learning and classification we make the assumption that similar objects have similar coordinates and are therefore positioned close to each other. This is true in our example, as cities are positioned in the upper left corner, and days-of-the-week are positioned a bit further in the lower right corner.

Let’s say we now get a new object (see image below) and all we know are its coordinates. What do you think, is this object a city or a day-of-the-week? It’s probably a city, because it is positioned much closer to other existing cities we already know about.

This is the kind of reasoning that machine learning tries to perform. Our example was very simple, but this problem gets more difficult when dealing with thousands of dimensions and millions of noisy datapoints.

In a traditional machine learning context these vectors are given as input to the classifier, both at training and testing time. However, there exist methods of representation learning where these vectors are learned automatically, together with the model.

Now that we know about vector spaces, it’s time to look at how the neuron works.

References

- McCulloch, Warren S., and Walter Pitts. “A logical calculus of the ideas immanent in nervous activity.” The Bulletin of Mathematical Biophysics 5.4 (1943): 115-133.

- Minsky, Marvin, and Papert Seymour. “Perceptrons.” (1969).

- Rosenblatt, Frank. “The perceptron: a probabilistic model for information storage and organization in the brain.” Psychological review 65.6 (1958): 386.

NEURAL NETWORKS, PART 1: BACKGROUND的更多相关文章

- 【转】Artificial Neurons and Single-Layer Neural Networks

原文:written by Sebastian Raschka on March 14, 2015 中文版译文:伯乐在线 - atmanic 翻译,toolate 校稿 This article of ...

- A Beginner's Guide To Understanding Convolutional Neural Networks(转)

A Beginner's Guide To Understanding Convolutional Neural Networks Introduction Convolutional neural ...

- Hacker's guide to Neural Networks

Hacker's guide to Neural Networks Hi there, I'm a CS PhD student at Stanford. I've worked on Deep Le ...

- 提高神经网络的学习方式Improving the way neural networks learn

When a golf player is first learning to play golf, they usually spend most of their time developing ...

- (转)A Beginner's Guide To Understanding Convolutional Neural Networks

Adit Deshpande CS Undergrad at UCLA ('19) Blog About A Beginner's Guide To Understanding Convolution ...

- 论文笔记之:Learning Multi-Domain Convolutional Neural Networks for Visual Tracking

Learning Multi-Domain Convolutional Neural Networks for Visual Tracking CVPR 2016 本文提出了一种新的CNN 框架来处理 ...

- 神经网络指南Hacker's guide to Neural Networks

Hi there, I'm a CS PhD student at Stanford. I've worked on Deep Learning for a few years as part of ...

- NEURAL NETWORKS, PART 2: THE NEURON

NEURAL NETWORKS, PART 2: THE NEURON A neuron is a very basic classifier. It takes a number of input ...

- [CVPR2015] Is object localization for free? – Weakly-supervised learning with convolutional neural networks论文笔记

p.p1 { margin: 0.0px 0.0px 0.0px 0.0px; font: 13.0px "Helvetica Neue"; color: #323333 } p. ...

随机推荐

- Linux发送监控指标到内部邮箱

数据库的健康监控是个很重要的工作.重要的指标\KPI监控结果会有专门的採集.监控.告警系统来做相关事情. 而一些不是很重要的或者还在设计和调试阶段的相关指标,我仅仅是想发送到我自己邮箱,本文就针对在s ...

- careercup-栈与队列 3.5

3.5 实现一个MyQueue类,该类用两个栈来实现一个队列. 解答 队列是先进先出的数据结构(FIFO),栈是先进后出的数据结构(FILO), 用两个栈来实现队列的最简单方式是:进入队列则往第一个栈 ...

- spring beans源码解读之--XmlBeanFactory

导读: XmlBeanFactory继承自DefaultListableBeanFactory,扩展了从xml文档中读取bean definition的能力.从本质上讲,XmlBeanFactory等 ...

- ubuntu 14.04 编译安装 nginx

下载源码包 nginx 地址:http://nginx.org/en/download.html 下载nginx 1.4.7 编译前先安装两个包: 直接编译安装会碰到缺少pcre等问题,这时候只要到 ...

- 删除mysql重复记录的办法

网上有很多的办法,但是大多数都是通过临时表的办法,其实你是可以用一句简单的sql就可以做到: alter ignore table SOMETABLE add primary key(fields n ...

- 关于css的兼容

这篇随笔为了方便自己后期的学习和查找,用来记录平时遇到的一些问题,后期会陆续更新 1.背景图 :background-position属性,在ff下不支持该属性的拆分写法(background-pos ...

- 如何在 Debian / Ubuntu 服务器上架设 L2TP / IPSec VPN

本站的 Rio 最近在一台 Ubuntu 和一台 Debian 主机上配置了 L2TP / IPSec VPN,并在自己的博客上做了记录.原文以英文写就,我把它大致翻译了一下,结合我和 Rio 在设置 ...

- 关于jQuery,$(":button") 中的冒号是什么意思?

$(":button") 表示匹配所有的按钮.$("input:checked")表示匹配所有选中的被选中元素(复选框.单选框等,不包括select中的opti ...

- 阿里云服务器(Win 2008 R2 Standard)安装MSSM 2008 R2之1033和2052问题

最近在给租用的阿里云服务器安装Sql Server 2008 R2 Express时,遭遇下面的问题.经过几番折腾后,终于解决问题,完成安装,这里总结分享我的解决方法,希望能给遇到相同问题的小伙伴们节 ...

- AngularJS track by $index引起的思考

今天写了一段程序,只是一个简答的table数据绑定,但是绑定select的数据之后,发现ng-change事件失去了效果,不知道什么原因. 主要用到的代码如下: <div id="ri ...