High availability with Heartbeat + DRBD + NFS

I. Environment

nfsserver01: 192.168.127.101, heartbeat link: 192.168.42.101, CentOS 7.3

nfsserver02: 192.168.127.102, heartbeat link: 192.168.42.102, CentOS 7.3

VIP: 192.168.127.103

nfsclient01: 192.168.127.100, CentOS 7.3

II. How it works

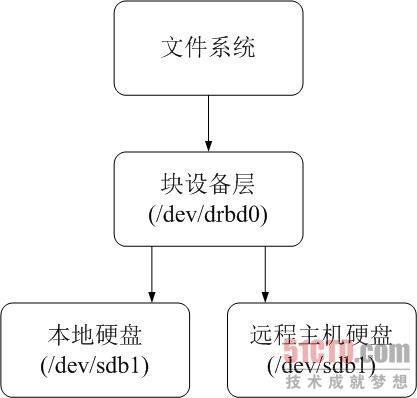

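1. DRBD (Distributed Replicated Block Device) is a Linux-based software component that mirrors a block device between nodes over the network. The primary node accepts writes and sends the written data to the secondary node.

Supported backing devices: disks/partitions, LVM logical volumes, software RAID devices, and so on.

Replication modes:

Protocol A: asynchronous replication. A write is considered complete as soon as the local disk write has finished and the replication packet has been placed in the send queue. If a node fails, data may be lost, because data destined for the remote node may still be in the send queue. The data on the failover node is consistent but not up to date. This protocol is typically used between geographically separated sites.

Protocol B: memory-synchronous (semi-synchronous) replication. A write on the primary is considered complete as soon as the local disk write has finished and the replication packet has reached the peer node. Data can be lost if both nodes fail at the same time, because data in transit may not yet have been committed to the peer's disk.

Protocol C: synchronous replication. A write is considered complete only after both the local and the remote disk have confirmed it. The failure of a single node therefore loses no data, which makes this the most common choice for cluster nodes, but I/O throughput depends on network bandwidth.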
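
The difference between the three protocols comes down to the point at which the primary reports a write as complete. As a quick reference, the small helper below restates that point per protocol. The function name `drbd_write_ack_point` is made up for this illustration and is not part of DRBD.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a DRBD tool): print the point at which the primary
# reports a write as complete for each replication protocol described above.
drbd_write_ack_point() {
    case "$1" in
        A) echo "local disk write done, packet placed in local send queue" ;;
        B) echo "local disk write done, packet received by peer" ;;
        C) echo "local and remote disk writes both confirmed" ;;
        *) echo "unknown protocol: $1" >&2; return 1 ;;
    esac
}

# This article configures protocol C.
drbd_write_ack_point C
```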

III. Installation

1. DRBD

Download: http://oss.linbit.com/drbd

On nfsserver01:

    [root@nfsserver01 ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.127.101 nfsserver01
    192.168.127.102 nfsserver02
    [root@nfsserver01 ~]# fdisk -l|grep sdb
    Disk /dev/sdb: 1073 MB, 1073741824 bytes, 2097152 sectors

    [root@nfsserver01 ~]# crontab -e
    */5 * * * * ntpdate cn.pool.ntp.org
    [root@nfsserver01 ~]# crontab -l
    */5 * * * * ntpdate cn.pool.ntp.org
    [root@nfsserver01 ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
    [root@nfsserver01 ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm    #### configure the ELRepo repository
    Retrieving http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm
    Retrieving http://elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:elrepo-release-7.0-3.el7.elrepo  ################################# [100%]
    [root@nfsserver01 ~]# yum install -y kmod-drbd84 drbd84-utils kernel*    #### install DRBD, then reboot

    [root@nfsserver01 ~]# cat /etc/drbd.conf
    # You can find an example in /usr/share/doc/drbd.../drbd.conf.example

    include "drbd.d/global_common.conf";
    include "drbd.d/*.res";
    [root@nfsserver01 ~]# cp /etc/drbd.d/global_common.conf /etc/drbd.d/global_common.conf.ori    #### global configuration file
    [root@nfsserver01 ~]# vim /etc/drbd.d/global_common.conf
    # DRBD is the result of over a decade of development by LINBIT.
    # In case you need professional services for DRBD or have
    # feature requests visit http://www.linbit.com

    global {
        usage-count no;

        # Decide what kind of udev symlinks you want for "implicit" volumes
        # (those without explicit volume <vnr> {} block, implied vnr=0):
        # /dev/drbd/by-resource/<resource>/<vnr>   (explicit volumes)
        # /dev/drbd/by-resource/<resource>         (default for implicit)
        udev-always-use-vnr; # treat implicit the same as explicit volumes

        # minor-count dialog-refresh disable-ip-verification
        # cmd-timeout-short 5; cmd-timeout-medium 121; cmd-timeout-long 600;
    }

    common {
        protocol C;
        handlers {
            # These are EXAMPLE handlers only.
            # They may have severe implications,
            # like hard resetting the node under certain circumstances.
            # Be careful when choosing your poison.

            pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";
            pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";
            local-io-error "/usr/lib/drbd/notify-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f";
            # fence-peer "/usr/lib/drbd/crm-fence-peer.sh";
            # split-brain "/usr/lib/drbd/notify-split-brain.sh root";
            # out-of-sync "/usr/lib/drbd/notify-out-of-sync.sh root";
            # before-resync-target "/usr/lib/drbd/snapshot-resync-target-lvm.sh -p 15 -- -c 16k";
            # after-resync-target /usr/lib/drbd/unsnapshot-resync-target-lvm.sh;
            # quorum-lost "/usr/lib/drbd/notify-quorum-lost.sh root";
        }

        startup {
            # wfc-timeout degr-wfc-timeout outdated-wfc-timeout wait-after-sb
        }

        options {
            # cpu-mask on-no-data-accessible

            # RECOMMENDED for three or more storage nodes with DRBD 9:
            # quorum majority;
            # on-no-quorum suspend-io | io-error;
        }

        disk {
            on-io-error detach;
            # size on-io-error fencing disk-barrier disk-flushes
            # disk-drain md-flushes resync-rate resync-after al-extents
            # c-plan-ahead c-delay-target c-fill-target c-max-rate
            # c-min-rate disk-timeout
        }

        net {
            # protocol timeout max-epoch-size max-buffers
            # connect-int ping-int sndbuf-size rcvbuf-size ko-count
            # allow-two-primaries cram-hmac-alg shared-secret after-sb-0pri
            # after-sb-1pri after-sb-2pri always-asbp rr-conflict
            # ping-timeout data-integrity-alg tcp-cork on-congestion
            # congestion-fill congestion-extents csums-alg verify-alg
            # use-rle
        }
        syncer {
            rate 1024M;
        }
    }

    [root@nfsserver01 ~]# vim /etc/drbd.d/nfsserver.res
    resource nfsserver {
        protocol C;
        meta-disk internal;
        device /dev/drbd1;
        syncer {
            verify-alg sha1;
        }
        net {
            allow-two-primaries;
        }
        on nfsserver01 {
            disk /dev/sdb;
            address 192.168.127.101:7789;
        }
        on nfsserver02 {
            disk /dev/sdb;
            address 192.168.127.102:7789;
        }
    }

    [root@nfsserver01 ~]# scp -rp /etc/drbd.d/* nfsserver02:/etc/drbd.d/
    The authenticity of host 'nfsserver02 (192.168.127.102)' can't be established.
    ECDSA key fingerprint is 47:2b:8b:e2:56:38:a5:45:6f:1c:c7:62:5b:de:9d:20.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'nfsserver02,192.168.127.102' (ECDSA) to the list of known hosts.
    root@nfsserver02's password:
    global_common.conf        100% 2621     2.6KB/s   00:00
    global_common.conf.ori    100% 2563     2.5KB/s   00:00
    nfsserver.res             100%  261     0.3KB/s   00:00

    [root@nfsserver01 ~]# drbdadm create-md nfsserver    ##### initialize the metadata
    initializing activity log
    initializing bitmap (32 KB) to all zero
    Writing meta data...
    New drbd meta data block successfully created

    [root@nfsserver01 /]# modprobe drbd    ##### load the kernel module
    [root@nfsserver01 /]# drbdadm up nfsserver    ### bring up the nfsserver resource
    [root@nfsserver01 /]# drbdadm primary nfsserver --force    ### make nfsserver01 the primary node
    [root@nfsserver01 /]# systemctl start drbd
    [root@nfsserver01 /]# systemctl enable drbd
    Created symlink from /etc/systemd/system/multi-user.target.wants/drbd.service to /usr/lib/systemd/system/drbd.service.
    [root@nfsserver01 /]# drbdadm cstate nfsserver
    Connected
    [root@nfsserver01 /]# drbdadm role nfsserver
    Primary
    [root@nfsserver01 /]# drbdadm dstate nfsserver
    UpToDate/UpToDate

#########################################

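Note: the disk on the local node and on the peer node can each be in one of the following states:

Diskless: no local block device is assigned to DRBD. Either no device is available, the device was detached manually with drbdadm, or it was detached automatically after a lower-level I/O error.

Attaching: transient state while the metadata is being read.

Failed: transient state that follows an I/O error reported by the local block device. The next state is Diskless.

Negotiating: transient state while an attach is carried out on a DRBD device that is already connected.

Inconsistent: the data is inconsistent. Both nodes are in this state immediately after a new resource is created (before the initial full synchronization). One node (the synchronization target) is also in this state while a synchronization is running.

Outdated: the resource data is consistent but out of date.

DUnknown: reported for the peer disk when no network connection is available.

Consistent: the data of a node without a connection is consistent. Once the connection is established, DRBD decides whether the data is UpToDate or Outdated.

UpToDate: the data is consistent and current. This is the normal state.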

#############################################
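
The states above can be checked in a script. The sketch below assumes the `local/peer` output format of `drbdadm dstate` shown earlier and treats only `UpToDate/UpToDate` as healthy. The function names `dstate_is_healthy` and `check_dstate` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: classify the "local/peer" string printed by
# `drbdadm dstate <resource>`.
dstate_is_healthy() {
    [ "$1" = "UpToDate/UpToDate" ]
}

check_dstate() {
    local state="$1"
    if dstate_is_healthy "$state"; then
        echo "OK: $state"
    else
        # Split at the slash: text before it is the local disk, after it the peer.
        echo "DEGRADED: local=${state%%/*} peer=${state##*/}"
        return 1
    fi
}

# On a real node, pass in the live value:
#   check_dstate "$(drbdadm dstate nfsserver)"
check_dstate "UpToDate/UpToDate"
check_dstate "UpToDate/DUnknown" || true
```

A non-zero return value makes the function usable as a guard before a planned switchover.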

    [root@nfsserver01 /]# drbd-overview
    NOTE: drbd-overview will be deprecated soon.
    Please consider using drbdtop.

     1:nfsserver/0  Connected(2*) Primar/Second UpToDa/UpToDa /share xfs 1021M 33M 989M 4%

####### Repeat the same steps on nfsserver02, but do not promote it to primary; both nodes are secondary by default

    [root@nfsserver01 ~]# mkfs.xfs /dev/drbd1
    meta-data=/dev/drbd1             isize=512    agcount=4, agsize=65532 blks
             =                       sectsz=512   attr=2, projid32bit=1
             =                       crc=1        finobt=0, sparse=0
    data     =                       bsize=4096   blocks=262127, imaxpct=25
             =                       sunit=0      swidth=0 blks
    naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
    log      =internal log           bsize=4096   blocks=855, version=2
             =                       sectsz=512   sunit=0 blks, lazy-count=1
    realtime =none                   extsz=4096   blocks=0, rtextents=0
    [root@nfsserver01 ~]# mount /dev/drbd1 /share/
    [root@nfsserver01 ~]# df -h
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda2        18G  1.7G   17G  10% /
    devtmpfs         99M     0   99M   0% /dev
    tmpfs           110M     0  110M   0% /dev/shm
    tmpfs           110M  4.6M  105M   5% /run
    tmpfs           110M     0  110M   0% /sys/fs/cgroup
    /dev/sda1       197M  149M   49M  76% /boot
    tmpfs            22M     0   22M   0% /run/user/0
    /dev/drbd1     1021M   33M  989M   4% /share

####### Verification

    ##### nfsserver01
    [root@nfsserver01 /]# cd /share/
    [root@nfsserver01 share]# touch {1..9}.txt
    [root@nfsserver01 share]# ls
    1.txt  2.txt  3.txt  4.txt  5.txt  6.txt  7.txt  8.txt  9.txt
    [root@nfsserver01 /]# umount /share/
    [root@nfsserver01 /]# drbdadm secondary nfsserver
    ####### nfsserver02
    [root@nfsserver02 /]# drbdadm primary nfsserver
    [root@nfsserver02 /]# mount /dev/drbd1 /share/
    [root@nfsserver02 /]# ls /share/
    1.txt  2.txt  3.txt  4.txt  5.txt  6.txt  7.txt  8.txt  9.txt
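
The manual switchover above follows a fixed order: unmount and demote on the old primary, then promote and mount on the peer. A minimal sketch of that sequence follows. The resource name, device and mount point are the ones used in this article, while the `run`, `demote_node` and `promote_node` helpers and the `DRY_RUN` switch are assumptions added for illustration. With the default `DRY_RUN=1` the script only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch of the manual switchover. DRY_RUN=1 (the default here) only prints
# the commands; set DRY_RUN=0 on a real node to execute them.
DRY_RUN="${DRY_RUN:-1}"
RES="nfsserver"
DEV="/dev/drbd1"
MNT="/share"

# Hypothetical wrapper: print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Run on the current primary: release the filesystem, then demote.
demote_node() {
    run umount "$MNT" && run drbdadm secondary "$RES"
}

# Run on the peer afterwards: promote, then mount.
promote_node() {
    run drbdadm primary "$RES" && run mount "$DEV" "$MNT"
}

demote_node
promote_node
```

The order matters: the filesystem has to be unmounted before the old primary can be demoted, and the peer should be promoted only after that.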

2. Installing Heartbeat

Reference: https://www.cnblogs.com/suffergtf/p/9525416.html

######## On nfsserver01

    [root@nfsserver01 ~]# ssh-keygen
    [root@nfsserver01 ~]# ssh-copy-id nfsserver02
    [root@nfsserver01 ~]# yum groupinstall "Development tools"    #### install the development tools
    [root@nfsserver01 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo    #### add the EPEL repository
    [root@nfsserver01 ~]# yum -y install glib2-devel libtool-ltdl-devel net-snmp-devel bzip2-devel ncurses-devel openssl-devel libtool \
    libxml2-devel gettext bison flex zlib-devel mailx which libxslt docbook-dtds docbook-style-xsl PyXML shadow-utils opensp autoconf automake bzip2
    [root@nfsserver01 ~]# yum install -y glib2-devel libxml2 libxml2-devel bzip2-devel e2fsprogs-devel libxslt-devel libtool-ltdl-devel \
    make wget docbook-dtds docbook-style-xsl bzip2-devel asciidoc libuuid-devel psmisc    #### install the Heartbeat dependencies
    [root@nfsserver01 ~]# groupadd haclient
    [root@nfsserver01 ~]# useradd -g haclient hacluster -M -s /sbin/nologin    ### add the default Heartbeat user and group
    [root@nfsserver01 ~]# cd /server/tools/
    [root@nfsserver01 tools]# tar -jxf 0a7add1d9996.tar.bz2
    [root@nfsserver01 tools]# cd Reusable-Cluster-Components-glue--0a7add1d9996/
    [root@nfsserver01 Reusable-Cluster-Components-glue--0a7add1d9996]# ./autogen.sh
    ...
    Now run ./configure
    [root@nfsserver01 Reusable-Cluster-Components-glue--0a7add1d9996]# ./configure --prefix=/application/heartbeat
    [root@nfsserver01 Reusable-Cluster-Components-glue--0a7add1d9996]# make && make install
    [root@nfsserver01 Reusable-Cluster-Components-glue--0a7add1d9996]# cd ..
    [root@nfsserver01 tools]# rz
    [root@nfsserver01 tools]# tar xf resource-agents-3.9.6.tar.gz
    [root@nfsserver01 tools]# cd resource-agents-3.9.6
    [root@nfsserver01 resource-agents-3.9.6]# ./autogen.sh
    [root@nfsserver01 resource-agents-3.9.6]# export CFLAGS="$CFLAGS -I/application/heartbeat/include -L/application/heartbeat/lib"
    [root@nfsserver01 resource-agents-3.9.6]# ./configure --prefix=/application/heartbeat/
    [root@nfsserver01 resource-agents-3.9.6]# ln -s /application/heartbeat/lib/* /lib/
    [root@nfsserver01 resource-agents-3.9.6]# ln -s /application/heartbeat/lib/* /lib64/
    [root@nfsserver01 resource-agents-3.9.6]# make && make install
    [root@nfsserver01 tools]# tar -jxf 958e11be8686.tar.bz2
    [root@nfsserver01 tools]# cd Heartbeat-3-0-958e11be8686/
    [root@nfsserver01 Heartbeat-3-0-958e11be8686]# ./bootstrap
    [root@nfsserver01 Heartbeat-3-0-958e11be8686]# ./configure --prefix=/application/heartbeat/
    [root@nfsserver01 Heartbeat-3-0-958e11be8686]# vim /application/heartbeat/include/heartbeat/glue_config.h
    #define HA_HBCONF_DIR "/application/heartbeat/etc/ha.d/"    ######### delete this line (the last line of the file)
    [root@nfsserver01 Heartbeat-3-0-958e11be8686]# make && make install
    [root@nfsserver01 heartbeat]# cd /application/heartbeat/share/doc/heartbeat/
    [root@nfsserver01 heartbeat]# cp -a ha.cf authkeys haresources /application/heartbeat/etc/ha.d/
    [root@nfsserver01 heartbeat]# chmod 600 /application/heartbeat/etc/ha.d/authkeys
    [root@nfsserver01 heartbeat]# ln -svf /application/heartbeat/lib64/heartbeat/plugins/RAExec/* /application/heartbeat/lib/heartbeat/plugins/RAExec/
    "/application/heartbeat/lib/heartbeat/plugins/RAExec/*" -> "/application/heartbeat/lib64/heartbeat/plugins/RAExec/*"
    [root@nfsserver01 heartbeat]# ln -svf /application/heartbeat/lib64/heartbeat/plugins/* /application/heartbeat/lib/heartbeat/plugins/
    "/application/heartbeat/lib/heartbeat/plugins/HBauth" -> "/application/heartbeat/lib64/heartbeat/plugins/HBauth"
    "/application/heartbeat/lib/heartbeat/plugins/HBcomm" -> "/application/heartbeat/lib64/heartbeat/plugins/HBcomm"
    "/application/heartbeat/lib/heartbeat/plugins/quorum" -> "/application/heartbeat/lib64/heartbeat/plugins/quorum"
    "/application/heartbeat/lib/heartbeat/plugins/tiebreaker" -> "/application/heartbeat/lib64/heartbeat/plugins/tiebreaker"
    [root@nfsserver01 heartbeat]# cd /application/heartbeat/etc/ha.d/
    [root@nfsserver01 ha.d]# vim ha.cf
    debugfile /var/log/ha-debug
    logfile /var/log/ha-log
    keepalive 2
    deadtime 30
    warntime 10
    initdead 120
    udpport 694
    bcast ens37
    ucast ens37 192.168.42.102
    auto_failback on
    node nfsserver01
    node nfsserver02
    ping 192.168.42.101
    respawn hacluster /application/heartbeat/libexec/heartbeat/ipfail
    apiauth ipfail gid=haclient uid=hacluster
    [root@nfsserver01 ha.d]# vim authkeys    #### identical on primary and standby
    auth 2
    2 sha1 hello!
    [root@nfsserver01 ha.d]# vim haresources    ###### identical on primary and standby
    nfsserver01 192.168.127.103/24/ens33:0
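
The `authkeys` file above uses a fixed, easily guessed secret. A possible alternative is to generate a random one. The sketch below only prints the file content; `make_authkeys` is a hypothetical helper, and the key index `1` is an arbitrary choice (it only has to match the index on the `auth` line). The same file has to be copied to both nodes.

```shell
#!/usr/bin/env bash
# Sketch: build authkeys content with a random secret instead of a fixed word.
make_authkeys() {
    local secret
    # 32 random bytes, hashed to get a 40-character hex string.
    secret="$(head -c 32 /dev/urandom | sha1sum | cut -d' ' -f1)"
    printf 'auth 1\n1 sha1 %s\n' "$secret"
}

make_authkeys

# On a real node (path taken from the install prefix used in this article):
#   make_authkeys > /application/heartbeat/etc/ha.d/authkeys
#   chmod 600 /application/heartbeat/etc/ha.d/authkeys
```

Keep the file at mode 600, since Heartbeat is expected to reject an `authkeys` file that other users can read.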

3. Installing NFS

Reference: https://www.cnblogs.com/suffergtf/p/9486250.html

    ######## Server: nfsserver01
    [root@nfsserver01 ~]# yum install rpcbind nfs-utils -y
    [root@nfsserver01 ~]# systemctl start rpcbind
    [root@nfsserver01 ~]# systemctl enable rpcbind
    [root@nfsserver01 ~]# systemctl start nfs
    [root@nfsserver01 ~]# systemctl enable nfs
    Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.
    [root@nfsserver01 ~]# vim /etc/exports
    /share 192.168.127.0/24(rw,async)
    [root@nfsserver01 ~]# showmount -e localhost
    Export list for localhost:
    /share 192.168.127.0/24
    [root@nfsserver01 ~]# chown nfsnobody.nfsnobody /share
    ########## Same steps on nfsserver02
    ########## Client
    [root@nfsclient01 ~]# yum install rpcbind nfs-utils -y
    [root@nfsclient01 ~]# mount -t nfs 192.168.127.103:/share /mnt
    [root@nfsclient01 ~]# df -h
    Filesystem              Size  Used Avail Use% Mounted on
    /dev/sda2                18G  1.3G   17G   7% /
    devtmpfs                101M     0  101M   0% /dev
    tmpfs                   111M     0  111M   0% /dev/shm
    tmpfs                   111M  4.6M  106M   5% /run
    tmpfs                   111M     0  111M   0% /sys/fs/cgroup
    /dev/sda1               197M   94M  104M  48% /boot
    tmpfs                    23M     0   23M   0% /run/user/0
    192.168.127.103:/share   18G  2.2G   16G  12% /mnt

4. Integration

Heartbeat service:

    [root@nfsserver01 resource.d]# vim /application/heartbeat/etc/ha.d/haresources
    nfsserver01 IPaddr::192.168.127.103/24/ens33:0 drbddisk::nfsserver Filesystem::/dev/drbd1::/share::xfs killnfsd

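Note: the scripts named in this file (IPaddr, Filesystem, and so on) live in etc/ha.d/resource.d/ under the install prefix. You can also place your own service start scripts there (for example mysql or www) and add the script name to haresources, so that the service is started together with Heartbeat.

IPaddr::192.168.127.103/24/ens33:0 uses the IPaddr script to configure the floating virtual IP that clients connect to.

drbddisk::nfsserver uses the drbddisk script to switch the DRBD resource nfsserver between primary and secondary.

Filesystem::/dev/drbd1::/share::xfs uses the Filesystem script to mount and unmount the disk.

killnfsd is a self-written script that controls NFS startup.

Before referencing a script, make sure it exists in etc/ha.d/resource.d/ under the install prefix.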
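
To make the entry format concrete: each `script::arg1::arg2` item names a script in resource.d, and the `::`-separated fields are passed to it as arguments, followed by an action such as `start` or `stop`. The sketch below only prints the resulting command lines. `expand_resource` is a hypothetical function, `RESOURCE_DIR` assumes the install prefix used in this article, and the VIP is the one from the environment section. The real Heartbeat resource manager does more than this (ordering, status checks).

```shell
#!/usr/bin/env bash
# Sketch: expand one haresources entry into the command line it stands for,
# i.e. "script::arg1::arg2" becomes "resource.d/script arg1 arg2 <action>".
RESOURCE_DIR="/application/heartbeat/etc/ha.d/resource.d"

expand_resource() {
    local entry="$1" action="$2"
    # Replace every "::" separator with a space; single colons are untouched.
    local words="${entry//::/ }"
    echo "$RESOURCE_DIR/$words $action"
}

for entry in IPaddr::192.168.127.103/24/ens33:0 drbddisk::nfsserver \
             Filesystem::/dev/drbd1::/share::xfs killnfsd; do
    expand_resource "$entry" start
done
```

Heartbeat acquires the resources of a line from left to right and releases them in the reverse order, which is why the virtual IP and the DRBD promotion come before the filesystem mount.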

    [root@nfsserver01 resource.d]# vim /application/heartbeat/etc/ha.d/resource.d/killnfsd
    #!/bin/bash
    # Kill any leftover nfsd processes, restart NFS, and always report success to Heartbeat.
    killall -9 nfsd; systemctl restart nfs; exit 0

NFS service

Mount the share on the client:

    [root@nfsclient01 ~]# mount -t nfs 192.168.127.103:/share /mnt

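IV. Testing

1. Shut down nfsserver01 and check whether the client can still access the share. If it can, DRBD has switched the primary and secondary roles correctly and the Heartbeat virtual IP has failed over.

2. Bring nfsserver01 back up, then shut down nfsserver02 and check whether the client can still access the share.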
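
One way to watch the share from the client during these tests is a small probe that writes a marker file and reads it back. This is a sketch only: `probe_share` is a hypothetical helper, and `/mnt` is the mount point used on nfsclient01 above. With a default hard NFS mount the probe will typically block during the failover rather than fail, so a gap between timestamps is the signal to look for.

```shell
#!/usr/bin/env bash
# Sketch: write and read back a marker file to confirm the share is usable.
probe_share() {
    local dir="$1" marker
    marker="$dir/.ha_probe_$$"
    if { echo ok > "$marker"; } 2>/dev/null \
        && [ "$(cat "$marker" 2>/dev/null)" = "ok" ]; then
        rm -f "$marker"
        echo "$(date +%T) share OK"
    else
        echo "$(date +%T) share UNAVAILABLE"
        return 1
    fi
}

# During a failover test on the client, poll once per second:
#   while true; do probe_share /mnt; sleep 1; done
```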
