019 Integrating Ceph with OpenStack

I. Integrating Glance with Ceph

1.1 Check the OpenStack admin credentials on serverb

[root@serverb ~]# vi ./keystonerc_admin

unset OS_SERVICE_TOKEN

export OS_USERNAME=admin

export OS_PASSWORD=9f0b699989a04a05

export OS_AUTH_URL=http://172.25.250.11:5000/v2.0

export PS1='[\u@\h \W(keystone_admin)]\$ '

export OS_TENANT_NAME=admin

export OS_REGION_NAME=RegionOne

[root@serverb ~(keystone_admin)]# openstack service list

1.2 Install the Ceph packages

[root@serverb ~(keystone_admin)]# yum -y install ceph-common

[root@serverb ~(keystone_admin)]# chown ceph:ceph /etc/ceph/

1.3 Create the RBD pool for images

[root@serverc ~]# ceph osd pool create images 128 128

pool 'images' created

[root@serverc ~]# ceph osd pool application enable images rbd

enabled application 'rbd' on pool 'images'

[root@serverc ~]# ceph osd pool ls

images

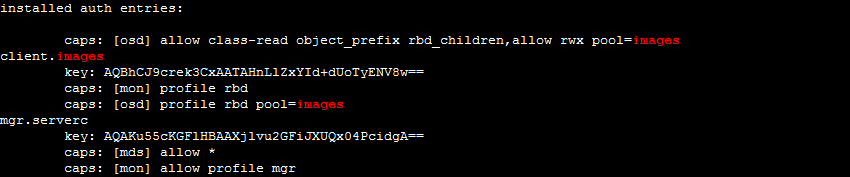
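The pool above was created with 128 placement groups; the original does not say how that number was chosen. One common rule of thumb from the Ceph documentation of that era is roughly 100 PGs per OSD divided by the replica count, rounded up to a power of two, as a total for the cluster. A minimal Python sketch of that heuristic (the function name and the 100-per-OSD target are assumptions, not taken from the original):

```python
import math


def suggest_pg_num(num_osds, replicas, target_pgs_per_osd=100):
    """Rule-of-thumb PG total: (OSDs * target) / replicas, rounded up to a power of two."""
    if num_osds <= 0 or replicas <= 0:
        raise ValueError("num_osds and replicas must be positive")
    raw = num_osds * target_pgs_per_osd / replicas
    # Round up to the next power of two (never below 1).
    return 1 << max(0, math.ceil(math.log2(raw)))


if __name__ == "__main__":
    # Hypothetical cluster sizes, for illustration only.
    for osds in (3, 9):
        print(osds, "OSDs, 3 replicas ->", suggest_pg_num(osds, 3))
```

With 3 OSDs and 3 replicas this gives 128; treat it as a starting point to be split across pools, not a hard rule.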

1.4 Create the Ceph user for the pool

[root@serverc ~]# ceph auth get-or-create client.images mon 'profile rbd' osd 'profile rbd pool=images' -o /etc/ceph/ceph.client.images.keyring

[root@serverc ~]# ll /etc/ceph/ceph.client.images.keyring

-rw-r--r-- root root Mar : /etc/ceph/ceph.client.images.keyring

[root@serverc ~]# ceph auth list|grep -A 4 images

1.5 Copy the files to serverb and verify

[root@serverc ~]# scp /etc/ceph/ceph.conf ceph@serverb:/etc/ceph/ceph.conf

ceph.conf % .4KB/s :

[root@serverc ~]# scp /etc/ceph/ceph.client.images.keyring ceph@serverb:/etc/ceph/ceph.client.images.keyring

ceph.client.images.keyring % .2KB/s :

[root@serverb ~(keystone_admin)]# ceph --id images -s

cluster:

id: 2d58e9ec-9bc0-4d43-831c-24b345fc2a94

health: HEALTH_OK

services:

mon: daemons, quorum serverc,serverd,servere

mgr: serverc(active), standbys: serverd, servere

osd: osds: up, in

data:

pools: pools, pgs

objects: objects, kB

usage: MB used, GB / GB avail

pgs: active+clean
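The plain-text status output above lost its numbers during extraction. When the same check is scripted, asking ceph for JSON (-f json) is less fragile than parsing text. A minimal sketch, assuming the Luminous-era JSON layout in which the overall status sits under health.status (verify this against your release); the function names are hypothetical:

```python
import json
import subprocess


def health_status(status_json):
    """Return the overall health string from `ceph -s -f json` output."""
    data = json.loads(status_json)
    # Assumed layout (Luminous and later): {"health": {"status": "HEALTH_OK", ...}, ...}
    return data["health"]["status"]


def cluster_health(client_id="images"):
    """Run `ceph --id <client_id> -s -f json` and return the health status."""
    out = subprocess.run(
        ["ceph", "--id", client_id, "-s", "-f", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return health_status(out)
```

On serverb, cluster_health("images") would run the same check as the ceph --id images -s command above.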

1.6 Change the keyring permissions

[root@serverb ~(keystone_admin)]# chgrp glance /etc/ceph/ceph.client.images.keyring

[root@serverb ~(keystone_admin)]# chmod 0640 /etc/ceph/ceph.client.images.keyring
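The two commands above aim for a keyring that the glance group can read and other users cannot (mode 0640). A small Python check for that state; the function name is hypothetical, and the group is a parameter so the same check works for the cinder keyring later:

```python
import grp
import os
import stat
import tempfile


def keyring_problems(path, group=None, mode=0o640):
    """Return a list of human-readable problems with a keyring file's mode and group."""
    st = os.stat(path)
    problems = []
    actual_mode = stat.S_IMODE(st.st_mode)
    if actual_mode != mode:
        problems.append(f"mode is {actual_mode:04o}, expected {mode:04o}")
    if group is not None:
        try:
            actual_group = grp.getgrgid(st.st_gid).gr_name
        except KeyError:  # gid has no entry in the group database
            actual_group = str(st.st_gid)
        if actual_group != group:
            problems.append(f"group is {actual_group}, expected {group}")
    return problems


if __name__ == "__main__":
    # Demonstration on a throwaway file instead of the real keyring.
    with tempfile.NamedTemporaryFile() as tmp:
        os.chmod(tmp.name, 0o644)
        print(keyring_problems(tmp.name))  # reports the 0644 mode
        os.chmod(tmp.name, 0o640)
        print(keyring_problems(tmp.name))  # empty list
```

On serverb the call would be keyring_problems("/etc/ceph/ceph.client.images.keyring", group="glance").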

1.7 Change the Glance back-end store

[root@serverb ~(keystone_admin)]# vim /etc/glance/glance-api.conf

[glance_store]

stores = rbd

default_store = rbd

filesystem_store_datadir = /var/lib/glance/images/

rbd_store_chunk_size = 8

rbd_store_pool = images

rbd_store_user = images

rbd_store_ceph_conf = /etc/ceph/ceph.conf

rados_connect_timeout = 0

os_region_name=RegionOne
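Some of these settings must agree with each other: default_store has to be one of the back ends listed in stores, and rbd_store_pool and rbd_store_user have to match the pool and the Ceph client created earlier (images and client.images). A minimal sketch of that check using Python's standard configparser; the function name and the expected values passed in are assumptions for illustration:

```python
import configparser


def check_glance_store(conf_text, pool="images", user="images"):
    """Return a list of inconsistencies in the [glance_store] section."""
    # strict=False tolerates duplicate keys; configparser then keeps the LAST value.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    store = parser["glance_store"]
    problems = []
    stores = [s.strip() for s in store.get("stores", "").split(",") if s.strip()]
    default = store.get("default_store", "")
    if default not in stores:
        problems.append(f"default_store={default!r} is not listed in stores={stores}")
    if store.get("rbd_store_pool") != pool:
        problems.append(f"rbd_store_pool should be {pool!r}")
    if store.get("rbd_store_user") != user:
        problems.append(f"rbd_store_user should be {user!r}")
    return problems
```

Fed the grep output that follows, this sketch reports default_store='file', because the leftover line comes after default_store = rbd.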

[root@serverb ~(keystone_admin)]# grep -Ev "^$|^[#;]" /etc/glance/glance-api.conf

[DEFAULT]

bind_host = 0.0.0.0

bind_port = 9292

workers =

image_cache_dir = /var/lib/glance/image-cache

registry_host = 0.0.0.0

debug = False

log_file = /var/log/glance/api.log

log_dir = /var/log/glance

[cors]

[cors.subdomain]

[database]

connection = mysql+pymysql://glance:27c082e7c4a9413c@172.25.250.11/glance

[glance_store]

stores = rbd

default_store = rbd

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

rbd_store_chunk_size = 8

rbd_store_pool = images

rbd_store_user = images

rbd_store_ceph_conf = /etc/ceph/ceph.conf

rados_connect_timeout = 0

os_region_name=RegionOne

[image_format]

[keystone_authtoken]

auth_uri = http://172.25.250.11:5000/v2.0

auth_type = password

project_name=services

username=glance

password=99b29d9142514f0f

auth_url=http://172.25.250.11:35357

[matchmaker_redis]

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

policy_file = /etc/glance/policy.json

[paste_deploy]

flavor = keystone

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

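Note: the grep output above still contains the old default_store = file line right after default_store = rbd. Comment it out or delete it so that only one default_store setting (rbd) remains, then restart the Glance API service:

[root@serverb ~(keystone_admin)]# systemctl restart openstack-glance-api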
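Leftover duplicate keys, such as the two default_store lines in the grep output, are easy to miss when a long file is edited by hand. A small line scanner can list every option that appears more than once in the same section. This is a simplified sketch (hypothetical function name; it only understands key = value lines and ignores continuation lines):

```python
import re
from collections import Counter

SECTION_RE = re.compile(r"^\[([^\]]+)\]\s*$")
OPTION_RE = re.compile(r"^([A-Za-z0-9_.\-]+)\s*=")


def duplicate_options(conf_text):
    """Return sorted (section, option) pairs that occur more than once."""
    counts = Counter()
    section = "DEFAULT"
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":  # skip blanks and comments
            continue
        match = SECTION_RE.match(line)
        if match:
            section = match.group(1)
            continue
        match = OPTION_RE.match(line)
        if match:
            counts[(section, match.group(1).lower())] += 1
    return sorted(key for key, n in counts.items() if n > 1)
```

Run against /etc/glance/glance-api.conf, it would return [("glance_store", "default_store")] for the file shown above.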

1.8 Create a test image

[root@serverb ~(keystone_admin)]# wget http://materials/small.img

[root@serverb ~(keystone_admin)]# openstack image create --container-format bare --disk-format raw --file ./small.img "Small Image"

+------------------+------------------------------------------------------+

| Field | Value |

+------------------+------------------------------------------------------+

| checksum | ee1eca47dc88f4879d8a229cc70a07c6 |

| container_format | bare |

| created_at | --30T06::40Z |

| disk_format | raw |

| file | /v2/images/f60f2b0f-8c7d-42c2-b158-53d9a436efc9/file |

| id | f60f2b0f-8c7d-42c2-b158-53d9a436efc9 |

| min_disk | |

| min_ram | |

| name | Small Image |

| owner | 79cf145d371e48ef96f608cbf85d1788 |

| protected | False |

| schema | /v2/schemas/image |

| size | |

| status | active |

| tags | |

| updated_at | --30T06::41Z |

| virtual_size | None |

| visibility | private |

+------------------+------------------------------------------------------+

[root@serverb ~(keystone_admin)]# rbd --id images -p images ls

14135e67-39bb-4b1c-aa11-fd5b26599ee7

-33b2--8b63-119a0dbc12d4

f60f2b0f-8c7d-42c2-b158-53d9a436efc9

[root@serverb ~(keystone_admin)]# glance image-list

+--------------------------------------+-------------+

| ID | Name |

+--------------------------------------+-------------+

| f60f2b0f-8c7d-42c2-b158-53d9a436efc9 | Small Image |

+--------------------------------------+-------------+
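Glance's RBD store names each RBD image after the Glance image ID, which is why f60f2b0f-8c7d-42c2-b158-53d9a436efc9 appears in both listings. Comparing the two lists shows RBD images that glance image-list does not show here. The other two entries may belong to other projects or be leftovers; the original does not say. A sketch over the raw text of the two listings, as collected from rbd --id images -p images ls and openstack image list -f value -c ID (the function names are hypothetical):

```python
def parse_listing(text):
    """Split one-name-per-line command output into a list, skipping blank lines."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def rbd_only_images(rbd_names, glance_ids):
    """Names present in the RBD pool that match no listed Glance image ID."""
    return sorted(set(rbd_names) - set(glance_ids))
```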

[root@serverb ~(keystone_admin)]# rbd --id images info images/f60f2b0f-8c7d-42c2-b158-53d9a436efc9

rbd image 'f60f2b0f-8c7d-42c2-b158-53d9a436efc9':

size kB in objects

order ( kB objects)

block_name_prefix: rbd_data.109981f1d12

format:

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

flags:

create_timestamp: Sat Mar ::
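The order line above also lost its numbers. In RBD, order is the base-2 logarithm of the object size, and Glance's RBD store derives it from rbd_store_chunk_size (given in MiB). Assuming the Glance default chunk size of 8, images should report order 23, i.e. 8192 kB objects; with Cinder's default of 4, the result is order 22 (4096 kB). The arithmetic as a Python sketch (hypothetical function names):

```python
import math


def rbd_order(chunk_size_mib):
    """RBD object order for a chunk size in MiB (must be a power of two)."""
    size = chunk_size_mib * 1024 * 1024
    order = int(math.log2(size))
    if (1 << order) != size:
        raise ValueError("chunk size must be a power of two number of bytes")
    return order


def object_size_kib(order):
    """Object size in KiB for a given RBD order."""
    return (1 << order) // 1024
```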

1.9 Delete the image

[root@serverb ~(keystone_admin)]# openstack image delete "Small Image"

[root@serverb ~(keystone_admin)]# openstack image list

[root@serverb ~(keystone_admin)]# rbd --id images -p images ls

14135e67-39bb-4b1c-aa11-fd5b26599ee7

-33b2--8b63-119a0dbc12d4

[root@serverb ~(keystone_admin)]# ceph osd pool ls --id images

images

II. Integrating Cinder with Ceph

2.1 Create the RBD pool for volumes

[root@serverc ~]# ceph osd pool create volumes 128

pool 'volumes' created

[root@serverc ~]# ceph osd pool application enable volumes rbd

enabled application 'rbd' on pool 'volumes'

2.2 Create the Ceph user

[root@serverc ~]# ceph auth get-or-create client.volumes mon 'profile rbd' osd 'profile rbd pool=volumes,profile rbd pool=images' -o /etc/ceph/ceph.client.volumes.keyring

[root@serverc ~]# ceph auth list|grep -A 4 volumes

installed auth entries:

client.volumes

key: AQBOEZ9ckRr3BxAAaWB8lpYRrUQ+z/Bgk3Rfbg==

caps: [mon] profile rbd

caps: [osd] profile rbd pool=volumes,profile rbd pool=images

mgr.serverc

key: AQAKu55cKGFlHBAAXjlvu2GFiJXUQx04PcidgA==

caps: [mds] allow *

caps: [mon] allow profile mgr
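The OSD capability string has one profile rbd pool=NAME entry per pool, joined by commas. Here client.volumes gets both volumes and images, presumably so that Cinder can create volumes from Glance images. A small Python helper that assembles the same command line for any client and pool list; the function name is hypothetical:

```python
import shlex


def auth_command(client, pools, keyring_dir="/etc/ceph"):
    """Build the `ceph auth get-or-create` argv used in this article."""
    osd_caps = ",".join(f"profile rbd pool={p}" for p in pools)
    return [
        "ceph", "auth", "get-or-create", f"client.{client}",
        "mon", "profile rbd",
        "osd", osd_caps,
        "-o", f"{keyring_dir}/ceph.client.{client}.keyring",
    ]


if __name__ == "__main__":
    # Prints the shell-quoted command for the volumes client (Python 3.8+ for shlex.join).
    print(shlex.join(auth_command("volumes", ["volumes", "images"])))
```

The printed line matches the command used in 2.2, including the quoting.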

2.3 Copy the keyring and key to serverb

[root@serverc ~]# scp /etc/ceph/ceph.client.volumes.keyring ceph@serverb:/etc/ceph/ceph.client.volumes.keyring

ceph@serverb's password:

ceph.client.volumes.keyring % .3KB/s :

[root@serverc ~]# ceph auth get-key client.volumes|ssh ceph@serverb tee ./client.volumes.key

ceph@serverb's password:

AQBOEZ9ckRr3BxAAaWB8lpYRrUQ+z/Bgk3Rfbg==[root@serverc ~]#
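The key piped to serverb is the base64 form of a small binary structure. The layout assumed here is that of Ceph's CryptoKey encoding: a 16-bit key type, a creation time as two 32-bit fields (seconds, nanoseconds), a 16-bit secret length, then the secret itself, all little-endian. Decoding the key catches one that was truncated or mangled before it reaches virsh secret-set-value. A sketch with a hypothetical function name:

```python
import base64
import struct
from datetime import datetime, timezone


def parse_cephx_key(key_b64):
    """Decode a cephx key; return (key_type, created_utc, secret_bytes)."""
    raw = base64.b64decode(key_b64, validate=True)  # rejects non-base64 characters
    if len(raw) < 12:
        raise ValueError("key too short for the assumed header")
    key_type, sec, _nsec, length = struct.unpack("<HIIH", raw[:12])
    secret = raw[12:]
    if len(secret) != length:
        raise ValueError(f"secret length {len(secret)} != header length {length}")
    return key_type, datetime.fromtimestamp(sec, tz=timezone.utc), secret
```

For the client.volumes key shown above, the header decodes to key type 1 with a 16-byte secret.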

Verify on serverb:

[root@serverb ~(keystone_admin)]# ceph --id volumes -s

cluster:

id: 2d58e9ec-9bc0-4d43-831c-24b345fc2a94

health: HEALTH_OK

services:

mon: daemons, quorum serverc,serverd,servere

mgr: serverc(active), standbys: serverd, servere

osd: osds: up, in

data:

pools: pools, pgs

objects: objects, kB

usage: MB used, GB / GB avail

pgs: active+clean

2.4 Change the keyring permissions

[root@serverb ~(keystone_admin)]# chgrp cinder /etc/ceph/ceph.client.volumes.keyring

[root@serverb ~(keystone_admin)]# chmod 0640 /etc/ceph/ceph.client.volumes.keyring

2.5 Generate a UUID (used as rbd_secret_uuid in cinder.conf and as the libvirt secret UUID)

[root@serverb ~(keystone_admin)]# uuidgen |tee ~/myuuid.txt

f3fbcf03-e208-4fba-9c47-9ff465847468

2.6 Change the Cinder back-end store

[root@serverb ~(keystone_admin)]# vi /etc/cinder/cinder.conf

enabled_backends = ceph

glance_api_version = 2

#default_volume_type = iscsi

[ceph]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rbd_user = volumes

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_secret_uuid = f3fbcf03-e208-4fba-9c47-9ff465847468

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

# Set volume_backend_name (optional)

volume_backend_name = ceph
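Each name listed in enabled_backends must match a section of the same name (here [ceph]), and that section must set a volume_driver. A sketch of that check with configparser; the function name is hypothetical, and the test data reuses values from this section:

```python
import configparser


def check_backends(conf_text):
    """Return problems with enabled_backends and the matching back-end sections."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    raw = parser["DEFAULT"].get("enabled_backends", "")
    names = [n.strip() for n in raw.split(",") if n.strip()]
    problems = []
    if not names:
        problems.append("enabled_backends is empty")
    for name in names:
        if not parser.has_section(name):
            problems.append(f"no [{name}] section")
        elif not parser.get(name, "volume_driver", fallback=""):
            problems.append(f"[{name}] has no volume_driver")
    return problems
```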

2.7 Restart the services and check the log

[root@serverb ~(keystone_admin)]# systemctl restart openstack-cinder-api

[root@serverb ~(keystone_admin)]# systemctl restart openstack-cinder-volume

[root@serverb ~(keystone_admin)]# systemctl restart openstack-cinder-scheduler

[student@serverb ~]$ sudo tail -20 /var/log/cinder/volume.log

-- ::05.800 INFO cinder.volume.manager [req-76edfdb3-dd84--9a4e-de5f79391609 - - - - -] Driver initialization completed successfully.

-- ::05.819 INFO cinder.volume.manager [req-76edfdb3-dd84--9a4e-de5f79391609 - - - - -] Initializing RPC dependent components of volume driver RBDDriver (1.2.)

-- ::05.871 INFO cinder.volume.manager [req-76edfdb3-dd84--9a4e-de5f79391609 - - - - -] Driver post RPC initialization completed successfully.

-- ::30.398 INFO cinder.volume.manager [req-ba2a8ef1-e3f0-4a36-a0eb-5a300367c60c - - - - -] Driver initialization completed successfully.

-- ::30.420 INFO cinder.volume.manager [req-ba2a8ef1-e3f0-4a36-a0eb-5a300367c60c - - - - -] Initializing RPC dependent components of volume driver RBDDriver (1.2.)

-- ::30.474 INFO cinder.volume.manager [req-ba2a8ef1-e3f0-4a36-a0eb-5a300367c60c - - - - -] Driver post RPC initialization completed successfully.

2.8 Create the libvirt secret XML template

[root@serverb ~(keystone_admin)]# vim ~/ceph.xml

<secret ephemeral="no" private="no">

<uuid>f3fbcf03-e208-4fba-9c47-9ff465847468</uuid>

<usage type="ceph">

<name>client.volumes secret</name>

</usage>

</secret>
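The same secret definition can be generated rather than typed, which avoids typos in the UUID. A sketch with Python's xml.etree.ElementTree; the function name is hypothetical, and the default usage name simply mirrors the file above:

```python
import uuid
import xml.etree.ElementTree as ET


def secret_xml(secret_uuid, usage_name="client.volumes secret"):
    """Return a libvirt <secret> definition of usage type ceph as a string."""
    uuid.UUID(secret_uuid)  # raises ValueError if this is not a valid UUID
    secret = ET.Element("secret", ephemeral="no", private="no")
    ET.SubElement(secret, "uuid").text = secret_uuid
    usage = ET.SubElement(secret, "usage", type="ceph")
    ET.SubElement(usage, "name").text = usage_name
    return ET.tostring(secret, encoding="unicode")
```

Writing secret_xml("f3fbcf03-e208-4fba-9c47-9ff465847468") to ~/ceph.xml gives a file equivalent to the one above (on one line).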

[root@serverb ~(keystone_admin)]# virsh secret-define --file ~/ceph.xml

Secret f3fbcf03-e208-4fba-9c47-9ff465847468 created

[root@serverb ~(keystone_admin)]# virsh secret-set-value --secret f3fbcf03-e208-4fba-9c47-9ff465847468 --base64 $(cat /home/ceph/client.volumes.key)

Secret value set
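The UUID must be identical in three places: rbd_secret_uuid in cinder.conf, the uuid element of the secret XML, and the libvirt secret itself (which can be listed with virsh secret-list). A sketch that compares the first two; the function name is hypothetical:

```python
import configparser
import xml.etree.ElementTree as ET


def uuids_match(cinder_conf_text, secret_xml_text, backend="ceph"):
    """True if [backend] rbd_secret_uuid equals the <uuid> in the secret XML."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cinder_conf_text)
    conf_uuid = parser.get(backend, "rbd_secret_uuid").strip().lower()
    xml_uuid = ET.fromstring(secret_xml_text).findtext("uuid", default="").strip().lower()
    return conf_uuid == xml_uuid
```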

2.9 Create a test volume

[root@serverb ~(keystone_admin)]# openstack volume create --description "Test Volume" --size 1 testvolume

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| attachments | [] |

| availability_zone | nova |

| bootable | false |

| consistencygroup_id | None |

| created_at | --30T07::26.436978 |

| description | Test Volume |

| encrypted | False |

| id | b9cf60d5-3cff-4cde-ab1d-4747adff7943 |

| migration_status | None |

| multiattach | False |

| name | testvolume |

| properties | |

| replication_status | disabled |

| size | |

| snapshot_id | None |

| source_volid | None |

| status | creating |

| type | None |

| updated_at | None |

| user_id | 8e0be34493e04722ba03ab30fbbf3bf8 |

+---------------------+--------------------------------------+

Verify:

[root@serverb ~(keystone_admin)]# openstack volume list -c ID -c 'Display Name' -c Status -c Size

+--------------------------------------+--------------+-----------+------+

| ID | Display Name | Status | Size |

+--------------------------------------+--------------+-----------+------+

| b9cf60d5-3cff-4cde-ab1d-4747adff7943 | testvolume | available | |

+--------------------------------------+--------------+-----------+------+

[root@serverb ~(keystone_admin)]# rbd --id volumes -p volumes ls

volume-b9cf60d5-3cff-4cde-ab1d-4747adff7943

[root@serverb ~(keystone_admin)]# rbd --id volumes -p volumes info volumes/volume-b9cf60d5-3cff-4cde-ab1d-4747adff7943

rbd image 'volume-b9cf60d5-3cff-4cde-ab1d-4747adff7943':

size MB in objects

order ( kB objects)

block_name_prefix: rbd_data.10e3589b2aec

format:

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

flags:

create_timestamp: Sat Mar ::
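The RBD image name is the Cinder volume ID prefixed with volume-, which comes from Cinder's volume_name_template option (default volume-%s). A sketch that maps volume IDs to the RBD names to look for in rbd ls output and reports any that are missing (hypothetical helper names):

```python
def expected_rbd_names(volume_ids, template="volume-%s"):
    """RBD image names Cinder is expected to create for the given volume IDs."""
    return [template % vid for vid in volume_ids]


def missing_in_pool(volume_ids, rbd_names, template="volume-%s"):
    """Expected RBD names that are absent from the pool listing."""
    present = set(rbd_names)
    return [n for n in expected_rbd_names(volume_ids, template) if n not in present]
```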

2.10 Delete the test volume and clean up

[root@serverb ~(keystone_admin)]# openstack volume delete testvolume

[root@serverb ~(keystone_admin)]# openstack volume list

[root@serverb ~(keystone_admin)]# rbd --id volumes -p volumes ls

[root@serverb ~(keystone_admin)]# rm ~/ceph.xml

[root@serverb ~(keystone_admin)]# rm ~/myuuid.txt

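Author's note: this article is based mainly on Red Hat's official guide, and I carried out and verified every step in my own lab. For more detail, see the Red Hat documentation: https://access.redhat.com/documentation/en-us/red_hat_ceph_storage/2/html/ceph_block_device_to_openstack_guide/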
