Spark: a 33 GB table

http://192.168.2.51:4041

http://hadoop1:8088/proxy/application_1512362707596_0006/executors/

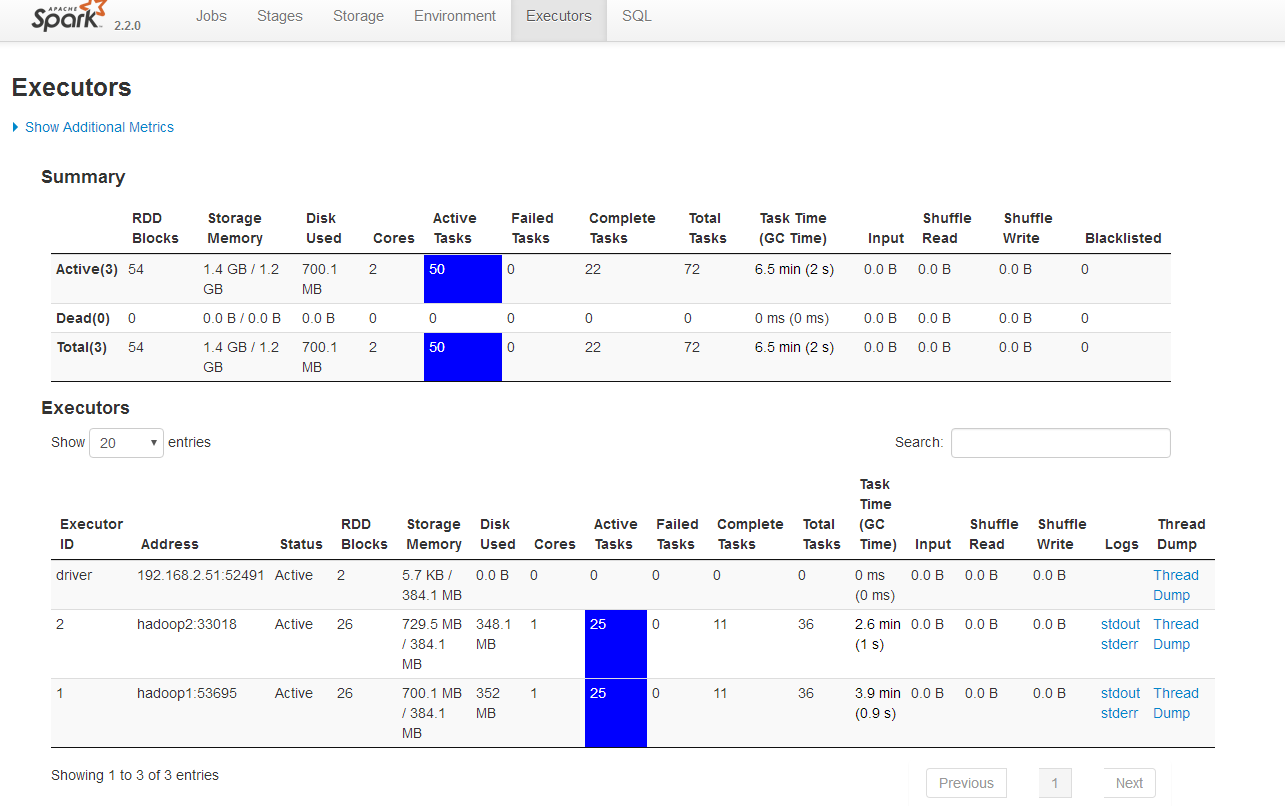

Executors

Summary

| | RDD Blocks | Storage Memory | Disk Used | Cores | Active Tasks | Failed Tasks | Complete Tasks | Total Tasks | Task Time (GC Time) | Input | Shuffle Read | Shuffle Write | Blacklisted |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Active(3) | 54 | 1.4 GB / 1.2 GB | 700.1 MB | 2 | 50 | 0 | 22 | 72 | 6.5 min (2 s) | 0.0 B | 0.0 B | 0.0 B | 0 |
| Dead(0) | 0 | 0.0 B / 0.0 B | 0.0 B | 0 | 0 | 0 | 0 | 0 | 0 ms (0 ms) | 0.0 B | 0.0 B | 0.0 B | 0 |
| Total(3) | 54 | 1.4 GB / 1.2 GB | 700.1 MB | 2 | 50 | 0 | 22 | 72 | 6.5 min (2 s) | 0.0 B | 0.0 B | 0.0 B | 0 |

Executors


| Executor ID | Address | Status | RDD Blocks | Storage Memory | Disk Used | Cores | Active Tasks | Failed Tasks | Complete Tasks | Total Tasks | Task Time (GC Time) | Input | Shuffle Read | Shuffle Write |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| driver | 192.168.2.51:52491 | Active | 2 | 5.7 KB / 384.1 MB | 0.0 B | 0 | 0 | 0 | 0 | 0 | 0 ms (0 ms) | 0.0 B | 0.0 B | 0.0 B |
| 2 | hadoop2:33018 | Active | 26 | 729.5 MB / 384.1 MB | 348.1 MB | 1 | 25 | 0 | 11 | 36 | 2.6 min (1 s) | 0.0 B | 0.0 B | 0.0 B |
| 1 | hadoop1:53695 | Active | 26 | 700.1 MB / 384.1 MB | 352 MB | 1 | 25 | 0 | 11 | 36 | 3.9 min (0.9 s) | 0.0 B | 0.0 B | 0.0 B |

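```python
from pyspark.sql import SparkSession

# Run on YARN; the MongoDB Spark connector's input and output URIs
# both point at the recommendation.article collection.
my_spark = SparkSession \
    .builder \
    .appName("myAppYarn-10g") \
    .master('yarn') \
    .config("spark.mongodb.input.uri", "mongodb://pyspark_admin:admin123@192.168.2.50/recommendation.article") \
    .config("spark.mongodb.output.uri", "mongodb://pyspark_admin:admin123@192.168.2.50/recommendation.article") \
    .getOrCreate()

# Load the collection as a DataFrame; collect() then pulls every row back to the driver.
db_rows = my_spark.read.format("com.mongodb.spark.sql.DefaultSource").load().collect()
```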
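`collect()` returns every row of the DataFrame as a single Python list on the driver, so for a 33 GB collection the driver has to hold the entire result. One alternative, not what the run above used, is to consume the rows incrementally. Below is a minimal sketch, assuming the rows arrive from any Python iterator. `iter_batches` and `process_rows` are hypothetical names, and the per-batch work is a placeholder.

```python
from itertools import islice


def iter_batches(rows, batch_size):
    """Yield lists of at most batch_size rows from any iterable.

    Only the current batch is held in memory, unlike collect(),
    which returns every row as one list.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def process_rows(rows, batch_size=1000):
    """Hypothetical consumer: handle rows batch by batch, return how many were seen."""
    seen = 0
    for batch in iter_batches(rows, batch_size):
        # Placeholder for real per-batch work (e.g. writing the batch elsewhere).
        seen += len(batch)
    return seen


# Stand-in data; with PySpark this could be df.toLocalIterator() instead.
print(process_rows(range(2500), batch_size=1000))  # prints 2500
```

In PySpark the iterator could be `df.toLocalIterator()`, with `df = my_spark.read.format("com.mongodb.spark.sql.DefaultSource").load()`. According to the PySpark documentation, that iterator uses as much driver memory as the largest partition rather than the whole DataFrame. If only an aggregate is needed, an action such as `df.count()` keeps the rows on the executors entirely.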

Summary

| | RDD Blocks | Storage Memory | Disk Used | Cores | Active Tasks | Failed Tasks | Complete Tasks | Total Tasks | Task Time (GC Time) | Input | Shuffle Read | Shuffle Write | Blacklisted |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Active(3) | 31 | 748.4 MB / 1.2 GB | 75.7 MB | 2 | 27 | 0 | 0 | 27 | 0 ms (0 ms) | 0.0 B | 0.0 B | 0.0 B | 0 |
| Dead(2) | 56 | 1.5 GB / 768.2 MB | 790.3 MB | 2 | 0 | 0 | 77 | 77 | 2.7 h (2 s) | 0.0 B | 0.0 B | 0.0 B | 0 |
| Total(5) | 87 | 2.3 GB / 1.9 GB | 865.9 MB | 4 | 27 | 0 | 77 | 104 | 2.7 h (2 s) | 0.0 B | 0.0 B | 0.0 B | 0 |

Executors


| Executor ID | Address | Status | RDD Blocks | Storage Memory | Disk Used | Cores | Active Tasks | Failed Tasks | Complete Tasks | Total Tasks | Task Time (GC Time) | Input | Shuffle Read | Shuffle Write |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| driver | 192.168.2.51:52491 | Active | 2 | 5.7 KB / 384.1 MB | 0.0 B | 0 | 0 | 0 | 0 | 0 | 0 ms (0 ms) | 0.0 B | 0.0 B | 0.0 B |
| 4 | hadoop2:34394 | Active | 12 | 315.9 MB / 384.1 MB | 0.0 B | 1 | 11 | 0 | 0 | 11 | 0 ms (0 ms) | 0.0 B | 0.0 B | 0.0 B |
| 3 | hadoop1:39620 | Active | 17 | 432.5 MB / 384.1 MB | 75.7 MB | 1 | 16 | 0 | 0 | 16 | 0 ms (0 ms) | 0.0 B | 0.0 B | 0.0 B |
| 2 | hadoop2:33018 | Dead | 27 | 758.7 MB / 384.1 MB | 390.4 MB | 1 | 0 | 0 | 38 | 38 | 1.3 h (1 s) | 0.0 B | 0.0 B | 0.0 B |
| 1 | hadoop1:53695 | Dead | 29 | 775.9 MB / 384.1 MB | 399.9 MB | 1 | 0 | 0 | 39 | 39 | 1.4 h (0.9 s) | 0.0 B | 0.0 B | 0.0 B |
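The Storage Memory column is printed as "used / total", and several executors report more used than total (for example 775.9 MB / 384.1 MB for executor 1). A small parser makes these rows easier to compare numerically. Below is a minimal sketch, assuming the B/KB/MB/GB/TB suffixes seen in these tables and a 1024 step between units. The base that the Spark UI actually uses is an assumption here, but the used/total ratio does not depend on it when both sides share a unit.

```python
import re

# Multipliers for the unit suffixes shown in the Spark UI tables.
# Assumption: a binary (1024) step between units.
UNITS = {"B": 1, "KB": 1024, "MB": 1024 ** 2, "GB": 1024 ** 3, "TB": 1024 ** 4}
SIZE_PATTERN = re.compile(r"^\s*([\d.]+)\s*(B|KB|MB|GB|TB)\s*$")


def parse_size(text):
    """Convert a size string such as '384.1 MB' to bytes."""
    match = SIZE_PATTERN.match(text)
    if match is None:
        raise ValueError(f"unrecognized size: {text!r}")
    value, unit = match.groups()
    return float(value) * UNITS[unit]


def parse_storage(cell):
    """Split a 'used / total' Storage Memory cell into (used_bytes, total_bytes)."""
    used, total = cell.split("/")
    return parse_size(used), parse_size(total)


def storage_ratio(cell):
    """Return used / total, or 0.0 if the total is zero."""
    used, total = parse_storage(cell)
    return used / total if total else 0.0


# Storage Memory cells copied from the second executor snapshot above.
rows = {
    "driver": "5.7 KB / 384.1 MB",
    "4": "315.9 MB / 384.1 MB",
    "3": "432.5 MB / 384.1 MB",
    "2": "758.7 MB / 384.1 MB",
    "1": "775.9 MB / 384.1 MB",
}

# Executors whose reported usage exceeds their reported storage capacity.
over_capacity = [eid for eid, cell in rows.items() if storage_ratio(cell) > 1]

for executor_id, cell in rows.items():
    print(f"{executor_id}: {storage_ratio(cell):.2f}")
```

With these values, executors 1, 2 and 3 come out above 1.0 and executor 4 comes out below it.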

Logs for container_1512362707596_0006_02_000002 (showing 4096 bytes):

`Manager: Dropping block taskresult_48 from memory`

