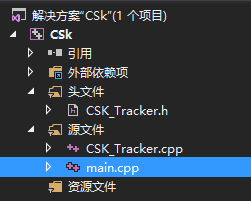

图像跟踪(1) CSK

依赖OPENCV

1功能

- 鼠标选取目标 S按键取消重新选择

- 共享内存目标框 中心 X Y 边框W H

/////////////////////////////////////////////////////////////////////////

// Author: Zhongze Hu

// Subject: Circulant Structure of Tracking-by-detection with Kernels

// Algorithm: ECCV12, Jo~ao F. Henriques, Exploiting the Circulant

// Structure of Tracking-by-detection with Kernels

// Matlab code: http://home.isr.uc.pt/~henriques/circulant/index.html

// Date: 01/13/2015 ///////////////////////////////////////////////////////////////////////// #include "CSK_Tracker.h"

#include <iostream>

#include <fstream> using namespace std; bool tracking_flag = false;

cv::Mat org, dst, img, tmp;

int Wid, Hei, X, Y;

void on_mouse(int event, int x, int y, int flags, void *ustc)//event鼠标事件代号,x,y鼠标坐标,flags拖拽和键盘操作的代号

{ static cv::Point pre_pt = (-1, -1);//初始坐标

static cv::Point cur_pt = (-1, -1);//实时坐标

char temp[16]; if (!tracking_flag) { if (event == CV_EVENT_LBUTTONDOWN)//左键按下,读取初始坐标,并在图像上该点处划圆

{

//org.copyTo(img);//将原始图片复制到img中

org = img.clone();

sprintf(temp, "(%d,%d)", x, y);

pre_pt = cv::Point(x, y);

putText(img, temp, pre_pt, cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0, 255), 1, 8);//在窗口上显示坐标

circle(img, pre_pt, 2, cv::Scalar(255, 0, 0, 0), CV_FILLED, CV_AA, 0);//划圆

imshow("img", img);

}

else if (event == CV_EVENT_MOUSEMOVE && !(flags & CV_EVENT_FLAG_LBUTTON))//左键没有按下的情况下鼠标移动的处理函数

{

img.copyTo(tmp);//将img复制到临时图像tmp上,用于显示实时坐标

sprintf(temp, "(%d,%d)", x, y);

cur_pt = cv::Point(x, y);

putText(tmp, temp, cur_pt, cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0, 255));//只是实时显示鼠标移动的坐标

imshow("img", tmp);

}

else if (event == CV_EVENT_MOUSEMOVE && (flags & CV_EVENT_FLAG_LBUTTON))//左键按下时,鼠标移动,则在图像上划矩形

{

img.copyTo(tmp);

sprintf(temp, "(%d,%d)", x, y);

cur_pt = cv::Point(x, y);

putText(tmp, temp, cur_pt, cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0, 255));

rectangle(tmp, pre_pt, cur_pt, cv::Scalar(0, 255, 0, 0), 1, 8, 0);//在临时图像上实时显示鼠标拖动时形成的矩形

imshow("img", tmp);

}

else if (event == CV_EVENT_LBUTTONUP)//左键松开,将在图像上划矩形

{

//org.copyTo(img);

img.copyTo(tmp);

sprintf(temp, "(%d,%d)", x, y);

cur_pt = cv::Point(x, y);

putText(img, temp, cur_pt, cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0, 255));

circle(img, pre_pt, 2, cv::Scalar(255, 0, 0, 0), CV_FILLED, CV_AA, 0);

rectangle(img, pre_pt, cur_pt, cv::Scalar(0, 255, 0, 0), 1, 8, 0);//根据初始点和结束点,将矩形画到img上

imshow("img", img);

img.copyTo(tmp);

//截取矩形包围的图像,并保存到dst中

int width = abs(pre_pt.x - cur_pt.x);

int height = abs(pre_pt.y - cur_pt.y);

if (width == 0 || height == 0)

{

printf("width == 0 || height == 0");

return;

}

Wid = width;

Hei = height;

X = pre_pt.x + width / 2;

Y = pre_pt.y + height / 2;

tracking_flag = true;

//dst = org(Rect(min(cur_pt.x, pre_pt.x), min(cur_pt.y, pre_pt.y), width, height));

//namedWindow("dst");

//imshow("dst", dst);

//waitKey(0);

} } } void main()

{

TCHAR szName[] = TEXT("Local\\FHY_SYSTEM_0");

TrackBox BOX;

hMapFile = CreateFileMapping(

INVALID_HANDLE_VALUE, // use paging file

NULL, // default security

PAGE_READWRITE, // read/write access

0, // maximum object size (high-order DWORD)

BUF_SIZE, // maximum object size (low-order DWORD)

szName); // name of mapping object if (hMapFile == NULL)

{

/*printf(TEXT("Could not create file mapping object (%d).\n"),

GetLastError());*/

return;

} pBuffer = (LPTSTR)MapViewOfFile(hMapFile, // handle to map object

FILE_MAP_ALL_ACCESS, // read/write permission

0,

0,

BUF_SIZE); if (pBuffer == NULL)

{

/*printf(TEXT("Could not map view of file (%d).\n"),

GetLastError());*/ CloseHandle(hMapFile); return;

} BOX.x = 0;

BOX.y = 0;

BOX.flag = tracking_flag; CSK_Tracker my_tracker;

string file_name;

ifstream infile("input/Dudek/Name.txt");

//getline(infile,file_name);

//my_tracker.run_tracker("..\\..\\data\\tiger.avi",Point(16 + 36/2,28 + 36/2),36);

//my_tracker.run_tracker("..\\..\\data\\boy.avi",Point(374+68/2, 77+68/2),68);

//my_tracker.run_tracker("..\\..\\CSK\\data\\oldman.avi",Point(186+50/2, 118+50/2),50); //VideoCapture capture("input/bike1.avi");

//Mat frame = imread(file_name);

////if (!capture.isOpened())

//if(frame.empty())

//{

// cout << "open video failed!" << endl;

// return;

//}

//int frame_count = int(capture.get(CV_CAP_PROP_FRAME_COUNT));

int frame_count = 1490;

//double rate = capture.get(CV_CAP_PROP_FPS);

//int width = capture.get(CV_CAP_PROP_FRAME_WIDTH);

//int height = capture.get(CV_CAP_PROP_FRAME_HEIGHT);

int width = 320;

int height = 240;

cv::Mat frame;

cv::Mat frame_last;

cv::Mat alphaf; cv::VideoCapture capture(0);

capture.set(CV_CAP_PROP_FRAME_WIDTH, 1920);

capture.set(CV_CAP_PROP_FRAME_HEIGHT, 1080); while (true)

{

if (!tracking_flag)

{

capture.read(img);

cv::namedWindow("img");//定义一个img窗口

cv::setMouseCallback("img", on_mouse, 0);//调用回调函数

imshow("img", img);

memcpy(BOX_DATA, &BOX, sizeof(TrackBox));

char key = cvWaitKey(10);

if (key == 'q') break;

}

else

{

cv::Point pos_first = cv::Point(X, Y);

int target_sz[2] = { Hei,Wid };

my_tracker.tracke_one(pos_first,target_sz, capture, tracking_flag); } }

}

//#ifdef _CSK_TRACKER_H_

//#define _CSK_TRACKER_H_ #include "opencv2/core/core.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <iostream>

#include <fstream>

#include <Windows.h> #define FRAME_SIZE 1920*1080

#define BUF_SIZE FRAME_SIZE*60 typedef struct

{

int x;

int y;

/*int zx;

int zy;*/

int width;

int height; int flag = 0; }TrackBox; //目标检测的上下顶点; typedef struct

{

int width;

int height;

int type;

}imgInfHead; #define BUF_SIZE FRAME_SIZE*10

#define FRAME_SIZE 1920*1080 extern HANDLE hMapFile;

extern LPCTSTR pBuffer; #define BOX_DATA (char*)pBuffer+FRAME_SIZE*0 // #define SEND_IMG00_HEAD (char*)pBuffer+FRAME_SIZE*1 //图像头信息首地址

#define SEND_IMG00_DATA (char*)pBuffer+FRAME_SIZE*2 //图像数据区首地址 //using namespace cv;

using namespace std; class CSK_Tracker

{ public:

CSK_Tracker();

~CSK_Tracker(); TrackBox BOX; void hann2d(cv::Mat& m);

cv::Mat dense_guess_kernel(double digma, cv::Mat x, cv::Mat y);

cv::Mat dense_guess_kernel(double digma, cv::Mat x);

cv::Mat get_subwindow(cv::Mat im, cv::Point pos, int* sz, cv::Mat cos_window);

//void run_tracker(string video_name, Point pos, int target_sz);

//void tracke_one(ifstream &infile, cv::Point pos_first, int frame_count, int* target_sz, cv::VideoCapture capture);

void tracke_one(cv::Point pos_first, int* target_sz, cv::VideoCapture capture, bool &tracking_flag);

void tracke_one(ifstream &infile, cv::Point pos_first, int frame_count, int* target_sz, cv::VideoCapture capture);

cv::Mat conj(cv::Mat a);

cv::Mat c_div(cv::Mat a, cv::Mat b);//a./b

cv::Mat c_mul(cv::Mat a, cv::Mat b);//a.*b

cv::Mat fft2d(cv::Mat src); void print_mat(cv::Mat a, string file_name);//打印矩阵,debug用

void print_img(cv::Mat a, string file_name);//打印图片灰度值 private:

static const double padding;

static const double output_sigma_factor;

static const double sigma;

static const double lambda;

static const double interp_factor; static const string test_file; }; //#endif

#include "CSK_Tracker.h" using namespace std; const double CSK_Tracker::padding = 1;

const double CSK_Tracker::output_sigma_factor = 1.0/16;

const double CSK_Tracker::sigma = 0.2;

const double CSK_Tracker::lambda = 0.01;

const double CSK_Tracker::interp_factor = 0.075;

const string CSK_Tracker::test_file = "H:\\CV\\CSK\\data\\result_c.txt"; HANDLE hMapFile;

LPCTSTR pBuffer; CSK_Tracker::CSK_Tracker()

{ } CSK_Tracker::~CSK_Tracker()

{

} void CSK_Tracker::hann2d(cv::Mat& m)

{

cv::Mat a(m.rows,1,CV_32FC1);

cv::Mat b(m.cols,1,CV_32FC1);

for (int i = 0; i < m.rows; i++)

{

float t = 0.5 * (1 - cos(2*CV_PI*i/(m.rows - 1)));

a.at<float>(i,0) = t;

}

for (int i = 0; i < m.cols; i++)

{

float t = 0.5 * (1 - cos(2*CV_PI*i/(m.cols - 1)));

b.at<float>(i,0) = t;

}

m = a * b.t();

} cv::Mat CSK_Tracker::dense_guess_kernel(double sigma, cv::Mat x, cv::Mat y)

{

//xf = fft2(x)

cv::Mat xf = fft2d(x);

vector<cv::Mat> xf_ri(xf.channels());

cv::split(xf,xf_ri); //xx = x(:)' * x(:);

double xx = 0;

cv::Scalar sum_x = cv::sum(x.mul(x));

for (int i = 0; i < sum_x.cols; i++)

{

xx += sum_x[i];

} //yf = fft2(y)

cv::Mat yf = fft2d(y);

vector<cv::Mat> yf_ri(yf.channels());

cv::split(yf,yf_ri); //yy = y(:)' * y(:);

double yy = 0;

cv::Scalar sum_y = sum(y.mul(y));

for (int i = 0; i < sum_y.cols; i++)

{

yy += sum_y[i];

} //xyf = xf. * conj(yf)

cv::Mat xyf = c_mul(xf,conj(yf)); //xy = real(circshift(ifft2(xyf), floor(size(x)/2)));

idft(xyf,xyf);

xyf = xyf/(xyf.rows*xyf.cols); vector<cv::Mat> xy_ri(xyf.channels());

cv::split(xyf,xy_ri);

cv::Mat xy = xy_ri[0]; int cx = xy.cols/2;

int cy = xy.rows/2;

cv::Mat q0(xy, cv::Rect(0, 0, cx, cy)); // Top-Left - Create a ROI per quadrant

cv::Mat q1(xy, cv::Rect(cx, 0, cx, cy)); // Top-Right

cv::Mat q2(xy, cv::Rect(0, cy, cx, cy)); // Bottom-Left

cv::Mat q3(xy, cv::Rect(cx, cy, cx, cy)); // Bottom-Right

cv::Mat tmp; // swap quadrants (Top-Left with Bottom-Right)

q0.copyTo(tmp);

q3.copyTo(q0);

tmp.copyTo(q3);

q1.copyTo(tmp); // swap quadrant (Top-Right with Bottom-Left)

q2.copyTo(q1);

tmp.copyTo(q2); int numel_x = x.rows*x.cols;

cv::Mat k;

exp((-1/pow(sigma,2))*(cv::max)((xx+yy-2*xy)/numel_x,0),k); return k;

} cv::Mat CSK_Tracker::dense_guess_kernel(double sigma, cv::Mat x)

{

//xf = fft2(x)

cv::Mat xf = fft2d(x);

vector<cv::Mat> xf_ri(xf.channels());

cv::split(xf,xf_ri); //xx = x(:)' * x(:);

double xx = 0;

cv::Scalar sum_x = sum(x.mul(x));

for (int i = 0; i < sum_x.cols; i++)

{

xx += sum_x[i];

} //yf = xf

//yy = xx

cv::Mat yf;

xf.copyTo(yf);

double yy = xx;

vector<cv::Mat> yf_ri(yf.channels());

cv::split(yf,yf_ri); //xyf = xf. * conj(yf)

cv::Mat xyf = c_mul(xf,conj(yf)); //xy = real(circshift(ifft2(xyf), floor(size(x)/2)));

idft(xyf,xyf);

xyf = xyf/(xyf.rows*xyf.cols); vector<cv::Mat> xy_ri(xyf.channels());

cv::split(xyf,xy_ri);

cv::Mat xy = xy_ri[0];

//print_mat(xy,"xyf.txt"); int cx = xy.cols/2;

int cy = xy.rows/2;

cv::Mat q0(xy, cv::Rect(0, 0, cx, cy)); // Top-Left - Create a ROI per quadrant

cv::Mat q1(xy, cv::Rect(cx, 0, cx, cy)); // Top-Right

cv::Mat q2(xy, cv::Rect(0, cy, cx, cy)); // Bottom-Left

cv::Mat q3(xy, cv::Rect(cx, cy, cx, cy)); // Bottom-Right

cv::Mat tmp; // swap quadrants (Top-Left with Bottom-Right)

q0.copyTo(tmp);

q3.copyTo(q0);

tmp.copyTo(q3);

q1.copyTo(tmp); // swap quadrant (Top-Right with Bottom-Left)

q2.copyTo(q1);

tmp.copyTo(q2); int numel_x = x.rows*x.cols;

cv::Mat k;

exp((-1/pow(sigma,2))*(cv::max)((xx+yy-2*xy)/numel_x,0),k); return k;

} cv::Mat CSK_Tracker::get_subwindow(cv::Mat im, cv::Point pos, int* sz, cv::Mat cos_window)

{

//xs = floor(pos(2)) + (1:sz(2)) - floor(sz(2)/2);

//ys = floor(pos(1)) + (1:sz(1)) - floor(sz(1)/2);

vector<int> xs(sz[1]);

vector<int> ys(sz[0]);

for (int i = 0; i < sz[1]; i++)

{

xs[i] = floor(pos.x) + i - floor(sz[1]/2);

xs[i] = (cv::max)((cv::min)(xs[i],im.cols - 1),0);

}

for (int i = 0; i < sz[0]; i++){

ys[i] = floor(pos.y) + i - floor(sz[0]/2);

ys[i] = (cv::max)((cv::min)(ys[i],im.rows - ,0);

} //cout << xs[0]<<" "<< xs[1]<< " "<<xs[2]<<'\n';

//cout << ys[0]<<" "<< ys[1]<< " "<<ys[2];

//xs(xs < 1) = 1;

//ys(ys < 1) = 1;

//xs(xs > size(im,2)) = size(im,2);

//ys(ys > size(im,1)) = size(im,1);

/*for (int i = 0; i < sz[0]; i++)

{

xs[i] = max(min(xs[i],im.cols - 1),0);

ys[i] = max(min(ys[i],im.cols - 1),0);

}*/ cv::Mat out(sz[0],sz[1],CV_32FC1);

for (int i = 0; i < sz[0]; i++)

{

for (int j = 0; j < sz[1]; j++)

{

out.at<float>(i,j) = float(im.at<uchar>(ys[i],xs[j]))/255 - 0.5;

}

}

//print_mat(out,"out.txt");

out = cos_window.mul(out);

//print_mat(out,"out.txt");

return out;

} void CSK_Tracker::tracke_one(cv::Point pos_first, int* target_sz, cv::VideoCapture capture, bool &tracking_flag)

{ //%window size, taking padding into account

int sz[2] = { floor(target_sz[0] * (1 + padding)),floor(target_sz[1] * (1 + padding)) }; //%desired output (gaussian shaped), bandwidth proportional to target size

double output_sigma = sqrt(target_sz[0] * target_sz[1])*output_sigma_factor;

cv::Mat rs(sz[0], sz[1], CV_32FC1);

cv::Mat cs(sz[0], sz[1], CV_32FC1);

for (int i = 0; i < sz[0]; i++)

{

for (int j = 0; j < sz[1]; j++)

{

rs.at<float>(i, j) = i - sz[0] / 2 + 1;

cs.at<float>(i, j) = j - sz[1] / 2 + 1;

}

}

//print_mat(rs,"rs.txt");

//print_mat(cs,"cs.txt"); cv::Mat y;

exp((-0.5 / pow(output_sigma, 2))*(rs.mul(rs) + cs.mul(cs)), y);

//print_mat(y,"y.txt"); //yf = fft2(y)

cv::Mat yf;

//IplImage *y_temp = &IplImage(y);

yf = fft2d(y);

vector<cv::Mat> yf_ri(yf.channels());

cv::split(yf, yf_ri); //%store pre-computed cosine window

cv::Mat cos_window(sz[0], sz[1], CV_32FC1);

hann2d(cos_window);

//print_mat(cos_window,"cos_window.txt"); cv::Mat frame;

cv::Mat org;

cv::Mat x;

cv::Mat k;

cv::Mat z;

cv::Mat alphaf;

cv::Mat new_alphaf; cv::Point pos = pos_first; //VideoCapture capture(0);

cv::namedWindow("img");

string file_name;

int i = 0;

while (tracking_flag)

{ capture.read(org); if (org.empty())

{

cout << "fail to open frame" << i << endl;

break;

} if (org.channels() > 1)

{

cvtColor(org, frame, CV_BGR2GRAY);

}

//%extract and pre-process subwindow

/*ofstream F("frame.txt");

for(int p = 0;p < frame.rows;p ++){

for(int q = 0;q < frame.cols;q++){

F << int(frame.at<uchar>(p,q)) << " ";

}

F << '\n';

}*/

//cout<<frame.rows<<" "<<frame.cols<<endl;

//imshow("track_frame",frame);

//cvWaitKey(10);

//cout<< int(frame.at<float>(239,10)) << int(frame.at<float>(239,20))<< endl;

//imwrite("frame.jpg",frame);

//print_img(frame,"frame.txt");

x = get_subwindow(frame, pos, sz, cos_window);

//print_mat(x,"x.txt"); if (i > 0)

{

k = dense_guess_kernel(sigma, x, z);

//print_mat(k,"k.txt"); //kf = fft2(k)

//IplImage* k_temp = &IplImage(k);

cv::Mat kf = fft2d(k);

vector<cv::Mat> kf_ri(kf.channels());

cv::split(kf, kf_ri);

//print_mat(kf_ri[0],"kf.txt"); //response = real(ifft2(alphaf .* fft2(k))); %(Eq. 9) vector<cv::Mat> response_ri(2);

cv::Mat response = c_mul(alphaf, kf);

idft(response, response);

response = response / (response.rows*response.cols);

cv::split(response, response_ri);

//print_mat(response_ri[0],"response.txt"); //%target location is at the maximum response

int max_row, max_col;

double max_response = 0;

for (int j = 0; j < response_ri[0].rows; j++)

{

for (int k = 0; k < response_ri[0].cols; k++)

{

if (response_ri[0].at<float>(j, k) > max_response)

{

max_response = response_ri[0].at<float>(j, k);

max_row = j;

max_col = k;

}

}

}

pos = pos - cv::Point(floor(sz[1] / 2), floor(sz[0] / 2)) + cv::Point(max_col + 1, max_row + 1);

} x = get_subwindow(frame, pos, sz, cos_window);

//print_mat(x,"x.txt");

k = dense_guess_kernel(sigma, x);

//print_mat(k,"k.txt");

//new_alphaf = yf ./ (fft2(k) + lambda); %(Eq. 7)

//IplImage *k_t = &IplImage(k); new_alphaf = c_div(yf, (fft2d(k) + lambda));

vector<cv::Mat> new_alphaf_ri(2);

cv::split(new_alphaf, new_alphaf_ri);

//print_mat(new_alphaf_ri[0],"new_alphaf.txt"); cv::Mat new_z = x; if (i == 0)

{

alphaf = new_alphaf;

z = x;

}

else

{

alphaf = (1 - interp_factor) * alphaf + interp_factor*new_alphaf;

z = (1 - interp_factor) * z + interp_factor * new_z;

} i++; cv::Mat frame_print;

org.copyTo(frame_print);

rectangle(frame_print, cv::Point(pos.x - target_sz[1] / 2, pos.y - target_sz[0] / 2), cv::Point(pos.x + target_sz[1] / 2, pos.y + target_sz[0] / 2), CV_RGB(255, 255, 255), 1);

circle(frame_print, cv::Point(pos.x, pos.y), 2, cvScalar(255, 0, 0)); //rectangle(frame_print, cv::Point(frame_print.cols/2 - 30, frame_print.rows/2 - 30), cv::Point(frame_print.cols / 2 + 30, frame_print.rows / 2 + 30), CV_RGB(0, 255, 0), 1);

circle(frame_print, cv::Point(frame_print.cols/2, frame_print.rows/2), 15, cvScalar(255, 0, 255)); imshow("img", frame_print); BOX.x = pos.x;

BOX.y = pos.y;

BOX.width = target_sz[1];

BOX.height = target_sz[0];

BOX.flag = tracking_flag;

memcpy(BOX_DATA, &BOX, sizeof(TrackBox)); imgInfHead img_inf_head;

img_inf_head.width = org.cols;

img_inf_head.height = org.rows;

img_inf_head.type = org.type();

int channels = org.channels(); memcpy(SEND_IMG00_HEAD, &img_inf_head, sizeof(imgInfHead));

memcpy(SEND_IMG00_DATA, org.data, org.cols*org.rows*channels); if (cvWaitKey(10) == 's')

{

tracking_flag = false;

} } return;

} void CSK_Tracker::tracke_one(ifstream &infile, cv::Point pos_first, int frame_count, int* target_sz, cv::VideoCapture capture)

{ //%window size, taking padding into account

int sz[2] = { floor(target_sz[0] * (1 + padding)),floor(target_sz[1] * (1 + padding)) }; //%desired output (gaussian shaped), bandwidth proportional to target size

double output_sigma = sqrt(target_sz[0] * target_sz[1])*output_sigma_factor;

cv::Mat rs(sz[0], sz[1], CV_32FC1);

cv::Mat cs(sz[0], sz[1], CV_32FC1);

for (int i = 0; i < sz[0]; i++)

{

for (int j = 0; j < sz[1]; j++)

{

rs.at<float>(i, j) = i - sz[0] / 2 + 1;

cs.at<float>(i, j) = j - sz[1] / 2 + 1;

}

}

//print_mat(rs,"rs.txt");

//print_mat(cs,"cs.txt"); cv::Mat y;

exp((-0.5 / pow(output_sigma, 2))*(rs.mul(rs) + cs.mul(cs)), y);

//print_mat(y,"y.txt"); //yf = fft2(y)

cv::Mat yf;

//IplImage *y_temp = &IplImage(y);

yf = fft2d(y);

vector<cv::Mat> yf_ri(yf.channels());

cv::split(yf, yf_ri); //%store pre-computed cosine window

cv::Mat cos_window(sz[0], sz[1], CV_32FC1);

hann2d(cos_window);

//print_mat(cos_window,"cos_window.txt"); cv::Mat frame;

cv::Mat x;

cv::Mat k;

cv::Mat z;

cv::Mat alphaf;

cv::Mat new_alphaf; cv::Point pos = pos_first; cv::namedWindow("haha");

string file_name; for (int i = 0; i < frame_count; ++i) { double totaltime; if (!capture.read(frame))

{

cout << "读取视频失败" << endl;

return;

} /*getline(infile,file_name);

frame = imread(file_name);*/ if (frame.empty())

{

cout << "fail to open frame" << i << endl;

break;

} if (frame.channels() > 1)

{

cvtColor(frame, frame, CV_BGR2GRAY);

}

//%extract and pre-process subwindow

/*ofstream F("frame.txt");

for(int p = 0;p < frame.rows;p ++){

for(int q = 0;q < frame.cols;q++){

F << int(frame.at<uchar>(p,q)) << " ";

}

F << '\n';

}*/

//cout<<frame.rows<<" "<<frame.cols<<endl;

//imshow("track_frame",frame);

//cvWaitKey(10);

//cout<< int(frame.at<float>(239,10)) << int(frame.at<float>(239,20))<< endl;

//imwrite("frame.jpg",frame);

//print_img(frame,"frame.txt");

x = get_subwindow(frame, pos, sz, cos_window);

//print_mat(x,"x.txt"); if (i > 0)

{

k = dense_guess_kernel(sigma, x, z);

//print_mat(k,"k.txt"); //kf = fft2(k)

//IplImage* k_temp = &IplImage(k);

cv::Mat kf = fft2d(k);

vector<cv::Mat> kf_ri(kf.channels());

cv::split(kf, kf_ri);

//print_mat(kf_ri[0],"kf.txt"); //response = real(ifft2(alphaf .* fft2(k))); %(Eq. 9) vector<cv::Mat> response_ri(2);

cv::Mat response = c_mul(alphaf, kf);

idft(response, response);

response = response / (response.rows*response.cols);

cv::split(response, response_ri);

//print_mat(response_ri[0],"response.txt"); //%target location is at the maximum response

int max_row, max_col;

double max_response = 0;

for (int j = 0; j < response_ri[0].rows; j++)

{

for (int k = 0; k < response_ri[0].cols; k++)

{

if (response_ri[0].at<float>(j, k) > max_response)

{

max_response = response_ri[0].at<float>(j, k);

max_row = j;

max_col = k;

}

}

}

pos = pos - cv::Point(floor(sz[1] / 2), floor(sz[0] / 2)) + cv::Point(max_col + 1, max_row + 1);

} x = get_subwindow(frame, pos, sz, cos_window);

//print_mat(x,"x.txt");

k = dense_guess_kernel(sigma, x);

//print_mat(k,"k.txt");

//new_alphaf = yf ./ (fft2(k) + lambda); %(Eq. 7)

//IplImage *k_t = &IplImage(k); new_alphaf = c_div(yf, (fft2d(k) + lambda));

vector<cv::Mat> new_alphaf_ri(2);

cv::split(new_alphaf, new_alphaf_ri);

//print_mat(new_alphaf_ri[0],"new_alphaf.txt"); cv::Mat new_z = x; if (i == 0)

{

alphaf = new_alphaf;

z = x;

}

else

{

alphaf = (1 - interp_factor) * alphaf + interp_factor*new_alphaf;

z = (1 - interp_factor) * z + interp_factor * new_z;

} //draw

// rectangle(frame,Point(pos.x - target_sz/2,pos.y - target_sz/2),Point(pos.x + target_sz/2,pos.y + target_sz/2),CV_RGB(255,255,255),2);

// imshow("haha",frame);

// uchar key;

// key = waitKey(10);

// if (key == 'q')

// {

// break;

// } cv::Mat frame_print;

frame.copyTo(frame_print);

cv::rectangle(frame_print, cv::Point(pos.x - target_sz[1] / 2, pos.y - target_sz[0] / 2), cv::Point(pos.x + target_sz[1] / 2, pos.y + target_sz[0] / 2), CV_RGB(255, 255, 255), 1);

cv::circle(frame_print, cv::Point(pos.x, pos.y), 2, cvScalar(255, 0, 0)); /*totaltime = (double)(finish - start) / CLOCKS_PER_SEC;*/

//cout << "\n此程序的运行时间为" << totaltime * 1000 << "ms!" << endl;

imshow("haha", frame_print);

cvWaitKey(10); } return;

} //void CSK_Tracker::run_tracker(string video_name, Point pos, int target_sz)

//{

//

// VideoCapture capture(video_name);

// if (!capture.isOpened())

// {

// cout << "Fail to open video " << video_name << endl;

// return;

// }

// int frame_count = int(capture.get(CV_CAP_PROP_FRAME_COUNT));

// double rate = capture.get(CV_CAP_PROP_FPS);

// int width = capture.get(CV_CAP_PROP_FRAME_WIDTH);

// int height = capture.get(CV_CAP_PROP_FRAME_HEIGHT);

//

// //%window size, taking padding into account

// int sz = floor(target_sz * (1 + padding));

//

// //%desired output (gaussian shaped), bandwidth proportional to target size

// double output_sigma = target_sz*output_sigma_factor;

// Mat rs(sz,sz,CV_32FC1);

// Mat cs(sz,sz,CV_32FC1);

// for (int i = 0; i < sz; i++)

// {

// for (int j = 0; j < sz; j++)

// {

// rs.at<float>(i,j) = i - sz/2 +1;

// cs.at<float>(i,j) = j - sz/2 +1;

// }

// }

//

// Mat y;

// exp((-0.5/pow(output_sigma,2))*(rs.mul(rs) + cs.mul(cs)),y);

//

// //yf = fft2(y)

// Mat yf;

// yf = fft2d(y);

// vector<Mat> yf_ri(yf.channels());

// cv::split(yf,yf_ri);

//

//

// //%store pre-computed cosine window

// Mat cos_window(sz,sz,CV_32FC1);

// hann2d(cos_window);

//

// vector<Point> position(frame_count);

//

// Mat frame;

// Mat x;

// Mat k;

// Mat z;

// Mat alphaf;

// Mat new_alphaf;

//

// namedWindow("haha");

//

// for (int i = 0; i < frame_count; i++)

// {

// if (!capture.read(frame))

// {

// cout << "read frame failed!" << endl;

// }

// if (frame.channels() > 1)

// {

// cvtColor(frame,frame,CV_BGR2GRAY);

// }

//

// //%extract and pre-process subwindow

//

// x = get_subwindow(frame, pos, sz, cos_window);

//

//

// if (i > 0)

// {

// k = dense_guess_kernel(sigma,x,z);

//

// //kf = fft2(k)

// Mat kf = fft2d(k);

// vector<Mat> kf_ri(kf.channels());

// cv::split(kf,kf_ri);

//

// //response = real(ifft2(alphaf .* fft2(k))); %(Eq. 9)

//

// vector<Mat> response_ri(2);

// Mat response = c_mul(alphaf,kf);

// idft(response,response);

// response = response/(response.rows*response.cols);

// cv::split(response,response_ri);

//

// //%target location is at the maximum response

// int max_row, max_col;

// double max_response = 0;

// for (int j = 0; j < response_ri[0].rows; j++)

// {

// for (int k = 0; k < response_ri[0].cols; k++)

// {

// if (response_ri[0].at<float>(j,k) > max_response)

// {

// max_response = response_ri[0].at<float>(j,k);

// max_row = j;

// max_col = k;

// }

// }

// }

// pos = pos - Point(floor(sz/2),floor(sz/2)) + Point(max_col,max_row);

// }

//

// x = get_subwindow(frame,pos,sz,cos_window);

// k = dense_guess_kernel(sigma,x);

// //new_alphaf = yf ./ (fft2(k) + lambda); %(Eq. 7)

// new_alphaf = c_div(yf,(fft2d(k) + lambda));

// vector<Mat> new_alphaf_ri(2);

// cv::split(new_alphaf,new_alphaf_ri);

//

// Mat new_z = x;

//

// if (i == 0)

// {

// alphaf = new_alphaf;

// z = x;

// }

// else

// {

// alphaf = (1 - interp_factor) * alphaf +interp_factor*new_alphaf;

// z = (1 - interp_factor) * z + interp_factor * new_z;

// }

//

// position[i] = pos;

//

// //draw

// rectangle(frame,Point(pos.x - target_sz/2,pos.y - target_sz/2),Point(pos.x + target_sz/2,pos.y + target_sz/2),CV_RGB(255,255,255),2);

// imshow("haha",frame);

// uchar key;

// key = waitKey(10);

// if (key == 'q')

// {

// break;

// }

// }

//} cv::Mat CSK_Tracker::conj(cv::Mat a)

{

cv::Mat b;

a.copyTo(b);

vector<cv::Mat> b_ri(2);

cv::split(b,b_ri);

b_ri[1] = -b_ri[1];

merge(b_ri,b);

return b;

} cv::Mat CSK_Tracker::c_mul(cv::Mat a, cv::Mat b)

{

if (!(a.channels() == 2 || b.channels() == 2))

{

cout << "c_mul error!" << endl;

}

vector<cv::Mat> a_ri(2);

vector<cv::Mat> b_ri(2);

cv::split(a,a_ri);

cv::split(b,b_ri);

vector<cv::Mat> c_ri(2);

c_ri[0] = a_ri[0].mul(b_ri[0]) - a_ri[1].mul(b_ri[1]);

c_ri[1] = a_ri[0].mul(b_ri[1]) + a_ri[1].mul(b_ri[0]);

cv::Mat c;

merge(c_ri,c);

return c;

}

cv::Mat CSK_Tracker::c_div(cv::Mat a, cv::Mat b)

{

cv::Mat c;

c = c_mul(a,conj(b));

vector<cv::Mat> c_ri(2);

cv::split(c,c_ri);

vector<cv::Mat> mag_b_ri(2);

cv::Mat mag_b = c_mul(b,conj(b));

cv::split(mag_b,mag_b_ri);

c_ri[0] = c_ri[0]/mag_b_ri[0];

c_ri[1] = c_ri[1]/mag_b_ri[0];

merge(c_ri,c);

return c;

} cv::Mat CSK_Tracker::fft2d(cv::Mat a)

{

cv::Mat padded_a;

//int m_a = getOptimalDFTSize(a.rows);

//int n_a = getOptimalDFTSize(a.cols);

int m_a = a.rows;

int n_a = a.cols;

copyMakeBorder(a, padded_a, 0, m_a - a.rows, 0, n_a - a.cols, cv::BORDER_CONSTANT, cv::Scalar::all(0));

cv::Mat planes_a[] = { cv::Mat_<float>(padded_a), cv::Mat::zeros(padded_a.size(),CV_32F)};

cv::Mat af;

merge(planes_a, 2, af);

dft(af,af);

return af;

} //Mat CSK_Tracker::fft2d(IplImage *src)

//{ //实部、虚部

// IplImage *image_Re = 0, *image_Im = 0, *Fourier = 0;

// IplImage *D;

// // int i, j;

// image_Re = cvCreateImage(cvGetSize(src), IPL_DEPTH_64F, 1); //实部

// //Imaginary part

// image_Im = cvCreateImage(cvGetSize(src), IPL_DEPTH_64F, 1); //虚部

// //2 channels (image_Re, image_Im)

// Fourier = cvCreateImage(cvGetSize(src), IPL_DEPTH_64F, 2);

// // Real part conversion from u8 to 64f (double)

// cvConvertScale(src, image_Re);

// // Imaginary part (zeros)

// cvZero(image_Im);

// // Join real and imaginary parts and stock them in Fourier image

// cvMerge(image_Re, image_Im, 0, 0, Fourier);

//

// // Application of the forward Fourier transform

// cvDFT(Fourier, D, CV_DXT_FORWARD);

// cvReleaseImage(&image_Re);

// cvReleaseImage(&image_Im);

// cvReleaseImage(&Fourier);

// Mat dst = Mat(D);

// return dst;

//} void CSK_Tracker::print_mat(cv::Mat a, string file_name)

{

ofstream fout(file_name);

int col = a.cols;

int row = a.rows;

for(int i = 0; i< row; i++){

for(int j = 0; j < col; j++){

fout << a.at<float>(i,j) << " ";

}

fout << '\n';

}

fout.close();

} void CSK_Tracker::print_img(cv::Mat a, string file_name)

{

ofstream fout(file_name);

int col = a.cols;

int row = a.rows;

for(int i = 0; i< row; i++){

for(int j = 0; j < col; j++){

fout << float(a.at<uchar>(i,j)) << " ";

}

fout << endl;

}

fout.close();

}

图像跟踪(1) CSK的更多相关文章

- python + opencv: kalman 跟踪

之前博文中讲解过kalman滤波的原理和应用,这里用一个跟踪鼠标的例程来演示怎么在opencv里用自带的kalman函数进行目标跟踪,文章的内容对做图像跟踪有借鉴意义.文章主要是网络资源进行整理和简单 ...

- 目标跟踪ObjectT综述介绍

此文也很详细:http://blog.csdn.net/maochongsandai110/article/details/11530045 原文链接:http://blog.csdn.net/pp5 ...

- HMS Core AR Engine 2D图片/3D物体跟踪技术 助力打造更智能AR交互体验

AR技术已经被广泛应用于营销.教育.游戏.展览等场景.通过2D图像跟踪技术和3D物体跟踪技术,用户只需使用一台手机进行拍摄,即可实现海报.卡牌等平面物体以及文物.手办等立体物体的AR效果.尽管近年来2 ...

- [Object Tracking] Overview of algorithms for Object Tracking

From: https://www.zhihu.com/question/26493945 可以载入史册的知乎贴 目标跟踪之NIUBILITY的相关滤波 - 专注于分享目标跟踪中非常高效快速的相关滤波 ...

- opencv笔记6:角点检测

time:2015年10月09日 星期五 23时11分58秒 # opencv笔记6:角点检测 update:从角点检测,学习图像的特征,这是后续图像跟踪.图像匹配的基础. 角点检测是什么鬼?前面一篇 ...

- 机器学习:Python实现聚类算法(三)之总结

考虑到学习知识的顺序及效率问题,所以后续的几种聚类方法不再详细讲解原理,也不再写python实现的源代码,只介绍下算法的基本思路,使大家对每种算法有个直观的印象,从而可以更好的理解函数中参数的意义及作 ...

- m100(2) 云台自动跟踪

大疆 210云台控制 https://developer.dji.com/onboard-sdk/documentation/sample-doc/advanced-sensing-target-tr ...

- Dreamweaver 1 网页制作

1.站点 1.1 创建站点 点击菜单栏中站点进行站点创建,输入站点名称,路径 1.2 设置图像文件夹 1.3 站点管理 站点的编辑.复制.删除 2.页面属性栏 2.1 外观 1.设置页面整体的字体.大 ...

- 视觉库—OpenCV

视频会议软件的视频质量除了与外置设备.编码器相关外,还与视频的后处理技术相关,视频图像通过后处理技术,如图像增强.图像去噪等,图像质量会得到主观上较大的提高.而我们通常的视频后处理技术会采用开源的项目 ...

随机推荐

- AngularJS+Ionic开发-2.项目结构介绍

使用上篇博客<开发环境搭建>中的命令创建完成IonicHelloWorld项目,在VSCode中的左侧,显示该项目的结构信息,如下图所示: 1 .sourcesmaps文件夹 调试状态的j ...

- Java学习笔记之——常用快捷键(eclipse)

* Ctrl+C 复制 * Ctrl+V 粘贴 * Ctrl+A 全选 * Ctrl+S 保存 * Ctrl+Z 撤销 * Ctrl+Y 还原 * Ctrl+X 剪切 * Ctrl+F 查找 * ...

- Java框架之Struts2(一)

在学习Struts2之前,我们要知道Java为什么要有反射机制呢?反射机制可以说是填补Java不能动态访问某一个类的空白.利用反射机制,动态的创建一个对象.动态的访问类的某个属性,而且访问哪一个属性自 ...

- 【Java POI】1、Java POI的使用

很多时候,一个软件应用程序需要生成Microsoft Excel文件格式的报告.有时,一个应用程序甚至希望将Excel文件作为输入数据.例如,一个公司开发的应用程序将财务部门需要所有输出生成自己的Ex ...

- Vsftpd+Tengine+SpringMVC实现上传图片

第三部分:SpringMVC实现上传 1.1 思路 (1)使用SpringMVC上传组件,从页面表单接收图片 (2)使用vsftpd组件,将图片上传到Linux服务器 a.服务端:在Linux上安装f ...

- 数组式访问-ArrayAccess

以前对ArrayAccess不是很熟悉,现在整理下下有关ArrayAccess相关的知识,ArrayAccess接口就是提供像访问数组一样访问对象的能力的接口. 接口内容如下: ArrayAccess ...

- javascript如何操作数组

说明 如需求:后台返回一个用户列表数组,该数组可能为空,最多只可能会有10个用户, 页面中A,B两处展示用户列表,B处不管如何都会展示返回的所有用户,A处需要展示10个用户,不足10个展示默认用户, ...

- SQL Server 中的一些概念

学习SQL Server 2012编程入门经典(第4版)的笔记 1.事务日志 任意数据库的更改起初不进入数据库本身,而是不断地被写入到事务日志. 日志是数据进入磁盘上的最先位置. 2.表 数据库中实际 ...

- js之跑马灯广告

目标效果:每过1秒重复把广告的第一个字符放到最后,达到动态跑马灯效果 代码如下: <!DOCTYPE html> <html lang="en"> < ...

- SPOJ GSS3 (动态dp)

题意 题目链接 Sol 这题可以动态dp做. 设\(f[i]\)表示以\(i\)为结尾的最大子段和,\(g[i]\)表示\(1-i\)的最大子段和 那么 \(f[i] = max(f[i - 1] + ...