Hadoop Ecosystem: Deploying Sqoop and Basic Usage


Author: Yin Zhengjie (尹正杰)

Copyright notice: this is an original work. Reproduction is not permitted; violators will be held legally liable.

I. Deploying Sqoop

1>. Download Sqoop (download URL: http://mirrors.hust.edu.cn/apache/sqoop/1.4.7/. Downloading the latest version is recommended; as of 2018-06-14 the latest version is 1.4.7.)

2>. Extract the archive and create a symbolic link

[yinzhengjie@s101 data]$ tar zxf sqoop-1.4..bin__hadoop-2.6..tar.gz -C /soft/

[yinzhengjie@s101 data]$ ln -s /soft/sqoop-1.4..bin__hadoop-2.6./ /soft/sqoop

[yinzhengjie@s101 data]$

3>. Configure the environment variables and apply them

[yinzhengjie@s101 ~]$ sudo vi /etc/profile

[sudo] password for yinzhengjie:

[yinzhengjie@s101 ~]$ tail - /etc/profile

#ADD SQOOP

SQOOP_HOME=/soft/sqoop

PATH=$PATH:$SQOOP_HOME/bin

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ source /etc/profile

[yinzhengjie@s101 ~]$
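Editing /etc/profile by hand is easy to repeat by accident. The following is a minimal sketch, assuming the /soft/sqoop path from step 2. The function name add_sqoop_env is hypothetical, and the export keyword is an addition that does not appear in the lines above. It appends the two variables only when SQOOP_HOME is not already defined in the file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the Sqoop variables to a profile file once.
# Usage: add_sqoop_env /path/to/profile   (writing /etc/profile requires root)
add_sqoop_env() {
  local profile="$1"
  # Skip if SQOOP_HOME is already defined in the file.
  if grep -q 'SQOOP_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  # The quoted heredoc delimiter keeps $PATH and $SQOOP_HOME unexpanded.
  cat >> "$profile" <<'EOF'
#ADD SQOOP
export SQOOP_HOME=/soft/sqoop
export PATH=$PATH:$SQOOP_HOME/bin
EOF
}
```

Running it a second time against the same file leaves the file unchanged, so it can be part of a provisioning script without duplicating the PATH entry.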

4>. Create the sqoop-env.sh configuration file

[yinzhengjie@s101 ~]$ cp /soft/sqoop/conf/sqoop-env-template.sh /soft/sqoop/conf/sqoop-env.sh

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ more /soft/sqoop/conf/sqoop-env.sh | grep -v ^# | grep -v ^$

export HADOOP_COMMON_HOME=/soft/hadoop

export HADOOP_MAPRED_HOME=/soft/hadoop

export HBASE_HOME=/soft/hbase

export HIVE_HOME=/soft/hive

export ZOOCFGDIR=/soft/zk/conf

[yinzhengjie@s101 ~]$

5>. Place the MySQL driver in sqoop/lib

[yinzhengjie@s101 ~]$ cp /soft/hive/lib/mysql-connector-java-5.1..jar /soft/sqoop/lib/

[yinzhengjie@s101 ~]$

6>. Verify the installation with sqoop version

[yinzhengjie@s101 ~]$ sqoop version

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

Sqoop 1.4.

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec :: STD

[yinzhengjie@s101 ~]$
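Before running sqoop version, it can help to confirm that steps 2, 4 and 5 left the expected files in place. This is a rough sketch under the assumption that the layout matches the one used above (bin/sqoop, conf/sqoop-env.sh, and a mysql-connector-java jar in lib). The function name check_sqoop_layout is hypothetical and is not part of Sqoop.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report what is missing under a Sqoop install directory.
# Prints one line per problem and returns non-zero if any were found.
check_sqoop_layout() {
  local home="$1" status=0
  if [ ! -x "$home/bin/sqoop" ]; then
    echo "missing executable: $home/bin/sqoop"; status=1
  fi
  if [ ! -f "$home/conf/sqoop-env.sh" ]; then
    echo "missing config: $home/conf/sqoop-env.sh"; status=1
  fi
  # The MySQL driver jar name carries a version, so match it with a glob.
  if ! ls "$home"/lib/mysql-connector-java-*.jar >/dev/null 2>&1; then
    echo "missing MySQL JDBC driver in $home/lib"; status=1
  fi
  return "$status"
}
```

On the host above it would be called as check_sqoop_layout /soft/sqoop; no output and a zero exit status mean all three items were found.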

II. Basic usage

1>. Connect to the MySQL database from the sqoop command line

[yinzhengjie@s101 ~]$ sqoop list-databases --connect jdbc:mysql://s101 --username root -P

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

Enter password:

// :: INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

information_schema

hive

mysql

performance_schema

[yinzhengjie@s101 ~]$

2>. View the sqoop help

[yinzhengjie@s101 ~]$ sqoop help

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

usage: sqoop COMMAND [ARGS]

Available commands:

  codegen            Generate code to interact with database records
  create-hive-table  Import a table definition into Hive
  eval               Evaluate a SQL statement and display the results
  export             Export an HDFS directory to a database table
  help               List available commands
  import             Import a table from a database to HDFS
  import-all-tables  Import tables from a database to HDFS
  import-mainframe   Import datasets from a mainframe server to HDFS
  job                Work with saved jobs
  list-databases     List available databases on a server
  list-tables        List available tables in a database
  merge              Merge results of incremental imports
  metastore          Run a standalone Sqoop metastore
  version            Display version information

See 'sqoop help COMMAND' for information on a specific command.

[yinzhengjie@s101 ~]$


[yinzhengjie@s101 ~]$ sqoop import --help

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

usage: sqoop import [GENERIC-ARGS] [TOOL-ARGS]

Common arguments:

  --connect <jdbc-uri>  Specify JDBC connect string
  --connection-manager <class-name>  Specify connection manager class name
  --connection-param-file <properties-file>  Specify connection parameters file
  --driver <class-name>  Manually specify JDBC driver class to use
  --hadoop-home <hdir>  Override $HADOOP_MAPRED_HOME_ARG
  --hadoop-mapred-home <dir>  Override $HADOOP_MAPRED_HOME_ARG
  --help  Print usage instructions
  --metadata-transaction-isolation-level <isolationlevel>  Defines the transaction isolation level for metadata queries. For more details check java.sql.Connection javadoc or the JDBC specificaiton
  --oracle-escaping-disabled <boolean>  Disable the escaping mechanism of the Oracle/OraOop connection managers
  -P  Read password from console
  --password <password>  Set authentication password
  --password-alias <password-alias>  Credential provider password alias
  --password-file <password-file>  Set authentication password file path
  --relaxed-isolation  Use read-uncommitted isolation for imports
  --skip-dist-cache  Skip copying jars to distributed cache
  --temporary-rootdir <rootdir>  Defines the temporary root directory for the import
  --throw-on-error  Rethrow a RuntimeException on error occurred during the job
  --username <username>  Set authentication username
  --verbose  Print more information while working

Import control arguments:

  --append  Imports data in append mode
  --as-avrodatafile  Imports data to Avro data files
  --as-parquetfile  Imports data to Parquet files
  --as-sequencefile  Imports data to SequenceFiles
  --as-textfile  Imports data as plain text (default)
  --autoreset-to-one-mapper  Reset the number of mappers to one mapper if no split key available
  --boundary-query <statement>  Set boundary query for retrieving max and min value of the primary key
  --columns <col,col,col...>  Columns to import from table
  --compression-codec <codec>  Compression codec to use for import
  --delete-target-dir  Imports data in delete mode
  --direct  Use direct import fast path
  --direct-split-size <n>  Split the input stream every 'n' bytes when importing in direct mode
  -e,--query <statement>  Import results of SQL 'statement'
  --fetch-size <n>  Set number 'n' of rows to fetch from the database when more rows are needed
  --inline-lob-limit <n>  Set the maximum size for an inline LOB
  -m,--num-mappers <n>  Use 'n' map tasks to import in parallel
  --mapreduce-job-name <name>  Set name for generated mapreduce job
  --merge-key <column>  Key column to use to join results
  --split-by <column-name>  Column of the table used to split work units
  --split-limit <size>  Upper Limit of rows per split for split columns of Date/Time/Timestamp and integer types. For date or timestamp fields it is calculated in seconds. split-limit should be greater than
  --table <table-name>  Table to read
  --target-dir <dir>  HDFS plain table destination
  --validate  Validate the copy using the configured validator
  --validation-failurehandler <validation-failurehandler>  Fully qualified class name for ValidationFailureHandler
  --validation-threshold <validation-threshold>  Fully qualified class name for ValidationThreshold
  --validator <validator>  Fully qualified class name for the Validator
  --warehouse-dir <dir>  HDFS parent for table destination
  --where <where clause>  WHERE clause to use during import
  -z,--compress  Enable compression

Incremental import arguments:

  --check-column <column>  Source column to check for incremental change
  --incremental <import-type>  Define an incremental import of type 'append' or 'lastmodified'
  --last-value <value>  Last imported value in the incremental check column

Output line formatting arguments:

  --enclosed-by <char>  Sets a required field enclosing character
  --escaped-by <char>  Sets the escape character
  --fields-terminated-by <char>  Sets the field separator character
  --lines-terminated-by <char>  Sets the end-of-line character
  --mysql-delimiters  Uses MySQL's default delimiter set: fields: , lines: \n escaped-by: \ optionally-enclosed-by: '
  --optionally-enclosed-by <char>  Sets a field enclosing character

Input parsing arguments:

  --input-enclosed-by <char>  Sets a required field encloser
  --input-escaped-by <char>  Sets the input escape character
  --input-fields-terminated-by <char>  Sets the input field separator
  --input-lines-terminated-by <char>  Sets the input end-of-line char
  --input-optionally-enclosed-by <char>  Sets a field enclosing character

Hive arguments:

  --create-hive-table  Fail if the target hive table exists
  --external-table-dir <hdfs path>  Sets where the external table is in HDFS
  --hive-database <database-name>  Sets the database name to use when importing to hive
  --hive-delims-replacement <arg>  Replace Hive record \0x01 and row delimiters (\n\r) from imported string fields with user-defined string
  --hive-drop-import-delims  Drop Hive record \0x01 and row delimiters (\n\r) from imported string fields
  --hive-home <dir>  Override $HIVE_HOME
  --hive-import  Import tables into Hive (Uses Hive's default delimiters if none are set.)
  --hive-overwrite  Overwrite existing data in the Hive table
  --hive-partition-key <partition-key>  Sets the partition key to use when importing to hive
  --hive-partition-value <partition-value>  Sets the partition value to use when importing to hive
  --hive-table <table-name>  Sets the table name to use when importing to hive
  --map-column-hive <arg>  Override mapping for specific column to hive types.

HBase arguments:

  --column-family <family>  Sets the target column family for the import
  --hbase-bulkload  Enables HBase bulk loading
  --hbase-create-table  If specified, create missing HBase tables
  --hbase-row-key <col>  Specifies which input column to use as the row key
  --hbase-table <table>  Import to <table> in HBase

HCatalog arguments:

  --hcatalog-database <arg>  HCatalog database name
  --hcatalog-home <hdir>  Override $HCAT_HOME
  --hcatalog-partition-keys <partition-key>  Sets the partition keys to use when importing to hive
  --hcatalog-partition-values <partition-value>  Sets the partition values to use when importing to hive
  --hcatalog-table <arg>  HCatalog table name
  --hive-home <dir>  Override $HIVE_HOME
  --hive-partition-key <partition-key>  Sets the partition key to use when importing to hive
  --hive-partition-value <partition-value>  Sets the partition value to use when importing to hive
  --map-column-hive <arg>  Override mapping for specific column to hive types.

HCatalog import specific options:

  --create-hcatalog-table  Create HCatalog before import
  --drop-and-create-hcatalog-table  Drop and Create HCatalog before import
  --hcatalog-storage-stanza <arg>  HCatalog storage stanza for table creation

Accumulo arguments:

  --accumulo-batch-size <size>  Batch size in bytes
  --accumulo-column-family <family>  Sets the target column family for the import
  --accumulo-create-table  If specified, create missing Accumulo tables
  --accumulo-instance <instance>  Accumulo instance name.
  --accumulo-max-latency <latency>  Max write latency in milliseconds
  --accumulo-password <password>  Accumulo password.
  --accumulo-row-key <col>  Specifies which input column to use as the row key
  --accumulo-table <table>  Import to <table> in Accumulo
  --accumulo-user <user>  Accumulo user name.
  --accumulo-visibility <vis>  Visibility token to be applied to all rows imported
  --accumulo-zookeepers <zookeepers>  Comma-separated list of zookeepers (host:port)

Code generation arguments:

  --bindir <dir>  Output directory for compiled objects
  --class-name <name>  Sets the generated class name. This overrides --package-name. When combined with --jar-file, sets the input class.
  --escape-mapping-column-names <boolean>  Disable special characters escaping in column names
  --input-null-non-string <null-str>  Input null non-string representation
  --input-null-string <null-str>  Input null string representation
  --jar-file <file>  Disable code generation; use specified jar
  --map-column-java <arg>  Override mapping for specific columns to java types
  --null-non-string <null-str>  Null non-string representation
  --null-string <null-str>  Null string representation
  --outdir <dir>  Output directory for generated code
  --package-name <name>  Put auto-generated classes in this package

Generic Hadoop command-line arguments:

(must preceed any tool-specific arguments)

Generic options supported are

  -conf <configuration file>  specify an application configuration file
  -D <property=value>  use value for given property
  -fs <local|namenode:port>  specify a namenode
  -jt <local|resourcemanager:port>  specify a ResourceManager
  -files <comma separated list of files>  specify comma separated files to be copied to the map reduce cluster
  -libjars <comma separated list of jars>  specify comma separated jar files to include in the classpath.
  -archives <comma separated list of archives>  specify comma separated archives to be unarchived on the compute machines.

The general command line syntax is

bin/hadoop command [genericOptions] [commandOptions]

At minimum, you must specify --connect and --table

Arguments to mysqldump and other subprograms may be supplied

after a '--' on the command line.

[yinzhengjie@s101 ~]$


3>. List tables with sqoop

[yinzhengjie@s101 ~]$ sqoop list-tables --connect jdbc:mysql://s101/yinzhengjie --username root -P

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

Enter password:

// :: INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Classmate

word

[yinzhengjie@s101 ~]$


4>. List databases with Sqoop

[yinzhengjie@s101 ~]$ sqoop list-databases --connect jdbc:mysql://s101 --username root -P

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

Enter password:

// :: INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

information_schema

hive

mysql

performance_schema

yinzhengjie

[yinzhengjie@s101 ~]$
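The commands above use -P, which prompts for the password and so does not suit unattended scripts. The import help output lists --password-file as an alternative. The following is a sketch only: the helper name write_password_file and the file location are hypothetical. Whether a bare path is resolved on the local file system or on HDFS depends on the Hadoop configuration, so the file:// prefix in the commented example is an assumption for a local file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: store a password in a file only the owner can read.
# printf '%s' avoids a trailing newline, which would otherwise become part
# of the password when the whole file is read.
write_password_file() {
  local path="$1" password="$2"
  ( umask 077 && printf '%s' "$password" > "$path" )
  chmod 400 "$path"
}

# Example (not run here; path and file:// prefix are assumptions):
#   write_password_file "$HOME/.mysql.pwd" 'your-password'
#   sqoop list-tables --connect jdbc:mysql://s101/yinzhengjie \
#     --username root --password-file "file://$HOME/.mysql.pwd"
```

Mode 400 keeps other local users from reading the file. It is still plain text on disk, so this is a convenience for scripts, not a substitute for a credential store.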


III. Importing data into HDFS with Sqoop (HDFS, YARN, MySQL and the other related services must be running)

1>. Grant privileges in the database

mysql> grant all PRIVILEGES on *.* to root@'s101' identified by 'yinzhengjie';

Query OK, rows affected (0.31 sec)

mysql> grant all PRIVILEGES on *.* to root@'s102' identified by 'yinzhengjie';

Query OK, rows affected (0.02 sec)

mysql> grant all PRIVILEGES on *.* to root@'s103' identified by 'yinzhengjie';

Query OK, rows affected (0.00 sec)

mysql> grant all PRIVILEGES on *.* to root@'s104' identified by 'yinzhengjie';

Query OK, rows affected (0.00 sec)

mysql> grant all PRIVILEGES on *.* to root@'s105' identified by 'yinzhengjie';

Query OK, rows affected (0.00 sec)

mysql> flush privileges;

Query OK, rows affected (0.02 sec)

mysql>
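Every node that may run a map task needs its own grant, so the statements above differ only in the host name. As a sketch, they can be generated from a host list. The function name gen_grants is hypothetical, and the statement text simply copies the form used above; newer MySQL releases (8.0 and later) no longer accept IDENTIFIED BY inside GRANT, so this applies only to servers that accept the syntax shown in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one GRANT statement per host, then FLUSH.
# Usage: gen_grants USER PASSWORD HOST...
# Note: a password containing a single quote would break the generated SQL.
gen_grants() {
  local user="$1" password="$2" host
  shift 2
  for host in "$@"; do
    printf "grant all PRIVILEGES on *.* to %s@'%s' identified by '%s';\n" \
      "$user" "$host" "$password"
  done
  printf 'flush privileges;\n'
}

# Prints the five grants and the flush used in the session above.
gen_grants root yinzhengjie s101 s102 s103 s104 s105
```

The output can be reviewed and then piped into the mysql client, for example gen_grants ... | mysql -u root -p.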

2>. Import data from the database into HDFS

[yinzhengjie@s101 ~]$ sqoop import --connect jdbc:mysql://s101/yinzhengjie --username root -P --table word --fields-terminated-by '\t' --target-dir /wc -m 1

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

Enter password:

// :: INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

// :: INFO tool.CodeGenTool: Beginning code generation

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /soft/hadoop

Note: /tmp/sqoop-yinzhengjie/compile/506dbf41a3a9165eebe93e9d2ec30818/word.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

// :: INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-yinzhengjie/compile/506dbf41a3a9165eebe93e9d2ec30818/word.jar

// :: WARN manager.MySQLManager: It looks like you are importing from mysql.

// :: WARN manager.MySQLManager: This transfer can be faster! Use the --direct

// :: WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

// :: INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

// :: INFO mapreduce.ImportJobBase: Beginning import of word

// :: INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

// :: INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

// :: INFO db.DBInputFormat: Using read commited transaction isolation

// :: INFO mapreduce.JobSubmitter: number of splits:

// :: INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1528967628934_0002

// :: INFO impl.YarnClientImpl: Submitted application application_1528967628934_0002

// :: INFO mapreduce.Job: The url to track the job: http://s101:8088/proxy/application_1528967628934_0002/

// :: INFO mapreduce.Job: Running job: job_1528967628934_0002

// :: INFO mapreduce.Job: Job job_1528967628934_0002 running in uber mode : false

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: Task Id : attempt_1528967628934_0002_m_000000_0, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: java.sql.SQLException: null, message from server: "Host 's105' is not allowed to connect to this MySQL server"

at org.apache.sqoop.mapreduce.db.DBInputFormat.setDbConf(DBInputFormat.java:)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:)

at org.apache.hadoop.mapred.YarnChild$.run(YarnChild.java:)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:)

Caused by: java.lang.RuntimeException: java.sql.SQLException: null, message from server: "Host 's105' is not allowed to connect to this MySQL server"

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setDbConf(DBInputFormat.java:)

... more

Caused by: java.sql.SQLException: null, message from server: "Host 's105' is not allowed to connect to this MySQL server"

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:)

at com.mysql.jdbc.MysqlIO.doHandshake(MysqlIO.java:)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:)

at java.lang.reflect.Constructor.newInstance(Constructor.java:)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:)

at java.sql.DriverManager.getConnection(DriverManager.java:)

at java.sql.DriverManager.getConnection(DriverManager.java:)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:)

... more

Container killed by the ApplicationMaster.

Container killed on request. Exit code is

Container exited with a non-zero exit code

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: Job job_1528967628934_0002 completed successfully

// :: INFO mapreduce.Job: Counters:

File System Counters

FILE: Number of bytes read=

FILE: Number of bytes written=

FILE: Number of read operations=

FILE: Number of large read operations=

FILE: Number of write operations=

HDFS: Number of bytes read=

HDFS: Number of bytes written=

HDFS: Number of read operations=

HDFS: Number of large read operations=

HDFS: Number of write operations=

Job Counters

Failed map tasks=

Launched map tasks=

Other local map tasks=

Total time spent by all maps in occupied slots (ms)=

Total time spent by all reduces in occupied slots (ms)=

Total time spent by all map tasks (ms)=

Total vcore-milliseconds taken by all map tasks=

Total megabyte-milliseconds taken by all map tasks=

Map-Reduce Framework

Map input records=

Map output records=

Input split bytes=

Spilled Records=

Failed Shuffles=

Merged Map outputs=

GC time elapsed (ms)=

CPU time spent (ms)=

Physical memory (bytes) snapshot=

Virtual memory (bytes) snapshot=

Total committed heap usage (bytes)=

File Input Format Counters

Bytes Read=

File Output Format Counters

Bytes Written=

// :: INFO mapreduce.ImportJobBase: Transferred bytes in 29.3085 seconds (2.5249 bytes/sec)

// :: INFO mapreduce.ImportJobBase: Retrieved records.

[yinzhengjie@s101 ~]$ hdfs dfs -cat /wc/part-m-00000

hello world

yinzhengjie hadoop

yinzhengjie hive

yinzhengjie hbase

[yinzhengjie@s101 ~]$

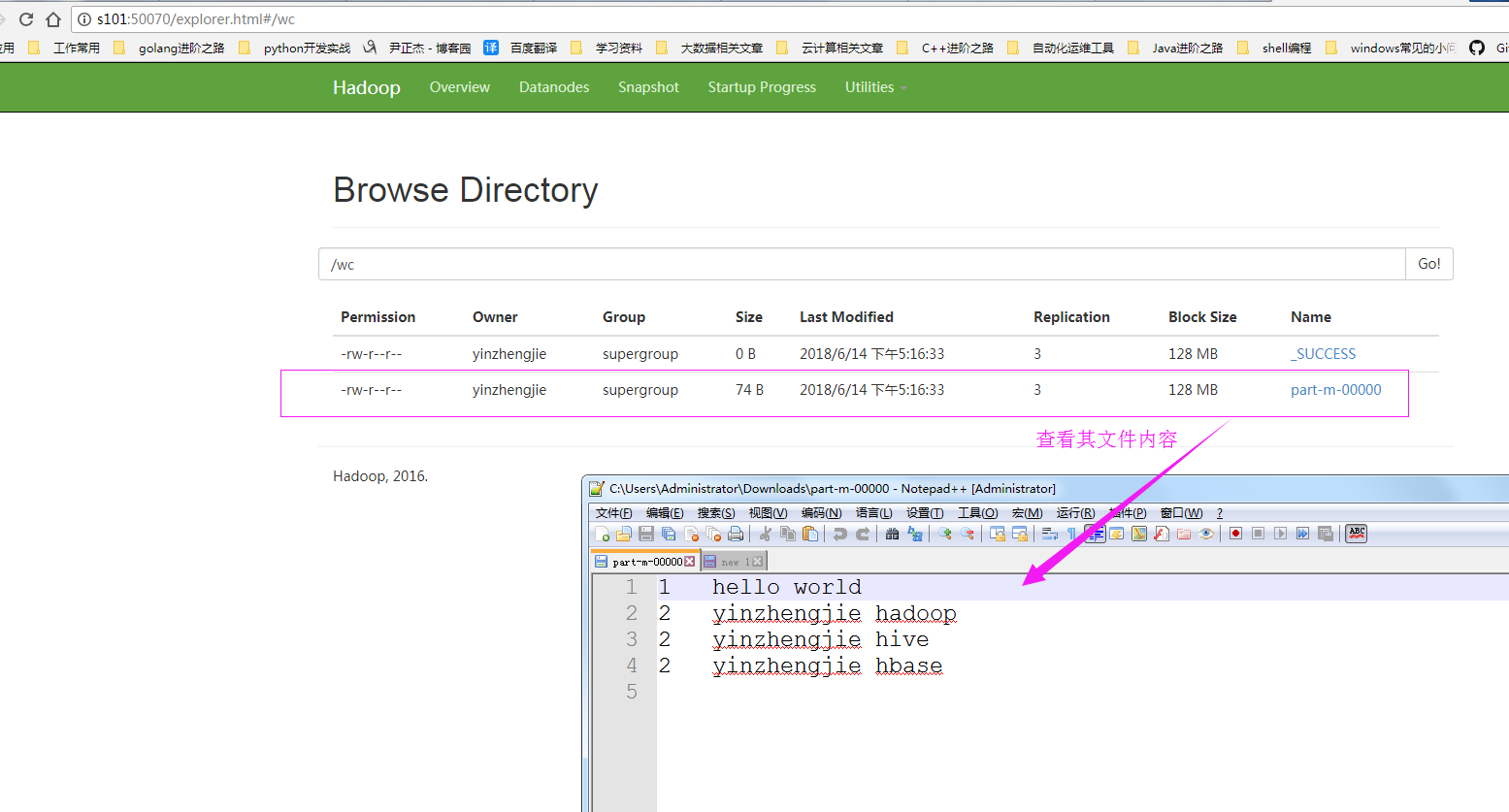

3>. View the data in the HDFS web UI

4>. Other parameters

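--table //MySQL table to import

-m //number of mappers

--target-dir //HDFS directory to import into

--fields-terminated-by //column (field) delimiter

--lines-terminated-by //row (line) delimiter

--append //append to existing data

--as-avrodatafile //write the output as Avro data files

--as-parquetfile //write the output as Parquet files

--as-sequencefile //write the output as SequenceFiles

--as-textfile //write the output as plain text files

--columns <col,col,col...> //MySQL columns to import

--compression-codec <codec> //compression codec to use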
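To show how these parameters combine, here is a small sketch of a wrapper around sqoop import. The function name import_table and the DRY_RUN variable are hypothetical; only flags listed above are used. With DRY_RUN=1 it prints the arguments one per line so the command can be reviewed before anything is submitted to the cluster.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around "sqoop import" for a single table.
# With DRY_RUN=1 it prints the arguments one per line instead of running.
# Usage: import_table JDBC_URL TABLE TARGET_DIR [COLUMNS]
import_table() {
  local url="$1" table="$2" target="$3" columns="${4:-}"
  set -- sqoop import --connect "$url" --username root -P \
    --table "$table" --target-dir "$target" \
    --fields-terminated-by '\t' --as-textfile -m 1
  # --columns is optional; append it only when a column list was given.
  if [ -n "$columns" ]; then
    set -- "$@" --columns "$columns"
  fi
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$@"
  else
    "$@"
  fi
}

# Review the arguments for the import used in this section.
DRY_RUN=1 import_table jdbc:mysql://s101/yinzhengjie word /wc
```

Building the argument list with set -- keeps each value as a separate argument, so a delimiter such as '\t' reaches sqoop unchanged.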

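IV. Importing MySQL data into Hive with Sqoop (HDFS, YARN, MySQL and the other related services must be running; Hive does not need to be started manually, because the import starts it on its own)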

1>. Edit sqoop-env.sh

[yinzhengjie@s101 ~]$ tail - /soft/sqoop/conf/sqoop-env.sh

#ADD BY YINZHENGJIE

export HIVE_CONF_DIR=/soft/hive/conf

[yinzhengjie@s101 ~]$

2>. Edit the environment variables

[yinzhengjie@s101 ~]$ sudo vi /etc/profile

[sudo] password for yinzhengjie:

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ tail - /etc/profile

#ADD sqoop import hive

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HIVE_HOME/lib/*

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ source /etc/profile

[yinzhengjie@s101 ~]$

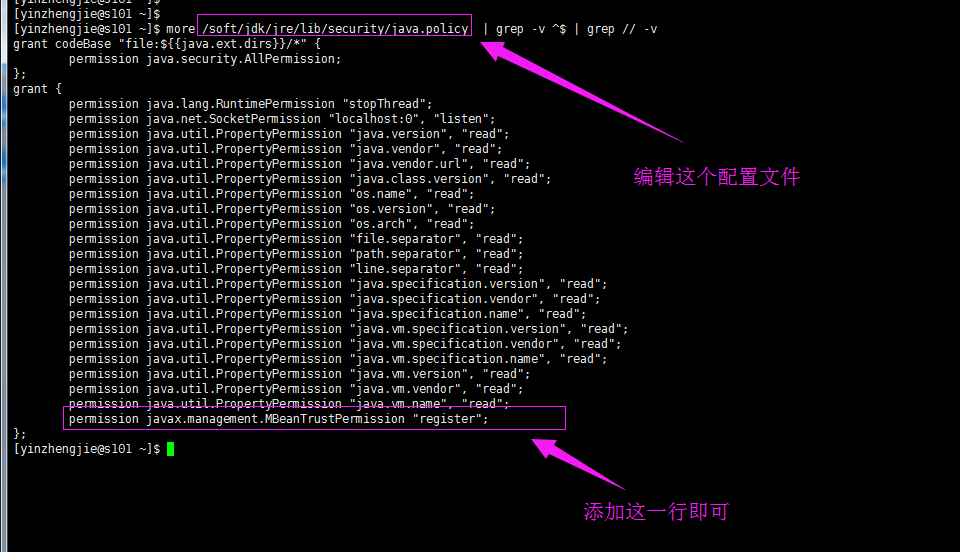

3>. Suppress the security-related exception messages (leaving this unchanged does not affect the test results)

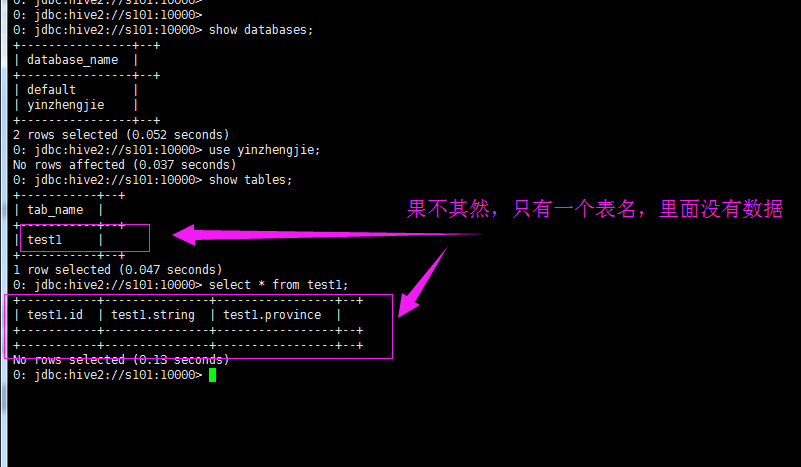

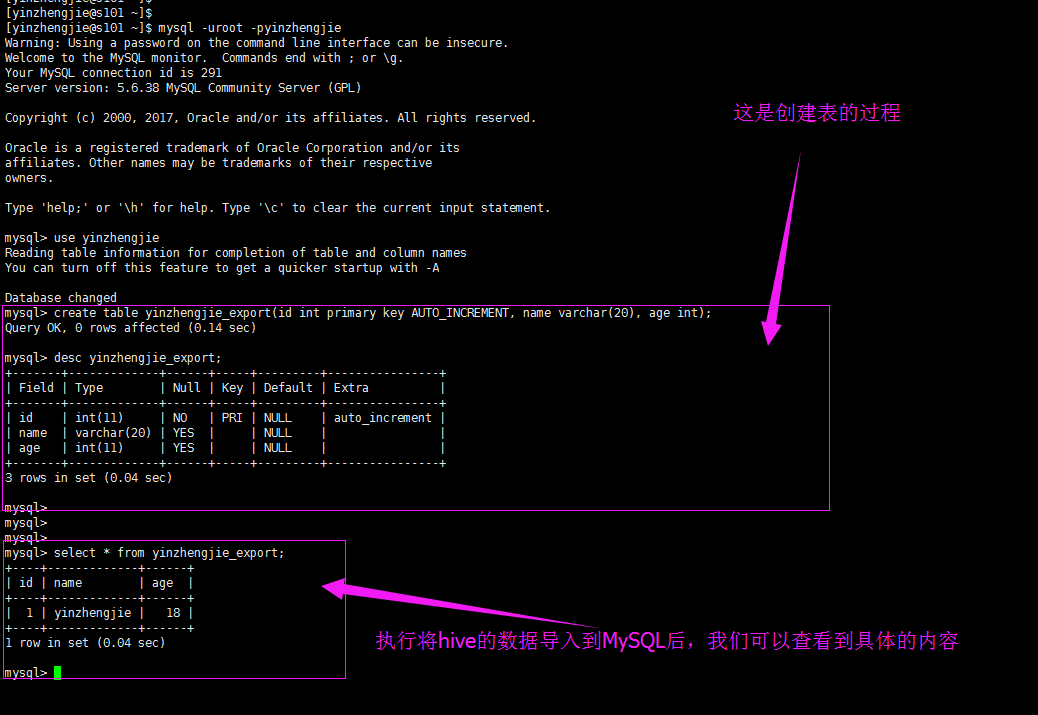

4>. Import data into Hive

0: jdbc:hive2://s101:10000> show tables;

+---------------+--+

| tab_name |

+---------------+--+

| pv |

| user_orc |

| user_parquet |

| user_rc |

| user_seq |

| user_text |

| users |

+---------------+--+

rows selected (0.061 seconds)

0: jdbc:hive2://s101:10000>

Before the import (0: jdbc:hive2://s101:10000> show tables;)

[yinzhengjie@s101 ~]$ sqoop import --connect jdbc:mysql://s101/yinzhengjie --username root -P --table word --fields-terminated-by '\t' --hive-import --create-hive-table --hive-database yinzhengjie --hive-table wc -m 1

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

Enter password:

// :: INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

// :: INFO tool.CodeGenTool: Beginning code generation

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /soft/hadoop

Note: /tmp/sqoop-yinzhengjie/compile/a904d79d3e86841540489a5459400e8b/word.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

// :: INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-yinzhengjie/compile/a904d79d3e86841540489a5459400e8b/word.jar

// :: WARN manager.MySQLManager: It looks like you are importing from mysql.

// :: WARN manager.MySQLManager: This transfer can be faster! Use the --direct

// :: WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

// :: INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

// :: INFO mapreduce.ImportJobBase: Beginning import of word

// :: INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

// :: INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

// :: INFO db.DBInputFormat: Using read commited transaction isolation

// :: INFO mapreduce.JobSubmitter: number of splits:

// :: INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1528967628934_0005

// :: INFO impl.YarnClientImpl: Submitted application application_1528967628934_0005

// :: INFO mapreduce.Job: The url to track the job: http://s101:8088/proxy/application_1528967628934_0005/

// :: INFO mapreduce.Job: Running job: job_1528967628934_0005

// :: INFO mapreduce.Job: Job job_1528967628934_0005 running in uber mode : false

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: Job job_1528967628934_0005 completed successfully

// :: INFO mapreduce.Job: Counters:

File System Counters

FILE: Number of bytes read=

FILE: Number of bytes written=

FILE: Number of read operations=

FILE: Number of large read operations=

FILE: Number of write operations=

HDFS: Number of bytes read=

HDFS: Number of bytes written=

HDFS: Number of read operations=

HDFS: Number of large read operations=

HDFS: Number of write operations=

Job Counters

Launched map tasks=

Other local map tasks=

Total time spent by all maps in occupied slots (ms)=

Total time spent by all reduces in occupied slots (ms)=

Total time spent by all map tasks (ms)=

Total vcore-milliseconds taken by all map tasks=

Total megabyte-milliseconds taken by all map tasks=

Map-Reduce Framework

Map input records=

Map output records=

Input split bytes=

Spilled Records=

Failed Shuffles=

Merged Map outputs=

GC time elapsed (ms)=

CPU time spent (ms)=

Physical memory (bytes) snapshot=

Virtual memory (bytes) snapshot=

Total committed heap usage (bytes)=

File Input Format Counters

Bytes Read=

File Output Format Counters

Bytes Written=

// :: INFO mapreduce.ImportJobBase: Transferred bytes in 58.4206 seconds (1.2667 bytes/sec)

// :: INFO mapreduce.ImportJobBase: Retrieved records.

// :: INFO mapreduce.ImportJobBase: Publishing Hive/Hcat import job data to Listeners for table word

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO hive.HiveImport: Loading uploaded data into Hive

// :: INFO conf.HiveConf: Found configuration file file:/soft/hive/conf/hive-site.xml Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

// :: INFO SessionState:

Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

// :: INFO metastore.HiveMetaStore: : Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

// :: INFO metastore.ObjectStore: ObjectStore, initialize called

// :: INFO DataNucleus.Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

// :: INFO DataNucleus.Persistence: Property datanucleus.cache.level2 unknown - will be ignored

// :: INFO metastore.ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

// :: INFO metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is MYSQL

// :: INFO metastore.ObjectStore: Initialized ObjectStore

// :: INFO metastore.HiveMetaStore: Added admin role in metastore

// :: INFO metastore.HiveMetaStore: Added public role in metastore

// :: INFO metastore.HiveMetaStore: No user is added in admin role, since config is empty

// :: INFO metastore.HiveMetaStore: : get_all_functions

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_all_functions

// :: INFO metadata.Hive: Registering function parsejson cn.org.yinzhengjie.udf.ParseJson

// :: WARN metadata.Hive: Failed to register persistent function parsejson:cn.org.yinzhengjie.udf.ParseJson. Ignore and continue.

// :: INFO metadata.Hive: Registering function parsejson cn.org.yinzhengjie.udf.MyUDTF

// :: WARN metadata.Hive: Failed to register persistent function parsejson:cn.org.yinzhengjie.udf.MyUDTF. Ignore and continue.

// :: INFO metadata.Hive: Registering function todate cn.org.yinzhengjie.udf.MyUDTF

// :: WARN metadata.Hive: Failed to register persistent function todate:cn.org.yinzhengjie.udf.MyUDTF. Ignore and continue.

// :: INFO session.SessionState: Created HDFS directory: /tmp/hive/yinzhengjie/ab78aeaa-274a-4ed6-bff0-ffa488a2c8df

// :: INFO session.SessionState: Created local directory: /home/yinzhengjie/yinzhengjie/ab78aeaa-274a-4ed6-bff0-ffa488a2c8df

// :: INFO session.SessionState: Created HDFS directory: /tmp/hive/yinzhengjie/ab78aeaa-274a-4ed6-bff0-ffa488a2c8df/_tmp_space.db

// :: INFO conf.HiveConf: Using the default value passed in for log id: ab78aeaa-274a-4ed6-bff0-ffa488a2c8df

// :: INFO session.SessionState: Updating thread name to ab78aeaa-274a-4ed6-bff0-ffa488a2c8df main

// :: INFO conf.HiveConf: Using the default value passed in for log id: ab78aeaa-274a-4ed6-bff0-ffa488a2c8df

// :: INFO ql.Driver: Compiling command(queryId=yinzhengjie_20180614030207_d95714d9-84da-405e-97b6-d9f36436e2f2): CREATE TABLE `yinzhengjie`.`wc` ( `id` INT, `string` STRING) COMMENT 'Imported by sqoop on 2018/06/14 03:01:43' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\011' LINES TERMINATED BY '\012' STORED AS TEXTFILE

// :: INFO parse.CalcitePlanner: Starting Semantic Analysis

// :: INFO parse.CalcitePlanner: Creating table yinzhengjie.wc position=

// :: INFO metastore.HiveMetaStore: : get_database: yinzhengjie

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_database: yinzhengjie

// :: INFO sqlstd.SQLStdHiveAccessController: Created SQLStdHiveAccessController for session context : HiveAuthzSessionContext [sessionString=ab78aeaa-274a-4ed6-bff0-ffa488a2c8df, clientType=HIVECLI]

// :: WARN session.SessionState: METASTORE_FILTER_HOOK will be ignored, since hive.security.authorization.manager is set to instance of HiveAuthorizerFactory.

// :: INFO hive.metastore: Mestastore configuration hive.metastore.filter.hook changed from org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl to org.apache.hadoop.hive.ql.security.authorization.plugin.AuthorizationMetaStoreFilterHook

// :: INFO metastore.HiveMetaStore: : Cleaning up thread local RawStore...

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=Cleaning up thread local RawStore...

// :: INFO metastore.HiveMetaStore: : Done cleaning up thread local RawStore

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=Done cleaning up thread local RawStore

// :: INFO ql.Driver: Semantic Analysis Completed

// :: INFO ql.Driver: Returning Hive schema: Schema(fieldSchemas:null, properties:null)

// :: INFO ql.Driver: Completed compiling command(queryId=yinzhengjie_20180614030207_d95714d9-84da-405e-97b6-d9f36436e2f2); Time taken: 2.447 seconds

// :: INFO ql.Driver: Concurrency mode is disabled, not creating a lock manager

// :: INFO ql.Driver: Executing command(queryId=yinzhengjie_20180614030207_d95714d9-84da-405e-97b6-d9f36436e2f2): CREATE TABLE `yinzhengjie`.`wc` ( `id` INT, `string` STRING) COMMENT 'Imported by sqoop on 2018/06/14 03:01:43' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\011' LINES TERMINATED BY '\012' STORED AS TEXTFILE

// :: INFO ql.Driver: Starting task [Stage-:DDL] in serial mode

// :: INFO exec.DDLTask: creating table yinzhengjie.wc on null

// :: INFO metastore.HiveMetaStore: : create_table: Table(tableName:wc, dbName:yinzhengjie, owner:yinzhengjie, createTime:, lastAccessTime:, retention:, sd:StorageDescriptor(cols:[FieldSchema(name:id, type:int, comment:null), FieldSchema(name:string, type:string, comment:null)], location:null, inputFormat:org.apache.hadoop.mapred.TextInputFormat, outputFormat:org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat, compressed:false, numBuckets:-, serdeInfo:SerDeInfo(name:null, serializationLib:org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, parameters:{serialization.format= , line.delim=

, field.delim= }), bucketCols:[], sortCols:[], parameters:{}, skewedInfo:SkewedInfo(skewedColNames:[], skewedColValues:[], skewedColValueLocationMaps:{}), storedAsSubDirectories:false), partitionKeys:[], parameters:{totalSize=, numRows=, rawDataSize=, COLUMN_STATS_ACCURATE={"BASIC_STATS":"true"}, numFiles=, comment=Imported by sqoop on // ::}, viewOriginalText:null, viewExpandedText:null, tableType:MANAGED_TABLE, privileges:PrincipalPrivilegeSet(userPrivileges:{yinzhengjie=[PrivilegeGrantInfo(privilege:INSERT, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:SELECT, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:UPDATE, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:DELETE, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true)]}, groupPrivileges:null, rolePrivileges:null), temporary:false)

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=create_table: Table(tableName:wc, dbName:yinzhengjie, owner:yinzhengjie, createTime:, lastAccessTime:, retention:, sd:StorageDescriptor(cols:[FieldSchema(name:id, type:int, comment:null), FieldSchema(name:string, type:string, comment:null)], location:null, inputFormat:org.apache.hadoop.mapred.TextInputFormat, outputFormat:org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat, compressed:false, numBuckets:-, serdeInfo:SerDeInfo(name:null, serializationLib:org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, parameters:{serialization.format= , line.delim=

, field.delim= }), bucketCols:[], sortCols:[], parameters:{}, skewedInfo:SkewedInfo(skewedColNames:[], skewedColValues:[], skewedColValueLocationMaps:{}), storedAsSubDirectories:false), partitionKeys:[], parameters:{totalSize=, numRows=, rawDataSize=, COLUMN_STATS_ACCURATE={"BASIC_STATS":"true"}, numFiles=, comment=Imported by sqoop on // ::}, viewOriginalText:null, viewExpandedText:null, tableType:MANAGED_TABLE, privileges:PrincipalPrivilegeSet(userPrivileges:{yinzhengjie=[PrivilegeGrantInfo(privilege:INSERT, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:SELECT, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:UPDATE, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:DELETE, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true)]}, groupPrivileges:null, rolePrivileges:null), temporary:false)

// :: INFO metastore.HiveMetaStore: : Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

// :: INFO metastore.ObjectStore: ObjectStore, initialize called

// :: INFO metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is MYSQL

// :: INFO metastore.ObjectStore: Initialized ObjectStore

// :: INFO common.FileUtils: Creating directory if it doesn't exist: hdfs://mycluster/user/hive/warehouse/yinzhengjie.db/wc

// :: INFO metadata.Hive: Dumping metastore api call timing information for : execution phase

// :: INFO metadata.Hive: Total time spent in this metastore function was greater than 1000ms : createTable_(Table, )=

// :: INFO ql.Driver: Completed executing command(queryId=yinzhengjie_20180614030207_d95714d9-84da-405e-97b6-d9f36436e2f2); Time taken: 1.668 seconds

OK

// :: INFO ql.Driver: OK

Time taken: 4.152 seconds

// :: INFO CliDriver: Time taken: 4.152 seconds

// :: INFO conf.HiveConf: Using the default value passed in for log id: ab78aeaa-274a-4ed6-bff0-ffa488a2c8df

// :: INFO session.SessionState: Resetting thread name to main

// :: INFO conf.HiveConf: Using the default value passed in for log id: ab78aeaa-274a-4ed6-bff0-ffa488a2c8df

// :: INFO session.SessionState: Updating thread name to ab78aeaa-274a-4ed6-bff0-ffa488a2c8df main

// :: INFO ql.Driver: Compiling command(queryId=yinzhengjie_20180614030211_2b878339-754f-4d7d-985d-fe9b86f5ec88):

LOAD DATA INPATH 'hdfs://mycluster/user/yinzhengjie/word' INTO TABLE `yinzhengjie`.`wc`

// :: INFO metastore.HiveMetaStore: : get_table : db=yinzhengjie tbl=wc

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_table : db=yinzhengjie tbl=wc

// :: INFO ql.Driver: Semantic Analysis Completed

// :: INFO ql.Driver: Returning Hive schema: Schema(fieldSchemas:null, properties:null)

// :: INFO ql.Driver: Completed compiling command(queryId=yinzhengjie_20180614030211_2b878339-754f-4d7d-985d-fe9b86f5ec88); Time taken: 0.987 seconds

// :: INFO ql.Driver: Concurrency mode is disabled, not creating a lock manager

// :: INFO ql.Driver: Executing command(queryId=yinzhengjie_20180614030211_2b878339-754f-4d7d-985d-fe9b86f5ec88):

LOAD DATA INPATH 'hdfs://mycluster/user/yinzhengjie/word' INTO TABLE `yinzhengjie`.`wc`

// :: INFO ql.Driver: Starting task [Stage-:MOVE] in serial mode

Loading data to table yinzhengjie.wc

// :: INFO exec.Task: Loading data to table yinzhengjie.wc from hdfs://mycluster/user/yinzhengjie/word

// :: INFO metastore.HiveMetaStore: : get_table : db=yinzhengjie tbl=wc

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_table : db=yinzhengjie tbl=wc

// :: INFO metastore.HiveMetaStore: : get_table : db=yinzhengjie tbl=wc

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_table : db=yinzhengjie tbl=wc

// :: ERROR hdfs.KeyProviderCache: Could not find uri with key [dfs.encryption.key.provider.uri] to create a keyProvider !!

// :: INFO metastore.HiveMetaStore: : alter_table: db=yinzhengjie tbl=wc newtbl=wc

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=alter_table: db=yinzhengjie tbl=wc newtbl=wc

// :: INFO ql.Driver: Starting task [Stage-:STATS] in serial mode

// :: INFO exec.StatsTask: Executing stats task

// :: INFO metastore.HiveMetaStore: : get_table : db=yinzhengjie tbl=wc

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_table : db=yinzhengjie tbl=wc

// :: INFO metastore.HiveMetaStore: : get_table : db=yinzhengjie tbl=wc

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_table : db=yinzhengjie tbl=wc

// :: INFO metastore.HiveMetaStore: : alter_table: db=yinzhengjie tbl=wc newtbl=wc

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=alter_table: db=yinzhengjie tbl=wc newtbl=wc

// :: INFO hive.log: Updating table stats fast for wc

// :: INFO hive.log: Updated size of table wc to

// :: INFO exec.StatsTask: Table yinzhengjie.wc stats: [numFiles=, numRows=, totalSize=, rawDataSize=]

// :: INFO ql.Driver: Completed executing command(queryId=yinzhengjie_20180614030211_2b878339-754f-4d7d-985d-fe9b86f5ec88); Time taken: 0.858 seconds

OK

// :: INFO ql.Driver: OK

Time taken: 1.847 seconds

// :: INFO CliDriver: Time taken: 1.847 seconds

// :: INFO conf.HiveConf: Using the default value passed in for log id: ab78aeaa-274a-4ed6-bff0-ffa488a2c8df

// :: INFO session.SessionState: Resetting thread name to main

// :: INFO conf.HiveConf: Using the default value passed in for log id: ab78aeaa-274a-4ed6-bff0-ffa488a2c8df

// :: INFO session.SessionState: Deleted directory: /tmp/hive/yinzhengjie/ab78aeaa-274a-4ed6-bff0-ffa488a2c8df on fs with scheme hdfs

// :: INFO session.SessionState: Deleted directory: /home/yinzhengjie/yinzhengjie/ab78aeaa-274a-4ed6-bff0-ffa488a2c8df on fs with scheme file

// :: INFO metastore.HiveMetaStore: : Cleaning up thread local RawStore...

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=Cleaning up thread local RawStore...

// :: INFO metastore.HiveMetaStore: : Done cleaning up thread local RawStore

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=Done cleaning up thread local RawStore

// :: INFO hive.HiveImport: Hive import complete.

// :: INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

[yinzhengjie@s101 ~]$

Importing the data into Hive ([yinzhengjie@s101 ~]$ sqoop import --connect jdbc:mysql://s101/yinzhengjie --username root -P --table word --fields-terminated-by '\t' --hive-import --create-hive-table --hive-database yinzhengjie --hive-table wc -m 1)

0: jdbc:hive2://s101:10000> show tables;
+---------------+--+
| tab_name      |
+---------------+--+
| pv            |
| user_orc      |
| user_parquet  |
| user_rc       |
| user_seq      |
| user_text     |
| users         |
| wc            |
+---------------+--+
rows selected (0.19 seconds)

0: jdbc:hive2://s101:10000> select * from wc;
+--------+---------------------+--+
| wc.id  | wc.string           |
+--------+---------------------+--+
|        | hello world         |
|        | yinzhengjie hadoop  |
|        | yinzhengjie hive    |
|        | yinzhengjie hbase   |
+--------+---------------------+--+
rows selected (2.717 seconds)

0: jdbc:hive2://s101:10000>

After the import (0: jdbc:hive2://s101:10000> select * from wc;)

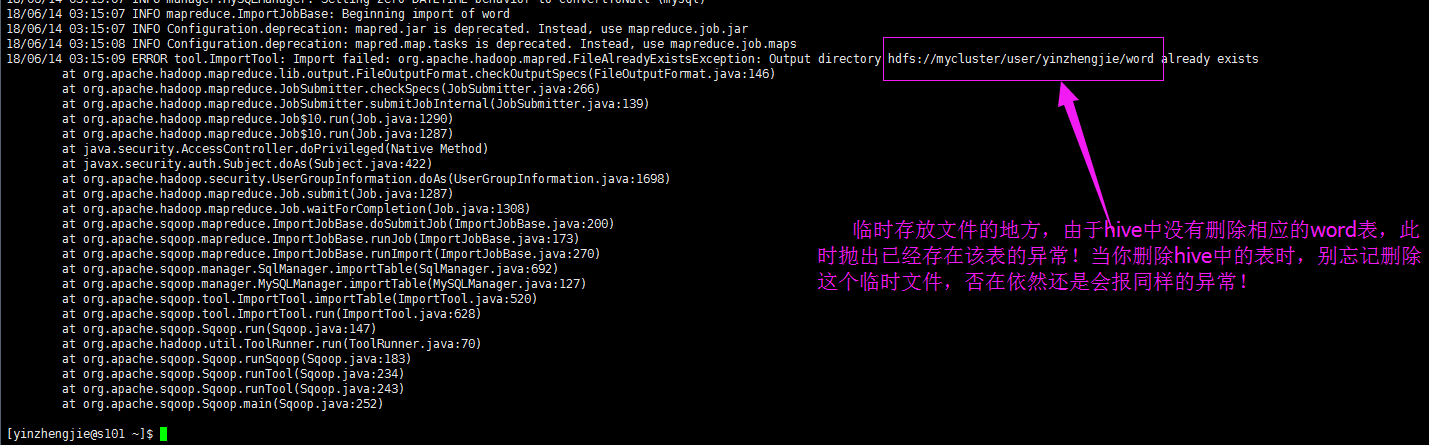

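Note: as you have probably noticed, during a Hive import the data is first staged temporarily on HDFS; once the MapReduce job finishes, it is loaded into the Hive table, and the temporary files on HDFS are then removed automatically. If you later import the same table into the same Hive database again, the job fails with a "table already exists" exception (see the figures below). To fix this, drop the table in Hive and also delete the temporary files on HDFS (the staging directory, hdfs://mycluster/user/yinzhengjie/word in the log above); otherwise rerunning the command still throws an exception.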

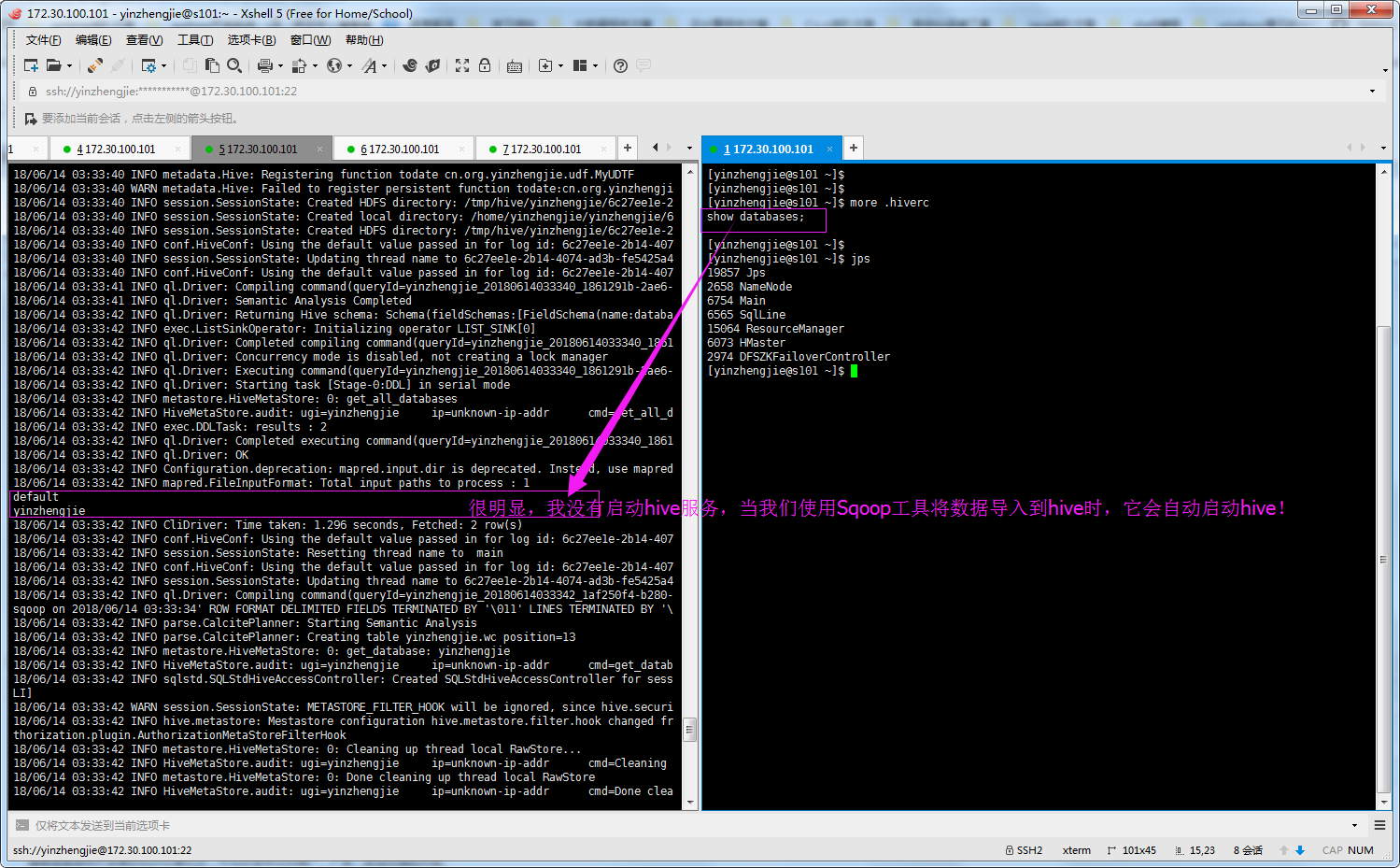

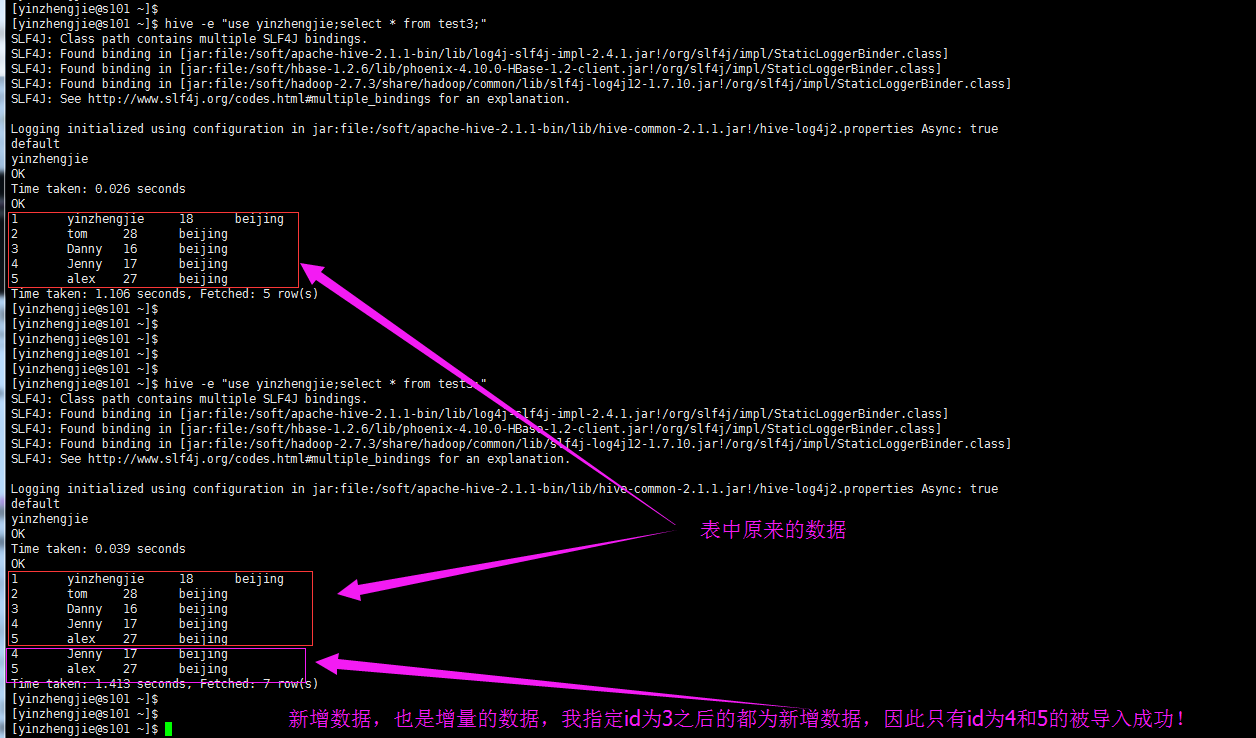
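The cleanup described in the note can be sketched as a small shell helper. This is only a sketch under stated assumptions: the function names `run` and `cleanup_before_reimport` are made up for illustration, the staging path is the one that appears in the log above, and the script is a dry run by default (it prints the commands rather than executing them, and the printed form does not preserve quoting).

```shell
# Dry run by default: prints the cleanup commands instead of running them.
# Set DRY_RUN=0 on a host that has hive and hdfs on PATH to execute them.
DRY_RUN="${DRY_RUN:-1}"

# run <command...>: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# cleanup_before_reimport <hive_db> <hive_table> <hdfs_staging_dir>
# (hypothetical helper, not part of Sqoop)
cleanup_before_reimport() {
  db="$1"; table="$2"; staging="$3"
  # Drop the Hive table created by the previous import.
  run hive -e "DROP TABLE IF EXISTS ${db}.${table};"
  # Remove the staging directory that an earlier run may have left on HDFS.
  run hdfs dfs -rm -r -f "$staging"
}

cleanup_before_reimport yinzhengjie wc /user/yinzhengjie/word
```

Once both commands have run for real, the sqoop import command shown earlier can be issued again.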

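5>.Importing MySQL data into Hive with Sqoop does not require the Hive service to be started; this can be verified as follows (we need to write a ".hiverc" configuration file)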
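The contents of the ".hiverc" file are not shown in this article, so the following is only a guess at a minimal version. A `.hiverc` file holds statements that the Hive CLI runs at startup; the `show databases` statement is chosen here because the same statement appears in the Sqoop log in step 7. The `HIVERC` variable and the scratch-file default are assumptions made so that the sketch never overwrites an existing `~/.hiverc`.

```shell
# Hypothetical target: a scratch file by default. Set HIVERC="$HOME/.hiverc"
# to write the real file once you are sure nothing important is in it.
HIVERC="${HIVERC:-${TMPDIR:-/tmp}/hiverc.example}"

# Statements in .hiverc are executed by the Hive CLI when it starts.
cat > "$HIVERC" <<'EOF'
show databases;
EOF

# Show what was written.
cat "$HIVERC"
```

If the database list then shows up in the output of a Sqoop Hive import while no Hive service is running, that would suggest Sqoop is driving the Hive CLI itself.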

6>.Other commonly used parameters

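--create-hive-table //create the Hive table during the import; if the target table already exists, the job fails

--external-table-dir <hdfs path> //HDFS path of the external table

--hive-database <database-name> //Hive database to use

--hive-import //import the table into Hive

--hive-partition-key <partition-key> //name of the partition key

--hive-partition-value <partition-value> //value of the partition key for this import

--hive-table <table-name> //Hive table to use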

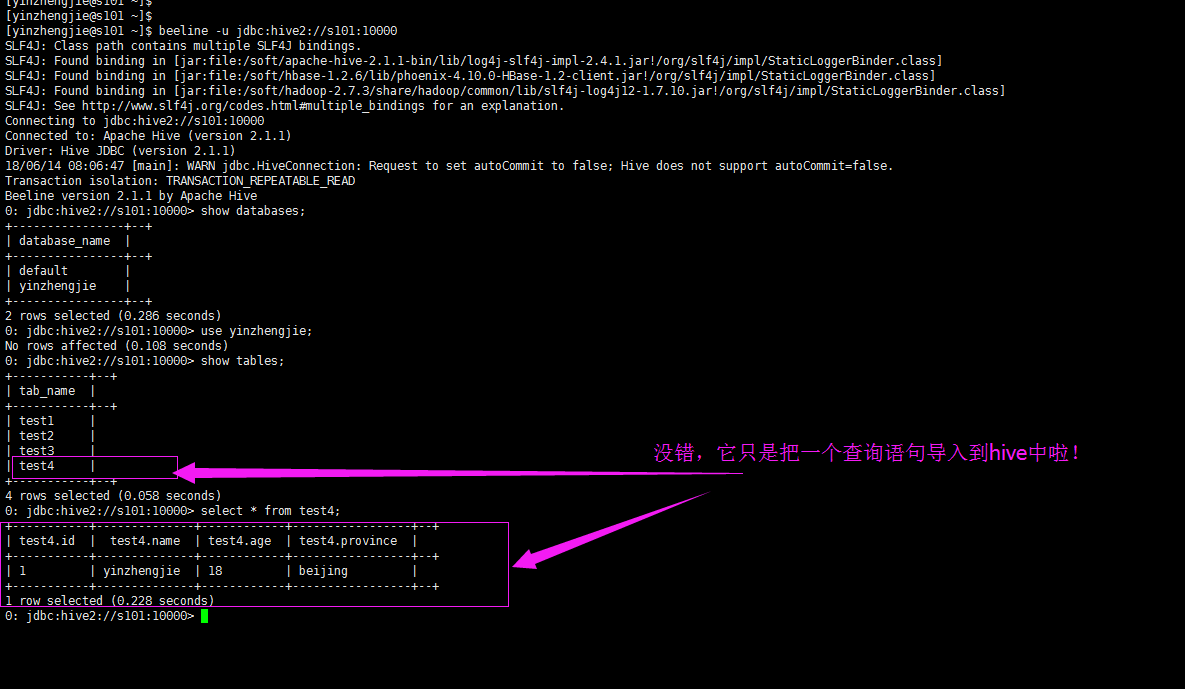
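To see how the parameters above fit together, here is a small sketch that assembles a `sqoop import` command line and prints it rather than running it. The helper name `build_hive_import` is hypothetical; the host `s101`, user `root`, and the option values mirror the commands used elsewhere in this article. Quoting is not preserved in the printed form, so the tab delimiter shows up as a bare `\t`.

```shell
# build_hive_import <mysql_db> <mysql_table> <hive_db> <hive_table> [part_key part_value]
# Hypothetical helper: prints a sqoop command line, it does not execute it.
build_hive_import() {
  src_db="$1"; src_table="$2"; hive_db="$3"; hive_table="$4"
  part_key="${5:-}"; part_value="${6:-}"

  # Base options: source table, field delimiter, and the Hive target.
  set -- sqoop import \
    --connect "jdbc:mysql://s101/${src_db}" \
    --username root -P \
    --table "$src_table" \
    --fields-terminated-by '\t' \
    --hive-import --create-hive-table \
    --hive-database "$hive_db" \
    --hive-table "$hive_table"

  # The partition key and value only make sense as a pair.
  if [ -n "$part_key" ] && [ -n "$part_value" ]; then
    set -- "$@" --hive-partition-key "$part_key" --hive-partition-value "$part_value"
  fi

  # Single mapper, as in the examples in this article.
  set -- "$@" -m 1
  printf '%s\n' "$*"
}

build_hive_import yinzhengjie word yinzhengjie test2 province beijing
```

Leaving out the last two arguments yields a plain, unpartitioned import command.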

7>.Creating only the Hive table with Sqoop

[yinzhengjie@s101 ~]$ sqoop create-hive-table --connect jdbc:mysql://s101/yinzhengjie --username root -P --table word --fields-terminated-by '\t' --hive-database yinzhengjie --hive-table test1 --hive-partition-key province --hive-partition-value beijing

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

Enter password:

// :: INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO hive.HiveImport: Loading uploaded data into Hive

// :: INFO conf.HiveConf: Found configuration file file:/soft/hive/conf/hive-site.xml Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

// :: INFO SessionState:

Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

// :: INFO metastore.HiveMetaStore: : Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

// :: INFO metastore.ObjectStore: ObjectStore, initialize called

// :: INFO DataNucleus.Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

// :: INFO DataNucleus.Persistence: Property datanucleus.cache.level2 unknown - will be ignored

// :: INFO metastore.ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

// :: INFO metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is MYSQL

// :: INFO metastore.ObjectStore: Initialized ObjectStore

// :: INFO metastore.HiveMetaStore: Added admin role in metastore

// :: INFO metastore.HiveMetaStore: Added public role in metastore

// :: INFO metastore.HiveMetaStore: No user is added in admin role, since config is empty

// :: INFO metastore.HiveMetaStore: : get_all_functions

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_all_functions

// :: INFO metadata.Hive: Registering function parsejson cn.org.yinzhengjie.udf.ParseJson

// :: WARN metadata.Hive: Failed to register persistent function parsejson:cn.org.yinzhengjie.udf.ParseJson. Ignore and continue.

// :: INFO metadata.Hive: Registering function parsejson cn.org.yinzhengjie.udf.MyUDTF

// :: WARN metadata.Hive: Failed to register persistent function parsejson:cn.org.yinzhengjie.udf.MyUDTF. Ignore and continue.

// :: INFO metadata.Hive: Registering function todate cn.org.yinzhengjie.udf.MyUDTF

// :: WARN metadata.Hive: Failed to register persistent function todate:cn.org.yinzhengjie.udf.MyUDTF. Ignore and continue.

// :: INFO session.SessionState: Created HDFS directory: /tmp/hive/yinzhengjie/96f23e30-9bca---beb3260c29c0

// :: INFO session.SessionState: Created local directory: /home/yinzhengjie/yinzhengjie/96f23e30-9bca---beb3260c29c0

// :: INFO session.SessionState: Created HDFS directory: /tmp/hive/yinzhengjie/96f23e30-9bca---beb3260c29c0/_tmp_space.db

// :: INFO conf.HiveConf: Using the default value passed in for log id: 96f23e30-9bca---beb3260c29c0

// :: INFO session.SessionState: Updating thread name to 96f23e30-9bca---beb3260c29c0 main

// :: INFO conf.HiveConf: Using the default value passed in for log id: 96f23e30-9bca---beb3260c29c0

// :: INFO ql.Driver: Compiling command(queryId=yinzhengjie_20180614063244_016a0a4f-e1c4--907f-6990016f3010): show databases

// :: INFO ql.Driver: Semantic Analysis Completed

// :: INFO ql.Driver: Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:database_name, type:string, comment:from deserializer)], properties:null)

// :: INFO exec.ListSinkOperator: Initializing operator LIST_SINK[]

// :: INFO ql.Driver: Completed compiling command(queryId=yinzhengjie_20180614063244_016a0a4f-e1c4--907f-6990016f3010); Time taken: 1.491 seconds

// :: INFO ql.Driver: Concurrency mode is disabled, not creating a lock manager

// :: INFO ql.Driver: Executing command(queryId=yinzhengjie_20180614063244_016a0a4f-e1c4--907f-6990016f3010): show databases

// :: INFO ql.Driver: Starting task [Stage-:DDL] in serial mode

// :: INFO metastore.HiveMetaStore: : get_all_databases

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_all_databases

// :: INFO exec.DDLTask: results :

// :: INFO ql.Driver: Completed executing command(queryId=yinzhengjie_20180614063244_016a0a4f-e1c4--907f-6990016f3010); Time taken: 0.036 seconds

// :: INFO ql.Driver: OK

// :: INFO Configuration.deprecation: mapred.input.dir is deprecated. Instead, use mapreduce.input.fileinputformat.inputdir

// :: INFO mapred.FileInputFormat: Total input paths to process :

default

yinzhengjie

// :: INFO CliDriver: Time taken: 1.534 seconds, Fetched: row(s)

// :: INFO conf.HiveConf: Using the default value passed in for log id: 96f23e30-9bca---beb3260c29c0

// :: INFO session.SessionState: Resetting thread name to main

// :: INFO conf.HiveConf: Using the default value passed in for log id: 96f23e30-9bca---beb3260c29c0

// :: INFO session.SessionState: Updating thread name to 96f23e30-9bca---beb3260c29c0 main

// :: INFO ql.Driver: Compiling command(queryId=yinzhengjie_20180614063246_b8444d13-d69e-40c4-b717-7917e2bce6af): CREATE TABLE IF NOT EXISTS `yinzhengjie`.`test1` ( `id` INT, `string` STRING) COMMENT 'Imported by sqoop on 2018/06/14 06:32:35' PARTITIONED BY (province STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\011' LINES TERMINATED BY '\012' STORED AS TEXTFILE

// :: INFO parse.CalcitePlanner: Starting Semantic Analysis

// :: INFO parse.CalcitePlanner: Creating table yinzhengjie.test1 position=

// :: INFO metastore.HiveMetaStore: : get_table : db=yinzhengjie tbl=test1

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_table : db=yinzhengjie tbl=test1

// :: INFO metastore.HiveMetaStore: : get_database: yinzhengjie

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=get_database: yinzhengjie

// :: INFO sqlstd.SQLStdHiveAccessController: Created SQLStdHiveAccessController for session context : HiveAuthzSessionContext [sessionString=96f23e30-9bca---beb3260c29c0, clientType=HIVECLI]

// :: WARN session.SessionState: METASTORE_FILTER_HOOK will be ignored, since hive.security.authorization.manager is set to instance of HiveAuthorizerFactory.

// :: INFO hive.metastore: Mestastore configuration hive.metastore.filter.hook changed from org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl to org.apache.hadoop.hive.ql.security.authorization.plugin.AuthorizationMetaStoreFilterHook

// :: INFO metastore.HiveMetaStore: : Cleaning up thread local RawStore...

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=Cleaning up thread local RawStore...

// :: INFO metastore.HiveMetaStore: : Done cleaning up thread local RawStore

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=Done cleaning up thread local RawStore

// :: INFO ql.Driver: Semantic Analysis Completed

// :: INFO ql.Driver: Returning Hive schema: Schema(fieldSchemas:null, properties:null)

// :: INFO ql.Driver: Completed compiling command(queryId=yinzhengjie_20180614063246_b8444d13-d69e-40c4-b717-7917e2bce6af); Time taken: 0.176 seconds

// :: INFO ql.Driver: Concurrency mode is disabled, not creating a lock manager

// :: INFO ql.Driver: Executing command(queryId=yinzhengjie_20180614063246_b8444d13-d69e-40c4-b717-7917e2bce6af): CREATE TABLE IF NOT EXISTS `yinzhengjie`.`test1` ( `id` INT, `string` STRING) COMMENT 'Imported by sqoop on 2018/06/14 06:32:35' PARTITIONED BY (province STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\011' LINES TERMINATED BY '\012' STORED AS TEXTFILE

// :: INFO ql.Driver: Starting task [Stage-:DDL] in serial mode

// :: INFO exec.DDLTask: creating table yinzhengjie.test1 on null

// :: INFO metastore.HiveMetaStore: : create_table: Table(tableName:test1, dbName:yinzhengjie, owner:yinzhengjie, createTime:, lastAccessTime:, retention:, sd:StorageDescriptor(cols:[FieldSchema(name:id, type:int, comment:null), FieldSchema(name:string, type:string, comment:null)], location:null, inputFormat:org.apache.hadoop.mapred.TextInputFormat, outputFormat:org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat, compressed:false, numBuckets:-, serdeInfo:SerDeInfo(name:null, serializationLib:org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, parameters:{serialization.format= , line.delim=

, field.delim= }), bucketCols:[], sortCols:[], parameters:{}, skewedInfo:SkewedInfo(skewedColNames:[], skewedColValues:[], skewedColValueLocationMaps:{}), storedAsSubDirectories:false), partitionKeys:[FieldSchema(name:province, type:string, comment:null)], parameters:{comment=Imported by sqoop on // ::}, viewOriginalText:null, viewExpandedText:null, tableType:MANAGED_TABLE, privileges:PrincipalPrivilegeSet(userPrivileges:{yinzhengjie=[PrivilegeGrantInfo(privilege:INSERT, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:SELECT, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:UPDATE, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:DELETE, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true)]}, groupPrivileges:null, rolePrivileges:null), temporary:false)

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=create_table: Table(tableName:test1, dbName:yinzhengjie, owner:yinzhengjie, createTime:, lastAccessTime:, retention:, sd:StorageDescriptor(cols:[FieldSchema(name:id, type:int, comment:null), FieldSchema(name:string, type:string, comment:null)], location:null, inputFormat:org.apache.hadoop.mapred.TextInputFormat, outputFormat:org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat, compressed:false, numBuckets:-, serdeInfo:SerDeInfo(name:null, serializationLib:org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, parameters:{serialization.format= , line.delim=

, field.delim= }), bucketCols:[], sortCols:[], parameters:{}, skewedInfo:SkewedInfo(skewedColNames:[], skewedColValues:[], skewedColValueLocationMaps:{}), storedAsSubDirectories:false), partitionKeys:[FieldSchema(name:province, type:string, comment:null)], parameters:{comment=Imported by sqoop on // ::}, viewOriginalText:null, viewExpandedText:null, tableType:MANAGED_TABLE, privileges:PrincipalPrivilegeSet(userPrivileges:{yinzhengjie=[PrivilegeGrantInfo(privilege:INSERT, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:SELECT, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:UPDATE, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true), PrivilegeGrantInfo(privilege:DELETE, createTime:-, grantor:yinzhengjie, grantorType:USER, grantOption:true)]}, groupPrivileges:null, rolePrivileges:null), temporary:false)

// :: INFO metastore.HiveMetaStore: : Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

// :: INFO metastore.ObjectStore: ObjectStore, initialize called

// :: INFO metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is MYSQL

// :: INFO metastore.ObjectStore: Initialized ObjectStore

// :: INFO common.FileUtils: Creating directory if it doesn't exist: hdfs://mycluster/user/hive/warehouse/yinzhengjie.db/test1

// :: INFO ql.Driver: Completed executing command(queryId=yinzhengjie_20180614063246_b8444d13-d69e-40c4-b717-7917e2bce6af); Time taken: 0.334 seconds

OK

// :: INFO ql.Driver: OK

Time taken: 0.511 seconds

// :: INFO CliDriver: Time taken: 0.511 seconds

// :: INFO conf.HiveConf: Using the default value passed in for log id: 96f23e30-9bca---beb3260c29c0

// :: INFO session.SessionState: Resetting thread name to main

// :: INFO conf.HiveConf: Using the default value passed in for log id: 96f23e30-9bca---beb3260c29c0

// :: INFO session.SessionState: Deleted directory: /tmp/hive/yinzhengjie/96f23e30-9bca---beb3260c29c0 on fs with scheme hdfs

// :: INFO session.SessionState: Deleted directory: /home/yinzhengjie/yinzhengjie/96f23e30-9bca---beb3260c29c0 on fs with scheme file

// :: INFO metastore.HiveMetaStore: : Cleaning up thread local RawStore...

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=Cleaning up thread local RawStore...

// :: INFO metastore.HiveMetaStore: : Done cleaning up thread local RawStore

// :: INFO HiveMetaStore.audit: ugi=yinzhengjie ip=unknown-ip-addr cmd=Done cleaning up thread local RawStore

// :: INFO hive.HiveImport: Hive import complete.

[yinzhengjie@s101 ~]$ echo $?

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ sqoop create-hive-table --connect jdbc:mysql://s101/yinzhengjie --username root -P --table word --fields-terminated-by '\t' --hive-database yinzhengjie --hive-table test1 --hive-partition-key province --hive-partition-value beijing

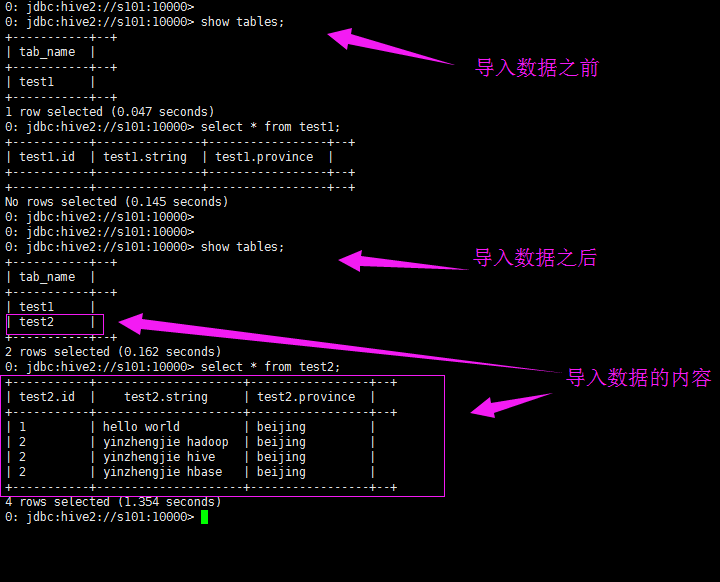
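One way to check the result of `create-hive-table` is to inspect the new table through HiveServer2. The sketch below only prints the `beeline` commands; the helper name `verify_cmds` is hypothetical, and the JDBC URL assumes the HiveServer2 endpoint `s101:10000` seen in the beeline prompt earlier. Assuming the tool only issues the CREATE TABLE statement seen in the log, the partition listing would be expected to be empty at this point.

```shell
# verify_cmds <hive_db> <hive_table>
# Hypothetical helper: prints beeline commands that inspect the table.
verify_cmds() {
  db="$1"; table="$2"
  url="jdbc:hive2://s101:10000/${db}"
  # Show the DDL, including the PARTITIONED BY clause.
  printf 'beeline -u "%s" -e "SHOW CREATE TABLE %s;"\n' "$url" "$table"
  # List the partitions that exist so far.
  printf 'beeline -u "%s" -e "SHOW PARTITIONS %s;"\n' "$url" "$table"
}

verify_cmds yinzhengjie test1
```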

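8>.Importing into a Hive partitioned table with Sqoop (the partitioned table is created automatically during the import; only static partitioning is supported, with a single partition, which saves you from creating the directory yourself)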

[yinzhengjie@s101 ~]$ sqoop import --connect jdbc:mysql://s101/yinzhengjie --username root -P --table word --fields-terminated-by '\t' --hive-import --create-hive-table --hive-database yinzhengjie --hive-table test2 --hive-partition-key province --hive-partition-value beijing -m 1

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

Enter password:

// :: INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

// :: INFO tool.CodeGenTool: Beginning code generation

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /soft/hadoop

Note: /tmp/sqoop-yinzhengjie/compile/2ff01e5a4aa9de071eea44aba493fc22/word.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

// :: INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-yinzhengjie/compile/2ff01e5a4aa9de071eea44aba493fc22/word.jar

// :: WARN manager.MySQLManager: It looks like you are importing from mysql.

// :: WARN manager.MySQLManager: This transfer can be faster! Use the --direct

// :: WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

// :: INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

// :: INFO mapreduce.ImportJobBase: Beginning import of word

// :: INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

// :: INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

// :: ERROR tool.ImportTool: Import failed: org.apache.hadoop.mapred.FileAlreadyExistsException: Output directory hdfs://mycluster/user/yinzhengjie/word already exists

at org.apache.hadoop.mapreduce.lib.output.FileOutputFormat.checkOutputSpecs(FileOutputFormat.java:)

at org.apache.hadoop.mapreduce.JobSubmitter.checkSpecs(JobSubmitter.java:)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:)

at org.apache.hadoop.mapreduce.Job$.run(Job.java:)

at org.apache.hadoop.mapreduce.Job$.run(Job.java:)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:)

at org.apache.sqoop.mapreduce.ImportJobBase.doSubmitJob(ImportJobBase.java:)

at org.apache.sqoop.mapreduce.ImportJobBase.runJob(ImportJobBase.java:)

at org.apache.sqoop.mapreduce.ImportJobBase.runImport(ImportJobBase.java:)

at org.apache.sqoop.manager.SqlManager.importTable(SqlManager.java:)

at org.apache.sqoop.manager.MySQLManager.importTable(MySQLManager.java:)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:)

at org.apache.sqoop.Sqoop.run(Sqoop.java:)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:)

at org.apache.sqoop.Sqoop.main(Sqoop.java:)

[yinzhengjie@s101 ~]$ hdfs dfs -rm -r /user/yinzhengjie/word

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

// :: INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = minutes, Emptier interval = minutes.

Deleted /user/yinzhengjie/word

[yinzhengjie@s101 ~]$
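
The import failed because an earlier run had left the staging directory `/user/yinzhengjie/word` behind, and it was removed by hand above. As an alternative, Sqoop's `import` tool accepts `--delete-target-dir`, which removes an existing target directory before the job starts. The sketch below only prints the resulting command (a dry run) so it can be inspected first; `build_import_cmd` is a hypothetical helper name, and the connection details are simply the ones used in this article.

```shell
# Dry-run sketch: print the import command with --delete-target-dir added.
# build_import_cmd is a hypothetical helper, not part of Sqoop.
build_import_cmd() {
    # $1 = source table, $2 = Hive table, $3 = partition value
    cmd="sqoop import --connect jdbc:mysql://s101/yinzhengjie --username root -P"
    cmd="$cmd --table $1 --delete-target-dir"
    cmd="$cmd --fields-terminated-by '\\t'"
    cmd="$cmd --hive-import --create-hive-table"
    cmd="$cmd --hive-database yinzhengjie --hive-table $2"
    cmd="$cmd --hive-partition-key province --hive-partition-value $3 -m 1"
    printf '%s\n' "$cmd"
}

build_import_cmd word test2 beijing
```

Use the flag with care: it deletes whatever is in the target directory, so it is only appropriate when that directory holds nothing but leftovers from a previous import.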

[yinzhengjie@s101 ~]$ sqoop import --connect jdbc:mysql://s101/yinzhengjie --username root -P --table word --fields-terminated-by '\t' --hive-import --create-hive-table --hive-database yinzhengjie --hive-table test2 --hive-partition-key province --hive-partition-value beijing -m 1

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /soft/sqoop-1.4..bin__hadoop-2.6./bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.

Enter password:

// :: INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

// :: INFO tool.CodeGenTool: Beginning code generation

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/phoenix-4.10.-HBase-1.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/hbase-1.2./lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /soft/hadoop

Note: /tmp/sqoop-yinzhengjie/compile/c8d9be59546846cfb07ab171c91ca0ac/word.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

// :: INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-yinzhengjie/compile/c8d9be59546846cfb07ab171c91ca0ac/word.jar

// :: WARN manager.MySQLManager: It looks like you are importing from mysql.

// :: WARN manager.MySQLManager: This transfer can be faster! Use the --direct

// :: WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

// :: INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

// :: INFO mapreduce.ImportJobBase: Beginning import of word

// :: INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

// :: INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

// :: INFO db.DBInputFormat: Using read commited transaction isolation

// :: INFO mapreduce.JobSubmitter: number of splits:

// :: INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1528967628934_0014

// :: INFO impl.YarnClientImpl: Submitted application application_1528967628934_0014

// :: INFO mapreduce.Job: The url to track the job: http://s101:8088/proxy/application_1528967628934_0014/

// :: INFO mapreduce.Job: Running job: job_1528967628934_0014

// :: INFO mapreduce.Job: Job job_1528967628934_0014 running in uber mode : false

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: map % reduce %

// :: INFO mapreduce.Job: Job job_1528967628934_0014 completed successfully

// :: INFO mapreduce.Job: Counters:

File System Counters

FILE: Number of bytes read=

FILE: Number of bytes written=

FILE: Number of read operations=

FILE: Number of large read operations=

FILE: Number of write operations=

HDFS: Number of bytes read=

HDFS: Number of bytes written=

HDFS: Number of read operations=

HDFS: Number of large read operations=

HDFS: Number of write operations=

Job Counters

Launched map tasks=

Other local map tasks=

Total time spent by all maps in occupied slots (ms)=

Total time spent by all reduces in occupied slots (ms)=

Total time spent by all map tasks (ms)=

Total vcore-milliseconds taken by all map tasks=

Total megabyte-milliseconds taken by all map tasks=

Map-Reduce Framework

Map input records=

Map output records=

Input split bytes=

Spilled Records=

Failed Shuffles=

Merged Map outputs=

GC time elapsed (ms)=

CPU time spent (ms)=

Physical memory (bytes) snapshot=

Virtual memory (bytes) snapshot=

Total committed heap usage (bytes)=

File Input Format Counters

Bytes Read=

File Output Format Counters

Bytes Written=

// :: INFO mapreduce.ImportJobBase: Transferred bytes in 38.8416 seconds (1.9052 bytes/sec)

// :: INFO mapreduce.ImportJobBase: Retrieved records.

// :: INFO mapreduce.ImportJobBase: Publishing Hive/Hcat import job data to Listeners for table word

// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `word` AS t LIMIT

// :: INFO hive.HiveImport: Loading uploaded data into Hive

// :: INFO conf.HiveConf: Found configuration file file:/soft/hive/conf/hive-site.xml

Logging initialized using configuration in jar:file:/soft/apache-hive-2.1.-bin/lib/hive-common-2.1..jar!/hive-log4j2.properties Async: true

// :: INFO SessionState: