Lucene Source Code Analysis (7): Analyzer

1. Using the Analyzer

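The Analyzer is used in the IndexWriter constructor. IndexWriterConfig carries it, and the constructor picks it up with `analyzer = config.getAnalyzer();`: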
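The IndexWriter constructor first binds the config to the writer (`conf.setIndexWriter(this)`), then takes the Analyzer from it (`analyzer = config.getAnalyzer()`). The sketch below is a toy model of that handoff, not Lucene code. `ToyAnalyzer`, `ToyWriterConfig` and `ToyIndexWriter` are hypothetical names. Throwing `IllegalStateException` on reuse is an assumption, chosen to model the Javadoc rule that a configuration instance cannot be passed to another writer.

```java
// Hypothetical toy model of how IndexWriter obtains its Analyzer; none of these are Lucene classes.
class ToyAnalyzer {
  final String name;

  ToyAnalyzer(String name) {
    this.name = name;
  }
}

class ToyWriterConfig {
  private final ToyAnalyzer analyzer;
  private ToyIndexWriter owner; // bound once, like conf.setIndexWriter(this)

  ToyWriterConfig(ToyAnalyzer analyzer) {
    this.analyzer = java.util.Objects.requireNonNull(analyzer);
  }

  ToyAnalyzer getAnalyzer() {
    return analyzer;
  }

  // Models "prevent reuse by other instances": a config may belong to a single writer only.
  // The exception type is an assumption made for this sketch.
  void setIndexWriter(ToyIndexWriter writer) {
    if (owner != null) {
      throw new IllegalStateException("config already bound to another writer");
    }
    owner = writer;
  }
}

class ToyIndexWriter {
  final ToyAnalyzer analyzer;

  ToyIndexWriter(ToyWriterConfig conf) {
    conf.setIndexWriter(this);          // bind the config to this writer first
    this.analyzer = conf.getAnalyzer(); // then read the analyzer, as in analyzer = config.getAnalyzer()
  }
}
```

With this design, the first writer built from a config gets its analyzer. A second `new ToyIndexWriter(conf)` on the same config fails before it can read anything.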

```java
/**
 * Constructs a new IndexWriter per the settings given in <code>conf</code>.
 * If you want to make "live" changes to this writer instance, use
 * {@link #getConfig()}.
 *
 * <p>
 * <b>NOTE:</b> after ths writer is created, the given configuration instance
 * cannot be passed to another writer.
 *
 * @param d
 *          the index directory. The index is either created or appended
 *          according <code>conf.getOpenMode()</code>.
 * @param conf
 *          the configuration settings according to which IndexWriter should
 *          be initialized.
 * @throws IOException
 *           if the directory cannot be read/written to, or if it does not
 *           exist and <code>conf.getOpenMode()</code> is
 *           <code>OpenMode.APPEND</code> or if there is any other low-level
 *           IO error
 */
public IndexWriter(Directory d, IndexWriterConfig conf) throws IOException {
  enableTestPoints = isEnableTestPoints();
  conf.setIndexWriter(this); // prevent reuse by other instances
  config = conf;
  infoStream = config.getInfoStream();
  softDeletesEnabled = config.getSoftDeletesField() != null;
  // obtain the write.lock. If the user configured a timeout,
  // we wrap with a sleeper and this might take some time.
  writeLock = d.obtainLock(WRITE_LOCK_NAME);

  boolean success = false;
  try {
    directoryOrig = d;
    directory = new LockValidatingDirectoryWrapper(d, writeLock);

    analyzer = config.getAnalyzer();
    mergeScheduler = config.getMergeScheduler();
    mergeScheduler.setInfoStream(infoStream);
    codec = config.getCodec();
    OpenMode mode = config.getOpenMode();
    final boolean indexExists;
    final boolean create;
    if (mode == OpenMode.CREATE) {
      indexExists = DirectoryReader.indexExists(directory);
      create = true;
    } else if (mode == OpenMode.APPEND) {
      indexExists = true;
      create = false;
    } else {
      // CREATE_OR_APPEND - create only if an index does not exist
      indexExists = DirectoryReader.indexExists(directory);
      create = !indexExists;
    }

    // If index is too old, reading the segments will throw
    // IndexFormatTooOldException.
    String[] files = directory.listAll();

    // Set up our initial SegmentInfos:
    IndexCommit commit = config.getIndexCommit();

    // Set up our initial SegmentInfos:
    StandardDirectoryReader reader;
    if (commit == null) {
      reader = null;
    } else {
      reader = commit.getReader();
    }

    if (create) {
      if (config.getIndexCommit() != null) {
        // We cannot both open from a commit point and create:
        if (mode == OpenMode.CREATE) {
          throw new IllegalArgumentException("cannot use IndexWriterConfig.setIndexCommit() with OpenMode.CREATE");
        } else {
          throw new IllegalArgumentException("cannot use IndexWriterConfig.setIndexCommit() when index has no commit");
        }
      }

      // Try to read first. This is to allow create
      // against an index that's currently open for
      // searching. In this case we write the next
      // segments_N file with no segments:
      final SegmentInfos sis = new SegmentInfos(Version.LATEST.major);
      if (indexExists) {
        final SegmentInfos previous = SegmentInfos.readLatestCommit(directory);
        sis.updateGenerationVersionAndCounter(previous);
      }
      segmentInfos = sis;
      rollbackSegments = segmentInfos.createBackupSegmentInfos();

      // Record that we have a change (zero out all
      // segments) pending:
      changed();
    } else if (reader != null) {
      // Init from an existing already opened NRT or non-NRT reader:
      if (reader.directory() != commit.getDirectory()) {
        throw new IllegalArgumentException("IndexCommit's reader must have the same directory as the IndexCommit");
      }
      if (reader.directory() != directoryOrig) {
        throw new IllegalArgumentException("IndexCommit's reader must have the same directory passed to IndexWriter");
      }
      if (reader.segmentInfos.getLastGeneration() == 0) {
        // TODO: maybe we could allow this? It's tricky...
        throw new IllegalArgumentException("index must already have an initial commit to open from reader");
      }

      // Must clone because we don't want the incoming NRT reader to "see" any changes this writer now makes:
      segmentInfos = reader.segmentInfos.clone();

      SegmentInfos lastCommit;
      try {
        lastCommit = SegmentInfos.readCommit(directoryOrig, segmentInfos.getSegmentsFileName());
      } catch (IOException ioe) {
        throw new IllegalArgumentException("the provided reader is stale: its prior commit file \"" + segmentInfos.getSegmentsFileName() + "\" is missing from index");
      }

      if (reader.writer != null) {
        // The old writer better be closed (we have the write lock now!):
        assert reader.writer.closed;

        // In case the old writer wrote further segments (which we are now dropping),
        // update SIS metadata so we remain write-once:
        segmentInfos.updateGenerationVersionAndCounter(reader.writer.segmentInfos);
        lastCommit.updateGenerationVersionAndCounter(reader.writer.segmentInfos);
      }

      rollbackSegments = lastCommit.createBackupSegmentInfos();
    } else {
      // Init from either the latest commit point, or an explicit prior commit point:
      String lastSegmentsFile = SegmentInfos.getLastCommitSegmentsFileName(files);
      if (lastSegmentsFile == null) {
        throw new IndexNotFoundException("no segments* file found in " + directory + ": files: " + Arrays.toString(files));
      }

      // Do not use SegmentInfos.read(Directory) since the spooky
      // retrying it does is not necessary here (we hold the write lock):
      segmentInfos = SegmentInfos.readCommit(directoryOrig, lastSegmentsFile);

      if (commit != null) {
        // Swap out all segments, but, keep metadata in
        // SegmentInfos, like version & generation, to
        // preserve write-once. This is important if
        // readers are open against the future commit
        // points.
        if (commit.getDirectory() != directoryOrig) {
          throw new IllegalArgumentException("IndexCommit's directory doesn't match my directory, expected=" + directoryOrig + ", got=" + commit.getDirectory());
        }

        SegmentInfos oldInfos = SegmentInfos.readCommit(directoryOrig, commit.getSegmentsFileName());
        segmentInfos.replace(oldInfos);
        changed();

        if (infoStream.isEnabled("IW")) {
          infoStream.message("IW", "init: loaded commit \"" + commit.getSegmentsFileName() + "\"");
        }
      }

      rollbackSegments = segmentInfos.createBackupSegmentInfos();
    }

    commitUserData = new HashMap<>(segmentInfos.getUserData()).entrySet();

    pendingNumDocs.set(segmentInfos.totalMaxDoc());

    // start with previous field numbers, but new FieldInfos
    // NOTE: this is correct even for an NRT reader because we'll pull FieldInfos even for the un-committed segments:
    globalFieldNumberMap = getFieldNumberMap();

    validateIndexSort();

    config.getFlushPolicy().init(config);
    bufferedUpdatesStream = new BufferedUpdatesStream(infoStream);
    docWriter = new DocumentsWriter(flushNotifications, segmentInfos.getIndexCreatedVersionMajor(), pendingNumDocs,
        enableTestPoints, this::newSegmentName,
        config, directoryOrig, directory, globalFieldNumberMap);
    readerPool = new ReaderPool(directory, directoryOrig, segmentInfos, globalFieldNumberMap,
        bufferedUpdatesStream::getCompletedDelGen, infoStream, conf.getSoftDeletesField(), reader);
    if (config.getReaderPooling()) {
      readerPool.enableReaderPooling();
    }
    // Default deleter (for backwards compatibility) is
    // KeepOnlyLastCommitDeleter:

    // Sync'd is silly here, but IFD asserts we sync'd on the IW instance:
    synchronized (this) {
      deleter = new IndexFileDeleter(files, directoryOrig, directory,
                                     config.getIndexDeletionPolicy(),
                                     segmentInfos, infoStream, this,
                                     indexExists, reader != null);

      // We incRef all files when we return an NRT reader from IW, so all files must exist even in the NRT case:
      assert create || filesExist(segmentInfos);
    }

    if (deleter.startingCommitDeleted) {
      // Deletion policy deleted the "head" commit point.
      // We have to mark ourself as changed so that if we
      // are closed w/o any further changes we write a new
      // segments_N file.
      changed();
    }

    if (reader != null) {
      // We always assume we are carrying over incoming changes when opening from reader:
      segmentInfos.changed();
      changed();
    }

    if (infoStream.isEnabled("IW")) {
      infoStream.message("IW", "init: create=" + create + " reader=" + reader);
      messageState();
    }

    success = true;
  } finally {
    if (!success) {
      if (infoStream.isEnabled("IW")) {
        infoStream.message("IW", "init: hit exception on init; releasing write lock");
      }
      IOUtils.closeWhileHandlingException(writeLock);
      writeLock = null;
    }
  }
}
```
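After acquiring the write lock, the constructor decides how to open the index. Here is a minimal sketch of that `OpenMode` branch on its own. It assumes a boolean `indexOnDisk` stands in for `DirectoryReader.indexExists(directory)`. `OpenModeDemo` and `Decision` are hypothetical names; only the three mode names come from Lucene. Note that `APPEND` simply assumes the index exists. If it does not, the constructor fails later with `IndexNotFoundException` when no `segments*` file is found.

```java
// Hypothetical sketch of the OpenMode branch in the IndexWriter constructor.
// OpenModeDemo and Decision are illustrative names; only CREATE/APPEND/CREATE_OR_APPEND come from Lucene.
class OpenModeDemo {
  enum OpenMode { CREATE, APPEND, CREATE_OR_APPEND }

  // Outcome of the branch: is an index assumed to exist, and should a new one be created?
  static final class Decision {
    final boolean indexExists;
    final boolean create;

    Decision(boolean indexExists, boolean create) {
      this.indexExists = indexExists;
      this.create = create;
    }
  }

  // indexOnDisk stands in for DirectoryReader.indexExists(directory).
  static Decision decide(OpenMode mode, boolean indexOnDisk) {
    switch (mode) {
      case CREATE:
        // Always create, but remember whether an old index is there.
        return new Decision(indexOnDisk, true);
      case APPEND:
        // Assume an index exists; a missing index only fails later.
        return new Decision(true, false);
      default:
        // CREATE_OR_APPEND: create only if no index exists yet.
        return new Decision(indexOnDisk, !indexOnDisk);
    }
  }
}
```

With `CREATE`, `indexExists` is still computed because the constructor reads the previous commit and calls `sis.updateGenerationVersionAndCounter(previous)`. The new `segments_N` file then continues the old generation instead of colliding with an index that may still be open for searching.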

2. Defining the Analyzer

```java
/**
 * An Analyzer builds TokenStreams, which analyze text. It thus represents a
 * policy for extracting index terms from text.
 * <p>
 * In order to define what analysis is done, subclasses must define their
 * {@link TokenStreamComponents TokenStreamComponents} in {@link #createComponents(String)}.
 * The components are then reused in each call to {@link #tokenStream(String, Reader)}.
 * <p>
 * Simple example:
 * <pre class="prettyprint">
 * Analyzer analyzer = new Analyzer() {
 *   {@literal @Override}
 *   protected TokenStreamComponents createComponents(String fieldName) {
 *     // No Reader here: the Analyzer hands the input to the Tokenizer later via setReader()
 *     Tokenizer source = new FooTokenizer();
 *     TokenStream filter = new FooFilter(source);
 *     filter = new BarFilter(filter);
 *     return new TokenStreamComponents(source, filter);
 *   }
 *   {@literal @Override}
 *   protected TokenStream normalize(TokenStream in) {
 *     // Assuming FooFilter is about normalization and BarFilter is about
 *     // stemming, only FooFilter should be applied
 *     return new FooFilter(in);
 *   }
 * };
 * </pre>
 * For more examples, see the {@link org.apache.lucene.analysis Analysis package documentation}.
 * <p>
 * For some concrete implementations bundled with Lucene, look in the analysis modules:
 * <ul>
 *   <li><a href="{@docRoot}/../analyzers-common/overview-summary.html">Common</a>:
 *       Analyzers for indexing content in different languages and domains.
 *   <li><a href="{@docRoot}/../analyzers-icu/overview-summary.html">ICU</a>:
 *       Exposes functionality from ICU to Apache Lucene.
 *   <li><a href="{@docRoot}/../analyzers-kuromoji/overview-summary.html">Kuromoji</a>:
 *       Morphological analyzer for Japanese text.
 *   <li><a href="{@docRoot}/../analyzers-morfologik/overview-summary.html">Morfologik</a>:
 *       Dictionary-driven lemmatization for the Polish language.
 *   <li><a href="{@docRoot}/../analyzers-phonetic/overview-summary.html">Phonetic</a>:
 *       Analysis for indexing phonetic signatures (for sounds-alike search).
 *   <li><a href="{@docRoot}/../analyzers-smartcn/overview-summary.html">Smart Chinese</a>:
 *       Analyzer for Simplified Chinese, which indexes words.
 *   <li><a href="{@docRoot}/../analyzers-stempel/overview-summary.html">Stempel</a>:
 *       Algorithmic Stemmer for the Polish Language.
 * </ul>
 */
```
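The following toy model in plain Java illustrates the Javadoc's contract: `createComponents` builds the tokenizer/filter chain, the chain is reused across calls, and `normalize` applies only the normalization part. It is an illustrative sketch, not Lucene's API. `MiniAnalyzer`, `Components` and `LowerThenStripAnalyzer` are hypothetical names. Tokens are a plain `List<String>` rather than a `TokenStream` with attributes. Caching components per field name is a simplification of Lucene's `ReuseStrategy`. Lowercasing plays the role of the Javadoc's `FooFilter` (normalization), and the crude plural stripping plays `BarFilter` (stemming).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.function.Function;
import java.util.function.UnaryOperator;

// Hypothetical toy model of the Analyzer contract; not Lucene's API.
abstract class MiniAnalyzer {
  // Analogue of TokenStreamComponents: a source "tokenizer" plus the end of the filter chain.
  static final class Components {
    final Function<String, List<String>> source;
    final UnaryOperator<List<String>> sink;

    Components(Function<String, List<String>> source, UnaryOperator<List<String>> sink) {
      this.source = source;
      this.sink = sink;
    }
  }

  // Components cached per field name (a simplification of Lucene's ReuseStrategy).
  private final Map<String, Components> reused = new HashMap<>();
  int createCount = 0; // counts createComponents() calls so that reuse is observable

  protected abstract Components createComponents(String fieldName);

  // Normalization-only chain; identity unless a subclass overrides it.
  protected List<String> normalize(List<String> tokens) {
    return tokens;
  }

  // Analogue of tokenStream(fieldName, text): build the components once per field, then reuse them.
  final List<String> tokenStream(String fieldName, String text) {
    Components c = reused.computeIfAbsent(fieldName, f -> {
      createCount++;
      return createComponents(f);
    });
    return c.sink.apply(c.source.apply(text));
  }

  // Analogue of normalizing a single query term: only normalize() runs, no stemming.
  final String normalizeTerm(String term) {
    return normalize(Arrays.asList(term)).get(0);
  }
}

class LowerThenStripAnalyzer extends MiniAnalyzer {
  // FooFilter analogue: lower-cases every token (normalization).
  static List<String> lowerCase(List<String> in) {
    List<String> out = new ArrayList<>();
    for (String t : in) out.add(t.toLowerCase(Locale.ROOT));
    return out;
  }

  // BarFilter analogue: a crude "stemmer" that drops one trailing 's' (illustrative only).
  static List<String> stripPlural(List<String> in) {
    List<String> out = new ArrayList<>();
    for (String t : in) out.add(t.length() > 1 && t.endsWith("s") ? t.substring(0, t.length() - 1) : t);
    return out;
  }

  @Override
  protected Components createComponents(String fieldName) {
    // Tokenizer analogue: split the input on whitespace.
    Function<String, List<String>> source = text -> new ArrayList<>(Arrays.asList(text.trim().split("\\s+")));
    return new Components(source, tokens -> stripPlural(lowerCase(tokens)));
  }

  @Override
  protected List<String> normalize(List<String> tokens) {
    // Only the normalization filter applies, as in the Javadoc's normalize() example.
    return lowerCase(tokens);
  }
}
```

For example, `tokenStream("title", "Fast Cars")` yields `[fast, car]`. A second call on `title` reuses the cached components, so `createCount` stays at 1. `normalizeTerm("Cars")` returns `cars` because stemming is skipped.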

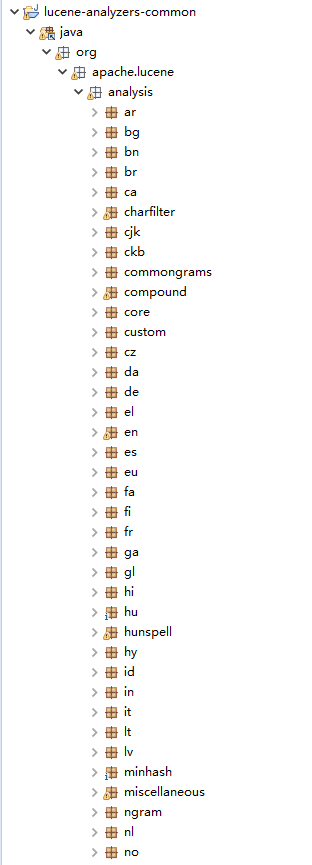

As the module list above shows, Lucene provides different Analyzer implementations for different languages and domains.

Of these, the Common module (analyzers-common) gathers the general-purpose Analyzer classes, as shown in the figure below:
