【colab pytorch】Using TensorBoard for visualization

```python
import datetime

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import Dataset, DataLoader
from torchvision import transforms, utils, datasets
from tensorflow import summary
```

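```
%load_ext tensorboard
```

Depending on your environment (older TensorBoard releases used a different module name), you may need to replace it with:

```
%load_ext tensorboard.notebook
```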

```python
class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        self.conv1 = nn.Conv2d(1, 20, 5, 1)   # 1x28x28 -> 20x24x24
        self.conv2 = nn.Conv2d(20, 50, 5, 1)  # 20x12x12 -> 50x8x8
        self.fc1 = nn.Linear(4*4*50, 500)
        self.fc2 = nn.Linear(500, 10)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.max_pool2d(x, 2, 2)
        x = F.relu(self.conv2(x))
        x = F.max_pool2d(x, 2, 2)
        x = x.view(-1, 4*4*50)  # flatten 50 feature maps of size 4x4
        x = F.relu(self.fc1(x))
        x = self.fc2(x)
        return F.log_softmax(x, dim=1)
```
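The `4*4*50` in `fc1` comes from tracing a 28×28 MNIST image through the two 5×5 convolutions and the two 2×2 max-pools. The pure-Python sketch below applies the usual output-size formula floor((size + 2·padding − kernel) / stride) + 1, assuming no padding and dilation 1 as in the layers above; the helper names `conv2d_out` and `network_flat_features` are hypothetical and not part of PyTorch.

```python
def conv2d_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output side length of a square Conv2d / MaxPool2d window (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def network_flat_features(input_size: int = 28) -> int:
    """Trace the spatial size through conv1 -> pool -> conv2 -> pool and
    return the flattened feature count that fc1 must accept."""
    s = conv2d_out(input_size, kernel=5, stride=1)  # conv1: 28 -> 24
    s = conv2d_out(s, kernel=2, stride=2)           # max_pool2d(2, 2): 24 -> 12
    s = conv2d_out(s, kernel=5, stride=1)           # conv2: 12 -> 8
    s = conv2d_out(s, kernel=2, stride=2)           # max_pool2d(2, 2): 8 -> 4
    return s * s * 50                               # conv2 has 50 output channels


print(network_flat_features())  # 800, i.e. 4*4*50
```

If the input resolution changed, the same trace would tell you the new `in_features` for `fc1` instead of hard-coding it.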

```python
class Config:
    def __init__(self, **kwargs):
        # store every keyword argument as an attribute
        for key, value in kwargs.items():
            setattr(self, key, value)


model_config = Config(
    cuda=torch.cuda.is_available(),
    device=torch.device("cuda" if torch.cuda.is_available() else "cpu"),
    seed=2,
    lr=0.01,
    epochs=4,
    save_model=False,
    batch_size=32,
    log_interval=100,
)
```

```python
class Trainer:
    def __init__(self, config):
        self.cuda = config.cuda
        self.device = config.device
        self.seed = config.seed
        self.lr = config.lr
        self.epochs = config.epochs
        self.save_model = config.save_model
        self.batch_size = config.batch_size
        self.log_interval = config.log_interval
        self.globaliter = 0  # global step shared by the train and test summaries
        # self.tb = TensorBoardColab()
        torch.manual_seed(self.seed)

        kwargs = {'num_workers': 1, 'pin_memory': True} if self.cuda else {}
        transform = transforms.Compose([
            transforms.ToTensor(),
            transforms.Normalize((0.1307,), (0.3081,)),
        ])
        self.train_loader = DataLoader(
            datasets.MNIST('../data', train=True, download=True, transform=transform),
            batch_size=self.batch_size, shuffle=True, **kwargs)
        self.test_loader = DataLoader(
            datasets.MNIST('../data', train=False, transform=transform),
            batch_size=self.batch_size, shuffle=False, **kwargs)  # order does not matter for evaluation

        self.model = Network().to(self.device)
        self.optimizer = optim.Adam(self.model.parameters(), lr=self.lr)

    def train(self, epoch):
        self.model.train()
        for batch_idx, (data, target) in enumerate(self.train_loader):
            self.globaliter += 1
            data, target = data.to(self.device), target.to(self.device)
            self.optimizer.zero_grad()
            predictions = self.model(data)
            loss = F.nll_loss(predictions, target)
            loss.backward()
            self.optimizer.step()

            if batch_idx % self.log_interval == 0:
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, batch_idx * len(data), len(self.train_loader.dataset),
                    100. * batch_idx / len(self.train_loader), loss.item()))
                # train_summary_writer is created in a later cell, before main() runs
                with train_summary_writer.as_default():
                    summary.scalar('loss', loss.item(), step=self.globaliter)

    def test(self, epoch):
        self.model.eval()
        test_loss = 0
        correct = 0
        with torch.no_grad():
            for data, target in self.test_loader:
                data, target = data.to(self.device), target.to(self.device)
                predictions = self.model(data)
                # sum over the batch; divided by the dataset size after the loop
                test_loss += F.nll_loss(predictions, target, reduction='sum').item()
                prediction = predictions.argmax(dim=1, keepdim=True)
                correct += prediction.eq(target.view_as(prediction)).sum().item()

        test_loss /= len(self.test_loader.dataset)
        accuracy = 100. * correct / len(self.test_loader.dataset)
        print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
            test_loss, correct, len(self.test_loader.dataset), accuracy))

        # write test loss and accuracy to the "test" run
        with test_summary_writer.as_default():
            summary.scalar('loss', test_loss, step=self.globaliter)
            summary.scalar('accuracy', accuracy, step=self.globaliter)


def main():
    trainer = Trainer(model_config)
    for epoch in range(1, trainer.epochs + 1):
        trainer.train(epoch)
        trainer.test(epoch)

    if trainer.save_model:
        torch.save(trainer.model.state_dict(), "mnist_cnn.pt")
```
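In `test()`, the loss is accumulated with `reduction='sum'` and divided by the dataset size only once at the end. This matters because the last test batch is smaller: 10000 images with `batch_size=32` give 312 full batches plus one batch of 16, so simply averaging the per-batch mean losses would over-weight that final batch. The pure-Python sketch below compares the two approaches; the helper names and toy numbers are hypothetical and are not results from the run.

```python
def summarize_eval(batches, dataset_size):
    """batches: iterable of (per_sample_losses, num_correct), one entry per batch.
    Returns (average loss per sample, accuracy in percent), as test() computes them."""
    total_loss = 0.0
    correct = 0
    for losses, n_correct in batches:
        total_loss += sum(losses)  # analogous to F.nll_loss(..., reduction='sum')
        correct += n_correct
    return total_loss / dataset_size, 100.0 * correct / dataset_size


def mean_of_batch_means(batches):
    """The tempting but biased alternative: average the per-batch means."""
    means = [sum(losses) / len(losses) for losses, _ in batches]
    return sum(means) / len(means)


# Toy data: one batch of 3 samples followed by a smaller final batch of 1 sample.
toy = [([1.0, 1.0, 1.0], 3), ([5.0], 0)]
print(summarize_eval(toy, dataset_size=4))  # (2.0, 75.0)
print(mean_of_batch_means(toy))             # 3.0
```

The summed version gives the true per-sample mean (8 / 4 = 2.0), while the mean of batch means lets the single-sample batch count as much as the three-sample one.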

```python
# Timestamped log directories: one TensorBoard run for training, one for testing
current_time = str(datetime.datetime.now().timestamp())
train_log_dir = 'logs/tensorboard/train/' + current_time
test_log_dir = 'logs/tensorboard/test/' + current_time
train_summary_writer = summary.create_file_writer(train_log_dir)
test_summary_writer = summary.create_file_writer(test_log_dir)
```
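Because both writers share one timestamp and live under `logs/tensorboard`, pointing `--logdir` at that parent directory should make TensorBoard list them as two runs (`train/<timestamp>` and `test/<timestamp>`); both runs write a scalar tagged `loss`, so their curves can be compared on the same chart. A minimal sketch of that path layout, using a hypothetical `make_log_dirs` helper and an injected fixed UTC time so the result is reproducible:

```python
import datetime
import posixpath


def make_log_dirs(root="logs/tensorboard", now=None):
    """Return (train_dir, test_dir) sharing one timestamp suffix, mirroring
    the 'logs/tensorboard/{train,test}/<timestamp>' layout used above."""
    if now is None:
        now = datetime.datetime.now()
    stamp = str(now.timestamp())
    return posixpath.join(root, "train", stamp), posixpath.join(root, "test", stamp)


fixed = datetime.datetime(2020, 1, 1, tzinfo=datetime.timezone.utc)
print(make_log_dirs(now=fixed))
# ('logs/tensorboard/train/1577836800.0', 'logs/tensorboard/test/1577836800.0')
```

Using a fresh timestamp per notebook session keeps runs from different sessions apart instead of appending events to the same directory.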

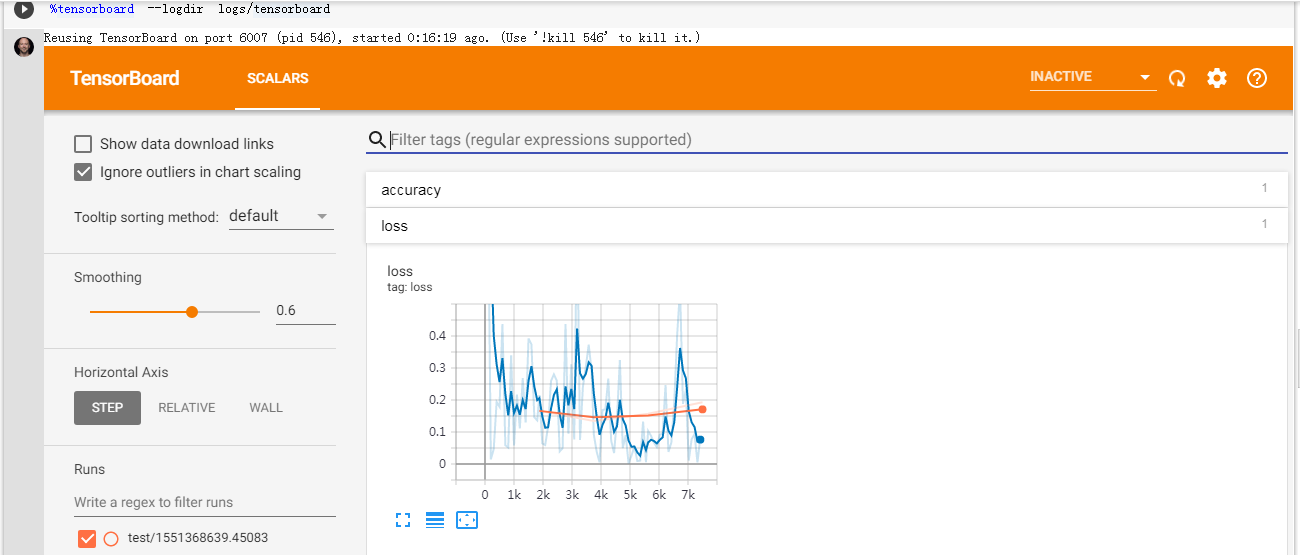

```
%tensorboard --logdir logs/tensorboard
```

```python
main()
```

```
Train Epoch: 1 [0/60000 (0%)] Loss: 2.320306
Train Epoch: 1 [3200/60000 (5%)] Loss: 0.881239
Train Epoch: 1 [6400/60000 (11%)] Loss: 0.014427
Train Epoch: 1 [9600/60000 (16%)] Loss: 0.046511
Train Epoch: 1 [12800/60000 (21%)] Loss: 0.194090
Train Epoch: 1 [16000/60000 (27%)] Loss: 0.178779
Train Epoch: 1 [19200/60000 (32%)] Loss: 0.437568
Train Epoch: 1 [22400/60000 (37%)] Loss: 0.058614
Train Epoch: 1 [25600/60000 (43%)] Loss: 0.051354
Train Epoch: 1 [28800/60000 (48%)] Loss: 0.339627
Train Epoch: 1 [32000/60000 (53%)] Loss: 0.057814
Train Epoch: 1 [35200/60000 (59%)] Loss: 0.216959
Train Epoch: 1 [38400/60000 (64%)] Loss: 0.111091
Train Epoch: 1 [41600/60000 (69%)] Loss: 0.268371
Train Epoch: 1 [44800/60000 (75%)] Loss: 0.129569
Train Epoch: 1 [48000/60000 (80%)] Loss: 0.392319
Train Epoch: 1 [51200/60000 (85%)] Loss: 0.374106
Train Epoch: 1 [54400/60000 (91%)] Loss: 0.145877
Train Epoch: 1 [57600/60000 (96%)] Loss: 0.136342

Test set: Average loss: 0.1660, Accuracy: 9497/10000 (95%)

Train Epoch: 2 [0/60000 (0%)] Loss: 0.215095
Train Epoch: 2 [3200/60000 (5%)] Loss: 0.064202
Train Epoch: 2 [6400/60000 (11%)] Loss: 0.059504
Train Epoch: 2 [9600/60000 (16%)] Loss: 0.116854
Train Epoch: 2 [12800/60000 (21%)] Loss: 0.259310
Train Epoch: 2 [16000/60000 (27%)] Loss: 0.280154
Train Epoch: 2 [19200/60000 (32%)] Loss: 0.260245
Train Epoch: 2 [22400/60000 (37%)] Loss: 0.039311
Train Epoch: 2 [25600/60000 (43%)] Loss: 0.049329
Train Epoch: 2 [28800/60000 (48%)] Loss: 0.437081
Train Epoch: 2 [32000/60000 (53%)] Loss: 0.094939
Train Epoch: 2 [35200/60000 (59%)] Loss: 0.311777
Train Epoch: 2 [38400/60000 (64%)] Loss: 0.076921
Train Epoch: 2 [41600/60000 (69%)] Loss: 0.800094
Train Epoch: 2 [44800/60000 (75%)] Loss: 0.074938
Train Epoch: 2 [48000/60000 (80%)] Loss: 0.240811
Train Epoch: 2 [51200/60000 (85%)] Loss: 0.303044
Train Epoch: 2 [54400/60000 (91%)] Loss: 0.372847
Train Epoch: 2 [57600/60000 (96%)] Loss: 0.290946

Test set: Average loss: 0.1341, Accuracy: 9634/10000 (96%)

Train Epoch: 3 [0/60000 (0%)] Loss: 0.092767
Train Epoch: 3 [3200/60000 (5%)] Loss: 0.038457
Train Epoch: 3 [6400/60000 (11%)] Loss: 0.005179
Train Epoch: 3 [9600/60000 (16%)] Loss: 0.168411
Train Epoch: 3 [12800/60000 (21%)] Loss: 0.171331
Train Epoch: 3 [16000/60000 (27%)] Loss: 0.267252
Train Epoch: 3 [19200/60000 (32%)] Loss: 0.072991
Train Epoch: 3 [22400/60000 (37%)] Loss: 0.034315
Train Epoch: 3 [25600/60000 (43%)] Loss: 0.143128
Train Epoch: 3 [28800/60000 (48%)] Loss: 0.324783
Train Epoch: 3 [32000/60000 (53%)] Loss: 0.049743
Train Epoch: 3 [35200/60000 (59%)] Loss: 0.090172
Train Epoch: 3 [38400/60000 (64%)] Loss: 0.002107
Train Epoch: 3 [41600/60000 (69%)] Loss: 0.025945
Train Epoch: 3 [44800/60000 (75%)] Loss: 0.054859
Train Epoch: 3 [48000/60000 (80%)] Loss: 0.009291
Train Epoch: 3 [51200/60000 (85%)] Loss: 0.010495
Train Epoch: 3 [54400/60000 (91%)] Loss: 0.132548
Train Epoch: 3 [57600/60000 (96%)] Loss: 0.005778

Test set: Average loss: 0.1570, Accuracy: 9553/10000 (96%)

Train Epoch: 4 [0/60000 (0%)] Loss: 0.103177
Train Epoch: 4 [3200/60000 (5%)] Loss: 0.087844
Train Epoch: 4 [6400/60000 (11%)] Loss: 0.066604
Train Epoch: 4 [9600/60000 (16%)] Loss: 0.052869
Train Epoch: 4 [12800/60000 (21%)] Loss: 0.091576
Train Epoch: 4 [16000/60000 (27%)] Loss: 0.094903
Train Epoch: 4 [19200/60000 (32%)] Loss: 0.247008
Train Epoch: 4 [22400/60000 (37%)] Loss: 0.037751
Train Epoch: 4 [25600/60000 (43%)] Loss: 0.067071
Train Epoch: 4 [28800/60000 (48%)] Loss: 0.191988
Train Epoch: 4 [32000/60000 (53%)] Loss: 0.403029
Train Epoch: 4 [35200/60000 (59%)] Loss: 0.547171
Train Epoch: 4 [38400/60000 (64%)] Loss: 0.187923
Train Epoch: 4 [41600/60000 (69%)] Loss: 0.231193
Train Epoch: 4 [44800/60000 (75%)] Loss: 0.010785
Train Epoch: 4 [48000/60000 (80%)] Loss: 0.077892
Train Epoch: 4 [51200/60000 (85%)] Loss: 0.093144
Train Epoch: 4 [54400/60000 (91%)] Loss: 0.004715
Train Epoch: 4 [57600/60000 (96%)] Loss: 0.083726

Test set: Average loss: 0.1932, Accuracy: 9584/10000 (96%)
```
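The bracketed numbers in this log follow directly from the loader geometry: 60000 training images with `batch_size=32` give 1875 batches per epoch, and logging every 100 batches yields 19 lines per epoch (batch indices 0, 100, ..., 1800). The pure-Python sketch below reproduces the prefixes of those lines without PyTorch; `progress_lines` is a hypothetical helper, and it assumes the `DataLoader` default `drop_last=False`, so `len(train_loader)` is the ceiling of 60000 / 32.

```python
import math


def progress_lines(epoch, dataset_size=60000, batch_size=32, log_interval=100):
    """Rebuild the 'Train Epoch' prefixes printed by Trainer.train
    (without the loss value) from the loader geometry alone."""
    num_batches = math.ceil(dataset_size / batch_size)  # len(train_loader)
    lines = []
    for batch_idx in range(num_batches):
        if batch_idx % log_interval == 0:
            # len(data) equals batch_size for every logged batch here
            lines.append('Train Epoch: {} [{}/{} ({:.0f}%)]'.format(
                epoch, batch_idx * batch_size, dataset_size,
                100. * batch_idx / num_batches))
    return lines


lines = progress_lines(epoch=1)
print(len(lines))  # 19
print(lines[1])    # Train Epoch: 1 [3200/60000 (5%)]
print(lines[-1])   # Train Epoch: 1 [57600/60000 (96%)]
```

Note that the percentage is computed from the batch index, not the sample count, which is why the last logged line shows 96% rather than 100%.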

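The core of this approach is the TensorBoard-specific code: importing `summary` from `tensorflow`, loading the extension with `%load_ext tensorboard`, creating the two writers with `summary.create_file_writer`, logging values with `summary.scalar` inside `with ...as_default():`, and launching the viewer with `%tensorboard --logdir logs/tensorboard`.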
