[C2P1] Andrew Ng - Machine Learning

About this Course

Machine learning is the science of getting computers to act without being explicitly programmed. In the past decade, machine learning has given us self-driving cars, practical speech recognition, effective web search, and a vastly improved understanding of the human genome. Machine learning is so pervasive today that you probably use it dozens of times a day without knowing it. Many researchers also think it is the best way to make progress towards human-level AI. In this class, you will learn about the most effective machine learning techniques, and gain practice implementing them and getting them to work for yourself. More importantly, you'll not only learn about the theoretical underpinnings of learning, but also gain the practical know-how needed to quickly and powerfully apply these techniques to new problems. Finally, you'll learn about some of Silicon Valley's best practices in innovation as it pertains to machine learning and AI.

This course provides a broad introduction to machine learning, data mining, and statistical pattern recognition. Topics include: (i) Supervised learning (parametric/non-parametric algorithms, support vector machines, kernels, neural networks). (ii) Unsupervised learning (clustering, dimensionality reduction, recommender systems, deep learning). (iii) Best practices in machine learning (bias/variance theory; innovation process in machine learning and AI). The course will also draw from numerous case studies and applications, so that you'll also learn how to apply learning algorithms to building smart robots (perception, control), text understanding (web search, anti-spam), computer vision, medical informatics, audio, database mining, and other areas.

Introduction

Welcome to Machine Learning! In this module, we introduce the core idea of teaching a computer to learn concepts using data—without being explicitly programmed. The Course Wiki is under construction. Please visit the resources tab for the most complete and up-to-date information.

5 videos, 4 readings

Video: Welcome to Machine Learning!

What is machine learning? You probably use it dozens of times a day without even knowing it. Each time you do a web search on Google or Bing, that works so well because their machine learning software has figured out how to rank web pages. When Facebook or Apple's photo application recognizes your friends in your pictures, that's also machine learning. Each time you read your email and a spam filter saves you from having to wade through tons of spam, again, that's because your computer has learned to distinguish spam from non-spam email. So, that's machine learning. It's the science of getting computers to learn without being explicitly programmed. One of the research projects that I'm working on is getting robots to tidy up the house. How do you go about doing that? Well, what you can do is have the robot watch you demonstrate the task and learn from that. The robot can then watch what objects you pick up and where you put them and try to do the same thing even when you aren't there. For me, one of the reasons I'm excited about this is the AI, or artificial intelligence, problem: building truly intelligent machines that can do just about anything that you or I can do. Many scientists think the best way to make progress on this is through learning algorithms called neural networks, which mimic how the human brain works, and I'll teach you about that, too. In this class, you'll learn about machine learning algorithms and get to implement them yourself. I hope you sign up on our website and join us.

Video: Welcome

Welcome to this free online class on machine learning. Machine learning is one of the most exciting recent technologies. And in this class, you'll learn about the state of the art and also gain practice implementing and deploying these algorithms yourself. You've probably used a learning algorithm dozens of times a day without knowing it. Every time you use a web search engine like Google or Bing to search the internet, one of the reasons that works so well is because a learning algorithm, one implemented by Google or Microsoft, has learned how to rank web pages. Every time you use Facebook or Apple's photo tagging application and it recognizes your friends' photos, that's also machine learning. Every time you read your email and your spam filter saves you from having to wade through tons of spam email, that's also a learning algorithm. For me, one of the reasons I'm excited is the AI dream of someday building machines as intelligent as you or me. We're a long way away from that goal, but many AI researchers believe that the best way to make progress towards that goal is through learning algorithms that try to mimic how the human brain learns. I'll tell you a little bit about that too in this class. In this class you'll learn about state-of-the-art machine learning algorithms. But it turns out just knowing the algorithms and knowing the math isn't that much good if you don't also know how to actually get this stuff to work on problems that you care about. So, we've also spent a lot of time developing exercises for you to implement each of these algorithms and see how they work for yourself. So why is machine learning so prevalent today? It turns out that machine learning is a field that has grown out of the field of AI, or artificial intelligence. We wanted to build intelligent machines, and it turns out that there are a few basic things that we could program a machine to do, such as how to find the shortest path from A to B.
But for the most part we just did not know how to write AI programs to do the more interesting things such as web search or photo tagging or email anti-spam. There was a realization that the only way to do these things was to have a machine learn to do it by itself. So, machine learning was developed as a new capability for computers, and today it touches many segments of industry and basic science. For me, I work on machine learning, and in a typical week I might end up talking to helicopter pilots, biologists, a bunch of computer systems people (so, my colleagues here at Stanford), and on average two or three times a week I get email from people in industry in Silicon Valley contacting me who have an interest in applying learning algorithms to their own problems. This is a sign of the range of problems that machine learning touches. There is autonomous robotics, computational biology, tons of things in Silicon Valley that machine learning is having an impact on. Here are some other examples of machine learning. There's database mining. One of the reasons machine learning has become so pervasive is the growth of the web and the growth of automation. All this means that we have much larger data sets than ever before. So, for example, tons of Silicon Valley companies are today collecting web click data, also called clickstream data, and are trying to use machine learning algorithms to mine this data to understand the users better and to serve the users better. That's a huge segment of Silicon Valley right now. Medical records. With the advent of automation, we now have electronic medical records, so if we can turn medical records into medical knowledge, then we can start to understand disease better. Computational biology. With automation again, biologists are collecting lots of data about gene sequences, DNA sequences, and so on, and machines running algorithms are giving us a much better understanding of the human genome, and what it means to be human.
And in engineering as well, in all fields of engineering, we have larger and larger data sets that we're trying to understand using learning algorithms. A second range of machine learning applications is ones that we cannot program by hand. So for example, I've worked on autonomous helicopters for many years. We just did not know how to write a computer program to make this helicopter fly by itself. The only thing that worked was having a computer learn by itself how to fly this helicopter. [Helicopter whirling]

Handwriting recognition. It turns out one of the reasons it's so inexpensive today to route a piece of mail across the country, in the US and internationally, is that when you write an envelope like this, there's a learning algorithm that has learned how to read your handwriting so that it can automatically route this envelope on its way, and so it costs us a few cents to send this thing thousands of miles. And in fact, if you've seen the fields of natural language processing or computer vision, these are the fields of AI pertaining to understanding language or understanding images. Most of natural language processing and most of computer vision today is applied machine learning. Learning algorithms are also widely used for self-customizing programs. Every time you go to Amazon or Netflix or iTunes Genius, and it recommends movies or products or music to you, that's a learning algorithm. If you think about it, they have a million users; there is no way to write a million different programs for your million users. The only way to have software give these customized recommendations is to have it learn by itself to customize itself to your preferences. Finally, learning algorithms are being used today to understand human learning and to understand the brain. We'll talk about how researchers are using this to make progress towards the big AI dream. A few months ago, a student showed me an article on the top twelve IT skills, the skills that information technology hiring managers cannot say no to. It was a slightly older article, but at the top of this list of the twelve most desirable IT skills was machine learning. Here at Stanford, the number of recruiters that contact me asking if I know any graduating machine learning students is far larger than the number of machine learning students we graduate each year.
So I think there is a vast, unfulfilled demand for this skill set, and this is a great time to be learning about machine learning, and I hope to teach you a lot about machine learning in this class. In the next video, we'll start to give a more formal definition of what is machine learning. And we'll begin to talk about the main types of machine learning problems and algorithms. You'll pick up some of the main machine learning terminology, and start to get a sense of what are the different algorithms, and when each one might be appropriate.

Video: What is Machine Learning?

What is machine learning? In this video, we will try to define what it is and also try to give you a sense of when you want to use machine learning. Even among machine learning practitioners, there isn't a well-accepted definition of what is and what isn't machine learning. But let me show you a couple of examples of the ways that people have tried to define it. Here's a definition of machine learning due to Arthur Samuel. He defined machine learning as the field of study that gives computers the ability to learn without being explicitly programmed.

Samuel's claim to fame was that back in the 1950s, he wrote a checkers-playing program, and the amazing thing about this checkers-playing program was that Arthur Samuel himself wasn't a very good checkers player. But what he did was he had the program play maybe tens of thousands of games against itself, and by watching what sorts of board positions tended to lead to wins and what sorts of board positions tended to lead to losses, the checkers-playing program learned over time what are good board positions and what are bad board positions, and eventually learned to play checkers better than Arthur Samuel himself was able to. This was a remarkable result. Arthur Samuel himself turns out not to be a very good checkers player. But a computer has the patience to play tens of thousands of games against itself; no human has the patience to play that many games. By doing this, a computer was able to get so much checkers-playing experience that it eventually became a better checkers player than Arthur Samuel himself.

This is a somewhat informal definition and an older one. Here's a slightly more recent definition by Tom Mitchell, who's a friend out of Carnegie Mellon. Tom defines machine learning by saying that a well-posed learning problem is defined as follows. He says, a computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E. I actually think he came up with this definition just to make it rhyme. For the checkers-playing example, the experience E would be the experience of having the program play tens of thousands of games against itself. The task T would be the task of playing checkers, and the performance measure P would be the probability that it wins the next game of checkers against some new opponent.

Throughout these videos, besides me trying to teach you stuff, I'll occasionally ask you a question to make sure you understand the content. Here's one.

On top is a definition of machine learning by Tom Mitchell. Let's say your email program watches which emails you do or do not mark as spam. So in an email client like this, you might click the Spam button to report some email as spam but not other emails. And based on which emails you mark as spam, say your email program learns better how to filter spam email. What is the task T in this setting? In a few seconds, the video will pause and when it does so, you can use your mouse to select one of these four radio buttons to let me know which of these four you think is the right answer to this question.

So hopefully you got that this is the right answer: classifying emails is the task T. In fact, this definition defines a task T, a performance measure P, and some experience E. Watching you label emails as spam or not spam would be the experience E, and the fraction of emails correctly classified might be a performance measure P. And so the system's performance on the task T, as measured by the performance measure P, will improve after the experience E.
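To make the roles of E, T, and P concrete, here is a minimal sketch of the spam setting in Python. Everything in it is an assumption made for illustration: the emails are made up, and the word-matching rule in `learn_spam_words` is a deliberately naive stand-in, not the algorithm any real email program uses. It only shows P (accuracy on held-out emails) being measured as E (the number of emails the user has labeled) grows.

```python
from typing import List, Set, Tuple

Email = Tuple[str, bool]  # (text, is_spam); the label comes from the user's Spam button


def learn_spam_words(labeled: List[Email]) -> Set[str]:
    """Experience E: collect words seen in spam but never in non-spam."""
    spam_words: Set[str] = set()
    ham_words: Set[str] = set()
    for text, is_spam in labeled:
        (spam_words if is_spam else ham_words).update(text.lower().split())
    return spam_words - ham_words


def classify(text: str, spam_words: Set[str]) -> bool:
    """Task T: decide whether an email is spam."""
    return any(word in spam_words for word in text.lower().split())


def accuracy(spam_words: Set[str], test: List[Email]) -> float:
    """Performance measure P: fraction of emails classified correctly."""
    correct = sum(classify(text, spam_words) == is_spam for text, is_spam in test)
    return correct / len(test)


# Hypothetical emails, in the order the user labeled them.
labeled_emails: List[Email] = [
    ("win a free prize now", True),
    ("lunch meeting at noon", False),
    ("cheap pills free shipping", True),
    ("project report attached", False),
]

# Hypothetical held-out emails, used only to measure P.
test_emails: List[Email] = [
    ("claim your free prize", True),
    ("cheap pills online", True),
    ("meeting moved to noon", False),
    ("see attached report", False),
]

# P measured after the program has seen 0, 1, 2, 3, and 4 labeled emails.
scores = [
    accuracy(learn_spam_words(labeled_emails[:n]), test_emails)
    for n in range(len(labeled_emails) + 1)
]
print(scores)
```

On this toy data the accuracy never goes down as more labeled emails arrive, which is the sense in which the program "learns" under Mitchell's definition. Real data is noisier, so P is not guaranteed to rise with every single new example.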

In this class, I hope to teach you about various different types of learning algorithms. The main two types are what we call supervised learning and unsupervised learning. I'll define what these terms mean more in the next couple of videos. It turns out that in supervised learning, the idea is we're going to teach the computer how to do something, whereas in unsupervised learning, we're going to let it learn by itself. Don't worry if these two terms don't make sense yet. In the next two videos, I'm going to say exactly what these two types of learning are. You might also hear other buzz terms such as reinforcement learning and recommender systems. These are other types of machine learning algorithms that we'll talk about later. But the two most used types of learning algorithms are probably supervised learning and unsupervised learning. I'll define them in the next two videos, and we'll spend most of this class talking about these two types of learning algorithms. It turns out one of the other things we'll spend a lot of time on in this class is practical advice for applying learning algorithms. This is something that I feel pretty strongly about, and it's actually something that I don't know if any other university teaches. Teaching about learning algorithms is like giving you a set of tools, and equally important, or more important than giving you the tools, is to teach you how to apply these tools. I like to make an analogy to learning to become a carpenter. Imagine that someone is teaching you how to be a carpenter, and they say, here's a hammer, here's a screwdriver, here's a saw, good luck. Well, that's no good. You have all these tools, but the more important thing is to learn how to use these tools properly.

There's a huge difference between people that know how to use these machine learning algorithms and people that don't know how to use these tools well. Here in Silicon Valley, where I live, when I go visit different companies, even the top Silicon Valley companies, very often I see people trying to apply machine learning algorithms to some problem, and sometimes they have been going at it for six months. But sometimes when I look at what they're doing, I say, gee, I could have told you six months ago that you should be taking a learning algorithm and applying it in a slightly modified way, and your chance of success would have been much higher. So what we're going to do in this class is actually spend a lot of the time talking about how, if you're actually trying to develop a machine learning system, to make those best-practices-type decisions about the way in which you build your system, so that when you're applying a learning algorithm, you're less likely to end up as one of those people who pursue something for six months that someone else could have figured out was just a waste of time. So I'm actually going to spend a lot of time teaching you those sorts of best practices in machine learning and AI, how to get the stuff to work, and how the best people do it in Silicon Valley and around the world. I hope to make you one of the best people in knowing how to design and build serious machine learning and AI systems. So that's machine learning, and these are the main topics I hope to teach. In the next video, I'm going to define what is supervised learning and after that what is unsupervised learning, and also start to talk about when you would use each of them.

Reading: What is Machine Learning?

What is Machine Learning?

Two definitions of Machine Learning are offered. Arthur Samuel described it as: "the field of study that gives computers the ability to learn without being explicitly programmed." This is an older, informal definition.

Tom Mitchell provides a more modern definition: "A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E."

Example: playing checkers.

E = the experience of playing many games of checkers

T = the task of playing checkers.

P = the probability that the program will win the next game.

In general, any machine learning problem can be assigned to one of two broad classifications:

Supervised learning and Unsupervised learning.

Video: Supervised Learning

In this video, I'm going to define what is probably the most common type of Machine Learning problem, which is Supervised Learning. I'll define Supervised Learning more formally later, but it's probably best to start with an example of what it is, and we'll do the formal definition later. Let's say you want to predict housing prices. A while back a student collected data sets from the City of Portland, Oregon, and let's say you plot the data set and it looks like this. Here on the horizontal axis is the size of different houses in square feet, and on the vertical axis is the price of different houses in thousands of dollars. So, given this data, let's say you have a friend who owns a house that is, say, 750 square feet, and they are hoping to sell the house, and they want to know how much they can get for it. So, how can the learning algorithm help you? One thing a learning algorithm might be able to do is put a straight line through the data, that is, fit a straight line to the data. Based on that, it looks like maybe their house can be sold for about \$150,000. But maybe this isn't the only learning algorithm you can use, and there might be a better one. For example, instead of fitting a straight line to the data, we might decide that it's better to fit a quadratic function, or a second-order polynomial, to this data. If you do that and make a prediction here, then it looks like, well, maybe they can sell the house for closer to \$200,000. One of the things we'll talk about later is how to choose, and how to decide: do you want to fit a straight line to the data, or do you want to fit a quadratic function to the data? It wouldn't be fair to just pick whichever one gives your friend the better price for the house. But each of these would be a fine example of a learning algorithm. So, this is an example of a Supervised Learning algorithm.
The term Supervised Learning refers to the fact that we gave the algorithm a data set in which the so-called "right answers" were given. That is, we gave it a data set of houses in which, for every example in this data set, we told it what is the right price, that is, the actual price that the house sold for, and the task of the algorithm was to just produce more of these right answers, such as for this new house that your friend may be trying to sell. To define a bit more terminology, this is also called a regression problem. By regression problem, I mean we're trying to predict a continuous-valued output, namely the price. So technically, I guess prices can be rounded off to the nearest cent, so maybe prices are actually discrete-valued. But usually, we think of the price of a house as a real number, as a scalar value, as a continuous-valued number, and the term regression refers to the fact that we're trying to predict this sort of continuous-valued attribute. Here's another Supervised Learning example. Some friends and I were actually working on this earlier. Let's say you want to look at medical records and try to predict whether a breast cancer is malignant or benign. If someone discovers a breast tumor, a lump in their breast, a malignant tumor is a tumor that is harmful and dangerous, and a benign tumor is a tumor that is harmless. So obviously, people care a lot about this. Let's look at a collected data set. Suppose that in your dataset, you have on your horizontal axis the size of the tumor, and on the vertical axis, I'm going to plot one or zero, yes or no, whether or not these examples of tumors we've seen before are malignant, which is one, or not malignant (benign), which is zero. So, let's say your dataset looks like this, where we saw a tumor of this size that turned out to be benign, one of this size, one of this size, and so on. Sadly, we also saw a few malignant tumors as well, one of that size, one of that size, one of that size, and so on.
So in this example, I have five examples of benign tumors shown down here, and five examples of malignant tumors shown with a vertical axis value of one. Let's say a friend tragically has a breast tumor, and let's say her breast tumor size is maybe somewhere around this value. The Machine Learning question is, can you estimate what is the probability, what's the chance, that the tumor is malignant versus benign? To introduce a bit more terminology, this is an example of a classification problem. The term classification refers to the fact that here we're trying to predict a discrete-valued output: zero or one, malignant or benign. It turns out that in classification problems, sometimes you can have more than two possible values for the output. As a concrete example, maybe there are three types of breast cancer. So, you may try to predict a discrete-valued output of zero, one, two, or three, where zero may mean benign, a benign tumor, so no cancer; one may mean type one cancer, whatever type one means; two may mean a second type of cancer; and three may mean a third type of cancer. But this would also be a classification problem, because this is a discrete-valued set of outputs corresponding to no cancer, or cancer type one, or cancer type two, or cancer type three. In classification problems, there is another way to plot this data. Let me show you what I mean. I'm going to use a slightly different set of symbols to plot this data. So, if tumor size is going to be the attribute that I'm going to use to predict malignancy or benignness, I can also draw my data like this. I'm going to use different symbols to denote my benign and malignant, or my negative and positive, examples. So, instead of drawing crosses, I'm now going to draw O's for the benign tumors, like so, and I'm going to keep using X's to denote my malignant tumors. I hope this figure makes sense.
All I did was I took my data set on top, and I just mapped it down to this real line like so, and started to use different symbols, circles and crosses, to denote benign versus malignant examples. Now, in this example, we use only one feature or one attribute, namely the tumor size, in order to predict whether a tumor is malignant or benign. In other machine learning problems, we have more than one feature or more than one attribute. Here's an example: let's say that instead of just knowing the tumor size, we know both the age of the patients and the tumor size. In that case, maybe your data set would look like this, where I may have a set of patients with those ages and those tumor sizes, and they look like this, and a different set of patients that look a little different, whose tumors turn out to be malignant, as denoted by the crosses. So, let's say you have a friend who tragically has a tumor, and maybe their tumor size and age fall around there. Given a data set like this, what the learning algorithm might do is fit a straight line to the data to try to separate out the malignant tumors from the benign ones, and so the learning algorithm may decide to put a straight line like that to separate out the two classes of tumors. With this, hopefully, if your friend's tumor is over there, your learning algorithm will say that it falls on the benign side and is therefore more likely to be benign than malignant. In this example, we had two features, namely the age of the patient and the size of the tumor. In other Machine Learning problems, we will often have more features. My friends that worked on this problem actually used other features like these: clump thickness of the breast tumor, uniformity of cell size of the tumor, uniformity of cell shape of the tumor, and so on, and other features as well.
It turns out one of the most interesting learning algorithms that we'll see in this course is a learning algorithm that can deal with not just two, or three, or five features, but an infinite number of features. On this slide, I've listed a total of five different features: two on the axes and three more up here. But it turns out that for some learning problems what you really want is not to use three or five features, but instead to use an infinite number of features, an infinite number of attributes, so that your learning algorithm has lots of attributes, or features, or cues with which to make those predictions. So, how do you deal with an infinite number of features? How do you even store an infinite number of things in the computer when your computer is going to run out of memory? It turns out that when we talk about an algorithm called the Support Vector Machine, there will be a neat mathematical trick that will allow a computer to deal with an infinite number of features. Imagine that I didn't just write down two features here and three features on the right, but imagine that I wrote down an infinitely long list. I just kept writing more and more features, like an infinitely long list of features. It turns out we will come up with an algorithm that can deal with that. So, just to recap, in this course we'll talk about Supervised Learning, and the idea is that in Supervised Learning, for every example in our data set, we are told the correct answer that we would have liked the algorithm to predict on that example, such as the price of the house, or whether a tumor is malignant or benign. We also talked about the regression problem, and by regression we mean that our goal is to predict a continuous-valued output. And we talked about the classification problem, where the goal is to predict a discrete-valued output. Just a quick wrap-up question.
Suppose you're running a company and you want to develop learning algorithms to address each of two problems. In the first problem, you have a large inventory of identical items. So, imagine that you have thousands of copies of some identical items to sell, and you want to predict how many of these items you will sell over the next three months. In the second problem, problem two, you have lots of users, and you want to write software to examine each one of your customers' accounts and, for each account, decide whether or not the account has been hacked or compromised. So, for each of these problems, should they be treated as a classification problem or as a regression problem? When the video pauses, please use your mouse to select whichever of these four options on the left you think is the correct answer.

So hopefully, you got that this is the answer. For problem one, I would treat this as a regression problem, because if I have thousands of items, well, I would probably just treat the number of items I sell as a real value, as a continuous value. For the second problem, I would treat that as a classification problem, because I might set the value I want to predict to zero to denote that the account has not been hacked, and to one to denote an account that has been hacked into. So, just like breast cancer, where zero is benign and one is malignant, I might set this to be zero or one depending on whether it's been hacked, and have an algorithm try to predict each one of these two discrete values. Because there's a small number of discrete values, I would therefore treat it as a classification problem. So, that's it for Supervised Learning. In the next video, I'll talk about Unsupervised Learning, which is the other major category of learning algorithm.
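The two-feature tumor example from this video, where a straight line separates benign from malignant cases, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the patient data is invented, and the rule used here is a nearest-centroid classifier (assign a patient to whichever class mean is closer), chosen because its decision boundary is a straight line. The lecture does not say which algorithm draws the line.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (age in years, tumor size in arbitrary units)


def centroid(points: List[Point]) -> Point:
    """Mean of a list of 2-D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def squared_distance(a: Point, b: Point) -> float:
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


# Made-up labeled examples: the "right answers" that supervised learning needs.
benign = [(25.0, 1.0), (30.0, 1.5), (35.0, 1.0), (40.0, 2.0)]
malignant = [(55.0, 4.0), (60.0, 3.5), (65.0, 4.5), (70.0, 4.0)]

benign_center = centroid(benign)
malignant_center = centroid(malignant)


def classify(patient: Point) -> str:
    """Predict the class whose centroid is closer.

    The set of points equally far from both centroids is a straight line,
    so this rule splits the (age, size) plane with a line.
    """
    d_benign = squared_distance(patient, benign_center)
    d_malignant = squared_distance(patient, malignant_center)
    return "benign" if d_benign <= d_malignant else "malignant"


print(classify((35.0, 1.5)))
print(classify((62.0, 4.2)))
```

The features are left unscaled to keep the sketch short, so age dominates the distance here. In practice you would usually rescale features before using a distance-based rule.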

Reading: Supervised Learning

Supervised Learning

In supervised learning, we are given a data set and already know what our correct output should look like, having the idea that there is a relationship between the input and the output.

Supervised learning problems are categorized into "regression" and "classification" problems. In a regression problem, we are trying to predict results within a continuous output, meaning that we are trying to map input variables to some continuous function. In a classification problem, we are instead trying to predict results in a discrete output. In other words, we are trying to map input variables into discrete categories.

Example 1:

Given data about the size of houses on the real estate market, try to predict their price. Price as a function of size is a continuous output, so this is a regression problem.

We could turn this example into a classification problem by instead making our output about whether the house "sells for more or less than the asking price." Here we are classifying the houses based on price into two discrete categories.
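As a rough illustration of Example 1, the sketch below fits a straight line to a handful of made-up (size, price) pairs with ordinary least squares and predicts a price for a 750 square foot house. The numbers and the `asking` prices are assumptions invented for the example. The last lines show how the same houses become a classification data set once the target is "sold above asking price or not".

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up training data: size in square feet, sale price in thousands of dollars.
sizes = [500.0, 1000.0, 1500.0, 2000.0]
prices = [120.0, 190.0, 310.0, 380.0]

# Regression: the target is a continuous number (the price).
slope, intercept = fit_line(sizes, prices)
predicted_price = slope * 750.0 + intercept
print(round(predicted_price, 2))

# Classification: the target is one of two categories.
# Hypothetical asking prices for the same four houses.
asking = [110.0, 200.0, 300.0, 400.0]
sold_above_asking = [price > ask for price, ask in zip(prices, asking)]
print(sold_above_asking)
```

The inputs are identical in both cases. What changes is the kind of output the learner is asked to produce: a real number for regression, a category for classification.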

Example 2:

(a) Regression - Given a picture of a person, we have to predict their age on the basis of the given picture

(b) Classification - Given a patient with a tumor, we have to predict whether the tumor is malignant or benign.
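For the classification case in Example 2(b), a minimal sketch with a single feature (tumor size) might look like the following. The data is invented, and the learner is a simple threshold rule picked for illustration: it tries each midpoint between neighboring sizes and keeps the cut with the fewest training mistakes. Other classifiers would work equally well here.

```python
from typing import List


def best_threshold(sizes: List[float], labels: List[int]) -> float:
    """Pick the cut point with the fewest training errors.

    Candidate cuts are midpoints between neighboring sorted sizes; a tumor
    is predicted malignant (1) when its size exceeds the cut.
    """
    pairs = sorted(zip(sizes, labels))
    candidates = [(a[0] + b[0]) / 2 for a, b in zip(pairs, pairs[1:])]

    def errors(cut: float) -> int:
        return sum((size > cut) != bool(label) for size, label in pairs)

    return min(candidates, key=errors)


# Made-up tumor sizes (arbitrary units); 0 = benign, 1 = malignant.
tumor_sizes = [1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0, 5.5]
tumor_labels = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]

cut = best_threshold(tumor_sizes, tumor_labels)


def predict(size: float) -> int:
    """Classification: the output is a discrete value, 0 or 1."""
    return int(size > cut)


print(cut, predict(2.0), predict(5.0))
```

Note that the toy data overlaps (one benign tumor is larger than one malignant tumor), so no threshold is perfect. The rule simply picks the cut that makes the fewest mistakes on the examples it was given.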

Video: Unsupervised Learning

In this video, we'll talk about the second major type of machine learning problem, called Unsupervised Learning.

In the last video, we talked about Supervised Learning. Recall that, back then, we had data sets that look like this, where each example was labeled either as a positive or negative example, whether it was a benign or a malignant tumor.

So for each example in Supervised Learning, we were told explicitly what is the so-called right answer, whether it's benign or malignant. In Unsupervised Learning, we're given data that looks different: data like this, which doesn't have any labels, or which all has the same label, or really no labels.

0:39

So we're given the data set and we're not told what to do with it and we're not told what each data point is. Instead we're just told, here is a data set. Can you find some structure in the data? Given this data set, an Unsupervised Learning algorithm might decide that the data lives in two different clusters. And so there's one cluster

0:59

and there's a different cluster.

1:01

And yes, an Unsupervised Learning algorithm may break this data into these two separate clusters.

1:06

So this is called a clustering algorithm. And this turns out to be used in many places.

1:11

One example where clustering is used is in Google News, and if you have not seen this before, you can actually go to this URL, news.google.com, to take a look. What Google News does is every day it goes and looks at tens of thousands or hundreds of thousands of news stories on the web and it groups them into cohesive news stories.

1:30

For example, let's look here.

1:33

The URLs here link to different news stories about the BP Oil Well story.

1:41

So, let's click on one of these URLs. What I'll get to is a web page like this. Here's a Wall Street Journal article about, you know, the BP Oil Well Spill story, "BP Kills Macondo", which is a name of the spill, and if you click on a different URL

2:00

from that group then you might get a different story. Here's the CNN story about, again, the BP Oil Spill,

2:07

and if you click on yet a third link, then you might get a different story. Here's the UK Guardian story about the BP Oil Spill.

2:16

So what Google News has done is look at tens of thousands of news stories and automatically cluster them together. So, the news stories that are all about the same topic get displayed together. It turns out that clustering algorithms and Unsupervised Learning algorithms are used in many other problems as well.

2:35

Here's one on understanding genomics.

2:38

Here's an example of DNA microarray data. The idea is you have a group of different individuals, and for each of them, you measure how much they do or do not have a certain gene. Technically you measure how much certain genes are expressed. So these colors, red, green, gray and so on, they show the degree to which different individuals do or do not have a specific gene.

3:02

And what you can do is then run a clustering algorithm to group individuals into different categories or into different types of people.

3:10

So this is Unsupervised Learning because we're not telling the algorithm in advance that these are type 1 people, those are type 2 persons, those are type 3 persons and so on, and instead what we're saying is, yeah, here's a bunch of data. I don't know what's in this data. I don't know who's in what type. I don't even know what the different types of people are, but can you automatically find structure in the data? Can you automatically cluster the individuals into these types that I don't know in advance? Because we're not giving the algorithm the right answer for the examples in my data set, this is Unsupervised Learning.

3:44

Unsupervised Learning or clustering is used for a bunch of other applications.

3:48

It's used to organize large computer clusters.

3:51

I had some friends looking at large data centers, that is large computer clusters and trying to figure out which machines tend to work together and if you can put those machines together, you can make your data center work more efficiently.

4:04

This second application is on social network analysis.

4:07

So given knowledge about which friends you email the most or given your Facebook friends or your Google+ circles, can we automatically identify which are cohesive groups of friends, also which are groups of people that all know each other?

4:22

Market segmentation.

4:24

Many companies have huge databases of customer information. So, can you look at this customer data set and automatically discover market segments and automatically

4:33

group your customers into different market segments so that you can automatically and more efficiently sell or market to your different market segments?

4:44

Again, this is Unsupervised Learning because we have all this customer data, but we don't know in advance what are the market segments and for the customers in our data set, you know, we don't know in advance who is in market segment one, who is in market segment two, and so on. But we have to let the algorithm discover all this just from the data.

5:01

Finally, it turns out that Unsupervised Learning is also used for, surprisingly, astronomical data analysis, and these clustering algorithms give surprisingly interesting and useful theories of how galaxies are formed. All of these are examples of clustering, which is just one type of Unsupervised Learning. Let me tell you about another one. I'm gonna tell you about the cocktail party problem.

5:26

So, you've been to cocktail parties before, right? Well, you can imagine there's a party, room full of people, all sitting around, all talking at the same time and there are all these overlapping voices because everyone is talking at the same time, and it is almost hard to hear the person in front of you. So maybe at a cocktail party with two people,

5:45

two people talking at the same time, and it's a somewhat small cocktail party. And we're going to put two microphones in the room, so there are two microphones, and because these microphones are at two different distances from the speakers, each microphone records a different combination of these two speaker voices.

6:05

Maybe speaker one is a little louder in microphone one and maybe speaker two is a little bit louder on microphone 2 because the 2 microphones are at different positions relative to the 2 speakers, but each microphone would record an overlapping combination of both speakers' voices.

6:23

So here's an actual recording

6:26

of two speakers recorded by a researcher. Let me play for you the first, what the first microphone sounds like. One (uno), two (dos), three (tres), four (cuatro), five (cinco), six (seis), seven (siete), eight (ocho), nine (nueve), ten (y diez).

6:41

All right, maybe not the most interesting cocktail party, there's two people counting from one to ten in two languages but you know. What you just heard was the first microphone recording, here's the second recording.

6:57

Uno (one), dos (two), tres (three), cuatro (four), cinco (five), seis (six), siete (seven), ocho (eight), nueve (nine) y diez (ten). So what we can do is take these two microphone recordings and give them to an Unsupervised Learning algorithm called the cocktail party algorithm, and tell the algorithm: find structure in this data for us. And what the algorithm will do is listen to these audio recordings and say, you know, it sounds like two audio sources are being added together, or summed together, to produce these recordings that we had. Moreover, what the cocktail party algorithm will do is separate out these two audio sources that were being added or summed together to form our recordings and, in fact, here's the first output of the cocktail party algorithm.

7:39

One, two, three, four, five, six, seven, eight, nine, ten.

7:47

So, I separated out the English voice in one of the recordings.

7:52

And here's the second output. Uno, dos, tres, cuatro, cinco, seis, siete, ocho, nueve y diez. Not too bad, to give you

8:03

one more example, here's another recording of another similar situation, here's the first microphone : One, two, three, four, five, six, seven, eight, nine, ten.

8:16

OK, so the poor guy's gone home from the cocktail party and he's now sitting in a room by himself talking to his radio.

8:23

Here's the second microphone recording.

8:28

One, two, three, four, five, six, seven, eight, nine, ten.

8:33

When you give these two microphone recordings to the same algorithm, what it does, is again say, you know, it sounds like there are two audio sources, and moreover,

8:42

the algorithm says, here is the first of the audio sources I found.

8:47

One, two, three, four, five, six, seven, eight, nine, ten.

8:54

So that wasn't perfect, it got the voice, but it also got a little bit of the music in there. Then here's the second output of the algorithm.

9:10

Not too bad, in that second output it managed to get rid of the voice entirely. And just, you know, cleaned up the music, got rid of the counting from one to ten.

9:18

So you might look at an Unsupervised Learning algorithm like this and ask how complicated it is to implement, right? It seems like in order to, you know, build this application, to do this audio processing, you need to write a ton of code or maybe link into like a bunch of synthesizer Java libraries that process audio. It seems like a really complicated program to do this audio, separating out audio and so on.

9:42

It turns out the algorithm, to do what you just heard, that can be done with one line of code - shown right here.

9:50

It took researchers a long time to come up with this line of code. I'm not saying this is an easy problem, but it turns out that when you use the right programming environment, many learning algorithms can be really short programs.

10:03

So this is also why in this class we're going to use the Octave programming environment.

10:08

Octave is free open source software, and using a tool like Octave or Matlab, many learning algorithms become just a few lines of code to implement. Later in this class, I'll just teach you a little bit about how to use Octave and you'll be implementing some of these algorithms in Octave. Or if you have Matlab you can use that too.

10:27

It turns out that in Silicon Valley, for a lot of machine learning algorithms, what we do is first prototype our software in Octave, because Octave makes it incredibly fast to implement these learning algorithms.

10:38

Here, each of these functions, like for example the SVD function, which stands for singular value decomposition, turns out to be a linear algebra routine that is just built into Octave.

10:49

If you were trying to do this in C++ or Java, this would be many many lines of code linking complex C++ or Java libraries. So, you can implement this stuff as C++ or Java or Python, it's just much more complicated to do so in those languages.

11:03

What I've seen after having taught machine learning for almost a decade now, is that, you learn much faster if you use Octave as your programming environment, and if you use Octave as your learning tool and as your prototyping tool, it'll let you learn and prototype learning algorithms much more quickly.

11:22

And in fact what many people will do in the large Silicon Valley companies is, in fact, use a tool like Octave to first prototype the learning algorithm, and only after you've gotten it to work, then you migrate it to C++ or Java or whatever. It turns out that by doing things this way, you can often get your algorithm to work much faster than if you were starting out in C++.

11:44

So, I know that as an instructor, I get to say "trust me on this one" only a finite number of times, but for those of you who've never used these Octave type programming environments before, I am going to ask you to trust me on this one, and say that you, you will, I think your time, your development time is one of the most valuable resources.

12:04

And having seen lots of people do this, I think you as a machine learning researcher, or machine learning developer, will be much more productive if you learn to start to prototype in Octave rather than in some other language.

12:17

Finally, to wrap up this video, I have one quick review question for you.

12:24

We talked about Unsupervised Learning, which is a learning setting where you give the algorithm a ton of data and just ask it to find structure in the data for us. Of the following four examples, which of these four do you think would be an Unsupervised Learning problem as opposed to a Supervised Learning problem? For each of the four check boxes on the left, check the ones for which you think an Unsupervised Learning algorithm would be appropriate and then click the button on the lower right to check your answer. So when the video pauses, please answer the question on the slide.

13:01

So, hopefully, you've remembered the spam folder problem. If you have labeled data, you know, with spam and non-spam e-mail, we'd treat this as a Supervised Learning problem.

13:11

The news story example, that's exactly the Google News example that we saw in this video, we saw how you can use a clustering algorithm to cluster these articles together so that's Unsupervised Learning.

13:23

The market segmentation example I talked about a little bit earlier, you can do that as an Unsupervised Learning problem because I am just gonna give my algorithm data and ask it to discover market segments automatically.

13:35

And the final example, diabetes, well, that's actually just like our breast cancer example from the last video. Only instead of, you know, good and bad cancer tumors or benign or malignant tumors, we instead have diabetes or not, and so we will solve that as a Supervised Learning problem just like we did for the breast tumor data.

13:58

So, that's it for Unsupervised Learning and in the next video, we'll delve more into specific learning algorithms and start to talk about just how these algorithms work and how we can, how you can go about implementing them.
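The lecture notes that `svd` is a linear algebra routine built into Octave. The sketch below only shows what calling it looks like. It is not the one-line cocktail party program from the slide, which is not reproduced in this transcript, and the matrix values are arbitrary.

```octave
% Hypothetical 3 x 2 matrix; the values are arbitrary.
A = [1, 2; 3, 4; 5, 6];

% Singular value decomposition: A = U * S * V'
[U, S, V] = svd(A);

% Reconstruction error; should be close to zero (floating-point round-off).
recon_error = norm(U * S * V' - A)
```

A single built-in call does the decomposition, which is the kind of brevity the lecture is pointing at.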

Reading: Unsupervised Learning

Unsupervised Learning

Unsupervised learning allows us to approach problems with little or no idea what our results should look like. We can derive structure from data where we don't necessarily know the effect of the variables.

We can derive this structure by clustering the data based on relationships among the variables in the data.

With unsupervised learning there is no feedback based on the prediction results.

Example:

Clustering: Take a collection of 1,000,000 different genes, and find a way to automatically group these genes into groups that are somehow similar or related by different variables, such as lifespan, location, roles, and so on.

Non-clustering: The "Cocktail Party Algorithm" allows you to find structure in a chaotic environment (i.e., identifying individual voices and music from a mesh of sounds at a cocktail party).
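To make the clustering idea concrete, here is a minimal sketch of one common clustering method, k-means, written in Octave. The reading does not name a specific algorithm, so k-means is an assumption chosen for illustration, and the data points and starting centroids are made up. Note that the algorithm is never told which group a point belongs to.

```octave
% Hypothetical 2-D data: six points forming two loose groups (made-up values).
X = [1.0, 1.1; 0.9, 1.0; 1.2, 0.8; 5.0, 5.1; 5.2, 4.9; 4.8, 5.0];
K = 2;

% Arbitrary starting guess: use the first and last points as centroids.
centroids = X([1, 6], :);

for iter = 1:10
  % Assignment step: squared distance from every point to every centroid.
  d = zeros(size(X, 1), K);
  for k = 1:K
    diffs = bsxfun(@minus, X, centroids(k, :));
    d(:, k) = sum(diffs .^ 2, 2);
  end
  [~, idx] = min(d, [], 2);

  % Update step: move each centroid to the mean of its assigned points.
  % (No handling of empty clusters; fine for this toy data.)
  for k = 1:K
    centroids(k, :) = mean(X(idx == k, :), 1);
  end
end

idx
centroids
```

The vector `idx` holds the cluster number the algorithm assigned to each point, which is the structure discovered from unlabeled data.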

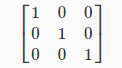

Reading: Lecture Slides

Linear Algebra Review

This optional module provides a refresher on linear algebra concepts. Basic understanding of linear algebra is necessary for the rest of the course, especially as we begin to cover models with multiple variables.

6 videos, 7 readings

Video: Matrices and Vectors

Let's get started with our linear algebra review.

0:02

In this video I want to tell you what are matrices and what are vectors.

0:09

A matrix is a rectangular array of numbers written between square brackets.

0:16

So, for example, here is a matrix on the right, a left square bracket.

0:22

And then, write in a bunch of numbers.

0:27

These could be features from a learning problem or it could be data from somewhere else, but

0:35

the specific values don't matter, and then I'm going to close it with another right bracket on the right. And so that's one matrix. And here's another example of a matrix, let's write 3, 4, 5, 6. So matrix is just another way of saying a 2D or two-dimensional array.

0:53

And the other piece of knowledge that we need is that the dimension of the matrix is going to be written as the number of rows times the number of columns in the matrix. So, concretely, this example on the left, this has 1, 2, 3, 4 rows and has 2 columns,

1:14

and so this example on the left is a 4 by 2 matrix - number of rows by number of columns. So, four rows, two columns. This one on the right, this matrix has two rows. That's the first row, that's the second row, and it has three columns.

1:35

That's the first column, that's the second column, that's the third column. So, this second matrix we say is a 2 by 3 matrix.

1:45

So we say that the dimension of this matrix is 2 by 3.

1:50

Sometimes you also see this written out, in the case of the one on the left, as \(\mathbb{R}^{4 \times 2}\), or concretely what people will sometimes say is that this matrix is an element of the set \(\mathbb{R}^{4 \times 2}\). So, this thing here just means the set of all matrices of dimension 4 by 2, and this thing on the right is sometimes written out as a matrix that is in \(\mathbb{R}^{2 \times 3}\). So if you ever see something like \(\mathbb{R}^{4 \times 2}\) or \(\mathbb{R}^{2 \times 3}\), people are just referring to matrices of a specific dimension.

2:26

Next, let's talk about how to refer to specific elements of the matrix. And by matrix elements, I just mean the entries of the matrix, so the numbers inside the matrix.

2:37

So, in the standard notation, if A is this matrix here, then A subscript ij, \(A_{ij}\), is going to refer to the i, j entry, meaning the entry in the matrix in the ith row and jth column.

2:51

So for example \(A_{11}\) is going to refer to the entry in the 1st row and the 1st column, so that's the first row and the first column, and so \(A_{11}\) is going to be equal to 1402. Another example, \(A_{12}\) is going to refer to the entry in the first row and the second column, and so \(A_{12}\) is going to be equal to 191.

3:20

Here are a couple more quick examples.

3:22

Let's see, A, oh let's say A 3 2, is going to refer to the entry in the 3rd row, and second column,

3:33

right, because that's 3 2, so that's equal to 1437. And finally, \(A_{41}\) is going to refer to this one, right, fourth row, first column, which is equal to 147. And if, hopefully you won't, but if you were to write \(A_{43}\), well, that refers to the fourth row and the third column, and, you know, this matrix has no third column, so this is undefined,

4:06

you know, or you can think of this as an error. There's no such element as \(A_{43}\), so, you know, you shouldn't be referring to \(A_{43}\). So, the matrix gives you a way of letting you quickly organize, index and access lots of data. In case I seem to be tossing out a lot of concepts, a lot of new notation very rapidly, you don't need to memorize all of this, but on the course website where we have posted the lecture notes, we also have all of these definitions written down. So you can always refer back, you know, either to these slides or to the lecture notes if you forget, well, \(A_{41}\), what was that? Which row, which column was that? Don't worry about memorizing everything now. You can always refer back to the written materials on the course website, and use that as a reference. So that's what a matrix is.

Next, let's talk about what is a vector. A vector turns out to be a special case of a matrix. A vector is a matrix that has only 1 column, so if you have an N x 1 matrix, then that's a vector. Remember, right, N is the number of rows, and 1 here is the number of columns, so a matrix with just one column is what we call a vector. So here's an example of a vector, with I guess N equals four elements here.

5:23

so we also call this thing, another term for this is a four-dimensional

5:30

vector, just means that

5:32

this is a vector with four elements, with four numbers in it. And, just as earlier for matrices you saw this notation \(\mathbb{R}^{2 \times 3}\) to refer to 2 by 3 matrices, for this vector we are going to refer to this as a vector in the set \(\mathbb{R}^4\).

5:49

So this \(\mathbb{R}^4\) means the set of four-dimensional vectors.

5:56

Next let's talk about how to refer to the elements of the vector.

6:01

We are going to use the notation \(y_i\) to refer to the ith element of the vector y. So if y is this vector, y subscript i is the ith element. So \(y_1\) is the first element, 460, \(y_2\) is equal to the second element,

6:19

232. There's the first, there's the second. \(y_3\) is equal to 315 and so on, and only \(y_1\) through \(y_4\) are defined, because this is a 4-dimensional vector.

6:32

Also it turns out that there are actually 2 conventions for how to index into a vector and here they are. Sometimes people will use one-indexed and sometimes zero-indexed vectors. So this example on the left is a one-indexed vector, where the elements we write as \(y_1\), \(y_2\), \(y_3\), \(y_4\).

6:53

And this example on the right is an example of a zero-indexed vector, where we start the indexing of the elements from zero.

7:01

So the elements go from \(y_0\) up to \(y_3\). And this is a bit like the arrays of some programming languages

7:09

where the arrays can either be indexed starting from one, so the first element of an array is sometimes \(y_1\), this is sequence notation I guess, and sometimes it's zero-indexed, depending on what programming language you use. So it turns out that in most of math, the one-indexed version is more common. For a lot of machine learning applications, zero-indexed

7:33

vectors give us a more convenient notation.

7:36

So what you should usually do is, unless otherwise specified,

7:40

you should assume we are using one index vectors. In fact, throughout the rest of these videos on linear algebra review, I will be using one index vectors.

7:50

But just be aware that when we are talking about machine learning applications, sometimes I will explicitly say when we need to switch to, when we need to use the zero index

7:59

vectors as well. Finally, by convention, usually when writing matrices and vectors, most people will use upper case to refer to matrices. So we're going to use capital letters like A, B, C, you know, X, to refer to matrices,

8:16

and usually we'll use lowercase, like a, b, x, y,

8:21

to refer to either numbers, or just raw numbers or scalars or to vectors. This isn't always true but this is the more common notation where we use lower case "Y" for referring to vector and we usually use upper case to refer to a matrix.

8:37

So, you now know what are matrices and vectors. Next, we'll talk about some of the things you can do with them.

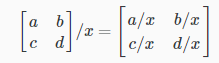

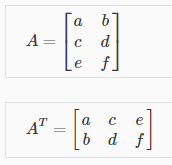

Reading: Matrices and Vectors

Matrices and Vectors

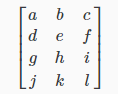

Matrices are 2-dimensional arrays:

The above matrix has four rows and three columns, so it is a 4 x 3 matrix.

A vector is a matrix with one column and many rows:

So vectors are a subset of matrices. The above vector is a 4 x 1 matrix.

Notation and terms:

- \(A_{ij}\) refers to the element in the ith row and jth column of matrix A.

- A vector with 'n' rows is referred to as an 'n'-dimensional vector.

- \(v_i\) refers to the element in the ith row of the vector.

- In general, all our vectors and matrices will be 1-indexed. Note that for some programming languages, the arrays are 0-indexed.

- Matrices are usually denoted by uppercase names while vectors are lowercase.

- "Scalar" means that an object is a single value, not a vector or matrix.

- \(\mathbb{R}\) refers to the set of scalar real numbers.

- \(\mathbb{R}^n\) refers to the set of n-dimensional vectors of real numbers.

Run the cell below to get familiar with the commands in Octave/Matlab. Feel free to create matrices and vectors and try out different things.

% The ; marks the end of a row and starts a new one.

A = [1, 2, 3; 4, 5, 6; 7, 8, 9; 10, 11, 12]

% Initialize a vector

v = [1;2;3]

% Get the dimension of the matrix A where m = rows and n = columns

[m,n] = size(A)

% You could also store it this way

dim_A = size(A)

% Get the dimension of the vector v

dim_v = size(v)

% Now let's index into the 2nd row 3rd column of matrix A

A_23 = A(2,3)
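The indexing examples from the lecture can be tried the same way. In this sketch the entries 1402, 191, 1437 and 147 of the matrix and 460, 232 and 315 of the vector are the values read aloud in the video. The remaining entries are placeholders assumed for illustration, as are the variable names.

```octave
% Entries 1402, 191, 1437 and 147 come from the lecture; the rest are
% placeholder values chosen for illustration.
A = [1402, 191; 1371, 821; 949, 1437; 147, 1448];

A_11 = A(1, 1)   % first row, first column
A_32 = A(3, 2)   % third row, second column
A_41 = A(4, 1)   % fourth row, first column

% A has no third column, so A(4, 3) is an error rather than a value.
out_of_range = false;
try
  A(4, 3);
catch
  out_of_range = true;
end
out_of_range

% Vectors are 1-indexed in Octave/Matlab: y(1) is the first element.
y = [460; 232; 315; 178];   % 178 is a placeholder for the fourth element
y_1 = y(1)
```

Because Octave and Matlab are 1-indexed, the code matches the \(A_{ij}\) and \(v_i\) notation above directly.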

Video: Addition and Scalar Multiplication

In this video we'll talk about matrix addition and subtraction, as well as how to multiply a matrix by a number, also called Scalar Multiplication. Let's start with an example. Given two matrices like these, let's say I want to add them together. How do I do that? And so, what does addition of matrices mean? It turns out that if you want to add two matrices, what you do is you just add up the elements of these matrices one at a time. So, my result of adding two matrices is going to be itself another matrix, and the first element I get just by taking one and four and adding them together, so I get five. The second element I get by taking two and two and adding them, so I get four; three plus zero is three, and so on. I'm going to stop changing colors, I guess. And on the right I get 0.5, ten and two.

0:56

And it turns out you can add only two matrices that are of the same dimensions. So this example is a three by two matrix,

1:07

because this has 3 rows and 2 columns, so it's 3 by 2. This is also a 3 by 2 matrix, and the result of adding these two matrices is a 3 by 2 matrix again. So you can only add matrices of the same dimension, and the result will be another matrix that's of the same dimension as the ones you just added.

1:29

Whereas in contrast, if you were to take these two matrices, so this one is a 3 by 2 matrix, okay, 3 rows, 2 columns. This here is a 2 by 2 matrix. And because these two matrices are not of the same dimension, you know, this is an error, so you cannot add these two matrices and, you know, their sum is not well-defined. So that's matrix addition.

Next, let's talk about multiplying matrices by a scalar number. And scalar is just a, maybe an overly fancy term for, you know, a number or a real number. Alright, this means real number. So let's take the number 3 and multiply it by this matrix. And if you do that, the result is pretty much what you'd expect. You just take the elements of the matrix and multiply them by 3, one at a time. So, you know, one times three is three. Two times three is six, 3 times 3 is 9, and let's see, I'm going to stop changing colors again. Zero times 3 is zero. Three times 5 is 15, and 3 times 1 is three. And so this matrix is the result of multiplying that matrix on the left by 3. And you notice, again, this is a 3 by 2 matrix and the result is a matrix of the same dimension. Both of these are 3 by 2 dimensional matrices. And by the way, you can write multiplication, you know, either way. So, I have three times this matrix. I could also have written this matrix, 1, 0, 2, 5, 3, 1, right, I just copied this matrix over to the right, and multiplied it by three. So whether it's, you know, 3 times the matrix or the matrix times three, it is the same thing, and this thing here in the middle is the result.

You can also take a matrix and divide it by a number. So, it turns out taking this matrix and dividing it by four is actually the same as taking the number one quarter and multiplying it by this matrix, 4, 0, 6, 3. And so you can figure the answer: the result of this product is, one quarter times four is one, one quarter times zero is zero, one quarter times six is, what, three halves, six over four is three halves, and one quarter times three is three quarters. And so that's the result of computing this matrix divided by four.

Finally, for a slightly more complicated example, you can also take these operations and combine them together. So in this calculation, I have three times a vector plus a vector minus another vector divided by three. So let's just make sure we know where these are, right. This multiplication, this is an example of scalar multiplication because I am taking three and multiplying it. And this is, you know, another scalar multiplication. Or more like scalar division, I guess. It really just means one third times this. And so if we evaluate these two operations first, then what we get is this thing is equal to, let's see, so three times that vector is three, twelve, six, plus my vector in the middle, which is 0, 0, 5, minus

4:59

one, zero, two-thirds, right? And again, just to make sure we understand what is going on here, this plus symbol, that is matrix addition, right? And really, since these are vectors, remember, vectors are special cases of matrices, right? You can also call this vector addition. This minus sign here, this is again a matrix subtraction, but because this is an n by 1, really a three by one matrix, this is actually a vector, so we can call this a vector subtraction as well. OK?

And finally, to wrap this up, this therefore gives me a vector whose first element is going to be 3+0-1, so that's 3-1, which is 2. The second element is 12+0-0, which is 12. And the third element of this is, what, 6+5-(2/3), which is 11-(2/3), so that's 10 and one-third, and let me close this square bracket. And so this gives me a 3 by 1 matrix, which is also just called a 3-dimensional vector, which is the outcome of this calculation over here. So that's how you add and subtract matrices and vectors and multiply them by scalars or by real numbers. So far I have only talked about how to multiply matrices and vectors by scalars, by real numbers. In the next video we will talk about a much more interesting step, of taking 2 matrices and multiplying them together.

Reading: Addition and Scalar Multiplication

Addition and Scalar Multiplication

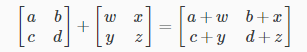

Addition and subtraction are element-wise, so you simply add or subtract each corresponding element:

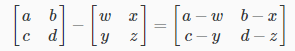

Subtracting Matrices:

To add or subtract two matrices, their dimensions must be the same.

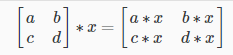

In scalar multiplication, we simply multiply every element by the scalar value:

In scalar division, we simply divide every element by the scalar value:

Experiment below with the Octave/Matlab commands for matrix addition and scalar multiplication. Feel free to try out different commands. Try to write out your answers for each command before running the cell below.

% Initialize matrix A and B

A = [1, 2, 4; 5, 3, 2]

B = [1, 3, 4; 1, 1, 1]

% Initialize constant s

s = 2

% What happens if we have a Matrix + scalar?

add_As = A + s
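The worked examples from the video can also be checked directly. The sketch below reuses the numbers read out in the lecture. The variable names and the 2 x 2 matrix used to trigger the dimension error are assumptions made for illustration.

```octave
% Matrices from the lecture's addition example (both 3 x 2).
A = [1, 0; 2, 5; 3, 1];
B = [4, 0.5; 2, 5; 0, 1];
sum_AB = A + B            % element-wise sum

% Scalar multiplication and division act on every element.
triple_A = 3 * A
quarter_C = [4, 0; 6, 3] / 4

% Combined example from the lecture: 3*u + v - w/3
u = [1; 4; 2];  v = [0; 0; 5];  w = [3; 0; 2];
combined = 3 * u + v - w / 3

% Adding a 3 x 2 matrix to a 2 x 2 matrix is an error.
% (The 2 x 2 values are placeholders.)
mismatch = false;
try
  A + [1, 2; 3, 4];
catch
  mismatch = true;
end
mismatch
```

As in the reading, try writing out each result by hand before running the commands.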

Video: Matrix Vector Multiplication

In this video, I'd like to start talking about how to multiply together two matrices. We'll start with a special case of that, matrix vector multiplication, multiplying a matrix together with a vector. Let's start with an example. Here is a matrix, and here is a vector, and let's say we want to multiply together this matrix with this vector, what's the result? Let me just work through this example and then we can step back and look at just what the steps were. It turns out the result of this multiplication process is going to be, itself, a vector. And I'm just going to work through this first and later we'll come back and see just what I did here.

To get the first element of this vector I am going to take these two numbers and multiply them with the first row of the matrix and add up the corresponding numbers. Take one multiplied by one, and take three and multiply it by five, and that's, what, one plus fifteen, so that gives me sixteen. I'm going to write sixteen here. Then for the second element, I am going to take the second row and multiply it by this vector, so I have four times one, plus zero times five, which is equal to four, so you'll have four there. And finally for the last one I have the row two, one times the vector one, five, so two times one, plus one times 5, which is equal to 7, and so I get a 7 over there. It turns out that this multiplies a 3x2 matrix by a 2x1 matrix, which is also just a two-dimensional vector. The result of this is going to be a 3x1 matrix, in other words, a three-dimensional vector.

So I realize that I did that pretty quickly, and you're probably not sure that you can repeat this process yourself, but let's look in more detail at what just happened and what this process of multiplying a matrix by a vector looks like. Here are the details of how to multiply a matrix by a vector. Let's say I have a matrix A and want to multiply it by a vector x.
The result is going to be some vector y. So the matrix A is an m by n dimensional matrix, so m rows and n columns, and we are going to multiply that by an n by 1 matrix, in other words an n-dimensional vector. It turns out this "n" here has to match this "n" here. In other words, the number of columns in this matrix has to match the number of rows here; it has to match the dimension of this vector. And the result of this product is going to be an m-dimensional vector y, where m is equal to the number of rows in this matrix A.

So how do you actually compute this vector y? Well, it turns out that to get the ith element of y, you multiply A's ith row with the elements of the vector x and add them up. So here's what I mean. In order to get the first element of y, that first number, whatever that turns out to be, we're gonna take the first row of the matrix A and multiply its elements one at a time with the elements of this vector x. So I take this first number and multiply it by this first number. Then take the second number and multiply it by this second number. Take this third number, whatever that is, multiply it by the third number, and so on until you get to the end. And I'm gonna add up the results of these products, and the result of carrying that out is going to give us this first element of y.

Then when we want to get the second element of y, let's say this element, the way we do that is we take the second row of A and we repeat the whole thing. So we take the second row of A, multiply it element-wise with the elements of x, and add up the results of the products, and that gives me the second element of y. And you keep going: we take the third row of A, multiply it element-wise with the vector x, sum up the results, and then I get the third element, and so on, until I get down to the last row, like so, okay? So that's the procedure.
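The row-by-row procedure described above can be sketched in a few lines of Python. This is only an illustrative sketch, not code from the course: the function name `mat_vec` and the list-of-rows representation are assumptions, and the numbers are the ones read out in the first example of this video.

```python
def mat_vec(A, x):
    """Multiply an m x n matrix A (a list of m rows) by an n-dimensional vector x."""
    n = len(x)
    for row in A:
        # The number of columns of A has to match the dimension of x.
        if len(row) != n:
            raise ValueError("columns of A must match the dimension of x")
    # Element i of the result: multiply row i of A element-wise with x, then add up.
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


# First example from the video: a 3 x 2 matrix times a 2-dimensional vector.
A = [[1, 3],
     [4, 0],
     [2, 1]]
x = [1, 5]

y = mat_vec(A, x)
print(y)  # [16, 4, 7]
```

The result has one element per row of the matrix, which is why a 3x2 matrix times a 2-dimensional vector gives a 3-dimensional vector.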
Let's do one more example. Here's the example. So let's look at the dimensions. Here, this is a three by four dimensional matrix. This is a four-dimensional vector, or a 4 x 1 matrix, and so the result of this product is going to be a three-dimensional vector. So let me write the vector with room for three elements.

Let's carry out the products. So for the first element, I'm going to take these four numbers and multiply them with the vector x. So I have 1 x 1, plus 2 x 3, plus 1 x 2, plus 5 x 1, which is equal to 1 + 6 + 2 + 5, which gives me 14. And then for the second element, I'm going to take this row now and multiply it with this vector: 0 x 1 plus 3 x 3 plus 0 x 2 plus 4 x 1, which is equal to, let's see, that's 9 + 4, which is 13. And finally, for the last element, I'm going to take this last row, so I have minus one times one, plus minus two times three, plus zero times two, plus zero times one, and so that's going to be minus one minus six, which is going to make this negative seven. Okay? So my final answer is this vector, just to write it out without the colors: fourteen, thirteen, negative seven.
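Written out as a single equation, the calculation in this second example looks as follows. The matrix and vector entries are the ones read out in the transcript; the layout is just one way of presenting them.

$$
\begin{bmatrix} 1 & 2 & 1 & 5 \\ 0 & 3 & 0 & 4 \\ -1 & -2 & 0 & 0 \end{bmatrix}
\begin{bmatrix} 1 \\ 3 \\ 2 \\ 1 \end{bmatrix}
=
\begin{bmatrix}
1 \cdot 1 + 2 \cdot 3 + 1 \cdot 2 + 5 \cdot 1 \\
0 \cdot 1 + 3 \cdot 3 + 0 \cdot 2 + 4 \cdot 1 \\
(-1) \cdot 1 + (-2) \cdot 3 + 0 \cdot 2 + 0 \cdot 1
\end{bmatrix}
=
\begin{bmatrix} 14 \\ 13 \\ -7 \end{bmatrix}
$$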

7:01

And as promised, the result here is a three by one matrix. So that's how you multiply a matrix and a vector. I know that a lot just happened on this slide, so if you're not quite sure where all these numbers went, feel free to pause the video and take a slow, careful look at this big calculation that we just did, and try to make sure that you understand the steps of what just happened to get us these numbers: fourteen, thirteen, and negative seven.

Finally, let me show you a neat trick. Let's say we have a set of four houses, so 4 houses with 4 sizes like these. And let's say I have a hypothesis for predicting the price of a house, and let's say I want to compute h of x for each of my 4 houses here. It turns out there's a neat way of applying this hypothesis to all of my houses at the same time: posing it as a matrix-vector multiplication.

So, here's how I'm going to do it. I am going to construct a matrix as follows. The first column of my matrix is going to be 1, 1, 1, 1, and in the second column I'm going to write down the sizes of my four houses. And I'm going to construct a vector as well. My vector is going to be this vector of two elements, minus 40 and 0.25. Those are these two coefficients, theta 0 and theta 1. And what I am going to do is take the matrix and that vector and multiply them together; that "times" is the multiplication symbol.

So what do I get? Well, this is a four by two matrix. This is a two by one matrix. So the outcome is going to be a 4 by 1 matrix, or really a four-dimensional vector, so let me write it as a vector with room for four real numbers here. Now, this first element of the result, the way I am going to get that is, I am going to take this row and multiply it by the vector. And so this is going to be -40 x 1 + 0.25 x 2104.
By the way, on the earlier slides I was writing 1 x -40 and 2104 x 0.25, but the order doesn't matter, right? -40 x 1 is the same as 1 x -40. And this first element, of course, is h applied to 2104. So it's really the predicted price of my first house. Well, how about the second element? I hope you can see where I am going to get the second element, right? I'm gonna take this row and multiply it by my vector. And so that's gonna be -40 x 1 + 0.25 x 1416. And so this is going to be h of 1416. Right?

10:25

And so on for the third and the fourth elements of this 4 x 1 vector. And just to be clear, this thing here that I just drew the green box around, that's a real number, OK? That's a single real number, and this thing here that I drew the magenta box around, that's a real number, right? And so this thing on the right overall is a 4 by 1 dimensional matrix, a 4-dimensional vector.

And the neat thing about this is that when you're actually implementing this in software, so when you have four houses and you want to use your hypothesis to predict the price y of all of these four houses, what this means is that you can write this in one line of code. When we talk about Octave and programming languages later, you'll actually write this in one line of code. You write prediction equals my data matrix times parameters, right? Where data matrix is this thing here, and parameters is this thing here, and this "times" is a matrix-vector multiplication. And if you just implement this one line of code, assuming you have an appropriate library to do matrix-vector multiplication, then this variable prediction (sorry for my bad handwriting) becomes this 4 by 1 dimensional vector on the right, which gives you all the predicted prices.

And your alternative to doing this as a matrix-vector multiplication would be to write something like, you know, for i equals 1 to 4, right? And if you have, say, a thousand houses, it would be for i equals 1 to a thousand or whatever. And then you have to write prediction of i equals, and then do a bunch more work over there.

It turns out that when you have a large number of houses, if you're trying to predict the prices of not just four but maybe a thousand houses, then when you implement this on a computer, in any of the various languages (this is true not only for Octave, but for C++, Java, Python, and other high-level languages as well), writing code in the style on the left not only simplifies the code, because now you're just writing one line of code rather than a for loop with a bunch of things inside, but, for subtle reasons that we will see later, it also turns out to be much more computationally efficient to make predictions for all of your houses the way on the left than the way on the right, where you write your own for loop. I'll say more about this later when we talk about vectorization, but by posing a prediction this way, you get not only a simpler piece of code, but a more efficient one.

So, that's it for matrix-vector multiplication, and we'll make good use of these sorts of operations as we develop linear regression and other models further. But in the next video, we're going to take this and generalize it to the case of matrix-matrix multiplication.
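To make the two styles concrete, here is a small Python sketch of the house-price trick. Several things in it are assumptions for illustration: the variable names, the helper `mat_vec`, and the last two house sizes (the transcript only names 2104 and 1416). The coefficients -40 and 0.25 are the ones from the video. Note that this pure-Python helper only shows the structure of the calculation; the efficiency benefit mentioned above would come from an optimized linear algebra library, where the product is typically a single expression.

```python
def mat_vec(A, x):
    """Multiply a matrix A (list of rows) by a vector x, one row at a time."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


# Hypothesis from the video: h(x) = -40 + 0.25 * x
parameters = [-40, 0.25]

# 2104 and 1416 appear in the transcript; 1534 and 852 are assumed here.
sizes = [2104, 1416, 1534, 852]

# Data matrix: a column of ones next to the column of house sizes (4 x 2).
data_matrix = [[1, size] for size in sizes]

# Style "on the left": one matrix-vector multiplication.
prediction = mat_vec(data_matrix, parameters)

# Style "on the right": an explicit loop over the houses.
prediction_loop = []
for size in sizes:
    prediction_loop.append(parameters[0] + parameters[1] * size)

print(prediction)       # [486.0, 314.0, 343.5, 173.0]
print(prediction_loop)  # [486.0, 314.0, 343.5, 173.0]
```

Both styles give the same four predicted prices; the first element, 486.0, is h applied to 2104.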

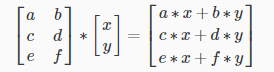

Reading: Matrix Vector Multiplication

Matrix-Vector Multiplication

We map the column of the vector onto each row of the matrix, multiplying each element and summing the result.

The result is a vector. The number of columns of the matrix must equal the number of rows of the vector.

An m x n matrix multiplied by an n x 1 vector results in an m x 1 vector.

Below is an example of a matrix-vector multiplication. Make sure you understand how the multiplication works. Feel free to try different matrix-vector multiplications.

% Initialize matrix A

A = [1, 2, 3; 4, 5, 6; 7, 8, 9]

% Initialize vector v

v = [1; 1; 1]

% Multiply A * v

Av = A * v
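As a check on the example above, the product can also be worked out by hand with the row-by-row rule. The equation below uses the same A and v as the code; since v is all ones, each element of the result is just the sum of the corresponding row of A.

$$
Av =
\begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{bmatrix}
\begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}
=
\begin{bmatrix} 1 + 2 + 3 \\ 4 + 5 + 6 \\ 7 + 8 + 9 \end{bmatrix}
=
\begin{bmatrix} 6 \\ 15 \\ 24 \end{bmatrix}
$$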

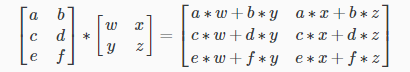

Video: Matrix Matrix Multiplication

In this video we'll talk about matrix-matrix multiplication, or how to multiply two matrices together. When we talk about the method in linear regression for solving for the parameters theta 0 and theta 1 all in one shot, without needing an iterative algorithm like gradient descent, it turns out that matrix-matrix multiplication is one of the key steps that you need to know.

0:24

So let's, as usual, start with an example.

0:28

Let's say I have two matrices and I want to multiply them together. Let me again just run through this example and then I'll tell you a little bit of what happened. So the first thing I'm gonna do is I'm going to pull out the first column of this matrix on the right. And I'm going to take this matrix on the left and multiply it by a vector that is just this first column.

0:55

And it turns out, if I do that, I'm going to get the vector 11, 9. So this is the same matrix-vector multiplication as you saw in the last video.

1:06

I worked this out in advance, so I know it's 11, 9. And then the second thing I want to do is I'm going to pull out the second column of this matrix on the right. And I'm then going to take this matrix on the left, so take that matrix, and multiply it by that second column on the right. So again, this is a matrix-vector multiplication step which you saw from the previous video. And it turns out that if you multiply this matrix and this vector you get 10, 14. And by the way, if you want to practice your matrix-vector multiplication, feel free to pause the video and check this product yourself.

1:43

Then I'm just gonna take these two results and put them together, and that'll be my answer. So it turns out the outcome of this product is gonna be a two by two matrix. And the way I'm gonna fill in this matrix is just by taking my elements 11, 9, and plugging them into the first column, and taking 10, 14 and plugging them into the second column, okay? So that was the mechanics of how to multiply a matrix by another matrix. You basically look at the second matrix one column at a time and you assemble the answers. And again, we'll step through this much more carefully in a second. But I just want to point out also that in this first example, the first matrix is a 2x3 matrix. We multiply that by a 3x2 matrix, and the outcome of this product turns out to be a 2x2 matrix. And again, we'll see in a second why this was the case.

All right, that was the mechanics of the calculation. Let's actually look at the details and look at what exactly happened. Here are the details. I have a matrix A and I want to multiply that with a matrix B, and the result will be some new matrix C.

2:55

It turns out you can only multiply together matrices whose dimensions match. So A is an m x n matrix, so m rows, n columns. And we multiply it with an n x o matrix. And it turns out this n here must match this n here. So the number of columns in the first matrix must equal the number of rows in the second matrix. And the result of this product will be an m x o matrix, like the matrix C here. And in the previous video, everything we did corresponded to the special case of o being equal to 1. That was the case of B being a vector. But now we're gonna deal with the case of values of o larger than 1. So here's how you multiply together the two matrices. What I'm going to do is I'm going to take the first column of B and treat that as a vector, and multiply the matrix A by the first column of B. And the result of that will be an m by 1 vector, and I'm gonna put that over here.

4:05

Then I'm gonna take the second column of B, right? So this is another n by 1 vector. So this column here, this is n by 1. It's an n-dimensional vector. I'm gonna multiply this matrix with this n by 1 vector. The result will be an m-dimensional vector, which we'll put there, and so on.

4:29

And then I'm gonna take the third column, multiply it by this matrix. I get an m-dimensional vector. And so on, until you get to the last column. The matrix times the last column gives you the last column of C.

4:46

Just to say that again, the ith column of the matrix C is obtained by taking the matrix A and multiplying the matrix A with the ith column of the matrix B for the values of i = 1, 2, up through o. So this is just a summary of what we did up there in order to compute the matrix C.
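The column-by-column summary above translates fairly directly into code. The following Python sketch is one possible way to write it, not the course's implementation: the function names and the list-of-rows representation are assumptions. The transcript does not list the entries of the two example matrices, so the A and B below are hypothetical ones with the stated 2x3 and 3x2 shapes, chosen so that the columns of the result come out as 11, 9 and 10, 14 as in the video.

```python
def mat_vec(A, x):
    """Multiply a matrix A (list of rows) by a vector x, one row at a time."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def mat_mul(A, B):
    """Multiply an m x n matrix A by an n x o matrix B, one column of B at a time."""
    n = len(B)
    # The number of columns of A must equal the number of rows of B.
    if any(len(row) != n for row in A):
        raise ValueError("columns of A must equal rows of B")
    o = len(B[0])
    # The ith column of C is A times the ith column of B, for i = 1, ..., o.
    columns = [mat_vec(A, [row[i] for row in B]) for i in range(o)]
    # Put the columns side by side: transpose the list of columns into a list of rows.
    return [list(row) for row in zip(*columns)]


# Hypothetical 2 x 3 and 3 x 2 matrices consistent with the results in the video.
A = [[1, 3, 2],
     [4, 0, 1]]
B = [[1, 3],
     [0, 1],
     [5, 2]]

C = mat_mul(A, B)
print(C)  # [[11, 10], [9, 14]]
```

Each column of C is an ordinary matrix-vector product, so the special case o = 1 reduces to the procedure from the previous video.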

5:11