Win10+VirtualBox+Openstack Mitaka

首先VirtualBox安装的话,没有什么可演示的,去官网(https://www.virtualbox.org/wiki/Downloads)下载,或者可以去(https://www.virtualbox.org/wiki/Download_Old_Builds)下载旧版本。

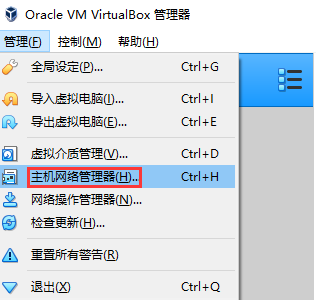

接下来设置virtualbox的网络

这里需要注意的是IP地址栏中的信息,必须全部删除然后切换为英文输入法,再次输入。

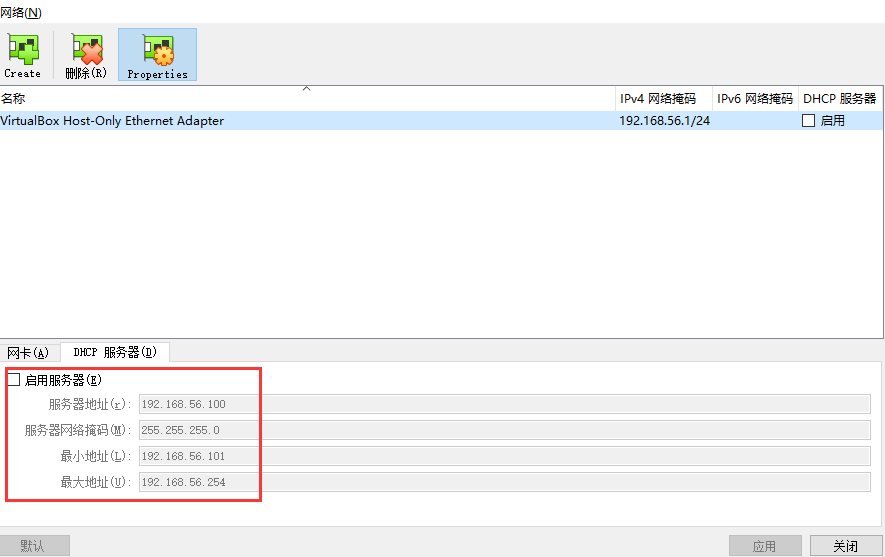

接下来配置Host-Only

以下是确认没有启用DHCP

接下来就是安装ubuntu了,

点击新建虚拟机,选择linux,发行版本选择ubuntu 64 bit

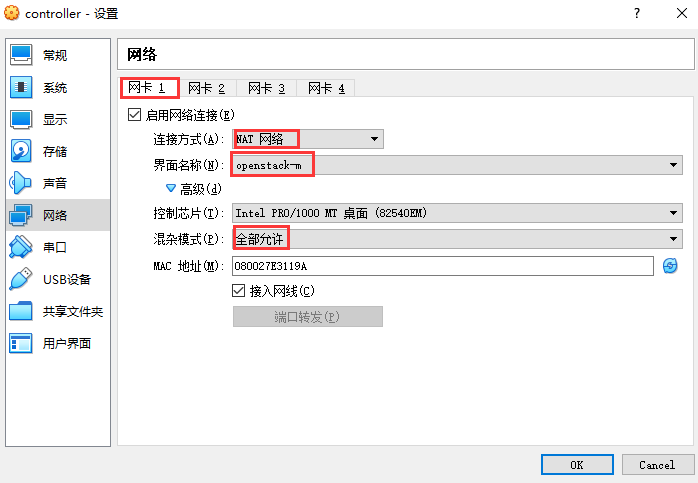

这里安装过程不再演示,但是在配置网络的时候要安装如下所示配置

网卡2的配置如下

接下来就是添加存储,选择之前下载好的ubuntu-14.04.5-server-amd64.iso镜像文件,下载地址(http://mirrors.aliyun.com/ubuntu-releases/14.04/ubuntu-14.04.5-server-amd64.iso)

点击“OK”之后,开启虚拟机即可开始安装

语言:English(回车)

Ubuntu: Install Ubuntu Server(回车)

接下来直接敲回车即可,直到:

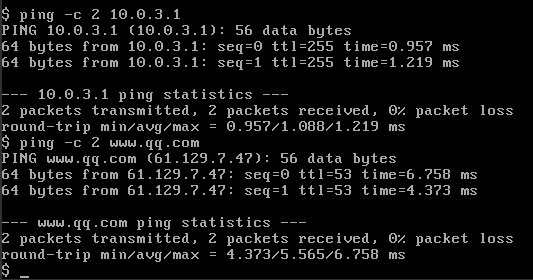

由于需要使用Nat访问外网,所以这里选择eth0.回车之后,直接选择‘cancel’,回车会告警,忽略这个告警直接点击“continue”,会提示让配置网络,选择手动配置,回车:

IP address:10.0.3.10

Netmask: 255.255.255.0

Gateway:10.0.30.1

Name server addresses: 114.114.114.114

Hostname: controller

Domain name: 不设置,直接回车即可,

Full name for the new user: openstack

Username for your account: openstack

Choose a password for the new user: 123456

Re-enter password to verify: 123456

Use weak password? 选择“yes”,回车

Encrypt your home directory? 选择“No”,回车

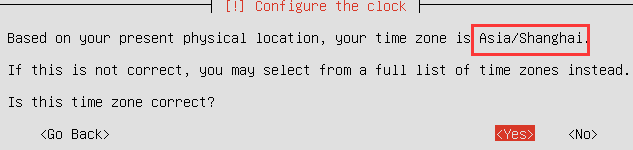

接下来需要确认当前的时区是上海,如果是上海,选择“yes”进行下一步;不是上海选择“No”,然后在列表中选择上海。

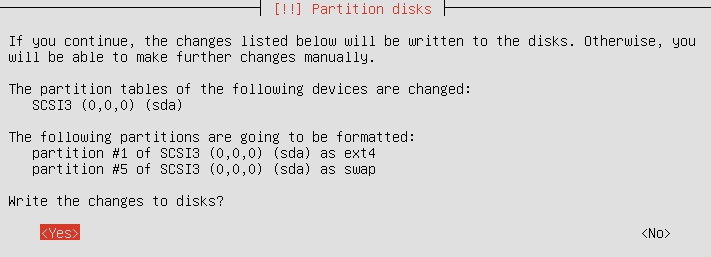

在Partition disks选项中,选择“Guided - user entire disk",然后回车,回车,出现如下所示,选择“Yes”,回车

Configure the package manager: 不设置HTTP proxy,直接选择continue,回车

Configuring apt两步直接回车取消掉即可

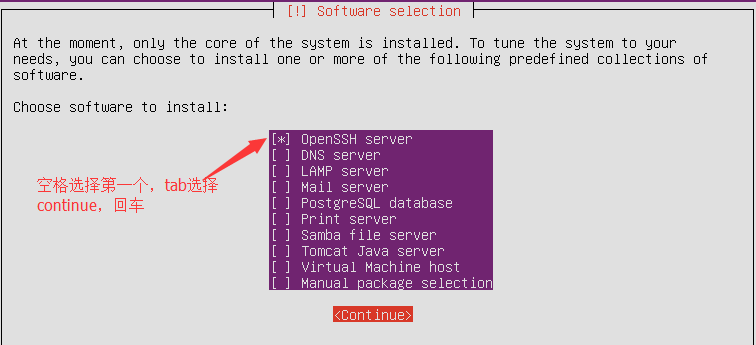

Configuring taskel: No automatic updates, 回车之后选择安装OpenSSH server

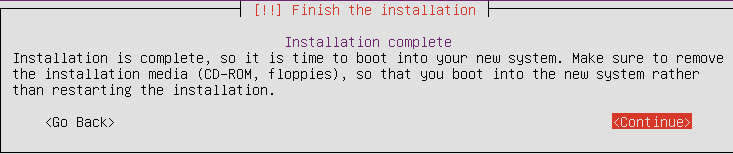

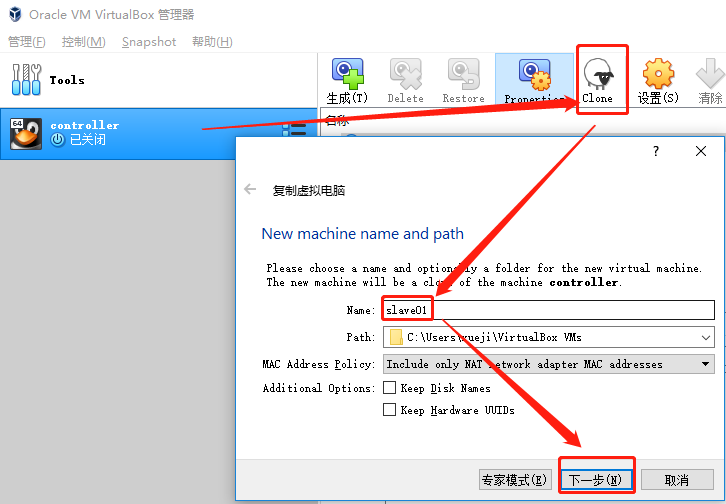

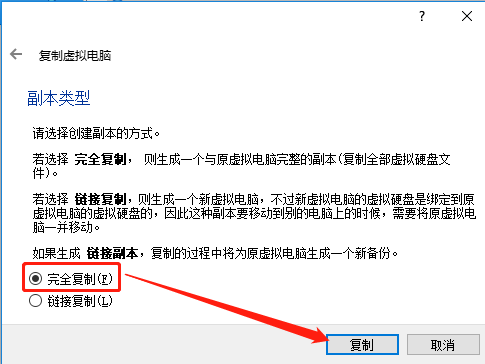

安装已完成,系统会自动重启,重启完成,关机,然后进行克隆操作:

选择“完全复制”。

接下来开始配置系统环境,选择刚刚创建好的虚拟机,点击启动,然后找到这个网址(https://github.com/JiYou/openstack-m/blob/master/os/interfaces)这是网卡配置文件,接下来开始查看并编辑网卡配置文件interfaces

- openstack@controller:~$ cat /etc/network/interfaces

- # This file describes the network interfaces available on your system

- # and how to activate them. For more information, see interfaces().

- # The loopback network interface

- auto lo

- iface lo inet loopback

- # The primary network interface

- auto eth0

- iface eth0 inet static

- address 10.0.3.10

- netmask 255.255.255.0

- network 10.0.3.0

- broadcast 10.0.3.255

- gateway 10.0.3.1

- # dns-* options are implemented by the resolvconf package, if installed

- dns-nameservers 114.114.114.114

- auto eth1

- iface eth1 inet static

- address 192.168.56.10

- netmask 255.255.255.0

- gateway 192.168.56.1

- dns-nameservers 114.114.114.114

重启系统生效,然后使用xshell、putty或其他远程管理工具,我这里使用的是Gitbash,连接测试

- xueji@xueji MINGW64 ~

- $ ssh openstack@192.168.56.10

- The authenticity of host '192.168.56.10 (192.168.56.10)' can't be established.

- ECDSA key fingerprint is SHA256:DvbqAHwl6bcmX3FcvaJZ1REpRR8Oup89ST+a8WFBY7Y.

- Are you sure you want to continue connecting (yes/no)? yes

- Warning: Permanently added '192.168.56.10' (ECDSA) to the list of known hosts.

- openstack@192.168.56.10's password:

- Welcome to Ubuntu 14.04. LTS (GNU/Linux 4.4.--generic x86_64)

- * Documentation: https://help.ubuntu.com/

- System information as of Tue Jan :: CST

- System load: 0.11 Processes:

- Usage of /: 0.6% of .78GB Users logged in:

- Memory usage: % IP address for eth0: 10.0.3.10

- Swap usage: % IP address for eth1: 192.168.56.10

- Graph this data and manage this system at:

- https://landscape.canonical.com/

- packages can be updated.

- updates are security updates.

- New release '16.04.5 LTS' available.

- Run 'do-release-upgrade' to upgrade to it.

- Last login: Tue Jan ::

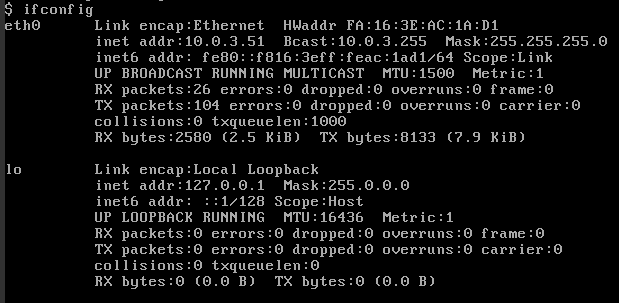

- openstack@controller:~$ ifconfig

登录成功,

接下来开始准备openstack的包

- openstack@controller:~$ sudo -s

- [sudo] password for openstack:

- root@controller:~# apt-get update

- root@controller:~# apt-get install -y software-properties-common

- root@controller:~# add-apt-repository cloud-archive:mitaka

- Ubuntu Cloud Archive for OpenStack Mitaka

- More info: https://wiki.ubuntu.com/ServerTeam/CloudArchive

- Press [ENTER] to continue or ctrl-c to cancel adding it

- # 回车

- Reading package lists...

- Building dependency tree...

- Reading state information...

- The following NEW packages will be installed:

- ubuntu-cloud-keyring

- upgraded, newly installed, to remove and not upgraded.

- Need to get , B of archives.

- After this operation, 34.8 kB of additional disk space will be used.

- Get: http://us.archive.ubuntu.com/ubuntu/ trusty/universe ubuntu-cloud-keyring all 2012.08.14 [5,086 B]

- Fetched , B in 0s (11.0 kB/s)

- Selecting previously unselected package ubuntu-cloud-keyring.

- (Reading database ... files and directories currently installed.)

- Preparing to unpack .../ubuntu-cloud-keyring_2012..14_all.deb ...

- Unpacking ubuntu-cloud-keyring (2012.08.) ...

- Setting up ubuntu-cloud-keyring (2012.08.) ...

- Importing ubuntu-cloud.archive.canonical.com keyring

- OK

- Processing ubuntu-cloud.archive.canonical.com removal keyring

- gpg: /etc/apt/trustdb.gpg: trustdb created

- OK

- root@controller:~# apt-get update && apt-get dist-upgrade

- root@controller:~# apt-get install -y python-openstackclient

安装NTP、MySQL

- root@controller:~# hostname -I

- 10.0.3.10 192.168.56.10

- root@controller:~# tail -n - /etc/hosts

- 10.0.3.10 controller

- 192.168.56.10 controller

- root@controller:~# vim /etc/chrony/chrony.conf

- # 注释掉以下四行,接着在下面添加server controller iburst

- #server .debian.pool.ntp.org offline minpoll

- #server .debian.pool.ntp.org offline minpoll

- #server .debian.pool.ntp.org offline minpoll

- #server .debian.pool.ntp.org offline minpoll

- server controller iburst

- root@controller:~# chronyc sources

- Number of sources =

- MS Name/IP address Stratum Poll Reach LastRx Last sample

- ===============================================================================

- ^? controller 10y +0ns[ +0ns] +/- 0ns

- 安装mysql

- root@controller:~# apt-get install -y mariadb-server python-pymysql

- 在弹出的mysql数据库密码输入框中输入123456

- root@controller:~# cd /etc/mysql/

- root@controller:/etc/mysql# ls

- conf.d debian.cnf debian-start my.cnf

- root@controller:/etc/mysql# cp my.cnf{,.bak}

- root@controller:/etc/mysql# vim my.cnf

- [mysqld] #该行下面添加如下四行内容

- default-storage-engine = innodb

- innodb_file_per_table

- max_connections =

- collation-server = utf8_general_ci

- character-set-server = utf8

- bind-address = 0.0.0.0 #原值是127.0.0.

- 重启mysql

- root@controller:/etc/mysql# service mariadb restart

- mariadb: unrecognized service

- root@controller:/etc/mysql# service mysql restart

- * Stopping MariaDB database server mysqld [ OK ]

- * Starting MariaDB database server mysqld [ OK ]

- * Checking for corrupt, not cleanly closed and upgrade needing tables.

- 安全初始化

- root@controller:/etc/mysql# mysql_secure_installation

- /usr/bin/mysql_secure_installation: : /usr/bin/mysql_secure_installation: find_mysql_client: not found

- NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB

- SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

- In order to log into MariaDB to secure it, we'll need the current

- password for the root user. If you've just installed MariaDB, and

- you haven't set the root password yet, the password will be blank,

- so you should just press enter here.

- Enter current password for root (enter for none):

- OK, successfully used password, moving on...

- Setting the root password ensures that nobody can log into the MariaDB

- root user without the proper authorisation.

- You already have a root password set, so you can safely answer 'n'.

- Change the root password? [Y/n] n

- ... skipping.

- By default, a MariaDB installation has an anonymous user, allowing anyone

- to log into MariaDB without having to have a user account created for

- them. This is intended only for testing, and to make the installation

- go a bit smoother. You should remove them before moving into a

- production environment.

- Remove anonymous users? [Y/n] n

- ... skipping.

- Normally, root should only be allowed to connect from 'localhost'. This

- ensures that someone cannot guess at the root password from the network.

- Disallow root login remotely? [Y/n] n

- ... skipping.

- By default, MariaDB comes with a database named 'test' that anyone can

- access. This is also intended only for testing, and should be removed

- before moving into a production environment.

- Remove test database and access to it? [Y/n] n

- ... skipping.

- Reloading the privilege tables will ensure that all changes made so far

- will take effect immediately.

- Reload privilege tables now? [Y/n] y

- ... Success!

- Cleaning up...

- All done! If you've completed all of the above steps, your MariaDB

- installation should now be secure.

- Thanks for using MariaDB!

- 测试连接

- root@controller:/etc/mysql# mysql -uroot -p123456

- Welcome to the MariaDB monitor. Commands end with ; or \g.

- Your MariaDB connection id is

- Server version: 5.5.-MariaDB-1ubuntu0.14.04. (Ubuntu)

- Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

- Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

- MariaDB [(none)]> show databases;

- +--------------------+

- | Database |

- +--------------------+

- | information_schema |

- | mysql |

- | performance_schema |

- +--------------------+

- rows in set (0.00 sec)

- MariaDB [(none)]> \q

- Bye

- root@controller:/etc/mysql# mysql -uroot -p123456 -h10.0.3.

- Welcome to the MariaDB monitor. Commands end with ; or \g.

- Your MariaDB connection id is

- Server version: 5.5.-MariaDB-1ubuntu0.14.04. (Ubuntu)

- Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

- Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

- MariaDB [(none)]> show databases;

- +--------------------+

- | Database |

- +--------------------+

- | information_schema |

- | mysql |

- | performance_schema |

- +--------------------+

- rows in set (0.00 sec)

- MariaDB [(none)]> \q

- Bye

- root@controller:/etc/mysql# mysql -uroot -p123456 -h192.168.56.

- Welcome to the MariaDB monitor. Commands end with ; or \g.

- Your MariaDB connection id is

- Server version: 5.5.-MariaDB-1ubuntu0.14.04. (Ubuntu)

- Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

- Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

- MariaDB [(none)]> show databases;

- +--------------------+

- | Database |

- +--------------------+

- | information_schema |

- | mysql |

- | performance_schema |

- +--------------------+

- rows in set (0.00 sec)

- MariaDB [(none)]> \q

- Bye

- root@controller:/etc/mysql# mysql -uroot -p123456 -h127.0.0.

- Welcome to the MariaDB monitor. Commands end with ; or \g.

- Your MariaDB connection id is

- Server version: 5.5.-MariaDB-1ubuntu0.14.04. (Ubuntu)

- Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

- Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

- MariaDB [(none)]> show databases;

- +--------------------+

- | Database |

- +--------------------+

- | information_schema |

- | mysql |

- | performance_schema |

- +--------------------+

- rows in set (0.00 sec)

- MariaDB [(none)]> \q

- Bye

安装mongodb

- root@controller:~# apt-get install -y mongodb-server mongodb-clients python-pymongo

- root@controller:~# cp /etc/mongodb.conf{,.bak}

- root@controller:~# vim /etc/mongodb.conf

- bind_ip = 0.0.0.0 #原值127.0.0.

- smallfiles = true #添加此行内容

- root@controller:~# service mongodb stop

- mongodb stop/waiting

- root@controller:~# ls /var/lib/mongodb/journal/

- # 如果这个目录下有prealloc开头的文件,全部删除

- root@controller:~# service mongodb start

- mongodb start/running, process

安装rabbitmq

- root@controller:~# apt-get install -y rabbitmq-server

- 添加openstack用户

- root@controller:~# rabbitmqctl add_user openstack

- Creating user "openstack" ...

- 赋予“openstack”用户读写权限

- root@controller:~# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

- Setting permissions for user "openstack" in vhost "/" ...

安装memecached

- root@controller:~# apt-get install -y memcached python-memcache

- root@controller:~# cp /etc/memcached.conf{,.bak}

- root@controller:~# vim /etc/memcached.conf

- -l 0.0.0.0 #原值127.0.0.

- 重启memcache

- root@controller:~# service memcached restart

- Restarting memcached: memcached.

- root@controller:~# service memcached status

- * memcached is running

- root@controller:~# ps aux | grep memcached

- memcache 0.0 0.0 ? Sl : : /usr/bin/memcached -m -p -u memcache -l 0.0.0.0

- root 0.0 0.0 pts/ S+ : : grep --color=auto memcached

开始安装keystone

- root@controller:~# mysql -uroot -p123456

- Welcome to the MariaDB monitor. Commands end with ; or \g.

- Your MariaDB connection id is

- Server version: 5.5.-MariaDB-1ubuntu0.14.04. (Ubuntu)

- Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

- Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

- MariaDB [(none)]> create database keystone;

- Query OK, row affected (0.00 sec)

- MariaDB [(none)]> grant all privileges on keystone.* to 'keystone'@'localhost' identified by '';

- Query OK, rows affected (0.00 sec)

- MariaDB [(none)]> grant all privileges on keystone.* to 'keystone'@'%' identified by '';

- Query OK, rows affected (0.00 sec)

- MariaDB [(none)]> \q

- Bye

- root@controller:~# mysql -ukeystone -p123456 -h 127.0.0.1

- Welcome to the MariaDB monitor. Commands end with ; or \g.

- Your MariaDB connection id is

- Server version: 5.5.-MariaDB-1ubuntu0.14.04. (Ubuntu)

- Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

- Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

- MariaDB [(none)]> show databases;

- +--------------------+

- | Database |

- +--------------------+

- | information_schema |

- | keystone |

- +--------------------+

- rows in set (0.00 sec)

- MariaDB [(none)]> \q

- Bye

- root@controller:~# mysql -ukeystone -p123456 -h 10.0.3.10

- Welcome to the MariaDB monitor. Commands end with ; or \g.

- Your MariaDB connection id is

- Server version: 5.5.-MariaDB-1ubuntu0.14.04. (Ubuntu)

- Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

- Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

- MariaDB [(none)]> show databases;

- +--------------------+

- | Database |

- +--------------------+

- | information_schema |

- | keystone |

- +--------------------+

- rows in set (0.00 sec)

- MariaDB [(none)]> \q

- Bye

- root@controller:~# mysql -ukeystone -p123456 -h 192.168.56.10

- Welcome to the MariaDB monitor. Commands end with ; or \g.

- Your MariaDB connection id is

- Server version: 5.5.-MariaDB-1ubuntu0.14.04. (Ubuntu)

- Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

- Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

- MariaDB [(none)]> show databases;

- +--------------------+

- | Database |

- +--------------------+

- | information_schema |

- | keystone |

- +--------------------+

- rows in set (0.00 sec)

- MariaDB [(none)]> \q

- Bye

- # 连接都没问题

- 接着安装keystone软件包

- root@controller:~# echo "manual" > /etc/init/keystone.override

- root@controller:~# apt-get install keystone apache2 libapache2-mod-wsgi

- 配置keystone.conf

- root@controller:~# cp /etc/keystone/keystone.conf{,.bak}

- root@controller:~# vim /etc/keystone/keystone.conf

- admin_token =

- connection = mysql+pymysql://keystone:123456@controller/keystone

- provider = fernet

- # 同步数据库

- root@controller:~# su -s /bin/sh -c "keystone-manage db_sync" keystone

- 初始化fernet-keys

- root@controller:~# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

- -- ::34.134 INFO keystone.token.providers.fernet.utils [-] [fernet_tokens] key_repository does not appear to exist; attempting to create it

- -- ::34.135 INFO keystone.token.providers.fernet.utils [-] Created a new key: /etc/keystone/fernet-keys/

- -- ::34.135 INFO keystone.token.providers.fernet.utils [-] Starting key rotation with key files: ['/etc/keystone/fernet-keys/0']

- -- ::34.135 INFO keystone.token.providers.fernet.utils [-] Current primary key is:

- -- ::34.136 INFO keystone.token.providers.fernet.utils [-] Next primary key will be:

- -- ::34.136 INFO keystone.token.providers.fernet.utils [-] Promoted key to be the primary:

- -- ::34.137 INFO keystone.token.providers.fernet.utils [-] Created a new key: /etc/keystone/fernet-keys/

- root@controller:~# echo $?

- 配置Apache HTTP

- root@controller:~# cp /etc/apache2/apache2.conf{,.bak}

- root@controller:~# vim /etc/apache2/apache2.conf

- root@controller:~# grep 'ServerName' /etc/apache2/apache2.conf

- ServerName controller #末尾添加此行

配置Apache HTPP

- root@controller:~# cp /etc/apache2/apache2.conf{,.bak}

- root@controller:~# vim /etc/apache2/apache2.conf

- root@controller:~# grep 'ServerName' /etc/apache2/apache2.conf

- ServerName controller

- 接着创建wsgi-keystone.conf文件

- root@controller:~# vim /etc/apache2/sites-available/wsgi-keystone.conf

- Listen

- Listen

- <VirtualHost *:>

- WSGIDaemonProcess keystone-public processes= threads= user=keystone group=keystone display-name=%{GROUP}

- WSGIProcessGroup keystone-public

- WSGIScriptAlias / /usr/bin/keystone-wsgi-public

- WSGIApplicationGroup %{GLOBAL}

- WSGIPassAuthorization On

- ErrorLogFormat "%{cu}t %M"

- ErrorLog /var/log/apache2/keystone.log

- CustomLog /var/log/apache2/keystone_access.log combined

- <Directory /usr/bin>

- Require all granted

- </Directory>

- </VirtualHost>

- <VirtualHost *:>

- WSGIDaemonProcess keystone-admin processes= threads= user=keystone group=keystone display-name=%{GROUP}

- WSGIProcessGroup keystone-admin

- WSGIScriptAlias / /usr/bin/keystone-wsgi-admin

- WSGIApplicationGroup %{GLOBAL}

- WSGIPassAuthorization On

- ErrorLogFormat "%{cu}t %M"

- ErrorLog /var/log/apache2/keystone.log

- CustomLog /var/log/apache2/keystone_access.log combined

- <Directory /usr/bin>

- Require all granted

- </Directory>

- </VirtualHost>

- ~

开启认证服务虚拟主机

- root@controller:~# ln -s /etc/apache2/sites-available/wsgi-keystone.conf /etc/apache2/sites-enabled

重启apache

- root@controller:~# service apache2 restart

- * Restarting web server apache2 [ OK ]

- root@controller:~# rm -rf /var/lib/keystone/keystone.db

- root@controller:~# lsof -i:

- COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

- apache2 root 6u IPv6 0t0 TCP *: (LISTEN)

- apache2 www-data 6u IPv6 0t0 TCP *: (LISTEN)

- apache2 www-data 6u IPv6 0t0 TCP *: (LISTEN)

- root@controller:~# lsof -i:

- COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

- apache2 root 8u IPv6 0t0 TCP *: (LISTEN)

- apache2 www-data 8u IPv6 0t0 TCP *: (LISTEN)

- apache2 www-data 8u IPv6 0t0 TCP *: (LISTEN)

安装python-openstackclient

- root@controller:~# apt-get install -y python-openstackclient

配置rootrc环境

- root@controller:~# vim rootrc

- root@controller:~# cat rootrc

- export OS_TOKEN=

- export OS_URL=http://controller:35357/v3

- export OS_IDENTITY_API_VERSION=

- export PS1="rootrc@\u@\h:\w\$"

- # 加载rootrc环境

- root@controller:~# source rootrc

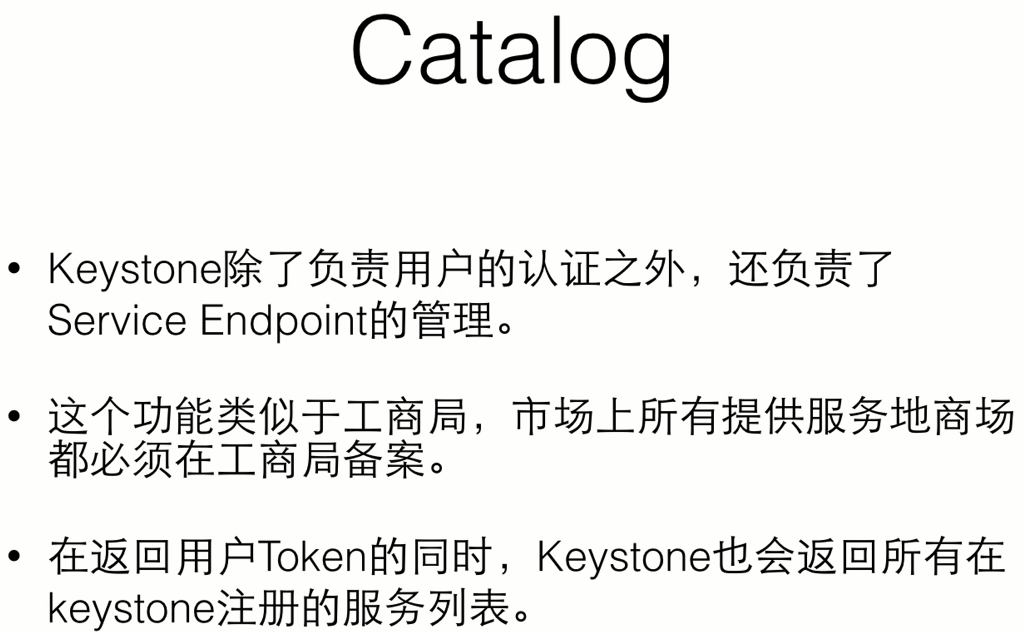

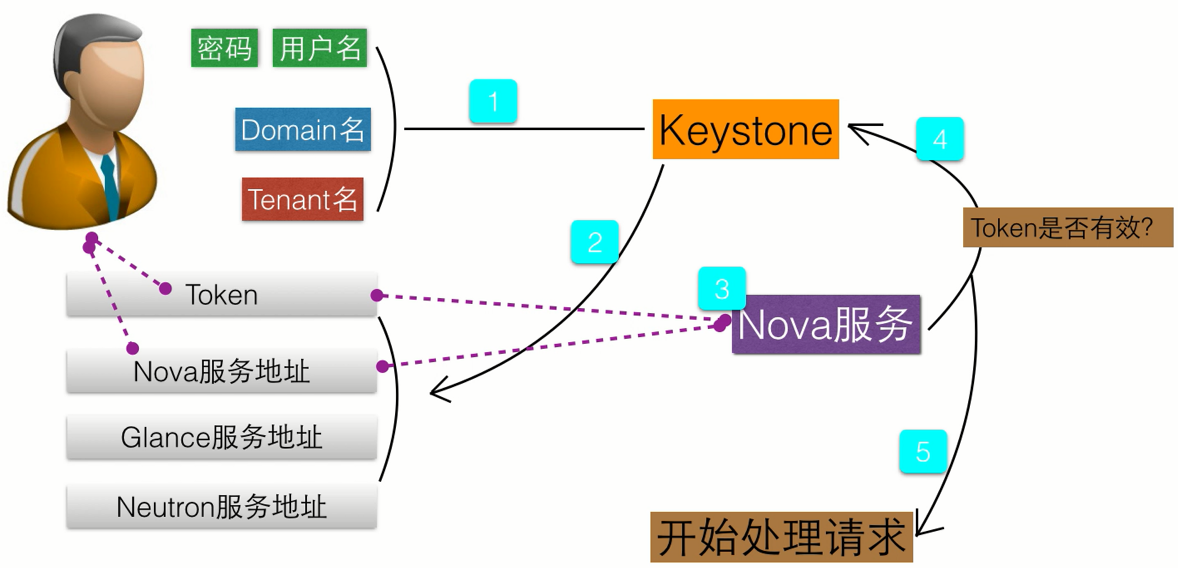

向keystone中注册服务

值得注意的是:35357一般为管理员登录使用,5000端口一般发布到外部用户使用

创建服务实体和API端点

- adminrc@root@controller:~$source rootrc

- rootrc@root@controller:~$openstack service create --name keystone --description "OpenStack Identify" identity

- +-------------+----------------------------------+

- | Field | Value |

- +-------------+----------------------------------+

- | description | OpenStack Identify |

- | enabled | True |

- | id | 7052e2715c874ae18dc520ec21026a34 |

- | name | keystone |

- | type | identity |

- +-------------+----------------------------------+

- rootrc@root@controller:~$openstack endpoint create --region RegionOne identity internal http://controller:5000/v3

- +--------------+----------------------------------+

- | Field | Value |

- +--------------+----------------------------------+

- | enabled | True |

- | id | ac731860b374450484034b024e643004 |

- | interface | internal |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | 7052e2715c874ae18dc520ec21026a34 |

- | service_name | keystone |

- | service_type | identity |

- | url | http://controller:5000/v3 |

- +--------------+----------------------------------+

- rootrc@root@controller:~$openstack endpoint create --region RegionOne identity public http://controller:5000/v3

- +--------------+----------------------------------+

- | Field | Value |

- +--------------+----------------------------------+

- | enabled | True |

- | id | d1f7296477a748ef82ad4970580d50b2 |

- | interface | public |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | 7052e2715c874ae18dc520ec21026a34 |

- | service_name | keystone |

- | service_type | identity |

- | url | http://controller:5000/v3 |

- +--------------+----------------------------------+

- rootrc@root@controller:~$openstack endpoint create --region RegionOne identity admin http://controller:35357/v3

- +--------------+----------------------------------+

- | Field | Value |

- +--------------+----------------------------------+

- | enabled | True |

- | id | df4eb1f2b08f474fa7b83ef979ebd0fb |

- | interface | admin |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | 7052e2715c874ae18dc520ec21026a34 |

- | service_name | keystone |

- | service_type | identity |

- | url | http://controller:35357/v3 |

- +--------------+----------------------------------+

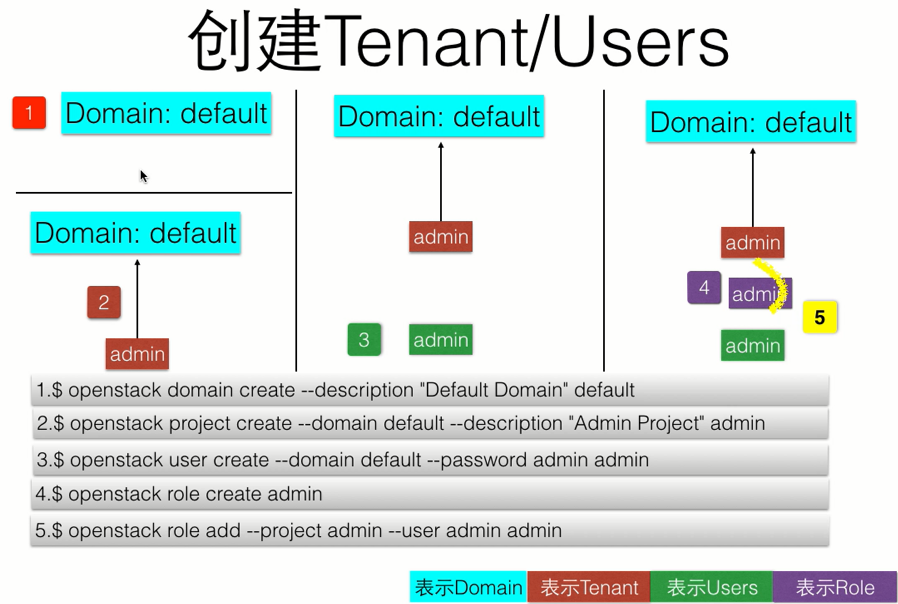

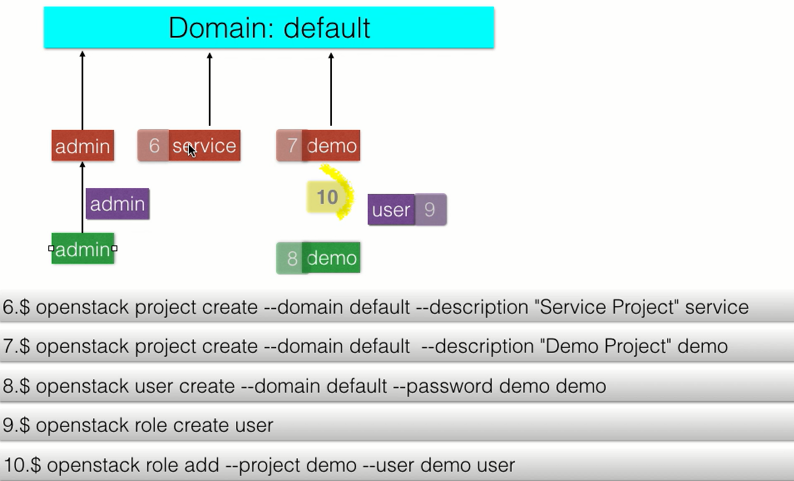

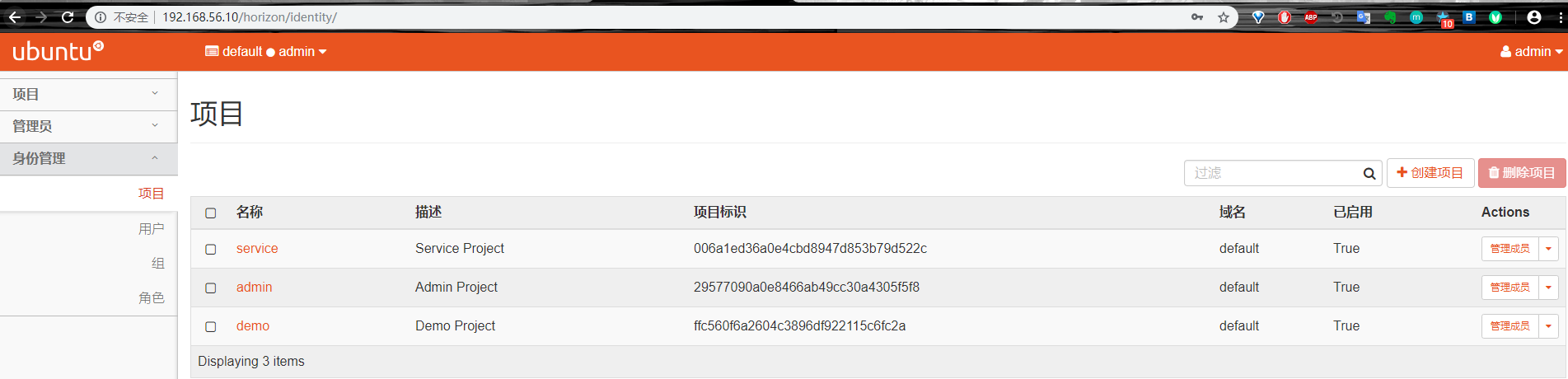

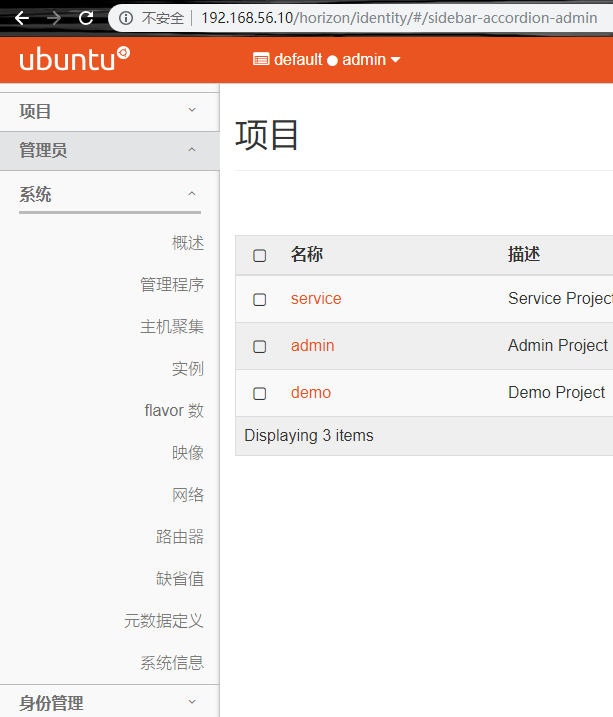

接着创建域、项目、用户和角色

- rootrc@root@controller:~$openstack domain create --description "Default Domain" default

- +-------------+----------------------------------+

- | Field | Value |

- +-------------+----------------------------------+

- | description | Default Domain |

- | enabled | True |

- | id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- | name | default |

- +-------------+----------------------------------+

- rootrc@root@controller:~$openstack project create --domain default --description "Admin Project" admin

- +-------------+----------------------------------+

- | Field | Value |

- +-------------+----------------------------------+

- | description | Admin Project |

- | domain_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- | enabled | True |

- | id | 29577090a0e8466ab49cc30a4305f5f8 |

- | is_domain | False |

- | name | admin |

- | parent_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- +-------------+----------------------------------+

- rootrc@root@controller:~$openstack user create --domain default --password admin admin

- +-----------+----------------------------------+

- | Field | Value |

- +-----------+----------------------------------+

- | domain_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- | enabled | True |

- | id | 653177098fac40a28734093706299e66 |

- | name | admin |

- +-----------+----------------------------------+

- rootrc@root@controller:~$openstack role create admin

- +-----------+----------------------------------+

- | Field | Value |

- +-----------+----------------------------------+

- | domain_id | None |

- | id | 6abd897a6f134b8ea391377d1617a2f8 |

- | name | admin |

- +-----------+----------------------------------+

- rootrc@root@controller:~$openstack role add --project admin --user admin admin

- rootrc@root@controller:~$ #没有提示就是最好的提示了

创建service项目

- rootrc@root@controller:~$openstack project create --domain default --description "Service Project" service

- +-------------+----------------------------------+

- | Field | Value |

- +-------------+----------------------------------+

- | description | Service Project |

- | domain_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- | enabled | True |

- | id | 006a1ed36a0e4cbd8947d853b79d522c |

- | is_domain | False |

- | name | service |

- | parent_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- +-------------+----------------------------------+

- rootrc@root@controller:~$openstack project create --domain default --description "Demo Project" demo

- +-------------+----------------------------------+

- | Field | Value |

- +-------------+----------------------------------+

- | description | Demo Project |

- | domain_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- | enabled | True |

- | id | ffc560f6a2604c3896df922115c6fc2a |

- | is_domain | False |

- | name | demo |

- | parent_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- +-------------+----------------------------------+

- rootrc@root@controller:~$openstack user create --domain default --password demo demo

- +-----------+----------------------------------+

- | Field | Value |

- +-----------+----------------------------------+

- | domain_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- | enabled | True |

- | id | c4de9fac882740838aa26e9119b30cb9 |

- | name | demo |

- +-----------+----------------------------------+

- rootrc@root@controller:~$openstack role create user

- +-----------+----------------------------------+

- | Field | Value |

- +-----------+----------------------------------+

- | domain_id | None |

- | id | e69817f50d6448fe888a64e51e025351 |

- | name | user |

- +-----------+----------------------------------+

- rootrc@root@controller:~$openstack role add --project demo --user demo user

- rootrc@root@controller:~$echo $?

验证adminrc

- rootrc@root@controller:~$vim adminrc

- rootrc@root@controller:~$cat adminrc

- unset OS_TOKEN

- unset OS_URL

- unset OS_IDENTITY_API_VERSION

- export OS_PROJECT_DOMAIN_NAME=default

- export OS_USER_DOMAIN_NAME=default

- export OS_PROJECT_NAME=admin

- export OS_USERNAME=admin

- export OS_PASSWORD=admin

- export OS_AUTH_URL=http://controller:35357/v3

- export OS_IDENTITY_API_VERSION=

- export OS_IMAGE_API_VERSION=

- export PS1="adminrc@\u@\h:\w\$"

加载adminrc环境并尝试获取keystone token

- rootrc@root@controller:~$source adminrc

- adminrc@root@controller:~$openstack token issue

- +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

- | Field | Value |

- +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

- | expires | --14T21::.000000Z |

- | id | gAAAAABcPPIQK270ipb9EgRW7feWYLunIVPaX9cTjhvgvTvMmpG8j8K_AkwPv5UL4WUFFzfDnO30A7WflnaOyufilAi7DCmbQ2YLlsGuAzgbCRYooV5pIJTkuqbhmRJDmFX068zliOri_rXL2CsTq9um3UtCPnOj7-7LxmXcFm5LwsP6OyzY4Ts |

- | project_id | 29577090a0e8466ab49cc30a4305f5f8 |

- | user_id | 653177098fac40a28734093706299e66 |

- +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

- adminrc@root@controller:~$date

- Tue Jan :: CST

验证demorc

- adminrc@root@controller:~$vim demorc

- adminrc@root@controller:~$cat demorc

- unset OS_TOKEN

- unset OS_URL

- unset OS_IDENTITY_API_VERSION

- export OS_PROJECT_DOMAIN_NAME=default

- export OS_USER_DOMAIN_NAME=default

- export OS_PROJECT_NAME=demo

- export OS_USERNAME=demo

- export OS_PASSWORD=demo

- export OS_AUTH_URL=http://controller:5000/v3

- export OS_IDENTITY_API_VERSION=

- export OS_IMAGE_API_VERSION=

- export PS1="demorc@\u@\h:\w\$"

获取demo用户的token

- adminrc@root@controller:~$source demorc

- demorc@root@controller:~$openstack token issue

- +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

- | Field | Value |

- +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

- | expires | --14T21::.000000Z |

- | id | gAAAAABcPPPSLXi6E581bb8P0MpmHOLg-p0_vt9YLNWXn6feHLF6QONWq3Ny8JT4ceOvkKiv5TltLA4WRyn6XghcvZn-X0tuhOl07Eh6KXxGiGtEwgZyPFO-AFhykXims1FH0Tz4lp-fI_ExelOAcT50OFeKC3bB5vlGlYgR0pmdiVj8L73Boiw |

- | project_id | ffc560f6a2604c3896df922115c6fc2a |

- | user_id | c4de9fac882740838aa26e9119b30cb9 |

- +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

- demorc@root@controller:~$date

- Tue Jan :: CST

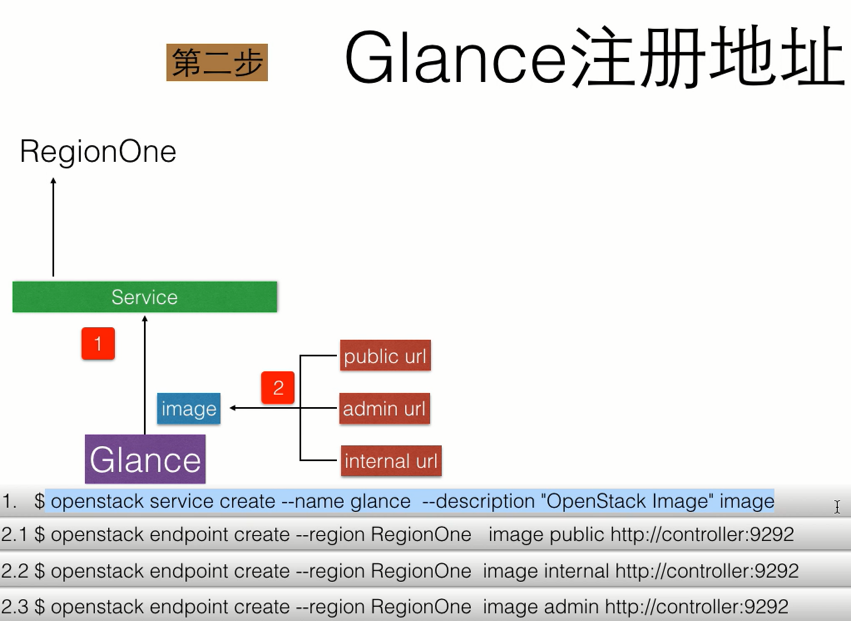

开始安装glance服务

- demorc@root@controller:~$mysql -uroot -p123456

- Welcome to the MariaDB monitor. Commands end with ; or \g.

- Your MariaDB connection id is

- Server version: 5.5.-MariaDB-1ubuntu0.14.04. (Ubuntu)

- Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

- Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

- MariaDB [(none)]> create database glance;

- Query OK, row affected (0.00 sec)

- MariaDB [(none)]> grant all privileges on glance.* to 'glance'@'localhost' identified by '';

- Query OK, rows affected (0.00 sec)

- MariaDB [(none)]> grant all privileges on glance.* to 'glance'@'%' identified by '';

- Query OK, rows affected (0.00 sec)

- MariaDB [(none)]> \q

- Bye

- demorc@root@controller:~$source adminrc

- adminrc@root@controller:~$

1111

- rootrc@root@controller:~$source adminrc

- adminrc@root@controller:~$openstack service create --name glance --description "OpenStack Image" image

- +-------------+----------------------------------+

- | Field | Value |

- +-------------+----------------------------------+

- | description | OpenStack Image |

- | enabled | True |

- | id | 24eba17c530946fea53413104b8d2035 |

- | name | glance |

- | type | image |

- +-------------+----------------------------------+

- adminrc@root@controller:~$ps -aux | grep -v "grep" | grep keystone

- keystone 0.0 0.2 ? Sl : : (wsgi:keystone-pu -k start

- keystone 0.0 3.0 ? Sl : : (wsgi:keystone-pu -k start

- keystone 0.0 2.1 ? Sl : : (wsgi:keystone-pu -k start

- keystone 0.0 0.2 ? Sl : : (wsgi:keystone-pu -k start

- keystone 0.0 0.2 ? Sl : : (wsgi:keystone-pu -k start

- keystone 0.0 3.1 ? Sl : : (wsgi:keystone-ad -k start

- keystone 0.0 3.0 ? Sl : : (wsgi:keystone-ad -k start

- keystone 0.0 2.2 ? Sl : : (wsgi:keystone-ad -k start

- keystone 0.0 3.1 ? Sl : : (wsgi:keystone-ad -k start

- keystone 0.0 3.1 ? Sl : : (wsgi:keystone-ad -k start

- adminrc@root@controller:~$lsof -i:

- COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

- apache2 root 6u IPv6 0t0 TCP *: (LISTEN)

- apache2 www-data 6u IPv6 0t0 TCP *: (LISTEN)

- apache2 www-data 6u IPv6 0t0 TCP *: (LISTEN)

- adminrc@root@controller:~$lsof -i:

- COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

- apache2 root 8u IPv6 0t0 TCP *: (LISTEN)

- apache2 www-data 8u IPv6 0t0 TCP *: (LISTEN)

- apache2 www-data 8u IPv6 0t0 TCP *: (LISTEN)

- adminrc@root@controller:~$tail /var/log/keystone/keystone-wsgi-admin.log

11111

- adminrc@root@controller:~$openstack endpoint create --region RegionOne image internal http://controller:9292

- +--------------+----------------------------------+

- | Field | Value |

- +--------------+----------------------------------+

- | enabled | True |

- | id | 83d13b44fbae4abbb89b7f1a9f1519d6 |

- | interface | internal |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | 24eba17c530946fea53413104b8d2035 |

- | service_name | glance |

- | service_type | image |

- | url | http://controller:9292 |

- +--------------+----------------------------------+

- adminrc@root@controller:~$openstack endpoint create --region RegionOne image admin http://controller:9292

- +--------------+----------------------------------+

- | Field | Value |

- +--------------+----------------------------------+

- | enabled | True |

- | id | c9708f196a6946f987652cb40b9a8aea |

- | interface | admin |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | 24eba17c530946fea53413104b8d2035 |

- | service_name | glance |

- | service_type | image |

- | url | http://controller:9292 |

- +--------------+----------------------------------+

111

- adminrc@root@controller:~$openstack user create --domain default --password glance glance

- +-----------+----------------------------------+

- | Field | Value |

- +-----------+----------------------------------+

- | domain_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- | enabled | True |

- | id | b9c7a987bc494e72899d6ffa7c68c3d0 |

- | name | glance |

- +-----------+----------------------------------+

- adminrc@root@controller:~$openstack role add --project service --user glance admin

- adminrc@root@controller:~$sudo -s

- root@controller:~# apt-get install -y glance

- root@controller:~# echo $?

配置glance-api.conf

- root@controller:~# cp /etc/glance/glance-api.conf{,.bak}

- root@controller:~# vim /etc/glance/glance-api.conf

- ......

- connection = mysql+pymysql://glance:123456@controller/glance

- ......

- [keystone_authtoken]

- auth_uri = http://controller:5000

- auth_url = http://controller:35357

- memcached_servers = controller:

- auth_type = password

- project_domain_name = default

- user_domain_name = default

- project_name = service

- username = glance

- password = glance

- [paste_deploy]

- flavor = keystone

- [glance_store]

- stores = file,http

- default_store = file

- filesystem_store_datadir = /var/lib/glance/images/

配置

- root@controller:~# cp /etc/glance/glance-registry.conf{,.bak}

- root@controller:~# vim /etc/glance/glance-registry.conf

- .......

- connection = mysql+pymysql://glance:123456@localhost/glance

- .......

- [keystone_authtoken]

- auth_uri = http://controller:5000

- auth_url = http://controller:35357

- memcached_servers = controller:

- auth_type = password

- project_domain_name = default

- user_domain_name = default

- project_name = service

- username = glance

- password = glance

- ........

- [paste_deploy]

- flavor = keystone

写入镜像服务数据库中

- root@controller:~# su -s /bin/sh -c "glance-manage db_sync" glance

- ............

- -- ::43.570 INFO migrate.versioning.api [-] done

配置完成重启服务

- root@controller:~# service glance-registry restart

- glance-registry stop/waiting

- glance-registry start/running, process

- root@controller:~# service glance-api restart

- glance-api stop/waiting

- glance-api start/running, process

获取admin凭证来获取只有管理员能执行的命令的访问权限

- root@controller:~# source adminrc

- adminrc@root@controller:~$wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

- adminrc@root@controller:~$ls -al cirros-0.3.-x86_64-disk.img

- -rw-r--r-- root root May cirros-0.3.-x86_64-disk.img

- adminrc@root@controller:~$file cirros-0.3.-x86_64-disk.img

- cirros-0.3.-x86_64-disk.img: QEMU QCOW Image (v2), bytes

- adminrc@root@controller:~$openstack image create "cirrors" --file cirros-0.3.-x86_64-disk.img --disk-format qcow2 --container-format bare --public

- +------------------+------------------------------------------------------+

- | Field | Value |

- +------------------+------------------------------------------------------+

- | checksum | ee1eca47dc88f4879d8a229cc70a07c6 |

- | container_format | bare |

- | created_at | --14T22::08Z |

- | disk_format | qcow2 |

- | file | /v2/images/39d73bcf-e60b-4caf--cca17de00d7e/file |

- | id | 39d73bcf-e60b-4caf--cca17de00d7e |

- | min_disk | |

- | min_ram | |

- | name | cirrors |

- | owner | 29577090a0e8466ab49cc30a4305f5f8 |

- | protected | False |

- | schema | /v2/schemas/image |

- | size | |

- | status | active |

- | tags | |

- | updated_at | --14T22::08Z |

- | virtual_size | None |

- | visibility | public |

- +------------------+------------------------------------------------------+

查看镜像列表

- adminrc@root@controller:~$openstack image list

- +--------------------------------------+---------+--------+

- | ID | Name | Status |

- +--------------------------------------+---------+--------+

- | 39d73bcf-e60b-4caf--cca17de00d7e | cirrors | active |

- +--------------------------------------+---------+--------+

也可以直接去机器上glance对应的的images目录下查看

- adminrc@root@controller:~$ls /var/lib/glance/images/

- 39d73bcf-e60b-4caf--cca17de00d7e

遇到的问题

错误信息

- adminrc@root@controller:~$openstack image create "cirrors" --file cirros-0.3.-x86_64-disk.img --disk-format qcow2 --container-format bare --public

- Service Unavailable: The server is currently unavailable. Please try again at a later time. (HTTP )

- adminrc@root@controller:~$cd /var/log/glance/

- adminrc@root@controller:/var/log/glance$ls

- glance-api.log glance-registry.log

- adminrc@root@controller:/var/log/glance$tail glance-api.log

- -- ::06.887 INFO glance.common.wsgi [-] Started child

- -- ::06.889 INFO eventlet.wsgi.server [-] () wsgi starting up on http://0.0.0.0:9292

- -- ::59.019 WARNING keystonemiddleware.auth_token [-] Identity response: {"error": {"message": "The request you have made requires authentication.", "code": , "title": "Unauthorized"}}

- -- ::59.071 WARNING keystonemiddleware.auth_token [-] Identity response: {"error": {"message": "The request you have made requires authentication.", "code": , "title": "Unauthorized"}}

- -- ::59.071 CRITICAL keystonemiddleware.auth_token [-] Unable to validate token: Identity server rejected authorization necessary to fetch token data

- -- ::59.078 INFO eventlet.wsgi.server [-] 10.0.3.10 - - [/Jan/ ::] "GET /v2/schemas/image HTTP/1.1" 0.170589

- -- ::01.259 WARNING keystonemiddleware.auth_token [-] Identity response: {"error": {"message": "The request you have made requires authentication.", "code": , "title": "Unauthorized"}}

- -- ::01.301 WARNING keystonemiddleware.auth_token [-] Identity response: {"error": {"message": "The request you have made requires authentication.", "code": , "title": "Unauthorized"}}

- -- ::01.302 CRITICAL keystonemiddleware.auth_token [-] Unable to validate token: Identity server rejected authorization necessary to fetch token data

- -- ::01.306 INFO eventlet.wsgi.server [-] 10.0.3.10 - - [/Jan/ ::] "GET /v2/schemas/image HTTP/1.1" 0.089388

- adminrc@root@controller:/var/log/glance$grep -rHn "ERROR"

- adminrc@root@controller:/var/log/glance$grep -rHn "error"

- glance-api.log::-- ::59.019 WARNING keystonemiddleware.auth_token [-] Identity response: {"error": {"message": "The request you have made requires authentication.", "code": , "title": "Unauthorized"}}

- glance-api.log::-- ::59.071 WARNING keystonemiddleware.auth_token [-] Identity response: {"error": {"message": "The request you have made requires authentication.", "code": , "title": "Unauthorized"}}

- glance-api.log::-- ::01.259 WARNING keystonemiddleware.auth_token [-] Identity response: {"error": {"message": "The request you have made requires authentication.", "code": , "title": "Unauthorized"}}

- glance-api.log::-- ::01.301 WARNING keystonemiddleware.auth_token [-] Identity response: {"error": {"message": "The request you have made requires authentication.", "code": , "title": "Unauthorized"}}

- adminrc@root@controller:~$openstack image create "cirrors" --file cirros-0.3.-x86_64-disk.img --disk-format qcow2 --container-format bare --public

- Service Unavailable: The server is currently unavailable. Please try again at a later time. (HTTP )

- adminrc@root@controller:~$tail /var/log/keystone/keystone-wsgi-admin.log

- -- ::32.353 INFO keystone.token.providers.fernet.utils [req-749b2de5-d2be-47e8--083c54fe488d - - - - -] Loaded encryption keys (max_active_keys=) from: /etc/keystone/fernet-keys/

- -- ::32.358 INFO keystone.common.wsgi [req-62e3bb30-ef7b-476a-8f49-dc062c1a9452 - - - - -] POST http://controller:35357/v3/auth/tokens

- -- ::32.552 INFO keystone.token.providers.fernet.utils [req-62e3bb30-ef7b-476a-8f49-dc062c1a9452 - - - - -] Loaded encryption keys (max_active_keys=) from: /etc/keystone/fernet-keys/

- -- ::32.561 INFO keystone.token.providers.fernet.utils [req-2540636c-0a56--adbc-deeaf0063210 - - - - -] Loaded encryption keys (max_active_keys=) from: /etc/keystone/fernet-keys/

- -- ::32.682 INFO keystone.common.wsgi [req-2540636c-0a56--adbc-deeaf0063210 653177098fac40a28734093706299e66 29577090a0e8466ab49cc30a4305f5f8 - 1495769d2bbb44d192eee4c9b2f91ca3 1495769d2bbb44d192eee4c9b2f91ca3] GET http://controller:35357/v3/services/image

- -- ::32.686 WARNING keystone.common.wsgi [req-2540636c-0a56--adbc-deeaf0063210 653177098fac40a28734093706299e66 29577090a0e8466ab49cc30a4305f5f8 - 1495769d2bbb44d192eee4c9b2f91ca3 1495769d2bbb44d192eee4c9b2f91ca3] Could not find service: image

- -- ::32.691 INFO keystone.token.providers.fernet.utils [req-c4a9af14-d206--a693-23055fcb16e3 - - - - -] Loaded encryption keys (max_active_keys=) from: /etc/keystone/fernet-keys/

- -- ::32.807 INFO keystone.common.wsgi [req-c4a9af14-d206--a693-23055fcb16e3 653177098fac40a28734093706299e66 29577090a0e8466ab49cc30a4305f5f8 - 1495769d2bbb44d192eee4c9b2f91ca3 1495769d2bbb44d192eee4c9b2f91ca3] GET http://controller:35357/v3/services?name=image

- -- ::32.816 INFO keystone.token.providers.fernet.utils [req-cc99a9ba-db21--9c32-4eb39b931efa - - - - -] Loaded encryption keys (max_active_keys=) from: /etc/keystone/fernet-keys/

- -- ::32.939 INFO keystone.common.wsgi [req-cc99a9ba-db21--9c32-4eb39b931efa 653177098fac40a28734093706299e66 29577090a0e8466ab49cc30a4305f5f8 - 1495769d2bbb44d192eee4c9b2f91ca3 1495769d2bbb44d192eee4c9b2f91ca3] GET http://controller:35357/v3/services?type=image

解决办法

- 在glance-api.conf和glance-registry.conf文件中

- [keystone_authtoken]

- username = glance

- password =

- 这里跟glance数据库密码搞混了,应该是glance

- 因为上面这条命令openstack user create --domain default --password glance glance

安装nova

- MariaDB [(none)]> create database nova_api;

- Query OK, row affected (0.00 sec)

- MariaDB [(none)]> create database nova;

- Query OK, row affected (0.00 sec)

- MariaDB [(none)]> grant all privileges on nova_api.* to 'nova'@'localhost' identified by '';

- Query OK, rows affected (0.00 sec)

- MariaDB [(none)]> grant all privileges on nova_api.* to 'nova'@'%' identified by '';

- Query OK, rows affected (0.00 sec)

- MariaDB [(none)]> grant all privileges on nova.* to 'nova'@'%' identified by '';

- Query OK, rows affected (0.00 sec)

- MariaDB [(none)]> grant all privileges on nova.* to 'nova'@'localhost' identified by '';

- Query OK, rows affected (0.00 sec)

- MariaDB [(none)]> \q

- Bye

创建nova用户

- adminrc@root@controller:~$openstack user create --domain default --password nova nova

- +-----------+----------------------------------+

- | Field | Value |

- +-----------+----------------------------------+

- | domain_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- | enabled | True |

- | id | e4fc73ea1f6d47269ae4ab95ff999326 |

- | name | nova |

- +-----------+----------------------------------+

- 给nova用户添加admin角色

- adminrc@root@controller:~$openstack role add --project service --user nova admin

创建nova服务实体

- adminrc@root@controller:~$openstack role add --project service --user nova admin

- adminrc@root@controller:~$openstack service create --name nova --description "OpenStack Compute" compute

- +-------------+----------------------------------+

- | Field | Value |

- +-------------+----------------------------------+

- | description | OpenStack Compute |

- | enabled | True |

- | id | 872de5b67b1547adb4826ca1f7ef96b3 |

- | name | nova |

- | type | compute |

- +-------------+----------------------------------+

创建compute服务api端点

- adminrc@root@controller:~$openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1/%\(tenant_id\)s

- +--------------+-------------------------------------------+

- | Field | Value |

- +--------------+-------------------------------------------+

- | enabled | True |

- | id | 8e42256f67e446cc88568903286ed462 |

- | interface | public |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | 872de5b67b1547adb4826ca1f7ef96b3 |

- | service_name | nova |

- | service_type | compute |

- | url | http://controller:8774/v2.1/%(tenant_id)s |

- +--------------+-------------------------------------------+

- adminrc@root@controller:~$openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1/%\(tenant_id\)s

- +--------------+-------------------------------------------+

- | Field | Value |

- +--------------+-------------------------------------------+

- | enabled | True |

- | id | b07f3be5fff4444db57323bb04376d33 |

- | interface | internal |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | 872de5b67b1547adb4826ca1f7ef96b3 |

- | service_name | nova |

- | service_type | compute |

- | url | http://controller:8774/v2.1/%(tenant_id)s |

- +--------------+-------------------------------------------+

- adminrc@root@controller:~$openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1/%\(tenant_id\)s

- +--------------+-------------------------------------------+

- | Field | Value |

- +--------------+-------------------------------------------+

- | enabled | True |

- | id | 91dc56e437e640c397696318ee1dcc21 |

- | interface | admin |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | 872de5b67b1547adb4826ca1f7ef96b3 |

- | service_name | nova |

- | service_type | compute |

- | url | http://controller:8774/v2.1/%(tenant_id)s |

- +--------------+-------------------------------------------+

安装nova组件包

- adminrc@root@controller:~$apt-get install -y nova-api nova-conductor nova-consoleauth nova-novncproxy nova-scheduler

配置

- adminrc@root@controller:~$cp /etc/nova/nova.conf{,.bak}

- adminrc@root@controller:~$vim /etc/nova/nova.conf

- [DEFAULT]

- ........

- rpc_backend=rabbit

- auth_strategy=keystone

- my_ip=10.0.3.10

- use_neutron=True

- firewall_driver=nova.virt.firewall.NoopFirewallDriver

- [database]

- connection=mysql+pymysql://nova:123456@controller/nova

- [api_database]

- connection=mysql+pymysql://nova:123456@controller/nova_api

- [oslo_messaging_rabbit]

- rabbit_host = controller

- rabbit_userid = openstack

- rabbit_password =

- [keystone_authtoken]

- auth_uri = http://controller:5000

- auth_url = http://controller:35357

- memcached_servers = controller:

- auth_type = password

- project_domain_name = default

- user_domain_name = default

- project_name = service

- username = nova

- password = nova

- [vnc]

- vncserver_listen = 0.0.0.0

- vncserver_proxyclient_address = 0.0.0.0

- [oslo_concurrency]

- lock_path = /var/lib/nova/tmp

同步数据库

- adminrc@root@controller:~$su -s /bin/sh -c "nova-manage api_db sync" nova

- Option "logdir" from group "DEFAULT" is deprecated. Use option "log-dir" from group "DEFAULT".

- Option "verbose" from group "DEFAULT" is deprecated for removal. Its value may be silently ignored in the future.

- ...........

- -- ::43.731 INFO migrate.versioning.api [-] done

- adminrc@root@controller:~$echo $?

- adminrc@root@controller:~$su -s /bin/sh -c "nova-manage db sync" nova

- .......

- -- ::19.955 INFO migrate.versioning.api [-] done

- adminrc@root@controller:~$echo $?

重启服务

- adminrc@root@controller:~$service nova-api restart

- nova-api stop/waiting

- nova-api start/running, process

- adminrc@root@controller:~$service nova-consoleauth restart

- nova-consoleauth stop/waiting

- nova-consoleauth start/running, process

- adminrc@root@controller:~$service nova-scheduler restart

- nova-scheduler stop/waiting

- nova-scheduler start/running, process

- adminrc@root@controller:~$service nova-conductor restart

- nova-conductor stop/waiting

- nova-conductor start/running, process

- adminrc@root@controller:~$service nova-novncproxy restart

- nova-novncproxy stop/waiting

- nova-novncproxy start/running, process

查看服务是否启动起来

- adminrc@root@controller:/var/log/nova$openstack compute service list

- +----+------------------+------------+----------+---------+-------+----------------------------+

- | Id | Binary | Host | Zone | Status | State | Updated At |

- +----+------------------+------------+----------+---------+-------+----------------------------+

- | | nova-consoleauth | controller | internal | enabled | up | --14T23::50.000000 |

- | | nova-scheduler | controller | internal | enabled | up | --14T23::46.000000 |

- | | nova-conductor | controller | internal | enabled | up | --14T23::49.000000 |

- +----+------------------+------------+----------+---------+-------+----------------------------+

安装nova-compute节点,因为这里是单节点安装,所以nova-compute也是安装在controller节点上

- adminrc@root@controller:~$apt-get install nova-compute

重新配置nova.conf

- adminrc@root@controller:~$cp /etc/nova/nova.conf{,.back}

- adminrc@root@controller:~$vim /etc/nova/nova.conf #其他项保持不变

- [vnc]

- enabled = True

- vncserver_listen = 0.0.0.0

- vncserver_proxyclient_address = $my_ip

- novncproxy_base_url = http://192.168.56.10:6080/vnc_auto.html

确定计算节点是否支持虚拟机硬件加速

- adminrc@root@controller:~$egrep -c '(vmx|svm)' /proc/cpuinfo

- # 不支持

- 需要更改nova-compute.conf文件

- adminrc@root@controller:~$cp /etc/nova/nova-compute.conf{,.bak}

- adminrc@root@controller:~$vim /etc/nova/nova-compute.conf

- [libvirt]

- virt_type=qemu #原值是kvm

- 重启计算服务

- adminrc@root@controller:~$service nova-compute restart

- nova-compute stop/waiting

- nova-compute start/running, process

- adminrc@root@controller:~$openstack compute service list

- +----+------------------+------------+----------+---------+-------+----------------------------+

- | Id | Binary | Host | Zone | Status | State | Updated At |

- +----+------------------+------------+----------+---------+-------+----------------------------+

- | | nova-consoleauth | controller | internal | enabled | up | --15T00::51.000000 |

- | | nova-scheduler | controller | internal | enabled | up | --15T00::57.000000 |

- | | nova-conductor | controller | internal | enabled | up | --15T00::50.000000 |

- | | nova-compute | controller | nova | enabled | up | --15T00::54.000000 |

- +----+------------------+------------+----------+---------+-------+----------------------------+

- 如果查看nova-api服务的话,需要

- adminrc@root@controller:~$service nova-api status

- nova-api start/running, process

安装网络neutron服务

- MariaDB [(none)]> create database neutron;

- Query OK, row affected (0.00 sec)

- MariaDB [(none)]> grant all privileges on neutron.* to 'neutron'@'localhost' identified by '';

- Query OK, rows affected (0.00 sec)

- MariaDB [(none)]> grant all privileges on neutron.* to 'neutron'@'%' identified by '';

- Query OK, rows affected (0.00 sec)

- MariaDB [(none)]> \q

创建neutron用户

- adminrc@root@controller:~$openstack user create --domain default --password neutron neutron

- +-----------+----------------------------------+

- | Field | Value |

- +-----------+----------------------------------+

- | domain_id | 1495769d2bbb44d192eee4c9b2f91ca3 |

- | enabled | True |

- | id | 081dc309806c45198a3bd6c39bf9947f |

- | name | neutron |

- +-----------+----------------------------------+

- adminrc@root@controller:~$openstack role add --project service --user neutron admin

- adminrc@root@controller:~$

创建neutron服务实体

- adminrc@root@controller:~$openstack service create --name neutron --description "OpenStack Networking" network

- +-------------+----------------------------------+

- | Field | Value |

- +-------------+----------------------------------+

- | description | OpenStack Networking |

- | enabled | True |

- | id | c661b602f11d45cfb068027c77fd519e |

- | name | neutron |

- | type | network |

- +-------------+----------------------------------+

创建neutron服务端点

- adminrc@root@controller:~$openstack endpoint create --region RegionOne network public http://controller:9696

- +--------------+----------------------------------+

- | Field | Value |

- +--------------+----------------------------------+

- | enabled | True |

- | id | 0192ba47a7b348ec88bb5f71c82f8f4c |

- | interface | public |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | c661b602f11d45cfb068027c77fd519e |

- | service_name | neutron |

- | service_type | network |

- | url | http://controller:9696 |

- +--------------+----------------------------------+

- adminrc@root@controller:~$openstack endpoint create --region RegionOne network internal http://controller:9696

- +--------------+----------------------------------+

- | Field | Value |

- +--------------+----------------------------------+

- | enabled | True |

- | id | bdf4b9663ccb4ef695cde0638231943a |

- | interface | internal |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | c661b602f11d45cfb068027c77fd519e |

- | service_name | neutron |

- | service_type | network |

- | url | http://controller:9696 |

- +--------------+----------------------------------+

- adminrc@root@controller:~$openstack endpoint create --region RegionOne network admin http://controller:9696

- +--------------+----------------------------------+

- | Field | Value |

- +--------------+----------------------------------+

- | enabled | True |

- | id | ffc7a793985e494fa839fd76ea5bdcef |

- | interface | admin |

- | region | RegionOne |

- | region_id | RegionOne |

- | service_id | c661b602f11d45cfb068027c77fd519e |

- | service_name | neutron |

- | service_type | network |

- | url | http://controller:9696 |

- +--------------+----------------------------------+

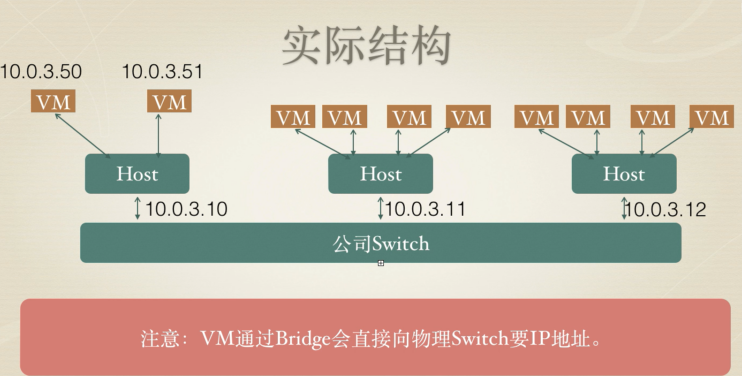

配置网络选项,网络选项有两种:

1.公共网络

2.私有网络

对于公共网络

首先安装安全组件

- adminrc@root@controller:~$apt-get install -y neutron-server neutron-plugin-ml2 neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent

- adminrc@root@controller:~$cp /etc/neutron/neutron.conf{,.bak}

- adminrc@root@controller:~$vim /etc/neutron/neutron.conf

- #需要更改的地方

- [database]

- connection = mysql+pymysql://neutron:123456@controller/neutron

- [DEFAULT]

- rpc_backend = rabbit

- core_plugin = ml2

- service_plugins =

- auth_strategy = keystone

- notify_nova_on_port_status_changes = True

- notify_nova_on_port_data_changes = True

- [oslo_messaging_rabbit]

- rabbit_host = controller

- rabbit_userid = openstack

- rabbit_password = RABBIT_PASS

- [keystone_authtoken]

- auth_uri = http://controller:5000

- auth_url = http://controller:35357

- memcached_servers = controller:

- auth_type = password

- project_domain_name = default

- user_domain_name = default

- project_name = service

- username = neutron

- password = neutron

- [nova]

- auth_url = http://controller:35357

- auth_type = password

- project_domain_name = default

- user_domain_name = default

- region_name = RegionOne

- project_name = service

- username = nova

- password = nova

配置ML2插件

- adminrc@root@controller:~$cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

- adminrc@root@controller:~$vim /etc/neutron/plugins/ml2/ml2_conf.ini

- # 需要更改的项

- [ml2]

- type_drivers = flat,vlan

- tenant_network_types =

- mechanism_drivers = linuxbridge

- extension_drivers = port_security

- [ml2_type_flat]

- flat_networks = provider

- [securitygroup]

- enable_ipset = True

配置linuxbridge.ini

- adminrc@root@controller:~$cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

- adminrc@root@controller:~$vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

- [linux_bridge]

- physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

- [vxlan]

- enable_vxlan = False

- [securitygroup]

- enable_security_group = True

- firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

配置dhcp_agent.ini

- adminrc@root@controller:~$cp /etc/neutron/dhcp_agent.ini{,.bak}

- adminrc@root@controller:~$vim /etc/neutron/dhcp_agent.ini

- interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

- dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

- enable_isolated_metadata = True

配置元数据代理

- adminrc@root@controller:~$cp /etc/neutron/metadata_agent.ini{,.bak}

- adminrc@root@controller:~$vim /etc/neutron/metadata_agent.ini

- [DEFAULT]

- nova_metadata_ip = controller

- metadata_proxy_shared_secret = METADATA_SECRET

配置计算节点网络服务

- adminrc@root@controller:~$vim /etc/nova/nova.conf

- [neutron] 末尾添加这些内容

- url = http://controller:9696

- auth_url = http://controller:35357

- auth_type = password

- project_domain_name = default

- user_domain_name = default

- region_name = RegionOne

- project_name = service

- username = neutron

- password = NEUTRON_PASS

- service_metadata_proxy = True

- metadata_proxy_shared_secret = METADATA_SECRET

同步数据库

- su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

- --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

重启计算API服务、Networking服务

- adminrc@root@controller:~$service nova-api restart

- nova-api stop/waiting

- nova-api start/running, process

- adminrc@root@controller:~$service neutron-server restart

- neutron-server stop/waiting

- neutron-server start/running, process

- adminrc@root@controller:~$service neutron-server restart

- neutron-server stop/waiting

- neutron-server start/running, process

- adminrc@root@controller:~$service neutron-linuxbridge-agent restart

- neutron-linuxbridge-agent stop/waiting

- neutron-linuxbridge-agent start/running, process

- adminrc@root@controller:~$service neutron-dhcp-agent restart

- neutron-dhcp-agent stop/waiting

- neutron-dhcp-agent start/running, process

- adminrc@root@controller:~$service neutron-metadata-agent restart

- neutron-metadata-agent stop/waiting

- neutron-metadata-agent start/running, process

重启neutron-l3-agent

- adminrc@root@controller:~$service neutron-l3-agent restart

- neutron-l3-agent stop/waiting

- neutron-l3-agent start/running, process

重启

- adminrc@root@controller:~$service nova-compute restart

- nova-compute stop/waiting

- nova-compute start/running, process

- adminrc@root@controller:~$service neutron-linuxbridge-agent restart

- neutron-linuxbridge-agent stop/waiting

- neutron-linuxbridge-agent start/running, process

查看是否有网络创建

- adminrc@root@controller:~$openstack network list

输出为空,因为还没有创建任何网络

验证neutron-server是否正常启动

- adminrc@root@controller:~$neutron ext-list

- +---------------------------+-----------------------------------------------+

- | alias | name |

- +---------------------------+-----------------------------------------------+

- | default-subnetpools | Default Subnetpools |

- | availability_zone | Availability Zone |

- | network_availability_zone | Network Availability Zone |

- | auto-allocated-topology | Auto Allocated Topology Services |

- | binding | Port Binding |

- | agent | agent |

- | subnet_allocation | Subnet Allocation |

- | dhcp_agent_scheduler | DHCP Agent Scheduler |

- | tag | Tag support |

- | external-net | Neutron external network |

- | net-mtu | Network MTU |

- | network-ip-availability | Network IP Availability |

- | quotas | Quota management support |

- | provider | Provider Network |

- | multi-provider | Multi Provider Network |

- | address-scope | Address scope |

- | timestamp_core | Time Stamp Fields addition for core resources |

- | extra_dhcp_opt | Neutron Extra DHCP opts |

- | security-group | security-group |

- | rbac-policies | RBAC Policies |

- | standard-attr-description | standard-attr-description |

- | port-security | Port Security |

- | allowed-address-pairs | Allowed Address Pairs |

- +---------------------------+-----------------------------------------------+

验证

- adminrc@root@controller:~$neutron agent-list

- +--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+

- | id | agent_type | host | availability_zone | alive | admin_state_up | binary |

- +--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+

- | 0cafd3ff-6da0--a6dd-9a60136af93a | DHCP agent | controller | nova | :-) | True | neutron-dhcp-agent |

- | 53fce606-311d--8af0-efd6f9087e34 | Open vSwitch agent | controller | | :-) | True | neutron-openvswitch-agent |

- | b5dffa68-a505-448f-8fa6-7d8bb16eb07a | Linux bridge agent | controller | | :-) | True | neutron-linuxbridge-agent |

- | dc161e12-8b23-4f49--b7d68cfe2197 | Metadata agent | controller | | :-) | True | neutron-metadata-agent |

- +--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+

创建一个实例

首先需要创建一个虚拟网络

创建一个提供者网络

- adminrc@root@controller:~$neutron net-create --shared --provider:physical_network provider --provider:network_type flat provider

- Invalid input for operation: network_type value 'flat' not supported.

- Neutron server returns request_ids: ['req-e9d3cb26-4156-4eb1-bc9e-9528dbbd1dc9']

根据错误提示,需要检查下ml2.conf.ini文件

- [ml2]

- type_drivers = flat,vlan #确认这行内容有flat

重启服务再次运行创建网络

- adminrc@root@controller:~$service neutron-server restart

- neutron-server stop/waiting

- neutron-server start/running, process

- adminrc@root@controller:~$neutron net-create --shared --provider:physical_network provider --provider:network_type flat provider

- Created a new network:

- +---------------------------+--------------------------------------+

- | Field | Value |

- +---------------------------+--------------------------------------+

- | admin_state_up | True |

- | availability_zone_hints | |

- | availability_zones | |

- | created_at | --15T12:: |

- | description | |

- | id | ab73ff8f-2d19--811c-85c068290eeb |

- | ipv4_address_scope | |

- | ipv6_address_scope | |

- | mtu | |

- | name | provider |

- | port_security_enabled | True |

- | provider:network_type | flat |

- | provider:physical_network | provider |

- | provider:segmentation_id | |

- | router:external | False |

- | shared | True |

- | status | ACTIVE |

- | subnets | |

- | tags | |

- | tenant_id | 29577090a0e8466ab49cc30a4305f5f8 |

- | updated_at | --15T12:: |

- +---------------------------+--------------------------------------+

接着创建一个子网

- adminrc@root@controller:~$neutron subnet-create --name provider --allocation-pool start=10.0.3.50,end=10.0.3.253 --dns-nameserver 114.114.114.114 --gateway 10.0.3.1 provider 10.0.3.0/

- Created a new subnet:

- +-------------------+---------------------------------------------+

- | Field | Value |

- +-------------------+---------------------------------------------+

- | allocation_pools | {"start": "10.0.3.50", "end": "10.0.3.253"} |

- | cidr | 10.0.3.0/ |

- | created_at | --15T12:: |

- | description | |

- | dns_nameservers | 114.114.114.114 |

- | enable_dhcp | True |

- | gateway_ip | 10.0.3.1 |

- | host_routes | |

- | id | 48faef6d-ee9d-4b46-a56d-3c196a766224 |

- | ip_version | |

- | ipv6_address_mode | |

- | ipv6_ra_mode | |

- | name | provider |

- | network_id | ab73ff8f-2d19--811c-85c068290eeb |

- | subnetpool_id | |

- | tenant_id | 29577090a0e8466ab49cc30a4305f5f8 |

- | updated_at | --15T12:: |

- +-------------------+---------------------------------------------+

接着创建一个虚拟主机

- adminrc@root@controller:~$openstack flavor create --id --vcpus --ram --disk m1.nano

- +----------------------------+---------+

- | Field | Value |

- +----------------------------+---------+

- | OS-FLV-DISABLED:disabled | False |

- | OS-FLV-EXT-DATA:ephemeral | |

- | disk | |

- | id | |

- | name | m1.nano |

- | os-flavor-access:is_public | True |

- | ram | |

- | rxtx_factor | 1.0 |

- | swap | |

- | vcpus | |

- +----------------------------+---------+

生成一个键值对

- adminrc@root@controller:~$pwd

- /home/openstack

- adminrc@root@controller:~$ssh-keygen

- Generating public/private rsa key pair.

- Enter file in which to save the key (/root/.ssh/id_rsa):

- Created directory '/root/.ssh'.

- Enter passphrase (empty for no passphrase):

- Enter same passphrase again:

- Your identification has been saved in /root/.ssh/id_rsa.

- Your public key has been saved in /root/.ssh/id_rsa.pub.

- The key fingerprint is:

- 8a:e5:a2:f3:f4:1e::1a:c1:8d::d1:fd:fa:4b: root@controller

- The key's randomart image is:

- +--[ RSA ]----+

- | |

- | . . |

- | . . . |

- | . o . . |

- | + = S . . E|

- | B o . . . |

- | = * . . |

- | .o = o o |

- | .oo.o o. |

- +-----------------+

- adminrc@root@controller:~$ls -al /root/.ssh/id_rsa.pub

- -rw-r--r-- root root Jan : /root/.ssh/id_rsa.pub

添加密钥对

- adminrc@root@controller:~$openstack keypair create --public-key /root/.ssh/id_rsa.pub rootkey

- +-------------+-------------------------------------------------+

- | Field | Value |

- +-------------+-------------------------------------------------+

- | fingerprint | 8a:e5:a2:f3:f4:1e::1a:c1:8d::d1:fd:fa:4b: |

- | name | rootkey |

- | user_id | 653177098fac40a28734093706299e66 |

- +-------------+-------------------------------------------------+

验证密钥对

- adminrc@root@controller:~$openstack keypair list

- +---------+-------------------------------------------------+

- | Name | Fingerprint |

- +---------+-------------------------------------------------+

- | rootkey | 8a:e5:a2:f3:f4:1e::1a:c1:8d::d1:fd:fa:4b: |

- +---------+-------------------------------------------------+

增加安全组规则

- adminrc@root@controller:~$openstack security group rule create --proto icmp default

- +-----------------------+--------------------------------------+

- | Field | Value |

- +-----------------------+--------------------------------------+

- | id | a4c8ad46-42eb--b09f-af5dcfef2ad1 |

- | ip_protocol | icmp |

- | ip_range | 0.0.0.0/ |

- | parent_group_id | 968f5f33-c569-46b4--8a3f614ae670 |

- | port_range | |

- | remote_security_group | |

- +-----------------------+--------------------------------------+

- adminrc@root@controller:~$openstack security group rule create --proto tcp --dst-port default

- +-----------------------+--------------------------------------+

- | Field | Value |

- +-----------------------+--------------------------------------+

- | id | 8ed34a22----94ec284e4764 |

- | ip_protocol | tcp |

- | ip_range | 0.0.0.0/ |

- | parent_group_id | 968f5f33-c569-46b4--8a3f614ae670 |

- | port_range | : |

- | remote_security_group | |

- +-----------------------+--------------------------------------+

开始创建实例

- # 列出可用类型

- adminrc@root@controller:~$openstack flavor list

- +----+-----------+-------+------+-----------+-------+-----------+

- | ID | Name | RAM | Disk | Ephemeral | VCPUs | Is Public |

- +----+-----------+-------+------+-----------+-------+-----------+

- | | m1.nano | | | | | True |

- | | m1.tiny | | | | | True |

- | | m1.small | | | | | True |

- | | m1.medium | | | | | True |

- | | m1.large | | | | | True |

- | | m1.xlarge | | | | | True |

- +----+-----------+-------+------+-----------+-------+-----------+

- # 列出可用镜像

- adminrc@root@controller:~$openstack image list

- +--------------------------------------+---------+--------+

- | ID | Name | Status |

- +--------------------------------------+---------+--------+

- | 39d73bcf-e60b-4caf--cca17de00d7e | cirrors | active |

- +--------------------------------------+---------+--------+

- # 列出可用网络

- adminrc@root@controller:~$openstack network list

- +--------------------------------------+----------+--------------------------------------+

- | ID | Name | Subnets |

- +--------------------------------------+----------+--------------------------------------+

- | ab73ff8f-2d19--811c-85c068290eeb | provider | 48faef6d-ee9d-4b46-a56d-3c196a766224 |

- +--------------------------------------+----------+--------------------------------------+

- # 列出可用安全组规则

- adminrc@root@controller:~$openstack security group list

- +--------------------------------------+---------+------------------------+----------------------------------+

- | ID | Name | Description | Project |

- +--------------------------------------+---------+------------------------+----------------------------------+

- | 968f5f33-c569-46b4--8a3f614ae670 | default | Default security group | 29577090a0e8466ab49cc30a4305f5f8 |

- +--------------------------------------+---------+------------------------+----------------------------------+

- # 创建实例

- adminrc@root@controller:~$openstack server create --flavor m1.nano --image cirros --nic net-id=ab73ff8f-2d19--811c-85c068290eeb --security-group default --key-name rootkey test-instance

- No image with a name or ID of 'cirros' exists.

- # 好吧 又有事情了

- # 再次查看可用镜像,好像发现问题所在了,我输入的是cirros,而可用镜像的name的值cirrors。

- adminrc@root@controller:~$openstack image list

- +--------------------------------------+---------+--------+

- | ID | Name | Status |

- +--------------------------------------+---------+--------+

- | 39d73bcf-e60b-4caf--cca17de00d7e | cirrors | active |

- +--------------------------------------+---------+--------+

- adminrc@root@controller:~$openstack server create --flavor m1.nano --image cirrors --nic net-id=ab73ff8f-2d19--811c-85c068290eeb --security-group default --key-name rootkey test-instance

- +--------------------------------------+------------------------------------------------+

- | Field | Value |

- +--------------------------------------+------------------------------------------------+

- | OS-DCF:diskConfig | MANUAL |

- | OS-EXT-AZ:availability_zone | |

- | OS-EXT-SRV-ATTR:host | None |

- | OS-EXT-SRV-ATTR:hypervisor_hostname | None |

- | OS-EXT-SRV-ATTR:instance_name | instance- |

- | OS-EXT-STS:power_state | |

- | OS-EXT-STS:task_state | scheduling |

- | OS-EXT-STS:vm_state | building |

- | OS-SRV-USG:launched_at | None |

- | OS-SRV-USG:terminated_at | None |

- | accessIPv4 | |

- | accessIPv6 | |

- | addresses | |

- | adminPass | WeVy7yd6BXcc |

- | config_drive | |

- | created | --15T13::19Z |

- | flavor | m1.nano () |

- | hostId | |

- | id | 9eb49f96-7d68--bb37-7583e457edc6 |

- | image | cirrors (39d73bcf-e60b-4caf--cca17de00d7e) |

- | key_name | rootkey |

- | name | test-instance |

- | os-extended-volumes:volumes_attached | [] |

- | progress | |

- | project_id | 29577090a0e8466ab49cc30a4305f5f8 |

- | properties | |

- | security_groups | [{u'name': u'default'}] |

- | status | BUILD |

- | updated | --15T13::20Z |

- | user_id | 653177098fac40a28734093706299e66 |

- +--------------------------------------+------------------------------------------------+

- 创建成功

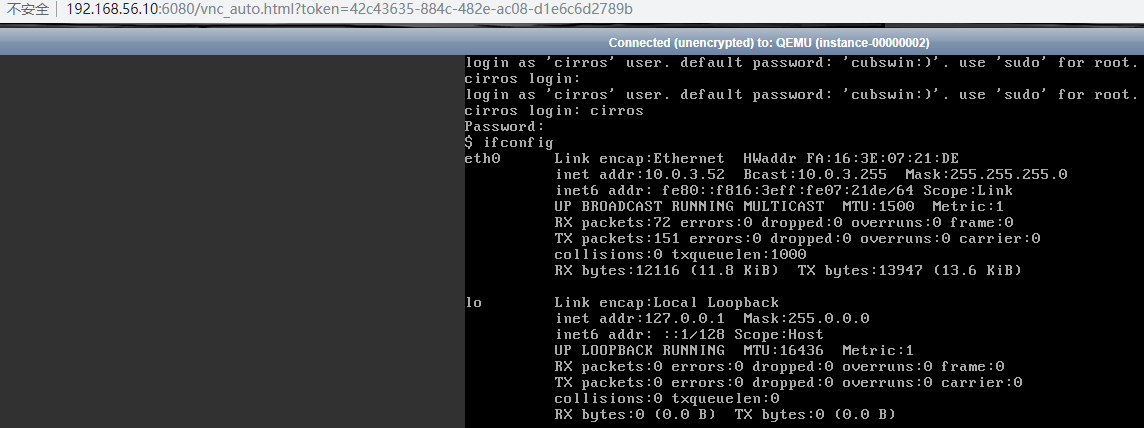

查看相关实例

- adminrc@root@controller:~$openstack server list

- +--------------------------------------+---------------+--------+--------------------+

- | ID | Name | Status | Networks |

- +--------------------------------------+---------------+--------+--------------------+

- | 9eb49f96-7d68--bb37-7583e457edc6 | test-instance | ACTIVE | provider=10.0.3.51 |

- +--------------------------------------+---------------+--------+--------------------+

- adminrc@root@controller:~$nova image-list

- +--------------------------------------+---------+--------+--------+

- | ID | Name | Status | Server |

- +--------------------------------------+---------+--------+--------+

- | 39d73bcf-e60b-4caf--cca17de00d7e | cirrors | ACTIVE | |

- +--------------------------------------+---------+--------+--------+

- adminrc@root@controller:~$glance image-list

- +--------------------------------------+---------+

- | ID | Name |

- +--------------------------------------+---------+

- | 39d73bcf-e60b-4caf--cca17de00d7e | cirrors |

- +--------------------------------------+---------+

- adminrc@root@controller:~$nova list

- +--------------------------------------+---------------+--------+------------+-------------+--------------------+

- | ID | Name | Status | Task State | Power State | Networks |

- +--------------------------------------+---------------+--------+------------+-------------+--------------------+

- | 9eb49f96-7d68--bb37-7583e457edc6 | test-instance | ACTIVE | - | Running | provider=10.0.3.51 |

- +--------------------------------------+---------------+--------+------------+-------------+--------------------+

启动实例的命令

- adminrc@root@controller:~$openstack boot --flavor m1.nano --image cirrors --nic net-id=ab73ff8f-2d19--811c-85c068290eeb --security-group default --key-name rootkey test-instance

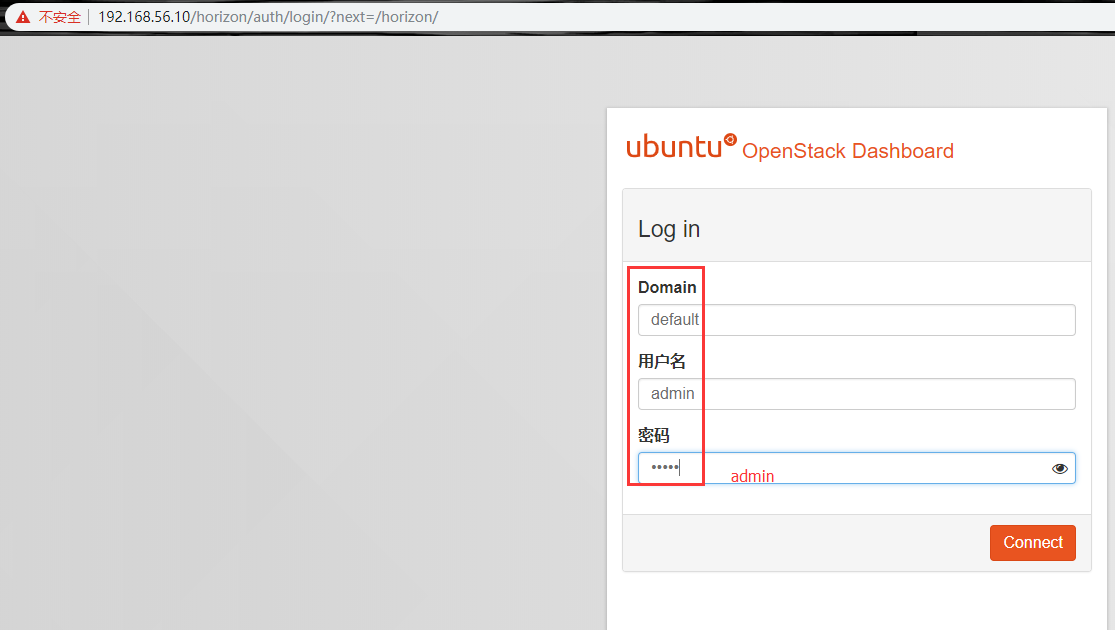

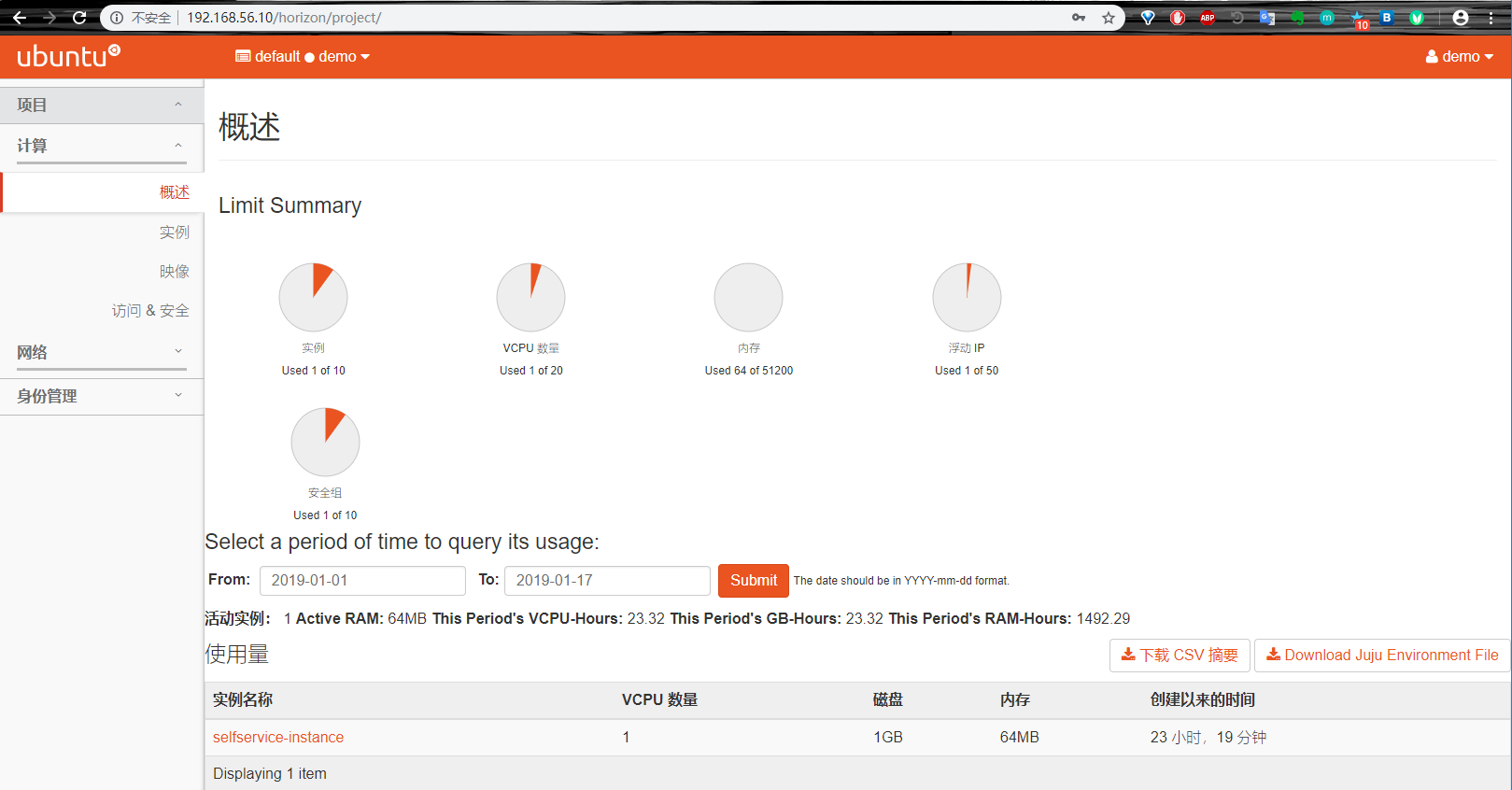

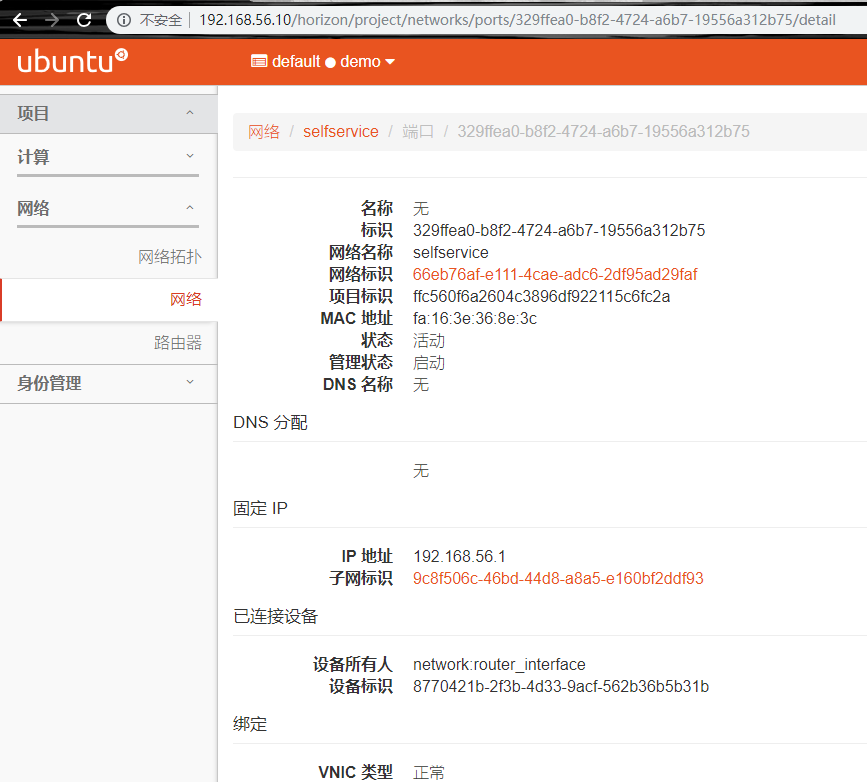

debug