Design Of A Modern Cache

http://highscalability.com/blog/2016/1/25/design-of-a-modern-cache.html

This is a guest post by Benjamin Manes, who did engineery things for Google and is now doing engineery things for a new load documentation startup, LoadDocs.

Caching is a common approach for improving performance, yet most implementations use strictly classical techniques. In this article we will explore the modern methods used by Caffeine, an open-source Java caching library, that yield high hit rates and excellent concurrency. These ideas can be translated to your favorite language and hopefully some readers will be inspired to do just that.

Eviction Policy

A cache’s eviction policy tries to predict which entries are most likely to be used again in the near future, thereby maximizing the hit ratio. The Least Recently Used (LRU) policy is perhaps the most popular due to its simplicity, good runtime performance, and a decent hit rate in common workloads. Its ability to predict the future is limited to the history of the entries residing in the cache, preferring to give the last access the highest priority by guessing that it is the most likely to be reused again soon.

Modern caches extend the usage history to include the recent past and give preference to entries based on recency and frequency. One approach for retaining history is to use a popularity sketch (a compact, probabilistic data structure) to identify the “heavy hitters” in a large stream of events. Take for example CountMin Sketch, which uses a matrix of counters and multiple hash functions. The addition of an entry increments a counter in each row and the frequency is estimated by taking the minimum value observed. This approach lets us trade off between space, efficiency, and the error rate due to collisions by adjusting the matrix’s width and depth.
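To make the counting scheme concrete, here is a minimal sketch of a count-min structure. The class name, the seeded multiplicative hashing, and the plain int counters are assumptions chosen for brevity; this is not Caffeine's implementation.

```java
import java.util.Random;

/** Minimal count-min sketch; the hashing scheme and sizes are illustrative assumptions. */
final class CountMinSketch {
  private final int[][] table; // depth rows, each holding width counters
  private final int[] seeds;   // one hash multiplier per row
  private final int width;

  CountMinSketch(int depth, int width) {
    this.table = new int[depth][width];
    this.seeds = new int[depth];
    this.width = width;
    Random random = new Random(42); // fixed seed so runs are reproducible
    for (int row = 0; row < depth; row++) {
      seeds[row] = random.nextInt() | 1; // odd multipliers spread the bits better
    }
  }

  /** Maps the key to a counter position within the given row. */
  private int index(Object key, int row) {
    int hash = key.hashCode() * seeds[row];
    hash ^= (hash >>> 16);
    return Math.floorMod(hash, width);
  }

  /** Adding an entry increments one counter in every row. */
  void increment(Object key) {
    for (int row = 0; row < table.length; row++) {
      table[row][index(key, row)]++;
    }
  }

  /** The estimate is the minimum counter observed across the rows. */
  int estimate(Object key) {
    int min = Integer.MAX_VALUE;
    for (int row = 0; row < table.length; row++) {
      min = Math.min(min, table[row][index(key, row)]);
    }
    return min;
  }
}
```

Collisions can only inflate a counter, so the estimate never falls below the true count. A wider matrix lowers the chance of collisions and a deeper one gives the minimum more rows to choose from, at the cost of more memory and more hashing per operation.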

Window TinyLFU (W-TinyLFU) uses the sketch as a filter, admitting a new entry if it has a higher frequency than the entry that would have to be evicted to make room for it. Instead of filtering immediately, an admission window gives an entry a chance to build up its popularity. This avoids consecutive misses, especially in cases like sparse bursts where an entry may not be deemed suitable for long-term retention. To keep the history fresh an aging process is performed periodically or incrementally to halve all of the counters.
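The admission decision and the aging step can be sketched as follows. To stay short, an exact HashMap stands in for the probabilistic sketch and the admission window is left out; the class name, the `sampleSize` parameter, and the method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/** Frequency-based admission filter; a HashMap stands in for the sketch (assumption). */
final class FrequencyAdmittor<K> {
  private final Map<K, Integer> counts = new HashMap<>();
  private final int sampleSize; // hypothetical: number of events between aging passes
  private int events;

  FrequencyAdmittor(int sampleSize) {
    this.sampleSize = sampleSize;
  }

  /** Records one access and ages the history once enough events were seen. */
  void record(K key) {
    counts.merge(key, 1, Integer::sum);
    if (++events >= sampleSize) {
      age();
    }
  }

  int frequency(K key) {
    return counts.getOrDefault(key, 0);
  }

  /** Admits the candidate only if it is more popular than the would-be victim. */
  boolean admit(K candidate, K victim) {
    return frequency(candidate) > frequency(victim);
  }

  /** Halves every counter so that stale popularity fades over time. */
  private void age() {
    counts.replaceAll((key, count) -> count / 2);
    counts.values().removeIf(count -> count == 0);
    events /= 2;
  }
}
```

With a real sketch the halving would run over the counter matrix instead of a map. The comparison in `admit` is the essential part: a one-hit wonder cannot displace an entry with a longer history.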

W-TinyLFU uses the Segmented LRU (SLRU) policy for long term retention. An entry starts in the probationary segment and on a subsequent access it is promoted to the protected segment (capped at 80% capacity). When the protected segment is full it evicts into the probationary segment, which may trigger a probationary entry to be discarded. This ensures that entries with a small reuse interval (the hottest) are retained and those that are less often reused (the coldest) become eligible for eviction.
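A small single-threaded sketch of the two segments is shown below. It models only the promotion and demotion flow described above; the admission filter is omitted, and the class and method names are assumptions.

```java
import java.util.Iterator;
import java.util.LinkedHashSet;

/** Segmented LRU over keys only; iteration order runs from oldest to newest. */
final class SegmentedLru<K> {
  private final LinkedHashSet<K> probation = new LinkedHashSet<>();
  private final LinkedHashSet<K> protectedSegment = new LinkedHashSet<>();
  private final int capacity;
  private final int protectedMax;

  SegmentedLru(int capacity) {
    this.capacity = capacity;
    this.protectedMax = (int) (capacity * 0.8); // protected segment capped at 80%
  }

  /** Records an access and returns the evicted key, or null if nothing was evicted. */
  K access(K key) {
    if (protectedSegment.remove(key)) {
      protectedSegment.add(key); // already protected: move to the newest position
      return null;
    }
    if (probation.remove(key)) {
      protectedSegment.add(key); // second access: promote
      if (protectedSegment.size() > protectedMax) {
        probation.add(removeOldest(protectedSegment)); // demote the coldest protected key
      }
      return null;
    }
    probation.add(key); // first access: start on probation
    if (probation.size() + protectedSegment.size() > capacity) {
      return removeOldest(probation);
    }
    return null;
  }

  boolean contains(K key) {
    return probation.contains(key) || protectedSegment.contains(key);
  }

  private static <K> K removeOldest(LinkedHashSet<K> segment) {
    Iterator<K> iterator = segment.iterator();
    K oldest = iterator.next();
    iterator.remove();
    return oldest;
  }
}
```

An entry that was touched twice survives a burst of one-time keys that would have flushed it out of a plain LRU of the same size, because the burst only churns the probationary segment.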

As the database and search traces show, there is a lot of opportunity to improve upon LRU by taking into account recency and frequency. More advanced policies such as ARC, LIRS, and W-TinyLFU narrow the gap to provide a near optimal hit rate. For additional workloads see the research papers and try our simulator if you have your own traces to experiment with.

Expiration Policy

Expiration is often implemented as variable per entry and expired entries are evicted lazily due to a capacity constraint. This pollutes the cache with dead items, so sometimes a scavenger thread is used to periodically sweep the cache and reclaim free space. This strategy tends to work better than ordering entries by their expiration time on an O(lg n) priority queue, because it hides the cost from the user instead of incurring a penalty on every read or write operation.

Caffeine takes a different approach by observing that most often a fixed duration is preferred. This constraint allows for organizing entries on O(1) time ordered queues. A time to live duration is a write order queue and a time to idle duration is an access order queue. The cache can reuse the eviction policy’s queues and the concurrency mechanism described below, so that expired entries are discarded during the cache’s maintenance phase.
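The reason a fixed duration permits O(1) queues is that ordering by last write (or last access) is then the same as ordering by expiration time, so the head of the queue is always the next entry to expire. Here is a rough sketch of that idea, assuming a LinkedHashMap as the queue and caller-supplied, non-decreasing timestamps; all names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Time-ordered queue for a single fixed duration; not thread-safe. */
final class TimeOrderedQueue<K> {
  private final LinkedHashMap<K, Long> order = new LinkedHashMap<>();
  private final long duration;

  TimeOrderedQueue(long duration) {
    this.duration = duration;
  }

  /**
   * Moves the key to the tail with a fresh timestamp. Call it on writes only
   * for time-to-live, or on reads and writes for time-to-idle.
   */
  void record(K key, long now) {
    order.remove(key);
    order.put(key, now);
  }

  /** Removes and returns the expired keys by scanning from the head only. */
  List<K> expire(long now) {
    List<K> expired = new ArrayList<>();
    Iterator<Map.Entry<K, Long>> iterator = order.entrySet().iterator();
    while (iterator.hasNext()) {
      Map.Entry<K, Long> entry = iterator.next();
      if (now - entry.getValue() < duration) {
        break; // every later entry is younger, so we can stop here
      }
      expired.add(entry.getKey());
      iterator.remove();
    }
    return expired;
  }
}
```

The scan touches only entries that are actually expired plus one live entry, so the cost is proportional to the work reclaimed rather than to the size of the cache.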

Concurrency

Concurrent access to a cache is viewed as a difficult problem because in most policies every access is a write to some shared state. The traditional solution is to guard the cache with a single lock. This might then be improved through lock striping by splitting the cache into many smaller independent regions. Unfortunately that tends to have a limited benefit due to hot entries causing some locks to be more contended than others. When contention becomes a bottleneck the next classic step has been to update only per entry metadata and use either a random sampling or a FIFO-based eviction policy. Those techniques can have great read performance, but they suffer from poor write performance and difficulty in choosing a good victim.
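For reference, the lock striping approach looks roughly like the sketch below, where each stripe is an independent LRU guarded by its own monitor. The class name and the use of an access-ordered LinkedHashMap per stripe are assumptions for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Lock-striped LRU: each stripe is an access-ordered map with its own lock. */
final class StripedLruCache<K, V> {
  private final Map<K, V>[] segments;

  @SuppressWarnings("unchecked")
  StripedLruCache(int stripes, int capacityPerStripe) {
    segments = new Map[stripes];
    for (int i = 0; i < stripes; i++) {
      segments[i] = new LinkedHashMap<K, V>(16, 0.75f, true) {
        @Override
        protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
          return size() > capacityPerStripe; // evict this stripe's LRU entry
        }
      };
    }
  }

  private Map<K, V> segmentFor(K key) {
    return segments[Math.floorMod(key.hashCode(), segments.length)];
  }

  V get(K key) {
    Map<K, V> segment = segmentFor(key);
    synchronized (segment) { // a read reorders the LRU list, so it needs the lock too
      return segment.get(key);
    }
  }

  void put(K key, V value) {
    Map<K, V> segment = segmentFor(key);
    synchronized (segment) {
      segment.put(key, value);
    }
  }
}
```

Note that `get` must take the lock because it mutates the access order, which is exactly the "every access is a write" problem. A hot key always maps to the same stripe, so its lock stays contended no matter how many stripes exist.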

An alternative is to borrow an idea from database theory where writes are scaled by using a commit log. Instead of mutating the data structures immediately, the updates are written to a log and replayed in asynchronous batches. This same idea can be applied to a cache by performing the hash table operation, recording the operation to a buffer, and scheduling the replay activity against the policy when deemed necessary. The policy is still guarded by a lock, or a try lock to be more precise, but contention shifts onto appending to the log buffers instead.
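The flow can be sketched as below: the hash table is updated first, the operation is appended to a log, and whoever wins the try lock replays the batch. For simplicity the drain runs inline on the calling thread rather than asynchronously, a plain list stands in for the eviction policy, and `batchSize` and the other names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

/** Cache whose policy updates are logged and replayed in batches. */
final class BufferedCache<K, V> {
  private final ConcurrentHashMap<K, V> data = new ConcurrentHashMap<>();
  private final Queue<K> log = new ConcurrentLinkedQueue<>();
  private final AtomicInteger pending = new AtomicInteger();
  private final ReentrantLock policyLock = new ReentrantLock();
  private final List<K> policyOrder = new ArrayList<>(); // stand-in policy, guarded by policyLock
  private final int batchSize;

  BufferedCache(int batchSize) {
    this.batchSize = batchSize;
  }

  V get(K key) {
    V value = data.get(key); // hash table operation first
    if (value != null) {
      record(key);
    }
    return value;
  }

  void put(K key, V value) {
    data.put(key, value);
    record(key);
  }

  /** Appends to the log and drains once a batch has accumulated. */
  private void record(K key) {
    log.add(key);
    if (pending.incrementAndGet() >= batchSize) {
      tryDrain();
    }
  }

  /** Replays the log against the policy; callers never block on the lock. */
  private void tryDrain() {
    if (!policyLock.tryLock()) {
      return; // another thread is already draining
    }
    try {
      K key;
      while ((key = log.poll()) != null) {
        pending.decrementAndGet();
        policyOrder.add(key);
      }
    } finally {
      policyLock.unlock();
    }
  }

  /** Returns a copy of the keys replayed so far, in replay order. */
  List<K> replayedSnapshot() {
    policyLock.lock();
    try {
      return new ArrayList<>(policyOrder);
    } finally {
      policyLock.unlock();
    }
  }
}
```

A thread that fails to acquire the try lock simply returns, trusting that the current holder will pick up its logged operation on this or a later drain.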

In Caffeine separate buffers are used for cache reads and writes. An access is recorded into a striped ring buffer where the stripe is chosen by a thread specific hash and the number of stripes grows when contention is detected. When a ring buffer is full an asynchronous drain is scheduled and subsequent additions to that buffer are discarded until space becomes available. When the access is not recorded due to a full buffer the cached value is still returned to the caller. The loss of policy information does not have a meaningful impact because W-TinyLFU is able to identify the hot entries that we wish to retain. By using a thread-specific hash instead of the key’s hash the cache prevents popular entries from causing contention by spreading the load more evenly.
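A lossy read buffer along these lines can be sketched as follows. This is a simplified illustration and not Caffeine's code: the stripe count is fixed rather than growing under contention, the stripe is picked from the thread object's hash code, and the class names are assumptions.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicReferenceArray;
import java.util.function.Consumer;

/** Bounded ring buffer that drops events when full or when a producer loses a race. */
final class LossyRingBuffer<E> {
  private final AtomicReferenceArray<E> slots;
  private final AtomicLong head = new AtomicLong(); // next slot to read (single consumer)
  private final AtomicLong tail = new AtomicLong(); // next slot to claim (many producers)
  private final int mask;

  LossyRingBuffer(int capacityPowerOfTwo) {
    slots = new AtomicReferenceArray<>(capacityPowerOfTwo);
    mask = capacityPowerOfTwo - 1;
  }

  /** Returns false if the event was dropped; the caller carries on regardless. */
  boolean offer(E event) {
    long h = head.get();
    long t = tail.get();
    if (t - h >= slots.length()) {
      return false; // full: discard
    }
    if (!tail.compareAndSet(t, t + 1)) {
      return false; // contention: discard instead of retrying
    }
    slots.lazySet((int) (t & mask), event);
    return true;
  }

  /** Must be called by only one thread at a time, e.g. under the policy lock. */
  int drainTo(Consumer<E> consumer) {
    long h = head.get();
    long t = tail.get();
    int drained = 0;
    while (h < t) {
      int index = (int) (h & mask);
      E event = slots.get(index);
      if (event == null) {
        break; // slot claimed but not yet published by its producer
      }
      slots.lazySet(index, null);
      consumer.accept(event);
      h++;
      drained++;
    }
    head.lazySet(h);
    return drained;
  }
}

/** Spreads producers over several buffers using a thread-specific hash. */
final class StripedReadBuffer<E> {
  private final LossyRingBuffer<E>[] stripes;

  @SuppressWarnings("unchecked")
  StripedReadBuffer(int stripeCountPowerOfTwo, int capacityPowerOfTwo) {
    stripes = new LossyRingBuffer[stripeCountPowerOfTwo];
    for (int i = 0; i < stripes.length; i++) {
      stripes[i] = new LossyRingBuffer<>(capacityPowerOfTwo);
    }
  }

  boolean record(E event) {
    int hash = Thread.currentThread().hashCode(); // thread hash, not the key's hash
    hash ^= (hash >>> 16);
    return stripes[hash & (stripes.length - 1)].offer(event);
  }

  int drainTo(Consumer<E> consumer) {
    int drained = 0;
    for (LossyRingBuffer<E> stripe : stripes) {
      drained += stripe.drainTo(consumer);
    }
    return drained;
  }
}
```

Dropping on a failed compare-and-set keeps the read path wait-free. That is acceptable here because the policy tolerates a lossy sample of the accesses.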

In the case of a write a more traditional concurrent queue is used and every change schedules an immediate drain. While data loss is unacceptable, there are still ways to optimize the write buffer. Both types of buffers are written to by multiple threads but only consumed by a single one at a given time. This multiple producer / single consumer behavior allows for simpler, more efficient algorithms to be employed.

The buffers and fine grained writes introduce a race condition where operations for an entry may be recorded out of order. An insertion, read, update, and removal can be replayed in any order and if improperly handled the policy could retain dangling references. The solution to this is a state machine defining the lifecycle of an entry.
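One way to picture such a lifecycle is the sketch below. The three state names and the transition methods are assumptions made for illustration; the exact states are defined by the diagram in the original post.

```java
import java.util.concurrent.atomic.AtomicReference;

/** Per-entry lifecycle that lets replayed operations detect stale events. */
final class EntryLifecycle {
  /** Hypothetical states: present in both structures, removed from the table only, removed from both. */
  enum State { ALIVE, RETIRED, DEAD }

  private final AtomicReference<State> state = new AtomicReference<>(State.ALIVE);

  /** Called when the entry is removed from the hash table; only one caller wins. */
  boolean retire() {
    return state.compareAndSet(State.ALIVE, State.RETIRED);
  }

  /** Called during replay once the policy has unlinked the entry. */
  void die() {
    state.set(State.DEAD);
  }

  /** A replayed read or update is applied to the policy only for live entries. */
  boolean shouldReplayAccess() {
    return state.get() == State.ALIVE;
  }

  State state() {
    return state.get();
  }
}
```

If a read is replayed after the removal of the same entry, the check in `shouldReplayAccess` turns it into a no-op, so the policy never re-links an entry that the hash table no longer holds.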

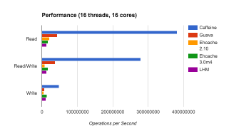

In benchmarks the cost of the buffers is relatively cheap and scales with the underlying hash table. Reads scale linearly with the number of CPUs at about 33% of the hash table’s throughput. Writes have a 10% penalty, but only because contention when updating the hash table is the dominant cost.

Conclusion

There are many pragmatic topics that have not been covered. This could include tricks to minimize the memory overhead, testing techniques to retain quality as complexity grows, and ways to analyze performance to determine whether an optimization is worthwhile. These are areas that practitioners must keep an eye on, because once neglected it can be difficult to restore confidence in one’s own ability to manage the ensuing complexity.

The design and implementation of Caffeine is the result of numerous insights and the hard work of many contributors. Its evolution over the years wouldn’t have been possible without the help from the following people: Charles Fry, Adam Zell, Gil Einziger, Roy Friedman, Kevin Bourrillion, Bob Lee, Doug Lea, Josh Bloch, Bob Lane, Nitsan Wakart, Thomas Müeller, Dominic Tootell, Louis Wasserman, and Vladimir Blagojevic. Thanks to Nitsan Wakart, Adam Zell, Roy Friedman, and Will Chu for their feedback on drafts of this article.

Related Articles

- On HackerNews

- On Reddit

- TinyLFU - cache admission policy
