The Most Detailed Chinese-English ChatGPT API Docs on the Web (Part 2): Get Started with ChatGPT in 30 Minutes (Quickstart)

Quickstart 快速入门

OpenAI has trained cutting-edge language models that are very good at understanding and generating text. Our API provides access to these models and can be used to solve virtually any task that involves processing language.

OpenAI已经训练出了非常擅长理解和生成文本的尖端语言模型。我们的API提供了对这些模型的访问,并可用于解决几乎任何涉及处理语言的任务。

In this quickstart tutorial, you’ll build a simple sample application. Along the way, you’ll learn key concepts and techniques that are fundamental to using the API for any task, including:

在本快速入门教程中,您将构建一个简单的示例应用程序。在此过程中,您将学习使用API执行任何任务所需的关键概念和技术,包括:

- Content generation 内容生成

- Summarization 总结

- Classification, categorization, and sentiment analysis 分类、归类和情感分析

- Data extraction 数据提取

- Translation 翻译

- Many more! 还有很多!

Introduction 导言

The completions endpoint is the core of our API and provides a simple interface that’s extremely flexible and powerful. You input some text as a prompt, and the API will return a text completion that attempts to match whatever instructions or context you gave it.

补全(completions)端点是我们 API 的核心,它提供了一个极其灵活且强大的简单接口。您输入一些文本作为提示(prompt),API 将返回一段文本补全(completion),并尽量符合您给出的任何指令或上下文。
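To make the prompt-in, completion-out exchange concrete, the sketch below builds (but does not send) a POST request to the completions endpoint using only Python's standard library. It assumes the public REST path `https://api.openai.com/v1/completions`, Bearer-token authentication, an API key in an `OPENAI_API_KEY` environment variable, and the `model`/`prompt`/`temperature` fields used later in this tutorial; `build_completion_request` is a hypothetical helper name, not part of any OpenAI SDK.

```python
import json
import os
import urllib.request
from typing import Optional

# Public REST endpoint for text completions (assumed here; see the API reference).
COMPLETIONS_URL = "https://api.openai.com/v1/completions"


def build_completion_request(prompt: str,
                             model: str = "text-davinci-003",
                             temperature: float = 0.6,
                             api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a prompt to the completions endpoint."""
    key = api_key if api_key is not None else os.environ.get("OPENAI_API_KEY", "")
    body = {"model": model, "prompt": prompt, "temperature": temperature}
    return urllib.request.Request(
        COMPLETIONS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {key}",
        },
        method="POST",
    )


req = build_completion_request("Write a tagline for an ice cream shop.", api_key="sk-test")
print(req.get_method(), req.full_url)  # → POST https://api.openai.com/v1/completions
# Actually sending it, e.g. urllib.request.urlopen(req), needs a valid key and network access.
```

The official SDKs shown later wrap this same request; building it by hand only illustrates what travels over the wire.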

Prompt 提示: Write a tagline for an ice cream shop.

为一家冰淇淋店写一句口号。

Completion 补全: We serve up smiles with every scoop!

每一勺我们都微笑奉上!

You can think of this as a very advanced autocomplete — the model processes your text prompt and tries to predict what’s most likely to come next.

你可以把它看作一个非常高级的自动补全:模型处理你的文本提示,并尝试预测接下来最有可能出现的内容。

1 Start with an instruction 从指令开始

Imagine you want to create a pet name generator. Coming up with names from scratch is hard!

假设您要创建一个宠物名字生成器。从零开始想名字是很难的!

First, you’ll need a prompt that makes it clear what you want. Let’s start with an instruction. Submit this prompt to generate your first completion.

首先,你需要一个能清楚表明你想要什么的提示。我们先从一条指令开始。提交下面的提示,生成您的第一个补全。

Suggest one name for a horse. 为一匹马取名

Not bad! Now, try making your instruction more specific.

不错!现在,试着让你的指令更具体一些。

Suggest one name for a black horse. 为一匹黑马取名

As you can see, adding a simple adjective to our prompt changes the resulting completion. Designing your prompt is essentially how you “program” the model.

正如您所看到的,在提示中添加一个简单的形容词就会改变生成的补全结果。设计提示本质上就是在“编程”模型。

2 Add some examples 添加一些示例

Crafting good instructions is important for getting good results, but sometimes they aren’t enough. Let’s try making your instruction even more complex.

精心设计的指令对于获得好的结果很重要,但有时仅靠指令还不够。让我们试着把指令变得更复杂一些。

Suggest three names for a horse that is a superhero. 给一匹超级英雄马取三个名字

This completion isn't quite what we want. These names are pretty generic, and it seems like the model didn't pick up on the horse part of our instruction. Let’s see if we can get it to come up with some more relevant suggestions.

这个补全结果并不完全是我们想要的。这些名字相当普通,而且模型似乎没有领会指令中“马”这一部分。我们看看能不能让它给出一些更相关的建议。

In many cases, it’s helpful to both show and tell the model what you want. Adding examples to your prompt can help communicate patterns or nuances. Try submitting this prompt which includes a couple examples.

在许多情况下,既向模型展示、又告诉它您想要什么,会很有帮助。在提示中添加示例有助于传达模式或细微差别。请尝试提交下面这个包含几个示例的提示。

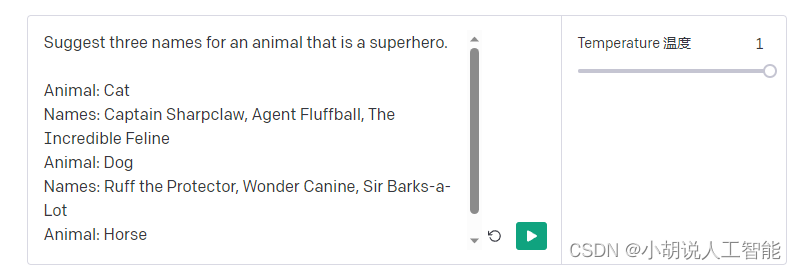

```text
Suggest three names for an animal that is a superhero.

Animal: Cat
Names: Captain Sharpclaw, Agent Fluffball, The Incredible Feline
Animal: Dog
Names: Ruff the Protector, Wonder Canine, Sir Barks-a-Lot
Animal: Horse
Names:
```

Nice! Adding examples of the output we’d expect for a given input helped the model provide the types of names we were looking for.

不错!添加我们对给定输入所期望的输出示例有助于模型提供我们所寻找的名称类型。
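The pattern behind this prompt (instruction, then input/output example pairs, then the new input with its output left open) can be generated for any set of examples. The sketch below is a hypothetical generalization of the prompt above, not code from the quickstart repositories; `build_few_shot_prompt` and `EXAMPLES` are illustrative names.

```python
from typing import List, Tuple

# Example (animal, names) pairs copied from the prompt above.
EXAMPLES: List[Tuple[str, str]] = [
    ("Cat", "Captain Sharpclaw, Agent Fluffball, The Incredible Feline"),
    ("Dog", "Ruff the Protector, Wonder Canine, Sir Barks-a-Lot"),
]


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Instruction first, then one Animal/Names pair per example, then the open query."""
    lines = [instruction, ""]
    for animal, names in examples:
        lines.append(f"Animal: {animal}")
        lines.append(f"Names: {names}")
    lines.append(f"Animal: {query}")
    lines.append("Names:")  # left open so the model continues the pattern
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Suggest three names for an animal that is a superhero.", EXAMPLES, "Horse"
)
print(prompt)
```

Keeping the examples in a list makes it easy to add or swap them while testing which ones steer the model best.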

3 Adjust your settings 调整您的设置

Prompt design isn’t the only tool you have at your disposal. You can also control completions by adjusting your settings. One of the most important settings is called temperature.

提示设计并不是您唯一可用的工具。您还可以通过调整设置来控制补全结果。最重要的设置之一叫做温度(temperature)。

You may have noticed that if you submitted the same prompt multiple times in the examples above, the model would always return identical or very similar completions. This is because your temperature was set to 0.

您可能已经注意到,如果在上面的示例中多次提交相同的提示,模型总是会返回相同或非常相似的补全。这是因为温度被设置为了 0。

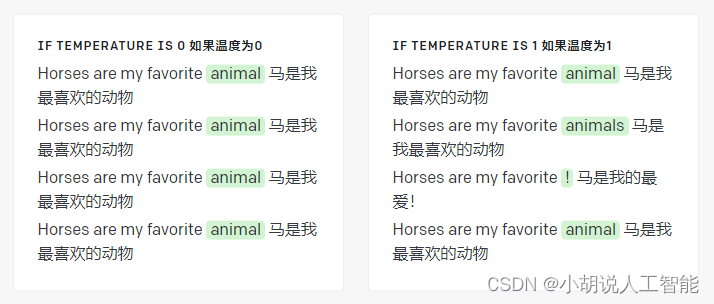

Try re-submitting the same prompt a few times with temperature set to 1.

尝试在温度设置为1的情况下重新提交相同的提示几次。

See what happened? When temperature is above 0, submitting the same prompt results in different completions each time.

看到发生了什么吗?当温度高于0时,每次提交相同的提示会导致不同的完成。

Remember that the model predicts which text is most likely to follow the text preceding it. Temperature essentially lets you control how confident the model should be when making these predictions. This tutorial uses values between 0 and 1 (the API itself accepts values from 0 to 2). Lowering temperature means it will take fewer risks, and completions will be more focused and deterministic. Increasing temperature will result in more diverse completions.

请记住,模型预测的是哪段文本最有可能跟在前面的文本之后。温度本质上让您控制模型在做这些预测时应有多“自信”。本教程使用 0 到 1 之间的值(API 本身接受 0 到 2 的取值)。降低温度意味着模型冒的风险更小,补全结果更集中、更确定;提高温度则会让补全结果更加多样。
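A common way to formalize this is the temperature-scaled softmax below, where $z_i$ is the model's raw score (logit) for candidate token $i$ and $T > 0$ is the temperature. This is the standard textbook form, offered as a sketch of the idea rather than a description of OpenAI's exact sampler. Dividing by a small $T$ exaggerates the gaps between scores, so probability piles onto the top token (and, assuming a unique highest score, the limit is greedy argmax, which is how $T = 0$ is usually treated); a large $T$ flattens the distribution.

```latex
p_i(T) = \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)},
\qquad
\lim_{T \to 0^+} p_i(T) =
\begin{cases}
  1 & \text{if } i = \arg\max_j z_j \\
  0 & \text{otherwise}
\end{cases}
```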

DEEP DIVE 深入了解

Understanding tokens and probabilities 理解 token(标记)和概率

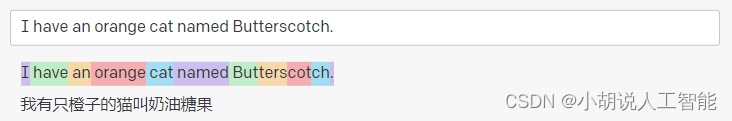

Our models process text by breaking it down into smaller units called tokens. Tokens can be words, chunks of words, or single characters. You can experiment with OpenAI's online Tokenizer tool to see how a given text gets tokenized.

我们的模型通过将文本分解为更小的单元(称为标记,即 token)来处理文本。标记可以是单词、单词片段或单个字符。您可以使用 OpenAI 的在线 Tokenizer 工具,看看一段文本是如何被切分为标记的。

Common words like “cat” are a single token, while less common words are often broken down into multiple tokens. For example, “Butterscotch” is split into four tokens: “But”, “ters”, “cot”, and “ch”. Many tokens start with a whitespace, for example “ hello” and “ bye”.

像“cat”这样的常用词是单个标记,而不太常用的词通常会被拆分为多个标记。例如,“Butterscotch”会被拆分为四个标记:“But”、“ters”、“cot”和“ch”。许多标记以空格开头,例如“ hello”和“ bye”。
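OpenAI's real tokenizer is a learned byte-pair encoding with a vocabulary of tens of thousands of entries, which this sketch does not reproduce. As a toy illustration only, the greedy longest-match splitter below uses a tiny hypothetical vocabulary (`TOY_VOCAB`) chosen so that it reproduces the “Butterscotch” split quoted above; it shows why a word missing from the vocabulary breaks into several known pieces, and why a leading space can be part of a token.

```python
from typing import List, Set

# Hypothetical toy vocabulary, NOT the real GPT vocabulary.
TOY_VOCAB: Set[str] = {"cat", "But", "ters", "cot", "ch", " hello", " bye"}


def toy_tokenize(text: str, vocab: Set[str]) -> List[str]:
    """Greedy longest-match split; unknown characters fall back to single-character tokens."""
    tokens: List[str] = []
    max_len = max(len(v) for v in vocab)
    i = 0
    while i < len(text):
        # Try the longest candidate first and shrink until a vocabulary entry matches.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab or size == 1:
                tokens.append(piece)
                i += size
                break
    return tokens


print(toy_tokenize("Butterscotch", TOY_VOCAB))  # → ['But', 'ters', 'cot', 'ch']
print(toy_tokenize(" hello bye", TOY_VOCAB))    # → [' hello', ' bye']
```

Real BPE merges pairs learned from data rather than matching greedily, so its splits for other words will differ from this toy.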

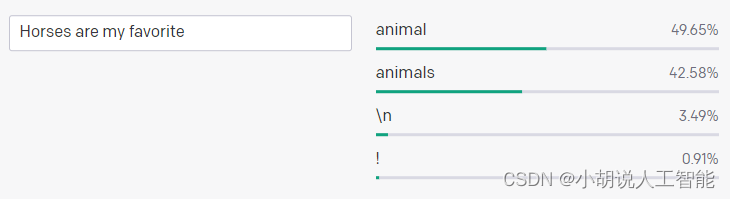

Given some text, the model determines which token is most likely to come next. For example, the text “Horses are my favorite” is most likely to be followed with the token “ animal”.

给定一些文本,模型会判断接下来最有可能出现哪个标记。例如,文本“Horses are my favorite”后面最有可能跟着标记“ animal”。

This is where temperature comes into play. If you submit this prompt 4 times with temperature set to 0, the model will always return “ animal” next because it has the highest probability. If you increase the temperature, it will take more risks and consider tokens with lower probabilities.

这就是温度发挥作用的地方。如果您在温度设置为 0 时提交此提示 4 次,模型每次都会接着返回“ animal”,因为它的概率最高。如果您提高温度,模型会冒更大的风险,考虑概率较低的标记。
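The behavior described above can be simulated with a temperature-scaled softmax followed by random sampling. The scores in `LOGITS` are made up for illustration (they do not come from any OpenAI model), and treating temperature 0 as greedy argmax is an assumption about how such samplers usually handle that edge case.

```python
import math
import random
from typing import Dict

# Hypothetical scores for the token following "Horses are my favorite".
LOGITS: Dict[str, float] = {" animal": 3.0, " thing": 1.5, " sport": 1.0, " food": 0.5}


def apply_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Temperature-scaled softmax; temperature 0 is treated as greedy (argmax)."""
    if temperature == 0:
        best = max(logits, key=logits.get)
        return {tok: (1.0 if tok == best else 0.0) for tok in logits}
    scaled = {tok: z / temperature for tok, z in logits.items()}
    m = max(scaled.values())  # subtract the max before exp() for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next_token(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one token according to the temperature-adjusted probabilities."""
    probs = apply_temperature(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


rng = random.Random(0)
print([sample_next_token(LOGITS, 0, rng) for _ in range(4)])    # → [' animal', ' animal', ' animal', ' animal']
print([sample_next_token(LOGITS, 1.0, rng) for _ in range(4)])  # varies: lower-probability tokens can appear
```

At temperature 0 every draw is “ animal”; at higher temperatures the other tokens get a real share of the probability mass, which is why repeated submissions produce different completions.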

It’s usually best to set a low temperature for tasks where the desired output is well-defined. Higher temperature may be useful for tasks where variety or creativity is desired, or if you'd like to generate a few variations for your end users or human experts to choose from.

通常最好为所需输出定义明确的任务设置低温。较高的温度可能对需要变化或创造性的任务有用,或者如果您想生成一些变化供最终用户或人类专家选择。

For your pet name generator, you probably want to be able to generate a lot of name ideas. A moderate temperature of 0.6 should work well.

对于你的宠物名字生成器,你可能希望能够生成很多名字的想法。0.6的中等温度应该可以很好地工作。

4 Build your application 构建应用程序

Now that you’ve found a good prompt and settings, you’re ready to build your pet name generator! We’ve written some code to get you started — follow the instructions below to download the code and run the app.

现在您已经找到了合适的提示和设置,可以开始构建宠物名字生成器了!我们编写了一些代码帮助您入门:按照下面的说明下载代码并运行应用程序。

4.1 NODE.JS

Setup

If you don’t have Node.js installed, install it from the official Node.js website (nodejs.org). Then download the code by cloning this repository.

如果您还没有安装 Node.js,请从 Node.js 官网(nodejs.org)安装。然后通过克隆下面的存储库来下载代码。

```bash
git clone https://github.com/openai/openai-quickstart-node.git
```

If you prefer not to use git, you can instead download the repository as a ZIP file from its GitHub page.

如果您不想使用 git,也可以在该存储库的 GitHub 页面上以 ZIP 文件形式下载代码。

Add your API key 添加您的API密钥

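To get the app working, you’ll need an API key. You can get one by signing up for an OpenAI account. Then, in the project directory, make a copy of the example environment file (`cp .env.example .env`) and add your API key to the newly created `.env` file as the value of `OPENAI_API_KEY`.

要让应用正常运行,您需要一个 API 密钥。注册 OpenAI 帐户即可获取。然后在项目目录中复制示例环境变量文件(`cp .env.example .env`),并在新建的 `.env` 文件中把您的 API 密钥填写为 `OPENAI_API_KEY` 的值。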

Run the app 运行应用程序

Run the following commands in the project directory to install the dependencies and run the app.

在项目目录中运行以下命令以安装依赖项并运行应用程序。

```bash
npm install
npm run dev
```

Open http://localhost:3000 in your browser and you should see the pet name generator!

在浏览器中打开 http://localhost:3000 ,您应该会看到宠物名字生成器!

Understand the code 了解代码

Open up generate.js in the openai-quickstart-node/pages/api folder. At the bottom, you’ll see the function that generates the prompt that we were using above. Since users will be entering the type of animal their pet is, it dynamically swaps out the part of the prompt that specifies the animal.

打开 openai-quickstart-node/pages/api 文件夹中的 generate.js。在文件底部,您会看到生成上面所用提示的函数。由于用户会输入宠物的动物类型,该函数会动态替换提示中指定动物的那一部分。

```javascript
// Builds the few-shot prompt, inserting the user's animal in place of "Horse".
function generatePrompt(animal) {
  // Normalize casing, e.g. "hORSE" -> "Horse"
  const capitalizedAnimal =
    animal[0].toUpperCase() + animal.slice(1).toLowerCase();
  return `Suggest three names for an animal that is a superhero.

Animal: Cat
Names: Captain Sharpclaw, Agent Fluffball, The Incredible Feline
Animal: Dog
Names: Ruff the Protector, Wonder Canine, Sir Barks-a-Lot
Animal: ${capitalizedAnimal}
Names:`;
}
```

On line 9 in generate.js, you’ll see the code that sends the actual API request. As mentioned above, it uses the completions endpoint with a temperature of 0.6.

在 generate.js 的第 9 行,您会看到发送实际 API 请求的代码。如前所述,它使用补全(completions)端点,温度为 0.6。

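```javascript
// openai npm package v3.x (Configuration/OpenAIApi were removed in v4).
import { Configuration, OpenAIApi } from "openai";

const configuration = new Configuration({ apiKey: process.env.OPENAI_API_KEY });
const openai = new OpenAIApi(configuration);

// Inside the API route handler (req is the incoming request):
const completion = await openai.createCompletion({
  model: "text-davinci-003",
  prompt: generatePrompt(req.body.animal),
  temperature: 0.6,
});
// The generated names are in completion.data.choices[0].text
```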

And that’s it! You should now have a full understanding of how your (superhero) pet name generator uses the OpenAI API!

就是这样!现在您应该已经完全了解您的(超级英雄)宠物名字生成器是如何使用 OpenAI API 的了!

4.2 PYTHON (FLASK)

Setup

If you don’t have Python installed, install it from the official Python website (python.org). Then download the code by cloning this repository.

如果您还没有安装 Python,请从 Python 官网(python.org)安装。然后通过克隆下面的存储库来下载代码。

```bash
git clone https://github.com/openai/openai-quickstart-python.git
```

If you prefer not to use git, you can instead download the repository as a ZIP file from its GitHub page.

如果您不想使用 git,也可以在该存储库的 GitHub 页面上以 ZIP 文件形式下载代码。

Add your API key 添加您的API密钥

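To get the app working, you’ll need an API key. You can get one by signing up for an OpenAI account. Then, in the project directory, make a copy of the example environment file (`cp .env.example .env`) and add your API key to the newly created `.env` file as the value of `OPENAI_API_KEY`.

要让应用正常运行,您需要一个 API 密钥。注册 OpenAI 帐户即可获取。然后在项目目录中复制示例环境变量文件(`cp .env.example .env`),并在新建的 `.env` 文件中把您的 API 密钥填写为 `OPENAI_API_KEY` 的值。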
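The Python app reads its key from the `OPENAI_API_KEY` environment variable (app.py does this with `os.getenv`). If the key is missing, requests fail later with a less obvious authentication error, so a fail-fast check at startup can help. `require_api_key` below is a hypothetical helper, not part of the quickstart code.

```python
import os


def require_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, or raise a clear error if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; add it to your .env file or export it in your shell."
        )
    return key
```

Usage: `openai.api_key = require_api_key()` in place of a bare `os.getenv(...)` call, so a misconfigured environment stops the app at startup.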

Run the app 运行应用程序

Run the following commands in the project directory to install the dependencies and run the app. When running the commands, you may need to type python3/pip3 instead of python/pip depending on your setup.

在项目目录中运行以下命令以安装依赖项并运行应用。运行命令时,可能需要键入 python3/pip3 而不是 python/pip ,具体取决于您的设置。

```bash
python -m venv venv
. venv/bin/activate        # on Windows: venv\Scripts\activate
pip install -r requirements.txt
flask run
```

Open http://localhost:5000 in your browser and you should see the pet name generator!

在浏览器中打开 http://localhost:5000 ,您应该会看到宠物名字生成器!

Understand the code 了解代码

Open up app.py in the openai-quickstart-python folder. At the bottom, you’ll see the function that generates the prompt that we were using above. Since users will be entering the type of animal their pet is, it dynamically swaps out the part of the prompt that specifies the animal.

打开 openai-quickstart-python 文件夹中的 app.py。在文件底部,您会看到生成上面所用提示的函数。由于用户会输入宠物的动物类型,该函数会动态替换提示中指定动物的那一部分。

```python
def generate_prompt(animal):
    # str.capitalize() upper-cases the first letter and lower-cases the rest
    return """Suggest three names for an animal that is a superhero.

Animal: Cat
Names: Captain Sharpclaw, Agent Fluffball, The Incredible Feline
Animal: Dog
Names: Ruff the Protector, Wonder Canine, Sir Barks-a-Lot
Animal: {}
Names:""".format(
        animal.capitalize()
    )
```

On line 14 in app.py, you’ll see the code that sends the actual API request. As mentioned above, it uses the completions endpoint with a temperature of 0.6.

在 app.py 的第 14 行,您会看到发送实际 API 请求的代码。如前所述,它使用补全(completions)端点,温度为 0.6。

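```python
import os

import openai  # legacy openai-python 0.x; openai.Completion was removed in 1.0

openai.api_key = os.getenv("OPENAI_API_KEY")

# Inside the Flask view, where animal = request.form["animal"]:
response = openai.Completion.create(
    model="text-davinci-003",
    prompt=generate_prompt(animal),
    temperature=0.6,
)
# The generated names are in response.choices[0].text
```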
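The completion comes back as a JSON object whose `choices` list holds the generated text, and the model often starts its answer with a space or newline. The sketch below pulls out the first choice from the raw JSON shape; `extract_completion_text` is a hypothetical helper, and `example_response` is an illustrative payload with placeholder text, not real model output.

```python
from typing import Any, Dict


def extract_completion_text(response: Dict[str, Any]) -> str:
    """Return the first choice's text, trimmed of leading/trailing whitespace."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["text"].strip()


# Illustrative payload in the completions response shape; the text is a placeholder.
example_response = {
    "object": "text_completion",
    "model": "text-davinci-003",
    "choices": [
        {"text": " Name A, Name B, Name C", "index": 0, "finish_reason": "stop"}
    ],
}
print(extract_completion_text(example_response))  # → Name A, Name B, Name C
```

Stripping whitespace is a design choice for display; keep the raw text if you need to append it back onto the prompt verbatim.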

And that’s it! You should now have a full understanding of how your (superhero) pet name generator uses the OpenAI API!

就是这样!现在您应该已经完全了解您的(超级英雄)宠物名字生成器是如何使用 OpenAI API 的了!

Closing

These concepts and techniques will go a long way in helping you build your own application. That said, this simple example demonstrates just a sliver of what’s possible! The completions endpoint is flexible enough to solve virtually any language processing task, including content generation, summarization, semantic search, topic tagging, sentiment analysis, and so much more.

这些概念和技术将在很大程度上帮助您构建自己的应用程序。话虽如此,这个简单的示例只展示了可能性中的一小部分!补全端点非常灵活,几乎可以解决任何语言处理任务,包括内容生成、摘要、语义搜索、主题标记、情感分析等等。

One limitation to keep in mind is that, for most models, a single API request can only process up to 2,048 tokens (roughly 1,500 words) between your prompt and completion.

需要记住的一个限制是:对于大多数模型,单个 API 请求中提示和补全合计最多只能处理 2,048 个标记(大约 1,500 个单词)。

DEEP DIVE 深入了解

Models and pricing 模型和定价

We offer a spectrum of models with different capabilities and price points. In this tutorial, we used text-davinci-003, our most capable natural language model at the time of writing. (OpenAI has since retired text-davinci-003; gpt-3.5-turbo-instruct is the suggested replacement on the completions endpoint.) We recommend using the most capable model while experimenting since it will yield the best results. Once you’ve got things working, you can see if the other models can produce the same results with lower latency and costs.

我们提供一系列具有不同能力和价位的模型。在本教程中,我们使用了 text-davinci-003,这是撰写本文时我们能力最强的自然语言模型。(OpenAI 此后已停用 text-davinci-003,建议在补全端点上改用 gpt-3.5-turbo-instruct。)我们建议在实验时使用能力最强的模型,因为它会产生最好的结果。一旦应用跑通,您就可以看看其他模型能否以更低的延迟和成本得到同样的结果。

The total number of tokens processed in a single request (both prompt and completion) can’t exceed the model's maximum context length. For most models, this is 2,048 tokens or about 1,500 words; text-davinci-003, used in this tutorial, allows up to 4,097 tokens. As a rough rule of thumb, 1 token is approximately 4 characters or 0.75 words for English text.

单个请求中处理的标记总数(提示和补全合计)不能超过模型的最大上下文长度。对于大多数模型,这一上限是 2,048 个标记,约合 1,500 个单词;本教程使用的 text-davinci-003 上限为 4,097 个标记。根据粗略的经验,对于英语文本,1 个标记大约相当于 4 个字符或 0.75 个单词。
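The rule of thumb above is enough for a quick pre-flight check: estimate the prompt's tokens from its length, add the tokens you want to reserve for the completion, and compare against the context limit. This is a rough English-only estimate under the ~4 characters per token assumption; an exact count requires the model's actual tokenizer. `estimate_tokens` and `fits_in_context` are hypothetical helpers.

```python
import math

# Limit for "most models" per this tutorial; use your model's actual limit.
CONTEXT_LIMIT = 2048


def estimate_tokens(text: str) -> int:
    """Rough estimate from the ~4 characters per token rule of thumb (English text)."""
    return math.ceil(len(text) / 4)


def fits_in_context(prompt: str, max_completion_tokens: int, limit: int = CONTEXT_LIMIT) -> bool:
    """Prompt tokens plus the tokens reserved for the completion must not exceed the limit."""
    return estimate_tokens(prompt) + max_completion_tokens <= limit


print(estimate_tokens("Suggest one name for a horse."))  # → 8 (29 characters / 4, rounded up)
```

Rounding up keeps the estimate on the conservative side; for prompts close to the limit, count tokens exactly instead.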

Pricing is pay-as-you-go per 1,000 tokens, with $5 in free credit that can be used during your first 3 months.

定价按每 1,000 个标记现收现付,新用户可在前 3 个月内使用 5 美元的免费额度。
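Because billing is per 1,000 tokens, a request's cost is simple arithmetic on its prompt and completion token counts. The sketch below takes the rate as a parameter rather than quoting one, since prices change; the rates in the usage line are hypothetical. It accepts separate input and output rates because some newer models price them differently; for a single flat rate, leave `output_price_per_1k` unset.

```python
from typing import Optional


def estimate_cost_usd(prompt_tokens: int,
                      completion_tokens: int,
                      input_price_per_1k: float,
                      output_price_per_1k: Optional[float] = None) -> float:
    """Pay-as-you-go cost in USD: tokens / 1000 * rate, summed over prompt and completion."""
    out_rate = input_price_per_1k if output_price_per_1k is None else output_price_per_1k
    return prompt_tokens / 1000 * input_price_per_1k + completion_tokens / 1000 * out_rate


# HYPOTHETICAL rate of $0.02 per 1,000 tokens; check OpenAI's pricing page for real numbers.
print(round(estimate_cost_usd(1500, 500, input_price_per_1k=0.02), 4))  # → 0.04
```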

For more advanced tasks, you might find yourself wishing you could provide more examples or context than you can fit in a single prompt. The fine-tuning API is a great option for more advanced tasks like this. Fine-tuning allows you to provide hundreds or even thousands of examples to customize a model for your specific use case.

对于更高级的任务,您可能会希望提供比单个提示所能容纳的更多示例或上下文。对于这类高级任务,微调(fine-tuning)API 是一个很好的选择。微调允许您提供数百甚至数千个示例,为您的特定用例定制模型。
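In the legacy fine-tuning workflow for completion models of this era, training data was a JSON Lines file with one `{"prompt": ..., "completion": ...}` object per line. The sketch below converts the pet-name examples into that shape. The fixed prompt separator, the leading space in each completion, and the trailing newline used as a stop marker follow conventions from OpenAI's legacy data-preparation guidance, but treat them as assumptions and check the current fine-tuning documentation, since newer models expect a chat-message format instead. `PAIRS` and `to_jsonl` are hypothetical names.

```python
import json
from typing import List, Tuple

# Example pairs; a real fine-tune would use hundreds or thousands of them.
PAIRS: List[Tuple[str, str]] = [
    ("Cat", "Captain Sharpclaw, Agent Fluffball, The Incredible Feline"),
    ("Dog", "Ruff the Protector, Wonder Canine, Sir Barks-a-Lot"),
]

SEPARATOR = "\n\n###\n\n"  # fixed marker telling the model where the prompt ends
STOP = "\n"                # completion terminator, later passed as a stop sequence


def to_jsonl(pairs: List[Tuple[str, str]]) -> str:
    """Serialize (animal, names) pairs as legacy prompt/completion JSON Lines."""
    records = [
        {"prompt": f"Animal: {animal}{SEPARATOR}", "completion": f" {names}{STOP}"}
        for animal, names in pairs
    ]
    # json.dumps escapes the embedded newlines, so each record stays on one line.
    return "\n".join(json.dumps(r) for r in records) + "\n"


print(to_jsonl(PAIRS), end="")
```

Writing the output to a `.jsonl` file gives a training file in the legacy layout; the same separator must then end every prompt you send to the fine-tuned model.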

Next steps 后续步骤

To get inspired and learn more about designing prompts for different tasks:

要获得灵感并了解有关为不同任务设计提示的详细信息,请执行以下操作:

- Read our completion guide. 阅读我们的补全指南。

- Explore our library of example prompts. 探索我们的示例提示库。

- Start experimenting in the Playground. 开始在 Playground 中进行实验。

- Keep our usage policies in mind as you start building. 开始构建时请牢记我们的使用政策。

