normalization, standardization and regularization

Normalization

Normalization refers to rescaling real valued numeric attributes into the range 0 and 1. It is useful to scale the input attributes for a model that relies on the magnitude of values, such as distance measures used in k-nearest neighbors and in the preparation of coefficients in regression.

normalization 是将数据的每个样本(向量)变换为单位范数的向量,各样本之间是相互独立的.其实际上,是对向量中的每个分量值除以正规化因子.常用的正规化因子有 L1, L2 和 Max.假设,对长度为 n 的向量,其正规化因子 z 的计算公式,如下所示:

注意:Max 与无穷范数  不同,无穷范数

不同,无穷范数 是需要先对向量的所有分量取绝对值,然后取其中的最大值;而 Max 是向量中的最大分量值,不需要取绝对值的操作.

是需要先对向量的所有分量取绝对值,然后取其中的最大值;而 Max 是向量中的最大分量值,不需要取绝对值的操作.

补充:一阶范数也称为曼哈顿距离(Manhanttan distance)或街区距离;二阶范数也称为欧式距离(Euclidean distance)

#!/usr/bin/env python

# -*- coding: utf8 -*-

# author: klchang

# Use sklearn.preprocessing.Normalizer class to normalize data.

from __future__ import print_function

import numpy as np

from sklearn.preprocessing import Normalizer x = np.array([1, 2, 3, 4], dtype='float32').reshape(1,-1) print("Before normalization: ", x) options = ['l1', 'l2', 'max']

for opt in options:

norm_x = Normalizer(norm=opt).fit_transform(x)

print("After %s normalization: " % opt.capitalize(), norm_x)

#!/usr/bin/env python

# -*- coding: utf8 -*-

# author: klchang

# Use sklearn.preprocessing.normalize function to normalize data. from __future__ import print_function

import numpy as np

from sklearn.preprocessing import normalize x = np.array([1, 2, 3, 4], dtype='float32').reshape(1,-1) print("Before normalization: ", x) options = ['l1', 'l2', 'max']

for opt in options:

norm_x = normalize(x, norm=opt)

print("After %s normalization: " % opt.capitalize(), norm_x)

Standardizaton

Standardization refers to shifting the distribution of each attribute to have a mean of zero and a standard deviation of one (unit variance). It is useful to standardize attributes for a model that relies on the distribution of attributes such as Gaussian processes.

# Standardize the data attributes for the Iris dataset.

from sklearn.datasets import load_iris

from sklearn import preprocessing

# load the Iris dataset

iris = load_iris()

print(iris.data.shape)

# separate the data and target attributes

X = iris.data

y = iris.target

# standardize the data attributes

standardized_X = preprocessing.scale(X)

from 机器学习里的黑色艺术:normalization, standardization, regularization;

第一部分:大的层面上讲

1. normalization一般是把数据限定在需要的范围,比如一般都是【0,1】,从而消除了数据量纲对建模的影响。standardization 一般是指将数据正态化,使平均值0方差为1. 因此normalization和standardization 是针对数据而言的,消除一些数值差异带来的特种重要性偏见。经过归一化的数据,能加快训练速度,促进算法的收敛。

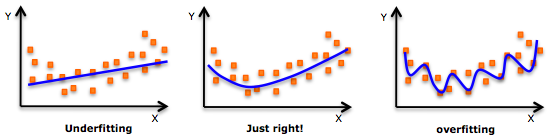

2.而regularization是在cost function里面加惩罚项,增加建模的模糊性,从而把捕捉到的趋势从局部细微趋势,调整到整体大概趋势。虽然一定程度上的放宽了建模要求,但是能有效防止over-fitting的问题(如图,来源于网上),增加模型准确性。因此,regularization是针对模型而言。

这三个term说的是不同的事情。

第二部分:方法

总结下normalization, standardization,和regularization的方法。

Normalization 和 Standardization

(1).最大最小值normalization: x'=(x-min)/(max-min). 这种方法的本质还是线性变换,简单直接。缺点就是新数据的加入,可能会因数值范围的扩大需要重新regularization。

(2). 对数归一化:x'=log10(x)/log10(xmax)或者log10(x)。推荐第一种,除以最大值,这样使数据落到【0,1】区间

(3).反正切归一化。x'=2atan(x)/pi。能把数据投影到【-1,1】区间。

(4).zero mean normalization归一化,也是standardization. x'=(x-mean)/std.

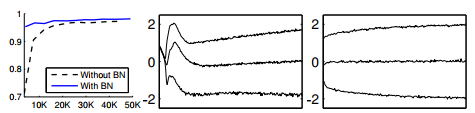

有无normalization,模型的学习曲线是不一样的,甚至会收敛结果不一样。比如在深度学习中,batch normalization有无,学习曲线对比是这样的:图一 蓝色线有batch normalization (BN),黑色虚线是没有BN. 黑色线放大,是图二的样子,蓝色线放大是图三的样子。reference:Batch Normalization: Accelerating Deep Network Training by Reducing

Internal Covariate Shift, Sergey Ioffe.

Regularization 方法

一般形式,应该是 min , R是regularization term。一般方法有

- L1 regularization: 对整个绝对值只和进行惩罚。

- L2 regularization:对系数平方和进行惩罚。

- Elastic-net 混合regularization。

from Differences between normalization, standardization and regularization;

Normalization

Normalization usually rescales features to [0,1][0,1].1 That is,

x′=x−min(x)max(x)−min(x)x′=x−min(x)max(x)−min(x)

It will be useful when we are sure enough that there are no anomalies (i.e. outliers) with extremely large or small values. For example, in a recommender system, the ratings made by users are limited to a small finite set like {1,2,3,4,5}{1,2,3,4,5}.

In some situations, we may prefer to map data to a range like [−1,1][−1,1] with zero-mean.2 Then we should choose mean normalization.3

x′=x−mean(x)max(x)−min(x)x′=x−mean(x)max(x)−min(x)

In this way, it will be more convenient for us to use other techniques like matrix factorization.

Standardization

Standardization is widely used as a preprocessing step in many learning algorithms to rescale the features to zero-mean and unit-variance.3

x′=x−μσx′=x−μσ

Regularization

Different from the feature scaling techniques mentioned above, regularization is intended to solve the overfitting problem. By adding an extra part增加惩罚项 to the loss function, the parameters in learning algorithms are more likely to converge to smaller values, which can significantly reduce overfitting.

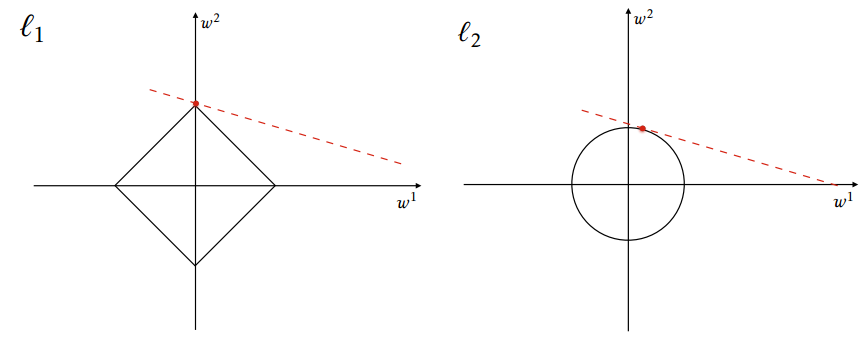

There are mainly two basic types of regularization: L1-norm (lasso) and L2-norm (ridge regression).4

L1-norm5

The original loss function is denoted by f(x)f(x), and the new one is F(x)F(x).

F(x)=f(x)+λ∥x∥1F(x)=f(x)+λ‖x‖1

where

∥x∥p=p ⎷n∑i=1|xi|p‖x‖p=∑i=1n|xi|pp

L1 regularization is better when we want to train a sparse model, since the absolute value function is not differentiable at 0.

L2-norm56

F(x)=f(x)+λ∥x∥22F(x)=f(x)+λ‖x‖22

L2 regularization is preferred in ill-posed problems for smoothing.

Here is a comparison between L1 and L2 regularizations.

From https://en.wikipedia.org/wiki/Regularization_(mathematics)

References

https://stats.stackexchange.com/a/10298 ↩

https://www.quora.com/What-is-the-difference-between-normalization-standardization-and-regularization-for-data/answer/Enzo-Tagliazucchi?share=c48b6752&srid=51VPj ↩

https://en.wikipedia.org/wiki/Regularization_%28mathematics%29 ↩

https://www.quora.com/What-is-the-difference-between-L1-and-L2-regularization-How-does-it-solve-the-problem-of-overfitting-Which-regularizer-to-use-and-when/answer/Kenneth-Tran?share=400c336d&srid=51VPj ↩ ↩2

https://en.wikipedia.org/wiki/Ridge_regression ↩

normalization, standardization and regularization的更多相关文章

- zz先睹为快:神经网络顶会ICLR 2019论文热点分析

先睹为快:神经网络顶会ICLR 2019论文热点分析 - lqfarmer的文章 - 知乎 https://zhuanlan.zhihu.com/p/53011934 作者:lqfarmer链接:ht ...

- 数据预处理中归一化(Normalization)与损失函数中正则化(Regularization)解惑

背景:数据挖掘/机器学习中的术语较多,而且我的知识有限.之前一直疑惑正则这个概念.所以写了篇博文梳理下 摘要: 1.正则化(Regularization) 1.1 正则化的目的 1.2 正则化的L1范 ...

- 学习笔记57—归一化 (Normalization)、标准化 (Standardization)和中心化/零均值化 (Zero-centered)

1 概念 归一化:1)把数据变成(0,1)或者(1,1)之间的小数.主要是为了数据处理方便提出来的,把数据映射到0-1范围之内处理,更加便捷快速.2)把有量纲表达式变成无量纲表达式,便于不同单位或 ...

- 归一化 (Normalization)、标准化 (Standardization)和中心化/零均值化 (Zero-centered)

博主学习的源头,感谢!https://www.jianshu.com/p/95a8f035c86c 归一化 (Normalization).标准化 (Standardization)和中心化/零均值化 ...

- 【转】Standardization(标准化)和Normalization(归一化)的区别

Standardization(标准化)和Normalization(归一化)的区别 https://blog.csdn.net/Dhuang159/article/details/83627146 ...

- Coursera Deep Learning 2 Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization - week3, Hyperparameter tuning, Batch Normalization and Programming Frameworks

Tuning process 下图中的需要tune的parameter的先后顺序, 红色>黄色>紫色,其他基本不会tune. 先讲到怎么选hyperparameter, 需要随机选取(sa ...

- 课程二(Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization),第三周(Hyperparameter tuning, Batch Normalization and Programming Frameworks) —— 2.Programming assignments

Tensorflow Welcome to the Tensorflow Tutorial! In this notebook you will learn all the basics of Ten ...

- 归一化(Normalization)和标准化(Standardization)

归一化和标准化是机器学习和深度学习中经常使用两种feature scaling的方式,这里主要讲述以下这两种feature scaling的方式如何计算,以及一般在什么情况下使用. 归一化的计算方式: ...

- 归一化方法 Normalization Method

1. 概要 数据预处理在众多深度学习算法中都起着重要作用,实际情况中,将数据做归一化和白化处理后,很多算法能够发挥最佳效果.然而除非对这些算法有丰富的使用经验,否则预处理的精确参数并非显而易见. 2. ...

随机推荐

- [HNOI2016]网络 [树链剖分,可删除堆]

考虑在 |不在| 这条链上的所有点上放上一个 \(x\),删除也是,然后用可删除堆就随便草掉了. // powered by c++11 // by Isaunoya #pragma GCC opti ...

- JMeter-做性能测试从何开始

JMeter-性能测试 参考文档:https://jmeter.apache.org/usermanual/boss.html 一.问题 1.预计的平均用户数是多少(正常负载)? 2.预计的高峰用户数 ...

- Spark学习之路 (十一)SparkCore的调优之Spark内存模型[转]

概述 Spark 作为一个基于内存的分布式计算引擎,其内存管理模块在整个系统中扮演着非常重要的角色.理解 Spark 内存管理的基本原理,有助于更好地开发 Spark 应用程序和进行性能调优.本文旨在 ...

- 《C++面向对象程序设计》第6章课后编程题2拓展

设计一个程序,其中有3个类CBank.BBank.GBank,分别为中国银行类,工商银行类和农业银行类.每个类都包含一个私有数据成员balance用于存放储户在该行的存款数,另有一个友元函数Total ...

- BIOS和DOS中断大全

DOS中断: 1.字符功能调用类(Character-Oriented Function)01H.07H和08H —从标准输入设备输入字符02H —字符输出03H —辅助设备的输入04H —辅助设备的 ...

- youhua

- jQuery---突出展示案例

突出展示案例 <!DOCTYPE html> <html> <head lang="en"> <meta charset="UT ...

- 手写数字识别——利用keras高层API快速搭建并优化网络模型

在<手写数字识别——手动搭建全连接层>一文中,我们通过机器学习的基本公式构建出了一个网络模型,其实现过程毫无疑问是过于复杂了——不得不考虑诸如数据类型匹配.梯度计算.准确度的统计等问题,但 ...

- 【巨杉数据库SequoiaDB】助力金融科技升级,巨杉数据库闪耀金融展

11月4日,以“科技助创新 开放促改革 发展惠民生”为主题的2019中国国际金融展和深圳国际金融博览会在深圳会展中心盛大开幕. 中国人民银行党委委员.副行长范一飞,深圳市人民政府副市长.党组成员艾学峰 ...

- idea软件操作

1.快捷键: 1.1.格式化代码:crtl+alt+L 1.2.一些构造啊,setter/getter等方法:alt+insert 1.3.crtl + f 搜素当前页面