jenkins-gitlab-harbor-ceph: CI/CD on Kubernetes (Part 4)

Previously in this series

Integrating Jenkins with GitLab to pull code automatically: https://www.cnblogs.com/zisefeizhu/p/12548662.html

Integrating Jenkins with Kubernetes so that jenkins-slave agents are deployed and destroyed automatically: https://www.cnblogs.com/zisefeizhu/p/12556013.html

Building a Tomcat pod from scratch: https://www.cnblogs.com/zisefeizhu/p/12563272.html

集群状态检查

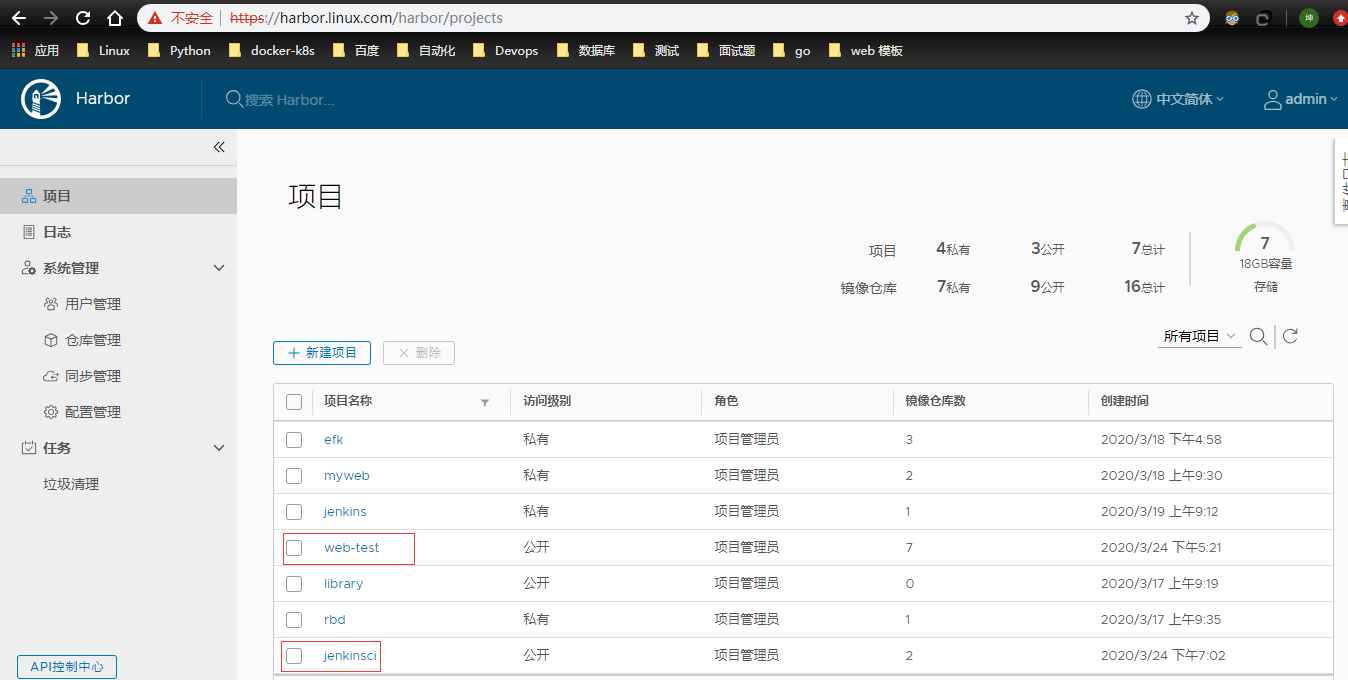

harbor状态

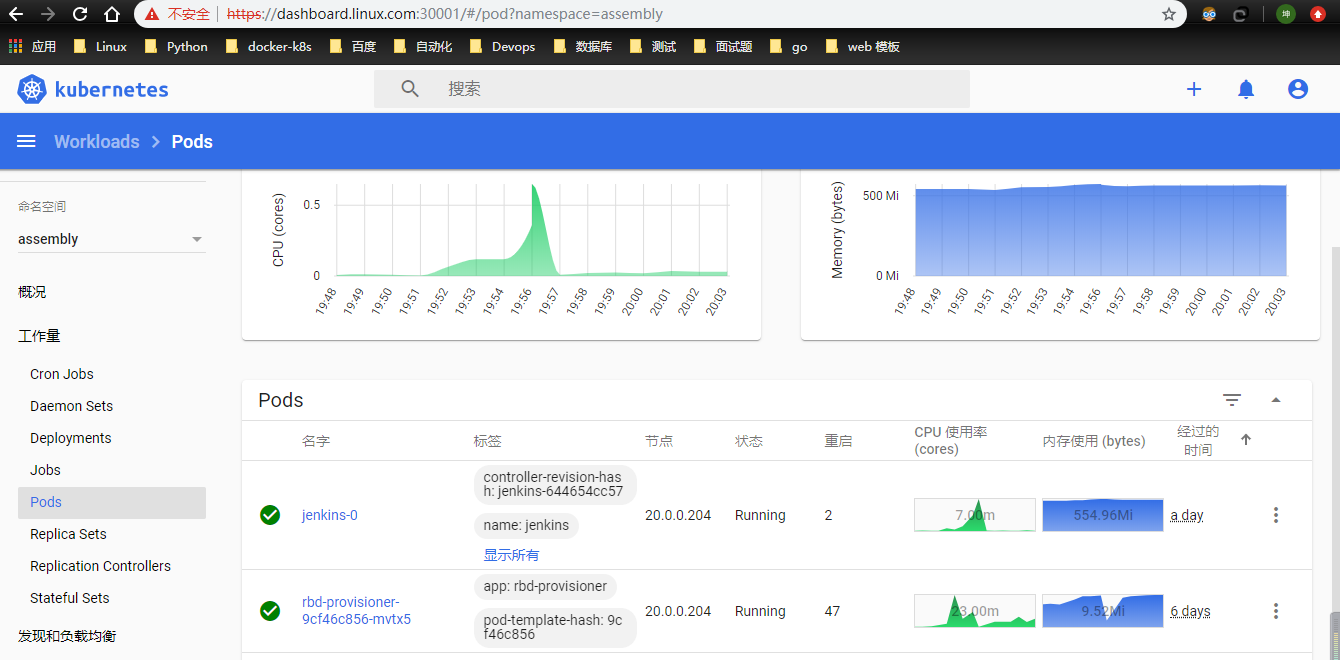

kubernetes状态

ceph状态

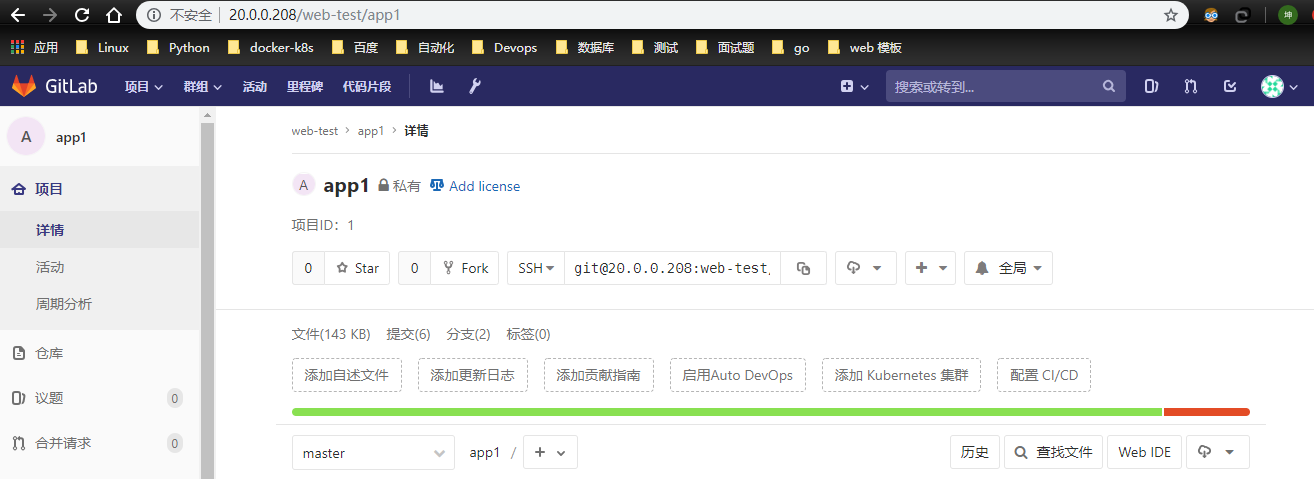

gitlab状态

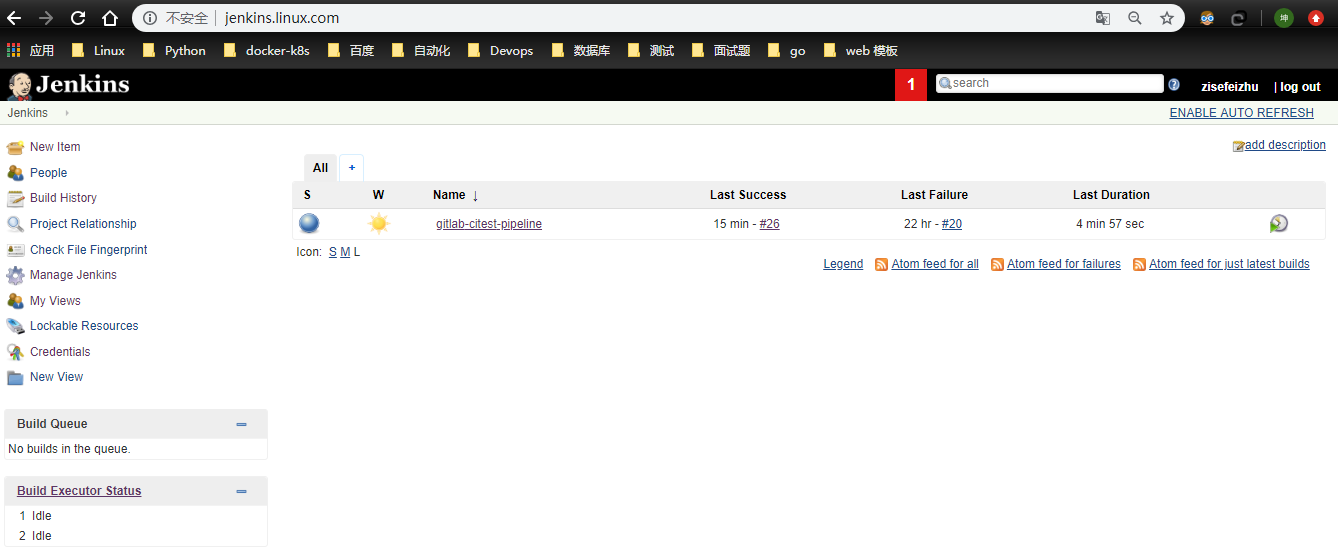

jenkins状态

所需集群服务状态检查完毕
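The same checks can be repeated from a shell before each test run. This is a minimal sketch, assuming kubectl and the ceph CLI are already configured on the host where it runs; the Harbor (20.0.0.207:443) and GitLab (20.0.0.208) URLs are the addresses used later in this post, and the helper names `preflight` and `http_code` are hypothetical.

```shell
#!/usr/bin/env bash
# Pre-flight checks before triggering the pipeline (sketch).
# Assumption: kubectl and ceph are configured for this cluster on this host.
HARBOR_URL="${HARBOR_URL:-https://20.0.0.207:443}"
GITLAB_URL="${GITLAB_URL:-http://20.0.0.208}"

# Print the HTTP status code returned by a URL ("000" if it is unreachable).
http_code() {
  curl -sk -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

preflight() {
  echo "== kubernetes nodes"
  kubectl get nodes -o wide
  echo "== pods in namespace assembly (jenkins, rbd-provisioner)"
  kubectl get pods -n assembly
  echo "== ceph"
  ceph health
  echo "== harbor: $(http_code "$HARBOR_URL")"
  echo "== gitlab: $(http_code "$GITLAB_URL")"
}
```

Run it with `preflight`; a healthy setup shows Ready nodes, Running pods, `HEALTH_OK` from Ceph, and a 2xx/3xx status for Harbor and GitLab.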

Push the project to GitLab

```
[root@bs-k8s-gitlab app1]# ll
total 52
-rw-r--r-- 1 root root   148 Mar 24 21:23 build-command.sh
-rw-r--r-- 1 root root 23611 Mar 24 21:23 catalina.sh
-rw-r--r-- 1 root root   402 Mar 24 21:23 filebeat.yml
drwxr-xr-x 2 root root    24 Mar 24 21:23 myapp
-rw-r--r-- 1 root root   364 Mar 24 21:23 run_tomcat.sh
-rw-r--r-- 1 root root  6460 Mar 24 21:23 server.xml
-rw-r--r-- 1 root root  1015 Mar 24 21:23 tomcat-app1.yaml
[root@bs-k8s-gitlab app1]# git add .
[root@bs-k8s-gitlab app1]# git commit -m "Testing gitlab and jenkins and kubernetes"
[root@bs-k8s-gitlab app1]# git push origin master
```

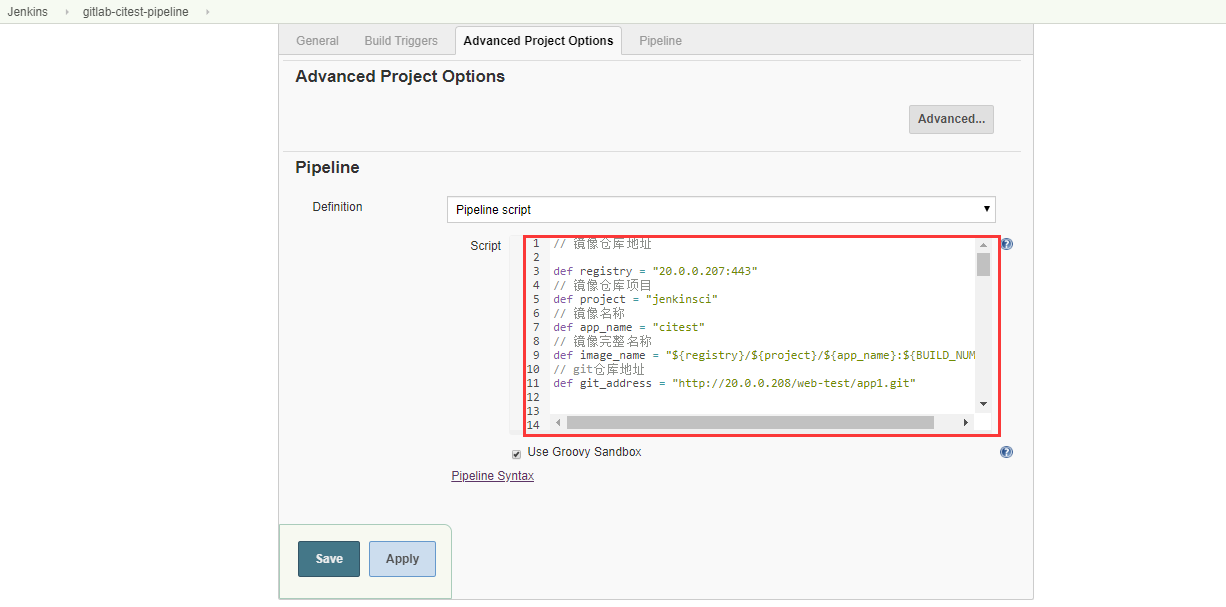

jenkins配置

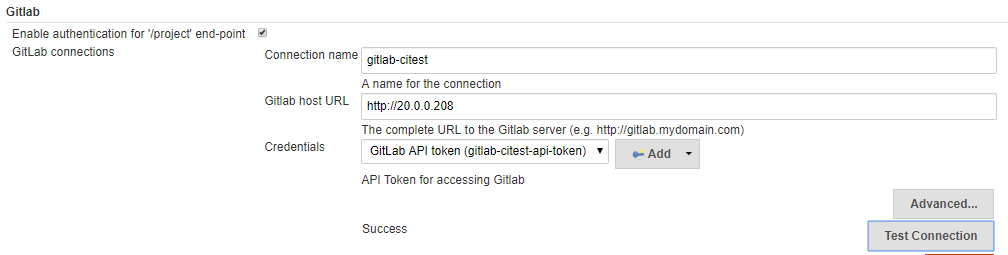

configure system配置

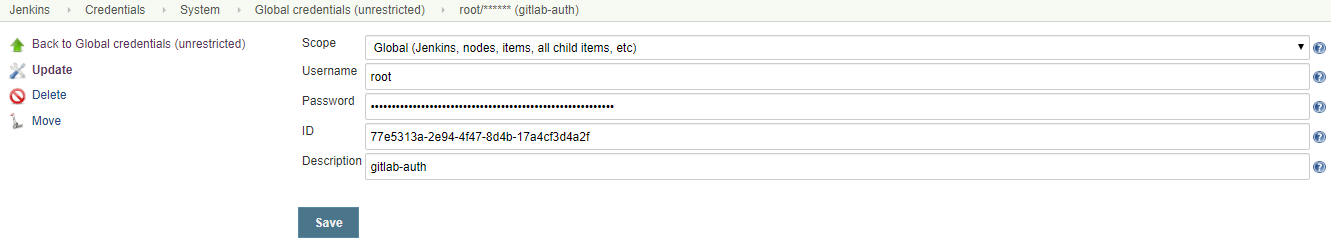

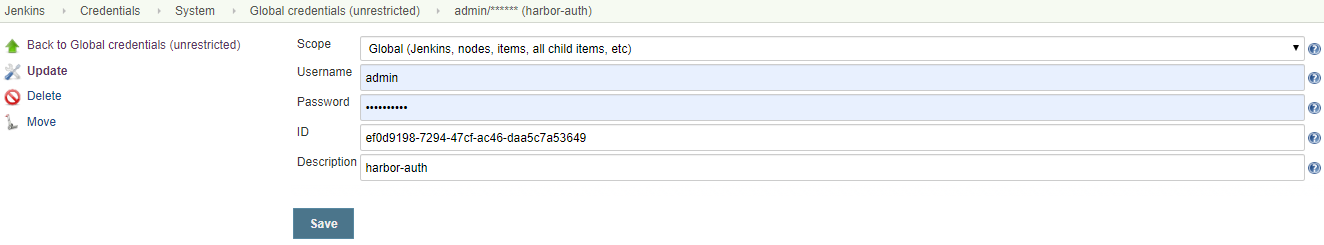

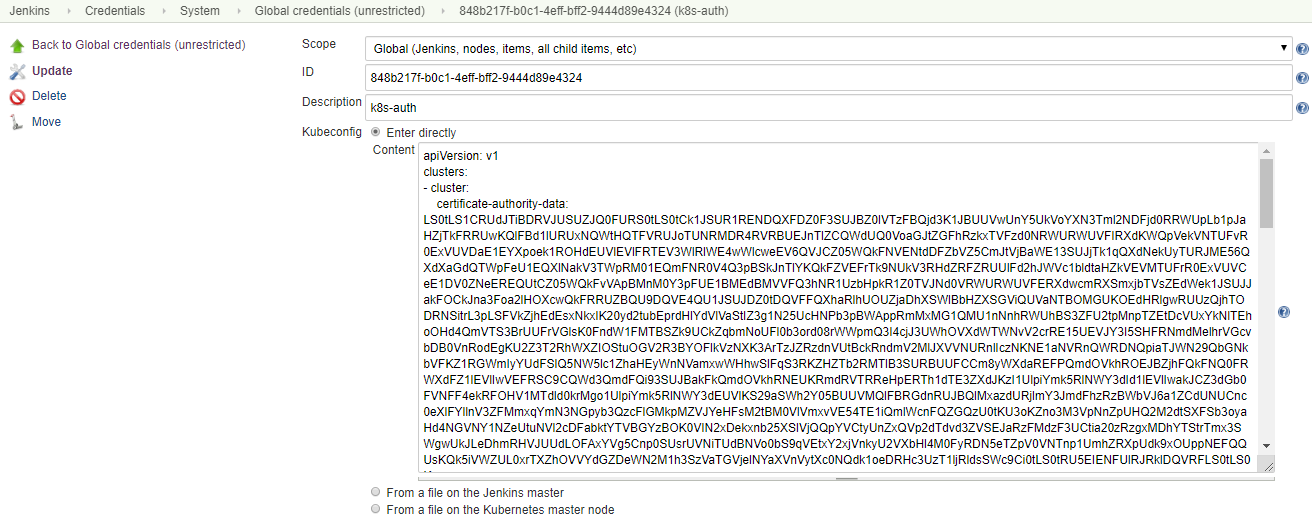

credentials配置

```yaml
# cat /root/.kube/config
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <base64-encoded CA certificate, redacted>
    server: https://20.0.0.201:6443
  name: cluster1
contexts:
- context:
    cluster: cluster1
    user: admin
  name: cluster1
current-context: cluster1
kind: Config
preferences: {}
users:
- name: admin
  user:
    client-certificate-data: <base64-encoded client certificate, redacted>
    client-key-data: <base64-encoded client private key, redacted>
    token: <admin-user service account token, redacted>
```
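Before uploading a kubeconfig as a credential, it helps to confirm which API server and context it points at. Where kubectl is available, `kubectl --kubeconfig /root/.kube/config config view --minify` shows this; the sketch below does a rougher version with awk only, assuming the usual `key: value` layout that kubectl writes. The helper name `kubeconfig_summary` is hypothetical.

```shell
#!/usr/bin/env bash
# Summarize a kubeconfig (API server, current context, auth methods)
# without kubectl. With several clusters or users, every entry is printed.
kubeconfig_summary() {
  local file="${1:-$HOME/.kube/config}"
  awk '
    $1 == "server:"                  { print "server:  " $2 }
    $1 == "current-context:"         { print "context: " $2 }
    $1 == "client-certificate-data:" { print "auth:    client certificate" }
    $1 == "token:"                   { print "auth:    bearer token" }
  ' "$file"
}
```

For the file above, `kubeconfig_summary /root/.kube/config` should report the server https://20.0.0.201:6443, the context cluster1, and both a client certificate and a bearer token for the admin user.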

Pipeline script:

```groovy
// Image registry address
def registry = "20.0.0.207:443"
// Registry project
def project = "jenkinsci"
// Image name
def app_name = "citest"
// Full image name, tagged with the Jenkins build number
def image_name = "${registry}/${project}/${app_name}:${BUILD_NUMBER}"
// Git repository URL
def git_address = "http://20.0.0.208/web-test/app1.git"
// Credential IDs: Harbor username/password and GitLab
def harbor_auth = "ef0d9198-7294-47cf-ac46-daa5c7a53649"
def gitlab_auth = "77e5313a-2e94-4f47-8d4b-17a4cf3d4a2f"
// Kubernetes (kubeconfig) credential ID
def k8s_auth = "848b217f-b0c1-4eff-bff2-9444d89e4324"
// Name of the image pull Secret for Harbor
def harbor_registry_secret = "k8s-harbor-login"
// NodePort exposed after deployment. 33333 is outside the default 30000-32767
// range, so the API server's --service-node-port-range must include it.
def nodePort = "33333"

podTemplate(label: 'jenkins-agent', cloud: 'kubernetes',
    containers: [
        containerTemplate(
            name: 'jnlp',
            image: "${registry}/jenkinsci/jenkins-slave-jdk:1.8"
        )
    ],
    volumes: [
        // Let the agent use the node's Docker daemon and docker CLI
        hostPathVolume(mountPath: '/var/run/docker.sock', hostPath: '/var/run/docker.sock'),
        hostPathVolume(mountPath: '/usr/bin/docker', hostPath: '/usr/bin/docker')
    ]) {
    node("jenkins-agent") {
        stage('Pull code') { // for display purposes
            checkout([$class: 'GitSCM', branches: [[name: '*/master']],
                      userRemoteConfigs: [[credentialsId: "${gitlab_auth}", url: "${git_address}"]]])
            sh "ls"
        }

        // Compile stage: placeholder, not used yet
        // stage('Compile code') {
        //     echo 'ok'
        // }

        stage('Build image') {
            withCredentials([usernamePassword(credentialsId: "${harbor_auth}", passwordVariable: 'password', usernameVariable: 'username')]) {
                // username/password are escaped (\$) so the shell expands them, not Groovy;
                // the password goes to docker login on stdin instead of the command line.
                sh """
                    echo '
#tomcat web1
FROM harbor.linux.com/web-test/tomcat-base:v8.0.32
ADD catalina.sh /apps/tomcat/bin/catalina.sh
ADD server.xml /apps/tomcat/conf/server.xml
ADD myapp/* /data/tomcat/webapps/myapp/
#ADD app1.tar.gz /data/tomcat/webapps/myapp/
ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh
ADD filebeat.yml /etc/filebeat/filebeat.yml
RUN chown -R tomcat.tomcat /data/ /apps/ && chmod a+x /apps/tomcat/bin/*.sh
EXPOSE 8080 8443
CMD ["/apps/tomcat/bin/run_tomcat.sh"]
' > Dockerfile
                    docker build -t ${image_name} .
                    echo "\$password" | docker login -u "\$username" --password-stdin ${registry}
                    docker push ${image_name}
                """
            }
            echo 'ok'
        }

        stage('Deploy to K8s') {
            // Fill the $IMAGE_NAME, $SECRET_NAME and $NODE_PORT placeholders in the manifest
            sh """
                sed -i 's#\$IMAGE_NAME#${image_name}#' tomcat-app1.yaml
                sed -i 's#\$SECRET_NAME#${harbor_registry_secret}#' tomcat-app1.yaml
                sed -i 's#\$NODE_PORT#${nodePort}#' tomcat-app1.yaml
            """
            kubernetesDeploy configs: 'tomcat-app1.yaml', kubeconfigId: "${k8s_auth}"
        }
    }
}
```
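The "Deploy to K8s" stage is plain placeholder substitution, which can be tried outside Jenkins. The real tomcat-app1.yaml is not shown in this post, so the template below is a hypothetical reconstruction from the Deployment and Service that kubernetesDeploy prints in the console output; `render_manifest` is likewise a hypothetical helper. Unlike the pipeline, it writes to stdout instead of using `sed -i`.

```shell
#!/usr/bin/env bash
# Render the manifest the way the "Deploy to K8s" stage does (sketch).
# Values must not contain '#', '&' or '\' because they go into a sed replacement.
render_manifest() {
  local template="$1" image="$2" secret="$3" node_port="$4"
  # '\$' makes sed match a literal dollar sign in the placeholders.
  sed -e 's#\$IMAGE_NAME#'"$image"'#' \
      -e 's#\$SECRET_NAME#'"$secret"'#' \
      -e 's#\$NODE_PORT#'"$node_port"'#' \
      "$template"
}

# Hypothetical template, reconstructed from the console output.
template="$(mktemp)"
cat > "$template" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-test-tomcat1-deployment
  namespace: assembly
  labels:
    app: tomcat-app1-test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat-app1-test
  template:
    metadata:
      labels:
        app: tomcat-app1-test
    spec:
      imagePullSecrets:
      - name: $SECRET_NAME
      containers:
      - name: web-test-tomcat1-spec
        image: $IMAGE_NAME
        imagePullPolicy: Always
        ports:
        - name: http
          containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: web-test-tomcat1-spec
  namespace: assembly
  labels:
    app: tomcat-app1-test
spec:
  type: NodePort
  selector:
    app: tomcat-app1-test
  ports:
  - name: http
    port: 80
    targetPort: 8080
    nodePort: $NODE_PORT
EOF

# Same values the pipeline used for build #27.
render_manifest "$template" "20.0.0.207:443/jenkinsci/citest:27" "k8s-harbor-login" "33333"
```

Editing the file in place is safe in the pipeline because each agent pod starts from a fresh clone in an emptyDir workspace, so the placeholders are always present when the stage runs.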

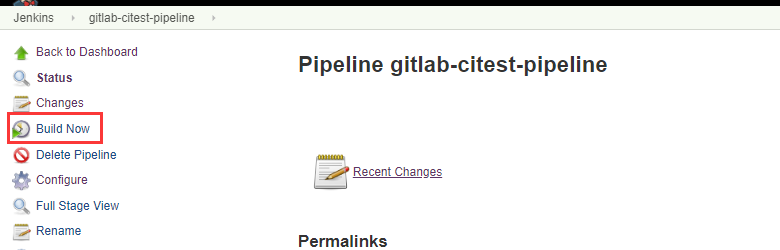

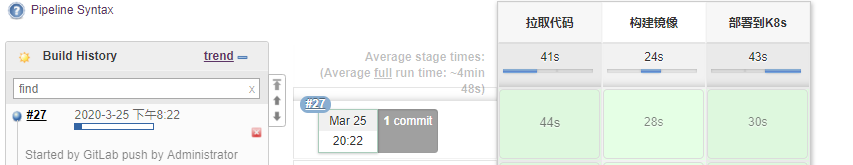

One-click deployment

```
# kubectl get pods -n assembly -w
NAME                                          READY   STATUS              RESTARTS   AGE
jenkins-0                                     1/1     Running             2          27h
rbd-provisioner-9cf46c856-mvtx5               1/1     Running             47         6d9h
jenkins-agent-jrfgc-c2mfb                     0/1     Pending             0          0s
jenkins-agent-jrfgc-c2mfb                     0/1     Pending             0          0s
jenkins-agent-jrfgc-c2mfb                     0/1     ContainerCreating   0          1s
jenkins-agent-jrfgc-c2mfb                     1/1     Running             0          4s
web-test-tomcat1-deployment-8c67d47df-565fw   0/1     Pending             0          0s
web-test-tomcat1-deployment-8c67d47df-565fw   0/1     Pending             0          0s
web-test-tomcat1-deployment-8c67d47df-565fw   0/1     ContainerCreating   0          0s
jenkins-agent-jrfgc-c2mfb                     1/1     Terminating         0          2m16s
web-test-tomcat1-deployment-8c67d47df-565fw   1/1     Running             0          6s
jenkins-agent-jrfgc-c2mfb                     0/1     Terminating         0          2m25s
jenkins-agent-jrfgc-c2mfb                     0/1     Terminating         0          2m26s
jenkins-agent-jrfgc-c2mfb                     0/1     Terminating         0          2m26s
```
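Besides watching the pods, the result can be checked explicitly once the agent pod is gone. A minimal sketch, assuming kubectl points at the same cluster; `verify_deploy` is a hypothetical helper, APP_NODE_IP defaults to the API server address from the kubeconfig (assumed to also be a node running kube-proxy), and the `/myapp/` path is assumed from where the Dockerfile copies the application.

```shell
#!/usr/bin/env bash
# Wait for the rollout, print the deployed image, then probe the NodePort (sketch).
verify_deploy() {
  local ns="${1:-assembly}" deploy="${2:-web-test-tomcat1-deployment}"
  local node_ip="${APP_NODE_IP:-20.0.0.201}" node_port="${APP_NODE_PORT:-33333}"
  # Blocks until the new ReplicaSet is fully available, or fails after 120 s.
  kubectl -n "$ns" rollout status "deployment/$deploy" --timeout=120s || return 1
  # Shows which build tag is actually running.
  kubectl -n "$ns" get deployment "$deploy" \
    -o jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'
  curl -s -o /dev/null -w "HTTP %{http_code}\n" "http://${node_ip}:${node_port}/myapp/"
}
```

After build #27, the image line should end in `citest:27`.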

console output

Started by GitLab push by Administrator

Running in Durability level: MAX_SURVIVABILITY

[Pipeline] Start of Pipeline

[Pipeline] podTemplate

[Pipeline] {

[Pipeline] node

Created Pod: jenkins-agent-jrfgc-c2mfb in namespace assembly

Still waiting to schedule task

‘jenkins-agent-jrfgc-c2mfb’ is offline

Agent jenkins-agent-jrfgc-c2mfb is provisioned from template jenkins-agent-jrfgc

```yaml
---
apiVersion: "v1"
kind: "Pod"
metadata:
  annotations:
    buildUrl: "http://jenkins.assembly.svc.cluster.local/job/gitlab-citest-pipeline/27/"
    runUrl: "job/gitlab-citest-pipeline/27/"
  labels:
    jenkins: "slave"
    jenkins/label: "jenkins-agent"
  name: "jenkins-agent-jrfgc-c2mfb"
spec:
  containers:
  - env:
    - name: "JENKINS_SECRET"
      value: "********"
    - name: "JENKINS_AGENT_NAME"
      value: "jenkins-agent-jrfgc-c2mfb"
    - name: "JENKINS_NAME"
      value: "jenkins-agent-jrfgc-c2mfb"
    - name: "JENKINS_AGENT_WORKDIR"
      value: "/home/jenkins/agent"
    - name: "JENKINS_URL"
      value: "http://jenkins.assembly.svc.cluster.local/"
    image: "20.0.0.207:443/jenkinsci/jenkins-slave-jdk:1.8"
    imagePullPolicy: "IfNotPresent"
    name: "jnlp"
    resources:
      limits: {}
      requests: {}
    securityContext:
      privileged: false
    tty: false
    volumeMounts:
    - mountPath: "/var/run/docker.sock"
      name: "volume-0"
      readOnly: false
    - mountPath: "/usr/bin/docker"
      name: "volume-1"
      readOnly: false
    - mountPath: "/home/jenkins/agent"
      name: "workspace-volume"
      readOnly: false
  nodeSelector:
    beta.kubernetes.io/os: "linux"
  restartPolicy: "Never"
  securityContext: {}
  volumes:
  - hostPath:
      path: "/var/run/docker.sock"
    name: "volume-0"
  - hostPath:
      path: "/usr/bin/docker"
    name: "volume-1"
  - emptyDir:
      medium: ""
    name: "workspace-volume"
```

Running on jenkins-agent-jrfgc-c2mfb in /home/jenkins/agent/workspace/gitlab-citest-pipeline

[Pipeline] {

[Pipeline] stage

[Pipeline] { (Pull code)

[Pipeline] checkout

using credential 77e5313a-2e94-4f47-8d4b-17a4cf3d4a2f

Cloning the remote Git repository

Cloning repository http://20.0.0.208/web-test/app1.git

skipping resolution of commit remotes/origin/master, since it originates from another repository

Checking out Revision fc5b47ef6461a023faa52123eeaaffbdf50cb90d (refs/remotes/origin/master)

> git init /home/jenkins/agent/workspace/gitlab-citest-pipeline # timeout=10

Fetching upstream changes from http://20.0.0.208/web-test/app1.git

> git --version # timeout=10

using GIT_ASKPASS to set credentials gitlab-auth

> git fetch --tags --progress http://20.0.0.208/web-test/app1.git +refs/heads/*:refs/remotes/origin/* # timeout=10

> git config remote.origin.url http://20.0.0.208/web-test/app1.git # timeout=10

> git config --add remote.origin.fetch +refs/heads/*:refs/remotes/origin/* # timeout=10

> git config remote.origin.url http://20.0.0.208/web-test/app1.git # timeout=10

Fetching upstream changes from http://20.0.0.208/web-test/app1.git

using GIT_ASKPASS to set credentials gitlab-auth

> git fetch --tags --progress http://20.0.0.208/web-test/app1.git +refs/heads/*:refs/remotes/origin/* # timeout=10

> git rev-parse refs/remotes/origin/master^{commit} # timeout=10

> git rev-parse refs/remotes/origin/origin/master^{commit} # timeout=10

> git config core.sparsecheckout # timeout=10

> git checkout -f fc5b47ef6461a023faa52123eeaaffbdf50cb90d # timeout=10

Commit message: "Update index.html"

> git rev-list --no-walk 56a05f7cfabb39e805104f68edf29d4a2a62a00e # timeout=10

[Pipeline] sh

+ ls

app1.tar.gz

build-command.sh

catalina.sh

filebeat.yml

myapp

run_tomcat.sh

server.xml

tomcat-app1.yaml

[Pipeline] }

[Pipeline] // stage

[Pipeline] echo

ok

[Pipeline] stage

[Pipeline] { (Build image)

[Pipeline] withCredentials

Masking supported pattern matches of $username or $password

[Pipeline] {

[Pipeline] sh

+ echo '

#tomcat web1

FROM harbor.linux.com/web-test/tomcat-base:v8.0.32

ADD catalina.sh /apps/tomcat/bin/catalina.sh

ADD server.xml /apps/tomcat/conf/server.xml

ADD myapp/* /data/tomcat/webapps/myapp/

#ADD app1.tar.gz /data/tomcat/webapps/myapp/

ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh

ADD filebeat.yml /etc/filebeat/filebeat.yml

RUN chown -R tomcat.tomcat /data/ /apps/ && chmod a+x /apps/tomcat/bin/*.sh

EXPOSE 8080 8443

CMD ["/apps/tomcat/bin/run_tomcat.sh"]

'

+ docker build -t 20.0.0.207:443/jenkinsci/citest:27 .

Sending build context to Docker daemon 138.8kB

Step 1/9 : FROM harbor.linux.com/web-test/tomcat-base:v8.0.32

---> 57a68cb49838

Step 2/9 : ADD catalina.sh /apps/tomcat/bin/catalina.sh

---> Using cache

---> 952bd7aedf45

Step 3/9 : ADD server.xml /apps/tomcat/conf/server.xml

---> Using cache

---> 514e8fd3fb0a

Step 4/9 : ADD myapp/* /data/tomcat/webapps/myapp/

---> 955e12db7ba0

Step 5/9 : ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh

---> b602420d4cee

Step 6/9 : ADD filebeat.yml /etc/filebeat/filebeat.yml

---> eb303540006c

Step 7/9 : RUN chown -R tomcat.tomcat /data/ /apps/ && chmod a+x /apps/tomcat/bin/*.sh

---> Running in 25733526d18c

Removing intermediate container 25733526d18c

---> e0bdc01f0e60

Step 8/9 : EXPOSE 8080 8443

---> Running in cb73eba5c8cc

Removing intermediate container cb73eba5c8cc

---> de3838fd9278

Step 9/9 : CMD ["/apps/tomcat/bin/run_tomcat.sh"]

---> Running in 1eb7e3aea8c5

Removing intermediate container 1eb7e3aea8c5

---> 6e76d78777a4

Successfully built 6e76d78777a4

Successfully tagged 20.0.0.207:443/jenkinsci/citest:27

+ docker login -u **** -p **** 20.0.0.207:443

WARNING! Using --password via the CLI is insecure. Use --password-stdin.

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

+ docker push 20.0.0.207:443/jenkinsci/citest:27

The push refers to repository [20.0.0.207:443/jenkinsci/citest]

16d625dc57d6: Preparing

cf823686624a: Preparing

234132b6269c: Preparing

c5c916246c3b: Preparing

0d62fdc12392: Preparing

8e3632860e66: Preparing

b08c99aa7244: Preparing

af6ca6732cdd: Preparing

5a30b566c369: Preparing

2dded0b9e69e: Preparing

8979abf3f442: Preparing

5660fcdf24c6: Preparing

06915737382e: Preparing

33cb7880c818: Preparing

97275cad22f5: Preparing

74aad83882e8: Preparing

4826cdadf1ef: Preparing

2dded0b9e69e: Waiting

8979abf3f442: Waiting

5660fcdf24c6: Waiting

06915737382e: Waiting

b08c99aa7244: Waiting

33cb7880c818: Waiting

97275cad22f5: Waiting

8e3632860e66: Waiting

74aad83882e8: Waiting

af6ca6732cdd: Waiting

5a30b566c369: Waiting

0d62fdc12392: Layer already exists

8e3632860e66: Layer already exists

b08c99aa7244: Layer already exists

cf823686624a: Pushed

c5c916246c3b: Pushed

234132b6269c: Pushed

5a30b566c369: Layer already exists

af6ca6732cdd: Layer already exists

2dded0b9e69e: Layer already exists

06915737382e: Layer already exists

33cb7880c818: Layer already exists

8979abf3f442: Layer already exists

5660fcdf24c6: Layer already exists

97275cad22f5: Layer already exists

74aad83882e8: Layer already exists

4826cdadf1ef: Layer already exists

16d625dc57d6: Pushed

27: digest: sha256:087c08554de2af922353410f9583b2ded4c536040f2ced73fb050530e9a2ee6e size: 3879

[Pipeline] }

[Pipeline] // withCredentials

[Pipeline] echo

ok

[Pipeline] }

[Pipeline] // stage

[Pipeline] stage

[Pipeline] { (Deploy to K8s)

[Pipeline] sh

+ sed -i 's#$IMAGE_NAME#20.0.0.207:443/jenkinsci/citest:27#' tomcat-app1.yaml

+ sed -i 's#$SECRET_NAME#k8s-harbor-login#' tomcat-app1.yaml

+ sed -i 's#$NODE_PORT#33333#' tomcat-app1.yaml

[Pipeline] kubernetesDeploy

Starting Kubernetes deployment

Loading configuration: /home/jenkins/agent/workspace/gitlab-citest-pipeline/tomcat-app1.yaml

Created V1Deployment: class V1Deployment {

apiVersion: apps/v1

kind: Deployment

metadata: class V1ObjectMeta {

annotations: null

clusterName: null

creationTimestamp: 2020-03-25T12:24:48.000Z

deletionGracePeriodSeconds: null

deletionTimestamp: null

finalizers: null

generateName: null

generation: 1

initializers: null

labels: {app=tomcat-app1-test}

managedFields: null

name: web-test-tomcat1-deployment

namespace: assembly

ownerReferences: null

resourceVersion: 463562

selfLink: /apis/apps/v1/namespaces/assembly/deployments/web-test-tomcat1-deployment

uid: 28a27040-6804-4c65-91bd-4183211e1402

}

spec: class V1DeploymentSpec {

minReadySeconds: null

paused: null

progressDeadlineSeconds: 600

replicas: 1

revisionHistoryLimit: 10

selector: class V1LabelSelector {

matchExpressions: null

matchLabels: {app=tomcat-app1-test}

}

strategy: class V1DeploymentStrategy {

rollingUpdate: class V1RollingUpdateDeployment {

maxSurge: 25%

maxUnavailable: 25%

}

type: RollingUpdate

}

template: class V1PodTemplateSpec {

metadata: class V1ObjectMeta {

annotations: null

clusterName: null

creationTimestamp: null

deletionGracePeriodSeconds: null

deletionTimestamp: null

finalizers: null

generateName: null

generation: null

initializers: null

labels: {app=tomcat-app1-test}

managedFields: null

name: null

namespace: null

ownerReferences: null

resourceVersion: null

selfLink: null

uid: null

}

spec: class V1PodSpec {

activeDeadlineSeconds: null

affinity: null

automountServiceAccountToken: null

containers: [class V1Container {

args: null

command: null

env: null

envFrom: null

image: 20.0.0.207:443/jenkinsci/citest:27

imagePullPolicy: Always

lifecycle: null

livenessProbe: null

name: web-test-tomcat1-spec

ports: [class V1ContainerPort {

containerPort: 8080

hostIP: null

hostPort: null

name: http

protocol: TCP

}]

readinessProbe: null

resources: class V1ResourceRequirements {

limits: null

requests: null

}

securityContext: null

stdin: null

stdinOnce: null

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

tty: null

volumeDevices: null

volumeMounts: null

workingDir: null

}]

dnsConfig: null

dnsPolicy: ClusterFirst

enableServiceLinks: null

hostAliases: null

hostIPC: null

hostNetwork: null

hostPID: null

hostname: null

imagePullSecrets: [class V1LocalObjectReference {

name: k8s-harbor-login

}]

initContainers: null

nodeName: null

nodeSelector: null

preemptionPolicy: null

priority: null

priorityClassName: null

readinessGates: null

restartPolicy: Always

runtimeClassName: null

schedulerName: default-scheduler

securityContext: class V1PodSecurityContext {

fsGroup: null

runAsGroup: null

runAsNonRoot: null

runAsUser: null

seLinuxOptions: null

supplementalGroups: null

sysctls: null

windowsOptions: null

}

serviceAccount: null

serviceAccountName: null

shareProcessNamespace: null

subdomain: null

terminationGracePeriodSeconds: 30

tolerations: null

volumes: null

}

}

}

status: class V1DeploymentStatus {

availableReplicas: null

collisionCount: null

conditions: null

observedGeneration: null

readyReplicas: null

replicas: null

unavailableReplicas: null

updatedReplicas: null

}

}

Applied V1Service: class V1Service {

apiVersion: v1

kind: Service

metadata: class V1ObjectMeta {

annotations: null

clusterName: null

creationTimestamp: 2020-03-24T13:53:32.000Z

deletionGracePeriodSeconds: null

deletionTimestamp: null

finalizers: null

generateName: null

generation: null

initializers: null

labels: {app=tomcat-app1-test}

managedFields: null

name: web-test-tomcat1-spec

namespace: assembly

ownerReferences: null

resourceVersion: 422339

selfLink: /api/v1/namespaces/assembly/services/web-test-tomcat1-spec

uid: 72cca44f-a4a1-4695-8b0e-212448fe1026

}

spec: class V1ServiceSpec {

clusterIP: 10.68.198.131

externalIPs: null

externalName: null

externalTrafficPolicy: Cluster

healthCheckNodePort: null

loadBalancerIP: null

loadBalancerSourceRanges: null

ports: [class V1ServicePort {

name: http

nodePort: 33333

port: 80

protocol: TCP

targetPort: 8080

}]

publishNotReadyAddresses: null

selector: {app=tomcat-app1-test}

sessionAffinity: None

sessionAffinityConfig: null

type: NodePort

}

status: class V1ServiceStatus {

loadBalancer: class V1LoadBalancerStatus {

ingress: null

}

}

}

Finished Kubernetes deployment

[Pipeline] }

[Pipeline] // stage

[Pipeline] }

[Pipeline] // node

[Pipeline] }

[Pipeline] // podTemplate

[Pipeline] End of Pipeline

Finished: SUCCESS

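Deleting the web-test-tomcat1 pod

Deleting only the pod is not enough, because its ReplicaSet immediately starts a replacement: delete the Deployment first, then the ReplicaSet (rs).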
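Those steps as a minimal kubectl sketch, assuming the object names and the `app=tomcat-app1-test` label shown in the console output; `cleanup_app` is a hypothetical helper. By default `kubectl delete deployment` cascades to the Deployment's ReplicaSets and Pods, so the ReplicaSet step normally finds nothing and only matters if a ReplicaSet was orphaned. The NodePort Service is a separate object and stays unless it is deleted as well.

```shell
#!/usr/bin/env bash
# Remove what the pipeline created (sketch; names taken from the console output).
cleanup_app() {
  local ns="${1:-assembly}"
  # Cascades to the Deployment's ReplicaSets and Pods by default.
  kubectl -n "$ns" delete deployment web-test-tomcat1-deployment --ignore-not-found
  # Only needed for an orphaned ReplicaSet (deleted with --cascade=orphan,
  # or --cascade=false on older kubectl).
  kubectl -n "$ns" delete rs -l app=tomcat-app1-test --ignore-not-found
  # The NodePort Service is not owned by the Deployment.
  kubectl -n "$ns" delete service web-test-tomcat1-spec --ignore-not-found
}
```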

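Unfinished tasks

- Email notifications
- Rework the Jenkins pipeline
- One-click rollback
- A GitLab branching strategy

I'll finish these when I find the time; things have been very busy lately, but all of them will get done.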
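For the missing one-click rollback, one possible approach is sketched below; it is not part of the original pipeline, and `rollback_app` is a hypothetical helper. Because every build pushes an image tagged with its BUILD_NUMBER, rolling back to a known build can mean pointing the container back at that tag. `kubectl rollout undo` is the alternative when the Deployment was updated in place and still has earlier revisions (revisionHistoryLimit is 10 here); it cannot help after the Deployment has been deleted and recreated.

```shell
#!/usr/bin/env bash
# Roll the Tomcat deployment back (sketch, not part of the original pipeline).
# With a build number: switch to that build's image tag in Harbor.
# Without one: return to the previous ReplicaSet revision.
rollback_app() {
  local ns="assembly" deploy="web-test-tomcat1-deployment"
  if [ -n "${1:-}" ]; then
    kubectl -n "$ns" set image "deployment/$deploy" \
      "web-test-tomcat1-spec=20.0.0.207:443/jenkinsci/citest:$1"
  else
    kubectl -n "$ns" rollout undo "deployment/$deploy"
  fi
}
```

For example, `rollback_app 26` would redeploy the image from build #26.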
