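Graduation Project, Part 1: Scraping Suning Product Reviews with Python

My graduation project needs a large volume of product reviews. The datasets I found online were outdated, so I wrote my own scraper.

Proxy pool: proxy_pool, on GitHub: https://github.com/jhao104/proxy_pool

User-Agent: fake_useragent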
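As a rough illustration of how a proxy address and a User-Agent string end up in a request, here is a minimal sketch. The helper name `build_request_kwargs` is hypothetical, and it assumes the proxy pool hands back a bare `ip:port` string; the sample address and User-Agent below are invented.

```python
def build_request_kwargs(proxy_addr, user_agent):
    """Build the headers/proxies arguments for requests.get().

    proxy_addr is assumed to be a bare "ip:port" string; user_agent is any
    User-Agent string (for example one produced by fake_useragent).
    """
    proxy_url = "http://" + proxy_addr.strip()
    return {
        "headers": {"User-Agent": user_agent},
        # requests picks the proxy by URL scheme, so https:// pages need an
        # "https" entry as well as the "http" one.
        "proxies": {"http": proxy_url, "https": proxy_url},
    }


# Invented sample values, only to show the shape of the result.
kwargs = build_request_kwargs("127.0.0.1:8888\n", "Mozilla/5.0 (example)")
print(kwargs["proxies"]["https"])
```

The result can be passed along as `requests.get(url, **kwargs)`. Whether a given free proxy can actually tunnel HTTPS traffic is a separate question that this sketch does not check.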

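# Shops with many reviews (Suning recommendations)

https://tuijian.suning.com/recommend-portal/recommendv2/biz.jsonp?parameter=%E5%8D%8E%E4%B8%BA&sceneIds=2-1&count=10

# Reviews

https://review.suning.com/ajax/cluster_review_lists/general-30259269-000000010748901691-0000000000-total-1-default-10-----reviewList.htm?callback=reviewList

# clusterId

# The clusterId appears in the href of the "view all reviews" link. Testing showed that this href is added by JavaScript at runtime, so there are two options:

# 1. Match the clusterId directly in the page's JavaScript.

# 2. Or use selenium/phantomjs to request the page after its JavaScript has run, then parse the HTML to get the href.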
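Option 1 can be sketched in a few lines. The function name `extract_cluster_id` and the sample HTML are made up for illustration, and the pattern assumes the id is a run of digits (as in the review URL above, where it is `30259269`); the real page layout may differ.

```python
import re

# Pattern for the clusterId embedded in the page's inline JavaScript.
# Assumption: the value is a quoted string of digits.
CLUSTER_ID_RE = re.compile(r'"clusterId"\s*:\s*"(\d+)"')


def extract_cluster_id(html):
    """Return the first clusterId found in html, or None if there is none."""
    match = CLUSTER_ID_RE.search(html)
    return match.group(1) if match else None


# Invented sample of what the inline script might look like.
sample = '<script>var sn = {"partNumber":"000000010748901691","clusterId":"30259269"};</script>'
print(extract_cluster_id(sample))           # 30259269
print(extract_cluster_id("<html></html>"))  # None
```

Returning `None` instead of raising lets the caller simply skip products whose page carries no clusterId.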

Code:

# -*- coding: utf-8 -*-
# @author: Tele
# @Time : 2019/04/15 8:20 PM
import json
import os
import re
import time

import requests
from fake_useragent import UserAgent


class SNSplider:
    # Class-level switch: parse_url sets it to False when a product has no more reviews
    flag = True
    # clusterId embedded in the product page's JavaScript (8 characters)
    regex_cluser_id = re.compile(r'"clusterId":"(.{8})"')
    # Payload inside the JSONP wrapper reviewList(...)
    regex_comment = re.compile(r"reviewList\((.*)\)", re.S)

    @staticmethod
    def get_proxy():
        # Ask the local proxy_pool service for one proxy address
        return requests.get("http://127.0.0.1:5010/get/").content.decode()

    @staticmethod
    def get_ua():
        ua = UserAgent()
        return ua.random

    def __init__(self, kw_list):
        self.kw_list = kw_list
        # Review URL; placeholder order: cluster_id, sugGoodsCode, page number
        self.url_temp = "https://review.suning.com/ajax/cluster_review_lists/general-{}-{}-0000000000-total-{}-default-10-----reviewList.htm"
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36",
        }
        self.proxies = {
            "http": None
        }
        self.parent_dir = None
        self.file_dir = None

    # Refresh the User-Agent and proxy

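    def check(self):
        self.headers["User-Agent"] = SNSplider.get_ua()
        proxy = "http://" + SNSplider.get_proxy()
        # Note: requests chooses a proxy by URL scheme, so this "http" entry
        # is not used for the https:// URLs requested elsewhere in this class
        self.proxies["http"] = proxy
        print("ua:", self.headers["User-Agent"])
        print("proxy:", self.proxies["http"])

    # Fetch and save one page of reviews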

    def parse_url(self, cluster_id, sugGoodsCode, page):
        url = self.url_temp.format(cluster_id, sugGoodsCode, page)
        response = requests.get(url, headers=self.headers, proxies=self.proxies, verify=False)
        if response.status_code != 200:
            print("review page request failed")
            # Stop paging this product; otherwise the caller's while loop never ends
            SNSplider.flag = False
            return
        print(url)
        match = SNSplider.regex_comment.search(response.content.decode())
        if match is None:
            # Empty body or missing JSONP wrapper: stop paging
            SNSplider.flag = False
            return
        data = json.loads(match.group(1))
        # Reviews
        comment_list = data.get("commodityReviews") or []
        if not comment_list:
            # No reviews left: tell the caller to stop paging
            SNSplider.flag = False
            return
        item_list = list()
        for comment in comment_list:
            item = dict()
            try:
                # Product name
                item["referenceName"] = comment["commodityInfo"]["commodityName"]
            except (KeyError, TypeError):
                item["referenceName"] = None
            # Review time
            item["creationTime"] = comment["publishTime"]
            # Review text
            item["content"] = comment["content"]
            # Labels
            item["label"] = comment["labelNames"]
            item_list.append(item)
        # Save
        with open(self.file_dir, "a", encoding="utf-8") as file:
            file.write(json.dumps(item_list, ensure_ascii=False, indent=2))
            file.write("\n")
        time.sleep(5)

    # Collect product information

    def get_product_info(self):
        url_temp = "https://tuijian.suning.com/recommend-portal/recommendv2/biz.jsonp?parameter={}&sceneIds=2-1&count=10"
        result_list = list()
        for kw in self.kw_list:
            url = url_temp.format(kw)
            response = requests.get(url, headers=self.headers, proxies=self.proxies, verify=False)
            if response.status_code == 200:
                kw_dict = dict()
                id_list = list()
                data = json.loads(response.content.decode())
                skus_list = data["sugGoods"][0]["skus"]
                if len(skus_list) > 0:
                    for skus in skus_list:
                        item = dict()
                        sugGoodsCode = skus["sugGoodsCode"]
                        item["sugGoodsCode"] = sugGoodsCode
                        # Look up the clusterId for this product
                        item["cluster_id"] = self.get_cluster_id(sugGoodsCode)
                        id_list.append(item)
                    kw_dict["title"] = kw
                    kw_dict["id_list"] = id_list
                    result_list.append(kw_dict)
        return result_list

    # Look up the clusterId

def get_cluster_id(self, sugGoodsCode):

self.check()

url = "https://product.suning.com/0000000000/{}.html".format(sugGoodsCode[6::])

response = requests.get(url, headers=self.headers, proxies=self.proxies, verify=False)

if response.status_code == 200:

cluser_id = None

try:

cluser_id = SNSplider.regex_cluser_id.findall(response.content.decode())[0]

except:

pass

return cluser_id

else:

print("请求cluster id出错") def get_comment(self, item_list):

if len(item_list) > 0:

for item in item_list:

id_list = item["id_list"]

item_title = item["title"]

if len(id_list) > 0:

self.parent_dir = "f:/sn_comment/" + item_title + time.strftime("-%Y-%m-%d-%H-%M-%S",

time.localtime(time.time()))

if not os.path.exists(self.parent_dir):

os.makedirs(self.parent_dir)

for product_code in id_list:

# 检查proxy,ua

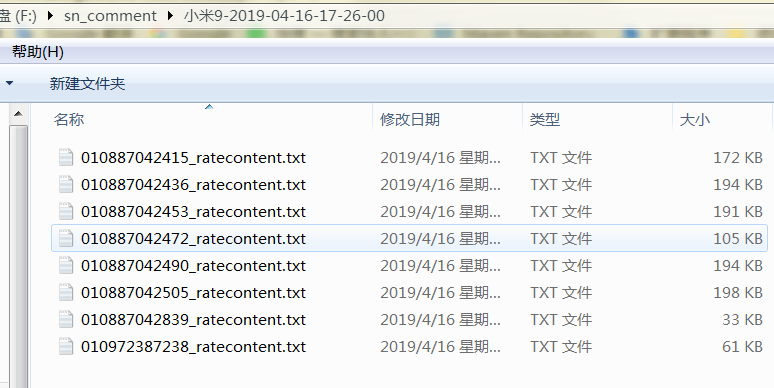

sugGoodsCode = product_code["sugGoodsCode"]

cluster_id = product_code["cluster_id"]

if not cluster_id:

continue

page = 1

# 检查目录

self.file_dir = self.parent_dir + "/" + sugGoodsCode[6::] + "_ratecontent.txt"

self.check()

while SNSplider.flag:

self.parse_url(cluster_id, sugGoodsCode, page)

page += 1

SNSplider.flag = True

else:

print("---error,empty id list---")

else:

print("---error,empty item list---") def run(self):

self.check()

item_list = self.get_product_info()

print(item_list)

self.get_comment(item_list) def main():

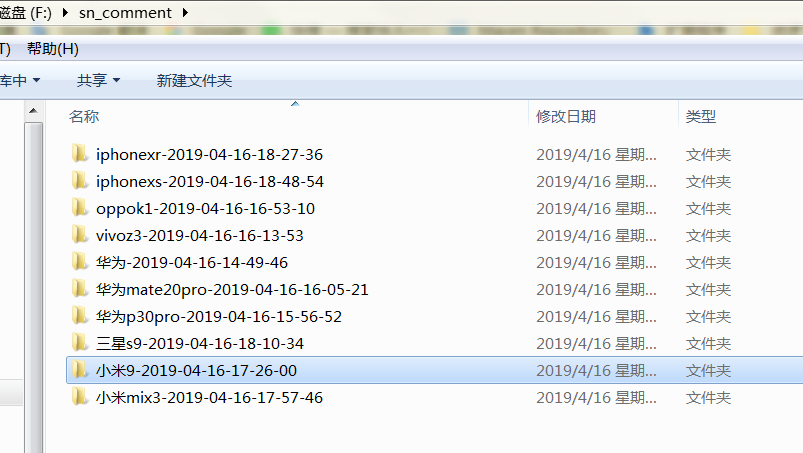

# , "华为mate20pro", "vivoz3", "oppok1", "荣耀8x", "小米9", "小米mix3", "三星s9", "iphonexr", "iphonexs"

# "华为p30pro", "华为mate20pro", "vivoz3""oppok1""荣耀8x", "小米9"

kw_list = ["小米mix3", "三星s9", "iphonexr", "iphonexs"]

splider = SNSplider(kw_list)

splider.run() if __name__ == '__main__':

main()
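The paging logic can also be tried out without touching the network. The sketch below is an alternative arrangement, not the script's own structure: it unwraps the `reviewList(...)` JSONP response and pages through reviews with a generator instead of a class-level flag. The names `unwrap_jsonp`, `iter_reviews`, `fake_fetch` and the `max_pages` cap are hypothetical, and the sample data is invented.

```python
import json
import re

REVIEW_URL = ("https://review.suning.com/ajax/cluster_review_lists/"
              "general-{}-{}-0000000000-total-{}-default-10-----reviewList.htm")
JSONP_RE = re.compile(r"reviewList\((.*)\)", re.S)


def unwrap_jsonp(text):
    """Return the JSON object inside reviewList(...), or None if absent."""
    match = JSONP_RE.search(text)
    return json.loads(match.group(1)) if match else None


def iter_reviews(fetch, cluster_id, goods_code, max_pages=50):
    """Yield reviews page by page until a page has none.

    fetch is any callable that takes a URL and returns the response text.
    max_pages is an assumed safety cap so the loop always terminates.
    """
    for page in range(1, max_pages + 1):
        data = unwrap_jsonp(fetch(REVIEW_URL.format(cluster_id, goods_code, page)))
        reviews = (data or {}).get("commodityReviews") or []
        if not reviews:
            return
        yield from reviews


# Stand-in for the network: two pages of invented data, then an empty page.
def fake_fetch(url):
    page = int(url.split("-total-")[1].split("-")[0])
    reviews = [{"content": "review %d" % page}] if page <= 2 else []
    return "reviewList(" + json.dumps({"commodityReviews": reviews}) + ")"


contents = [r["content"] for r in iter_reviews(fake_fetch, "30259269", "000000010748901691")]
print(contents)  # ['review 1', 'review 2']
```

Passing the fetch function in as a parameter keeps the paging rule (stop at the first empty page) separate from proxy and User-Agent handling, so each part can be checked on its own.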
