DEX-6: converting a Caffe model to a PyTorch model

Everything below was done in a Python 2.7 environment.

Download location for the files: https://data.vision.ee.ethz.ch/cvl/rrothe/imdb-wiki/

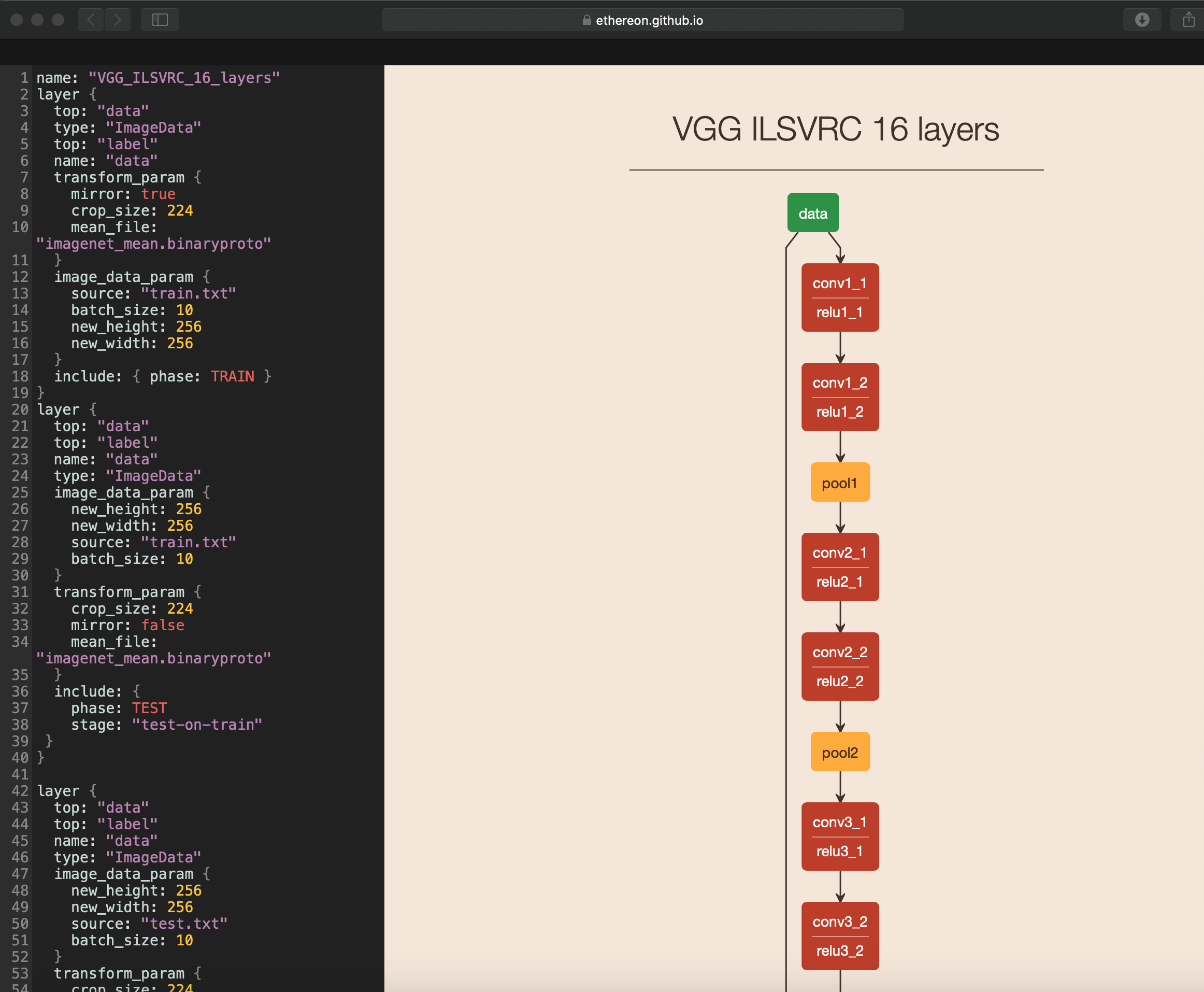

1. Visualizing the prototxt model file

1) Online visualization

URL: https://ethereon.github.io/netscope/#/editor

Paste the contents of the prototxt file into the left-hand pane and press Shift+Enter:

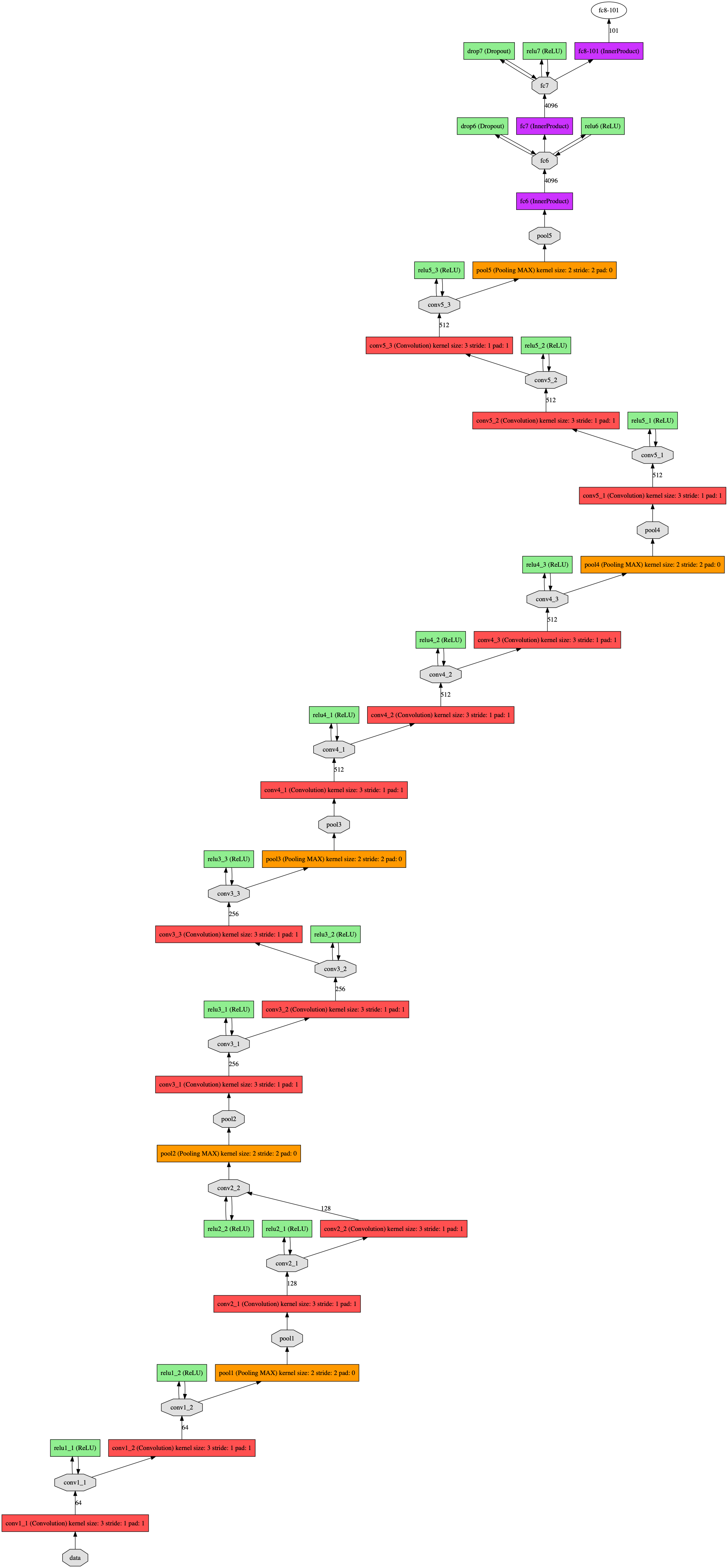

2) Local visualization

First install Graphviz:

(deeplearning2) userdeMacBook-Pro:~ user$ brew install graphviz

Then install pydot:

(deeplearning2) userdeMacBook-Pro:~ user$ pip install pydot

With Caffe already installed, run:

(deeplearning2) userdeMacBook-Pro:face_data user$ python /Users/user/caffe/python/draw_net.py /Users/user/pytorch/face_data/age_train.prototxt age_train.png --rankdir=BT

Drawing net to age_train.png

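Parameters:

- Argument 1: the prototxt file of the network

- Argument 2: path and file name of the output image

- Argument 3: --rankdir=x, where x is one of LR, RL, TB or BT and sets the direction of the graph: left to right, right to left, top to bottom or bottom to top. The default is LR.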
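When the diagram has to be regenerated for several prototxt files, the same command line can be assembled in Python. The sketch below uses a hypothetical helper, `build_draw_net_cmd`, that rejects an invalid `--rankdir` value before `draw_net.py` is ever run; the paths are the ones from the command above.

```python
VALID_RANKDIRS = ("LR", "RL", "TB", "BT")


def build_draw_net_cmd(draw_net_py, prototxt, output_png, rankdir="LR"):
    """Build the argument list for Caffe's draw_net.py (hypothetical helper)."""
    if rankdir not in VALID_RANKDIRS:
        raise ValueError("rankdir must be one of %s, got %r" % (VALID_RANKDIRS, rankdir))
    # Same order as on the command line: prototxt, output image, direction
    return ["python", draw_net_py, prototxt, output_png, "--rankdir=" + rankdir]


cmd = build_draw_net_cmd("/Users/user/caffe/python/draw_net.py",
                         "/Users/user/pytorch/face_data/age_train.prototxt",
                         "age_train.png", rankdir="BT")
print(" ".join(cmd))
```

The resulting list can be handed to `subprocess.check_call(cmd)`; passing a list rather than a single string avoids shell quoting issues.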

Diagram after modifying the input, loss and accuracy layers:

For the unmodified file, run:

(deeplearning2) userdeMacBook-Pro:face_data user$ python /Users/user/caffe/python/draw_net.py /Users/user/pytorch/face_data/age_train.prototxt.txt age_train_2.png --rankdir=BT

Drawing net to age_train_2.png

The resulting diagram:
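If only a quick overview of the layer sequence is needed, a few lines of plain Python can list each top-level layer's name and type. This is a minimal sketch, not Caffe's parser: it assumes the text-format prototxt shown in this post and drops everything after `#` on a line (so it would misread a `#` inside a quoted string); the function name `list_layers` is hypothetical. When Caffe is installed, parsing with protobuf (`caffe.proto.caffe_pb2.NetParameter` plus `google.protobuf.text_format`) is the robust option.

```python
import re

# Tokens: an opening brace, a closing brace, a "key: value" pair, or a bare word
TOKEN = re.compile(r'\{|\}|(\w+)\s*:\s*("[^"]*"|[^\s{}]+)|(\w+)')


def list_layers(prototxt_text):
    """Return (name, type) for every top-level `layer { ... }` block."""
    layers = []
    depth = 0
    current = None    # fields of the layer being read, or None
    last_word = None  # most recent bare word, e.g. "layer" before "{"
    for raw in prototxt_text.splitlines():
        line = raw.split('#', 1)[0]  # strip comments
        for m in TOKEN.finditer(line):
            tok = m.group(0)
            if tok == '{':
                if depth == 0 and last_word == 'layer':
                    current = {}
                depth += 1
            elif tok == '}':
                depth -= 1
                if depth == 0 and current is not None:
                    layers.append((current.get('name'), current.get('type')))
                    current = None
            elif m.group(1):
                # only fields directly inside the layer block, not in nested params
                if depth == 1 and current is not None and m.group(1) in ('name', 'type'):
                    current[m.group(1)] = m.group(2).strip('"')
            else:
                last_word = m.group(3)
    return layers
```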

2. Network structure

```
name: "VGG_ILSVRC_16_layers"  # name of this network

# First come the data layers
layer {  # training data (training set)
  top: "data"
  type: "ImageData"
  top: "label"
  name: "data"
  transform_param {
    mirror: true
    crop_size: 224
    mean_file: "imagenet_mean.binaryproto"
  }
  image_data_param {
    source: "train.txt"  # file listing the training images
    batch_size:
    new_height:
    new_width:
  }
  include: { phase: TRAIN }  # used for training only
}
layer {  # validation data (evaluated on the training images)
  top: "data"
  top: "label"
  name: "data"
  type: "ImageData"
  image_data_param {
    new_height:
    new_width:
    source: "train.txt"
    batch_size:
  }
  transform_param {
    crop_size: 224
    mirror: false
    mean_file: "imagenet_mean.binaryproto"
  }
  include: {
    phase: TEST
    stage: "test-on-train"
  }
}
layer {  # test data (test set)
  top: "data"
  top: "label"
  name: "data"
  type: "ImageData"
  image_data_param {
    new_height:
    new_width:
    source: "test.txt"
    batch_size:
  }
  transform_param {
    crop_size: 224
    mirror: false
    mean_file: "imagenet_mean.binaryproto"
  }
  include: {
    phase: TEST
    stage: "test-on-test"
  }
}

# Next comes the network itself; the first convolution layer is annotated as an example
layer {
  bottom: "data"   # input: the data layer above
  top: "conv1_1"   # output of this layer
  name: "conv1_1"  # name of this layer
  param {          # weight parameters
    lr_mult:       # learning-rate multiplier for the weights
    decay_mult:    # weight-decay multiplier for the weights
  }
  param {          # bias parameters
    lr_mult:       # learning-rate multiplier for the bias
    decay_mult:    # weight-decay multiplier for the bias
  }
  type: "Convolution"  # layer type: convolution
  convolution_param {  # convolution parameters
    num_output: 64     # number of filters, i.e. number of output channels
    pad: 1             # padding size
    kernel_size: 3     # kernel size
  }
}
layer {
  bottom: "conv1_1"
  top: "conv1_1"
  name: "relu1_1"
  type: "ReLU"  # layer type: ReLU activation, no parameters
}
layer {
  bottom: "conv1_1"
  top: "conv1_2"
  name: "conv1_2"  # name of this layer
  param {
    lr_mult:
    decay_mult:
  }
  param {
    lr_mult:
    decay_mult:
  }
  type: "Convolution"
  convolution_param {
    num_output: 64
    pad: 1
    kernel_size: 3
  }
}
layer {
  bottom: "conv1_2"
  top: "conv1_2"
  name: "relu1_2"
  type: "ReLU"  # layer type: ReLU activation, no parameters
}
layer {
  bottom: "conv1_2"
  top: "pool1"
  name: "pool1"
  type: "Pooling"  # layer type: pooling
  pooling_param {
    pool: MAX       # max pooling
    kernel_size: 2  # pooling window size
    stride: 2       # stride
  }
}
# The remaining convolution layers are omitted
...
# Fully connected layers
layer {
  bottom: "pool5"
  top: "fc6"
  name: "fc6"
  param {
    lr_mult:
    decay_mult:
  }
  param {
    lr_mult:
    decay_mult:
  }
  type: "InnerProduct"  # layer type: fully connected
  inner_product_param {
    num_output: 4096    # 4096 outputs
  }
}
layer {
  bottom: "fc6"
  top: "fc6"
  name: "relu6"
  type: "ReLU"
}
layer {
  bottom: "fc6"
  top: "fc6"
  name: "drop6"
  type: "Dropout"  # layer type: dropout
  dropout_param {
    dropout_ratio: 0.5  # dropout ratio 0.5
  }
}
layer {
  bottom: "fc6"
  top: "fc7"
  name: "fc7"
  param {
    lr_mult:
    decay_mult:
  }
  param {
    lr_mult:
    decay_mult:
  }
  type: "InnerProduct"
  inner_product_param {
    num_output: 4096
  }
}
layer {
  bottom: "fc7"
  top: "fc7"
  name: "relu7"
  type: "ReLU"
}
layer {
  bottom: "fc7"
  top: "fc7"
  name: "drop7"
  type: "Dropout"
  dropout_param {
    dropout_ratio: 0.5
  }
}
layer {
  bottom: "fc7"
  top: "fc8-101"
  name: "fc8-101"
  param {
    lr_mult:
    decay_mult:
  }
  param {
    lr_mult:
    decay_mult:
  }
  type: "InnerProduct"
  inner_product_param {
    num_output: 101
    weight_filler {     # weight initialization
      type: "gaussian"  # Gaussian distribution
      std: 0.01         # standard deviation 0.01
    }
    bias_filler {       # bias initialization
      type: "constant"  # constant value 0
      value: 0
    }
  }
}
layer {
  bottom: "fc8-101"
  bottom: "label"
  name: "loss"
  type: "SoftmaxWithLoss"  # the training loss is computed with SoftmaxWithLoss
  include: { phase: TRAIN }
}
# At test time only Softmax is applied, to get the probabilities
layer {
  name: "prob"
  type: "Softmax"
  bottom: "fc8-101"
  top: "prob"
  include {
    phase: TEST
  }
}
# During validation: top-1, top-5 and top-10 accuracy
layer {
  name: "accuracy_train_top01"
  type: "Accuracy"
  bottom: "fc8-101"
  bottom: "label"
  top: "accuracy_train_top01"
  include {
    phase: TEST
    stage: "test-on-train"
  }
}
layer {
  name: "accuracy_train_top05"
  type: "Accuracy"
  bottom: "fc8-101"
  bottom: "label"
  top: "accuracy_train_top05"
  accuracy_param {
    top_k: 5
  }
  include {
    phase: TEST
    stage: "test-on-train"
  }
}
layer {
  name: "accuracy_train_top10"
  type: "Accuracy"
  bottom: "fc8-101"
  bottom: "label"
  top: "accuracy_train_top10"
  accuracy_param {
    top_k: 10
  }
  include {
    phase: TEST
    stage: "test-on-train"
  }
}
# The same top-1, top-5 and top-10 accuracy on the test set
layer {
  name: "accuracy_test_top01"
  type: "Accuracy"
  bottom: "fc8-101"
  bottom: "label"
  top: "accuracy_test_top01"
  include {
    phase: TEST
    stage: "test-on-test"
  }
}
layer {
  name: "accuracy_test_top05"
  type: "Accuracy"
  bottom: "fc8-101"
  bottom: "label"
  top: "accuracy_test_top05"
  accuracy_param {
    top_k: 5
  }
  include {
    phase: TEST
    stage: "test-on-test"
  }
}
layer {
  name: "accuracy_test_top10"
  type: "Accuracy"
  bottom: "fc8-101"
  bottom: "label"
  top: "accuracy_test_top10"
  accuracy_param {
    top_k: 10
  }
  include {
    phase: TEST
    stage: "test-on-test"
  }
}
```
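The three data layers and the accuracy layers differ only in their `include` rules. Caffe keeps a layer when it has no include rules, or when at least one rule matches the net's state: the phase must match (if the rule sets one) and every `stage` listed in the rule must be active. The sketch below mirrors that check in plain Python; it is simplified (it ignores `min_level`, `max_level` and `exclude` rules), and the dictionaries are a hypothetical stand-in for the parsed prototxt.

```python
def state_meets_rule(rule, phase, stages):
    """Simplified Caffe NetStateRule check (min_level/max_level are ignored)."""
    if 'phase' in rule and rule['phase'] != phase:
        return False
    # every stage named in the rule must be active in the net
    if any(stage not in stages for stage in rule.get('stage', [])):
        return False
    # no stage named in not_stage may be active
    if any(stage in stages for stage in rule.get('not_stage', [])):
        return False
    return True


def layer_included(include_rules, phase, stages=()):
    """A layer without include rules is always kept; otherwise one matching rule suffices."""
    if not include_rules:
        return True
    return any(state_meets_rule(rule, phase, stages) for rule in include_rules)


# Rules of the three data layers above
train_data = [{'phase': 'TRAIN'}]
val_data = [{'phase': 'TEST', 'stage': ['test-on-train']}]
test_data = [{'phase': 'TEST', 'stage': ['test-on-test']}]
```

With phase TEST and stage `test-on-test`, only the test-set data layer, the `prob` layer, the `accuracy_test_*` layers and the rule-free layers (convolutions, ReLUs and so on) remain.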

3. Custom VGG16 model: models.py

The PyTorch model I defined:

```python
# coding:utf-8
import torchvision.models as models
import torch.nn as nn
import torch
from torch.autograd import Variable
import torch.nn.functional as F

# 101 output classes: one per age from 0 to 100


class AgeModel(nn.Module):
    def __init__(self, classes=101):
        super(AgeModel, self).__init__()
        model = models.vgg16()
        layers = list(model.children())
        # torchvision's VGG16 has only 3 children: the conv block,
        # the pooling layer and the fully connected block
        # print(len(layers))  # 3
        # Keep everything except layer -1, the fully connected block
        self.model = nn.Sequential(*layers[:-1])
        # print(layers[1])   # pooling layer, AdaptiveAvgPool2d
        # print(layers[-1])  # fully connected block
        self.fullCon = nn.Sequential(
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(True),
            nn.Dropout(0.5, inplace=True),
            nn.Linear(4096, 4096),
            nn.ReLU(True),
            nn.Dropout(0.5, inplace=True),
            nn.Linear(4096, classes),  # replace the last FC layer with our own 101-way output
        )

    def forward(self, x):
        x = self.model(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 512 * 7 * 7)
        x = self.fullCon(x)
        x = F.softmax(x, dim=1)  # probability for each age
        return x


def test():
    net = AgeModel()
    for module in net.children():
        print(module)
    output = net(Variable(torch.randn(1, 3, 224, 224)))
    print('output :', output.size())
    print(type(output))


if __name__ == '__main__':
    test()
```
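The input size of the first `nn.Linear` follows from VGG16's geometry: five 2×2, stride-2 max-pooling layers shrink a 224×224 crop to 7×7, and the last convolution block has 512 channels. A small arithmetic check, assuming 224×224 inputs as in the `torch.randn` test above (recent torchvision versions also insert `AdaptiveAvgPool2d((7, 7))`, which keeps the map at 7×7 for other input sizes); the helper name is hypothetical:

```python
def vgg16_flatten_size(input_size=224, channels=512, num_pools=5):
    """Length of the flattened feature vector fed to the first fully connected layer."""
    spatial = input_size
    for _ in range(num_pools):
        spatial //= 2  # each 2x2, stride-2 max pool halves height and width
    return channels * spatial * spatial


print(vgg16_flatten_size())  # 25088, i.e. 512 * 7 * 7
```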

4. Download the corresponding caffemodel and convert it

(deeplearning2) userdeMacBook-Pro:pytorch-DEX-master user$ curl -o age_model_imdb.caffemodel https://data.vision.ee.ethz.ch/cvl/rrothe/imdb-wiki/static/dex_imdb_wiki.caffemodel

The conversion code (convert.py):

```python
# coding:utf-8
import caffe
import torch
from models import AgeModel


def convert_age():
    age_torch_net = AgeModel()
    # Load the Caffe model: caffe.TRAIN selects training mode, caffe.TEST test mode.
    # The two modes use different prototxt files.
    caffe_net = caffe.Net('age_train.prototxt', "age_model_imdb.caffemodel", caffe.TRAIN)
    # caffe_net = caffe.Net('age.prototxt', "age_model_imdb.caffemodel", caffe.TEST)

    # net.params maps each layer name to its blobs: [0] is the weight, [1] the bias
    caffe_params = caffe_net.params
    print(caffe_params)

    # Inspect the indices of the PyTorch layers:
    # print(age_torch_net.model)
    # print(age_torch_net.model[0][0])
    # print(age_torch_net.fullCon)
    # print(age_torch_net.fullCon[0])
    mappings = {
        'conv1_1': age_torch_net.model[0][0],
        'conv1_2': age_torch_net.model[0][2],
        'conv2_1': age_torch_net.model[0][5],
        'conv2_2': age_torch_net.model[0][7],
        'conv3_1': age_torch_net.model[0][10],
        'conv3_2': age_torch_net.model[0][12],
        'conv3_3': age_torch_net.model[0][14],
        'conv4_1': age_torch_net.model[0][17],
        'conv4_2': age_torch_net.model[0][19],
        'conv4_3': age_torch_net.model[0][21],
        'conv5_1': age_torch_net.model[0][24],
        'conv5_2': age_torch_net.model[0][26],
        'conv5_3': age_torch_net.model[0][28],
        'fc6': age_torch_net.fullCon[0],
        'fc7': age_torch_net.fullCon[3],
        'fc8-101': age_torch_net.fullCon[6],
    }
    for k, layer in mappings.items():
        # e.g. k = 'conv1_1', layer = Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        # print(k, layer)
        # Copy the Caffe parameters into the PyTorch model
        layer.weight.data.copy_(torch.from_numpy(caffe_params[k][0].data))  # weight
        layer.bias.data.copy_(torch.from_numpy(caffe_params[k][1].data))    # bias

    # Save only the model parameters
    torch.save(age_torch_net.state_dict(), './age_train.pth')


if __name__ == '__main__':
    convert_age()
```
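The hard-coded indices in `mappings` come from the layout of torchvision's VGG16 conv block: every convolution is followed by a ReLU (two slots) and every max-pool takes one slot, while `fullCon` repeats Linear, ReLU, Dropout. The sketch below derives the same indices from the VGG16 configuration list, which I am assuming matches torchvision's configuration "D" without batch norm; `vgg16_conv_indices` is a hypothetical helper.

```python
# VGG16 layout: numbers are conv output channels, 'M' is a 2x2 max pool
VGG16_CFG = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M',
             512, 512, 512, 'M', 512, 512, 512, 'M']


def vgg16_conv_indices(cfg=VGG16_CFG):
    """Map Caffe conv names (conv1_1, ...) to indices in the VGG16 conv Sequential."""
    mapping = {}
    index, block, conv = 0, 1, 1
    for v in cfg:
        if v == 'M':
            index += 1  # MaxPool2d occupies one slot and ends a block
            block += 1
            conv = 1
        else:
            mapping['conv%d_%d' % (block, conv)] = index
            index += 2  # Conv2d followed by ReLU
            conv += 1
    return mapping


# fullCon as defined in models.py
FULLCON_LAYOUT = ['Linear', 'ReLU', 'Dropout', 'Linear', 'ReLU', 'Dropout', 'Linear']
fc_indices = dict(zip(['fc6', 'fc7', 'fc8-101'],
                      [i for i, kind in enumerate(FULLCON_LAYOUT) if kind == 'Linear']))
```

Deriving the indices this way makes it harder to mistype one of the 16 entries by hand.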

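The conversion first failed with:

RuntimeError: unidentifiable C++ exception

Segmentation fault:

The cause was the prototxt file name passed to caffe.Net. The corrected call is:

caffe_net = caffe.Net('age_train.prototxt.txt', "dex_imdb_wiki.caffemodel", caffe.TRAIN)

The file I downloaded was named age_train.prototxt.txt, while the script loaded age_train.prototxt. Either add the .txt suffix in the code, as above, or rename the file.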
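Because Caffe crashed with an unhelpful C++ exception and a segmentation fault instead of a clear "file not found" message, it can help to check the paths before calling `caffe.Net`. A minimal pre-flight check using only the standard library (the helper name `check_model_files` is hypothetical):

```python
import os


def check_model_files(prototxt, caffemodel):
    """Return the paths that do not exist as regular files (hypothetical pre-flight check)."""
    return [path for path in (prototxt, caffemodel) if not os.path.isfile(path)]
```

For example, `missing = check_model_files('age_train.prototxt.txt', 'dex_imdb_wiki.caffemodel')`, followed by raising an error that lists `missing` if it is non-empty, turns the segmentation fault into a readable message.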

Then another error appeared:

(deeplearning2) userdeMacBook-Pro:face_data user$ python convert.py

Traceback (most recent call last):

File "convert.py", line 2, in <module>

import caffe

File "/Users/user/caffe/python/caffe/__init__.py", line 1, in <module>

from .pycaffe import Net, SGDSolver, NesterovSolver, AdaGradSolver, RMSPropSolver, AdaDeltaSolver, AdamSolver, NCCL, Timer

File "/Users/user/caffe/python/caffe/pycaffe.py", line 13, in <module>

from ._caffe import Net, SGDSolver, NesterovSolver, AdaGradSolver, \

ImportError: dlopen(/Users/user/caffe/python/caffe/_caffe.so, 2): Library not loaded: @rpath/libpython2.7.dylib

Referenced from: /Users/user/caffe/python/caffe/_caffe.so

Reason: image not found


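I was using the Python 2.7 environment from Anaconda3, which already contains libpython2.7.dylib. The error occurs because none of the @rpath entries of _caffe.so point to that environment's lib directory. Add it with: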

(deeplearning2) userdeMacBook-Pro:caffe user$ install_name_tool -add_rpath /anaconda3/envs/deeplearning2/lib /Users/user/caffe/python/caffe/_caffe.so
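To confirm that the rpath was added, `otool -l` lists the load commands of `_caffe.so`; each `LC_RPATH` command is followed by a `path` line. The parser below is a sketch that assumes the usual `otool -l` text layout (as in the test sample); the function name `parse_rpaths` is hypothetical.

```python
def parse_rpaths(otool_output):
    """Extract LC_RPATH directories from the text printed by `otool -l` (sketch)."""
    rpaths = []
    in_rpath = False
    for line in otool_output.splitlines():
        fields = line.split()
        if fields[:2] == ['cmd', 'LC_RPATH']:
            in_rpath = True
        elif in_rpath and fields and fields[0] == 'path':
            # line looks like: "path /some/dir (offset 12)"
            value = line.split('path', 1)[1].rsplit('(offset', 1)[0].strip()
            rpaths.append(value)
            in_rpath = False
    return rpaths
```

On macOS the text can be obtained with `subprocess.check_output(['otool', '-l', '/Users/user/caffe/python/caffe/_caffe.so'])` (decode the bytes first on Python 3); the Anaconda lib directory should then appear in the returned list.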

Yet another error:

I0812 14:26:19.981719 245106112 layer_factory.hpp:77] Creating layer data

F0812 14:26:19.981747 245106112 layer_factory.hpp:81] Check failed: registry.count(type) == 1 (0 vs. 1) Unknown layer type: ImageData (known types: AbsVal, Accuracy, ArgMax, BNLL, BatchNorm, BatchReindex, Bias, Clip, Concat, ContrastiveLoss, Convolution, Crop, Data, Deconvolution, Dropout, DummyData, ELU, Eltwise, Embed, EuclideanLoss, Exp, Filter, Flatten, HDF5Data, HDF5Output, HingeLoss, Im2col, InfogainLoss, InnerProduct, Input, LRN, LSTM, LSTMUnit, Log, MVN, MemoryData, MultinomialLogisticLoss, PReLU, Parameter, Pooling, Power, RNN, ReLU, Reduction, Reshape, SPP, Scale, Sigmoid, SigmoidCrossEntropyLoss, Silence, Slice, Softmax, SoftmaxWithLoss, Split, Swish, TanH, Threshold, Tile)

*** Check failure stack trace: ***

Abort trap:

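I first thought this was because, when configuring Makefile.config, I had not uncommented the line below, so layers written in Python could not be loaded:

```
# Uncomment to support layers written in Python (will link against Python libs)
WITH_PYTHON_LAYER := 1
```

Uncomment that line, then rebuild Caffe: run make clean, then make all, make test, make runtest and make pycaffe. As before, also add the Caffe path ~/caffe/python to the path (typically PYTHONPATH) in ~/.bash_profile, and reload it with source ~/.bash_profile.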
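Caffe's Makefile only enables Python layers when `WITH_PYTHON_LAYER` equals 1, so a line that is still commented out has no effect. A small sketch for checking the contents of Makefile.config before a long rebuild (hypothetical helper; it only recognizes a plain `WITH_PYTHON_LAYER := 1` assignment):

```python
def python_layer_enabled(config_text):
    """True if Makefile.config text contains an uncommented WITH_PYTHON_LAYER := 1."""
    for line in config_text.splitlines():
        line = line.split('#', 1)[0].strip()  # ignore comments
        if not line:
            continue
        key, sep, value = line.partition(':=')
        if sep and key.strip() == 'WITH_PYTHON_LAYER' and value.strip() == '1':
            return True
    return False
```

Call it with the text of the file, for example `python_layer_enabled(open('Makefile.config').read())` from the Caffe source directory.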

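However, this still did not solve the problem. It turned out that this fix is meant for a different error:

Check failed: registry.count(type) == 1 (0 vs. 1) Unknown layer type:python

The method above applies only in that case, although uncommenting the line never hurts. I have already merged this change into the full configuration given earlier, so if you followed that configuration you should not run into this problem.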

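Looking at the model's prototxt again, I saw that it loads its data through ImageData layers (the layer type the error above reports as unknown), and I do not have the files it refers to. So I deleted the data-loading part of the prototxt and replaced it with: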

input: "data"

input_dim:

input_dim:

input_dim:

input_dim:

I also deleted the loss and accuracy layers at the end.
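The replacement header can also be generated. The sketch below writes both the deprecated `input` / `input_dim` form and the equivalent `Input` layer that Caffe upgrades it to (as the log that follows shows, future Caffe releases only accept the layer form). The shape (1, 3, 224, 224), meaning batch size 1 with 3-channel 224×224 crops, is an assumption, and both function names are hypothetical.

```python
def deprecated_input_header(shape, blob="data"):
    """Old-style `input` / `input_dim` lines that replace the ImageData layers."""
    lines = ['input: "%s"' % blob]
    lines += ["input_dim: %d" % d for d in shape]  # N, C, H, W
    return "\n".join(lines)


def input_layer(shape, blob="data"):
    """Equivalent `Input` layer in the newer prototxt style."""
    dims = " ".join("dim: %d" % d for d in shape)
    return ('layer {\n'
            '  name: "input"\n'
            '  type: "Input"\n'
            '  top: "%s"\n'
            '  input_param { shape { %s } }\n'
            '}') % (blob, dims)


ASSUMED_SHAPE = (1, 3, 224, 224)  # assumption: one 3-channel 224x224 image
print(deprecated_input_header(ASSUMED_SHAPE))
print(input_layer(ASSUMED_SHAPE))
```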

Running the script again now works:

(deeplearning2) wanghuideMacBook-Pro:face_data wanghui$ python convert.py

WARNING: Logging before InitGoogleLogging() is written to STDERR

W0812 ::50.179870 _caffe.cpp:] DEPRECATION WARNING - deprecated use of Python interface

W0812 ::50.180747 _caffe.cpp:] Use this instead (with the named "weights" parameter):

W0812 ::50.180763 _caffe.cpp:] Net('age_train.prototxt', , weights='dex_imdb_wiki.caffemodel')

I0812 ::50.188591 upgrade_proto.cpp:] Attempting to upgrade input file specified using deprecated input fields: age_train.prototxt

I0812 ::50.188863 upgrade_proto.cpp:] Successfully upgraded file specified using deprecated input fields.

W0812 ::50.188877 upgrade_proto.cpp:] Note that future Caffe releases will only support input layers and not input fields.

I0812 ::50.189504 net.cpp:] Initializing net from parameters:

name: "VGG_ILSVRC_16_layers"

state {

phase: TRAIN

level:

}

layer {

name: "input"

type: "Input"

top: "data"

input_param {

shape {

dim:

dim:

dim:

dim:

}

}

}

layer {

name: "conv1_1"

type: "Convolution"

...

layer {

name: "fc8-101"

type: "InnerProduct"

bottom: "fc7"

top: "fc8-101"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

inner_product_param {

num_output:

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value:

}

}

}

I0812 ::50.190675 layer_factory.hpp:] Creating layer input

I0812 ::50.190943 net.cpp:] Creating Layer input

I0812 ::50.190958 net.cpp:] input -> data

I0812 ::50.191754 net.cpp:] Setting up input

I0812 ::50.191768 net.cpp:] Top shape: ()

I0812 ::50.192245 net.cpp:] Memory required for data:

I0812 ::50.192253 layer_factory.hpp:] Creating layer conv1_1

I0812 ::50.192262 net.cpp:] Creating Layer conv1_1

I0812 ::50.192267 net.cpp:] conv1_1 <- data

I0812 ::50.192273 net.cpp:] conv1_1 -> conv1_1

I0812 ::50.192760 net.cpp:] Setting up conv1_1

I0812 ::50.192771 net.cpp:] Top shape: ()

I0812 ::50.192780 net.cpp:] Memory required for data:

I0812 ::50.192903 layer_factory.hpp:] Creating layer relu1_1

I0812 ::50.192927 net.cpp:] Creating Layer relu1_1

I0812 ::50.192940 net.cpp:] relu1_1 <- conv1_1

I0812 ::50.192955 net.cpp:] relu1_1 -> conv1_1 (in-place)

I0812 ::50.192987 net.cpp:] Setting up relu1_1

I0812 ::50.193004 net.cpp:] Top shape: ()

I0812 ::50.193015 net.cpp:] Memory required for data:

I0812 ::50.193022 layer_factory.hpp:] Creating layer conv1_2

I0812 ::50.193029 net.cpp:] Creating Layer conv1_2

I0812 ::50.193034 net.cpp:] conv1_2 <- conv1_1

I0812 ::50.193042 net.cpp:] conv1_2 -> conv1_2

I0812 ::50.193079 net.cpp:] Setting up conv1_2

I0812 ::50.193085 net.cpp:] Top shape: ()

I0812 ::50.193092 net.cpp:] Memory required for data:

I0812 ::50.193099 layer_factory.hpp:] Creating layer relu1_2

I0812 ::50.193106 net.cpp:] Creating Layer relu1_2

I0812 ::50.193111 net.cpp:] relu1_2 <- conv1_2

I0812 ::50.193116 net.cpp:] relu1_2 -> conv1_2 (in-place)

I0812 ::50.193122 net.cpp:] Setting up relu1_2

I0812 ::50.193126 net.cpp:] Top shape: ()

I0812 ::50.193132 net.cpp:] Memory required for data:

I0812 ::50.193137 layer_factory.hpp:] Creating layer pool1

I0812 ::50.193142 net.cpp:] Creating Layer pool1

I0812 ::50.193147 net.cpp:] pool1 <- conv1_2

I0812 ::50.193152 net.cpp:] pool1 -> pool1

I0812 ::50.193373 net.cpp:] Setting up pool1

I0812 ::50.193385 net.cpp:] Top shape: ()

I0812 ::50.193394 net.cpp:] Memory required for data:

I0812 ::50.193400 layer_factory.hpp:] Creating layer conv2_1

I0812 ::50.193408 net.cpp:] Creating Layer conv2_1

I0812 ::50.193414 net.cpp:] conv2_1 <- pool1

I0812 ::50.193421 net.cpp:] conv2_1 -> conv2_1

I0812 ::50.193460 net.cpp:] Setting up conv2_1

I0812 ::50.193466 net.cpp:] Top shape: ()

I0812 ::50.193473 net.cpp:] Memory required for data:

I0812 ::50.193481 layer_factory.hpp:] Creating layer relu2_1

I0812 ::50.193487 net.cpp:] Creating Layer relu2_1

I0812 ::50.193492 net.cpp:] relu2_1 <- conv2_1

I0812 ::50.193497 net.cpp:] relu2_1 -> conv2_1 (in-place)

I0812 ::50.193504 net.cpp:] Setting up relu2_1

I0812 ::50.193508 net.cpp:] Top shape: ()

I0812 ::50.193514 net.cpp:] Memory required for data:

I0812 ::50.193519 layer_factory.hpp:] Creating layer conv2_2

I0812 ::50.193526 net.cpp:] Creating Layer conv2_2

I0812 ::50.193531 net.cpp:] conv2_2 <- conv2_1

I0812 ::50.193536 net.cpp:] conv2_2 -> conv2_2

I0812 ::50.193588 net.cpp:] Setting up conv2_2

I0812 ::50.193596 net.cpp:] Top shape: ()

I0812 ::50.193604 net.cpp:] Memory required for data:

I0812 ::50.193610 layer_factory.hpp:] Creating layer relu2_2

I0812 ::50.193616 net.cpp:] Creating Layer relu2_2

I0812 ::50.193620 net.cpp:] relu2_2 <- conv2_2

I0812 ::50.193625 net.cpp:] relu2_2 -> conv2_2 (in-place)

I0812 ::50.193631 net.cpp:] Setting up relu2_2

I0812 ::50.193635 net.cpp:] Top shape: ()

I0812 ::50.193641 net.cpp:] Memory required for data:

I0812 ::50.193645 layer_factory.hpp:] Creating layer pool2

I0812 ::50.193651 net.cpp:] Creating Layer pool2

I0812 ::50.193655 net.cpp:] pool2 <- conv2_2

I0812 ::50.193661 net.cpp:] pool2 -> pool2

I0812 ::50.193668 net.cpp:] Setting up pool2

I0812 ::50.193672 net.cpp:] Top shape: ()

I0812 ::50.193678 net.cpp:] Memory required for data:

I0812 ::50.193682 layer_factory.hpp:] Creating layer conv3_1

I0812 ::50.193688 net.cpp:] Creating Layer conv3_1

I0812 ::50.193694 net.cpp:] conv3_1 <- pool2

I0812 ::50.193699 net.cpp:] conv3_1 -> conv3_1

I0812 ::50.193792 net.cpp:] Setting up conv3_1

I0812 ::50.193799 net.cpp:] Top shape: ()

I0812 ::50.193805 net.cpp:] Memory required for data:

I0812 ::50.193814 layer_factory.hpp:] Creating layer relu3_1

I0812 ::50.193819 net.cpp:] Creating Layer relu3_1

I0812 ::50.193825 net.cpp:] relu3_1 <- conv3_1

I0812 ::50.193832 net.cpp:] relu3_1 -> conv3_1 (in-place)

I0812 ::50.193838 net.cpp:] Setting up relu3_1

I0812 ::50.193843 net.cpp:] Top shape: ()

I0812 ::50.193850 net.cpp:] Memory required for data:

I0812 ::50.193855 layer_factory.hpp:] Creating layer conv3_2

I0812 ::50.193861 net.cpp:] Creating Layer conv3_2

I0812 ::50.193866 net.cpp:] conv3_2 <- conv3_1

I0812 ::50.193871 net.cpp:] conv3_2 -> conv3_2

I0812 ::50.194085 net.cpp:] Setting up conv3_2

I0812 ::50.194097 net.cpp:] Top shape: ()

I0812 ::50.194105 net.cpp:] Memory required for data:

I0812 ::50.194113 layer_factory.hpp:] Creating layer relu3_2

I0812 ::50.194119 net.cpp:] Creating Layer relu3_2

I0812 ::50.194124 net.cpp:] relu3_2 <- conv3_2

I0812 ::50.194130 net.cpp:] relu3_2 -> conv3_2 (in-place)

I0812 ::50.194137 net.cpp:] Setting up relu3_2

I0812 ::50.194141 net.cpp:] Top shape: ()

I0812 ::50.194147 net.cpp:] Memory required for data:

I0812 ::50.194151 layer_factory.hpp:] Creating layer conv3_3

I0812 ::50.194159 net.cpp:] Creating Layer conv3_3

I0812 ::50.194162 net.cpp:] conv3_3 <- conv3_2

I0812 ::50.194169 net.cpp:] conv3_3 -> conv3_3

I0812 ::50.194345 net.cpp:] Setting up conv3_3

I0812 ::50.194353 net.cpp:] Top shape: ()

I0812 ::50.194360 net.cpp:] Memory required for data:

I0812 ::50.194366 layer_factory.hpp:] Creating layer relu3_3

I0812 ::50.194373 net.cpp:] Creating Layer relu3_3

I0812 ::50.194377 net.cpp:] relu3_3 <- conv3_3

I0812 ::50.194383 net.cpp:] relu3_3 -> conv3_3 (in-place)

I0812 ::50.194388 net.cpp:] Setting up relu3_3

I0812 ::50.194393 net.cpp:] Top shape: ()

I0812 ::50.194398 net.cpp:] Memory required for data:

I0812 ::50.194403 layer_factory.hpp:] Creating layer pool3

I0812 ::50.194411 net.cpp:] Creating Layer pool3

I0812 ::50.194416 net.cpp:] pool3 <- conv3_3

I0812 ::50.194422 net.cpp:] pool3 -> pool3

I0812 ::50.194428 net.cpp:] Setting up pool3

I0812 ::50.194433 net.cpp:] Top shape: ()

I0812 ::50.194438 net.cpp:] Memory required for data:

I0812 ::50.194443 layer_factory.hpp:] Creating layer conv4_1

I0812 ::50.194450 net.cpp:] Creating Layer conv4_1

I0812 ::50.194454 net.cpp:] conv4_1 <- pool3

I0812 ::50.194460 net.cpp:] conv4_1 -> conv4_1

I0812 ::50.194834 net.cpp:] Setting up conv4_1

I0812 ::50.194847 net.cpp:] Top shape: ()

I0812 ::50.194854 net.cpp:] Memory required for data:

I0812 ::50.194861 layer_factory.hpp:] Creating layer relu4_1

I0812 ::50.194867 net.cpp:] Creating Layer relu4_1

I0812 ::50.194874 net.cpp:] relu4_1 <- conv4_1

I0812 ::50.194880 net.cpp:] relu4_1 -> conv4_1 (in-place)

I0812 ::50.194885 net.cpp:] Setting up relu4_1

I0812 ::50.194890 net.cpp:] Top shape: ()

I0812 ::50.194895 net.cpp:] Memory required for data:

I0812 ::50.194900 layer_factory.hpp:] Creating layer conv4_2

I0812 ::50.194905 net.cpp:] Creating Layer conv4_2

I0812 ::50.194911 net.cpp:] conv4_2 <- conv4_1

I0812 ::50.194917 net.cpp:] conv4_2 -> conv4_2

I0812 ::50.195816 net.cpp:] Setting up conv4_2

I0812 ::50.195835 net.cpp:] Top shape: ()

I0812 ::50.195843 net.cpp:] Memory required for data:

I0812 ::50.195854 layer_factory.hpp:] Creating layer relu4_2

I0812 ::50.195863 net.cpp:] Creating Layer relu4_2

I0812 ::50.195868 net.cpp:] relu4_2 <- conv4_2

I0812 ::50.195874 net.cpp:] relu4_2 -> conv4_2 (in-place)

I0812 ::50.195881 net.cpp:] Setting up relu4_2

I0812 ::50.195886 net.cpp:] Top shape: ()

I0812 ::50.195892 net.cpp:] Memory required for data:

I0812 ::50.195896 layer_factory.hpp:] Creating layer conv4_3

I0812 ::50.195904 net.cpp:] Creating Layer conv4_3

I0812 ::50.195907 net.cpp:] conv4_3 <- conv4_2

I0812 ::50.195914 net.cpp:] conv4_3 -> conv4_3

I0812 ::50.197036 net.cpp:] Setting up conv4_3

I0812 ::50.197062 net.cpp:] Top shape: ()

I0812 ::50.197072 net.cpp:] Memory required for data:

I0812 ::50.197079 layer_factory.hpp:] Creating layer relu4_3

I0812 ::50.197088 net.cpp:] Creating Layer relu4_3

I0812 ::50.197094 net.cpp:] relu4_3 <- conv4_3

I0812 ::50.197100 net.cpp:] relu4_3 -> conv4_3 (in-place)

I0812 ::50.197108 net.cpp:] Setting up relu4_3

I0812 ::50.197111 net.cpp:] Top shape: ()

I0812 ::50.197116 net.cpp:] Memory required for data:

I0812 ::50.197120 layer_factory.hpp:] Creating layer pool4

I0812 ::50.197126 net.cpp:] Creating Layer pool4

I0812 ::50.197130 net.cpp:] pool4 <- conv4_3

I0812 ::50.197136 net.cpp:] pool4 -> pool4

I0812 ::50.197144 net.cpp:] Setting up pool4

I0812 ::50.197149 net.cpp:] Top shape: ()

I0812 ::50.197154 net.cpp:] Memory required for data:

I0812 ::50.197158 layer_factory.hpp:] Creating layer conv5_1

I0812 ::50.197166 net.cpp:] Creating Layer conv5_1

I0812 ::50.197171 net.cpp:] conv5_1 <- pool4

I0812 ::50.197176 net.cpp:] conv5_1 -> conv5_1

I0812 ::50.198091 net.cpp:] Setting up conv5_1

I0812 ::50.198110 net.cpp:] Top shape: ()

I0812 ::50.198118 net.cpp:] Memory required for data:

I0812 ::50.198124 layer_factory.hpp:] Creating layer relu5_1

I0812 ::50.198132 net.cpp:] Creating Layer relu5_1

I0812 ::50.198137 net.cpp:] relu5_1 <- conv5_1

I0812 ::50.198143 net.cpp:] relu5_1 -> conv5_1 (in-place)

I0812 ::50.198149 net.cpp:] Setting up relu5_1

I0812 ::50.198153 net.cpp:] Top shape: ()

I0812 ::50.198159 net.cpp:] Memory required for data:

I0812 ::50.198163 layer_factory.hpp:] Creating layer conv5_2

I0812 ::50.198170 net.cpp:] Creating Layer conv5_2

I0812 ::50.198174 net.cpp:] conv5_2 <- conv5_1

I0812 ::50.198179 net.cpp:] conv5_2 -> conv5_2

I0812 ::50.199494 net.cpp:] Setting up conv5_2

I0812 ::50.199513 net.cpp:] Top shape: ()

I0812 ::50.199522 net.cpp:] Memory required for data:

I0812 ::50.199528 layer_factory.hpp:] Creating layer relu5_2

I0812 ::50.199539 net.cpp:] Creating Layer relu5_2

I0812 ::50.199545 net.cpp:] relu5_2 <- conv5_2

I0812 ::50.199551 net.cpp:] relu5_2 -> conv5_2 (in-place)

I0812 ::50.199558 net.cpp:] Setting up relu5_2

I0812 ::50.199563 net.cpp:] Top shape: ()

I0812 ::50.199568 net.cpp:] Memory required for data:

I0812 ::50.199571 layer_factory.hpp:] Creating layer conv5_3

I0812 ::50.199579 net.cpp:] Creating Layer conv5_3

I0812 ::50.199582 net.cpp:] conv5_3 <- conv5_2

I0812 ::50.199589 net.cpp:] conv5_3 -> conv5_3

I0812 ::50.200711 net.cpp:] Setting up conv5_3

I0812 ::50.200731 net.cpp:] Top shape: ()

I0812 ::50.200739 net.cpp:] Memory required for data:

I0812 ::50.200747 layer_factory.hpp:] Creating layer relu5_3

I0812 ::50.200753 net.cpp:] Creating Layer relu5_3

I0812 ::50.200758 net.cpp:] relu5_3 <- conv5_3

I0812 ::50.200764 net.cpp:] relu5_3 -> conv5_3 (in-place)

I0812 ::50.200790 net.cpp:] Setting up relu5_3

I0812 ::50.200794 net.cpp:] Top shape: ()

I0812 ::50.200800 net.cpp:] Memory required for data:

I0812 ::50.200804 layer_factory.hpp:] Creating layer pool5

I0812 ::50.200810 net.cpp:] Creating Layer pool5

I0812 ::50.200814 net.cpp:] pool5 <- conv5_3

I0812 ::50.200820 net.cpp:] pool5 -> pool5

I0812 ::50.200830 net.cpp:] Setting up pool5

I0812 ::50.200836 net.cpp:] Top shape: ()

I0812 ::50.200855 net.cpp:] Memory required for data:

I0812 ::50.200860 layer_factory.hpp:] Creating layer fc6

I0812 ::50.200866 net.cpp:] Creating Layer fc6

I0812 ::50.200870 net.cpp:] fc6 <- pool5

I0812 ::50.200876 net.cpp:] fc6 -> fc6

I0812 ::50.257817 net.cpp:] Setting up fc6

I0812 ::50.257875 net.cpp:] Top shape: ()

I0812 ::50.257887 net.cpp:] Memory required for data:

I0812 ::50.257897 layer_factory.hpp:] Creating layer relu6

I0812 ::50.257906 net.cpp:] Creating Layer relu6

I0812 ::50.257912 net.cpp:] relu6 <- fc6

I0812 ::50.257920 net.cpp:] relu6 -> fc6 (in-place)

I0812 ::50.257927 net.cpp:] Setting up relu6

I0812 ::50.257931 net.cpp:] Top shape: ()

I0812 ::50.257936 net.cpp:] Memory required for data:

I0812 ::50.257941 layer_factory.hpp:] Creating layer drop6

I0812 ::50.257947 net.cpp:] Creating Layer drop6

I0812 ::50.257952 net.cpp:] drop6 <- fc6

I0812 ::50.257957 net.cpp:] drop6 -> fc6 (in-place)

I0812 ::50.257974 net.cpp:] Setting up drop6

I0812 ::50.257978 net.cpp:] Top shape: ()

I0812 ::50.257983 net.cpp:] Memory required for data:

I0812 ::50.257987 layer_factory.hpp:] Creating layer fc7

I0812 ::50.257994 net.cpp:] Creating Layer fc7

I0812 ::50.257998 net.cpp:] fc7 <- fc6

I0812 ::50.258003 net.cpp:] fc7 -> fc7

I0812 ::50.303862 net.cpp:] Setting up fc7

I0812 ::50.303889 net.cpp:] Top shape: ()

I0812 ::50.303900 net.cpp:] Memory required for data:

I0812 ::50.303908 layer_factory.hpp:] Creating layer relu7

I0812 ::50.303918 net.cpp:] Creating Layer relu7

I0812 ::50.303923 net.cpp:] relu7 <- fc7

I0812 ::50.303942 net.cpp:] relu7 -> fc7 (in-place)

I0812 ::50.303951 net.cpp:] Setting up relu7

I0812 ::50.303956 net.cpp:] Top shape: ()

I0812 ::50.303961 net.cpp:] Memory required for data:

I0812 ::50.303966 layer_factory.hpp:] Creating layer drop7

I0812 ::50.303972 net.cpp:] Creating Layer drop7

I0812 ::50.303977 net.cpp:] drop7 <- fc7

I0812 ::50.303982 net.cpp:] drop7 -> fc7 (in-place)

I0812 ::50.303987 net.cpp:] Setting up drop7

I0812 ::50.303992 net.cpp:] Top shape: ()

I0812 ::50.303997 net.cpp:] Memory required for data:

I0812 ::50.304002 layer_factory.hpp:] Creating layer fc8-101

I0812 ::50.304008 net.cpp:] Creating Layer fc8-101

I0812 ::50.304013 net.cpp:] fc8-101 <- fc7

I0812 ::50.304019 net.cpp:] fc8-101 -> fc8-101

I0812 ::50.312885 net.cpp:] Setting up fc8-101

I0812 ::50.312916 net.cpp:] Top shape: ()

I0812 ::50.312927 net.cpp:] Memory required for data:

I0812 ::50.312934 net.cpp:] fc8-101 does not need backward computation.

I0812 ::50.312940 net.cpp:] drop7 does not need backward computation.

I0812 ::50.312944 net.cpp:] relu7 does not need backward computation.

I0812 ::50.312949 net.cpp:] fc7 does not need backward computation.

I0812 ::50.312953 net.cpp:] drop6 does not need backward computation.

I0812 ::50.312958 net.cpp:] relu6 does not need backward computation.

I0812 ::50.312963 net.cpp:] fc6 does not need backward computation.

I0812 ::50.312968 net.cpp:] pool5 does not need backward computation.

I0812 ::50.312973 net.cpp:] relu5_3 does not need backward computation.

I0812 ::50.312978 net.cpp:] conv5_3 does not need backward computation.

I0812 ::50.312981 net.cpp:] relu5_2 does not need backward computation.

I0812 ::50.312986 net.cpp:] conv5_2 does not need backward computation.

I0812 ::50.312991 net.cpp:] relu5_1 does not need backward computation.

I0812 ::50.312995 net.cpp:] conv5_1 does not need backward computation.

I0812 ::50.313000 net.cpp:] pool4 does not need backward computation.

I0812 ::50.313005 net.cpp:] relu4_3 does not need backward computation.

I0812 ::50.313009 net.cpp:] conv4_3 does not need backward computation.

I0812 ::50.313014 net.cpp:] relu4_2 does not need backward computation.

I0812 ::50.313019 net.cpp:] conv4_2 does not need backward computation.

I0812 ::50.313024 net.cpp:] relu4_1 does not need backward computation.

I0812 ::50.313027 net.cpp:] conv4_1 does not need backward computation.

I0812 ::50.313032 net.cpp:] pool3 does not need backward computation.

I0812 ::50.313037 net.cpp:] relu3_3 does not need backward computation.

I0812 ::50.313041 net.cpp:] conv3_3 does not need backward computation.

I0812 ::50.313046 net.cpp:] relu3_2 does not need backward computation.

I0812 ::50.313050 net.cpp:] conv3_2 does not need backward computation.

I0812 ::50.313055 net.cpp:] relu3_1 does not need backward computation.

I0812 ::50.313060 net.cpp:] conv3_1 does not need backward computation.

I0812 ::50.313064 net.cpp:] pool2 does not need backward computation.

I0812 ::50.313069 net.cpp:] relu2_2 does not need backward computation.

I0812 ::50.313073 net.cpp:] conv2_2 does not need backward computation.

I0812 ::50.313078 net.cpp:] relu2_1 does not need backward computation.

I0812 ::50.313082 net.cpp:] conv2_1 does not need backward computation.

I0812 ::50.313087 net.cpp:] pool1 does not need backward computation.

I0812 ::50.313092 net.cpp:] relu1_2 does not need backward computation.

I0812 ::50.313097 net.cpp:] conv1_2 does not need backward computation.

I0812 ::50.313102 net.cpp:] relu1_1 does not need backward computation.

I0812 ::50.313107 net.cpp:] conv1_1 does not need backward computation.

I0812 ::50.313110 net.cpp:] input does not need backward computation.

I0812 ::50.313114 net.cpp:] This network produces output fc8-101

I0812 ::50.313129 net.cpp:] Network initialization done.

[libprotobuf WARNING google/protobuf/io/coded_stream.cc:] Reading dangerously large protocol message. If the message turns out to be larger than bytes, parsing will be halted for security reasons. To increase the limit (or to disable these warnings), see CodedInputStream::SetTotalBytesLimit() in google/protobuf/io/coded_stream.h.

[libprotobuf WARNING google/protobuf/io/coded_stream.cc:] The total number of bytes read was

I0812 ::51.490310 net.cpp:] Ignoring source layer data

I0812 ::51.597520 net.cpp:] Ignoring source layer loss

OrderedDict([('conv1_1', <caffe._caffe.BlobVec object at 0x13cafcf30>), ('conv1_2', <caffe._caffe.BlobVec object at 0x13cafcfa0>), ('conv2_1', <caffe._caffe.BlobVec object at 0x158bf0050>), ('conv2_2', <caffe._caffe.BlobVec object at 0x158bf00c0>), ('conv3_1', <caffe._caffe.BlobVec object at 0x158bf0130>), ('conv3_2', <caffe._caffe.BlobVec object at 0x158bf01a0>), ('conv3_3', <caffe._caffe.BlobVec object at 0x158bf0210>), ('conv4_1', <caffe._caffe.BlobVec object at 0x158bf0280>), ('conv4_2', <caffe._caffe.BlobVec object at 0x158bf02f0>), ('conv4_3', <caffe._caffe.BlobVec object at 0x158bf0360>), ('conv5_1', <caffe._caffe.BlobVec object at 0x158bf03d0>), ('conv5_2', <caffe._caffe.BlobVec object at 0x158bf0440>), ('conv5_3', <caffe._caffe.BlobVec object at 0x158bf04b0>), ('fc6', <caffe._caffe.BlobVec object at 0x158bf0520>), ('fc7', <caffe._caffe.BlobVec object at 0x158bf0590>), ('fc8-101', <caffe._caffe.BlobVec object at 0x158bf0600>)])

This successfully produced the age_train.pth model.
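Caffe stores convolution weights as (num_output, channels, kernel_h, kernel_w) and InnerProduct weights as (num_output, inputs), the same layout PyTorch uses for `Conv2d` and `Linear`, which is why the blobs can be copied without transposing. Because `Tensor.copy_` broadcasts its source, a shape mismatch might not raise an error, so comparing shapes before copying is cheap insurance. A sketch with a hypothetical helper that works on plain shape tuples:

```python
def mismatched_shapes(caffe_shapes, torch_shapes):
    """Return the sorted layer names whose Caffe and PyTorch shapes differ (hypothetical check)."""
    problems = []
    for name, caffe_shape in caffe_shapes.items():
        torch_shape = torch_shapes.get(name)
        if torch_shape is None or tuple(caffe_shape) != tuple(torch_shape):
            problems.append(name)
    return sorted(problems)
```

Inside `convert_age`, the inputs could be built as `{k: caffe_params[k][0].data.shape for k in mappings}` and `{k: tuple(layer.weight.size()) for k, layer in mappings.items()}` (and likewise for the biases with index 1).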

5. Testing

Use the saved PyTorch model to estimate the age of the person in an image:

from PIL import Image
from torchvision import transforms as T
import torch
from models import AgeModel  # the PyTorch VGG-16 age model defined earlier


def expected_age(outputs):
    # outputs: (batch, 101) softmax probabilities; returns sum_i i * p_i per sample
    age_output = []
    for output in outputs:
        age = 0
        for i, j in enumerate(output):
            age += i * j
        age_output.append(age.item())
    return torch.FloatTensor(age_output)


def test(img_path, model_path):
    transform = T.Compose([
        T.Resize(256),      # the resize value was lost in the original; 256 is an assumption
        T.CenterCrop(224),  # VGG-16 expects 224x224 inputs
        T.ToTensor(),
        T.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ])
    img = Image.open(img_path).convert("RGB")  # drop the alpha channel of RGBA PNGs (see below)
    img = transform(img).unsqueeze(0)          # add the batch dimension
    model = AgeModel()
    model.load_state_dict(torch.load(model_path))
    model.eval()  # inference mode: disables dropout
    age = model(img)
    print(age)
    print(age.size())
    age = expected_age(age)
    print(age)
    print(age.size())


if __name__ == '__main__':
    img_path = './Tom_Hanks_54745.png'
    model_path = './age_train.pth'
    test(img_path, model_path)
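The `expected_age` function turns the 101 softmax probabilities into a single number by taking their expected value. This is the "Deep EXpectation" that DEX is named after. Let $p_i$ be the output of `fc8-101` for class $i$, and assume that class $i$ stands for age $i$ (0 to 100). Then:

$$
\hat{y} = \sum_{i=0}^{100} i \, p_i, \qquad \text{where } \sum_{i=0}^{100} p_i = 1 .
$$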

Output:

(deeplearning2) userdeMacBook-Pro:face_data user$ python check_model.py

tensor([[0.0032, 0.0039, 0.0027, 0.0020, 0.0026, 0.0031, 0.0029, 0.0035, 0.0051,

0.0046, 0.0056, 0.0069, 0.0073, 0.0065, 0.0071, 0.0071, 0.0090, 0.0143,

0.0225, 0.0269, 0.0288, 0.0373, 0.0335, 0.0343, 0.0282, 0.0303, 0.0256,

0.0263, 0.0227, 0.0210, 0.0210, 0.0176, 0.0140, 0.0144, 0.0135, 0.0121,

0.0107, 0.0112, 0.0110, 0.0088, 0.0109, 0.0092, 0.0086, 0.0140, 0.0096,

0.0106, 0.0123, 0.0128, 0.0139, 0.0132, 0.0110, 0.0111, 0.0144, 0.0127,

0.0110, 0.0153, 0.0103, 0.0113, 0.0117, 0.0136, 0.0147, 0.0124, 0.0096,

0.0091, 0.0091, 0.0097, 0.0070, 0.0072, 0.0068, 0.0077, 0.0067, 0.0069,

0.0062, 0.0065, 0.0056, 0.0047, 0.0045, 0.0045, 0.0048, 0.0049, 0.0040,

0.0031, 0.0031, 0.0026, 0.0033, 0.0024, 0.0023, 0.0020, 0.0020, 0.0021,

0.0020, 0.0016, 0.0021, 0.0016, 0.0016, 0.0014, 0.0014, 0.0013, 0.0015,

0.0013, 0.0020]], grad_fn=<SoftmaxBackward>)

(, )

tensor([39.2897])

(,)
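Below is a minimal pure-Python sketch of the same computation for one output vector. It also reports the single most probable age for comparison. `summarize_age_distribution` is a hypothetical helper, not part of the original script. In the output above, the largest probability (0.0373) is at index 21, while the expectation is 39.29. For a wide distribution like this one, the two summaries can differ substantially.

```python
def summarize_age_distribution(probs):
    """Return (expected_age, most_likely_age) for one softmax output vector.

    Assumes probs[i] is the probability of age i (0..100 for the DEX model).
    """
    if len(probs) == 0:
        raise ValueError("empty probability vector")
    # DEX prediction: expectation sum_i i * p_i
    expected = sum(i * p for i, p in enumerate(probs))
    # Mode: the index with the highest probability (first one on ties)
    most_likely = max(range(len(probs)), key=lambda i: probs[i])
    return expected, most_likely


def summarize_batch(batch):
    """Apply summarize_age_distribution to every row of a (batch, classes) list."""
    return [summarize_age_distribution(row) for row in batch]


if __name__ == "__main__":
    toy = [0.1, 0.2, 0.7]  # toy 3-class distribution, not real model output
    print(summarize_age_distribution(toy))
```

On a PyTorch tensor `probs` of shape (batch, 101), the expectation can be computed without Python loops as `(probs * torch.arange(probs.size(1), dtype=probs.dtype)).sum(dim=1)`, and the mode as `probs.argmax(dim=1)`.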

The test image is:

Along the way, the following error came up:

RuntimeError: The size of tensor a () must match the size of tensor b () at non-singleton dimension

Inspecting the input image showed that it is in RGBA mode:

<PIL.PngImagePlugin.PngImageFile image mode=RGBA size=524x473 at 0x10138FFD0>

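`ToTensor` turns an RGBA image into a 4-channel tensor, but `Normalize` is given only three means and standard deviations, so the shapes do not match. The image therefore has to be converted to RGB right after it is opened: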

img = img.convert("RGB")
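Pillow's `convert("RGB")` drops the alpha channel without blending, so transparent pixels keep whatever RGB values are stored under them. Below is a minimal pure-Python sketch that contrasts this with compositing onto a solid background. Compositing is an alternative approach, not what the original code does. `drop_alpha` and `composite_on_background` are hypothetical helpers that operate on lists of (R, G, B, A) tuples.

```python
def drop_alpha(pixels):
    """Mimic convert("RGB") on RGBA data: keep R, G, B and discard A."""
    return [(r, g, b) for r, g, b, _a in pixels]


def composite_on_background(pixels, background=(255, 255, 255)):
    """Blend each RGBA pixel over an opaque background colour.

    out = alpha * colour + (1 - alpha) * background, with alpha = A / 255.
    """
    out = []
    for r, g, b, a in pixels:
        alpha = a / 255.0
        out.append(tuple(
            int(round(alpha * c + (1.0 - alpha) * bg))
            for c, bg in zip((r, g, b), background)
        ))
    return out


if __name__ == "__main__":
    transparent_black = [(0, 0, 0, 0)]  # a fully transparent pixel stored as black
    print(drop_alpha(transparent_black))               # background turns black
    print(composite_on_background(transparent_black))  # background turns white
```

With Pillow, the compositing variant is commonly written by pasting the RGBA image onto an RGB background, using its alpha band as the mask: `background.paste(img, mask=img.split()[3])`.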
