Wu Yuxiong (吴裕雄) Python Machine Learning: DMT (2)

import matplotlib.pyplot as plt

# Box and arrow styles shared by every node in the plot.
decisionNode = dict(boxstyle="sawtooth", fc="0.8")
leafNode = dict(boxstyle="round4", fc="0.8")
arrow_args = dict(arrowstyle="<-")


def getNumLeafs(myTree):
    """Count the leaf nodes of a tree stored as nested dicts."""
    numLeafs = 0
    firstStr = next(iter(myTree))  # the single root key is the feature name
    secondDict = myTree[firstStr]
    for key in secondDict:
        if isinstance(secondDict[key], dict):
            numLeafs += getNumLeafs(secondDict[key])
        else:
            numLeafs += 1
    return numLeafs


def getTreeDepth(myTree):
    """Return the number of decision levels on the longest root-to-leaf path."""
    maxDepth = 0
    firstStr = next(iter(myTree))
    secondDict = myTree[firstStr]
    for key in secondDict:
        if isinstance(secondDict[key], dict):
            thisDepth = 1 + getTreeDepth(secondDict[key])
        else:
            thisDepth = 1
        if thisDepth > maxDepth:
            maxDepth = thisDepth
    return maxDepth


def plotNode(nodeTxt, centerPt, parentPt, nodeType):
    """Draw one boxed node at centerPt with an arrow coming from parentPt."""
    createPlot.ax1.annotate(nodeTxt, xy=parentPt, xycoords='axes fraction',
                            xytext=centerPt, textcoords='axes fraction',
                            va="center", ha="center", bbox=nodeType,
                            arrowprops=arrow_args)


def plotMidText(cntrPt, parentPt, txtString):
    """Write the edge label halfway between a node and its parent."""
    xMid = (parentPt[0] - cntrPt[0])/2.0 + cntrPt[0]
    yMid = (parentPt[1] - cntrPt[1])/2.0 + cntrPt[1]
    createPlot.ax1.text(xMid, yMid, txtString, va="center", ha="center", rotation=30)


def plotTree(myTree, parentPt, nodeTxt):
    """Recursively draw myTree below parentPt; nodeTxt labels the incoming edge."""
    numLeafs = getNumLeafs(myTree)
    firstStr = next(iter(myTree))
    # Center this decision node above the leaves it spans.
    cntrPt = (plotTree.xOff + (1.0 + float(numLeafs))/2.0/plotTree.totalW, plotTree.yOff)
    plotMidText(cntrPt, parentPt, nodeTxt)
    plotNode(firstStr, cntrPt, parentPt, decisionNode)
    secondDict = myTree[firstStr]
    plotTree.yOff = plotTree.yOff - 1.0/plotTree.totalD  # move down one level
    for key in secondDict:
        if isinstance(secondDict[key], dict):
            plotTree(secondDict[key], cntrPt, str(key))
        else:
            plotTree.xOff = plotTree.xOff + 1.0/plotTree.totalW
            plotNode(secondDict[key], (plotTree.xOff, plotTree.yOff), cntrPt, leafNode)
            plotMidText((plotTree.xOff, plotTree.yOff), cntrPt, str(key))
    plotTree.yOff = plotTree.yOff + 1.0/plotTree.totalD  # move back up


def createPlot(inTree):
    """Set up a blank figure and draw inTree on it."""
    fig = plt.figure(1, facecolor='white')
    fig.clf()
    axprops = dict(xticks=[], yticks=[])
    createPlot.ax1 = plt.subplot(111, frameon=False, **axprops)
    # createPlot.ax1 = plt.subplot(111, frameon=False)  # ticks for demo purposes
    # Layout state is kept as attributes on the plotTree function object.
    plotTree.totalW = float(getNumLeafs(inTree))
    plotTree.totalD = float(getTreeDepth(inTree))
    plotTree.xOff = -0.5/plotTree.totalW
    plotTree.yOff = 1.0
    plotTree(inTree, (0.5, 1.0), '')
    plt.show()


def retrieveTree(i):
    """Return one of two hard-coded sample trees for testing the plot."""
    listOfTrees = [
        {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}},
        {'no surfacing': {0: 'no', 1: {'flippers': {0: {'head': {0: 'no', 1: 'yes'}}, 1: 'no'}}}},
    ]
    return listOfTrees[i]


thisTree = retrieveTree(0)
createPlot(thisTree)

thisTree = retrieveTree(1)
createPlot(thisTree)
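The layout above depends on two numbers, the leaf count (stored as `plotTree.totalW`) and the depth (stored as `plotTree.totalD`). They can be checked without opening a figure. The following is a minimal sketch, assuming a tree is a nested dict with exactly one feature key per level; the helper names `count_leaves` and `tree_depth` are hypothetical and are not part of the original post.

```python
def count_leaves(tree):
    """Count leaves of a nested-dict tree (hypothetical helper)."""
    feature = next(iter(tree))  # the only key at this level
    return sum(count_leaves(child) if isinstance(child, dict) else 1
               for child in tree[feature].values())


def tree_depth(tree):
    """Number of decision levels on the longest path (hypothetical helper)."""
    feature = next(iter(tree))
    return max(1 + tree_depth(child) if isinstance(child, dict) else 1
               for child in tree[feature].values())


# The two sample trees, copied from retrieveTree in the post.
tree0 = {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}
tree1 = {'no surfacing': {0: 'no', 1: {'flippers': {0: {'head': {0: 'no', 1: 'yes'}}, 1: 'no'}}}}

print(count_leaves(tree0), tree_depth(tree0))
print(count_leaves(tree1), tree_depth(tree1))
```

For the first sample tree this gives 3 leaves and a depth of 2, so leaves are spaced 1/3 of the axes width apart and each level drops by 1/2 of the axes height.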

import numpy as np

import operator as op

from math import log def calcShannonEnt(dataSet):

labelCounts = {}

for featVec in dataSet:

currentLabel = featVec[-1]

if(currentLabel not in labelCounts.keys()):

labelCounts[currentLabel] = 0

labelCounts[currentLabel] += 1

shannonEnt = 0.0

rowNum = len(dataSet)

for key in labelCounts:

prob = float(labelCounts[key])/rowNum

shannonEnt -= prob * log(prob,2)

return shannonEnt def splitDataSet(dataSet, axis, value):

retDataSet = []

for featVec in dataSet:

if(featVec[axis] == value):

reducedFeatVec = featVec[:axis]

reducedFeatVec.extend(featVec[axis+1:])

retDataSet.append(reducedFeatVec)

return retDataSet def chooseBestFeatureToSplit(dataSet):

numFeatures = np.shape(dataSet)[1]-1

baseEntropy = calcShannonEnt(dataSet)

bestInfoGain = 0.0

bestFeature = -1

for i in range(numFeatures):

featList = [example[i] for example in dataSet]

uniqueVals = set(featList)

newEntropy = 0.0

for value in uniqueVals:

subDataSet = splitDataSet(dataSet, i, value)

prob = len(subDataSet)/float(len(dataSet))

newEntropy += prob * calcShannonEnt(subDataSet)

infoGain = baseEntropy - newEntropy

if (infoGain > bestInfoGain):

bestInfoGain = infoGain

bestFeature = i

return bestFeature def majorityCnt(classList):

classCount={}

for vote in classList:

if(vote not in classCount.keys()):

classCount[vote] = 0

classCount[vote] += 1

sortedClassCount = sorted(classCount.items(), key=op.itemgetter(1), reverse=True)

return sortedClassCount[0][0] def createTree(dataSet,labels):

classList = [example[-1] for example in dataSet]

if(classList.count(classList[0]) == len(classList)):

return classList[0]

if len(dataSet[0]) == 1:

return majorityCnt(classList)

bestFeat = chooseBestFeatureToSplit(dataSet)

bestFeatLabel = labels[bestFeat]

myTree = {bestFeatLabel:{}}

del(labels[bestFeat])

featValues = [example[bestFeat] for example in dataSet]

uniqueVals = set(featValues)

for value in uniqueVals:

subLabels = labels[:]

myTree[bestFeatLabel][value] = createTree(splitDataSet(dataSet, bestFeat, value),subLabels)

return myTree def classify(inputTree,featLabels,testVec):

for i in inputTree.keys():

firstStr = i

break

secondDict = inputTree[firstStr]

featIndex = featLabels.index(firstStr)

key = testVec[featIndex]

valueOfFeat = secondDict[key]

if isinstance(valueOfFeat, dict):

classLabel = classify(valueOfFeat, featLabels, testVec)

else:

classLabel = valueOfFeat

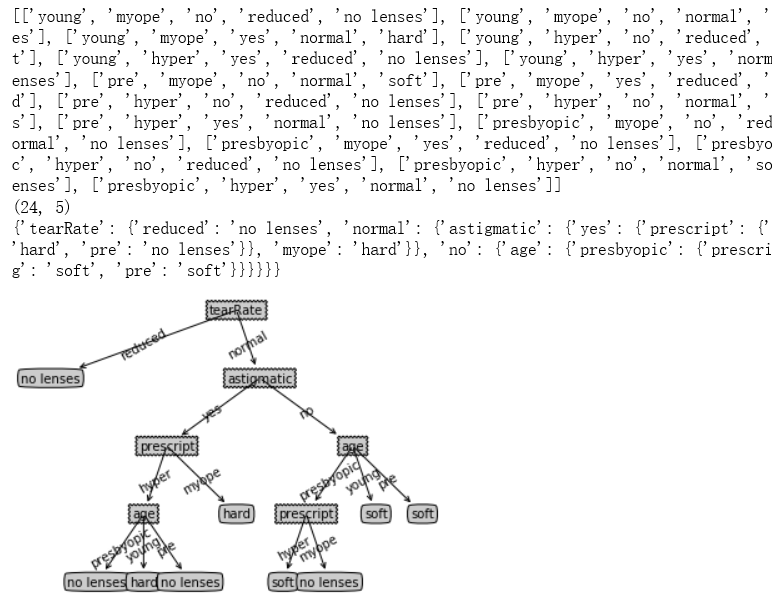

return classLabel data = open("D:\\LearningResource\\machinelearninginaction\\Ch03\\lenses.txt")

dataSet = [inst.strip().split("\t") for inst in data.readlines()]

print(dataSet)

print(np.shape(dataSet))

labels = ["age","prescript","astigmatic","tearRate"]

tree = createTree(dataSet,labels)

print(tree) import matplotlib.pyplot as plt decisionNode = dict(boxstyle="sawtooth", fc="0.8")

createPlot(tree)
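The split choice in `chooseBestFeatureToSplit` is easier to follow on a dataset small enough to check by hand. The sketch below is a self-contained re-implementation of the entropy and information-gain steps, not the post's own code. The four rows in `toy` are made-up illustrative values and are not taken from `lenses.txt`; the names `shannon_entropy` and `information_gain` are hypothetical.

```python
from collections import Counter
from math import log2


def shannon_entropy(rows):
    """Entropy in bits of the class labels stored in the last column."""
    counts = Counter(row[-1] for row in rows)
    total = len(rows)
    return -sum((n / total) * log2(n / total) for n in counts.values())


def information_gain(rows, axis):
    """Entropy reduction obtained by splitting rows on column `axis`."""
    total = len(rows)
    remainder = 0.0
    for value in {row[axis] for row in rows}:
        subset = [row for row in rows if row[axis] == value]
        remainder += len(subset) / total * shannon_entropy(subset)
    return shannon_entropy(rows) - remainder


# Hypothetical toy data: [age, tear_rate, class]. Illustrative values only.
toy = [
    ["young", "reduced", "no lenses"],
    ["young", "normal", "soft"],
    ["old", "reduced", "no lenses"],
    ["old", "normal", "soft"],
]

gains = [information_gain(toy, axis) for axis in range(2)]
best_axis = max(range(2), key=lambda axis: gains[axis])
print(gains, best_axis)
```

Here the two classes are evenly balanced, so the base entropy is 1 bit. Splitting on the first column leaves both subsets mixed (gain 0), while splitting on the second column separates the classes completely (gain 1 bit), so the second column is chosen.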
