21-hadoop-weibo: pushing ads

1, tf-idf

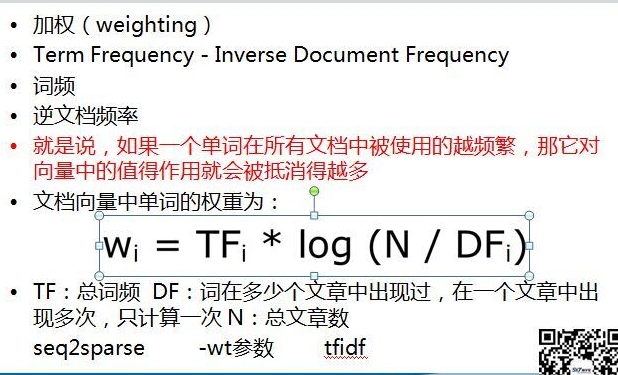

Compute how important each word is within each user's posts.

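This takes three MapReduce jobs: the first computes TF and N (the total number of posts), the second computes DF, and the third plugs these values into the formula to compute the final weights.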
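
Written out, the usual TF-IDF weight is as follows. This assumes the image above shows the standard form: $\mathrm{tf}_{i,j}$ is how often word $i$ occurs in post $j$ (first job), $N$ is the total number of posts (first job), $\mathrm{df}_i$ is the number of posts containing word $i$ (second job), and $\ln$ is the natural logarithm that Java's `Math.log` computes.

$$
w_{i,j} = \mathrm{tf}_{i,j} \times \ln\frac{N}{\mathrm{df}_i}
$$

For example, a word that occurs 3 times in one post and appears in 2 of 100 posts gets $w = 3 \ln 50 \approx 11.74$.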

1, The first job

```java
package com.wenbronk.weibo;

import java.io.IOException;
import java.io.StringReader;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.wltea.analyzer.core.IKSegmenter;
import org.wltea.analyzer.core.Lexeme;

/**
 * First map: emits the data needed for TF and N.
 *
 * @author root
 */
public class FirstMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    /**
     * TF: how often a word occurs within one post; N: how many posts there are in total.
     * Input is passed in line by line, one "id<TAB>content" line per post.
     */
    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        String[] values = value.toString().trim().split("\t");
        if (values.length >= 2) {
            String id = values[0].trim();
            String content = values[1].trim();
            // Segment the text into words (true = IK smart segmentation mode)
            StringReader stringReader = new StringReader(content);
            IKSegmenter ikSegmenter = new IKSegmenter(stringReader, true);
            Lexeme word = null;
            while ((word = ikSegmenter.next()) != null) {
                String w = word.getLexemeText();
                // One occurrence of word w in post id; the reducer sums these into TF
                context.write(new Text(w + "_" + id), new IntWritable(1));
            }
            // One post seen; the reducer sums these into N
            context.write(new Text("count"), new IntWritable(1));
        } else {
            // Malformed line: log it and skip
            System.out.println(value.toString() + "---");
        }
    }
}
```
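
To see the key layout FirstMapper produces without a cluster, here is a minimal Hadoop-free sketch. The class name `FirstMapSketch` is hypothetical, and words are split on whitespace as a stand-in for IKSegmenter, so it only illustrates the `word_id → 1` and `count → 1` pairs, not real Chinese segmentation.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper mirroring FirstMapper's output for one input line.
class FirstMapSketch {

    // Returns the (key, value) pairs FirstMapper would write for one "id<TAB>content" line.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        String[] values = line.trim().split("\t");
        if (values.length < 2) {
            return out; // malformed line: nothing emitted
        }
        String id = values[0].trim();
        String content = values[1].trim();
        // Assumption: whitespace splitting stands in for IKSegmenter
        for (String w : content.split("\\s+")) {
            if (!w.isEmpty()) {
                out.add(new SimpleEntry<>(w + "_" + id, 1)); // one occurrence of w in post id
            }
        }
        out.add(new SimpleEntry<>("count", 1)); // one post, summed into N later
        return out;
    }
}
```

For the line `1001<TAB>milk bread milk` it returns `milk_1001 → 1`, `bread_1001 → 1`, `milk_1001 → 1` and `count → 1`; the reducer later sums the two `milk_1001` entries into TF = 2.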

reduce

```java
package com.wenbronk.weibo;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

/**
 * Counts TF and N: sums the 1s per key, giving TF for "word_id" keys and N for the "count" key.
 *
 * @author root
 */
public class FirstReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable intWritable : values) {
            sum += intWritable.get();
        }
        if (key.equals(new Text("count"))) {
            // Debug output: the "count" key reached this reducer
            System.err.println(key.toString() + "---");
        }
        context.write(key, new IntWritable(sum));
    }
}
```
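
FirstJob also registers FirstReducer as the combiner. A small sketch of why that is safe, assuming only plain integer sums are involved: addition is associative and commutative, so summing each mapper's values locally and then summing the partial results gives the same total as one pass over all the 1s. `SumCombinerSketch` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper showing that a summing reducer can double as a combiner.
class SumCombinerSketch {

    // The summation FirstReducer performs over the values of one key.
    static int reduce(Iterable<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Runs reduce once per mapper (the combiner step), then once more over the partial sums.
    static int combineThenReduce(List<List<Integer>> perMapperValues) {
        List<Integer> partialSums = new ArrayList<>();
        for (List<Integer> mapperValues : perMapperValues) {
            partialSums.add(reduce(mapperValues));
        }
        return reduce(partialSums);
    }
}
```

A reducer that, say, averaged its values could not be reused this way, because an average of partial averages is generally not the overall average.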

partition

```java
package com.wenbronk.weibo;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.lib.partition.HashPartitioner;

/**
 * Decides the partition. Four partitions are planned: one for N, three for TF.
 *
 * @author root
 */
public class FirstPartition extends HashPartitioner<Text, IntWritable> {

    @Override
    public int getPartition(Text key, IntWritable value, int numReduceTasks) {
        if (key.equals(new Text("count"))) {
            // N always goes to the last reducer, so it ends up in part-r-00003
            return 3;
        } else {
            // TF keys are hashed over the remaining reducers 0..2
            return super.getPartition(key, value, numReduceTasks - 1);
        }
    }
}
```
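
A Hadoop-free sketch of this routing rule. The hash branch uses the formula of Hadoop's `HashPartitioner`, `(hashCode & Integer.MAX_VALUE) % numPartitions`; `String.hashCode` stands in for `Text.hashCode` (an assumption), so individual words may land in different partitions than in a real run, but `count` always goes to the last reducer. `FirstPartitionSketch` is hypothetical, and it writes the fixed partition 3 as `numReduceTasks - 1`, which is the same value with the four planned partitions.

```java
// Hypothetical helper mirroring FirstPartition's routing on plain strings.
class FirstPartitionSketch {

    static int getPartition(String key, int numReduceTasks) {
        if (key.equals("count")) {
            // N goes to the last reducer (partition 3 when there are 4 reducers)
            return numReduceTasks - 1;
        }
        // HashPartitioner's formula, applied over the first numReduceTasks - 1 reducers;
        // masking the sign bit keeps the result non-negative
        return (key.hashCode() & Integer.MAX_VALUE) % (numReduceTasks - 1);
    }
}
```

Reserving a whole reducer for one key keeps N in a file of its own, which is what lets the later jobs skip or load `part-r-00003` by name.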

mainJob

```java
package com.wenbronk.weibo;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FirstJob {

    public static void main(String[] args) {
        Configuration config = new Configuration();
        // fs.defaultFS is not set, so the E:\ paths below resolve on the local file system.
        // To run on the cluster, uncomment this line and switch the paths to HDFS paths.
        // config.set("fs.defaultFS", "hdfs://192.168.208.106:8020");
        config.set("yarn.resourcemanager.hostname", "192.168.208.106");
        // config.set("mapreduce.job.jar", "E:\\sxt\\target\\weibo1.jar");
        try {
            Job job = Job.getInstance(config);
            job.setJarByClass(FirstJob.class);
            job.setJobName("first");
            job.setPartitionerClass(FirstPartition.class);
            job.setMapperClass(FirstMapper.class);
            // 4 reducers: part-r-00000..00002 hold TF, part-r-00003 holds N
            job.setNumReduceTasks(4);
            job.setCombinerClass(FirstReducer.class);
            job.setReducerClass(FirstReducer.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            FileInputFormat.addInputPath(job, new Path("E:\\sxt\\1-MapReduce\\data\\weibo2.txt"));
            FileSystem fileSystem = FileSystem.get(config);
            Path outPath = new Path("E:\\sxt\\1-MapReduce\\data\\weibo1");
            // Delete the previous output; the job refuses to start if it already exists
            if (fileSystem.exists(outPath)) {
                fileSystem.delete(outPath, true);
            }
            FileOutputFormat.setOutputPath(job, outPath);
            boolean waitForCompletion = job.waitForCompletion(true);
            if (waitForCompletion) {
                System.out.println("first success");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

2, The second job

```java
package com.wenbronk.weibo;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;

/**
 * Computes DF_i: the number of posts in which word i occurs.
 * Input is the first job's output, one "word_id<TAB>tf" line per key.
 */
public class SecondMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        // Get the input split (file) the current map task is reading
        FileSplit inputSplit = (FileSplit) context.getInputSplit();
        // part-r-00003 holds only the count (N), which is not part of DF
        if (!inputSplit.getPath().getName().contains("part-r-00003")) {
            String[] values = value.toString().trim().split("\t");
            if (values.length >= 2) {
                String[] split = values[0].trim().split("_");
                if (split.length >= 2) {
                    String word = split[0];
                    // Each word_id key occurs once, so this counts one post containing the word
                    context.write(new Text(word), new IntWritable(1));
                }
            }
        } else {
            System.out.println(value.toString() + "----");
        }
    }
}
```

reduce

```java
package com.wenbronk.weibo;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

/**
 * Sums the per-post 1s into DF for each word.
 *
 * @author root
 */
public class SecondReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable intWritable : values) {
            sum += intWritable.get();
        }
        context.write(new Text(key), new IntWritable(sum));
    }
}
```
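
The second job as a whole (map, then sum per word) can be sketched in plain Java over the first job's output lines. `DfSketch` is a hypothetical name; unlike SecondMapper, which skips `part-r-00003` by file name, the sketch drops the `count` line because its key has no `_id` suffix.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper computing document frequency from "word_id<TAB>tf" lines.
class DfSketch {

    static Map<String, Integer> documentFrequency(Iterable<String> lines) {
        Map<String, Integer> df = new TreeMap<>();
        for (String line : lines) {
            String[] values = line.trim().split("\t");
            if (values.length < 2) {
                continue; // malformed line
            }
            String[] parts = values[0].trim().split("_");
            if (parts.length < 2) {
                continue; // e.g. the "count<TAB>N" line has no "_id" suffix
            }
            // Each word_id key appears once in job-1 output, so add 1 per post, not tf
            df.merge(parts[0], 1, Integer::sum);
        }
        return df;
    }
}
```

Given `milk_1001\t2`, `bread_1001\t1`, `milk_1002\t1` and `count\t2`, it yields `bread → 1` and `milk → 2`: DF counts posts, so the TF of 2 in post 1001 does not matter.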

mainjob

```java
package com.wenbronk.weibo;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class SecondJob {

    public static void main(String[] args) {
        Configuration config = new Configuration();
        // fs.defaultFS is not set, so the E:\ paths below resolve on the local file system.
        // To run on the cluster, uncomment this line and switch the paths to HDFS paths.
        // config.set("fs.defaultFS", "hdfs://192.168.208.106:8020");
        config.set("yarn.resourcemanager.hostname", "192.168.208.106");
        try {
            Job job = Job.getInstance(config);
            job.setJarByClass(SecondJob.class);
            job.setJobName("second");
            job.setMapperClass(SecondMapper.class);
            job.setCombinerClass(SecondReducer.class);
            job.setReducerClass(SecondReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            // Input: the output of the first job
            FileInputFormat.addInputPath(job, new Path("E:\\sxt\\1-MapReduce\\data\\weibo1"));
            FileSystem fileSystem = FileSystem.get(config);
            // Default of one reducer, so all DF values land in part-r-00000, which the third job loads
            Path outPath = new Path("E:\\sxt\\1-MapReduce\\data\\weibo2");
            if (fileSystem.exists(outPath)) {
                fileSystem.delete(outPath, true);
            }
            FileOutputFormat.setOutputPath(job, outPath);
            boolean f = job.waitForCompletion(true);
            if (f) {
                System.out.println("job2 success");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

3, The third job

```java
package com.wenbronk.weibo;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URI;
import java.text.NumberFormat;
import java.util.HashMap;
import java.util.Map;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;

public class ThirdMapper extends Mapper<LongWritable, Text, Text, Text> {

    // Holds the total number of posts (N); small data is cached in memory and preloaded
    public static Map<String, Integer> cmap = null;
    // Holds DF for every word
    public static Map<String, Integer> df = null;

    // Runs once when the task is initialized; preloads the cached files into the maps
    @Override
    protected void setup(Context context) throws IOException, InterruptedException {
        System.out.println("*****");
        if (cmap == null || cmap.size() == 0 || df == null || df.size() == 0) {
            URI[] cacheFiles = context.getCacheFiles();
            if (cacheFiles != null) {
                for (URI uri : cacheFiles) {
                    if (uri.getPath().endsWith("part-r-00003")) {
                        Path path = new Path(uri.getPath());
                        // Open the file
                        Configuration configuration = context.getConfiguration();
                        FileSystem fs = FileSystem.get(configuration);
                        FSDataInputStream open = fs.open(path);
                        BufferedReader reader = new BufferedReader(new InputStreamReader(open));
                        // BufferedReader reader = new BufferedReader(new FileReader(path.getName()));
                        // The file holds a single line: "count<TAB>N"
                        String line = reader.readLine();
                        if (line != null && line.startsWith("count")) {
                            String[] split = line.split("\t");
                            cmap = new HashMap<>();
                            cmap.put(split[0], Integer.parseInt(split[1].trim()));
                        }
                        reader.close();
                    } else if (uri.getPath().endsWith("part-r-00000")) {
                        df = new HashMap<>();
                        Path path = new Path(uri.getPath());
                        // Open the file
                        Configuration configuration = context.getConfiguration();
                        FileSystem fs = FileSystem.get(configuration);
                        FSDataInputStream open = fs.open(path);
                        BufferedReader reader = new BufferedReader(new InputStreamReader(open));
                        // BufferedReader reader = new BufferedReader(new FileReader(path.getName()));
                        String line = null;
                        // One "word<TAB>df" line per word
                        while ((line = reader.readLine()) != null) {
                            String[] ls = line.split("\t");
                            df.put(ls[0], Integer.parseInt(ls[1].trim()));
                        }
                        reader.close();
                    }
                }
            }
        }
    }

    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        // Get the input split
        FileSplit inputSplit = (FileSplit) context.getInputSplit();
        // Skip part-r-00003, which holds only N
        if (!inputSplit.getPath().getName().contains("part-r-00003")) {
            String[] values = value.toString().trim().split("\t");
            if (values.length >= 2) {
                int tf = Integer.parseInt(values[1].trim());
                String[] ss = values[0].split("_");
                if (ss.length >= 2) {
                    String word = ss[0];
                    String id = ss[1];
                    // Formula: tf * ln(N / df); the cast keeps N / df from being integer division
                    double s = tf * Math.log((double) cmap.get("count") / df.get(word));
                    NumberFormat format = NumberFormat.getInstance();
                    // Keep at most 5 decimal places
                    format.setMaximumFractionDigits(5);
                    context.write(new Text(id), new Text(word + ": " + format.format(s)));
                } else {
                    System.out.println(value.toString() + "------");
                }
            }
        }
    }
}
```
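
The per-line arithmetic of ThirdMapper, pulled out into a Hadoop-free sketch that assumes the standard weight tf * ln(N / df). `TfIdfSketch` and its methods are hypothetical; `Locale.ROOT` is an assumption added so the formatted string does not depend on the machine's locale, whereas the mapper calls `NumberFormat.getInstance()` with the default locale.

```java
import java.text.NumberFormat;
import java.util.Locale;
import java.util.Map;

// Hypothetical helper reproducing ThirdMapper's weight computation and formatting.
class TfIdfSketch {

    // tf * ln(N / df); the cast keeps N / df from being truncated by integer division
    static double weight(int tf, int n, int df) {
        return tf * Math.log((double) n / df);
    }

    // At most 5 decimal places, as in ThirdMapper (Locale.ROOT is an assumption)
    static String format(double w) {
        NumberFormat format = NumberFormat.getInstance(Locale.ROOT);
        format.setMaximumFractionDigits(5);
        return format.format(w);
    }

    // Turns one first-job line "word_id<TAB>tf" into {id, "word: weight"},
    // or returns null for lines that are not word_id keys (e.g. "count<TAB>N").
    static String[] mapLine(String line, int n, Map<String, Integer> df) {
        String[] values = line.trim().split("\t");
        if (values.length < 2) {
            return null;
        }
        String[] ss = values[0].split("_");
        if (ss.length < 2) {
            return null;
        }
        int tf = Integer.parseInt(values[1].trim());
        String word = ss[0];
        String id = ss[1];
        return new String[] {id, word + ": " + format(weight(tf, n, df.get(word)))};
    }
}
```

With N = 100 and df(milk) = 10, the line `milk_1001\t2` maps to id `1001` with value `milk: 4.60517` (2 × ln 10 ≈ 4.605170). A word that occurs in every post gets weight 0, since ln(N / N) = 0.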

reduce

```java
package com.wenbronk.weibo;

import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class ThirdReducer extends Reducer<Text, Text, Text, Text> {

    @Override
    protected void reduce(Text key, Iterable<Text> values, Context context)
            throws IOException, InterruptedException {
        // Join all "word: weight" entries of one post into a single tab-separated line
        StringBuilder sb = new StringBuilder();
        for (Text text : values) {
            sb.append(text.toString()).append("\t");
        }
        context.write(key, new Text(sb.toString()));
    }
}
```
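
What ThirdReducer does, as a sketch over in-memory pairs: every `(id, "word: weight")` pair for the same post id is concatenated, tab-separated, into one line. `GroupByIdSketch` is hypothetical, and the TreeMap only makes the sketch deterministic; in a real job the order of values within a key is not guaranteed.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper mirroring the shuffle-by-id plus ThirdReducer's concatenation.
class GroupByIdSketch {

    // Groups (id, "word: weight") pairs by id and joins each group's values,
    // appending a tab after every value just as ThirdReducer does.
    static Map<String, String> reduce(List<String[]> pairs) {
        Map<String, StringBuilder> grouped = new TreeMap<>();
        for (String[] pair : pairs) {
            grouped.computeIfAbsent(pair[0], id -> new StringBuilder())
                   .append(pair[1])
                   .append('\t');
        }
        Map<String, String> result = new TreeMap<>();
        for (Map.Entry<String, StringBuilder> e : grouped.entrySet()) {
            result.put(e.getKey(), e.getValue().toString());
        }
        return result;
    }
}
```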

mainJob

```java
package com.wenbronk.weibo;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.KeyValueTextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class ThirdJob {

    public static void main(String[] args) {
        Configuration config = new Configuration();
        // fs.defaultFS is not set, so the E:\ paths below resolve on the local file system.
        // To run on the cluster, uncomment this line and switch the paths to HDFS paths.
        // config.set("fs.defaultFS", "hdfs://192.168.208.106:8020");
        config.set("yarn.resourcemanager.hostname", "192.168.208.106");
        try {
            Job job = Job.getInstance(config);
            job.setJarByClass(ThirdJob.class);
            job.setJobName("third");
            // job.setInputFormatClass(KeyValueTextInputFormat.class);
            // Load the total number of posts (N) into memory
            job.addCacheFile(new Path("E:\\sxt\\1-MapReduce\\data\\weibo1\\part-r-00003").toUri());
            // Load DF into memory
            job.addCacheFile(new Path("E:\\sxt\\1-MapReduce\\data\\weibo2\\part-r-00000").toUri());
            job.setMapperClass(ThirdMapper.class);
            job.setReducerClass(ThirdReducer.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(Text.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);
            FileSystem fs = FileSystem.get(config);
            // Input: the TF output of the first job
            FileInputFormat.addInputPath(job, new Path("E:\\sxt\\1-MapReduce\\data\\weibo1"));
            Path path = new Path("E:\\sxt\\1-MapReduce\\data\\weibo3");
            if (fs.exists(path)) {
                fs.delete(path, true);
            }
            FileOutputFormat.setOutputPath(job, path);
            boolean waitForCompletion = job.waitForCompletion(true);
            if (waitForCompletion) {
                System.out.println("job executed successfully");
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

This series is based on the 尚学堂 (Shangxuetang) video course.
