Calling the Ansible API and wrapping it: a detailed walkthrough

Calling the API in Ansible 2.7

Program

import json
from collections import namedtuple

from ansible.parsing.dataloader import DataLoader
from ansible.vars.manager import VariableManager
from ansible.inventory.manager import InventoryManager
from ansible.playbook.play import Play
from ansible.executor.task_queue_manager import TaskQueueManager
from ansible.plugins.callback import CallbackBase


class ResultsCollector(CallbackBase):
    """Collect execution results per host"""

    def __init__(self, *args, **kwargs):
        super(ResultsCollector, self).__init__(*args, **kwargs)
        self.host_ok = {}
        self.host_unreachable = {}
        self.host_failed = {}

    def v2_runner_on_unreachable(self, result, *args, **kwargs):
        """Host unreachable"""
        self.host_unreachable[result._host.get_name()] = result

    def v2_runner_on_ok(self, result, *args, **kwargs):
        """Task succeeded"""
        self.host_ok[result._host.get_name()] = result

    def v2_runner_on_failed(self, result, *args, **kwargs):
        """Task failed"""
        self.host_failed[result._host.get_name()] = result


def run_ansible(module_name, module_args, host_list, option_dict):
    # Initialize the objects we need (Ansible 2.7 still takes an options object)
    Options = namedtuple('Options',
                         ['connection', 'module_path', 'forks', 'become',
                          'become_method', 'private_key_file', 'become_user',
                          'remote_user', 'check', 'diff'])
    # Finds and reads YAML, JSON and INI files
    loader = DataLoader()
    options = Options(connection='ssh', module_path=None, forks=5, become=option_dict['become'],
                      become_method='sudo', private_key_file="/root/.ssh/id_rsa",
                      become_user='root', remote_user=option_dict['remote_user'],
                      check=False, diff=False)
    passwords = dict(vault_pass='secret')
    # Instantiate ResultsCollector to handle the results
    callback = ResultsCollector()
    # Create the inventory and pass it to the VariableManager
    inventory = InventoryManager(loader=loader, sources=['/etc/ansible/hosts'])
    variable_manager = VariableManager(loader=loader, inventory=inventory)
    # Create the play
    play_source = dict(
        name="Ansible Play",
        hosts=",".join(host_list),
        gather_facts='no',
        tasks=[
            dict(action=dict(module=module_name, args=module_args), register='shell_out'),
        ]
    )
    play = Play().load(play_source, variable_manager=variable_manager, loader=loader)
    # Run it, and always clean up the worker processes afterwards
    tqm = None
    try:
        tqm = TaskQueueManager(
            inventory=inventory,
            variable_manager=variable_manager,
            loader=loader,
            options=options,
            passwords=passwords,
            stdout_callback=callback,
        )
        tqm.run(play)
    finally:
        if tqm is not None:
            tqm.cleanup()
    result_raw = {'success': {}, 'failed': {}, 'unreachable': {}}
    for host, result in callback.host_ok.items():
        result_raw['success'][host] = result._result.get('stdout_lines')
    for host, result in callback.host_failed.items():
        # Module failures may carry only 'msg' and no 'stderr_lines'
        result_raw['failed'][host] = result._result.get('stderr_lines') or result._result.get('msg')
    for host, result in callback.host_unreachable.items():
        result_raw['unreachable'][host] = result._result.get('msg')
    return json.dumps(result_raw, indent=4)


if __name__ == "__main__":
    option_dict = {"become": True, "remote_user": "opadmin"}
    module_name = 'shell'
    module_args = "hostname"
    host_list = ['10.0.0.131', '10.0.0.132']
    ret = run_ansible(module_name, module_args, host_list, option_dict)
    print(ret)

Output:

[root@mcw1 ~]$ python3 runapi.py

{

"success": {},

"failed": {},

"unreachable": {

"10.0.0.132": "Failed to connect to the host via ssh: opadmin@10.0.0.132: Permission denied (publickey,password).\r\n",

"10.0.0.131": "Failed to connect to the host via ssh: opadmin@10.0.0.131: Permission denied (publickey,password,keyboard-interactive).\r\n"

}

}

[root@mcw1 ~]$

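The program above connects as opadmin (the remote_user in option_dict), but that user does not exist on my hosts. Changing it to root, the user I actually connect as, fixes the problem.

After that change the output is: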
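A minimal sketch of passing the remote user in explicitly instead of hard-coding it. build_option_dict is a hypothetical helper that produces the option_dict consumed by run_ansible; falling back to the local login name when no user is given is an assumption that mirrors what plain ssh does by default.

```python
import getpass


def build_option_dict(become=False, remote_user=None):
    """Hypothetical helper: build the option_dict passed to run_ansible().

    When remote_user is omitted, fall back to the local login name,
    which is the user plain ssh would connect as by default.
    """
    return {
        "become": bool(become),
        "remote_user": remote_user or getpass.getuser(),
    }


if __name__ == "__main__":
    # Connect as root, as in the successful run
    print(build_option_dict(become=True, remote_user="root"))
```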

[root@mcw1 ~]$ python3 runapi.py

{

"success": {

"10.0.0.132": [

"mcw2"

],

"10.0.0.131": [

"mcw1"

]

},

"failed": {},

"unreachable": {}

}

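Next I added 10.0.0.133 to host_list. That machine is not running, so it certainly cannot be reached. However, 10.0.0.133 is not listed in /etc/ansible/hosts, so the inventory cannot match it: Ansible prints a warning and skips the host, and it never shows up under "unreachable":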

[root@mcw1 ~]$ python3 runapi.py

[WARNING]: Could not match supplied host pattern, ignoring: 10.0.0.133

{

"success": {

"10.0.0.132": [

"mcw2"

],

"10.0.0.131": [

"mcw1"

]

},

"failed": {},

"unreachable": {}

}
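Because the inventory here is read from /etc/ansible/hosts, a host missing from that file is dropped with only a warning. One way to surface such hosts is a pre-flight check before building the play. A minimal sketch in plain Python: find_unknown_hosts is a hypothetical name, and feeding it from InventoryManager.get_hosts() is an assumption about how you would wire it in. Alternatively, passing the hosts themselves as an inline inventory (as the 2.9 example later does) makes Ansible attempt every host, so a host that is down would be reported as unreachable.

```python
def find_unknown_hosts(requested, known):
    """Return the requested host names that the inventory does not know.

    Only literal host names are handled, not group names or patterns.
    `known` could come from [h.name for h in inventory.get_hosts()]
    (assumed wiring, not shown here).
    """
    known_set = set(known)
    return [host for host in requested if host not in known_set]


if __name__ == "__main__":
    inventory_hosts = ["10.0.0.131", "10.0.0.132"]
    wanted = ["10.0.0.131", "10.0.0.132", "10.0.0.133"]
    print(find_unknown_hosts(wanted, inventory_hosts))  # ['10.0.0.133']
```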

Next, print the collected result objects to see what they contain (chenggongde and shibaide are pinyin for "succeeded" and "failed"):

for host, result in callback.host_ok.items():
    result_raw['success'][host] = result._result['stdout_lines']
    print('chenggongde', result)
for host, result in callback.host_failed.items():
    result_raw['failed'][host] = result._result['stderr_lines']
    print('shibaide', result)

[root@mcw1 ~]$ python3 runapi.py

[WARNING]: Could not match supplied host pattern, ignoring: 10.0.0.133

chenggongde <ansible.executor.task_result.TaskResult object at 0x7f39929c5828>

chenggongde <ansible.executor.task_result.TaskResult object at 0x7f399215ad30>

{

"success": {

"10.0.0.132": [

"mcw2"

],

"10.0.0.131": [

"mcw1"

]

},

"failed": {},

"unreachable": {}

}

[root@mcw1 ~]$ vim runapi.py

Printing the object only shows the TaskResult repr. The actual data lives in its _result attribute, so print that instead:

for host, result in callback.host_ok.items():
    result_raw['success'][host] = result._result['stdout_lines']
    print('chenggongde', result._result)
for host, result in callback.host_failed.items():
    result_raw['failed'][host] = result._result['stderr_lines']
    print('shibaide', result._result)

[root@mcw1 ~]$ python3 runapi.py

[WARNING]: Could not match supplied host pattern, ignoring: 10.0.0.133

chenggongde {'changed': True, 'end': '2021-12-14 11:43:50.453528', 'stdout': 'mcw2', 'cmd': 'hostname', 'rc': 0, 'start': '2021-12-14 11:43:50.438420', 'stderr': '', 'delta': '0:00:00.015108', 'invocation': {'module_args': {'creates': None, 'executable': None, '_uses_shell': True, '_raw_params': 'hostname', 'removes': None, 'argv': None, 'warn': True, 'chdir': None, 'stdin': None}}, '_ansible_parsed': True, 'stdout_lines': ['mcw2'], 'stderr_lines': [], '_ansible_no_log': False}

chenggongde {'changed': True, 'end': '2021-12-14 03:43:49.826931', 'stdout': 'mcw1', 'cmd': 'hostname', 'rc': 0, 'start': '2021-12-14 03:43:49.820276', 'stderr': '', 'delta': '0:00:00.006655', 'invocation': {'module_args': {'creates': None, 'executable': None, '_uses_shell': True, '_raw_params': 'hostname', 'removes': None, 'argv': None, 'warn': True, 'chdir': None, 'stdin': None}}, '_ansible_parsed': True, 'stdout_lines': ['mcw1'], 'stderr_lines': [], '_ansible_no_log': False}

{

"success": {

"10.0.0.132": [

"mcw2"

],

"10.0.0.131": [

"mcw1"

]

},

"failed": {},

"unreachable": {}

}

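Formatted, a successful result looks like this (strictly speaking it is a Python dict, hence True/None rather than JSON true/null):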

{

'changed': True,

'end': '2021-12-14 11:43:50.453528',

'stdout': 'mcw2',

'cmd': 'hostname',

'rc': 0,

'start': '2021-12-14 11:43:50.438420',

'stderr': '',

'delta': '0:00:00.015108',

'invocation': {

'module_args': {

'creates': None,

'executable': None,

'_uses_shell': True,

'_raw_params': 'hostname',

'removes': None,

'argv': None,

'warn': True,

'chdir': None,

'stdin': None

}

},

'_ansible_parsed': True,

'stdout_lines': ['mcw2'],

'stderr_lines': [],

'_ansible_no_log': False

}
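Since _result is an ordinary dict, the fields a wrapper usually cares about can be pulled out with a pure function. A sketch, assuming the shell/command result keys shown above (rc, stdout_lines, stderr_lines, delta); summarize_command_result and parse_delta are illustrative helpers, not part of Ansible.

```python
def parse_delta(delta):
    """Convert a 'delta' string such as '0:00:00.015108' to seconds.

    Assumes the H:MM:SS.ffffff form shown above (runs shorter than a day).
    """
    hours, minutes, seconds = delta.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def summarize_command_result(res):
    """Keep only the fields a caller usually needs from a shell/command result."""
    return {
        "rc": res.get("rc"),
        "stdout_lines": res.get("stdout_lines", []),
        "stderr_lines": res.get("stderr_lines", []),
        "seconds": parse_delta(res["delta"]) if "delta" in res else None,
    }


if __name__ == "__main__":
    sample = {"rc": 0, "stdout_lines": ["mcw2"], "stderr_lines": [],
              "delta": "0:00:00.015108"}
    print(summarize_command_result(sample))
```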

For comparison, here is the information returned by Ansible's setup module:

[root@mcw1 ~]$ ansible 10.0.0.132 -m setup

10.0.0.132 | SUCCESS => {

"ansible_facts": {

"ansible_all_ipv4_addresses": [

"172.16.1.132",

"10.0.0.132"

],

"ansible_all_ipv6_addresses": [

"fe80::20c:29ff:fe01:a8d1",

"fe80::20c:29ff:fe01:a8c7"

],

"ansible_apparmor": {

"status": "disabled"

},

"ansible_architecture": "x86_64",

"ansible_bios_date": "07/02/2015",

"ansible_bios_version": "6.00",

"ansible_cmdline": {

"BOOT_IMAGE": "/vmlinuz-3.10.0-693.el7.x86_64",

"LANG": "en_US.UTF-8",

"crashkernel": "auto",

"quiet": true,

"rhgb": true,

"ro": true,

"root": "UUID=a6a0174b-0f9f-4b72-a404-439c25a15ec9"

},

"ansible_date_time": {

"date": "2021-12-14",

"day": "14",

"epoch": "1639454188",

"hour": "11",

"iso8601": "2021-12-14T03:56:28Z",

"iso8601_basic": "20211214T115628396754",

"iso8601_basic_short": "20211214T115628",

"iso8601_micro": "2021-12-14T03:56:28.396754Z",

"minute": "56",

"month": "12",

"second": "28",

"time": "11:56:28",

"tz": "CST",

"tz_offset": "+0800",

"weekday": "Tuesday",

"weekday_number": "2",

"weeknumber": "50",

"year": "2021"

},

"ansible_default_ipv4": {

"address": "10.0.0.132",

"alias": "ens33",

"broadcast": "10.0.0.255",

"gateway": "10.0.0.2",

"interface": "ens33",

"macaddress": "00:0c:29:01:a8:c7",

"mtu": 1500,

"netmask": "255.255.255.0",

"network": "10.0.0.0",

"type": "ether"

},

"ansible_default_ipv6": {},

"ansible_device_links": {

"ids": {

"sr0": [

"ata-VMware_Virtual_IDE_CDROM_Drive_10000000000000000001"

]

},

"labels": {

"sr0": [

"CentOS\\x207\\x20x86_64"

]

},

"masters": {},

"uuids": {

"sda1": [

"20f697d6-9ca0-4b9e-9f20-56325684f2ca"

],

"sda2": [

"6042e061-f29b-4ac1-9f32-87980ddf0e1f"

],

"sda3": [

"a6a0174b-0f9f-4b72-a404-439c25a15ec9"

],

"sr0": [

"2017-09-06-10-51-00-00"

]

}

},

"ansible_devices": {

"sda": {

"holders": [],

"host": "SCSI storage controller: LSI Logic / Symbios Logic 53c1030 PCI-X Fusion-MPT Dual Ultra320 SCSI (rev 01)",

"links": {

"ids": [],

"labels": [],

"masters": [],

"uuids": []

},

"model": "VMware Virtual S",

"partitions": {

"sda1": {

"holders": [],

"links": {

"ids": [],

"labels": [],

"masters": [],

"uuids": [

"20f697d6-9ca0-4b9e-9f20-56325684f2ca"

]

},

"sectors": "1015808",

"sectorsize": 512,

"size": "496.00 MB",

"start": "2048",

"uuid": "20f697d6-9ca0-4b9e-9f20-56325684f2ca"

},

"sda2": {

"holders": [],

"links": {

"ids": [],

"labels": [],

"masters": [],

"uuids": [

"6042e061-f29b-4ac1-9f32-87980ddf0e1f"

]

},

"sectors": "1587200",

"sectorsize": 512,

"size": "775.00 MB",

"start": "1017856",

"uuid": "6042e061-f29b-4ac1-9f32-87980ddf0e1f"

},

"sda3": {

"holders": [],

"links": {

"ids": [],

"labels": [],

"masters": [],

"uuids": [

"a6a0174b-0f9f-4b72-a404-439c25a15ec9"

]

},

"sectors": "39337984",

"sectorsize": 512,

"size": "18.76 GB",

"start": "2605056",

"uuid": "a6a0174b-0f9f-4b72-a404-439c25a15ec9"

}

},

"removable": "0",

"rotational": "1",

"sas_address": null,

"sas_device_handle": null,

"scheduler_mode": "deadline",

"sectors": "41943040",

"sectorsize": "512",

"size": "20.00 GB",

"support_discard": "0",

"vendor": "VMware,",

"virtual": 1

},

"sr0": {

"holders": [],

"host": "IDE interface: Intel Corporation 82371AB/EB/MB PIIX4 IDE (rev 01)",

"links": {

"ids": [

"ata-VMware_Virtual_IDE_CDROM_Drive_10000000000000000001"

],

"labels": [

"CentOS\\x207\\x20x86_64"

],

"masters": [],

"uuids": [

"2017-09-06-10-51-00-00"

]

},

"model": "VMware IDE CDR10",

"partitions": {},

"removable": "1",

"rotational": "1",

"sas_address": null,

"sas_device_handle": null,

"scheduler_mode": "cfq",

"sectors": "8830976",

"sectorsize": "2048",

"size": "4.21 GB",

"support_discard": "0",

"vendor": "NECVMWar",

"virtual": 1

}

},

"ansible_distribution": "CentOS",

"ansible_distribution_file_parsed": true,

"ansible_distribution_file_path": "/etc/redhat-release",

"ansible_distribution_file_variety": "RedHat",

"ansible_distribution_major_version": "7",

"ansible_distribution_release": "Core",

"ansible_distribution_version": "7.4",

"ansible_dns": {

"nameservers": [

"2.5.5.5",

"2.6.6.6",

"223.5.5.5",

"223.6.6.6"

]

},

"ansible_domain": "",

"ansible_effective_group_id": 0,

"ansible_effective_user_id": 0,

"ansible_ens33": {

"active": true,

"device": "ens33",

"features": {

"busy_poll": "off [fixed]",

"fcoe_mtu": "off [fixed]",

"generic_receive_offload": "on",

"generic_segmentation_offload": "on",

"highdma": "off [fixed]",

"hw_tc_offload": "off [fixed]",

"l2_fwd_offload": "off [fixed]",

"large_receive_offload": "off [fixed]",

"loopback": "off [fixed]",

"netns_local": "off [fixed]",

"ntuple_filters": "off [fixed]",

"receive_hashing": "off [fixed]",

"rx_all": "off",

"rx_checksumming": "off",

"rx_fcs": "off",

"rx_vlan_filter": "on [fixed]",

"rx_vlan_offload": "on",

"rx_vlan_stag_filter": "off [fixed]",

"rx_vlan_stag_hw_parse": "off [fixed]",

"scatter_gather": "on",

"tcp_segmentation_offload": "on",

"tx_checksum_fcoe_crc": "off [fixed]",

"tx_checksum_ip_generic": "on",

"tx_checksum_ipv4": "off [fixed]",

"tx_checksum_ipv6": "off [fixed]",

"tx_checksum_sctp": "off [fixed]",

"tx_checksumming": "on",

"tx_fcoe_segmentation": "off [fixed]",

"tx_gre_csum_segmentation": "off [fixed]",

"tx_gre_segmentation": "off [fixed]",

"tx_gso_partial": "off [fixed]",

"tx_gso_robust": "off [fixed]",

"tx_ipip_segmentation": "off [fixed]",

"tx_lockless": "off [fixed]",

"tx_mpls_segmentation": "off [fixed]",

"tx_nocache_copy": "off",

"tx_scatter_gather": "on",

"tx_scatter_gather_fraglist": "off [fixed]",

"tx_sctp_segmentation": "off [fixed]",

"tx_sit_segmentation": "off [fixed]",

"tx_tcp6_segmentation": "off [fixed]",

"tx_tcp_ecn_segmentation": "off [fixed]",

"tx_tcp_mangleid_segmentation": "off",

"tx_tcp_segmentation": "on",

"tx_udp_tnl_csum_segmentation": "off [fixed]",

"tx_udp_tnl_segmentation": "off [fixed]",

"tx_vlan_offload": "on [fixed]",

"tx_vlan_stag_hw_insert": "off [fixed]",

"udp_fragmentation_offload": "off [fixed]",

"vlan_challenged": "off [fixed]"

},

"hw_timestamp_filters": [],

"ipv4": {

"address": "10.0.0.132",

"broadcast": "10.0.0.255",

"netmask": "255.255.255.0",

"network": "10.0.0.0"

},

"ipv6": [

{

"address": "fe80::20c:29ff:fe01:a8c7",

"prefix": "64",

"scope": "link"

}

],

"macaddress": "00:0c:29:01:a8:c7",

"module": "e1000",

"mtu": 1500,

"pciid": "0000:02:01.0",

"promisc": false,

"speed": 1000,

"timestamping": [

"tx_software",

"rx_software",

"software"

],

"type": "ether"

},

"ansible_ens37": {

"active": true,

"device": "ens37",

"features": {

"busy_poll": "off [fixed]",

"fcoe_mtu": "off [fixed]",

"generic_receive_offload": "on",

"generic_segmentation_offload": "on",

"highdma": "off [fixed]",

"hw_tc_offload": "off [fixed]",

"l2_fwd_offload": "off [fixed]",

"large_receive_offload": "off [fixed]",

"loopback": "off [fixed]",

"netns_local": "off [fixed]",

"ntuple_filters": "off [fixed]",

"receive_hashing": "off [fixed]",

"rx_all": "off",

"rx_checksumming": "off",

"rx_fcs": "off",

"rx_vlan_filter": "on [fixed]",

"rx_vlan_offload": "on",

"rx_vlan_stag_filter": "off [fixed]",

"rx_vlan_stag_hw_parse": "off [fixed]",

"scatter_gather": "on",

"tcp_segmentation_offload": "on",

"tx_checksum_fcoe_crc": "off [fixed]",

"tx_checksum_ip_generic": "on",

"tx_checksum_ipv4": "off [fixed]",

"tx_checksum_ipv6": "off [fixed]",

"tx_checksum_sctp": "off [fixed]",

"tx_checksumming": "on",

"tx_fcoe_segmentation": "off [fixed]",

"tx_gre_csum_segmentation": "off [fixed]",

"tx_gre_segmentation": "off [fixed]",

"tx_gso_partial": "off [fixed]",

"tx_gso_robust": "off [fixed]",

"tx_ipip_segmentation": "off [fixed]",

"tx_lockless": "off [fixed]",

"tx_mpls_segmentation": "off [fixed]",

"tx_nocache_copy": "off",

"tx_scatter_gather": "on",

"tx_scatter_gather_fraglist": "off [fixed]",

"tx_sctp_segmentation": "off [fixed]",

"tx_sit_segmentation": "off [fixed]",

"tx_tcp6_segmentation": "off [fixed]",

"tx_tcp_ecn_segmentation": "off [fixed]",

"tx_tcp_mangleid_segmentation": "off",

"tx_tcp_segmentation": "on",

"tx_udp_tnl_csum_segmentation": "off [fixed]",

"tx_udp_tnl_segmentation": "off [fixed]",

"tx_vlan_offload": "on [fixed]",

"tx_vlan_stag_hw_insert": "off [fixed]",

"udp_fragmentation_offload": "off [fixed]",

"vlan_challenged": "off [fixed]"

},

"hw_timestamp_filters": [],

"ipv4": {

"address": "172.16.1.132",

"broadcast": "172.16.1.255",

"netmask": "255.255.255.0",

"network": "172.16.1.0"

},

"ipv6": [

{

"address": "fe80::20c:29ff:fe01:a8d1",

"prefix": "64",

"scope": "link"

}

],

"macaddress": "00:0c:29:01:a8:d1",

"module": "e1000",

"mtu": 1500,

"pciid": "0000:02:05.0",

"promisc": false,

"speed": 1000,

"timestamping": [

"tx_software",

"rx_software",

"software"

],

"type": "ether"

},

"ansible_env": {

"HOME": "/root",

"LANG": "en_US.UTF-8",

"LESSOPEN": "||/usr/bin/lesspipe.sh %s",

"LOGNAME": "root",

"LS_COLORS": "rs=0:di=01;34:ln=01;36:mh=00:pi=40;33:so=01;35:do=01;35:bd=40;33;01:cd=40;33;01:or=40;31;01:mi=01;05;37;41:su=37;41:sg=30;43:ca=30;41:tw=30;42:ow=34;42:st=37;44:ex=01;32:*.tar=01;31:*.tgz=01;31:*.arc=01;31:*.arj=01;31:*.taz=01;31:*.lha=01;31:*.lz4=01;31:*.lzh=01;31:*.lzma=01;31:*.tlz=01;31:*.txz=01;31:*.tzo=01;31:*.t7z=01;31:*.zip=01;31:*.z=01;31:*.Z=01;31:*.dz=01;31:*.gz=01;31:*.lrz=01;31:*.lz=01;31:*.lzo=01;31:*.xz=01;31:*.bz2=01;31:*.bz=01;31:*.tbz=01;31:*.tbz2=01;31:*.tz=01;31:*.deb=01;31:*.rpm=01;31:*.jar=01;31:*.war=01;31:*.ear=01;31:*.sar=01;31:*.rar=01;31:*.alz=01;31:*.ace=01;31:*.zoo=01;31:*.cpio=01;31:*.7z=01;31:*.rz=01;31:*.cab=01;31:*.jpg=01;35:*.jpeg=01;35:*.gif=01;35:*.bmp=01;35:*.pbm=01;35:*.pgm=01;35:*.ppm=01;35:*.tga=01;35:*.xbm=01;35:*.xpm=01;35:*.tif=01;35:*.tiff=01;35:*.png=01;35:*.svg=01;35:*.svgz=01;35:*.mng=01;35:*.pcx=01;35:*.mov=01;35:*.mpg=01;35:*.mpeg=01;35:*.m2v=01;35:*.mkv=01;35:*.webm=01;35:*.ogm=01;35:*.mp4=01;35:*.m4v=01;35:*.mp4v=01;35:*.vob=01;35:*.qt=01;35:*.nuv=01;35:*.wmv=01;35:*.asf=01;35:*.rm=01;35:*.rmvb=01;35:*.flc=01;35:*.avi=01;35:*.fli=01;35:*.flv=01;35:*.gl=01;35:*.dl=01;35:*.xcf=01;35:*.xwd=01;35:*.yuv=01;35:*.cgm=01;35:*.emf=01;35:*.axv=01;35:*.anx=01;35:*.ogv=01;35:*.ogx=01;35:*.aac=01;36:*.au=01;36:*.flac=01;36:*.mid=01;36:*.midi=01;36:*.mka=01;36:*.mp3=01;36:*.mpc=01;36:*.ogg=01;36:*.ra=01;36:*.wav=01;36:*.axa=01;36:*.oga=01;36:*.spx=01;36:*.xspf=01;36:",

"MAIL": "/var/mail/root",

"PATH": "/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin",

"PWD": "/root",

"SHELL": "/bin/bash",

"SHLVL": "2",

"SSH_CLIENT": "10.0.0.131 45784 22",

"SSH_CONNECTION": "10.0.0.131 45784 10.0.0.132 22",

"SSH_TTY": "/dev/pts/1",

"TERM": "xterm",

"USER": "root",

"XDG_RUNTIME_DIR": "/run/user/0",

"XDG_SESSION_ID": "33",

"_": "/usr/bin/python"

},

"ansible_fibre_channel_wwn": [],

"ansible_fips": false,

"ansible_form_factor": "Other",

"ansible_fqdn": "mcw2",

"ansible_hostname": "mcw2",

"ansible_hostnqn": "",

"ansible_interfaces": [

"lo",

"ens37",

"ens33"

],

"ansible_is_chroot": false,

"ansible_iscsi_iqn": "",

"ansible_kernel": "3.10.0-693.el7.x86_64",

"ansible_kernel_version": "#1 SMP Tue Aug 22 21:09:27 UTC 2017",

"ansible_lo": {

"active": true,

"device": "lo",

"features": {

"busy_poll": "off [fixed]",

"fcoe_mtu": "off [fixed]",

"generic_receive_offload": "on",

"generic_segmentation_offload": "on",

"highdma": "on [fixed]",

"hw_tc_offload": "off [fixed]",

"l2_fwd_offload": "off [fixed]",

"large_receive_offload": "off [fixed]",

"loopback": "on [fixed]",

"netns_local": "on [fixed]",

"ntuple_filters": "off [fixed]",

"receive_hashing": "off [fixed]",

"rx_all": "off [fixed]",

"rx_checksumming": "on [fixed]",

"rx_fcs": "off [fixed]",

"rx_vlan_filter": "off [fixed]",

"rx_vlan_offload": "off [fixed]",

"rx_vlan_stag_filter": "off [fixed]",

"rx_vlan_stag_hw_parse": "off [fixed]",

"scatter_gather": "on",

"tcp_segmentation_offload": "on",

"tx_checksum_fcoe_crc": "off [fixed]",

"tx_checksum_ip_generic": "on [fixed]",

"tx_checksum_ipv4": "off [fixed]",

"tx_checksum_ipv6": "off [fixed]",

"tx_checksum_sctp": "on [fixed]",

"tx_checksumming": "on",

"tx_fcoe_segmentation": "off [fixed]",

"tx_gre_csum_segmentation": "off [fixed]",

"tx_gre_segmentation": "off [fixed]",

"tx_gso_partial": "off [fixed]",

"tx_gso_robust": "off [fixed]",

"tx_ipip_segmentation": "off [fixed]",

"tx_lockless": "on [fixed]",

"tx_mpls_segmentation": "off [fixed]",

"tx_nocache_copy": "off [fixed]",

"tx_scatter_gather": "on [fixed]",

"tx_scatter_gather_fraglist": "on [fixed]",

"tx_sctp_segmentation": "on",

"tx_sit_segmentation": "off [fixed]",

"tx_tcp6_segmentation": "on",

"tx_tcp_ecn_segmentation": "on",

"tx_tcp_mangleid_segmentation": "on",

"tx_tcp_segmentation": "on",

"tx_udp_tnl_csum_segmentation": "off [fixed]",

"tx_udp_tnl_segmentation": "off [fixed]",

"tx_vlan_offload": "off [fixed]",

"tx_vlan_stag_hw_insert": "off [fixed]",

"udp_fragmentation_offload": "on",

"vlan_challenged": "on [fixed]"

},

"hw_timestamp_filters": [],

"ipv4": {

"address": "127.0.0.1",

"broadcast": "",

"netmask": "255.0.0.0",

"network": "127.0.0.0"

},

"ipv6": [

{

"address": "::1",

"prefix": "128",

"scope": "host"

}

],

"mtu": 65536,

"promisc": false,

"timestamping": [

"rx_software",

"software"

],

"type": "loopback"

},

"ansible_local": {},

"ansible_lsb": {},

"ansible_machine": "x86_64",

"ansible_machine_id": "06fe05f799ee4e959f6d4acfaf48fb73",

"ansible_memfree_mb": 708,

"ansible_memory_mb": {

"nocache": {

"free": 799,

"used": 177

},

"real": {

"free": 708,

"total": 976,

"used": 268

},

"swap": {

"cached": 0,

"free": 774,

"total": 774,

"used": 0

}

},

"ansible_memtotal_mb": 976,

"ansible_mounts": [

{

"block_available": 97473,

"block_size": 4096,

"block_total": 126121,

"block_used": 28648,

"device": "/dev/sda1",

"fstype": "xfs",

"inode_available": 253625,

"inode_total": 253952,

"inode_used": 327,

"mount": "/boot",

"options": "rw,relatime,attr2,inode64,noquota",

"size_available": 399249408,

"size_total": 516591616,

"uuid": "20f697d6-9ca0-4b9e-9f20-56325684f2ca"

},

{

"block_available": 4445479,

"block_size": 4096,

"block_total": 4914688,

"block_used": 469209,

"device": "/dev/sda3",

"fstype": "xfs",

"inode_available": 9777400,

"inode_total": 9834496,

"inode_used": 57096,

"mount": "/",

"options": "rw,relatime,attr2,inode64,noquota",

"size_available": 18208681984,

"size_total": 20130562048,

"uuid": "a6a0174b-0f9f-4b72-a404-439c25a15ec9"

}

],

"ansible_nodename": "mcw2",

"ansible_os_family": "RedHat",

"ansible_pkg_mgr": "yum",

"ansible_proc_cmdline": {

"BOOT_IMAGE": "/vmlinuz-3.10.0-693.el7.x86_64",

"LANG": "en_US.UTF-8",

"crashkernel": "auto",

"quiet": true,

"rhgb": true,

"ro": true,

"root": "UUID=a6a0174b-0f9f-4b72-a404-439c25a15ec9"

},

"ansible_processor": [

"0",

"GenuineIntel",

"Intel(R) Core(TM) i5-6200U CPU @ 2.30GHz"

],

"ansible_processor_cores": 1,

"ansible_processor_count": 1,

"ansible_processor_threads_per_core": 1,

"ansible_processor_vcpus": 1,

"ansible_product_name": "VMware Virtual Platform",

"ansible_product_serial": "VMware-56 4d f7 ae 22 ca 1a 94-0f 51 de bd d1 01 a8 c7",

"ansible_product_uuid": "AEF74D56-CA22-941A-0F51-DEBDD101A8C7",

"ansible_product_version": "None",

"ansible_python": {

"executable": "/usr/bin/python",

"has_sslcontext": true,

"type": "CPython",

"version": {

"major": 2,

"micro": 5,

"minor": 7,

"releaselevel": "final",

"serial": 0

},

"version_info": [

2,

7,

5,

"final",

0

]

},

"ansible_python_version": "2.7.5",

"ansible_real_group_id": 0,

"ansible_real_user_id": 0,

"ansible_selinux": {

"status": "disabled"

},

"ansible_selinux_python_present": true,

"ansible_service_mgr": "systemd",

"ansible_ssh_host_key_ecdsa_public": "AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBHN5nr0MqsICjVJdjFRKBty6woRTynokXmAIMVv2KDJatAe4s+AcJbeXa/F6STBNg3lSv7E/tMuRqfhg1HNgQXo=",

"ansible_ssh_host_key_ed25519_public": "AAAAC3NzaC1lZDI1NTE5AAAAIOdVpn9SHhP8EuyhGSVsf7PhpbGXVMA/Y0JPc4J3yGrB",

"ansible_ssh_host_key_rsa_public": "AAAAB3NzaC1yc2EAAAADAQABAAABAQCwSeY75Lwu9XO7LrCAa/KB+e+5x/Sm8JtC6C70JUai/PHvL1uMWOalZtiPrCsaoNsokBLvnMjA/fqrAjY0Q+hVVifJt0tM4TgN5akeKQkrZsZ7WdxKZS8mMyxpgJFWvaPmBg+gCTDRQdafJJdXTwHJ70Gt/Fas85EF/uV4SUUMMUb/xts20BIOu0CznS9qmrWU8yS0p4AogSsmuWtnqzlqSaiEdpe38Cz6vIuZymsc0t4JltKu04cg2+D9pSp/YWXF/yMq2uVXidOgWQeTDkxX6hPu/qTau49DiSB/eS8+RUeguI5ibrr1brbl5hGODgI5e/yfCnZ/VxWXhcoJEF1D",

"ansible_swapfree_mb": 774,

"ansible_swaptotal_mb": 774,

"ansible_system": "Linux",

"ansible_system_capabilities": [

"cap_chown",

"cap_dac_override",

"cap_dac_read_search",

"cap_fowner",

"cap_fsetid",

"cap_kill",

"cap_setgid",

"cap_setuid",

"cap_setpcap",

"cap_linux_immutable",

"cap_net_bind_service",

"cap_net_broadcast",

"cap_net_admin",

"cap_net_raw",

"cap_ipc_lock",

"cap_ipc_owner",

"cap_sys_module",

"cap_sys_rawio",

"cap_sys_chroot",

"cap_sys_ptrace",

"cap_sys_pacct",

"cap_sys_admin",

"cap_sys_boot",

"cap_sys_nice",

"cap_sys_resource",

"cap_sys_time",

"cap_sys_tty_config",

"cap_mknod",

"cap_lease",

"cap_audit_write",

"cap_audit_control",

"cap_setfcap",

"cap_mac_override",

"cap_mac_admin",

"cap_syslog",

"35",

"36+ep"

],

"ansible_system_capabilities_enforced": "True",

"ansible_system_vendor": "VMware, Inc.",

"ansible_uptime_seconds": 5667,

"ansible_user_dir": "/root",

"ansible_user_gecos": "root",

"ansible_user_gid": 0,

"ansible_user_id": "root",

"ansible_user_shell": "/bin/bash",

"ansible_user_uid": 0,

"ansible_userspace_architecture": "x86_64",

"ansible_userspace_bits": "64",

"ansible_virtualization_role": "guest",

"ansible_virtualization_type": "VMware",

"discovered_interpreter_python": "/usr/bin/python",

"gather_subset": [

"all"

],

"module_setup": true

},

"changed": false

}

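That is a lot of information: the setup module gathers facts about the host's hardware, disks, network interfaces, OS, environment and more.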
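When calling setup through the API you usually want only a few of these facts. The module's filter argument can narrow them on the remote side (for example ansible 10.0.0.132 -m setup -a 'filter=ansible_hostname'). On the Python side, a sketch like the following extracts a handful, assuming the per-host result._result has the shape shown above with facts under ansible_facts; pick_facts is a hypothetical helper.

```python
def pick_facts(setup_result, keys):
    """Return only the requested facts from a setup result dict.

    setup_result is a host's result._result; gathered facts sit under
    'ansible_facts'. Facts that are absent map to None.
    """
    facts = setup_result.get("ansible_facts", {})
    return {key: facts.get(key) for key in keys}


if __name__ == "__main__":
    sample = {"ansible_facts": {"ansible_hostname": "mcw2",
                                "ansible_distribution_version": "7.4",
                                "ansible_memtotal_mb": 976}}
    print(pick_facts(sample, ["ansible_hostname", "ansible_memtotal_mb"]))
```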

Calling the API in Ansible 2.9

A fairly detailed write-up on Jianshu: https://www.jianshu.com/p/ec1e4d8438e9

Official documentation: https://docs.ansible.com/ansible/latest/dev_guide/developing_api.html

Check the version:

[root@mcw01 ~/mcw]$ ansible --version

ansible 2.9.27

config file = /etc/ansible/ansible.cfg

configured module search path = [u'/root/mcw', u'/root/mcw']

ansible python module location = /usr/lib/python2.7/site-packages/ansible

executable location = /usr/bin/ansible

python version = 2.7.5 (default, Aug 4 2017, 00:39:18) [GCC 4.8.5 20150623 (Red Hat 4.8.5-16)]

[root@mcw01 ~/mcw]$
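The version matters because the way options are passed changed between the two programs in this post: the 2.7 program hands an Options namedtuple to TaskQueueManager, while from Ansible 2.8 on the options are set in ansible.context.CLIARGS, as the 2.9 program does. A small sketch for picking the style from a version string; api_style is a hypothetical helper, and in practice the string could come from ansible.release.__version__.

```python
def api_style(version):
    """Say how CLI-style options are passed for a given ansible version string.

    2.7 and earlier: an options object passed to TaskQueueManager.
    2.8 and later: ansible.context.CLIARGS.
    """
    major, minor = (int(part) for part in version.split(".")[:2])
    return "context.CLIARGS" if (major, minor) >= (2, 8) else "options"


if __name__ == "__main__":
    print(api_style("2.9.27"))  # context.CLIARGS
```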

Example API program (based on the example in the official documentation):

#!/usr/bin/env python from __future__ import (absolute_import, division, print_function)

__metaclass__ = type import json

import shutil import ansible.constants as C

from ansible.executor.task_queue_manager import TaskQueueManager

from ansible.module_utils.common.collections import ImmutableDict

from ansible.inventory.manager import InventoryManager

from ansible.parsing.dataloader import DataLoader

from ansible.playbook.play import Play

from ansible.plugins.callback import CallbackBase

from ansible.vars.manager import VariableManager

from ansible import context # Create a callback plugin so we can capture the output

class ResultsCollectorJSONCallback(CallbackBase):

"""A sample callback plugin used for performing an action as results come in. If you want to collect all results into a single object for processing at

the end of the execution, look into utilizing the ``json`` callback plugin

or writing your own custom callback plugin.

""" def __init__(self, *args, **kwargs):

super(ResultsCollectorJSONCallback, self).__init__(*args, **kwargs)

self.host_ok = {}

self.host_unreachable = {}

self.host_failed = {} def v2_runner_on_unreachable(self, result):

host = result._host

self.host_unreachable[host.get_name()] = result def v2_runner_on_ok(self, result, *args, **kwargs):

"""Print a json representation of the result. Also, store the result in an instance attribute for retrieval later

"""

host = result._host

self.host_ok[host.get_name()] = result

print(json.dumps({host.name: result._result}, indent=4)) def v2_runner_on_failed(self, result, *args, **kwargs):

host = result._host

self.host_failed[host.get_name()] = result def main():

host_list = ['10.0.0.12']#['localhost', 'www.example.com', 'www.google.com']

# since the API is constructed for CLI it expects certain options to always be set in the context object

context.CLIARGS = ImmutableDict(connection='smart', module_path=['/to/mymodules', '/usr/share/ansible'], forks=10, become=None,

become_method=None, become_user=None, check=False, diff=False)

# required for

# https://github.com/ansible/ansible/blob/devel/lib/ansible/inventory/manager.py#L204

sources = ','.join(host_list)

if len(host_list) == 1:

sources += ',' # initialize needed objects

loader = DataLoader() # Takes care of finding and reading yaml, json and ini files

passwords = dict(vault_pass='secret') # Instantiate our ResultsCollectorJSONCallback for handling results as they come in. Ansible expects this to be one of its main display outlets

results_callback = ResultsCollectorJSONCallback() # create inventory, use path to host config file as source or hosts in a comma separated string

inventory = InventoryManager(loader=loader, sources=sources) # variable manager takes care of merging all the different sources to give you a unified view of variables available in each context

variable_manager = VariableManager(loader=loader, inventory=inventory) # instantiate task queue manager, which takes care of forking and setting up all objects to iterate over host list and tasks

# IMPORTANT: This also adds library dirs paths to the module loader

# IMPORTANT: and so it must be initialized before calling `Play.load()`.

tqm = TaskQueueManager(

inventory=inventory,

variable_manager=variable_manager,

loader=loader,

passwords=passwords,

stdout_callback=results_callback, # Use our custom callback instead of the ``default`` callback plugin, which prints to stdout

) # create data structure that represents our play, including tasks, this is basically what our YAML loader does internally.

play_source = dict(

name="Ansible Play",

hosts=host_list,

gather_facts='no',

tasks=[

dict(action=dict(module='shell', args='ls'), register='shell_out'),

dict(action=dict(module='debug', args=dict(msg='{{shell_out.stdout}}'))),

dict(action=dict(module='command', args=dict(cmd='/usr/bin/uptime'))),

]

) # Create play object, playbook objects use .load instead of init or new methods,

# this will also automatically create the task objects from the info provided in play_source

play = Play().load(play_source, variable_manager=variable_manager, loader=loader) # Actually run it

try:

result = tqm.run(play) # most interesting data for a play is actually sent to the callback's methods

finally:

# we always need to cleanup child procs and the structures we use to communicate with them

tqm.cleanup()

if loader:

loader.cleanup_all_tmp_files() # Remove ansible tmpdir

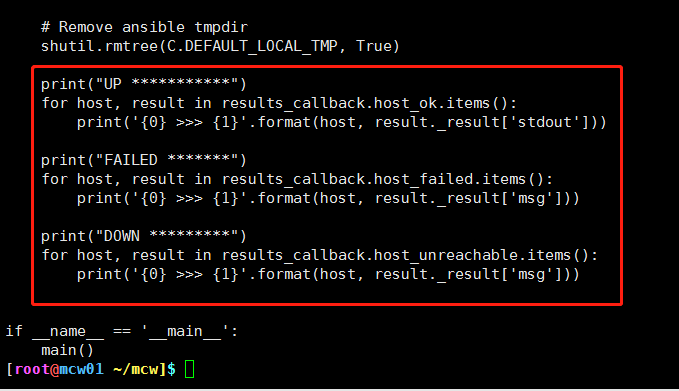

shutil.rmtree(C.DEFAULT_LOCAL_TMP, True) print("UP ***********")

for host, result in results_callback.host_ok.items():

print('{0} >>> {1}'.format(host, result._result['stdout'])) print("FAILED *******")

for host, result in results_callback.host_failed.items():

print('{0} >>> {1}'.format(host, result._result['msg'])) print("DOWN *********")

for host, result in results_callback.host_unreachable.items():

print('{0} >>> {1}'.format(host, result._result['msg'])) if __name__ == '__main__':

main()

Output:

[root@mcw01 ~/mcw]$ python 2.9ansibleapi.py

{

"10.0.0.12": {

"stderr_lines": [],

"changed": true,

"end": "2022-10-23 22:28:26.502922",

"_ansible_no_log": false,

"stdout": "10.0.0.11\n11.py\n2.9ansibleapi.py\nanaconda-ks.cfg\nAnsible\napache-tomcat-8.0.27\napache-tomcat-8.0.27.tar.gz\ncpis\ndemo.sh\ndump.rdb\njpress-web-newest\njpress-web-newest.tar.gz\njpress-web-newest.war\nlogs\nmcw\nmcw.py\nsite-packages.zip\nsonarqube\nsonar-scanner-cli-4.7.0.2747-linux.zip\ntomcat",

"cmd": "ls",

"start": "2022-10-23 22:28:26.491623",

"delta": "0:00:00.011299",

"stderr": "",

"rc": 0,

"invocation": {

"module_args": {

"warn": true,

"executable": null,

"_uses_shell": true,

"strip_empty_ends": true,

"_raw_params": "ls",

"removes": null,

"argv": null,

"creates": null,

"chdir": null,

"stdin_add_newline": true,

"stdin": null

}

},

"stdout_lines": [

"10.0.0.11",

"11.py",

"2.9ansibleapi.py",

"anaconda-ks.cfg",

"Ansible",

"apache-tomcat-8.0.27",

"apache-tomcat-8.0.27.tar.gz",

"cpis",

"demo.sh",

"dump.rdb",

"jpress-web-newest",

"jpress-web-newest.tar.gz",

"jpress-web-newest.war",

"logs",

"mcw",

"mcw.py",

"site-packages.zip",

"sonarqube",

"sonar-scanner-cli-4.7.0.2747-linux.zip",

"tomcat"

],

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

}

}

}

{

"10.0.0.12": {

"msg": "10.0.0.11\n11.py\n2.9ansibleapi.py\nanaconda-ks.cfg\nAnsible\napache-tomcat-8.0.27\napache-tomcat-8.0.27.tar.gz\ncpis\ndemo.sh\ndump.rdb\njpress-web-newest\njpress-web-newest.tar.gz\njpress-web-newest.war\nlogs\nmcw\nmcw.py\nsite-packages.zip\nsonarqube\nsonar-scanner-cli-4.7.0.2747-linux.zip\ntomcat",

"changed": false,

"_ansible_verbose_always": true,

"_ansible_no_log": false

}

}

{

"10.0.0.12": {

"stderr_lines": [],

"cmd": [

"/usr/bin/uptime"

],

"end": "2022-10-23 22:28:27.178912",

"_ansible_no_log": false,

"stdout": " 22:28:27 up 1 day, 6:46, 2 users, load average: 0.00, 0.01, 0.05",

"changed": true,

"rc": 0,

"start": "2022-10-23 22:28:27.165476",

"stderr": "",

"delta": "0:00:00.013436",

"invocation": {

"module_args": {

"creates": null,

"executable": null,

"_uses_shell": false,

"strip_empty_ends": true,

"_raw_params": "/usr/bin/uptime",

"removes": null,

"argv": null,

"warn": true,

"chdir": null,

"stdin_add_newline": true,

"stdin": null

}

},

"stdout_lines": [

" 22:28:27 up 1 day, 6:46, 2 users, load average: 0.00, 0.01, 0.05"

]

}

}

UP ***********

10.0.0.12 >>> 22:28:27 up 1 day, 6:46, 2 users, load average: 0.00, 0.01, 0.05

FAILED *******

DOWN *********

[root@mcw01 ~/mcw]$

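In the program above, comment out the first two tasks in the task list and keep only the one that runs uptime. Running the script is then roughly equivalent to running `ansible 10.0.0.12 -m command -a "/usr/bin/uptime"`.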
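To make the equivalence concrete, here is a small sketch that turns a task's host pattern, module and arguments into the matching ad-hoc command line. `build_adhoc_cmd` is a hypothetical helper invented for illustration. It only builds a string with the standard library's `shlex.quote` and never calls Ansible.

```python
import shlex


def build_adhoc_cmd(pattern, module, args):
    """Return the `ansible` ad-hoc command line matching one task.

    Hypothetical helper: it only builds a string and does not run Ansible.
    """
    parts = ["ansible", pattern, "-m", module, "-a", args]
    # shlex.quote adds quotes only where the shell would need them
    return " ".join(shlex.quote(p) for p in parts)


print(build_adhoc_cmd("10.0.0.12", "command", "/usr/bin/uptime"))
# ansible 10.0.0.12 -m command -a /usr/bin/uptime
print(build_adhoc_cmd("all", "shell", "ls -l"))
# ansible all -m shell -a 'ls -l'
```

Running the printed command by hand is a quick way to check that a task dict does what you expect before wiring it into the API script.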

play_source = dict(

name="Ansible Play",

hosts=host_list,

gather_facts='no',

tasks=[

#dict(action=dict(module='shell', args='ls'), register='shell_out'),

# dict(action=dict(module='debug', args=dict(msg='{{shell_out.stdout}}'))),

dict(action=dict(module='command', args=dict(cmd='/usr/bin/uptime'))),

]

)

This is essentially how you write a playbook: you state which module to use and what its arguments are.

Here is the output after the change. It now contains the result data:

[root@mcw01 ~/mcw]$ python 2.9ansibleapi.py

{

"10.0.0.12": {

"stderr_lines": [],

"cmd": [

"/usr/bin/uptime"

],

"end": "2022-10-23 22:36:23.669234",

"_ansible_no_log": false,

"stdout": " 22:36:23 up 1 day, 6:54, 2 users, load average: 0.13, 0.04, 0.05",

"changed": true,

"rc": 0,

"start": "2022-10-23 22:36:23.658986",

"stderr": "",

"delta": "0:00:00.010248",

"invocation": {

"module_args": {

"creates": null,

"executable": null,

"_uses_shell": false,

"strip_empty_ends": true,

"_raw_params": "/usr/bin/uptime",

"removes": null,

"argv": null,

"warn": true,

"chdir": null,

"stdin_add_newline": true,

"stdin": null

}

},

"stdout_lines": [

" 22:36:23 up 1 day, 6:54, 2 users, load average: 0.13, 0.04, 0.05"

],

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

}

}

}

UP ***********

10.0.0.12 >>> 22:36:23 up 1 day, 6:54, 2 users, load average: 0.13, 0.04, 0.05

FAILED *******

DOWN *********

[root@mcw01 ~/mcw]$

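The last three sections print the results for tasks that succeeded (ok), failed, or ran against unreachable hosts. Here the command ran normally and returned the uptime data, so the output appears under UP. It is printed by the for loop at the end of the script, which iterates over each host and that host's command result.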
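A small function that does not depend on Ansible can produce the same UP / FAILED / DOWN report. This is handy for testing the formatting without a live inventory. The sketch rests on one assumption: the three arguments are plain dicts mapping host names to result dicts shaped like `result._result`, not `TaskResult` objects. `summarize` is a hypothetical name. Unlike the script, it falls back to `msg` when a result has no `stdout`, because the `debug` result shown earlier carries only `msg`.

```python
def summarize(host_ok, host_failed, host_unreachable):
    """Format per-host results like the script's UP / FAILED / DOWN sections.

    Assumption: each argument maps host name -> plain result dict
    (the content of TaskResult._result), not a TaskResult object.
    """
    lines = ["UP ***********"]
    for host, res in sorted(host_ok.items()):
        # Not every module returns stdout (debug returns msg), so fall back
        lines.append("{0} >>> {1}".format(host, res.get("stdout", res.get("msg", ""))))
    lines.append("FAILED *******")
    for host, res in sorted(host_failed.items()):
        lines.append("{0} >>> {1}".format(host, res.get("msg", "")))
    lines.append("DOWN *********")
    for host, res in sorted(host_unreachable.items()):
        lines.append("{0} >>> {1}".format(host, res.get("msg", "")))
    return "\n".join(lines)


report = summarize(
    {"10.0.0.12": {"stdout": " 22:36:23 up 1 day, 6:54, 2 users, load average: 0.13, 0.04, 0.05", "rc": 0}},
    {},
    {},
)
print(report)
```

Sorting the hosts keeps the report stable from run to run. The script instead prints hosts in whatever order the callback stored them.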

Analysis of the API program (similar to the Runner API)

All we need to do is define the hosts (individual hosts or host groups) and the tasks. If needed, we can then post-process the returned results:
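Hosts and tasks are the only parts we really have to supply, so they can be built as plain data. The sketch below shows two hypothetical helpers, `build_sources` and `build_play_source`. The first reproduces the script's comma joining. For a single host, the trailing comma is what makes Ansible treat the string as a host list rather than an inventory file path. The second builds the dict that is handed to `Play().load()`. Neither helper imports Ansible.

```python
def build_sources(host_list):
    """Join hosts into the comma-separated string passed as InventoryManager(sources=...).

    A single host gets a trailing comma so the string is read as a host
    list, not as the path of an inventory file.
    """
    sources = ",".join(host_list)
    if len(host_list) == 1:
        sources += ","
    return sources


def build_play_source(host_list, tasks, name="Ansible Play"):
    """Build the play_source dict; tasks is a list of (module, args) pairs."""
    return dict(
        name=name,
        hosts=host_list,
        gather_facts="no",
        tasks=[dict(action=dict(module=m, args=a)) for m, a in tasks],
    )


print(build_sources(["10.0.0.12"]))                # 10.0.0.12,
print(build_sources(["10.0.0.11", "10.0.0.12"]))   # 10.0.0.11,10.0.0.12
play_source = build_play_source(["10.0.0.12"], [("command", dict(cmd="/usr/bin/uptime"))])
```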

[root@mcw01 ~/mcw]$ cat 2.9ansibleapi.py

#!/usr/bin/env python
from __future__ import (absolute_import, division, print_function)
__metaclass__ = type

import json
import shutil

import ansible.constants as C
from ansible.executor.task_queue_manager import TaskQueueManager
from ansible.module_utils.common.collections import ImmutableDict
from ansible.inventory.manager import InventoryManager
from ansible.parsing.dataloader import DataLoader
from ansible.playbook.play import Play
from ansible.plugins.callback import CallbackBase
from ansible.vars.manager import VariableManager
from ansible import context


# Create a callback plugin so we can capture the output
# (we define our own callback plugin by subclassing CallbackBase)
class ResultsCollectorJSONCallback(CallbackBase):
    """A sample callback plugin used for performing an action as results come in.

    If you want to collect all results into a single object for processing at
    the end of the execution, look into utilizing the ``json`` callback plugin
    or writing your own custom callback plugin.
    """

    def __init__(self, *args, **kwargs):
        super(ResultsCollectorJSONCallback, self).__init__(*args, **kwargs)
        self.host_ok = {}
        self.host_unreachable = {}
        self.host_failed = {}

    def v2_runner_on_unreachable(self, result):
        host = result._host
        self.host_unreachable[host.get_name()] = result

    def v2_runner_on_ok(self, result, *args, **kwargs):
        """Print a json representation of the result.

        Also, store the result in an instance attribute for retrieval later.
        """
        host = result._host
        self.host_ok[host.get_name()] = result
        # This is the JSON we see after running the program; it is printed only on success
        print(json.dumps({host.name: result._result}, indent=4))

    def v2_runner_on_failed(self, result, *args, **kwargs):
        host = result._host
        self.host_failed[host.get_name()] = result


def main():
    host_list = ['10.0.0.12']  # the hosts, e.g. ['localhost', 'www.example.com', 'www.google.com']
    # since the API is constructed for CLI it expects certain options to always be set in the context object
    context.CLIARGS = ImmutableDict(connection='smart', module_path=['/to/mymodules', '/usr/share/ansible'], forks=10,
                                    become=None, become_method=None, become_user=None, check=False, diff=False)
    # required for
    # https://github.com/ansible/ansible/blob/devel/lib/ansible/inventory/manager.py#L204
    # Join the hosts with commas; with a single host, append a trailing comma
    sources = ','.join(host_list)
    if len(host_list) == 1:
        sources += ','
    print(sources)  # output: 10.0.0.12,

    # initialize needed objects
    # DataLoader takes care of finding and reading yaml, json and ini files
    loader = DataLoader()  # <ansible.parsing.dataloader.DataLoader object at 0x1156fd0>
    passwords = dict(vault_pass='secret')  # {'vault_pass': 'secret'}

    # Instantiate our ResultsCollectorJSONCallback for handling results as they come in.
    # Ansible expects this to be one of its main display outlets
    results_callback = ResultsCollectorJSONCallback()  # the callback object

    # create inventory, use path to host config file as source or hosts in a comma separated string
    # (built from the data loader and the source string "10.0.0.12,")
    inventory = InventoryManager(loader=loader, sources=sources)  # <ansible.inventory.manager.InventoryManager object at 0x1fe3550>

    # variable manager takes care of merging all the different sources to give you a unified view of variables available in each context
    variable_manager = VariableManager(loader=loader, inventory=inventory)  # <ansible.vars.manager.VariableManager object at 0x208d5d0>

    # instantiate task queue manager, which takes care of forking and setting up all objects to iterate over host list and tasks
    # IMPORTANT: This also adds library dirs paths to the module loader
    # IMPORTANT: and so it must be initialized before calling `Play.load()`.
    # It receives the inventory manager, variable manager, data loader, passwords and
    # callback object defined above. Of these, only the host list concerns us; the rest can stay as is.
    tqm = TaskQueueManager(
        inventory=inventory,
        variable_manager=variable_manager,
        loader=loader,
        passwords=passwords,
        stdout_callback=results_callback,  # Use our custom callback instead of the ``default`` callback plugin, which prints to stdout
    )

    # create data structure that represents our play, including tasks, this is basically what our YAML loader does internally.
    # The play source is a dict: play name, host list, whether to gather facts, and a task list that can
    # hold many tasks. It mirrors a playbook: each task names a module and its arguments. Playbooks also
    # support variables, loops, conditionals, registered variables and so on, written differently.
    # Example: the first (commented-out) task registers the output of `ls` as shell_out, and the second
    # reads shell_out.stdout; that is how variables pass between tasks. To implement a feature here,
    # look at how a playbook expresses it. The two things we mainly care about are the hosts/groups
    # in `hosts` and the tasks in `tasks`; with those two we can do what a playbook does.
    play_source = dict(
        name="Ansible Play",
        hosts=host_list,
        gather_facts='no',
        tasks=[
            # dict(action=dict(module='shell', args='ls'), register='shell_out'),
            # dict(action=dict(module='debug', args=dict(msg='{{shell_out.stdout}}'))),
            dict(action=dict(module='command', args=dict(cmd='/usr/bin/uptime'))),
        ]
    )

    # Create play object, playbook objects use .load instead of init or new methods,
    # this will also automatically create the task objects from the info provided in play_source
    # Printing `play` shows "Ansible Play" (presumably via __str__). The play source, the variable manager
    # and the data loader are loaded into the Play object; variable rendering presumably happens inside.
    play = Play().load(play_source, variable_manager=variable_manager, loader=loader)

    # Actually run it
    # tqm.run() consumes the play's tasks; the hosts, tasks and variables all travel inside `play`.
    # The result dicts we see on screen are printed by the callback while this call runs.
    # `result` itself is just 0 here, the success status code (TaskQueueManager.RUN_OK).
    try:
        result = tqm.run(play)  # most interesting data for a play is actually sent to the callback's methods
    finally:
        # we always need to cleanup child procs and the structures we use to communicate with them
        # Temporary files are created while tasks run and cleaned up here; our own programs can do the same.
        tqm.cleanup()
        if loader:
            loader.cleanup_all_tmp_files()

    # Remove ansible tmpdir
    # C.DEFAULT_LOCAL_TMP was e.g. /root/.ansible/tmp/ansible-local-16916wo3RhL; it held a temp file such as
    # /root/.ansible/tmp/ansible-tmp-1666015891.13-5454-129638497331557/AnsiballZ_command.py
    shutil.rmtree(C.DEFAULT_LOCAL_TMP, True)

    print("results_callback: ", results_callback)  # <__main__.ResultsCollectorJSONCallback object at 0x2858550>
    print("results_callback.host_ok", results_callback.host_ok)  # {'10.0.0.12': <ansible.executor.task_result.TaskResult object at 0x203dd90>}
    print("results_callback.host_ok.items: ", results_callback.host_ok.items())  # [('10.0.0.12', <ansible.executor.task_result.TaskResult object at 0x203dd90>)]
    for host, result in results_callback.host_ok.items():
        print(host, result)  # 10.0.0.12 <ansible.executor.task_result.TaskResult object at 0x203dd90>
        print("result._result: ", result._result)
        print("stdout from the result object, i.e. the play's output (here, what uptime printed): ", result._result['stdout'])
        # result._result, reformatted:
        # {'stderr_lines': [],
        #  u'cmd': [u'/usr/bin/uptime'],
        #  u'end': u'2022-10-24 00:31:38.590871',
        #  '_ansible_no_log': False,
        #  u'stdout': u' 00:31:38 up 1 day, 8:49, 2 users, load average: 0.00, 0.01, 0.05',
        #  u'changed': True,
        #  u'rc': 0,
        #  u'start': u'2022-10-24 00:31:38.580309',
        #  u'stderr': u'',
        #  u'delta': u'0:00:00.010562',
        #  u'invocation': {
        #      u'module_args': {u'creates': None, u'executable': None, u'_uses_shell': False,
        #                       u'strip_empty_ends': True, u'_raw_params': u'/usr/bin/uptime',
        #                       u'removes': None, u'argv': None, u'warn': True, u'chdir': None,
        #                       u'stdin_add_newline': True, u'stdin': None}},
        #  'stdout_lines': [u' 00:31:38 up 1 day, 8:49, 2 users, load average: 0.00, 0.01, 0.05'],
        #  'ansible_facts': {u'discovered_interpreter_python': u'/usr/bin/python'}}
        # To get a field, index this dict by key; stdout is the command's output.
        # The callback's v2_runner_on_ok prints this same dict.

    print("UP ***********")
    for host, result in results_callback.host_ok.items():
        print('{0} >>> {1}'.format(host, result._result['stdout']))

    print("FAILED *******")
    for host, result in results_callback.host_failed.items():
        # With the task dict(action=dict(module='command', args=dict(cmd='cat /usr/bin/uptimebbb'))),
        # cat fails on the nonexistent file and the result lands here, printing:
        print('{0} >>> {1}'.format(host, result._result['msg']))  # 10.0.0.12 >>> non-zero return code
        print("Failed:", result._result)
        # Failed result, reformatted (the line above prints only msg):
        # {'stderr_lines': [u'cat: /usr/bin/uptimebbb: No such file or directory'],
        #  u'cmd': [u'cat', u'/usr/bin/uptimebbb'],
        #  u'stdout': u'',
        #  u'msg': u'non-zero return code',
        #  u'delta': u'0:00:00.011209',
        #  'stdout_lines': [],
        #  'ansible_facts': {u'discovered_interpreter_python': u'/usr/bin/python'},
        #  u'end': u'2022-10-24 00:44:23.455248',
        #  '_ansible_no_log': False,
        #  u'changed': True,
        #  u'start': u'2022-10-24 00:44:23.444039',
        #  u'stderr': u'cat: /usr/bin/uptimebbb: No such file or directory',
        #  u'rc': 1,
        #  u'invocation': {
        #      u'module_args': {u'creates': None, u'executable': None, u'_uses_shell': False,
        #                       u'strip_empty_ends': True, u'_raw_params': u'cat /usr/bin/uptimebbb',
        #                       u'removes': None, u'argv': None, u'warn': True, u'chdir': None,
        #                       u'stdin_add_newline': True, u'stdin': None}}}

    print("DOWN *********")
    for host, result in results_callback.host_unreachable.items():
        print('{0} >>> {1}'.format(host, result._result['msg']))


if __name__ == '__main__':
    main()

[root@mcw01 ~/mcw]$

Ansible 2.9: passing parameters in to run commands, a Runner-style API

[root@mcw01 ~/mcw]$ cat mytest.py

#!/usr/bin/env python
# -*- coding: utf-8 -*-
from __future__ import (absolute_import, division, print_function)
__metaclass__ = type

import json
import shutil

import ansible.constants as C
from ansible.executor.task_queue_manager import TaskQueueManager
from ansible.module_utils.common.collections import ImmutableDict
from ansible.inventory.manager import InventoryManager
from ansible.parsing.dataloader import DataLoader
from ansible.playbook.play import Play
from ansible.plugins.callback import CallbackBase
from ansible.vars.manager import VariableManager
from ansible import context


# Create a callback plugin so we can capture the output
class ResultsCollectorJSONCallback(CallbackBase):
    """A sample callback plugin used for performing an action as results come in.

    If you want to collect all results into a single object for processing at
    the end of the execution, look into utilizing the ``json`` callback plugin
    or writing your own custom callback plugin.
    """

    def __init__(self, *args, **kwargs):
        super(ResultsCollectorJSONCallback, self).__init__(*args, **kwargs)
        self.host_ok = {}
        self.host_unreachable = {}
        self.host_failed = {}

    def v2_runner_on_unreachable(self, result):
        host = result._host
        self.host_unreachable[host.get_name()] = result

    def v2_runner_on_ok(self, result, *args, **kwargs):
        """Print a json representation of the result.

        Also, store the result in an instance attribute for retrieval later.
        """
        host = result._host
        self.host_ok[host.get_name()] = result
        print(json.dumps({host.name: result._result}, indent=4))

    def v2_runner_on_failed(self, result, *args, **kwargs):
        host = result._host
        self.host_failed[host.get_name()] = result


def main(host_list, module_name, module_args):
    # host_list example: ['localhost', 'www.example.com', 'www.google.com']
    # since the API is constructed for CLI it expects certain options to always be set in the context object
    context.CLIARGS = ImmutableDict(connection='smart', module_path=['/to/mymodules', '/usr/share/ansible'], forks=10,
                                    become=None, become_method=None, become_user=None, check=False, diff=False)
    # required for
    # https://github.com/ansible/ansible/blob/devel/lib/ansible/inventory/manager.py#L204
    sources = ','.join(host_list)
    if len(host_list) == 1:
        sources += ','

    # initialize needed objects
    loader = DataLoader()  # Takes care of finding and reading yaml, json and ini files
    passwords = dict(vault_pass='secret')

    # Instantiate our ResultsCollectorJSONCallback for handling results as they come in. Ansible expects this to be one of its main display outlets
    results_callback = ResultsCollectorJSONCallback()

    # create inventory, use path to host config file as source or hosts in a comma separated string
    inventory = InventoryManager(loader=loader, sources=sources)

    # variable manager takes care of merging all the different sources to give you a unified view of variables available in each context
    variable_manager = VariableManager(loader=loader, inventory=inventory)

    # instantiate task queue manager, which takes care of forking and setting up all objects to iterate over host list and tasks
    # IMPORTANT: This also adds library dirs paths to the module loader
    # IMPORTANT: and so it must be initialized before calling `Play.load()`.
    tqm = TaskQueueManager(
        inventory=inventory,
        variable_manager=variable_manager,
        loader=loader,
        passwords=passwords,
        stdout_callback=results_callback,  # Use our custom callback instead of the ``default`` callback plugin, which prints to stdout
    )

    # create data structure that represents our play, including tasks, this is basically what our YAML loader does internally.
    play_source = dict(
        name="Ansible Play",
        hosts=host_list,
        gather_facts='no',
        tasks=[
            # dict(action=dict(module='shell', args='ls'), register='shell_out'),
            # dict(action=dict(module='debug', args=dict(msg='{{shell_out.stdout}}'))),
            # dict(action=dict(module='command', args=dict(cmd='cat /usr/bin/uptimebbb'))),
            dict(action=dict(module=module_name, args=module_args)),  # module and args passed in by the caller
        ]
    )

    # Create play object, playbook objects use .load instead of init or new methods,
    # this will also automatically create the task objects from the info provided in play_source
    play = Play().load(play_source, variable_manager=variable_manager, loader=loader)

    # Actually run it
    try:
        result = tqm.run(play)  # most interesting data for a play is actually sent to the callback's methods
    finally:
        # we always need to cleanup child procs and the structures we use to communicate with them
        tqm.cleanup()
        if loader:
            loader.cleanup_all_tmp_files()

    # Remove ansible tmpdir
    shutil.rmtree(C.DEFAULT_LOCAL_TMP, True)

    print("UP ***********")
    for host, result in results_callback.host_ok.items():
        print('{0} >>> {1}'.format(host, result._result['stdout']))

    print("FAILED *******")
    for host, result in results_callback.host_failed.items():
        print('{0} >>> {1}'.format(host, result._result['msg']))
        print("Failed:", result._result)

    print("DOWN *********")
    for host, result in results_callback.host_unreachable.items():
        print('{0} >>> {1}'.format(host, result._result['msg']))
        print("Unreachable:", result._result)


if __name__ == '__main__':
    option_dict = {"become": True, "remote_user": "root"}  # not used by main() in this version
    module_name = 'shell'
    module_args = "/usr/bin/uptime"
    host_list = ['10.0.0.11', '10.0.0.12']
    main(host_list, module_name, module_args)

[root@mcw01 ~/mcw]$

Output:

[root@mcw01 ~/mcw]$ python mytest.py

{

"10.0.0.12": {

"stderr_lines": [],

"cmd": "/usr/bin/uptime",

"end": "2022-10-24 01:28:10.831660",

"_ansible_no_log": false,

"stdout": " 01:28:10 up 1 day, 9:46, 2 users, load average: 0.00, 0.01, 0.05",

"changed": true,

"rc": 0,

"start": "2022-10-24 01:28:10.820105",

"stderr": "",

"delta": "0:00:00.011555",

"invocation": {

"module_args": {

"creates": null,

"executable": null,

"_uses_shell": true,

"strip_empty_ends": true,

"_raw_params": "/usr/bin/uptime",

"removes": null,

"argv": null,

"warn": true,

"chdir": null,

"stdin_add_newline": true,

"stdin": null

}

},

"stdout_lines": [

" 01:28:10 up 1 day, 9:46, 2 users, load average: 0.00, 0.01, 0.05"

],

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

}

}

}

{

"10.0.0.11": {

"stderr_lines": [],

"cmd": "/usr/bin/uptime",

"end": "2022-10-24 01:28:11.153952",

"_ansible_no_log": false,

"stdout": " 01:28:11 up 1 day, 9:46, 4 users, load average: 0.68, 0.31, 0.24",

"changed": true,

"rc": 0,

"start": "2022-10-24 01:28:11.093208",

"stderr": "",

"delta": "0:00:00.060744",

"invocation": {

"module_args": {

"creates": null,

"executable": null,

"_uses_shell": true,

"strip_empty_ends": true,

"_raw_params": "/usr/bin/uptime",

"removes": null,

"argv": null,

"warn": true,

"chdir": null,

"stdin_add_newline": true,

"stdin": null

}

},

"stdout_lines": [

" 01:28:11 up 1 day, 9:46, 4 users, load average: 0.68, 0.31, 0.24"

],

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

}

}

}

UP ***********

10.0.0.11 >>> 01:28:11 up 1 day, 9:46, 4 users, load average: 0.68, 0.31, 0.24

10.0.0.12 >>> 01:28:10 up 1 day, 9:46, 2 users, load average: 0.00, 0.01, 0.05

FAILED *******

DOWN *********

[root@mcw01 ~/mcw]$

This output is exactly what the program is designed to provide: the command result from every host, grouped by status.

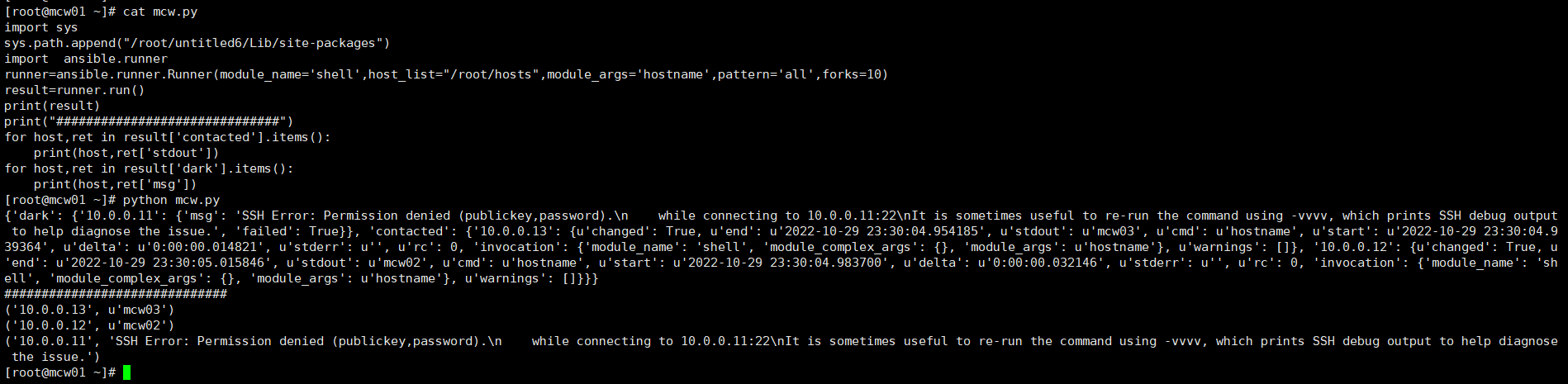

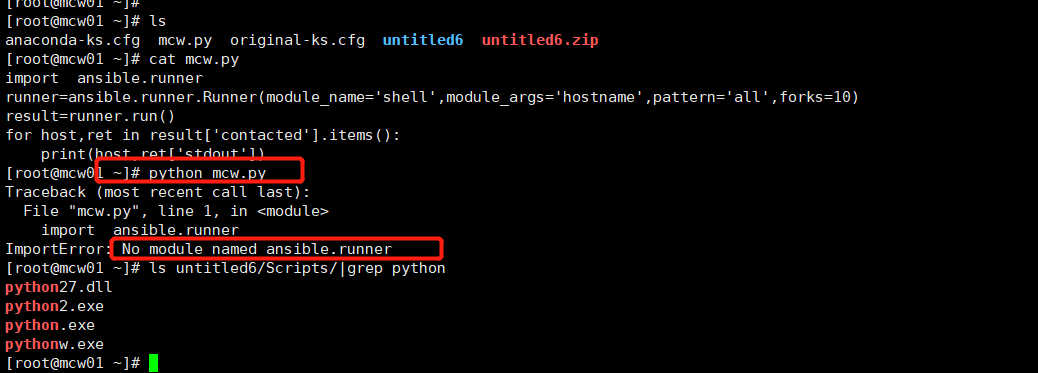

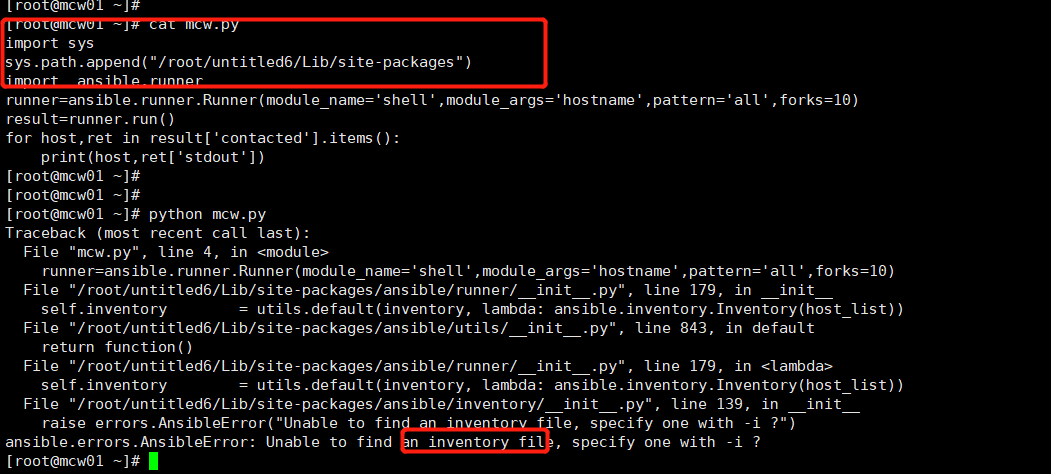

Calling the Ansible 1.9.6 API

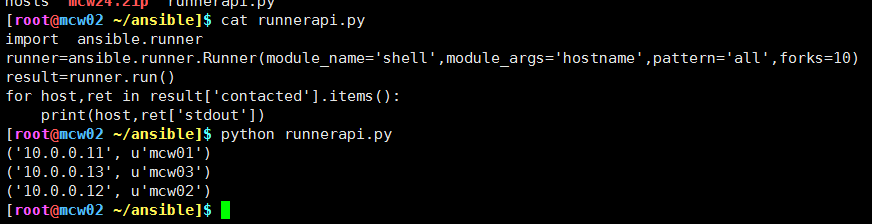

A simple Runner API example, plus the full list of Runner class initialization parameters

Program:

import ansible.runner

runner=ansible.runner.Runner(module_name='shell',module_args='hostname',pattern='all',forks=10)

result=runner.run()

for host,ret in result['contacted'].items():
    print(host,ret['stdout'])

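You only need to pass in the module name, the module arguments, and the host or host-group pattern, because those three are all an ad-hoc command needs. For anything more, use the other parameters.

Output

Below are the parameters of the Runner class, copied from the source, for reference when setting options: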

class Runner(object):
    ''' core API interface to ansible '''

    # see bin/ansible for how this is used...

    def __init__(self,
        host_list=C.DEFAULT_HOST_LIST,      # ex: /etc/ansible/hosts, legacy usage
        module_path=None,                   # ex: /usr/share/ansible
        module_name=C.DEFAULT_MODULE_NAME,  # ex: copy
        module_args=C.DEFAULT_MODULE_ARGS,  # ex: "src=/tmp/a dest=/tmp/b"
        forks=C.DEFAULT_FORKS,              # parallelism level
        timeout=C.DEFAULT_TIMEOUT,          # SSH timeout
        pattern=C.DEFAULT_PATTERN,          # which hosts? ex: 'all', 'acme.example.org'
        remote_user=C.DEFAULT_REMOTE_USER,  # ex: 'username'
        remote_pass=C.DEFAULT_REMOTE_PASS,  # ex: 'password123' or None if using key
        remote_port=None,                   # if SSH on different ports
        private_key_file=C.DEFAULT_PRIVATE_KEY_FILE,  # if not using keys/passwords
        background=0,                       # async poll every X seconds, else 0 for non-async
        basedir=None,                       # directory of playbook, if applicable
        setup_cache=None,                   # used to share fact data w/ other tasks
        vars_cache=None,                    # used to store variables about hosts
        transport=C.DEFAULT_TRANSPORT,      # 'ssh', 'paramiko', 'local'
        conditional='True',                 # run only if this fact expression evals to true
        callbacks=None,                     # used for output
        module_vars=None,                   # a playbooks internals thing
        play_vars=None,                     #
        play_file_vars=None,                #
        role_vars=None,                     #
        role_params=None,                   #
        default_vars=None,                  #
        extra_vars=None,                    # extra vars specified with the playbook(s)
        is_playbook=False,                  # running from playbook or not?
        inventory=None,                     # reference to Inventory object
        subset=None,                        # subset pattern
        check=False,                        # don't make any changes, just try to probe for potential changes
        diff=False,                         # whether to show diffs for template files that change
        environment=None,                   # environment variables (as dict) to use inside the command
        complex_args=None,                  # structured data in addition to module_args, must be a dict
        error_on_undefined_vars=C.DEFAULT_UNDEFINED_VAR_BEHAVIOR,  # ex. False
        accelerate=False,                   # use accelerated connection
        accelerate_ipv6=False,              # accelerated connection w/ IPv6
        accelerate_port=None,               # port to use with accelerated connection
        vault_pass=None,
        run_hosts=None,                     # an optional list of pre-calculated hosts to run on
        no_log=False,                       # option to enable/disable logging for a given task
        run_once=False,                     # option to enable/disable host bypass loop for a given task
        become=False,                       # whether to run privilege escalation or not
        become_method=C.DEFAULT_BECOME_METHOD,
        become_user=C.DEFAULT_BECOME_USER,  # ex: 'root'
        become_pass=C.DEFAULT_BECOME_PASS,  # ex: 'password123' or None
        become_exe=C.DEFAULT_BECOME_EXE,    # ex: /usr/local/bin/sudo
        ):

        # used to lock multiprocess inputs and outputs at various levels
        self.output_lockfile = OUTPUT_LOCKFILE
        self.process_lockfile = PROCESS_LOCKFILE

        if not complex_args:
            complex_args = {}

        # storage & defaults
        self.check = check
        self.diff = diff
        self.setup_cache = utils.default(setup_cache, lambda: ansible.cache.FactCache())
        self.vars_cache = utils.default(vars_cache, lambda: collections.defaultdict(dict))
        self.basedir = utils.default(basedir, lambda: os.getcwd())
        self.callbacks = utils.default(callbacks, lambda: DefaultRunnerCallbacks())
        self.generated_jid = str(random.randint(0, 999999999999))
        self.transport = transport
        self.inventory = utils.default(inventory, lambda: ansible.inventory.Inventory(host_list))

        self.module_vars = utils.default(module_vars, lambda: {})
        self.play_vars = utils.default(play_vars, lambda: {})
        self.play_file_vars = utils.default(play_file_vars, lambda: {})
        self.role_vars = utils.default(role_vars, lambda: {})
        self.role_params = utils.default(role_params, lambda: {})
        self.default_vars = utils.default(default_vars, lambda: {})
        self.extra_vars = utils.default(extra_vars, lambda: {})

        self.always_run = None
        self.connector = connection.Connector(self)
        self.conditional = conditional
        self.delegate_to = None
        self.module_name = module_name
        self.forks = int(forks)
        self.pattern = pattern
        self.module_args = module_args
        self.timeout = timeout
        self.remote_user = remote_user
        self.remote_pass = remote_pass
        self.remote_port = remote_port
        self.private_key_file = private_key_file
        self.background = background
        self.become = become
        self.become_method = become_method
        self.become_user_var = become_user
        self.become_user = None
        self.become_pass = become_pass
        self.become_exe = become_exe
        self.is_playbook = is_playbook
        self.environment = environment
        self.complex_args = complex_args
        self.error_on_undefined_vars = error_on_undefined_vars
        self.accelerate = accelerate
        self.accelerate_port = accelerate_port
        self.accelerate_ipv6 = accelerate_ipv6
        self.callbacks.runner = self
        self.omit_token = '__omit_place_holder__%s' % sha1(os.urandom(64)).hexdigest()
        self.vault_pass = vault_pass
        self.no_log = no_log
        self.run_once = run_once

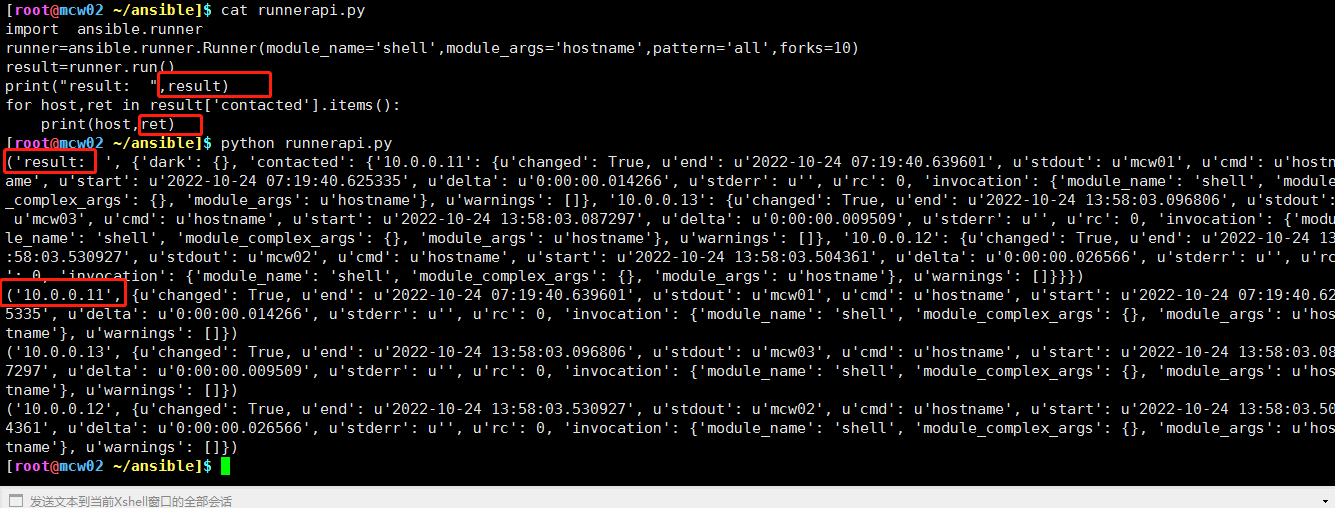

Now print the raw result data directly. Earlier we only extracted stdout.

result is the following dict, holding the results for multiple hosts:

{'dark': {}, 'contacted': {

'10.0.0.11': {u'changed': True, u'end': u'2022-10-24 07:19:40.639601', u'stdout': u'mcw01', u'cmd': u'hostname',

u'start': u'2022-10-24 07:19:40.625335', u'delta': u'0:00:00.014266', u'stderr': u'', u'rc': 0,

'invocation': {'module_name': 'shell', 'module_complex_args': {}, 'module_args': u'hostname'},

u'warnings': []},

'10.0.0.13': {u'changed': True, u'end': u'2022-10-24 13:58:03.096806', u'stdout': u'mcw03', u'cmd': u'hostname',

u'start': u'2022-10-24 13:58:03.087297', u'delta': u'0:00:00.009509', u'stderr': u'', u'rc': 0,

'invocation': {'module_name': 'shell', 'module_complex_args': {}, 'module_args': u'hostname'},

u'warnings': []},

'10.0.0.12': {u'changed': True, u'end': u'2022-10-24 13:58:03.530927', u'stdout': u'mcw02', u'cmd': u'hostname',

u'start': u'2022-10-24 13:58:03.504361', u'delta': u'0:00:00.026566', u'stderr': u'', u'rc': 0,

'invocation': {'module_name': 'shell', 'module_complex_args': {}, 'module_args': u'hostname'},

u'warnings': []}}}

The information for a single host (ret) looks like this:

{'10.0.0.11':

{u'changed': True,

u'end': u'2022-10-24 07:19:40.639601',

u'stdout': u'mcw01',

u'cmd': u'hostname',

u'start': u'2022-10-24 07:19:40.625335',

u'delta': u'0:00:00.014266',

u'stderr': u'',

u'rc': 0,

'invocation': {

'module_name': 'shell',

'module_complex_args': {},

'module_args': u'hostname'

},

u'warnings': []

}

}

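In principle, error information goes into dark, but I am not getting any error information here (dark is empty in the output above).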
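The sketch below makes the split of the 1.9 `Runner` result explicit. It assumes the shape shown above (`contacted` and `dark` keys). It also assumes that a contacted host whose module failed carries a `failed` key, which is the same key the Flask view later in this article checks. `split_runner_result` is a hypothetical helper, written to run on both Python 2 and 3.

```python
def split_runner_result(result):
    """Split an Ansible 1.9 Runner.run() result into ok / failed / unreachable.

    Assumptions: result has 'contacted' and 'dark' keys; a contacted host
    whose module failed has a truthy 'failed' key.
    Returns three dicts mapping host -> stdout or error message.
    """
    ok, failed = {}, {}
    for host, ret in result.get("contacted", {}).items():
        if ret.get("failed"):
            # The module ran but failed: keep its message (or stderr)
            failed[host] = ret.get("msg", ret.get("stderr", ""))
        else:
            ok[host] = ret.get("stdout", "")
    # dark holds hosts that could not be reached at all
    unreachable = dict((host, ret.get("msg", "")) for host, ret in result.get("dark", {}).items())
    return ok, failed, unreachable


# Trimmed version of the result shown above
result = {'dark': {}, 'contacted': {
    '10.0.0.11': {'stdout': 'mcw01', 'rc': 0},
    '10.0.0.12': {'stdout': 'mcw02', 'rc': 0},
}}
ok, failed, unreachable = split_runner_result(result)
```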

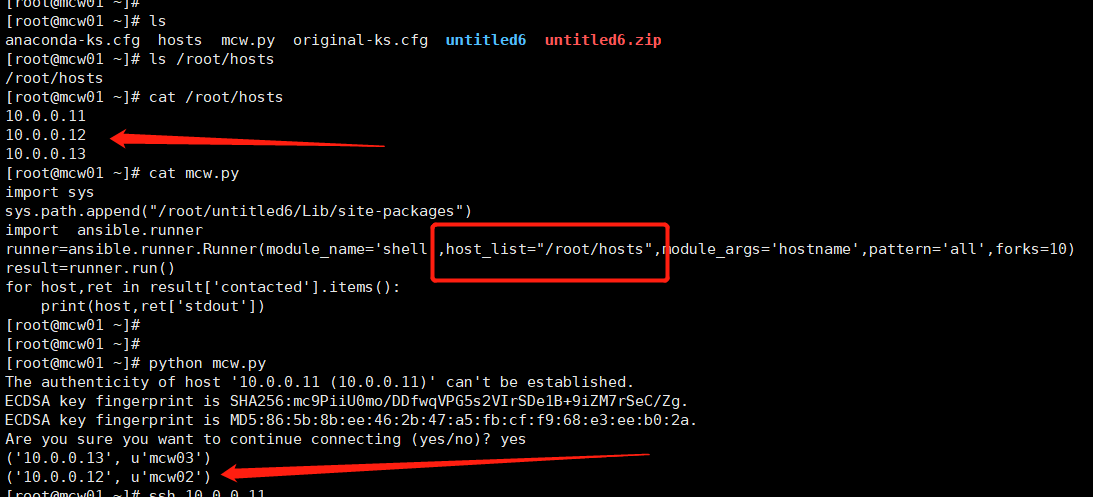

Runner API: print not only the successful results but also the error messages for failures

import sys

sys.path.append("/root/untitled6/Lib/site-packages")

import ansible.runner

runner=ansible.runner.Runner(module_name='shell',host_list="/root/hosts",module_args='hostname',pattern='all',forks=10)

result=runner.run()

print(result)

print("##############################")

for host,ret in result['contacted'].items():
    print(host,ret['stdout'])
for host,ret in result['dark'].items():
    print(host,ret['msg'])

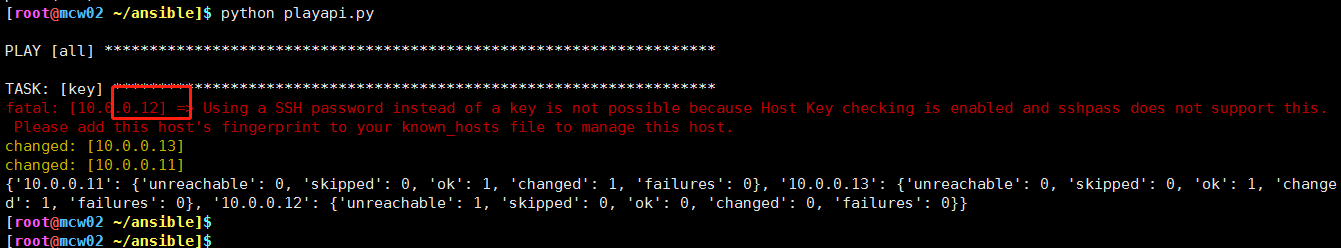

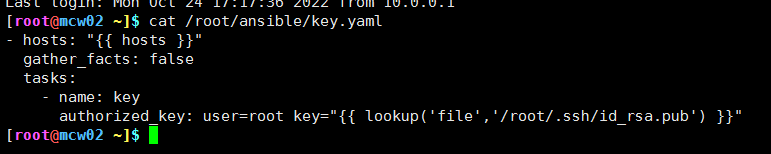

Playbook API

[root@mcw02 ~/ansible]$ cat key.yaml

- hosts: "{{ hosts }}"
  gather_facts: false
  tasks:
    - name: key
      authorized_key: user=root key="{{ lookup('file','/root/.ssh/id_rsa.pub') }}"

[root@mcw02 ~/ansible]$ cat playapi.py #api.

from ansible.inventory import Inventory

from ansible.playbook import PlayBook

from ansible import callbacks

inventory=Inventory('./hosts')

stats=callbacks.AggregateStats()

playbook_cb=callbacks.PlaybookCallbacks()

runner_cb=callbacks.PlaybookRunnerCallbacks(stats)

results=PlayBook(playbook='key.yaml',callbacks=playbook_cb,runner_callbacks=runner_cb,stats=stats,inventory=inventory,extra_vars={'hosts':'all'})

res=results.run()

print(res)

[root@mcw02 ~/ansible]$

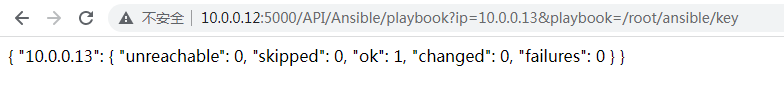

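As shown below, the playbook key.yaml was indeed invoked, and the variable we passed to the template (hosts set to all) took effect. We specified the inventory file explicitly. The playbook distributes the public key: it writes this machine's public key into ~/.ssh/authorized_keys on the other hosts. The printed result is a dict of per-host statistics.

The returned result is fairly minimal: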

{'10.0.0.11': {'unreachable': 0, 'skipped': 0, 'ok': 1, 'changed': 1, 'failures': 0},

'10.0.0.13': {'unreachable': 0, 'skipped': 0, 'ok': 1, 'changed': 1, 'failures': 0},

'10.0.0.12': {'unreachable': 1, 'skipped': 0, 'ok': 0, 'changed': 0, 'failures': 0}}
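The playbook API returns only these per-host counters. A quick way to find hosts that need attention is to check `failures` and `unreachable`. Here is a minimal sketch fed with the result above (the function name `problem_hosts` is hypothetical):

```python
def problem_hosts(stats):
    """Return the sorted hosts whose playbook stats show failures or unreachability.

    stats maps host -> counters (ok, changed, skipped, failures, unreachable),
    as returned by PlayBook.run() above.
    """
    return sorted(
        host for host, s in stats.items()
        if s.get("failures", 0) or s.get("unreachable", 0)
    )


stats = {
    '10.0.0.11': {'unreachable': 0, 'skipped': 0, 'ok': 1, 'changed': 1, 'failures': 0},
    '10.0.0.13': {'unreachable': 0, 'skipped': 0, 'ok': 1, 'changed': 1, 'failures': 0},
    '10.0.0.12': {'unreachable': 1, 'skipped': 0, 'ok': 0, 'changed': 0, 'failures': 0},
}
print(problem_hosts(stats))  # ['10.0.0.12']
```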

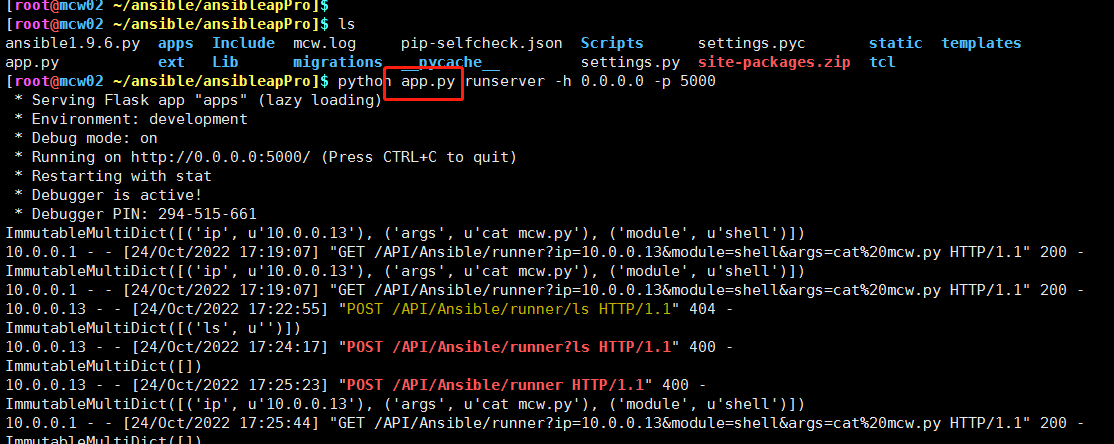

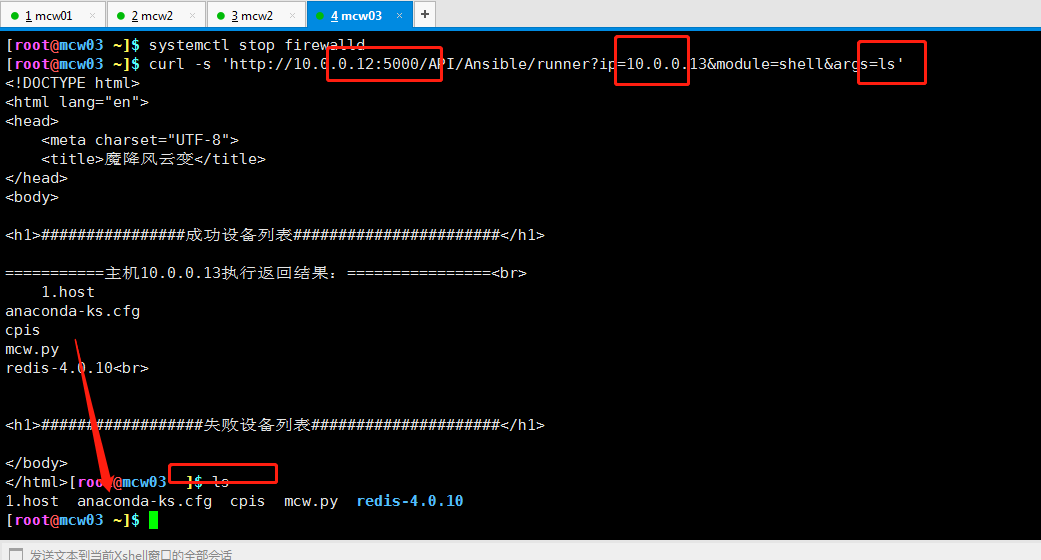

Wrapping our API with Flask to run Ansible commands remotely over HTTP

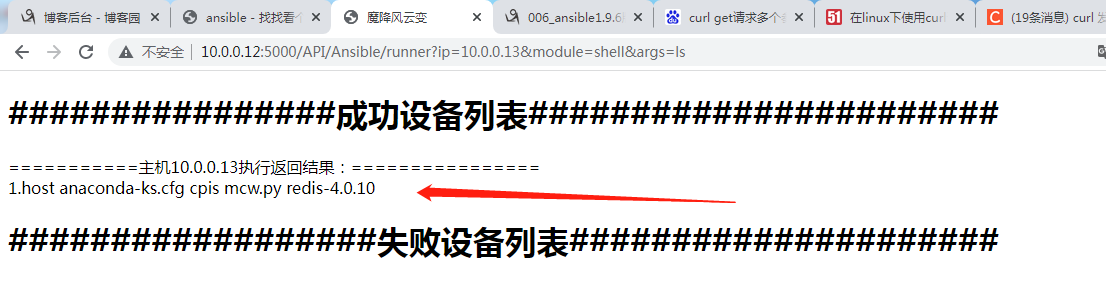

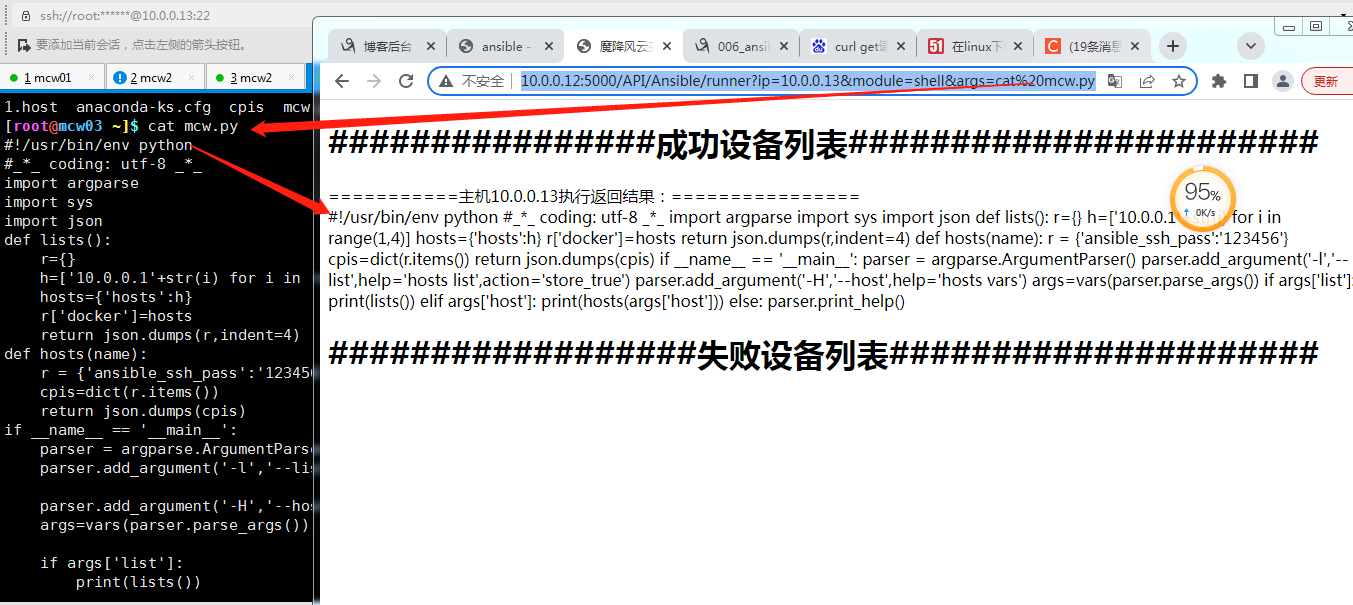

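Results

Our program runs on host 2.

From host 3, we call the endpoint with curl and ask the program on host 2 to read a file on host 3. The result comes back successfully and matches the file on host 3.

Calling the endpoint from a browser also returns the data successfully.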

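Here host 2 runs the Flask program and is also the Ansible control node. Over HTTP, we can have host 2 run ad-hoc commands with any module. That is what wrapping Ansible's Runner API with Flask gives us.

Next, since we know host 3 has mcw.py, we view its contents, and they are the same as on the host itself. Building on this, we could write front-end pages that send GET and POST requests to this Ansible API and manage a whole fleet of machines from a web page, or even build an Ansible console.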

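When calling the playbook API, we pass the playbook name. The backend appends .yaml to build the file name and then runs that file through the playbook API. If the name contains no path, the file is looked up relative to the directory the program runs from (the project root here). If it does contain a path, the file is looked up under that path.

Program

Below is the Flask blueprint: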
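The file name is built straight from a request parameter, so a caller can pass something like `../other` and run a playbook outside the project. A stricter variant is sketched here as an assumption, not as what the blueprint does: resolve the name under a fixed base directory and reject anything that escapes it. `resolve_playbook` and `base_dir` are hypothetical names. Note that this deliberately drops the "any path is allowed" behavior described above.

```python
import os


def resolve_playbook(name, base_dir):
    """Map a playbook name from the request to <base_dir>/<name>.yaml.

    Hypothetical hardening step: raises ValueError if the resolved path
    falls outside base_dir (e.g. name='../x' or an absolute path).
    """
    base = os.path.realpath(base_dir)
    path = os.path.realpath(os.path.join(base, name + ".yaml"))
    # startswith(base + sep) works on Python 2 and 3 (commonpath is 3.4+ only)
    if not path.startswith(base + os.sep):
        raise ValueError("playbook outside of %s: %r" % (base, name))
    return path
```

In the view, `play = resolve_playbook(task, '/root/ansible')` could replace `play = task + '.yaml'`, with a 400 response when `ValueError` is raised.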

# -*- coding: utf-8 -*-
from flask import Blueprint, request, render_template, redirect, abort
from apps.user.models import User
from ext import db
import json

import ansible.runner
from ansible.inventory import Inventory
from ansible.playbook import PlayBook
from ansible import callbacks

ansibleAPI_bp = Blueprint('ansibleAPI', __name__)


@ansibleAPI_bp.route('/', methods=['GET', 'POST'])
def index():
    return 'ok'


hostfile = '/root/ansible/hosts'


@ansibleAPI_bp.route('/API/Ansible/playbook', methods=['GET', 'POST'])
def Playbook():
    """
    http://127.0.0.1:5000/API/Ansible/playbook?ip=2.2.2.2&playbook=test
    :return:
    """
    print(request.args.get('playbook'))
    hosts = request.args.get('ip')
    task = request.args.get('playbook')
    if hosts is None or task is None:
        abort(400)  # both parameters are required; otherwise task + '.yaml' raises TypeError
    extra_vars = {'hosts': hosts}  # fills "{{ hosts }}" in the playbook
    inventory = Inventory(hostfile)
    stats = callbacks.AggregateStats()
    playbook_cb = callbacks.PlaybookCallbacks()
    runner_cb = callbacks.PlaybookRunnerCallbacks(stats)
    play = task + '.yaml'  # playbook name from the request plus the .yaml suffix
    results = PlayBook(playbook=play, callbacks=playbook_cb, runner_callbacks=runner_cb, stats=stats,
                       inventory=inventory, extra_vars=extra_vars)
    res = results.run()
    return json.dumps(res, indent=4)


@ansibleAPI_bp.route('/API/Ansible/runner', methods=['GET', 'POST'])
def Runner():
    """
    The parameters are read from the query string (request.args), e.g.:
    curl "http://127.0.0.1:5000/API/Ansible/runner?ip=1.1.1.1&module=shell&args=ls%20-l"
    :return:
    """
    print(request.args)
    hosts = request.args.get('ip', None)
    module = request.args.get('module', None)
    args = request.args.get('args', None)
    if hosts is None or module is None or args is None:
        abort(400)
    runner = ansible.runner.Runner(module_name=module, module_args=args, pattern=hosts, forks=10, host_list=hostfile)
    tasks = runner.run()
    cpis = {}   # host -> stdout, for hosts where the command succeeded
    cpis1 = {}  # host -> error message, for unreachable hosts
    for (hostname, result) in tasks['contacted'].items():
        if 'failed' not in result:
            cpis[hostname] = result['stdout']
    for (hostname, result) in tasks['dark'].items():
        cpis1[hostname] = result['msg']
    return render_template('ansibleApi/cpis.html', cpis=cpis, cpis1=cpis1)

Below is the front-end template cpis.html:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<title>魔降风云变</title>

</head>

<body>
<h1>################ Succeeded hosts #######################</h1>
{% for k,v in cpis.items() %}
=========== Result returned by host {{ k }}: ================<br>
{{ v }}<br>
{% endfor %}
<h1>################## Failed hosts #####################</h1>
{% for a,b in cpis1.items() %}
=========== Result returned by host {{ a }}: ================<br>
{{ b }}<br>
{% endfor %}

</body>

</html>

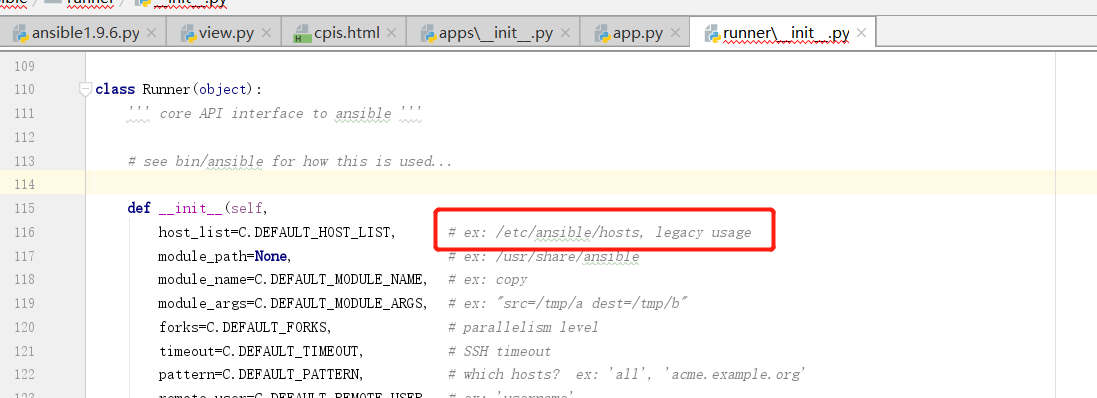

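Ansible 1.9 API: copy it and use it, without affecting the system's existing environment

This relies on sys.path.append(".."). For background, see: https://blog.csdn.net/universe_R/article/details/123370672

A problem I ran into: even after appending our path to sys.path, the module still could not be found.

Printing sys.path showed the default search paths. Searching through them, I found an Ansible 2.9 package that someone else had installed. Because that path comes earlier in the search order, Python picked up 2.9 (which no longer has the ansible.runner module) instead of our package. The fix is to insert our 1.9 package path before the first element of the list.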
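The shadowing problem and the `insert` fix can be reproduced with two throwaway modules of the same name. In this sketch, `demo_pkg` and the two temporary directories stand in for the two Ansible installs; nothing here touches Ansible itself (Python 3, because of `importlib.invalidate_caches`).

```python
import importlib
import os
import sys
import tempfile


def make_pkg_dir(version):
    """Create a temp directory holding a module demo_pkg with a VERSION constant."""
    d = tempfile.mkdtemp()
    with open(os.path.join(d, "demo_pkg.py"), "w") as f:
        f.write("VERSION = %r\n" % version)
    return d


old_dir = make_pkg_dir("2.9")  # stands in for the Ansible 2.9 someone else installed
new_dir = make_pkg_dir("1.9")  # stands in for our copied Ansible 1.9

sys.path.insert(0, old_dir)    # simulate 2.9 sitting early in the default search path
sys.path.append(new_dir)       # append: our copy is searched LAST, so it is shadowed
importlib.invalidate_caches()
first_version = importlib.import_module("demo_pkg").VERSION
print(first_version)           # 2.9

sys.path.insert(0, new_dir)    # insert at index 0: our copy is searched FIRST
del sys.modules["demo_pkg"]    # forget the module object that was already imported
importlib.invalidate_caches()
second_version = importlib.import_module("demo_pkg").VERSION
print(second_version)          # 1.9
```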

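Testing shows that when a program runs, its sys.path contains the directory of the script being run plus whatever paths we insert or append. I made my program sleep so it kept running, and meanwhile printed sys.path from another process every two seconds. The paths added by the running program never appeared there. In other words, Python has common default package search paths, and paths a program adds at runtime are temporary: they apply only to that process and have no effect on other Python programs. Using sys.path.append or sys.path.insert is therefore safe. Raising the priority of a path in the current program cannot affect any other Python program, because the added path applies only to the current process.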
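The same experiment can be scripted: change `sys.path` in one process, then ask a freshly started Python process whether it sees the change. Here is a minimal sketch (Python 3; the path is the one used in this article and does not need to exist):

```python
import subprocess
import sys

extra = "/root/untitled6/Lib/site-packages"  # path used in this article; it need not exist
sys.path.append(extra)
in_parent = extra in sys.path
print(in_parent)  # True

# Start a second, independent Python process and ask whether it sees the path
child = subprocess.run(
    [sys.executable, "-c", "import sys; print(%r in sys.path)" % extra],
    stdout=subprocess.PIPE, universal_newlines=True, check=True,
)
in_child = child.stdout.strip() == "True"
print(in_child)  # False, unless PYTHONPATH already contains the path
```

The child does inherit environment variables. Setting `PYTHONPATH` before launching it is therefore a different mechanism, and that one would carry the path over.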

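```python
from __future__ import print_function  # so print(a, b) prints "a b" on Python 2, not a tuple
import sys

# Make the project's bundled Ansible 1.9 importable. If another Ansible version is
# installed earlier on the search path, use sys.path.insert(0, ...) instead.
sys.path.append("/root/untitled6/Lib/site-packages")

import ansible.runner

runner = ansible.runner.Runner(module_name='shell', host_list="/root/hosts",
                               module_args='hostname', pattern='all', forks=10)
result = runner.run()
print(result)
print("##############################")

# Hosts that were reached: print each command's stdout.
for host, ret in result['contacted'].items():
    print(host, ret['stdout'])

# Unreachable hosts: print the connection error.
for host, ret in result['dark'].items():
    print(host, ret['msg'])
```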

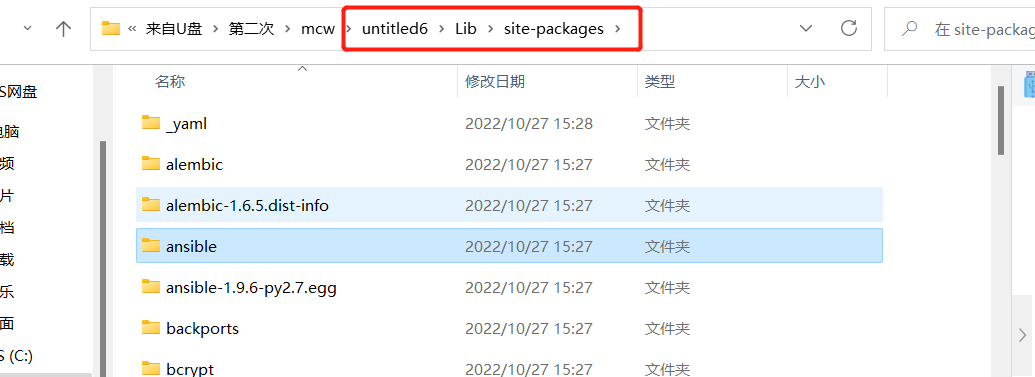

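First, the environment in PyCharm. untitled6 is a project created with PyCharm on Windows and has its own virtual environment. Installing the ansible package from PyCharm failed, although ansible's dependencies were installed. So we installed ansible 1.9 from source on a CentOS 7 VM and copied the ansible package from that machine's Python 2.7 package directory into the package directory of untitled6's virtual environment on the laptop. That gives us the ansible package shown in the screenshot.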

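Next, the Linux servers we want to operate on: three freshly installed VMs, none of which has ansible. Beforehand I copied host 1's public key to hosts 2 and 3 and made the first password-less login to each (I was not yet sure whether password-less login was required). The goal is to copy the project above onto a Linux server and use it there.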

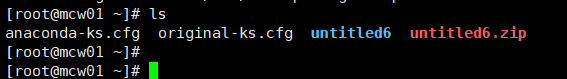

Extract the project archive.

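Because the Python interpreter in the project's virtual environment is a Windows build, we have to use the Python interpreter already installed on the Linux machine. A possible later improvement is to give the virtual environment a Linux Python interpreter so the project no longer depends on anything outside itself.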

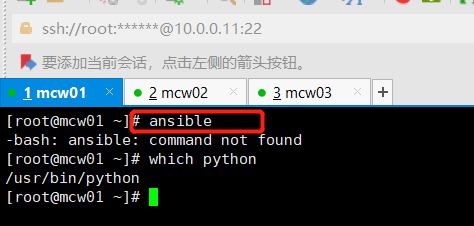

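Here we use the system's own Python 2.7 to run a simple program that calls the ansible API, and importing the module fails. Since the interpreter cannot find the package, we tell it where else to look before the import runs.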

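So we append the project's package directory to the list of places this Python 2.7 searches for packages, which lets the interpreter find the project's packages. The import now succeeds, but there is no inventory file yet, so we create one.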
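The inventory is a plain text file in Ansible's INI format: one host per line, optionally under a `[group]` header; the scripts here point `host_list` at `/root/hosts`. A small sketch of generating it from Python follows. The helper `write_inventory`, the group name and the IP addresses are hypothetical, and the sketch writes to a temporary directory instead of `/root/hosts`.

```python
import os
import tempfile


def write_inventory(path, hosts, group=None):
    """Write a minimal INI-style Ansible inventory file:
    an optional [group] header followed by one host per line."""
    lines = ["[%s]" % group] if group else []
    lines.extend(hosts)
    with open(path, "w") as f:
        f.write("\n".join(lines) + "\n")
    return path


# Usage: the IPs and the group name are placeholders for your own hosts.
inv = write_inventory(os.path.join(tempfile.mkdtemp(), "hosts"),
                      ["192.168.56.11", "192.168.56.12", "192.168.56.13"],
                      group="lab")
with open(inv) as f:
    content = f.read()
print(content)
```

With `pattern='all'`, the Runner targets every host in the file regardless of group; passing a group name as `pattern` would restrict it to that group.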

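Reading the Runner source shows that this parameter, host_list, expects the path of an inventory file. There is another parameter further down, but its comment says it takes an inventory object, whereas we want to pass a file.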

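Now we get results from each host. Host 1 has not been set up for password-less SSH to itself, so the command cannot run there; in this respect the API behaves exactly like the ansible command-line tool, which also needs key-based login. Note that we never installed ansible on this Linux machine: we only used the Python interpreter already there and extended its search path so it can find the ansible package in the project's virtual environment, which is enough to run ansible tasks through the API. So a yum-installed ansible of any version on the system does not interfere with our API calls, provided our package path comes before it in sys.path (use sys.path.insert(0, ...) rather than append, as described above).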

Other notes on using ansible

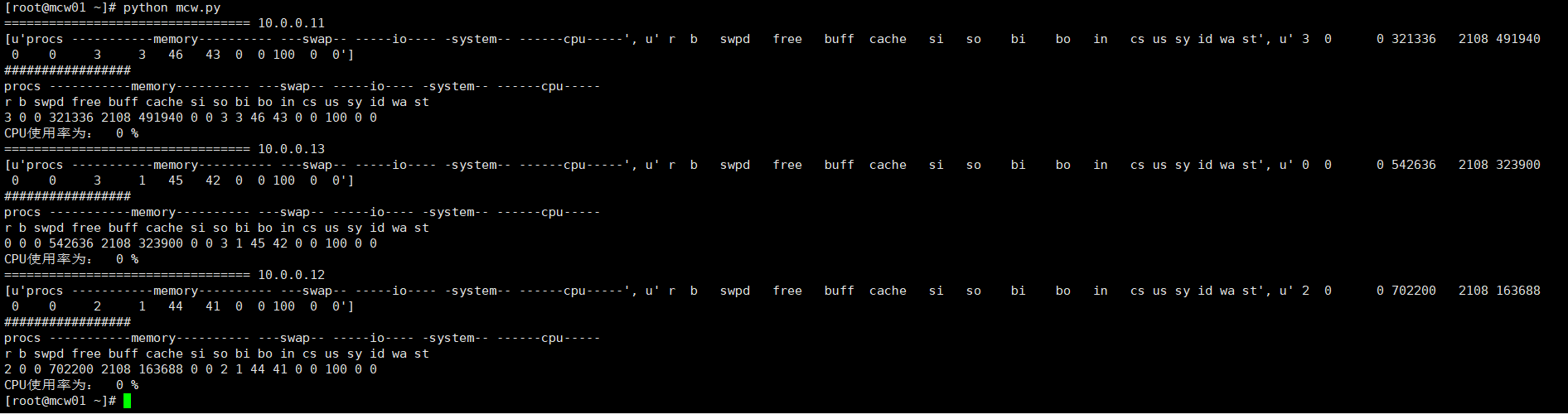

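Keeping the original line breaks in command output

Show a command's output across multiple lines, the way a terminal does, instead of as a single-line string.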
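A minimal sketch, assuming the output is shown in an HTML page such as cpis.html: HTML collapses newlines, so each line is escaped and joined with `<br>`. The helper name `stdout_to_html` is hypothetical. Flask autoescapes `.html` templates, so the returned string would have to go through Jinja2's `safe` filter (or be wrapped in `markupsafe.Markup`). A simpler alternative is to leave the text alone and wrap `{{ v }}` in a `<pre>` element, which preserves newlines. On the console, printing `ret['stdout']` on its own (rather than the whole result dict) already keeps the line breaks.

```python
import html


def stdout_to_html(stdout):
    """Escape each line of command output and join the lines with <br>,
    so a browser shows the same line breaks a terminal would."""
    return "<br>\n".join(html.escape(line) for line in stdout.splitlines())


# Made-up `ls -l` style output; the "<" shows that HTML-special characters are escaped.
sample = "total 8\n-rw-r--r-- 1 root root 12 a<b.txt"
rendered = stdout_to_html(sample)
print(rendered)
```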

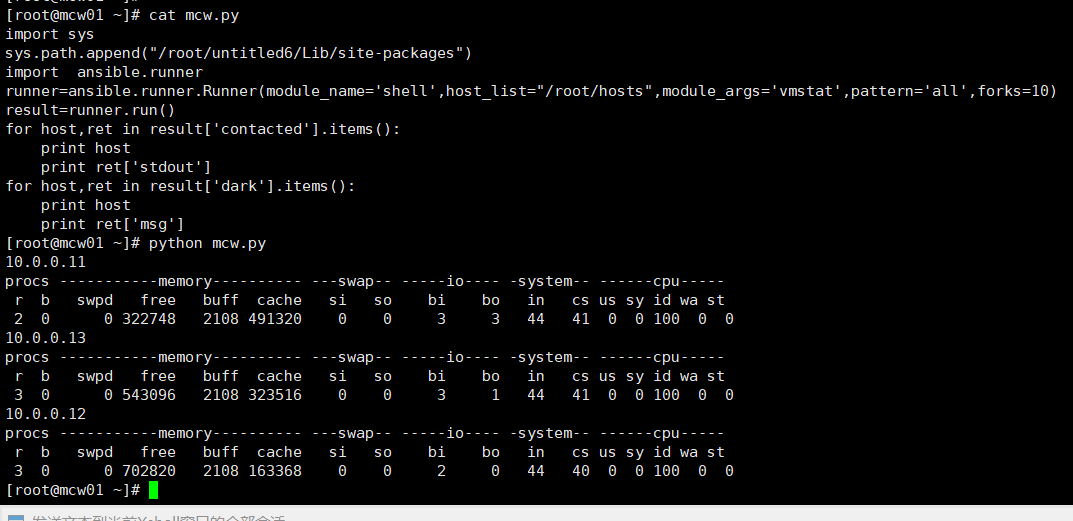

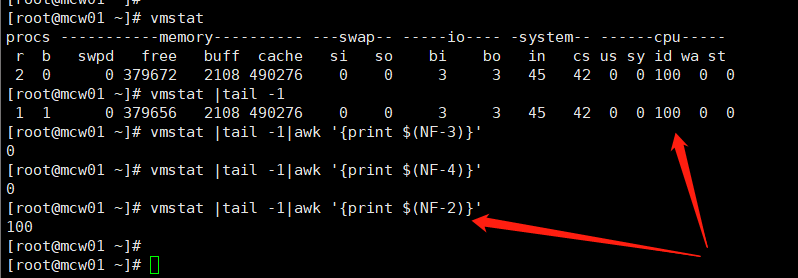

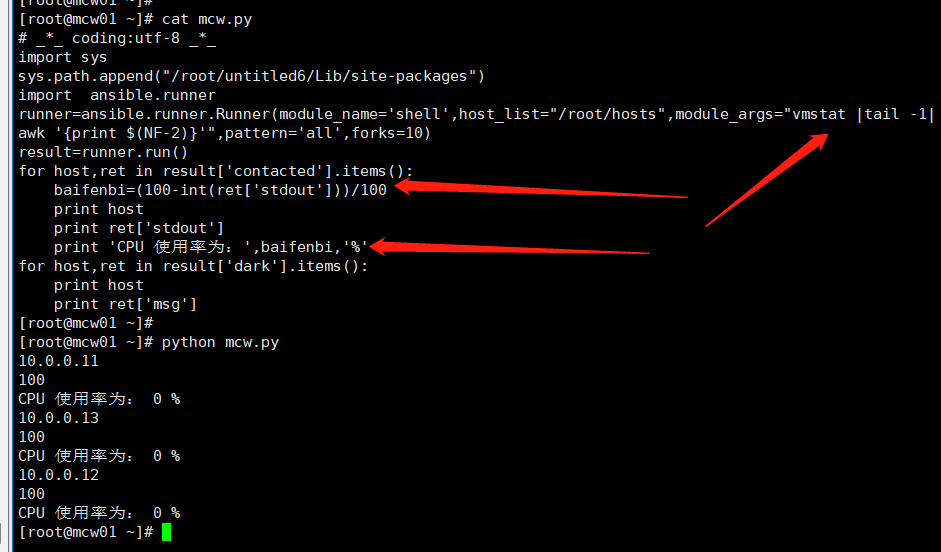

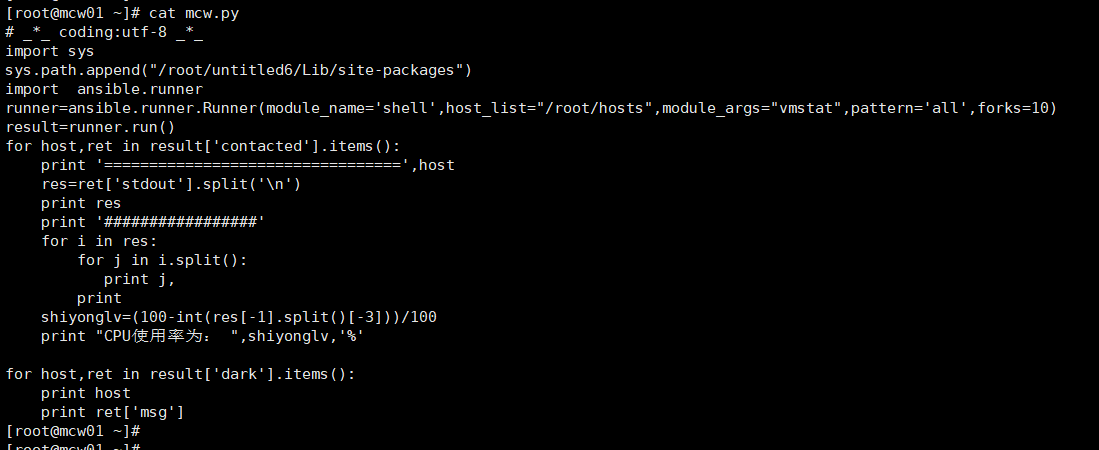

Using the API to pull specific rows and columns out of command output, for example CPU usage from vmstat

Extract the value in the shell command itself

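Take the required field from the command output and process it: CPU usage in percent is 100 minus vmstat's idle percentage (the id column). Divide by 100 only if you want a fraction rather than a percentage.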
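The arithmetic can be sketched as a small pure function. `cpu_usage_from_vmstat` is a hypothetical helper and `SAMPLE` is made-up vmstat output. Instead of a fixed index such as `[-3]`, this sketch looks the `id` column up by name in the header row, which keeps working if a vmstat version adds or drops columns.

```python
# Made-up output of `vmstat 1 2`, for illustration only.
SAMPLE = """\
procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----
 r  b   swpd   free   buff  cache   si   so    bi    bo   in   cs us sy id wa st
 1  0      0 812345   2100 456000    0    0     5     3   40   60  2  1 97  0  0
 0  0      0 812300   2100 456000    0    0     0     0   55   80  4  2 94  0  0
"""


def cpu_usage_from_vmstat(output):
    """Return CPU usage in percent (100 - id) from the last data row of vmstat output."""
    lines = [l for l in output.splitlines() if l.strip()]
    header = lines[1].split()  # second line holds the column names
    idle = int(lines[-1].split()[header.index("id")])
    return 100 - idle


usage = cpu_usage_from_vmstat(SAMPLE)
print("CPU usage:", usage, "%")
```

For the sample, the last row has `id` = 94, so the usage is 6 %.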

API, approach 2 for CPU usage: take a specific row and column from the shell output

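```python
# _*_ coding:utf-8 _*_
import sys
sys.path.append("/root/untitled6/Lib/site-packages")

import ansible.runner

# "vmstat 1 2" takes two samples one second apart. vmstat's first report is an
# average since boot, so the last line is the one that reflects current load.
runner = ansible.runner.Runner(module_name='shell', host_list="/root/hosts",
                               module_args="vmstat 1 2", pattern='all', forks=10)
result = runner.run()

for host, ret in result['contacted'].items():
    print '=================================', host
    res = ret['stdout'].split('\n')   # one list element per output line
    print res
    print '#################'
    for i in res:
        for j in i.split():
            print j,                   # trailing comma keeps the row on one line
        print                          # end the row
    # On a vmstat whose columns end with "id wa st", the 3rd-from-last field is "id" (idle %).
    shiyonglv = 100 - int(res[-1].split()[-3])   # CPU usage in percent
    print "CPU usage:", shiyonglv, '%'

for host, ret in result['dark'].items():
    print host
    print ret['msg']
```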

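Packing the returned data into objects or dictionaries by row and column

If we need several values from the output, we can store them in an object or in a dictionary, which means defining a class or a dict respectively.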
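A sketch of both options, assuming Python 3.7+ for `dataclasses` (the Ansible 1.9 scripts themselves run on Python 2, where a plain class or `collections.namedtuple` plays the same role). `vmstat_rows`, `CpuSample` and `to_cpu_samples` are hypothetical names, and `SAMPLE` is made-up vmstat output. Each data row becomes a dict keyed by the header's column names; the CPU columns are then copied into a small class.

```python
from dataclasses import dataclass

# Made-up output of `vmstat 1 2`, for illustration only.
SAMPLE = """\
procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----
 r  b   swpd   free   buff  cache   si   so    bi    bo   in   cs us sy id wa st
 1  0      0 812345   2100 456000    0    0     5     3   40   60  2  1 97  0  0
 0  0      0 812300   2100 456000    0    0     0     0   55   80  4  2 94  0  0
"""


def vmstat_rows(output):
    """Turn vmstat output into one dict per data row, keyed by column name."""
    lines = [l for l in output.splitlines() if l.strip()]
    header = lines[1].split()
    return [dict(zip(header, map(int, l.split()))) for l in lines[2:]]


@dataclass
class CpuSample:
    """The CPU columns of one vmstat row."""
    us: int    # user time %
    sy: int    # system time %
    idle: int  # vmstat "id" column
    wa: int    # I/O wait %


def to_cpu_samples(rows):
    return [CpuSample(us=r["us"], sy=r["sy"], idle=r["id"], wa=r["wa"]) for r in rows]


rows = vmstat_rows(SAMPLE)
samples = to_cpu_samples(rows)
print(rows[-1]["id"], samples[-1])
```

With the Runner result, the same helpers can be applied per host, e.g. `{host: vmstat_rows(ret['stdout']) for host, ret in result['contacted'].items()}`.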
