007 Manually Deploying a Single-Node Ceph Cluster

The previous post covered automated Ceph deployment; this one walks through deploying a Ceph node by hand.

1. Environment Preparation

A single virtual machine hosts the single-node Ceph cluster:

IP: 172.25.250.14

OS: Red Hat Enterprise Linux Server release 7.4 (Maipo)

Disks: /dev/vdb, /dev/vdc, /dev/vdd

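2. Deploying the Monitor (mon)

2.1 Install the Ceph packages

[root@ceph5 ~]# yum install -y ceph-common ceph-mon ceph-mgr ceph-mds ceph-osd ceph-radosgw

2.2 Prepare the mon node

Log in to ceph5 and check that the /etc/ceph directory has been created (at this point it only contains rbdmap):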

[root@ceph5 ceph]# ll

-rw-r--r-- root root Nov rbdmap

[root@ceph6 ~]# ll /etc/ceph/

-rw-r--r-- root root Nov rbdmap

Run uuidgen to obtain a unique identifier to use as the cluster ID (fsid):

[root@ceph5 ceph]# uuidgen

82bf91ae-6d5e-4c09--3d9e5992e6ef

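Create the Ceph configuration file. Because the cluster is named backup, the file is /etc/ceph/backup.conf, and fsid must be set to the UUID generated above: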

[root@ceph5 ceph]# vim /etc/ceph/backup.conf

[global]

fsid = 51dda18c-7545-4edb-8ba9-27330ead81a7

mon_initial_members = ceph5

mon_host = 172.25.250.14

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public_network = 172.25.250.0/

cluster_network = 172.25.250.0/

[mgr]

mgr modules = dashboard

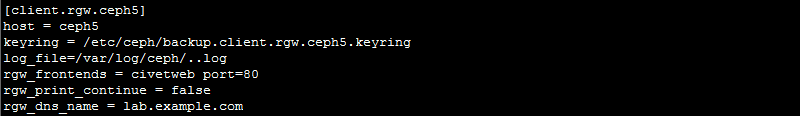

[client.rgw.ceph5]

host = ceph5

keyring = /etc/ceph/backup.client.rgw.ceph5.keyring

log_file=/var/log/ceph/$cluster.$name.log

rgw_frontends = civetweb port=

rgw_print_continue = false

rgw_dns_name = lab.example.com

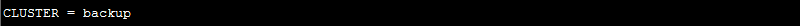
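When this step is scripted, the [global] options can be written without an editor. A minimal sketch under stated assumptions: `render_ceph_conf` is a hypothetical helper, it only covers the [global] options listed above (the [mgr] and [client.rgw.ceph5] sections would be appended separately), and the network is passed in full CIDR form because the prefix length is not legible in the listing above.

```shell
# Hypothetical helper: print the [global] section of a single-node cluster config.
# Arguments: fsid, monitor host name, monitor IP, network in CIDR form.
render_ceph_conf() {
  local fsid=$1 mon_name=$2 mon_ip=$3 network=$4
  cat <<EOF
[global]
fsid = ${fsid}
mon_initial_members = ${mon_name}
mon_host = ${mon_ip}
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
public_network = ${network}
cluster_network = ${network}
EOF
}
```

Usage: `uuid=$(uuidgen); render_ceph_conf "$uuid" ceph5 172.25.250.14 <network/prefix> > /etc/ceph/backup.conf`. Keeping the UUID in a variable matters because the same fsid is passed to monmaptool later.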

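Set the cluster name to backup. The systemd units for the Ceph daemons read the cluster name from /etc/sysconfig/ceph, so add the following line to it:

[root@ceph5 ceph]# vim /etc/sysconfig/ceph

CLUSTER=backup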
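If this step is scripted, appending the line with `echo ... >>` adds a duplicate on every run. A minimal idempotent sketch, assuming GNU sed (as shipped with RHEL 7); `set_cluster_name` is a hypothetical name:

```shell
# Hypothetical helper: set CLUSTER=<name> in a sysconfig-style file.
# An existing (uncommented) CLUSTER line is rewritten; otherwise one is appended.
set_cluster_name() {
  local file=$1 name=$2
  if grep -q '^[[:space:]]*CLUSTER[[:space:]]*=' "$file" 2>/dev/null; then
    sed -i "s/^[[:space:]]*CLUSTER[[:space:]]*=.*/CLUSTER=${name}/" "$file"
  else
    echo "CLUSTER=${name}" >> "$file"
  fi
}
```

Usage: `set_cluster_name /etc/sysconfig/ceph backup`; running it again leaves a single CLUSTER line.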

2.3 Create keys for the monitor

Create the key used by the monitor:

[root@ceph5 ceph]# ceph-authtool --create-keyring /tmp/backup.mon.keyring --gen-key -n mon. --cap mon 'allow *'

Create the client.admin key used to administer the cluster and grant it full capabilities:

[root@ceph5 ceph]# ceph-authtool --create-keyring /etc/ceph/backup.client.admin.keyring --gen-key -n client.admin --set-uid=0 --cap mon 'allow *' --cap osd 'allow *' --cap mgr 'allow *' --cap mds 'allow *'

Import the client.admin key into /tmp/backup.mon.keyring:

[root@ceph5 ceph]# ceph-authtool /tmp/backup.mon.keyring --import-keyring /etc/ceph/backup.client.admin.keyring

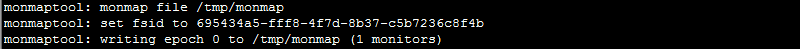

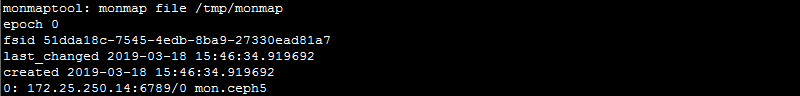
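Before building the monmap it is worth confirming that both entities ended up in the monitor keyring; `ceph-authtool -l /tmp/backup.mon.keyring` lists its contents. A scriptable check, as a sketch: `keyring_has_entity` is hypothetical and assumes the usual keyring layout in which each entity starts with a `[name]` header line.

```shell
# Hypothetical check: succeed if the keyring file has a section header for the entity.
keyring_has_entity() {
  local keyring=$1 entity=$2
  grep -Fqx "[${entity}]" "$keyring"
}
```

Usage: `keyring_has_entity /tmp/backup.mon.keyring client.admin && echo ok`.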

2.4 Create the monitor map

[root@ceph5 ~]# monmaptool --create --add ceph5 172.25.250.14 --fsid 51dda18c-7545-4edb-8ba9-27330ead81a7 /tmp/monmap

[root@ceph5 ceph]# file /tmp/monmap

[root@ceph5 ceph]# monmaptool --print /tmp/monmap

Create the monitor's data directory (named <cluster>-<mon id>):

[root@ceph5 ~]# mkdir -p /var/lib/ceph/mon/backup-ceph5

Give the ceph user ownership of the files the monitor needs:

[root@ceph5 ~]# chown ceph.ceph -R /var/lib/ceph /etc/ceph /tmp/backup.mon.keyring /tmp/monmap

2.5 Initialize the monitor

[root@ceph5 ~]# sudo -u ceph ceph-mon --cluster backup --mkfs -i ceph5 --monmap /tmp/monmap --keyring /tmp/backup.mon.keyring

2.6 Start the monitor

[root@ceph5 ~]# systemctl start ceph-mon@ceph5

[root@ceph5 ~]# systemctl enable ceph-mon@ceph5

Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph5.service to /usr/lib/systemd/system/ceph-mon@.service.

[root@ceph5 ~]# systemctl status ceph-mon@ceph5

ceph-mon@ceph5.service - Ceph cluster monitor daemon

Loaded: loaded (/usr/lib/systemd/system/ceph-mon@.service; enabled; vendor preset: disabled)

Active: active (running) since Mon -- :: CST; 2min 21s ago

Main PID: (ceph-mon)

CGroup: /system.slice/system-ceph\x2dmon.slice/ceph-mon@ceph5.service

└─ /usr/bin/ceph-mon -f --cluster backup --id ceph5 --setuser ceph --setgroup ceph

Mar :: ceph5 systemd[]: Started Ceph cluster monitor daemon.

Mar :: ceph5 systemd[]: Starting Ceph cluster monitor daemon...

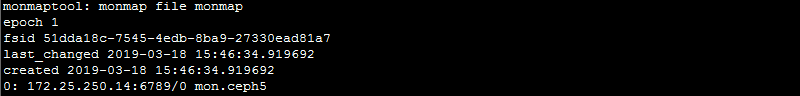
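Right after `systemctl start`, the monitor can take a few seconds before commands such as `ceph -s` answer. A small retry helper is one way to wait for it in a script; `retry` is a hypothetical POSIX shell function, not part of the Ceph tooling.

```shell
# Hypothetical helper: run a command up to <attempts> times, sleeping <delay>
# seconds between attempts (not after the last one).
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
  local attempts=$1 delay=$2 i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

Usage: `retry 10 3 ceph mon stat --cluster backup`. Note that the ceph CLI may itself block for a while when no monitor answers, so each attempt can take longer than the delay.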

2.7 Verify the monitor (fetch the monmap and the mon. key from the running cluster)

[root@ceph5 ~]# ceph mon getmap -o monmap --cluster backup

[root@ceph5 ~]# monmaptool --print monmap

[root@ceph5 ~]# ceph auth get mon. --cluster backup

exported keyring for mon.

[mon.]

key = AQDLTI9cCRjlLRAASL2nSyFnRX9uHSxbGXTycQ==

caps mon = "allow *"

3. Configuring the MGR

3.1 Create the key

[root@ceph5 ~]# mkdir /var/lib/ceph/mgr/backup-ceph5

[root@ceph5 ~]# chown ceph.ceph -R /var/lib/ceph

[root@ceph5 ~]# ceph-authtool --create-keyring /etc/ceph/backup.mgr.ceph5.keyring --gen-key -n mgr.ceph5 --cap mon 'allow profile mgr' --cap osd 'allow *' --cap mds 'allow *'

[root@ceph5 ~]# ceph auth import -i /etc/ceph/backup.mgr.ceph5.keyring --cluster backup

[root@ceph5 ~]# ceph auth get-or-create mgr.ceph5 -o /var/lib/ceph/mgr/backup-ceph5/keyring --cluster backup

3.2 Start the service

[root@ceph5 ~]# systemctl start ceph-mgr@ceph5

[root@ceph5 ~]# systemctl enable ceph-mgr@ceph5

Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph5.service to /usr/lib/systemd/system/ceph-mgr@.service.

3.3 Check the cluster status

[root@ceph5 ~]# ceph -s --cluster backup

cluster:

id: 51dda18c-7545-4edb-8ba9-27330ead81a7

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph5

mgr: ceph5(active, starting)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 bytes

usage: 0 kB used, 0 kB / 0 kB avail

pgs:

3.4 Enable the MGR dashboard

[root@ceph5 ~]# ceph mgr dump --conf /etc/ceph/backup.conf

{

"epoch": ,

"active_gid": ,

"active_name": "ceph5",

"active_addr": "-",

"available": false,

"standbys": [],

"modules": [

"restful",

"status"

],

"available_modules": [

"dashboard",

"prometheus",

"restful",

"status",

"zabbix"

]

}

[root@ceph5 ~]# ceph mgr module enable dashboard --conf /etc/ceph/backup.conf

[root@ceph5 ~]# ceph mgr module ls --conf /etc/ceph/backup.conf

[

"dashboard",

"restful",

"status"

]

[root@ceph5 ~]# ceph config-key put mgr/dashboard/server_addr 172.25.250.14 --conf /etc/ceph/backup.conf

[root@ceph5 ~]# ceph config-key put mgr/dashboard/server_port 7000 --conf /etc/ceph/backup.conf

[root@ceph5 ~]# ceph config-key dump --conf /etc/ceph/backup.conf

{

"mgr/dashboard/server_addr": "172.25.250.14",

"mgr/dashboard/server_port": "7000"

}

Create aliases so that ceph and rbd target the backup cluster without typing --cluster every time:

[root@ceph5 ~]# alias ceph='ceph --cluster backup'

[root@ceph5 ~]# alias rbd='rbd --cluster backup'

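4. Configuring the OSDs

4.1 Add osd.0

Allocate an OSD ID in the cluster (the first call returns 0):

[root@ceph5 ~]# ceph osd create --cluster backup

Generate a UUID for the journal partition:

[root@ceph5 ~]# uuidgen

Create a 5 GB journal partition on /dev/vdb, using that UUID as both the partition GUID and the type code: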

[root@ceph5 ~]# sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:aa25b6e9-384a-4012-b597-f4af96da3d5e --typecode=1:aa25b6e9-384a-4012-b597-f4af96da3d5e --mbrtogpt -- /dev/vdb

[root@ceph5 ~]# uuidgen

Create the data partition from the rest of the disk, using the second UUID:

[root@ceph5 ~]# sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:5aaec435-b7fd-4a50-859f-3d26ed09f185 --typecode=2:5aaec435-b7fd-4a50-859f-3d26ed09f185 --mbrtogpt -- /dev/vdb
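The two sgdisk calls differ only in partition number, name, size and GUID, and the same pair is repeated for /dev/vdc and /dev/vdd below. A dry-run sketch that prints the pair for a given disk so it can be reviewed before running; `print_osd_partition_cmds` is hypothetical, and it mirrors the commands above, including the reuse of each UUID as both partition GUID and type code.

```shell
# Hypothetical dry-run helper: print (do not run) the sgdisk commands that create
# a 5G journal partition (1) and a data partition (2) filling the rest of the disk.
# Arguments: disk device, journal partition UUID, data partition UUID.
print_osd_partition_cmds() {
  local dev=$1 journal_uuid=$2 data_uuid=$3
  echo "sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:${journal_uuid} --typecode=1:${journal_uuid} --mbrtogpt -- ${dev}"
  echo "sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:${data_uuid} --typecode=2:${data_uuid} --mbrtogpt -- ${dev}"
}
```

Usage: `print_osd_partition_cmds /dev/vdc "$(uuidgen)" "$(uuidgen)"`, then run the printed lines (for example by piping them to `bash`) once they look right.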

Format both partitions with XFS:

[root@ceph5 ~]# mkfs.xfs -f -i size=2048 /dev/vdb1

[root@ceph5 ~]# mkfs.xfs -f -i size=2048 /dev/vdb2

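Create the OSD data directories, mount the osd.0 data partition, and add it to /etc/fstab so it is mounted at boot: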

[root@ceph5 ~]# mkdir /var/lib/ceph/osd/backup-{0,1,2}

[root@ceph5 ~]# mount -o noatime,largeio,inode64,swalloc /dev/vdb2 /var/lib/ceph/osd/backup-0

[root@ceph5 ~]# uuid_blk=`blkid /dev/vdb2|awk '{print $2}'`

[root@ceph5 ~]# echo $uuid_blk

[root@ceph5 ~]# echo "$uuid_blk /var/lib/ceph/osd/backup-0 xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> /etc/fstab

[root@ceph5 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Jul ::

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

UUID=716e713d-4e91--81fd-c6cfa1b0974d / xfs defaults

UUID="2fc06d6c-39b1-47f8-b9ab-cf034599cbd1" /var/lib/ceph/osd/backup-0 xfs defaults,noatime,largeio,inode64,swalloc 0 0
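Running the `echo ... >> /etc/fstab` step twice (for example when re-running the script) leaves duplicate entries for the same mount point. A sketch of an append-only-if-missing variant; `add_fstab_entry` is hypothetical and hard-codes the xfs options used in this article.

```shell
# Hypothetical helper: append an fstab line for <mountpoint> unless a non-comment
# line already uses that mount point.
# Arguments: fstab path, source (e.g. UUID="..."), mount point.
add_fstab_entry() {
  local fstab=$1 src=$2 mnt=$3
  if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
    return 0
  fi
  echo "$src $mnt xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> "$fstab"
}
```

Usage: `add_fstab_entry /etc/fstab "$uuid_blk" /var/lib/ceph/osd/backup-0`.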

Create the osd.0 key, import it into the cluster, and write it to the OSD data directory:

[root@ceph5 ~]# ceph-authtool --create-keyring /etc/ceph/backup.osd.0.keyring --gen-key -n osd.0 --cap mon 'allow profile osd' --cap mgr 'allow profile osd' --cap osd 'allow *'

[root@ceph5 ~]# ceph auth import -i /etc/ceph/backup.osd.0.keyring --cluster backup

[root@ceph5 ~]# ceph auth get-or-create osd.0 -o /var/lib/ceph/osd/backup-0/keyring --cluster backup

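Initialize the OSD data directory (as the last log line shows, the object store is created despite the aio and read_settings messages):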

[root@ceph5 ~]# ceph-osd -i 0 --mkfs --cluster backup

-- ::46.788193 7f030624ad00 - journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

-- ::46.886027 7f030624ad00 - journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

-- ::46.886514 7f030624ad00 - read_settings error reading settings: (2) No such file or directory

-- ::46.992516 7f030624ad00 - created object store /var/lib/ceph/osd/backup-0 for osd.0 fsid 51dda18c-7545-4edb-8ba9-27330ead81a7

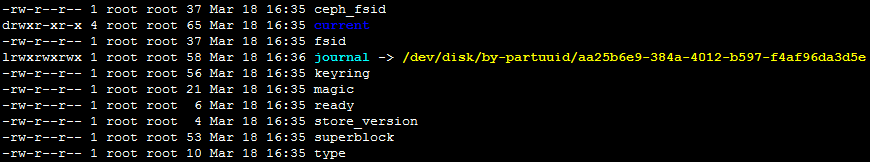

Replace the file-based journal with a symlink to the journal partition, then initialize the journal:

[root@ceph5 ~]# cd /var/lib/ceph/osd/backup-0

[root@ceph5 backup-0]# rm -f journal

[root@ceph5 backup-0]# partuuid_0=`blkid /dev/vdb1|awk -F "[\"\"]" '{print $8}'`

[root@ceph5 backup-0]# echo $partuuid_0

[root@ceph5 backup-0]# ln -s /dev/disk/by-partuuid/$partuuid_0 ./journal

[root@ceph5 backup-0]# ll

[root@ceph5 backup-0]# chown ceph.ceph -R /var/lib/ceph

[root@ceph5 backup-0]# ceph-osd --mkjournal -i 0 --cluster backup

[root@ceph5 backup-0]# chown ceph.ceph /dev/disk/by-partuuid/$partuuid_0
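The `awk -F "[\"\"]" '{print $8}'` extraction depends on PARTUUID being the fourth KEY="value" pair in the blkid output, which presumably holds here only because the journal partition was formatted with XFS first and therefore also reports UUID and TYPE. `blkid -s PARTUUID -o value /dev/vdb1` prints the value directly. If a saved blkid line has to be parsed instead, a position-independent sketch (`blkid_tag` is hypothetical):

```shell
# Hypothetical helper: print the value of TAG="value" from one line of blkid output,
# wherever the tag appears on the line.
# Arguments: tag name (e.g. PARTUUID), one line of blkid output.
blkid_tag() {
  local tag=$1 line=$2
  printf '%s\n' "$line" | sed -n "s/.*[[:space:]]${tag}=\"\([^\"]*\)\".*/\1/p"
}
```

Usage: `partuuid_0=$(blkid_tag PARTUUID "$(blkid /dev/vdb1)")`. Because the pattern requires whitespace before the tag name, asking for UUID does not accidentally match PARTUUID.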

Add the OSD host ceph5 to the CRUSH map as a host bucket:

[root@ceph5 backup-0]# ceph osd crush add-bucket ceph5 host --cluster backup

Move the host bucket under the default root (root=default):

[root@ceph5 backup-0]# ceph osd crush move ceph5 root=default --cluster backup

Add osd.0 under host ceph5 with a weight of 0.015:

[root@ceph5 backup-0]# ceph osd crush add osd.0 0.01500 root=default host=ceph5 --cluster backup

Start the OSD:

[root@ceph5 backup-0]# systemctl start ceph-osd@0

[root@ceph5 backup-0]# systemctl enable ceph-osd@0

Created symlink from /etc/systemd/system/ceph-osd.target.wants/ceph-osd@0.service to /usr/lib/systemd/system/ceph-osd@.service.

4.2 Add osd.1

[root@ceph5 backup-0]# ceph osd create

[root@ceph5 backup-0]# sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:3af9982e---bf3a-771f0e981f00 --typecode=1:3af9982e---bf3a-771f0e981f00 --mbrtogpt -- /dev/vdc

[root@ceph5 backup-0]# sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:3f1d1456-2ebe-4702-96dc-e8ba975a772c --typecode=2:3f1d1456-2ebe-4702-96dc-e8ba975a772c --mbrtogpt -- /dev/vdc

[root@ceph5 backup-0]# mkfs.xfs -f -i size=2048 /dev/vdc1

[root@ceph5 backup-0]# mkfs.xfs -f -i size=2048 /dev/vdc2

[root@ceph5 backup-0]# mount -o noatime,largeio,inode64,swalloc /dev/vdc2 /var/lib/ceph/osd/backup-1

[root@ceph5 backup-0]# blkid /dev/vdc2

/dev/vdc2: UUID="e0a2ccd2-549d-474f-8d36-fd1e3f20a4f8" TYPE="xfs" PARTLABEL="ceph data" PARTUUID="3f1d1456-2ebe-4702-96dc-e8ba975a772c"

[root@ceph5 backup-0]# uuid_blk_1=`blkid /dev/vdc2|awk '{print $2}'`

[root@ceph5 backup-0]# echo "$uuid_blk_1 /var/lib/ceph/osd/backup-1 xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> /etc/fstab

[root@ceph5 backup-0]# ceph-osd -i 1 --mkfs --mkkey --cluster backup

-- ::46.680469 7f4156f0ed00 - asok(0x5609ed1701c0) AdminSocketConfigObs::init: failed: AdminSocket::bind_and_listen: failed to bind the UNIX domain socket to '/var/run/ceph/backup-osd.1.asok': (17) File exists

[root@ceph5 backup-0]# cd /var/lib/ceph/osd/backup-1

[root@ceph5 backup-1]# rm -f journal

[root@ceph5 backup-1]# partuuid_1=`blkid /dev/vdc1|awk -F "[\"\"]" '{print $8}'`

[root@ceph5 backup-1]# ln -s /dev/disk/by-partuuid/$partuuid_1 ./journal

[root@ceph5 backup-1]# chown ceph.ceph -R /var/lib/ceph

[root@ceph5 backup-1]# ceph-osd --mkjournal -i 1 --cluster backup

[root@ceph5 backup-1]# chown ceph.ceph /dev/disk/by-partuuid/$partuuid_1

[root@ceph5 backup-1]# ceph auth add osd.1 osd 'allow *' mon 'allow profile osd' mgr 'allow profile osd' -i /var/lib/ceph/osd/backup-1/keyring --cluster backup

[root@ceph5 backup-1]# ceph osd crush add osd.1 0.01500 root=default host=ceph5 --cluster backup

[root@ceph5 backup-1]# systemctl start ceph-osd@1

[root@ceph5 backup-1]# systemctl enable ceph-osd@1

4.3 Add osd.2

[root@ceph5 ~]# ceph osd create

[root@ceph5 backup-1]# sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:4e7b40e7-f303--8b57-e024d4987892 --typecode=1:4e7b40e7-f303--8b57-e024d4987892 --mbrtogpt -- /dev/vdd

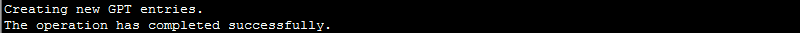

Creating new GPT entries.

The operation has completed successfully.

[root@ceph5 backup-1]# sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:c82ce8a4-a762-4ba9-b987-21ef51df2eb2 --typecode=2:c82ce8a4-a762-4ba9-b987-21ef51df2eb2 --mbrtogpt -- /dev/vdd

The operation has completed successfully.

[root@ceph5 backup-1]# mkfs.xfs -f -i size=2048 /dev/vdd1

[root@ceph5 backup-1]# mkfs.xfs -f -i size=2048 /dev/vdd2

[root@ceph5 backup-1]# mount -o noatime,largeio,inode64,swalloc /dev/vdd2 /var/lib/ceph/osd/backup-2

[root@ceph5 backup-1]# blkid /dev/vdd2

/dev/vdd2: UUID="8458389b-c453-4c1f-b918-5ebde3a3b7b9" TYPE="xfs" PARTLABEL="ceph data" PARTUUID="c82ce8a4-a762-4ba9-b987-21ef51df2eb2"

[root@ceph5 backup-1]# uuid_blk_2=`blkid /dev/vdd2|awk '{print $2}'`

[root@ceph5 backup-1]# echo "$uuid_blk_2 /var/lib/ceph/osd/backup-2 xfs defaults,noatime,largeio,inode64,swalloc 0 0">> /etc/fstab

[root@ceph5 backup-1]# ceph-osd -i 2 --mkfs --mkkey --cluster backup

[root@ceph5 backup-1]# cd /var/lib/ceph/osd/backup-2

[root@ceph5 backup-2]# rm -f journal

[root@ceph5 backup-2]# partuuid_2=`blkid /dev/vdd1|awk -F "[\"\"]" '{print $8}'`

[root@ceph5 backup-2]# ln -s /dev/disk/by-partuuid/$partuuid_2 ./journal

[root@ceph5 backup-2]# chown ceph.ceph -R /var/lib/ceph

[root@ceph5 backup-2]# ceph-osd --mkjournal -i 2 --cluster backup

-- ::43.123486 7efe77573d00 - journal read_header error decoding journal header

[root@ceph5 backup-2]# chown ceph.ceph /dev/disk/by-partuuid/$partuuid_2

[root@ceph5 backup-2]# ceph auth add osd.2 osd 'allow *' mon 'allow profile osd' mgr 'allow profile osd' -i /var/lib/ceph/osd/backup-2/keyring --cluster backup

added key for osd.2

[root@ceph5 backup-2]# ceph osd crush add osd.2 0.01500 root=default host=ceph5 --cluster backup

add item id 2 name 'osd.2' weight 0.015 at location {host=ceph5,root=default} to crush map

[root@ceph5 backup-2]# systemctl start ceph-osd@2

[root@ceph5 backup-2]# systemctl enable ceph-osd@2
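Sections 4.2 and 4.3 repeat one sequence in which only the OSD id, the disk and the mount point change. A dry-run generator can print that sequence for review. The sketch below is hypothetical (`print_osd_setup`): it assumes the id was already obtained with `ceph osd create`, uses `blkid -s ... -o value` instead of the awk field positions, generates fresh partition UUIDs at run time, and keeps the weight 0.01500 and host ceph5 used above.

```shell
# Hypothetical dry-run generator: print the 4.2/4.3 command sequence for one OSD.
# Arguments: OSD id (from "ceph osd create"), disk device, optional host (default ceph5).
# Nothing is executed; "\$" keeps run-time expansions in the printed script.
print_osd_setup() {
  local id=$1 dev=$2 host=${3:-ceph5}
  local dir=/var/lib/ceph/osd/backup-$id
  local opts=noatime,largeio,inode64,swalloc
  cat <<EOF
ju=\$(uuidgen); du=\$(uuidgen)
sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:\$ju --typecode=1:\$ju --mbrtogpt -- $dev
sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:\$du --typecode=2:\$du --mbrtogpt -- $dev
mkfs.xfs -f -i size=2048 ${dev}1
mkfs.xfs -f -i size=2048 ${dev}2
mkdir -p $dir
mount -o $opts ${dev}2 $dir
echo "UUID=\$(blkid -s UUID -o value ${dev}2) $dir xfs defaults,$opts 0 0" >> /etc/fstab
ceph-osd -i $id --mkfs --mkkey --cluster backup
rm -f $dir/journal
ln -s /dev/disk/by-partuuid/\$(blkid -s PARTUUID -o value ${dev}1) $dir/journal
chown -R ceph:ceph /var/lib/ceph
ceph-osd --mkjournal -i $id --cluster backup
chown ceph:ceph /dev/disk/by-partuuid/\$(blkid -s PARTUUID -o value ${dev}1)
ceph auth add osd.$id osd 'allow *' mon 'allow profile osd' mgr 'allow profile osd' -i $dir/keyring --cluster backup
ceph osd crush add osd.$id 0.01500 root=default host=$host --cluster backup
systemctl start ceph-osd@$id
systemctl enable ceph-osd@$id
EOF
}
```

Usage: run `ceph osd create --cluster backup` to get the next id, then `print_osd_setup 1 /dev/vdc > osd1.sh`, inspect osd1.sh, and run it with `bash osd1.sh`. Printing instead of executing keeps the destructive sgdisk and mkfs steps under manual review.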

5. Verification

5.1 Health check

[root@ceph5 backup-2]# ceph -s

cluster:

id: 51dda18c-7545-4edb-8ba9-27330ead81a7

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph5

mgr: ceph5(active)

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 bytes

usage: MB used, MB / MB avail

pgs:

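Ceph's default CRUSH rule uses host as the failure domain, so each replica must be placed on a different host. With three replicas and only one host, the PGs of any newly created pool cannot be placed: the cluster goes to HEALTH_WARN and the PGs never become active (they stay in creating+peering). Symptoms:

* Writes to the cluster hang

* If radosgw is installed, the civetweb port never starts listening and the radosgw process keeps restarting

5.2 Result of creating a pool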

[root@ceph5 backup-2]# ceph osd pool create rbd --cluster backup

pool 'rbd' created

[root@ceph5 backup-2]# ceph -s

cluster:

id: 51dda18c-7545-4edb-8ba9-27330ead81a7

health: HEALTH_WARN

too few PGs per OSD ( < min )

services:

mon: 1 daemons, quorum ceph5

mgr: ceph5(active)

osd: 3 osds: 3 up, 3 in

data:

pools: pools, pgs

objects: objects, bytes

usage: MB used, MB / MB avail

pgs: 100.000% pgs not active

creating+peering
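The extra `too few PGs per OSD` warning compares the average number of PG copies mapped to each OSD with the `mon_pg_warn_min_per_osd` threshold. Nominally that average is pg_num × size ÷ number of OSDs, so with size 3 on exactly three OSDs it equals pg_num; while the host failure domain lets CRUSH map only one copy per PG, the effective size is 1, which may be why the warning appears here. A small arithmetic sketch (`pgs_per_osd` is hypothetical; the pg_num used above is not legible, so any numbers plugged in are illustrative):

```shell
# Hypothetical helper: average PG copies per OSD for one pool (integer division).
# Arguments: pg_num, number of copies per PG (size), number of OSDs.
pgs_per_osd() {
  echo $(( $1 * $2 / $3 ))
}
```

Usage: `pgs_per_osd 64 3 3` prints 64; `pgs_per_osd 64 1 3` prints 21, the situation when only one copy per PG can be mapped.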

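5.3 Change the failure domain to osd

Decompile the CRUSH map, change the chooseleaf step of the replicated rule from host to osd, recompile the map, and load it back: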

[root@ceph5 ~]# cd /etc/ceph/

[root@ceph5 ceph]# ceph osd getcrushmap -o /etc/ceph/crushmap

[root@ceph5 ceph]# crushtool -d /etc/ceph/crushmap -o /etc/ceph/crushmap.txt

[root@ceph5 ceph]# sed -i 's/step chooseleaf firstn 0 type host/step chooseleaf firstn 0 type osd/' /etc/ceph/crushmap.txt

[root@ceph5 ceph]# grep 'step chooseleaf' /etc/ceph/crushmap.txt

step chooseleaf firstn 0 type osd

[root@ceph5 ceph]# crushtool -c /etc/ceph/crushmap.txt -o /etc/ceph/crushmap-new

[root@ceph5 ceph]# ceph osd setcrushmap -i /etc/ceph/crushmap-new
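The sed expression above matches the default rule text exactly (single spaces, `firstn 0`). A slightly more tolerant sketch, assuming GNU sed and a map decompiled by `crushtool -d`; `crush_host_to_osd` is hypothetical. As a different technique that avoids hand-editing the map, Luminous can also create a rule with an osd failure domain directly (`ceph osd crush rule create-replicated <name> default osd`) and assign it with `ceph osd pool set <pool> crush_rule <name>`.

```shell
# Hypothetical helper: in a decompiled CRUSH map, rewrite every
# "step chooseleaf firstn <N> type host" line to "type osd",
# whatever N and the surrounding whitespace are. Edits the file in place.
crush_host_to_osd() {
  sed -i -E 's/^([[:space:]]*step[[:space:]]+chooseleaf[[:space:]]+firstn[[:space:]]+[0-9]+[[:space:]]+type[[:space:]]+)host([[:space:]]*)$/\1osd\2/' "$1"
}
```

Usage: `crush_host_to_osd /etc/ceph/crushmap.txt`, followed by the same `crushtool -c` and `ceph osd setcrushmap` steps.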

5.4 Check again: the cluster is now healthy

[root@ceph5 ceph]# ceph -s

cluster:

id: 51dda18c-7545-4edb-8ba9-27330ead81a7

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph5

mgr: ceph5(active)

osd: 3 osds: 3 up, 3 in

data:

pools: pools, pgs

objects: objects, bytes

usage: MB used, MB / MB avail

pgs: active+clean

[root@ceph5 ceph]# ceph osd pool application enable rbd rbd

enabled application 'rbd' on pool 'rbd'

[root@ceph5 ceph]# rbd create --size 1G test

[root@ceph5 ceph]# rbd ls

test

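6. Configuring radosgw

Create the radosgw key and write it to the keyring path configured in backup.conf:

[root@ceph5 backup-2]# ceph auth get-or-create client.rgw.ceph5 mon 'allow rwx' osd 'allow rwx' -o /etc/ceph/backup.client.rgw.ceph5.keyring --cluster backup --conf /etc/ceph/backup.conf

Add the [client.rgw.ceph5] section to the configuration file (it is already part of the backup.conf created in 2.2).

Start the service:

[root@ceph5 ceph]# systemctl start ceph-radosgw@rgw.ceph5

[root@ceph5 ceph]# systemctl enable ceph-radosgw@rgw.ceph5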
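One symptom listed under 5.1 is that the civetweb port never starts listening. To check it, read the port back from the configuration and look for a listener. A sketch; `rgw_port` is a hypothetical helper that only understands the `civetweb port=N` form used in this article.

```shell
# Hypothetical helper: print the civetweb port from rgw_frontends in one section
# of a Ceph config file (prints nothing if the section or option is absent).
# Arguments: config file, section name without brackets.
rgw_port() {
  local conf=$1 section=$2
  awk -v s="[$section]" '
    /^\[/ { in_sec = ($0 == s) }
    in_sec && /^[ \t]*rgw_frontends[ \t]*=/ {
      if (match($0, /port=[0-9]+/)) print substr($0, RSTART + 5, RLENGTH - 5)
    }' "$conf"
}
```

Usage: `ss -ltn | grep ":$(rgw_port /etc/ceph/backup.conf client.rgw.ceph5) "` shows whether radosgw is listening on the configured port.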

All commands

# These commands can be saved as a bash file and run directly, or adapted into a one-click install script

yum install -y ceph-common ceph-mon ceph-mgr ceph-mds ceph-osd ceph-radosgw

echo 'CLUSTER=backup'>>/etc/sysconfig/ceph

uuid=`uuidgen`

echo "[global]

fsid = $uuid

mon_initial_members = ceph5

mon_host = 172.25.250.14

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public_network = 172.25.250.0/

cluster_network = 172.25.250.0/

[mgr]

mgr modules = dashboard

[client.rgw.ceph5]

host = ceph5

keyring = /etc/ceph/backup.client.rgw.ceph5.keyring

log_file=/var/log/ceph/\$cluster.\$name.log

rgw_frontends = civetweb port=

rgw_print_continue = false

rgw_dns_name = lab.example.com" >>/etc/ceph/backup.conf

ceph-authtool --create-keyring /tmp/backup.mon.keyring --gen-key -n mon. --cap mon 'allow *'

ceph-authtool --create-keyring /etc/ceph/backup.client.admin.keyring --gen-key -n client.admin --set-uid=0 --cap mon 'allow *' --cap osd 'allow *' --cap mgr 'allow *' --cap mds 'allow *'

ceph-authtool /tmp/backup.mon.keyring --import-keyring /etc/ceph/backup.client.admin.keyring

monmaptool --create --add ceph5 172.25.250.14 --fsid $uuid /tmp/monmap

monmaptool --print /tmp/monmap

mkdir -p /var/lib/ceph/mon/backup-ceph5

chown ceph.ceph -R /var/lib/ceph /etc/ceph /tmp/backup.mon.keyring /tmp/monmap

sudo -u ceph ceph-mon --cluster backup --mkfs -i ceph5 --monmap /tmp/monmap --keyring /tmp/backup.mon.keyring

systemctl start ceph-mon@ceph5

systemctl enable ceph-mon@ceph5

ceph mon getmap -o monmap --cluster backup

monmaptool --print monmap

ceph auth get mon. --cluster backup

mkdir /var/lib/ceph/mgr/backup-ceph5

chown ceph.ceph -R /var/lib/ceph

ceph-authtool --create-keyring /etc/ceph/backup.mgr.ceph5.keyring --gen-key -n mgr.ceph5 --cap mon 'allow profile mgr' --cap osd 'allow *' --cap mds 'allow *'

ceph auth import -i /etc/ceph/backup.mgr.ceph5.keyring --cluster backup

ceph auth get-or-create mgr.ceph5 -o /var/lib/ceph/mgr/backup-ceph5/keyring --cluster backup

systemctl start ceph-mgr@ceph5

systemctl enable ceph-mgr@ceph5

ceph -s --cluster backup

ceph mgr dump --conf /etc/ceph/backup.conf

ceph mgr module enable dashboard --conf /etc/ceph/backup.conf

ceph mgr module ls --conf /etc/ceph/backup.conf

ceph config-key put mgr/dashboard/server_addr 172.25.250.14 --conf /etc/ceph/backup.conf

ceph config-key put mgr/dashboard/server_port 7000 --conf /etc/ceph/backup.conf

ceph config-key dump --conf /etc/ceph/backup.conf

alias ceph='ceph --cluster backup'

alias rbd='rbd --cluster backup'

ceph osd create --cluster backup

journal_uuid=`uuidgen`

data_uuid=`uuidgen`

sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:$journal_uuid --typecode=1:$journal_uuid --mbrtogpt -- /dev/vdb

sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:$data_uuid --typecode=2:$data_uuid --mbrtogpt -- /dev/vdb

mkfs.xfs -f -i size=2048 /dev/vdb1

mkfs.xfs -f -i size=2048 /dev/vdb2

mkdir /var/lib/ceph/osd/backup-{0,1,2}

mount -o noatime,largeio,inode64,swalloc /dev/vdb2 /var/lib/ceph/osd/backup-0

uuid_blk=`blkid /dev/vdb2|awk '{print $2}'`

echo "$uuid_blk /var/lib/ceph/osd/backup-0 xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> /etc/fstab

ceph-authtool --create-keyring /etc/ceph/backup.osd.0.keyring --gen-key -n osd.0 --cap mon 'allow profile osd' --cap mgr 'allow profile osd' --cap osd 'allow *'

ceph auth import -i /etc/ceph/backup.osd.0.keyring --cluster backup

ceph auth get-or-create osd.0 -o /var/lib/ceph/osd/backup-0/keyring --cluster backup

ceph-osd -i 0 --mkfs --cluster backup

cd /var/lib/ceph/osd/backup-0

rm -f journal

partuuid_0=`blkid /dev/vdb1|awk -F "[\"\"]" '{print $8}'`

ln -s /dev/disk/by-partuuid/$partuuid_0 ./journal

chown ceph.ceph -R /var/lib/ceph

ceph-osd --mkjournal -i 0 --cluster backup

chown ceph.ceph /dev/disk/by-partuuid/$partuuid_0

ceph osd crush add-bucket ceph5 host --cluster backup

ceph osd crush move ceph5 root=default --cluster backup

ceph osd crush add osd.0 0.01500 root=default host=ceph5 --cluster backup

systemctl start ceph-osd@0

systemctl enable ceph-osd@0

ceph osd create --cluster backup

journal_uuid=`uuidgen`

data_uuid=`uuidgen`

sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:$journal_uuid --typecode=1:$journal_uuid --mbrtogpt -- /dev/vdc

sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:$data_uuid --typecode=2:$data_uuid --mbrtogpt -- /dev/vdc

mkfs.xfs -f -i size=2048 /dev/vdc1

mkfs.xfs -f -i size=2048 /dev/vdc2

mount -o noatime,largeio,inode64,swalloc /dev/vdc2 /var/lib/ceph/osd/backup-1

blkid /dev/vdc2

uuid_blk_1=`blkid /dev/vdc2|awk '{print $2}'`

echo "$uuid_blk_1 /var/lib/ceph/osd/backup-1 xfs defaults,noatime,largeio,inode64,swalloc 0 0" >> /etc/fstab

ceph-osd -i 1 --mkfs --mkkey --cluster backup

cd /var/lib/ceph/osd/backup-1

rm -f journal

partuuid_1=`blkid /dev/vdc1|awk -F "[\"\"]" '{print $8}'`

ln -s /dev/disk/by-partuuid/$partuuid_1 ./journal

chown ceph.ceph -R /var/lib/ceph

ceph-osd --mkjournal -i 1 --cluster backup

chown ceph.ceph /dev/disk/by-partuuid/$partuuid_1

ceph auth add osd.1 osd 'allow *' mon 'allow profile osd' mgr 'allow profile osd' -i /var/lib/ceph/osd/backup-1/keyring --cluster backup

ceph osd crush add osd.1 0.01500 root=default host=ceph5 --cluster backup

systemctl start ceph-osd@1

systemctl enable ceph-osd@1

ceph osd create --cluster backup

journal_uuid=`uuidgen`

data_uuid=`uuidgen`

sgdisk --new=1:0:+5G --change-name=1:'ceph journal' --partition-guid=1:$journal_uuid --typecode=1:$journal_uuid --mbrtogpt -- /dev/vdd

sgdisk --new=2:0:0 --change-name=2:'ceph data' --partition-guid=2:$data_uuid --typecode=2:$data_uuid --mbrtogpt -- /dev/vdd

mkfs.xfs -f -i size=2048 /dev/vdd1

mkfs.xfs -f -i size=2048 /dev/vdd2

mount -o noatime,largeio,inode64,swalloc /dev/vdd2 /var/lib/ceph/osd/backup-2

blkid /dev/vdd2

uuid_blk_2=`blkid /dev/vdd2|awk '{print $2}'`

echo "$uuid_blk_2 /var/lib/ceph/osd/backup-2 xfs defaults,noatime,largeio,inode64,swalloc 0 0">> /etc/fstab

ceph-osd -i 2 --mkfs --mkkey --cluster backup

cd /var/lib/ceph/osd/backup-2

rm -f journal

partuuid_2=`blkid /dev/vdd1|awk -F "[\"\"]" '{print $8}'`

ln -s /dev/disk/by-partuuid/$partuuid_2 ./journal

chown ceph.ceph -R /var/lib/ceph

ceph-osd --mkjournal -i 2 --cluster backup

chown ceph.ceph /dev/disk/by-partuuid/$partuuid_2

ceph auth add osd.2 osd 'allow *' mon 'allow profile osd' mgr 'allow profile osd' -i /var/lib/ceph/osd/backup-2/keyring --cluster backup

ceph osd crush add osd.2 0.01500 root=default host=ceph5 --cluster backup

systemctl start ceph-osd@2

systemctl enable ceph-osd@2

ceph osd getcrushmap -o /etc/ceph/crushmap --cluster backup

crushtool -d /etc/ceph/crushmap -o /etc/ceph/crushmap.txt

sed -i 's/step chooseleaf firstn 0 type host/step chooseleaf firstn 0 type osd/' /etc/ceph/crushmap.txt

grep 'step chooseleaf' /etc/ceph/crushmap.txt

crushtool -c /etc/ceph/crushmap.txt -o /etc/ceph/crushmap-new

ceph osd setcrushmap -i /etc/ceph/crushmap-new --cluster backup

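Author's note: the content of this article comes mainly from instructor Yan Wei of Yutian Education; I carried out and verified the operations in my own lab. To repost it, please contact Yutian Education (http://www.yutianedu.com/) for official permission, or obtain permission from Mr. Yan himself (https://www.cnblogs.com/breezey/). Thank you!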
