Map Reduce Application(Partitioninig/Binning)

Map Reduce Application(Partitioninig/Group data by a defined key)

Assuming we want to group data by the year(2008 to 2016) of their [last access date time]. For each year, we use a reducer to collect them and output the data in this group/partition(year of the last access datetime). So, we want the MR to partition our key by year. We will lean what's the default partitioner and see how to set custom partitioner.

The default partitioner:

public int getPartition(K key, V value,

int numReduceTasks) {

return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;

}

Custom Partitioner:

job.setPartitionerClass(CustomPartitioner.class)

With blew partitioner, the data of different year of [last access date time] will be assigned to different / unique partition. The num of reduce tasks is 9.

public static class CustomPartitioner extends Partitioner<Text, Text>{

@Override

public int getPartition(Text key, Text value, int numReduceTasks){

if(numReduceTasks == 0){

return 0;

}

return key-2008

}

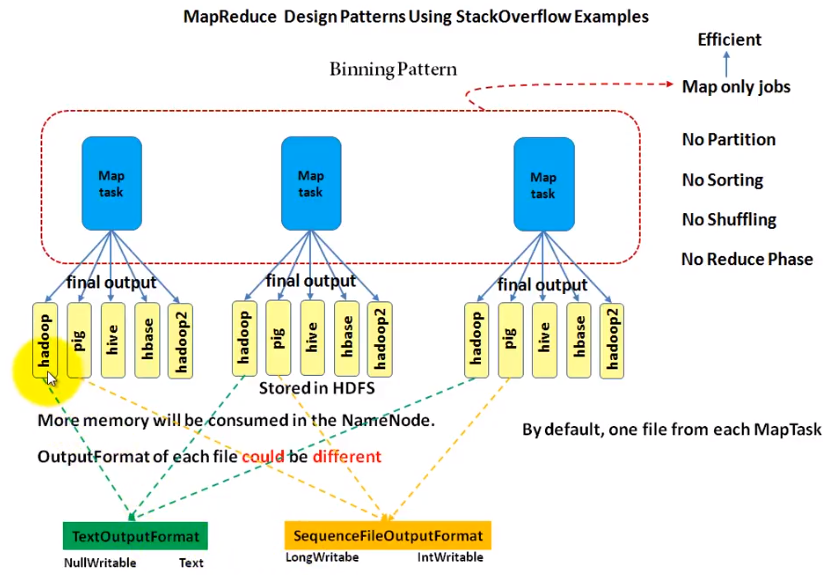

Binning pattern

The text/comments/answer/question....contains the specific words will be written into the corresponding files from mapper.

See below picture to understand the binning pattern. It is easier than partitioning as it does not have partition/sorting/shuffling and reducer(job.setNumReduceTasks(0)). The outputs from mappers compose the final outputs.

MultipleOutputs.addNamedOutput(job,"namedoutput",TextOutputFormat.class, NullWritable.class, Text.class)

In the mapper setup function, create the MultipleOutputs intance by calling its constructor

MultipleOutputs(TaskInputOutputContext<?,?,KEYOUT,VALUEOUT> context)

Creates and initializes multiple outputs support, it should be instantiated in the Mapper/Reducer setup method.

@Override

protected void setup(Context context){

maltipleOutputs = new MultipleOurputs(context);

}

Write your logic in the mapper function and output the result. "$tag/$tag-tag" means folder $pag will be created and $tag-tag is the prefix of the files(to distinguish the different mappers with suffix).

See doc for MultipleOutputs:https://hadoop.apache.org/docs/r3.0.1/api/org/apache/hadoop/mapreduce/lib/output/MultipleOutputs.html

if(tag.equalsIgnoreCase("pig"){

multipleOutputs.write("namedoutput",key,value,"pig/pig-tag");

}

if(tag.equalsIgnoreCase("hive"){

multipleOutputs.write("namedoutput",key,value,"hive/hive-tag");

}

.....

Map Reduce Application(Partitioninig/Binning)的更多相关文章

- Map Reduce Application(Join)

We are going to explain how join works in MR , we will focus on reduce side join and map side join. ...

- Map Reduce Application(Top 10 IDs base on their value)

Top 10 IDs base on their value First , we need to set the reduce to 1. For each map task, it is not ...

- mapreduce: 揭秘InputFormat--掌控Map Reduce任务执行的利器

随着越来越多的公司采用Hadoop,它所处理的问题类型也变得愈发多元化.随着Hadoop适用场景数量的不断膨胀,控制好怎样执行以及何处执行map任务显得至关重要.实现这种控制的方法之一就是自定义Inp ...

- MapReduce剖析笔记之三:Job的Map/Reduce Task初始化

上一节分析了Job由JobClient提交到JobTracker的流程,利用RPC机制,JobTracker接收到Job ID和Job所在HDFS的目录,够早了JobInProgress对象,丢入队列 ...

- python--函数式编程 (高阶函数(map , reduce ,filter,sorted),匿名函数(lambda))

1.1函数式编程 面向过程编程:我们通过把大段代码拆成函数,通过一层一层的函数,可以把复杂的任务分解成简单的任务,这种一步一步的分解可以称之为面向过程的程序设计.函数就是面向过程的程序设计的基本单元. ...

- 记一次MongoDB Map&Reduce入门操作

需求说明 用Map&Reduce计算几个班级中,每个班级10岁和20岁之间学生的数量: 需求分析 学生表的字段: db.students.insert({classid:1, age:14, ...

- filter,map,reduce,lambda(python3)

1.filter filter(function,sequence) 对sequence中的item依次执行function(item),将执行的结果为True(符合函数判断)的item组成一个lis ...

- map reduce

作者:Coldwings链接:https://www.zhihu.com/question/29936822/answer/48586327来源:知乎著作权归作者所有,转载请联系作者获得授权. 简单的 ...

- python基础——map/reduce

python基础——map/reduce Python内建了map()和reduce()函数. 如果你读过Google的那篇大名鼎鼎的论文“MapReduce: Simplified Data Pro ...

随机推荐

- Paxos一致性算法(三)

一.概述: Google Chubby的作者说过这个世界只有一种一致性算法,那就Paxos算法,其他的都是残次品. 二.Paxos算法: 一种基于消息传递的高度容错性的一致性算法. Paxos:少数服 ...

- Spring的扩展

Spring中引用属性文件 JNDI数据源 Spring中Bean的作用域 Spring自动装配 缺点

- Quote Helper

using System; using Microsoft.Xrm.Sdk; using Microsoft.Crm.Sdk.Messages; using Microsoft.Xrm.Sdk.Que ...

- hadoop生态搭建(3节点)-15.Nginx_Keepalived_Tomcat配置

# Nginx+Tomcat搭建高可用服务器名称 预装软件 IP地址Nginx服务器 Nginx1 192.168.6.131Nginx服务器 Nginx2 192.168.6.132 # ===== ...

- SVN错误记录

1.SVN错误:Attempted to lock an already-locked dir 发生这个错误多是中断提交导致了,执行clear后可修复 右键项目--->team--->清理 ...

- 『Linux基础 - 3』 Linux文件目录介绍

Windows 和 Linux 文件系统区别 -- 结构 Windows 下的文件系统 - 在 Windows 下,打开 "计算机",我们看到的是一个个的驱动器盘符: - 每个驱动 ...

- spark----词频统计(一)

利用Linux系统中安装的spark来统计: 1.选择目录,并创建一个存放文本的目录,将要处理的文本保存在该目录下以供查找操作: ① cd /usr/local ②mkdir mycode ③ cd ...

- categorical[np.arange(n), y] = 1 IndexError: index 2 is out of bounds for axis 1 with size 2

我的错误的代码是:train_labels = np_utils.to_categorical(train_labels,num_classes = 3) 错误的原因: IndexError: ind ...

- 解决应用程序无法正常启动0xc0150002等问题

1.在程序运行出错的时候,右键“我的电脑”,然后点击“管理”→“事件查看器”→“Windows 日志”→“应用程序”,查看错误信息: 1> “E:\IPCam_share\ARP\數據處理\Hg ...

- Java:xxx is not an enclosing class

1. 错误原因 该错误一般出现在对内部类进行实例化时,例如 public class A{ public class B{ } } 此时B是A的内部类,如果我们要使用如下语句实例化一个B类的对象: A ...