Spark MLlib + Maven + Scala: Testing the Waters

- A spam classifier using logistic regression trained with SGD

```scala
package com.oreilly.learningsparkexamples.scala

import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.mllib.classification.LogisticRegressionWithSGD
import org.apache.spark.mllib.feature.HashingTF
import org.apache.spark.mllib.regression.LabeledPoint

object MLlib {

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("MLlib example")
    val sc = new SparkContext(conf)

    // Load 2 types of emails from text files: spam and ham (non-spam).
    // Each line has text from one email.
    val spam = sc.textFile("files/spam.txt")
    val ham = sc.textFile("files/ham.txt")

    // Create a HashingTF instance to map email text to vectors of 100 features.
    val tf = new HashingTF(numFeatures = 100)

    // Each email is split into words, and each word is mapped to one feature.
    val spamFeatures = spam.map(email => tf.transform(email.split(" ")))
    val hamFeatures = ham.map(email => tf.transform(email.split(" ")))

    // Create LabeledPoint datasets for positive (spam) and negative (ham) examples.
    val positiveExamples = spamFeatures.map(features => LabeledPoint(1, features))
    val negativeExamples = hamFeatures.map(features => LabeledPoint(0, features))
    val trainingData = positiveExamples ++ negativeExamples
    trainingData.cache() // Cache data since Logistic Regression is an iterative algorithm.

    // Create a Logistic Regression learner which uses SGD.
    val lrLearner = new LogisticRegressionWithSGD()

    // Run the actual learning algorithm on the training data.
    val model = lrLearner.run(trainingData)

    // Test on a positive example (spam) and a negative one (ham).
    // First apply the same HashingTF feature transformation used on the training data.
    val posTestExample = tf.transform("O M G GET cheap stuff by sending money to ...".split(" "))
    val negTestExample = tf.transform("Hi Dad, I started studying Spark the other ...".split(" "))

    // Now use the learned model to predict spam/ham for new emails.
    println(s"Prediction for positive test example: ${model.predict(posTestExample)}")
    println(s"Prediction for negative test example: ${model.predict(negTestExample)}")

    sc.stop()
  }
}
```
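The call `lrLearner.run(trainingData)` hides the optimization itself. As a rough illustration only, here is a minimal, self-contained Scala sketch of logistic regression trained by per-example stochastic gradient descent on the log-loss, plus the 0.5 decision threshold that turns probabilities into the 1.0/0.0 predictions printed at the end. It is not Spark's implementation: Spark works on RDDs and has its own regularization and step-size handling. The object name `LogisticSgdSketch`, the three toy features and the step size are assumptions made up for this example.

```scala
// A minimal sketch of logistic regression trained with SGD.
// It is NOT Spark's LogisticRegressionWithSGD; it only illustrates the idea.
object LogisticSgdSketch {
  // Logistic (sigmoid) function: maps a score to a probability in (0, 1).
  def sigmoid(z: Double): Double = 1.0 / (1.0 + math.exp(-z))

  // Dot product of the weight vector and a feature vector.
  def dot(w: Array[Double], x: Array[Double]): Double =
    w.zip(x).map { case (a, b) => a * b }.sum

  // Per-example SGD on the log-loss. Labels are 1.0 (spam) or 0.0 (ham),
  // matching the LabeledPoints built in MLlib.scala. No intercept term.
  def train(data: Seq[(Double, Array[Double])], numFeatures: Int,
            stepSize: Double, numIterations: Int): Array[Double] = {
    val w = Array.fill(numFeatures)(0.0)
    for (_ <- 1 to numIterations; (label, x) <- data) {
      // Gradient of the log-loss for one example is (sigmoid(w . x) - y) * x.
      val err = sigmoid(dot(w, x)) - label
      for (i <- w.indices) w(i) -= stepSize * err * x(i)
    }
    w
  }

  // Classify as spam (1.0) when the predicted probability exceeds 0.5.
  def predict(w: Array[Double], x: Array[Double]): Double =
    if (sigmoid(dot(w, x)) > 0.5) 1.0 else 0.0

  // Hypothetical toy data: feature 0 ~ "money", 1 ~ "cheap", 2 ~ "Spark".
  val toyData: Seq[(Double, Array[Double])] = Seq(
    (1.0, Array(1.0, 1.0, 0.0)),
    (1.0, Array(1.0, 0.0, 0.0)),
    (0.0, Array(0.0, 0.0, 1.0)),
    (0.0, Array(0.0, 1.0, 1.0))
  )

  def main(args: Array[String]): Unit = {
    val w = train(toyData, numFeatures = 3, stepSize = 0.5, numIterations = 100)
    println(s"spam-like: ${predict(w, Array(1.0, 1.0, 0.0))}")
    println(s"ham-like:  ${predict(w, Array(0.0, 0.0, 1.0))}")
  }
}
```

The sketch leaves out an intercept term for brevity; on this toy data a weight vector such as (1, 0, -1) already separates spam from ham, so the updates quickly settle on the right signs.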

spam.txt:

```
Dear sir, I am a Prince in a far kingdom you have not heard of. I want to send you money via wire transfer so please ...
Get Viagra real cheap! Send money right away to ...
Oh my gosh you can be really strong too with these drugs found in the rainforest. Get them cheap right now ...
YOUR COMPUTER HAS BEEN INFECTED! YOU MUST RESET YOUR PASSWORD. Reply to this email with your password and SSN ...
THIS IS NOT A SCAM! Send money and get access to awesome stuff really cheap and never have to ...
```

ham.txt:

```
Dear Spark Learner, Thanks so much for attending the Spark Summit 2014! Check out videos of talks from the summit at ...
Hi Mom, Apologies for being late about emailing and forgetting to send you the package. I hope you and bro have been ...
Wow, hey Fred, just heard about the Spark petabyte sort. I think we need to take time to try it out immediately ...
Hi Spark user list, This is my first question to this list, so thanks in advance for your help! I tried running ...
Thanks Tom for your email. I need to refer you to Alice for this one. I haven't yet figured out that part either ...
Good job yesterday! I was attending your talk, and really enjoyed it. I want to try out GraphX ...
Summit demo got whoops from audience! Had to let you know. --Joe
```
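Each of these lines becomes one feature vector through `HashingTF`. Below is a minimal sketch of that "hashing trick", assuming (as in Spark 1.6's MLlib, to the best of my knowledge) that a word's bucket is the non-negative remainder of its Scala `##` hash modulo `numFeatures`; other Spark versions may use a different hash function, so exact indices can differ. `HashingTfSketch` is a hypothetical name, and a `Map` from bucket index to count stands in for Spark's sparse vector.

```scala
// Sketch of the hashing trick behind HashingTF: each word is hashed into
// one of numFeatures buckets and that bucket's count is incremented.
object HashingTfSketch {
  // Non-negative remainder of the word's hash code modulo numFeatures.
  def indexOf(term: String, numFeatures: Int): Int = {
    val raw = term.## % numFeatures
    if (raw < 0) raw + numFeatures else raw
  }

  // Sparse term-frequency vector, represented as bucket index -> count.
  def transform(words: Seq[String], numFeatures: Int): Map[Int, Double] =
    words.groupBy(w => indexOf(w, numFeatures)).map { case (i, ws) => (i, ws.size.toDouble) }

  def main(args: Array[String]): Unit = {
    val email = "Get Viagra real cheap! Send money right away to ..."
    val tf = transform(email.split(" ").toSeq, numFeatures = 100)
    // Print the non-zero buckets in index order.
    tf.toSeq.sortBy(_._1).foreach { case (i, count) => println(s"$i -> $count") }
  }
}
```

With only 100 buckets, distinct words can land in the same bucket; that is the price of not keeping a vocabulary. Splitting on a single space also keeps punctuation, so `cheap!` and `cheap` count as different terms.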

- Packaging the Scala program with Maven

```
├── pom.xml
├── README.md
├── src
│   └── main
│       └── scala
│           └── com
│               └── oreilly
│                   └── learningsparkexamples
│                       └── scala
│                           └── MLlib.scala
```

MLlib.scala is the Scala code shown above, and pom.xml is the configuration file Maven uses for the build:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>my.demo</groupId>
    <artifactId>sparkdemo</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <!-- Java version used for compilation
        <maven.compiler.source>1.7</maven.compiler.source>
        <maven.compiler.target>1.7</maven.compiler.target>
        -->
        <encoding>UTF-8</encoding>
        <scala.tools.version>2.10</scala.tools.version>
        <!-- Use the Scala version of the cluster -->
        <scala.version>2.10.5</scala.version>
    </properties>

    <dependencies>
        <dependency> <!-- Spark dependency -->
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.10</artifactId>
            <version>1.6.1</version>
            <scope>provided</scope>
        </dependency>
        <dependency> <!-- Spark dependency -->
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-mllib_2.10</artifactId>
            <version>1.6.1</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-library</artifactId>
            <version>2.10.5</version>
        </dependency>
    </dependencies>

    <build>
        <pluginManagement>
            <plugins>
                <plugin>
                    <!-- Compiles the Scala sources -->
                    <groupId>net.alchim31.maven</groupId>
                    <artifactId>scala-maven-plugin</artifactId>
                    <version>3.1.5</version>
                </plugin>
            </plugins>
        </pluginManagement>
        <plugins>
            <plugin>
                <groupId>net.alchim31.maven</groupId>
                <artifactId>scala-maven-plugin</artifactId>
                <executions>
                    <execution>
                        <id>scala-compile-first</id>
                        <phase>process-resources</phase>
                        <goals>
                            <goal>add-source</goal>
                            <goal>compile</goal>
                        </goals>
                    </execution>
                    <execution>
                        <id>scala-test-compile</id>
                        <phase>process-test-resources</phase>
                        <goals>
                            <goal>testCompile</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>
```

Specifically, the import

```scala
import org.apache.spark.{SparkConf, SparkContext}
```

requires this dependency:

```xml
<dependency> <!-- Spark dependency -->
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.10</artifactId>
    <version>1.6.1</version>
    <scope>provided</scope>
</dependency>
```

and the imports

```scala
import org.apache.spark.mllib.classification.LogisticRegressionWithSGD
import org.apache.spark.mllib.feature.HashingTF
import org.apache.spark.mllib.regression.LabeledPoint
```

require this dependency:

```xml
<dependency> <!-- Spark dependency -->
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-mllib_2.10</artifactId>
    <version>1.6.1</version>
    <scope>provided</scope>
</dependency>
```
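The mapping between imports and dependencies boils down to a prefix match on the package name: classes under `org.apache.spark.mllib` come from `spark-mllib_2.10`, and the rest of `org.apache.spark` from `spark-core_2.10`. The toy lookup below (a hypothetical `SparkArtifactFor`, purely illustrative; Maven performs no such lookup) encodes that rule, checking the more specific prefix first.

```scala
// Toy lookup, for illustration only: which Maven artifact provides a
// given Spark import in this project. Maven itself does no such lookup.
object SparkArtifactFor {
  // More specific prefixes first, so mllib imports are not matched by core.
  val rules: Seq[(String, String)] = Seq(
    "org.apache.spark.mllib." -> "spark-mllib_2.10",
    "org.apache.spark." -> "spark-core_2.10"
  )

  def artifactFor(importPath: String): Option[String] =
    rules.collectFirst { case (prefix, artifact) if importPath.startsWith(prefix) => artifact }

  def main(args: Array[String]): Unit = {
    val imports = Seq(
      "org.apache.spark.{SparkConf, SparkContext}",
      "org.apache.spark.mllib.classification.LogisticRegressionWithSGD",
      "org.apache.spark.mllib.feature.HashingTF",
      "org.apache.spark.mllib.regression.LabeledPoint"
    )
    imports.foreach(i => println(s"$i -> ${artifactFor(i).getOrElse("?")}"))
  }
}
```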

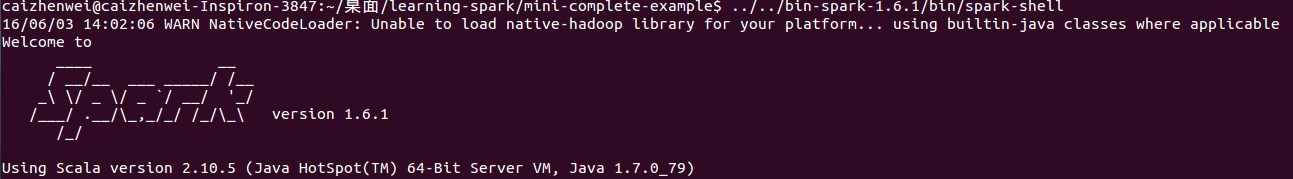

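When configuring, make sure the Spark and Scala versions in pom.xml match your installation; the `_2.10` suffix in `spark-core_2.10`, for example, means the artifact is built for Scala 2.10. You can check both versions by starting spark-shell, as in the screenshot above.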
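Inside spark-shell, `sc.version` returns the Spark version and `scala.util.Properties.versionString` the Scala version. To make the matching rule concrete, here is a small sketch (a hypothetical `ScalaVersionCheck`) that compares an artifact's Scala suffix with the binary part of the configured `scala.version`; the values are copied from the pom.xml above.

```scala
// Sketch: check that a Spark artifact's Scala suffix (e.g. "_2.10") matches
// the Scala version configured in pom.xml (e.g. "2.10.5").
object ScalaVersionCheck {
  // "2.10.5" -> "2.10": the binary version is the first two components.
  def binaryVersion(fullVersion: String): String =
    fullVersion.split('.').take(2).mkString(".")

  // "spark-core_2.10" -> Some("2.10"); artifacts without "_" -> None.
  def artifactScalaSuffix(artifactId: String): Option[String] = {
    val idx = artifactId.lastIndexOf('_')
    if (idx >= 0) Some(artifactId.substring(idx + 1)) else None
  }

  def compatible(artifactId: String, scalaVersion: String): Boolean =
    artifactScalaSuffix(artifactId) == Some(binaryVersion(scalaVersion))

  def main(args: Array[String]): Unit = {
    // Values copied from the pom.xml above.
    val scalaVersion = "2.10.5"
    for (a <- Seq("spark-core_2.10", "spark-mllib_2.10"))
      println(s"$a with Scala $scalaVersion: ${compatible(a, scalaVersion)}")
    // Scala version of the JVM running this sketch, for comparison.
    println(s"running on Scala ${scala.util.Properties.versionNumberString}")
  }
}
```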

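Once everything is configured, run the following in the directory containing pom.xml:

```bash
mvn clean && mvn compile && mvn package
```

(`mvn clean package` does the same in one invocation, since the package phase runs compile first.)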

If Maven has trouble downloading dependencies, see section "3. Build GitHub Spark Runnable Distribution" of this post: http://www.cnblogs.com/xiaoyesoso/p/5489822.html

- Running the project with Spark

After Maven finishes compiling and packaging, a target directory appears in the directory containing pom.xml:

```
├── target
│   ├── classes
│   │   └── com
│   │       └── oreilly
│   │           └── learningsparkexamples
│   │               └── scala
│   │                   ├── MLlib$$anonfun$1.class
│   │                   ├── MLlib$$anonfun$2.class
│   │                   ├── MLlib$$anonfun$3.class
│   │                   ├── MLlib$$anonfun$4.class
│   │                   ├── MLlib.class
│   │                   └── MLlib$.class
│   ├── classes.-475058802.timestamp
│   ├── maven-archiver
│   │   └── pom.properties
│   ├── maven-status
│   │   └── maven-compiler-plugin
│   │       └── compile
│   │           └── default-compile
│   │               ├── createdFiles.lst
│   │               └── inputFiles.lst
│   └── sparkdemo-1.0-SNAPSHOT.jar
```

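Finally, submit the job with spark-submit. Make sure the two data files, spam.txt and ham.txt, are where the code expects them: it reads the relative paths `files/spam.txt` and `files/ham.txt`.

```bash
${SPARK_HOME}/bin/spark-submit --class ${package.name}.${class.name} ${PROJECT_HOME}/target/*.jar
```

Here `${package.name}.${class.name}` stands for the fully qualified name of the object, in this example `com.oreilly.learningsparkexamples.scala.MLlib`.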
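Because the data paths are relative, the program only works when they resolve to the right place. One way around this, sketched below under the assumption that you are willing to change `MLlib.scala`, is to pass the paths as program arguments: spark-submit forwards everything after the application jar to `main`. `TrainingPaths` is a hypothetical helper that falls back to the original hard-coded paths.

```scala
// Hypothetical helper: read the spam/ham paths from the program arguments,
// falling back to the relative paths hard-coded in MLlib.scala.
object TrainingPaths {
  val defaults: (String, String) = ("files/spam.txt", "files/ham.txt")

  def resolve(args: Array[String]): (String, String) = args match {
    case Array(spam, ham, _*) => (spam, ham)
    case _                    => defaults
  }

  def main(args: Array[String]): Unit = {
    val (spamPath, hamPath) = resolve(args)
    println(s"spam: $spamPath, ham: $hamPath")
  }
}
```

In `MLlib.main` you would then write `val (spamPath, hamPath) = TrainingPaths.resolve(args)` and call `sc.textFile(spamPath)` and `sc.textFile(hamPath)`, submitting with something like `${SPARK_HOME}/bin/spark-submit --class com.oreilly.learningsparkexamples.scala.MLlib target/sparkdemo-1.0-SNAPSHOT.jar /path/to/spam.txt /path/to/ham.txt` (the `/path/to/...` values are placeholders).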

Output of the run:

```
caizhenwei@caizhenwei-Inspiron-:~/桌面/learning-spark$ vim mini-complete-example/src/main/scala/com/oreilly/learningsparkexamples/mini/scala/MLlib.scala
caizhenwei@caizhenwei-Inspiron-:~/桌面/learning-spark$ ../bin-spark-1.6./bin/spark-submit --class com.oreilly.learningsparkexamples.scala.MLlib ./mini-complete-example/target/sparkdemo-1.0-SNAPSHOT.jar
// :: WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
// :: WARN Utils: Your hostname, caizhenwei-Inspiron- resolves to a loopback address: 127.0.1.1; using 172.16.111.93 instead (on interface eth0)
// :: WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address
// :: WARN Utils: Service 'SparkUI' could not bind on port . Attempting port .
// :: WARN BLAS: Failed to load implementation from: com.github.fommil.netlib.NativeSystemBLAS
// :: WARN BLAS: Failed to load implementation from: com.github.fommil.netlib.NativeRefBLAS
Prediction for positive test example: 1.0
Prediction for negative test example: 0.0
```
