K8s 部署 Prometheus + Grafana

一、简介

1. Prometheus

- 一款开源的监控&报警&时间序列数据库的组合,起始是由 SoundCloud 公司开发的

- 基本原理是通过 HTTP 协议周期性抓取被监控组件的状态,这样做的好处是任意组件只要提供 HTTP 接口就可以接入监控系统,不需要任何 SDK 或者其他的集成过程。这样做非常适合虚拟化环境比如 VM 或者 Docker

- 输出被监控组件信息的 HTTP 接口被叫做 exporter 。目前互联网公司常用的组件大部分都有 exporter 可以直接使用,比如 Varnish、Haproxy、Nginx、MySQL、Linux 系统信息(包括磁盘、内存、CPU、网络等),具体支持的源看:https://github.com/prometheus

- 特点:

- 一个多维数据模型(时间序列由指标名称定义和设置键/值尺寸)

- 非常高效的存储,平均一个采样数据占 ~3.5bytes 左右,320 万的时间序列,每 30 秒采样,保持 60 天,消耗磁盘大概 228G

- 一种灵活的查询语言

- 不依赖分布式存储,单个服务器节点

- 时间集合通过 HTTP 上的 PULL 模型进行

- 通过中间网关支持推送时间

- 通过服务发现或静态配置发现目标

- 多种模式的图形和仪表板支持

2. Grafana

- 一个跨平台的开源的度量分析和可视化工具,可以通过将采集的数据查询然后可视化的展示,并及时通知

- 特点:

- 展示方式:快速灵活的客户端图表,面板插件有许多不同方式的可视化指标和日志,官方库中具有丰富的仪表盘插件,如热图、折线图、图表等多种展示方式

- 数据源:Graphite,InfluxDB,OpenTSDB,Prometheus,Elasticsearch,CloudWatch 和 KairosDB 等

- 通知提醒:以可视方式定义最重要指标的警报规则,Grafana 将不断计算并发送通知,在数据达到阈值时通过 Slack、PagerDuty 等获得通知

- 混合展示:在同一图表中混合使用不同的数据源,可以基于每个查询指定数据源,甚至自定义数据源

- 注释:使用来自不同数据源的丰富事件注释图表,将鼠标悬停在事件上会显示完整的事件元数据和标记

- 过滤器:Ad-hoc 过滤器允许动态创建新的键/值过滤器,这些过滤器会自动应用于使用该数据源的所有查询

3. 效果展示

二、部署

$ kubectl create ns ns-monitor$ kubectl create -f ...$ kubectl get all -n ns-monitorNAME READY STATUS RESTARTS AGEpod/node-exporter-rcbss 1/1 Running 0 4h41mpod/grafana-5567c66c9d-49b5w 1/1 Running 0 4h25mpod/prometheus-5ccc8db98f-lkwf5 1/1 Running 0 3h12mNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGEservice/node-exporter-service NodePort 10.43.75.152 <none> 9100:31672/TCP 4h41mservice/grafana-service NodePort 10.43.26.238 <none> 3000:32534/TCP 4h25mservice/prometheus-service NodePort 10.43.174.110 <none> 9090:31396/TCP 3h12m

没有配置

nodePort,端口随机生成

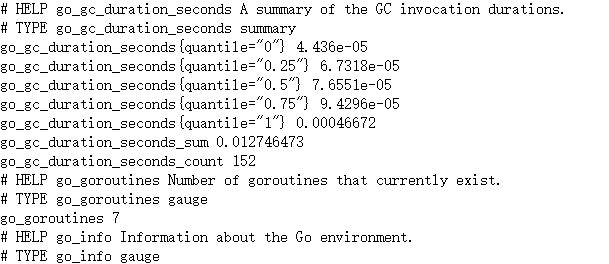

1. node-exporter

- 用于采集 k8s 集群中各个节点的物理指标,如 Memory、CPU 等。可以直接在每个物理节点直接安装

kind: DaemonSetapiVersion: apps/v1metadata:labels:app: node-exportername: node-exporternamespace: ns-monitorspec:revisionHistoryLimit: 10selector:matchLabels:app: node-exportertemplate:metadata:labels:app: node-exporterspec:containers:- name: node-exporterimage: prom/node-exporter:v0.16.0ports:- containerPort: 9100protocol: TCPname: httphostNetwork: true # 获得Node的物理指标信息hostPID: true # 获得Node的物理指标信息# tolerations: # Master节点# - effect: NoSchedule# operator: Exists---kind: ServiceapiVersion: v1metadata:labels:app: node-exportername: node-exporter-servicenamespace: ns-monitorspec:ports:- name: httpport: 9100nodePort: 31672protocol: TCPtype: NodePortselector:app: node-exporter

http://192.168.11.210:31672/metrics

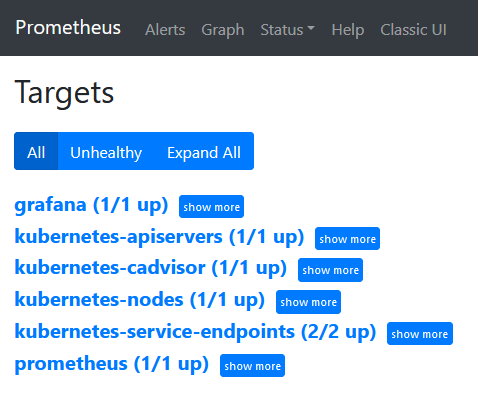

2. Prometheus

apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRolemetadata:name: prometheusrules:- apiGroups: [""] # "" indicates the core API groupresources:- nodes- nodes/proxy- services- endpoints- podsverbs:- get- watch- list- apiGroups:- extensionsresources:- ingressesverbs:- get- watch- list- nonResourceURLs: ["/metrics"]verbs:- get---apiVersion: v1kind: ServiceAccountmetadata:name: prometheusnamespace: ns-monitorlabels:app: prometheus---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRoleBindingmetadata:name: prometheussubjects:- kind: ServiceAccountname: prometheusnamespace: ns-monitorroleRef:kind: ClusterRolename: prometheusapiGroup: rbac.authorization.k8s.io---apiVersion: v1kind: ConfigMapmetadata:name: prometheus-confnamespace: ns-monitorlabels:app: prometheusdata:prometheus.yml: |-# my global configglobal:scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.# scrape_timeout is set to the global default (10s).# Alertmanager configurationalerting:alertmanagers:- static_configs:- targets:# - alertmanager:9093# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.rule_files:# - "first_rules.yml"# - "second_rules.yml"# A scrape configuration containing exactly one endpoint to scrape:# Here it's Prometheus itself.scrape_configs:# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.- job_name: 'prometheus'# metrics_path defaults to '/metrics'# scheme defaults to 'http'.static_configs:- targets: ['localhost:9090']- job_name: 'grafana'static_configs:- targets:- 'grafana-service.ns-monitor:3000'- job_name: 'kubernetes-apiservers'kubernetes_sd_configs:- role: endpoints# Default to scraping over https. If required, just disable this or change to# `http`.scheme: https# This TLS & bearer token file config is used to connect to the actual scrape# endpoints for cluster components. This is separate to discovery auth# configuration because discovery & scraping are two separate concerns in# Prometheus. The discovery auth config is automatic if Prometheus runs inside# the cluster. Otherwise, more config options have to be provided within the# <kubernetes_sd_config>.tls_config:ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt# If your node certificates are self-signed or use a different CA to the# master CA, then disable certificate verification below. Note that# certificate verification is an integral part of a secure infrastructure# so this should only be disabled in a controlled environment. You can# disable certificate verification by uncommenting the line below.## insecure_skip_verify: truebearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token# Keep only the default/kubernetes service endpoints for the https port. This# will add targets for each API server which Kubernetes adds an endpoint to# the default/kubernetes service.relabel_configs:- source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]action: keepregex: default;kubernetes;https# Scrape config for nodes (kubelet).## Rather than connecting directly to the node, the scrape is proxied though the# Kubernetes apiserver. This means it will work if Prometheus is running out of# cluster, or can't connect to nodes for some other reason (e.g. because of# firewalling).- job_name: 'kubernetes-nodes'# Default to scraping over https. If required, just disable this or change to# `http`.scheme: https# This TLS & bearer token file config is used to connect to the actual scrape# endpoints for cluster components. This is separate to discovery auth# configuration because discovery & scraping are two separate concerns in# Prometheus. The discovery auth config is automatic if Prometheus runs inside# the cluster. Otherwise, more config options have to be provided within the# <kubernetes_sd_config>.tls_config:ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crtbearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/tokenkubernetes_sd_configs:- role: noderelabel_configs:- action: labelmapregex: __meta_kubernetes_node_label_(.+)- target_label: __address__replacement: kubernetes.default.svc:443- source_labels: [__meta_kubernetes_node_name]regex: (.+)target_label: __metrics_path__replacement: /api/v1/nodes/${1}/proxy/metrics# Scrape config for Kubelet cAdvisor.## This is required for Kubernetes 1.7.3 and later, where cAdvisor metrics# (those whose names begin with 'container_') have been removed from the# Kubelet metrics endpoint. This job scrapes the cAdvisor endpoint to# retrieve those metrics.## In Kubernetes 1.7.0-1.7.2, these metrics are only exposed on the cAdvisor# HTTP endpoint; use "replacement: /api/v1/nodes/${1}:4194/proxy/metrics"# in that case (and ensure cAdvisor's HTTP server hasn't been disabled with# the --cadvisor-port=0 Kubelet flag).## This job is not necessary and should be removed in Kubernetes 1.6 and# earlier versions, or it will cause the metrics to be scraped twice.- job_name: 'kubernetes-cadvisor'# Default to scraping over https. If required, just disable this or change to# `http`.scheme: https# This TLS & bearer token file config is used to connect to the actual scrape# endpoints for cluster components. This is separate to discovery auth# configuration because discovery & scraping are two separate concerns in# Prometheus. The discovery auth config is automatic if Prometheus runs inside# the cluster. Otherwise, more config options have to be provided within the# <kubernetes_sd_config>.tls_config:ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crtbearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/tokenkubernetes_sd_configs:- role: noderelabel_configs:- action: labelmapregex: __meta_kubernetes_node_label_(.+)- target_label: __address__replacement: kubernetes.default.svc:443- source_labels: [__meta_kubernetes_node_name]regex: (.+)target_label: __metrics_path__replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor# Scrape config for service endpoints.## The relabeling allows the actual service scrape endpoint to be configured# via the following annotations:## * `prometheus.io/scrape`: Only scrape services that have a value of `true`# * `prometheus.io/scheme`: If the metrics endpoint is secured then you will need# to set this to `https` & most likely set the `tls_config` of the scrape config.# * `prometheus.io/path`: If the metrics path is not `/metrics` override this.# * `prometheus.io/port`: If the metrics are exposed on a different port to the# service then set this appropriately.- job_name: 'kubernetes-service-endpoints'kubernetes_sd_configs:- role: endpointsrelabel_configs:- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]action: keepregex: true- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]action: replacetarget_label: __scheme__regex: (https?)- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]action: replacetarget_label: __metrics_path__regex: (.+)- source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]action: replacetarget_label: __address__regex: ([^:]+)(?::\d+)?;(\d+)replacement: $1:$2- action: labelmapregex: __meta_kubernetes_service_label_(.+)- source_labels: [__meta_kubernetes_namespace]action: replacetarget_label: kubernetes_namespace- source_labels: [__meta_kubernetes_service_name]action: replacetarget_label: kubernetes_name# Example scrape config for probing services via the Blackbox Exporter.## The relabeling allows the actual service scrape endpoint to be configured# via the following annotations:## * `prometheus.io/probe`: Only probe services that have a value of `true`- job_name: 'kubernetes-services'metrics_path: /probeparams:module: [http_2xx]kubernetes_sd_configs:- role: servicerelabel_configs:- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]action: keepregex: true- source_labels: [__address__]target_label: __param_target- target_label: __address__replacement: blackbox-exporter.example.com:9115- source_labels: [__param_target]target_label: instance- action: labelmapregex: __meta_kubernetes_service_label_(.+)- source_labels: [__meta_kubernetes_namespace]target_label: kubernetes_namespace- source_labels: [__meta_kubernetes_service_name]target_label: kubernetes_name# Example scrape config for probing ingresses via the Blackbox Exporter.## The relabeling allows the actual ingress scrape endpoint to be configured# via the following annotations:## * `prometheus.io/probe`: Only probe services that have a value of `true`- job_name: 'kubernetes-ingresses'metrics_path: /probeparams:module: [http_2xx]kubernetes_sd_configs:- role: ingressrelabel_configs:- source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]action: keepregex: true- source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]regex: (.+);(.+);(.+)replacement: ${1}://${2}${3}target_label: __param_target- target_label: __address__replacement: blackbox-exporter.example.com:9115- source_labels: [__param_target]target_label: instance- action: labelmapregex: __meta_kubernetes_ingress_label_(.+)- source_labels: [__meta_kubernetes_namespace]target_label: kubernetes_namespace- source_labels: [__meta_kubernetes_ingress_name]target_label: kubernetes_name# Example scrape config for pods## The relabeling allows the actual pod scrape endpoint to be configured via the# following annotations:## * `prometheus.io/scrape`: Only scrape pods that have a value of `true`# * `prometheus.io/path`: If the metrics path is not `/metrics` override this.# * `prometheus.io/port`: Scrape the pod on the indicated port instead of the# pod's declared ports (default is a port-free target if none are declared).- job_name: 'kubernetes-pods'kubernetes_sd_configs:- role: podrelabel_configs:- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]action: keepregex: true- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]action: replacetarget_label: __metrics_path__regex: (.+)- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]action: replaceregex: ([^:]+)(?::\d+)?;(\d+)replacement: $1:$2target_label: __address__- action: labelmapregex: __meta_kubernetes_pod_label_(.+)- source_labels: [__meta_kubernetes_namespace]action: replacetarget_label: kubernetes_namespace- source_labels: [__meta_kubernetes_pod_name]action: replacetarget_label: kubernetes_pod_name---apiVersion: v1kind: ConfigMapmetadata:name: prometheus-rulesnamespace: ns-monitorlabels:app: prometheusdata:cpu-usage.rule: |groups:- name: NodeCPUUsagerules:- alert: NodeCPUUsageexpr: (100 - (avg by (instance) (irate(node_cpu{name="node-exporter",mode="idle"}[5m])) * 100)) > 75for: 2mlabels:severity: "page"annotations:summary: "{{$labels.instance}}: High CPU usage detected"description: "{{$labels.instance}}: CPU usage is above 75% (current value is: {{ $value }})"---apiVersion: v1kind: PersistentVolumemetadata:name: "prometheus-data-pv"labels:name: prometheus-data-pvrelease: stablespec:capacity:storage: 5GiaccessModes:- ReadWriteOncepersistentVolumeReclaimPolicy: Recyclenfs:path: /nfs/prometheus/dataserver: 192.168.11.210---apiVersion: v1kind: PersistentVolumeClaimmetadata:name: prometheus-data-pvcnamespace: ns-monitorspec:accessModes:- ReadWriteOnceresources:requests:storage: 5Giselector:matchLabels:name: prometheus-data-pvrelease: stable---kind: DeploymentapiVersion: apps/v1metadata:labels:app: prometheusname: prometheusnamespace: ns-monitorspec:replicas: 1revisionHistoryLimit: 10selector:matchLabels:app: prometheustemplate:metadata:labels:app: prometheusspec:serviceAccountName: prometheussecurityContext:runAsUser: 0containers:- name: prometheusimage: prom/prometheus:latestimagePullPolicy: IfNotPresentvolumeMounts:- mountPath: /prometheusname: prometheus-data-volume- mountPath: /etc/prometheus/prometheus.ymlname: prometheus-conf-volumesubPath: prometheus.yml- mountPath: /etc/prometheus/rulesname: prometheus-rules-volumeports:- containerPort: 9090protocol: TCPvolumes:- name: prometheus-data-volumepersistentVolumeClaim:claimName: prometheus-data-pvc- name: prometheus-conf-volumeconfigMap:name: prometheus-conf- name: prometheus-rules-volumeconfigMap:name: prometheus-rulestolerations:- key: node-role.kubernetes.io/mastereffect: NoSchedule---kind: ServiceapiVersion: v1metadata:annotations:prometheus.io/scrape: 'true'labels:app: prometheusname: prometheus-servicenamespace: ns-monitorspec:ports:- port: 9090targetPort: 9090selector:app: prometheustype: NodePort

http://192.168.11.210:31396/targets

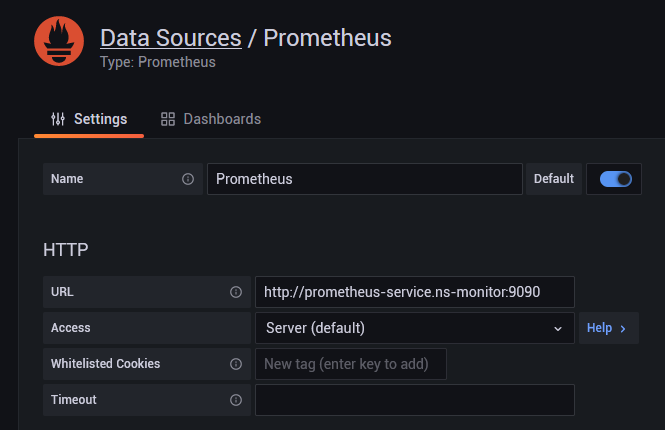

3. Grafana

apiVersion: v1kind: PersistentVolumemetadata:name: "grafana-data-pv"labels:name: grafana-data-pvrelease: stablespec:capacity:storage: 5GiaccessModes:- ReadWriteOncepersistentVolumeReclaimPolicy: Recyclenfs:path: /nfs/grafana/dataserver: 192.168.11.210---apiVersion: v1kind: PersistentVolumeClaimmetadata:name: grafana-data-pvcnamespace: ns-monitorspec:accessModes:- ReadWriteOnceresources:requests:storage: 5Giselector:matchLabels:name: grafana-data-pvrelease: stable---kind: DeploymentapiVersion: apps/v1metadata:labels:app: grafananame: grafananamespace: ns-monitorspec:replicas: 1revisionHistoryLimit: 10selector:matchLabels:app: grafanatemplate:metadata:labels:app: grafanaspec:securityContext:runAsUser: 0containers:- name: grafanaimage: grafana/grafana:latestimagePullPolicy: IfNotPresentenv:- name: GF_AUTH_BASIC_ENABLEDvalue: "true"- name: GF_AUTH_ANONYMOUS_ENABLEDvalue: "false"readinessProbe:httpGet:path: /loginport: 3000volumeMounts:- mountPath: /var/lib/grafananame: grafana-data-volumeports:- containerPort: 3000protocol: TCPvolumes:- name: grafana-data-volumepersistentVolumeClaim:claimName: grafana-data-pvc---kind: ServiceapiVersion: v1metadata:labels:app: grafananame: grafana-servicenamespace: ns-monitorspec:ports:- port: 3000targetPort: 3000selector:app: grafanatype: NodePort

配置数据源

Import dashboard from file(非必须)

https://files.cnblogs.com/files/lb477/Kubernetes-Pod-Resources.json

参考:https://www.jianshu.com/p/ac8853927528

K8s 部署 Prometheus + Grafana的更多相关文章

- k8s实战之部署Prometheus+Grafana可视化监控告警平台

写在前面 之前部署web网站的时候,架构图中有一环节是监控部分,并且搭建一套有效的监控平台对于运维来说非常之重要,只有这样才能更有效率的保证我们的服务器和服务的稳定运行,常见的开源监控软件有好几种,如 ...

- k8b部署prometheus+grafana

来源: https://juejin.im/post/5c36054251882525a50bbdf0 https://github.com/redhatxl/k8s-prometheus-grafa ...

- 部署Prometheus+Grafana监控

Prometheus 1.不是很友好,各种配置都手写 2.对docker和k8s监控有成熟解决方案 Prometheus(普罗米修斯) 是一个最初在SoudCloud上构建的监控系统,开源项目,拥有非 ...

- kubenetes部署prometheus+grafana

文章目录 环境介绍 创建node-exporter 创建Prometheus 创建Grafana 测试 环境介绍 # 关于k8s的集群部署,可以查看我其他博客 [root@master ~]# cat ...

- k8s部署prometheus

https://www.kancloud.cn/huyipow/prometheus/527092 https://songjiayang.gitbooks.io/prometheus/content ...

- Rancher2.x 一键式部署 Prometheus + Grafana 监控 Kubernetes 集群

目录 1.Prometheus & Grafana 介绍 2.环境.软件准备 3.Rancher 2.x 应用商店 4.一键式部署 Prometheus 5.验证 Prometheus + G ...

- 群晖-使用docker套件部署Prometheus+Grafana

Docker 部署 Prometheus 说明: 先在群辉管理界面安装好docker套件,修改一下镜像源(更快一点) 所需容器如下 Prometheus Server(普罗米修斯监控主服务器 ) No ...

- Kubernetes部署Prometheus+Grafana(非存储持久化方式部署)

1.在master节点处新建一个文件夹,用于保存下载prometheus+granfana的yaml文件 mkdir /root/prometheus cd /root/prometheus git ...

- 【Linux】【Services】【SaaS】Docker+kubernetes(12. 部署prometheus/grafana/Influxdb实现监控)

1.简介 1.1. 官方网站: promethos:https://prometheus.io/ grafana:https://grafana.com/ 1.2. 架构图 2. 环境 2.1. 机器 ...

随机推荐

- 【近取 key】Alpha 阶段任务分配

项目 内容 这个作业属于哪个课程 2021春季计算机学院软件工程(罗杰 任健) 这个作业的要求在哪里 alpha阶段初始任务分配 我在这个课程的目标是 进一步提升工程化开发能力,积累团队协作经验,熟悉 ...

- CRM助力企业迎接数字化浪潮

去年,国家发展改革委官网发布'数字化转型伙伴行动'倡议.倡议政府和社会各界联合起来,共同构建多元化的联合推荐机制,带动全行业数字化转型,构建数字化产业链,培育数字化生态,形成"数字引领.抗击 ...

- Spring context的refresh函数执行过程分析

今天看了一下Spring Boot的run函数运行过程,发现它调用了Context中的refresh函数.所以先分析一下Spring context的refresh过程,然后再分析Spring boo ...

- 基于多IP地址Web服务

[Centos7.4版本] !!!测试环境我们首关闭防火墙和selinux [root@localhost ~]# systemctl stop firewalld [root@localhost ~ ...

- Linux_配置匿名访问FTP服务

[RHEL8]-FTPserver:[Centos7]-FTPclient !!!测试环境我们首关闭防火墙和selinux(FTPserver和FTPclient都需要) [root@localhos ...

- 搭建LNMP环境部署Wordpress博客

!!!首先要做的就是关闭系统的防火墙以及selinux: #systemctl stop firewalld #systemctl disable firewalld #sed -ri 's/^(SE ...

- Linux服务之nginx服务篇一(概念)

nginx官网:http://nginx.org/ 一. nginx和apache的区别 Nginx: 1.轻量级,采用 C 进行编写,同样的 web 服务,会占用更少的内存及资源. 2.抗并发,ng ...

- S6 文件备份与压缩命令

6.1 tar:打包备份 6.2 gzip:压缩或解压文件 6.3-4 zip.unzip 6.5 scp:远程文件复制 6.6 rsync:文件同步工具

- PHP相关session的知识

由于http协议是一种无状态协议,所以没有办法在多个页面间保持一些信息.例如,用户的登录状态,不可能让用户每浏览一个页面登录一次.session就是为了解决一些需要在多页面间持久保持一种状态的机制.P ...

- C语言关于指针函数与函数指针个人理解

1,函数指针 顾名思义,即指向函数的指针,功能与其他指针相同,该指针变量保存的是所指向函数的地址. 假如是void类型函数指针定义方式可以是 void (*f)(参数列表);亦可以先用 typedef ...