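Kafka cluster (with ZooKeeper)

Preparing the deployment environment

Kafka cluster deployment

IP address   Hostname        Installed software

10.0.0.131   mcwkafka01      zookeeper, kafka

10.0.0.132   mcwkafka02      zookeeper, kafka

10.0.0.133   mcwkafka03      zookeeper, kafka

10.0.0.131   kafka-manager   kafka-manager

Check the firewall and SELinux; both are already off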

[root@mcwkafka01 ~]$ /etc/init.d/iptables status

-bash: /etc/init.d/iptables: No such file or directory

[root@mcwkafka01 ~]$ getenforce

Disabled

[root@mcwkafka01 ~]$ systemctl status firewalld.service

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; enabled; vendor preset: enabled)

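Active: inactive (dead) since Tue 2022-02-08 01:35:17 CST; 35s ago

Note that the unit is stopped but still "enabled", so firewalld will start again after a reboot unless it is also disabled with systemctl disable firewalld.

Set up hosts resolution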

[root@mcwkafka01 ~]$ tail -3 /etc/hosts

10.0.0.131 mcwkafka01

10.0.0.132 mcwkafka02

10.0.0.133 mcwkafka03

Install the JDK on all three nodes

[root@mcwkafka02 ~]$ unzip jdk1.8.0_202.zip

[root@mcwkafka02 ~]$ ls

anaconda-ks.cfg jdk1.8.0_202 jdk1.8.0_202.zip mcw4

[root@mcwkafka02 ~]$

[root@mcwkafka02 ~]$ mv jdk1.8.0_202 jdk

[root@mcwkafka02 ~]$ ls

anaconda-ks.cfg jdk jdk1.8.0_202.zip mcw4

[root@mcwkafka02 ~]$ mv jdk /opt/

[root@mcwkafka02 ~]$ vim /etc/profile

[root@mcwkafka02 ~]$ source /etc/profile

[root@mcwkafka02 ~]$ java -version

java version "1.8.0_202"

Java(TM) SE Runtime Environment (build 1.8.0_202-b08)

Java HotSpot(TM) 64-Bit Server VM (build 25.202-b08, mixed mode)

[root@mcwkafka02 ~]$
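The transcript edits /etc/profile with vim but does not show what was added. A minimal sketch of the lines that would produce the result above, assuming the JDK lives in /opt/jdk as in the mv command; the CLASSPATH value is inferred from the java command line that appears later in the ps output, so treat it as an assumption rather than the author's exact file:

```shell
# Assumed additions to /etc/profile (not shown in the original transcript).
# /opt/jdk is the directory the JDK was moved to above.
export JAVA_HOME=/opt/jdk
export PATH="$JAVA_HOME/bin:$PATH"
# Inferred from the classpath tail visible in the later ps output.
export CLASSPATH=".:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/tools.jar"
```

After source /etc/profile, java -version should resolve to /opt/jdk/bin/java.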

Install and configure ZooKeeper (perform the same steps on all three machines)

1) Install ZooKeeper on the three nodes

[root@mcwkafka01 /usr/local/src]$ cd /usr/local/src/

[root@mcwkafka01 /usr/local/src]$ wget http://apache.forsale.plus/zookeeper/zookeeper-3.5.9/apache-zookeeper-3.5.9-bin.tar.gz

[root@mcwkafka01 /usr/local/src]$ ls

apache-zookeeper-3.5.9-bin.tar.gz

[root@mcwkafka01 /usr/local/src]$ tar xf apache-zookeeper-3.5.9-bin.tar.gz

[root@mcwkafka01 /usr/local/src]$ mkdir /data

[root@mcwkafka01 /usr/local/src]$ ls

apache-zookeeper-3.5.9-bin apache-zookeeper-3.5.9-bin.tar.gz

[root@mcwkafka01 /usr/local/src]$ mv apache-zookeeper-3.5.9-bin /data/zk

Edit the ZooKeeper configuration file on all three nodes so that it contains the following:

[root@mcwkafka01 /usr/local/src]$ mkdir -p /data/zk/data

[root@mcwkafka01 /usr/local/src]$ ls /data/zk/

bin conf data docs lib LICENSE.txt NOTICE.txt README.md README_packaging.txt

[root@mcwkafka01 /usr/local/src]$ ls /data/zk/conf/

configuration.xsl log4j.properties zoo_sample.cfg

[root@mcwkafka01 /usr/local/src]$ ls /data/bin

ls: cannot access /data/bin: No such file or directory

[root@mcwkafka01 /usr/local/src]$ ls /data/zk/bin/

README.txt zkCli.cmd zkEnv.cmd zkServer.cmd zkServer.sh zkTxnLogToolkit.sh

zkCleanup.sh zkCli.sh zkEnv.sh zkServer-initialize.sh zkTxnLogToolkit.cmd

[root@mcwkafka01 /usr/local/src]$ cp /data/zk/conf/zoo_sample.cfg /data/zk/conf/zoo_sample.cfg.bak

[root@mcwkafka01 /usr/local/src]$ cp /data/zk/conf/zoo_sample.cfg /data/zk/conf/zoo.cfg

[root@mcwkafka01 /usr/local/src]$ vim /data/zk/conf/zoo.cfg ## remove the existing content and replace it with the following

[root@mcwkafka01 /usr/local/src]$ cat /data/zk/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zk/data/zookeeper

dataLogDir=/data/zk/data/logs

clientPort=2181

maxClientCnxns=60

autopurge.snapRetainCount=3

autopurge.purgeInterval=1

server.1=10.0.0.131:2888:3888

server.2=10.0.0.132:2888:3888

server.3=10.0.0.133:2888:3888

[root@mcwkafka01 /usr/local/src]$

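===============

Configuration parameters:

server.id=host:port:port identifies each ZooKeeper server in the ensemble. Every server is part of the cluster and needs to know about the others; it reads that information from the "server.id=host:port:port" lines.

In each server's data directory (the directory named by the dataDir parameter), create a file called myid. The file contains a single line holding that server's own id.

For example, server "1" writes "1" into its myid file. The id must be unique among the servers in the ensemble and lie between 1 and 255.

In these lines, host is the server's IP address (10.0.0.131 for server.1). The first port (2888) is the one followers use to connect to the leader; the second port (3888) is used for leader election.

So three ports matter when configuring the cluster: clientPort=2181, port 2888 and port 3888.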
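The link between the server.N lines and the myid file can be scripted so that each node derives its own id from zoo.cfg instead of having it typed by hand. A minimal sketch, assuming IPv4 addresses in the server.N entries; the function name myid_for_ip is hypothetical and not part of ZooKeeper:

```shell
# Print the id N of the "server.N=<ip>:port:port" line that matches the given
# IPv4 address. myid_for_ip is a hypothetical helper, not a ZooKeeper tool.
myid_for_ip() {
  local ip=$1 cfg=$2
  awk -F'[.=:]' -v ip="$ip" '
    /^server\.[0-9]+=/ {
      # After splitting on ".", "=" and ":" the fields are:
      # "server", N, then the four octets of the address.
      if ($3 "." $4 "." $5 "." $6 == ip) { print $2; exit }
    }' "$cfg"
}

# Example use on a node (paths taken from this walkthrough):
#   myid_for_ip 10.0.0.132 /data/zk/conf/zoo.cfg > /data/zk/data/zookeeper/myid
```

Because every node shares the same zoo.cfg, the same command with the node's own address yields a different, unique id on each machine.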

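===============

Note: dataLogDir in zoo.cfg only controls where ZooKeeper writes its transaction logs. To move the server's own log output to the same place, add "dataLogDir=/data/zk/data/logs" to zoo.cfg and also modify zkServer.sh, at around line 125, as follows: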

[root@mcwkafka03 /usr/local/src]$ cp /data/zk/bin/zkServer.sh /data/zk/bin/zkServer.sh.bak

[root@mcwkafka03 /usr/local/src]$ vim /data/zk/bin/zkServer.sh

.......

125 ZOO_LOG_DIR="$($GREP "^[[:space:]]*dataLogDir" "$ZOOCFG" | sed -e 's/.*=//')" # add this line

126 if [ ! -w "$ZOO_LOG_DIR" ] ; then

127 mkdir -p "$ZOO_LOG_DIR"

128 fi

[root@mcwkafka03 /usr/local/src]$ diff /data/zk/bin/zkServer.sh /data/zk/bin/zkServer.sh.bak

125d124

< ZOO_LOG_DIR="$($GREP "^[[:space:]]*dataLogDir" "$ZOOCFG" | sed -e 's/.*=//')"

Before starting the ZooKeeper service, create the myid file on each of the three ZooKeeper nodes, as follows:

[root@mcwkafka01 /usr/local/src]$ mkdir /data/zk/data/zookeeper/

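[root@mcwkafka01 /usr/local/src]$ echo 1 > /data/zk/data/zookeeper/myid

The myid values on the other two nodes are 2 and 3. (The myid must be different on every node; the configuration file and everything else are identical.)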

[root@mcwkafka02 /usr/local/src]$ echo 2 > /data/zk/data/zookeeper/myid

[root@mcwkafka03 /usr/local/src]$ echo 3 > /data/zk/data/zookeeper/myid

Start the ZooKeeper service on all three nodes

[root@mcwkafka01 /usr/local/src]$ /data/zk/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /data/zk/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@mcwkafka01 /usr/local/src]$ ps -ef|grep zookeeper

root 6997 1 58 21:53 pts/0 00:00:25 /opt/jdk/bin/java -Dzookeeper.log.dir=/data/zk/bin/../logs -Dzookeeper.log.file=zookeeper-root-server-mcwkafka01.log -Dzookeepe.root.logger=INFO,CONSOLE -XX:+HeapDumpOnOutOfMemoryError -XX:OnOutOfMemoryError=kill -9 %p -cp /data/zk/bin/../zookeeper-server/target/classes:/data/zk/bin/../build/classes:/data/zk/bin/../zookeeper-server/target/lib/*.jar:/data/zk/bin/../build/lib/*.jar:/data/zk/bin/../lib/zookeeper-jute-3.5.9.jar:/data/zk/bin/../lib/zookeeper-3.5.9.jar:/data/zk/bin/../lib/slf4j-log4j12-1.7.25.jar:/data/zk/bin/../lib/slf4j-api-1.7.25.jar:/data/zk/bin/../lib/netty-transport-native-unix-common-4.1.50.Final.jar:/data/zk/bin/../lib/netty-transport-native-epoll-4.1.50.Final.jar:/data/zk/bin/../lib/netty-transport-4.1.50.Final.jar:/data/zk/bin/../lib/netty-resolver-4.1.50.Final.jar:/data/zk/bin/../lib/netty-handler-4.1.50.Final.jar:/data/zk/bin/../lib/netty-common-4.1.50.Final.jar:/data/zk/bin/../lib/netty-codec-4.1.50.Final.jar:/data/zk/bin/../lib/netty-buffer-4.1.50.Final.jar:/data/zk/bin/../lib/log4j-1.2.17.jar:/data/zk/bin/../lib/json-simple-1.1.1.jar:/data/zk/bin/../lib/jline-2.14.6.jar:/data/zk/bin/../lib/jetty-util-ajax-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-util-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-servlet-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-server-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-security-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-io-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-http-9.4.35.v20201120.jar:/data/zk/bin/../lib/javax.servlet-api-3.1.0.jar:/data/zk/bin/../lib/jackson-databind-2.10.5.1.jar:/data/zk/bin/../lib/jackson-core-2.10.5.jar:/data/zk/bin/../lib/jackson-annotations-2.10.5.jar:/data/zk/bin/../lib/commons-cli-1.2.jar:/data/zk/bin/../lib/audience-annotations-0.5.0.jar:/data/zk/bin/../zookeeper-*.jar:/data/zk/bin/../zookeeper-server/src/main/resources/lib/*.jar:/data/zk/bin/../conf:.:/opt/jdk/lib:/opt/jdk/jre/lib:/opt/jdk/lib/tools.jar -Xmx1000m 
-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.local.only=false org.apache.zookeeper.server.quorum.QuorumPeerMain /data/zk/bin/../conf/zoo.cfg

root 7033 4049 0 21:54 pts/0 00:00:00 grep --color=auto zookeeper

[root@mcwkafka01 /usr/local/src]$ lsof -i:2181

-bash: lsof: command not found

Check the ZooKeeper role of each of the three nodes

[root@mcwkafka01 /usr/local/src]$ /data/zk/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /data/zk/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

[root@mcwkafka02 /usr/local/src]$ /data/zk/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /data/zk/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

[root@mcwkafka03 /usr/local/src]$ /data/zk/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /data/zk/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader

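2) Install Kafka (same steps on all three nodes)

Download URL: http://mirrors.shu.edu.cn/apache/kafka/1.1.0/kafka_2.11-1.1.0.tgz

Note that the archive actually listed and unpacked below is kafka_2.11-1.0.1.tgz, so this walkthrough runs Kafka 1.0.1 rather than the 1.1.0 named in the URL.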

[root@mcwkafka01 /usr/local/src]$ wget http://mirrors.shu.edu.cn/apache/kafka/1.1.0/kafka_2.11-1.1.0.tgz

[root@mcwkafka01 /usr/local/src]$ ls

apache-zookeeper-3.5.9-bin.tar.gz kafka_2.11-1.0.1.tgz

[root@mcwkafka01 /usr/local/src]$ tar xf kafka_2.11-1.0.1.tgz

[root@mcwkafka01 /usr/local/src]$ mv kafka_2.11-1.0.1 /data/kafka

Go to the config directory under kafka and edit the configuration file server.properties:

[root@mcwkafka03 /usr/local/src]$ cp /data/kafka/config/server.properties /data/kafka/config/server.properties.bak

[root@mcwkafka03 /usr/local/src]$ ls /data/kafka/

bin config libs LICENSE NOTICE site-docs

[root@mcwkafka03 /usr/local/src]$ ls /data/kafka/config/

connect-console-sink.properties connect-file-sink.properties connect-standalone.properties producer.properties tools-log4j.properties

connect-console-source.properties connect-file-source.properties consumer.properties server.properties zookeeper.properties

connect-distributed.properties connect-log4j.properties log4j.properties server.properties.bak

[root@mcwkafka03 /usr/local/src]$ ls /data/kafka/bin/

connect-distributed.sh kafka-consumer-groups.sh kafka-reassign-partitions.sh kafka-streams-application-reset.sh zookeeper-server-start.sh

connect-standalone.sh kafka-consumer-perf-test.sh kafka-replay-log-producer.sh kafka-topics.sh zookeeper-server-stop.sh

kafka-acls.sh kafka-delete-records.sh kafka-replica-verification.sh kafka-verifiable-consumer.sh zookeeper-shell.sh

kafka-broker-api-versions.sh kafka-log-dirs.sh kafka-run-class.sh kafka-verifiable-producer.sh

kafka-configs.sh kafka-mirror-maker.sh kafka-server-start.sh trogdor.sh

kafka-console-consumer.sh kafka-preferred-replica-election.sh kafka-server-stop.sh windows

kafka-console-producer.sh kafka-producer-perf-test.sh kafka-simple-consumer-shell.sh zookeeper-security-migration.sh

[root@mcwkafka03 /usr/local/src]$

[root@mcwkafka01 /usr/local/src]$ vim /data/kafka/config/server.properties

[root@mcwkafka01 /usr/local/src]$ cat /data/kafka/config/server.properties

broker.id=0

delete.topic.enable=true

listeners=PLAINTEXT://10.0.0.131:9092

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/data/kafka/data

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.flush.interval.messages=10000

log.flush.interval.ms=1000

log.retention.hours=168

log.retention.bytes=1073741824

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.0.0.131:2181,10.0.0.132:2181,10.0.0.133:2181

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

On the other two nodes only the following two lines of server.properties need to change; everything else is the same

[root@mcwkafka02 /usr/local/src]$ egrep "listeners|broker" /data/kafka/config/server.properties

broker.id=1

listeners=PLAINTEXT://10.0.0.132:9092

[root@mcwkafka03 /usr/local/src]$ egrep "listeners|broker" /data/kafka/config/server.properties

broker.id=2

listeners=PLAINTEXT://10.0.0.133:9092

Start the Kafka service on all three nodes

[root@mcwkafka01 /usr/local/src]$ nohup /data/kafka/bin/kafka-server-start.sh /data/kafka/config/server.properties >/dev/null 2>&1 &

[1] 7224

[root@mcwkafka01 /usr/local/src]$ ls

apache-zookeeper-3.5.9-bin.tar.gz kafka_2.11-1.0.1.tgz

[root@mcwkafka01 /usr/local/src]$ lsof -i:9092

[root@mcwkafka01 /usr/local/src]$ ss -lntup|grep 9092

[root@mcwkafka01 /usr/local/src]$

[root@mcwkafka03 /usr/local/src]$ ss -lntup|grep 9092

tcp LISTEN 0 50 ::ffff:10.0.0.133:9092 :::* users:(("java",pid=5992,fd=103))

[root@mcwkafka02 /usr/local/src]$ ss -lntup|grep 9092

tcp LISTEN 0 50 ::ffff:10.0.0.132:9092 :::* users:(("java",pid=6766,fd=103))

Verify the service

Run the following on any one of the nodes

[root@mcwkafka01 /usr/local/src]$ /data/kafka/bin/kafka-topics.sh --create --zookeeper 10.0.0.131:2181,10.0.0.132:2181,10.0.0.133:2181 --replication-factor 1 --partitions 1 --topic test

Created topic "test".

[root@mcwkafka02 /usr/local/src]$ /data/kafka/bin/kafka-topics.sh --list --zookeeper 10.0.0.131:2181,10.0.0.132:2181,10.0.0.133:2181

test

At this point the Kafka cluster environment is fully deployed!

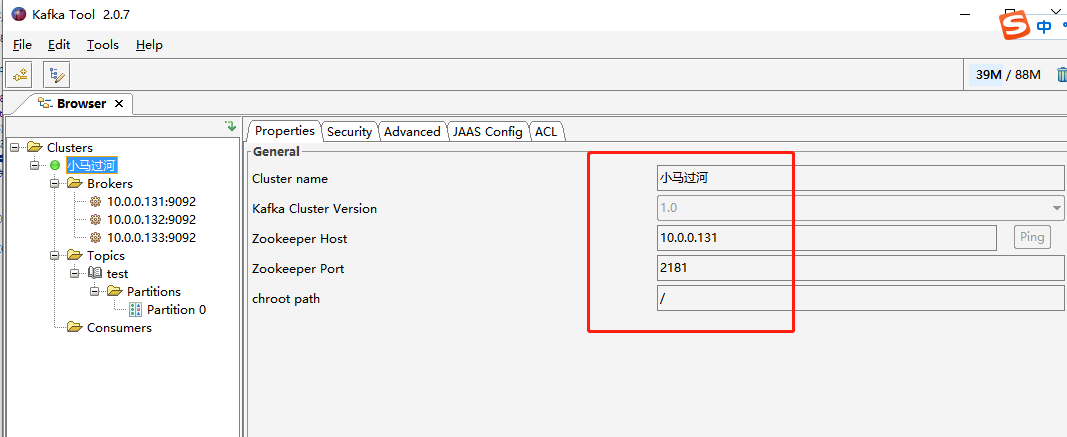

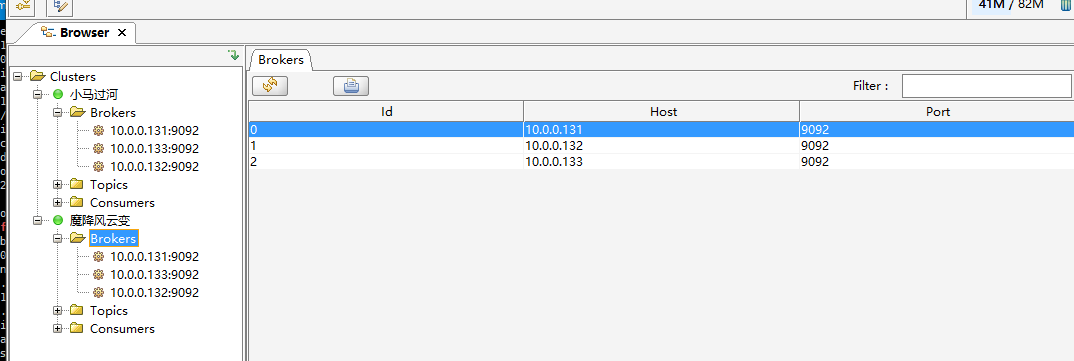

客户端连接工具

连接配置:

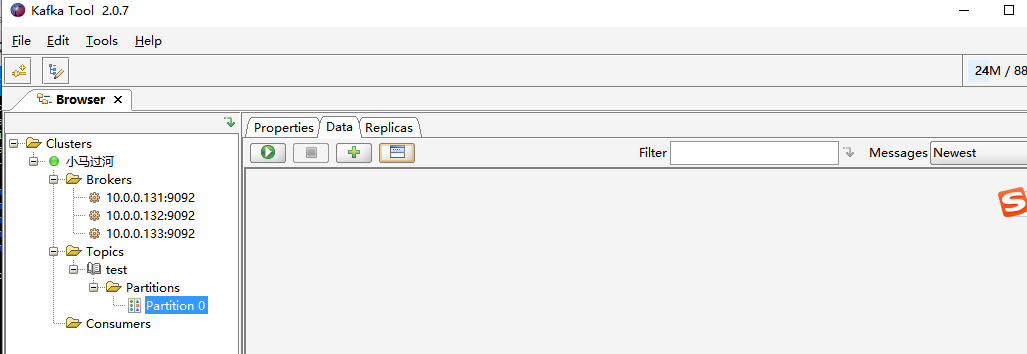

连接查看,之前命令行创建的test

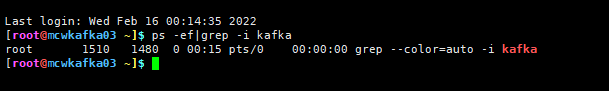

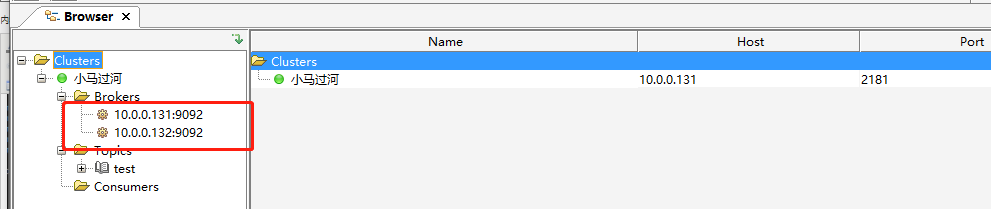

某次网络不通带来的问题Error contacting service. It is probably not running.

当重新启动虚拟机后,有个节点进程挂了

可以看到少了个节点

[root@mcwkafka02 ~]$ /data/zk/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /data/zk/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Error contacting service. It is probably not running.

[root@mcwkafka02 ~]$ ping mcwkafka02

PING mcwkafka02 (10.0.0.132) 56(84) bytes of data.

^C

--- mcwkafka02 ping statistics ---

9 packets transmitted, 0 received, 100% packet loss, time 8009ms

[root@mcwkafka02 ~]$ systemctl restart network

[root@mcwkafka02 ~]$ vim /etc/resolv.conf

[root@mcwkafka02 ~]$ ping mcwkafka02

PING mcwkafka02 (10.0.0.132) 56(84) bytes of data.

64 bytes from mcwkafka02 (10.0.0.132): icmp_seq=1 ttl=64 time=0.106 ms

^C

--- mcwkafka02 ping statistics ---

[root@mcwkafka02 ~]$ /data/zk/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /data/zk/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader

[root@mcwkafka02 ~]$

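There were still only two brokers. A later check showed that one Kafka broker process had died as well; after Kafka was restarted on 10.0.0.132, the broker count went from 2 to 3.

Using the Kafka command line

1) Create a topic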

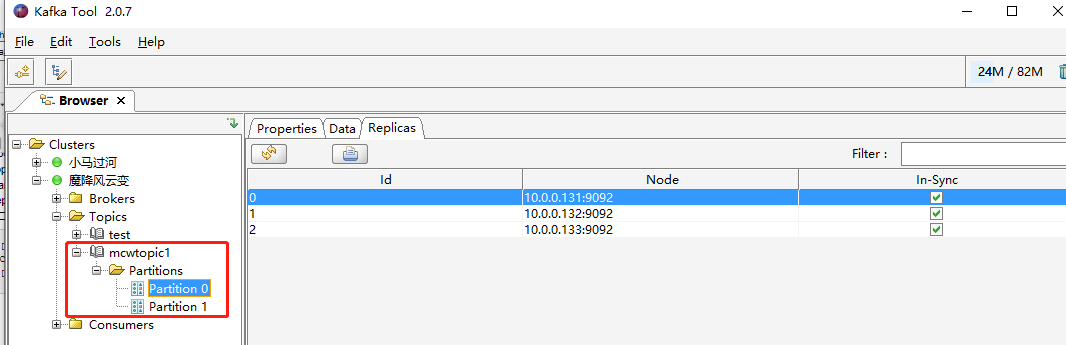

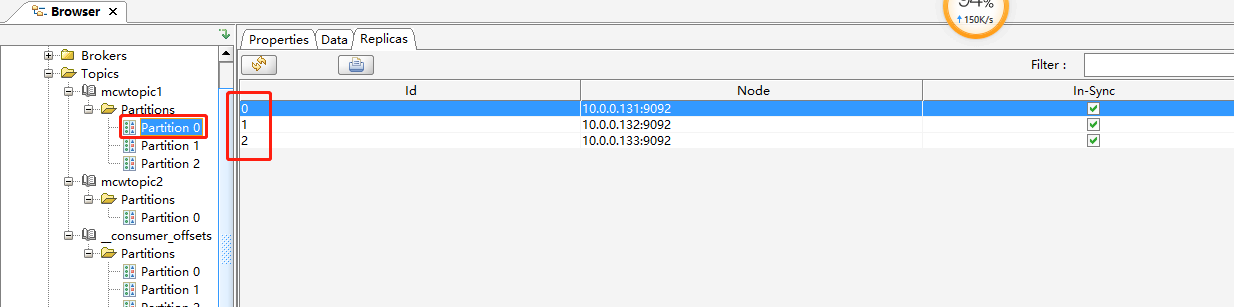

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --create --zookeeper mcwkafka02:2181 --topic mcwtopic1 --partitions 2 --replication-factor 3

Created topic "mcwtopic1".

[root@mcwkafka01 ~]$

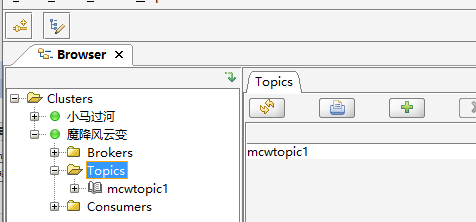

Right-click and refresh to see the topic that was just created

2) List all topics

mcwkafka02 is the ZooKeeper leader node

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --create --zookeeper mcwkafka02:2181 --topic mcwtopic1 --partitions 2 --replication-factor 3

Created topic "mcwtopic1".

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --zookeeper mcwkafka02:2181 --list

mcwtopic1

test

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --list --bootstrap-server mcwkafka02:9092

Exception in thread "main" joptsimple.UnrecognizedOptionException: bootstrap-server is not a recognized option

at joptsimple.OptionException.unrecognizedOption(OptionException.java:108)

at joptsimple.OptionParser.handleLongOptionToken(OptionParser.java:510)

at joptsimple.OptionParserState$2.handleArgument(OptionParserState.java:56)

at joptsimple.OptionParser.parse(OptionParser.java:396)

at kafka.admin.TopicCommand$TopicCommandOptions.<init>(TopicCommand.scala:352)

at kafka.admin.TopicCommand$.main(TopicCommand.scala:44)

at kafka.admin.TopicCommand.main(TopicCommand.scala)

The error is expected on this Kafka version: here kafka-topics.sh only accepts --zookeeper. The --bootstrap-server option for kafka-topics.sh was added in later releases (Kafka 2.2 onward).

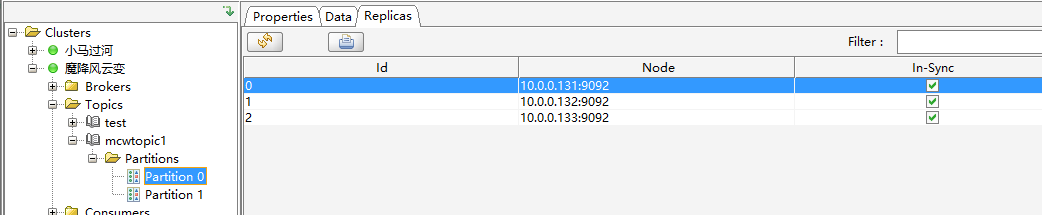

3) Show topic details

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --describe --zookeeper mcwkafka02:2181 --topic mcwtopic1 # describe a specific topic

Topic:mcwtopic1 PartitionCount:2 ReplicationFactor:3 Configs:

Topic: mcwtopic1 Partition: 0 Leader: 0 Replicas: 0,1,2 Isr: 0,1,2

Topic: mcwtopic1 Partition: 1 Leader: 1 Replicas: 1,2,0 Isr: 1,2,0

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --describe --zookeeper mcwkafka02:2181 --topic xxx # describe a topic that does not exist

[root@mcwkafka01 ~]$

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --describe --zookeeper mcwkafka02:2181 # with no --topic, all topics are described

Topic:mcwtopic1 PartitionCount:2 ReplicationFactor:3 Configs:

Topic: mcwtopic1 Partition: 0 Leader: 0 Replicas: 0,1,2 Isr: 0,1,2

Topic: mcwtopic1 Partition: 1 Leader: 1 Replicas: 1,2,0 Isr: 1,2,0

Topic:test PartitionCount:1 ReplicationFactor:1 Configs:

Topic: test Partition: 0 Leader: 0 Replicas: 0 Isr: 0

[root@mcwkafka01 ~]$
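The Replicas and Isr columns in the describe output are what tell you whether a partition is healthy: every replica listed under Replicas should also appear under Isr. A minimal sketch that filters describe output for partitions whose ISR is smaller than the replica list; the function name under_replicated is hypothetical, and it only assumes the line layout shown in the transcript above:

```shell
# Read "kafka-topics.sh --describe" output on stdin and print every partition
# whose in-sync replica list is shorter than its replica list.
# under_replicated is a hypothetical helper name.
under_replicated() {
  awk '
    $3 == "Partition:" {
      replicas = ""; isr = ""
      for (i = 1; i < NF; i++) {
        if ($i == "Replicas:") replicas = $(i + 1)
        if ($i == "Isr:")      isr = $(i + 1)
      }
      # split() returns the number of comma-separated broker ids.
      if (split(isr, a, ",") < split(replicas, b, ","))
        print $2, "partition", $4, "replicas=" replicas, "isr=" isr
    }'
}

# Example use (command taken from this walkthrough):
#   /data/kafka/bin/kafka-topics.sh --describe --zookeeper mcwkafka02:2181 | under_replicated
```

With the output shown above the filter prints nothing, because every partition has all of its replicas in sync.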

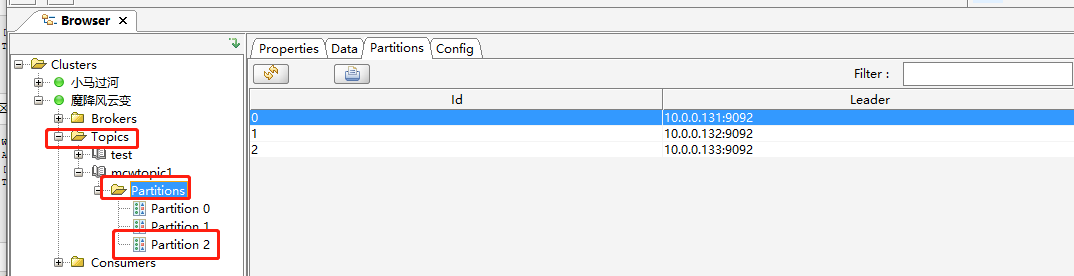

4) Modify a topic

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --describe --zookeeper mcwkafka02:2181 --topic mcwtopic1

Topic:mcwtopic1 PartitionCount:2 ReplicationFactor:3 Configs:

Topic: mcwtopic1 Partition: 0 Leader: 0 Replicas: 0,1,2 Isr: 0,1,2

Topic: mcwtopic1 Partition: 1 Leader: 1 Replicas: 1,2,0 Isr: 1,2,0

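Change the partition count to 3. The command itself was not captured in this transcript; the output below is consistent with running kafka-topics.sh --alter --zookeeper mcwkafka02:2181 --topic mcwtopic1 --partitions 3: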

WARNING: If partitions are increased for a topic that has a key, the partition logic or ordering of the messages will be affected

Adding partitions succeeded!

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --describe --zookeeper mcwkafka02:2181 --topic mcwtopic1

Topic:mcwtopic1 PartitionCount:3 ReplicationFactor:3 Configs:

Topic: mcwtopic1 Partition: 0 Leader: 0 Replicas: 0,1,2 Isr: 0,1,2

Topic: mcwtopic1 Partition: 1 Leader: 1 Replicas: 1,2,0 Isr: 1,2,0

Topic: mcwtopic1 Partition: 2 Leader: 2 Replicas: 2,0,1 Isr: 2,0,1

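In the tool, right-click and refresh the partitions node to see the new partition; refreshing topics is not enough

5) Delete a topic

On older Kafka versions, first set delete.topic.enable=true in server.properties, then delete the topic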

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --zookeeper mcwkafka02:2181 --list

mcwtopic1

test

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --delete --zookeeper mcwkafka02:2181 --topic test

Topic test is marked for deletion.

Note: This will have no impact if delete.topic.enable is not set to true.

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --zookeeper mcwkafka02:2181 --list

mcwtopic1

[root@mcwkafka01 ~]$

The topic has been deleted
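Since the delete command only marks the topic for deletion unless delete.topic.enable is true, it can help to check the broker configuration before deleting. A minimal sketch that reads a key from a properties file; prop_get and topic_deletion_enabled are hypothetical helper names, and a missing key is reported as "not explicitly enabled" even though newer brokers may default to true:

```shell
# Print the value of a key from a Java-style properties file (first match only).
# prop_get is a hypothetical helper, not a Kafka tool.
prop_get() {
  local file=$1 key=$2
  awk -F= -v key="$key" '$1 == key { sub(/^[^=]*=/, ""); print; exit }' "$file"
}

# Succeed only if delete.topic.enable is explicitly set to true in the file.
topic_deletion_enabled() {
  [ "$(prop_get "$1" delete.topic.enable)" = "true" ]
}

# Example use (path taken from this walkthrough):
#   topic_deletion_enabled /data/kafka/config/server.properties && echo "deletion enabled"
```

The check has to pass on every broker, because each one reads its own server.properties.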

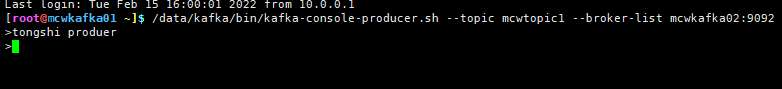

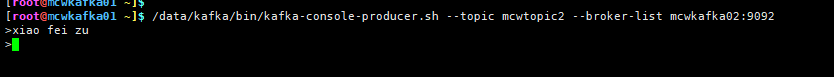

6) Producer

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-topics.sh --zookeeper mcwkafka02:2181 --list

mcwtopic1

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-console-producer.sh --topic mcwtopic1 --broker-list mcwkafka02:9092

>wo shi

mac>hangwei^H^H^H^C[root@mcwkafka01 ~]$ ^C

[root@mcwkafka01 ~]$

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-console-producer.sh --topic mcwtopic1 --broker-list mcwkafka02:9092

>wo shi

>machangwei

>

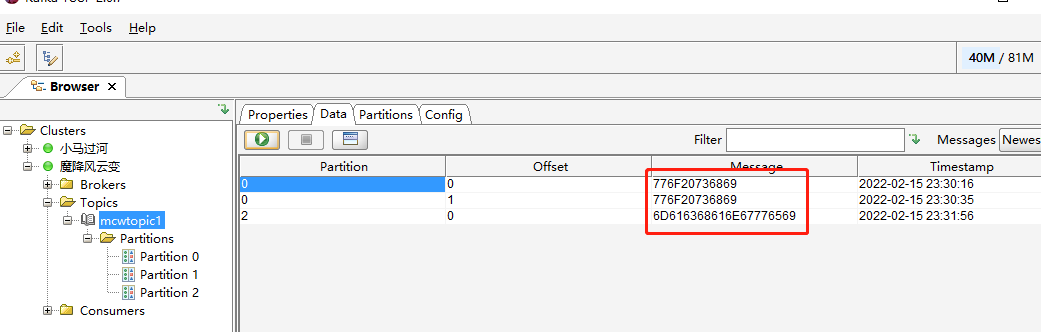

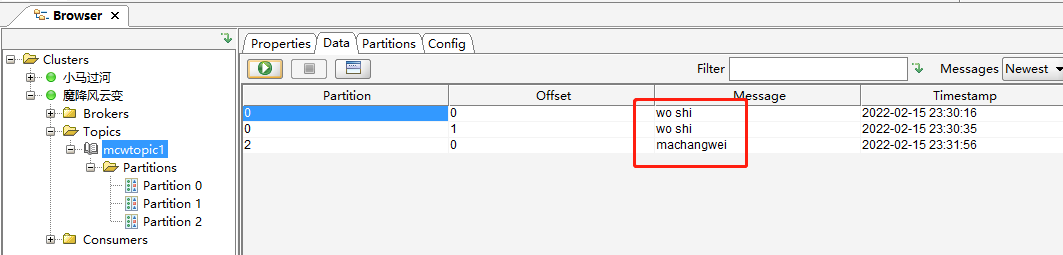

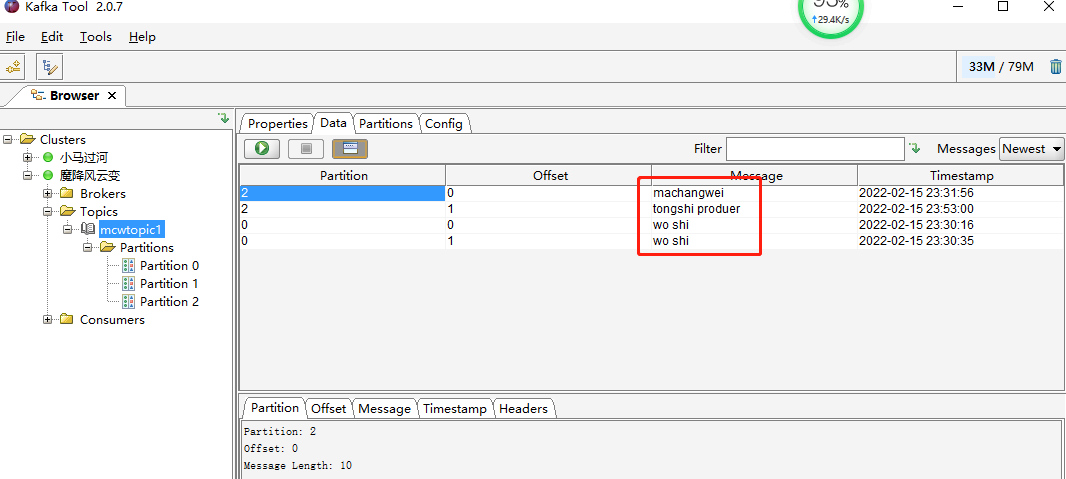

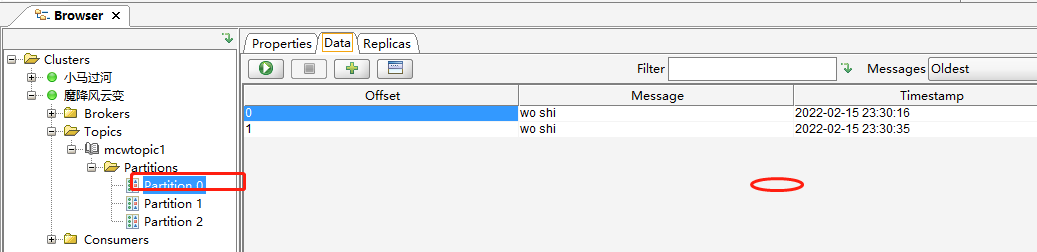

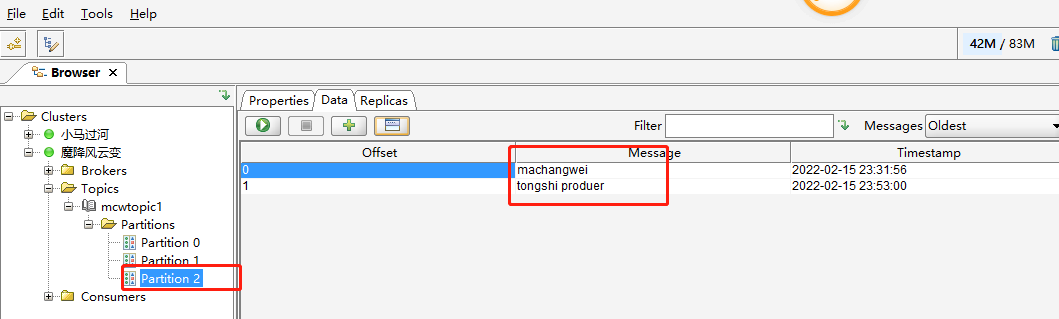

可以从工具中看到消息

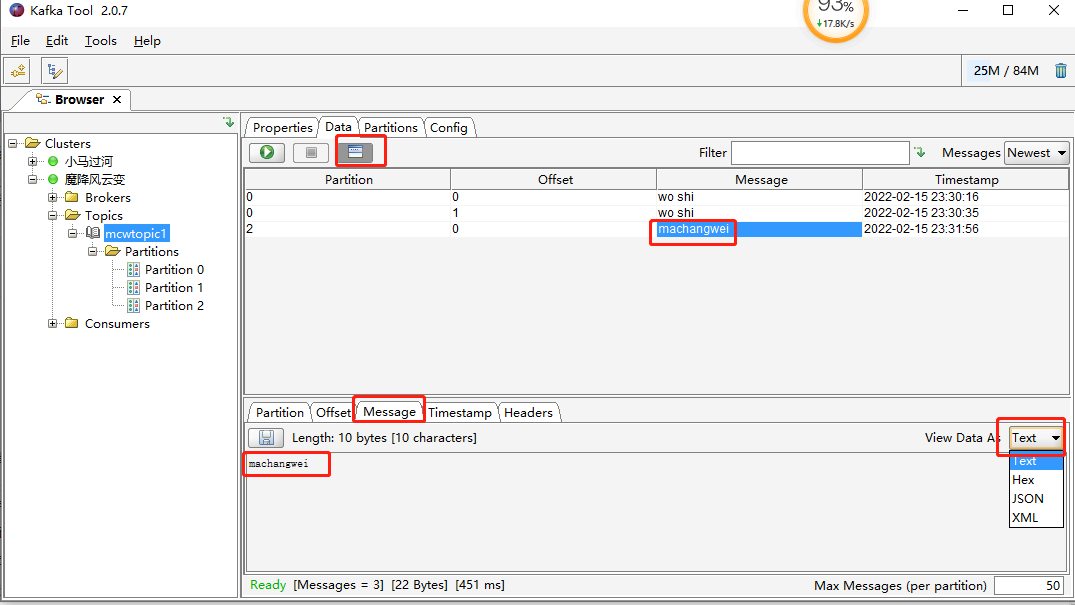

把这个topic的属性中修改为字符串,点击更新

然后就可以看到这个消息解码后的内容了

选中消息,可以看道下面有消息的内容,可以全选消息内容复制出来

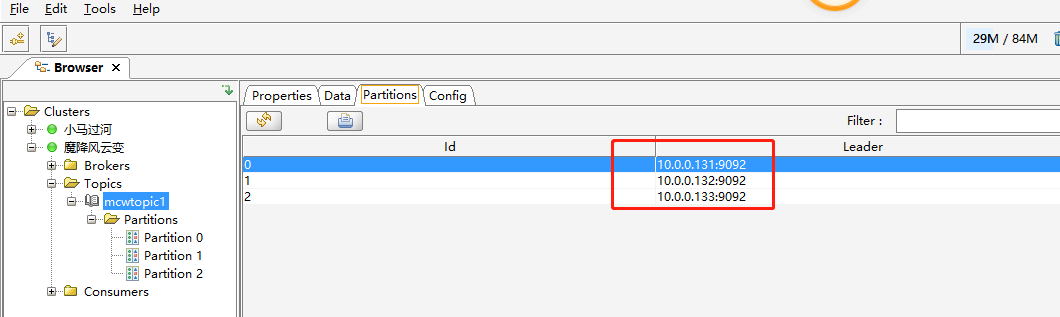

partitions可以看到分区对应的kafka节点,及时命令行ctrl+c退出>消息的编辑,但是发出去的消息还在kafka里面的

,

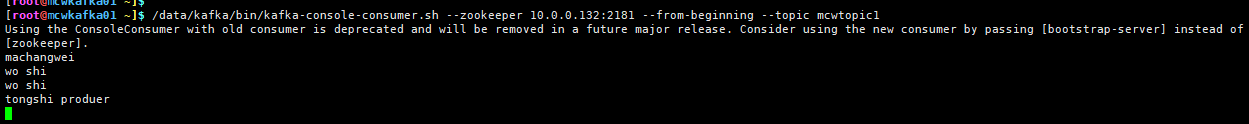

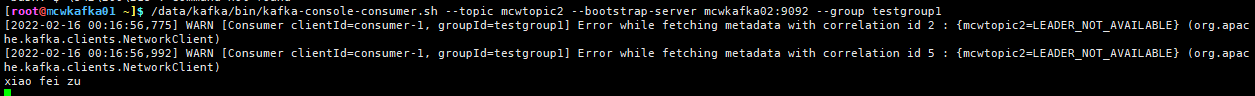

7)消费者以及工具使用

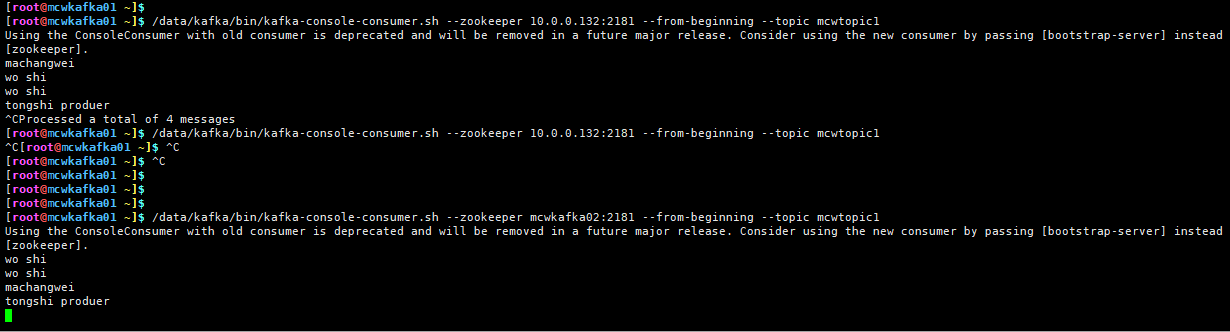

#消费消息

kafka-console-consumer.sh --zookeeper hadoop-001:2181 --from-beginning --topic first

当执行了消费者之后,如果没有消息产生,它一直卡在这里不动,监听新的消息到来

[root@mcwkafka01 ~]$ /data/kafka/bin/kafka-console-consumer.sh --zookeeper 10.0.0.132:2181 --from-beginning --topic mcwtopic1

Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].

machangwei

wo shi

wo shi

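When the producer side produces data

the command-line consumer picks up the message at the same time and prints it

Consuming a message does not remove it: the message still exists, and it stays in Kafka even after the command-line consumer exits with Ctrl+C

View only the messages in partition 0

View only the messages in partition 2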

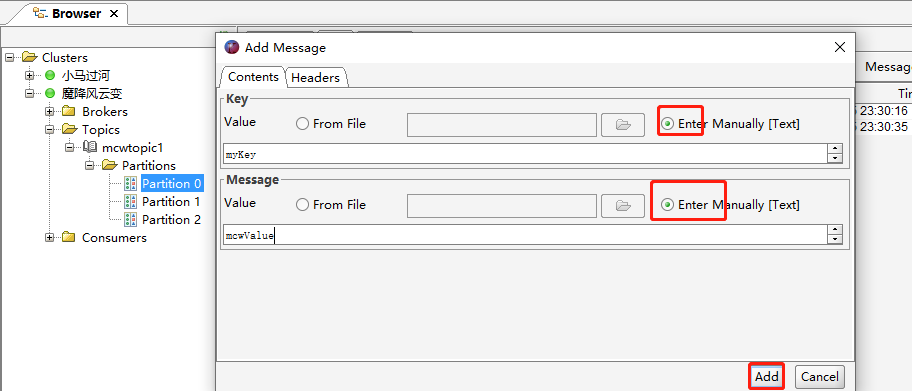

点击添加消息:

添加

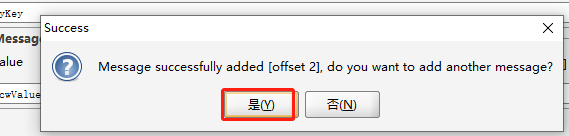

当点击了add之后,已经添加了,如果点击是,可以进行添加下一个

当前所有的消息,分区1是在主节点上,貌似默认它没选择zk主节点上去保存消息

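8) Question: why can't a newly started consumer consume the messages already in the topic?

A console consumer started without --from-beginning, and with no committed offsets for its group, begins reading at the end of each partition, so it only sees messages produced after it starts. Add --from-beginning to read the existing messages.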

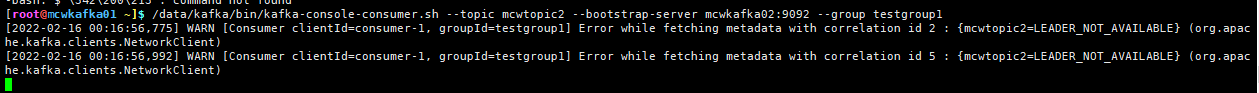

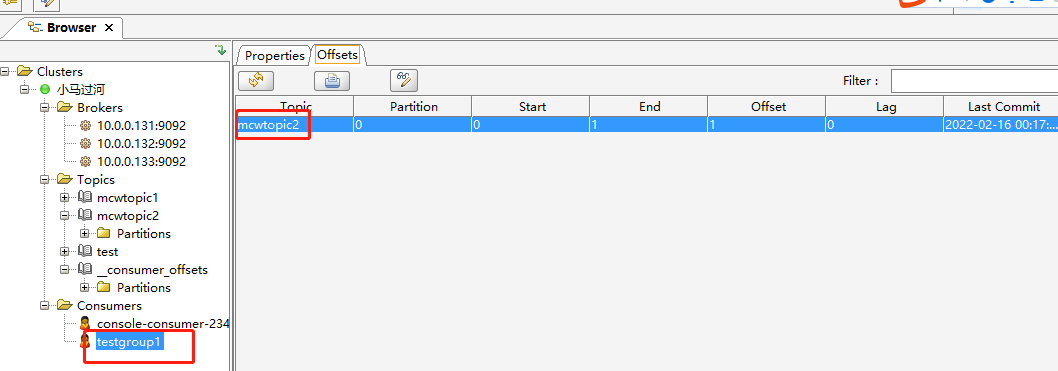

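9) Consumer groups:

1) Start the consumer with a configuration file; the group name is read from the group.id setting in that file

The configuration file here is kafka-2.4.1/config/consumer.properties

In that file, set the group name via group.id

2) Specify the group name with --group when starting the consumer

kafka-console-consumer.sh --topic second --bootstrap-server hadoop102:9092 --group testgroup

Run the consumer group command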

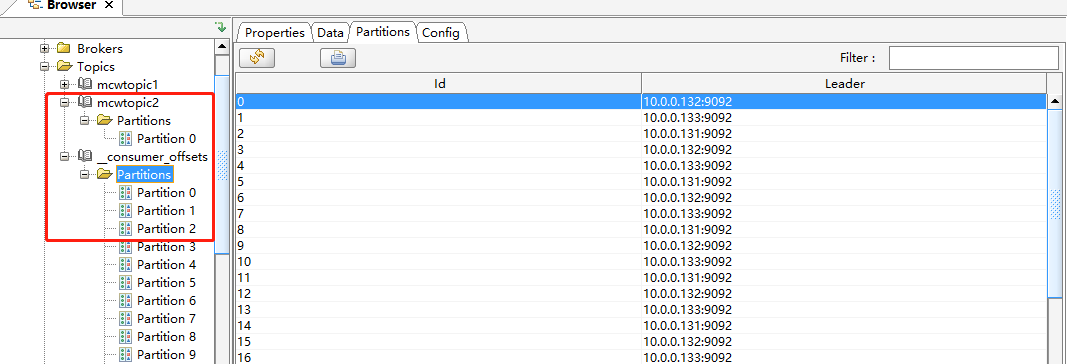

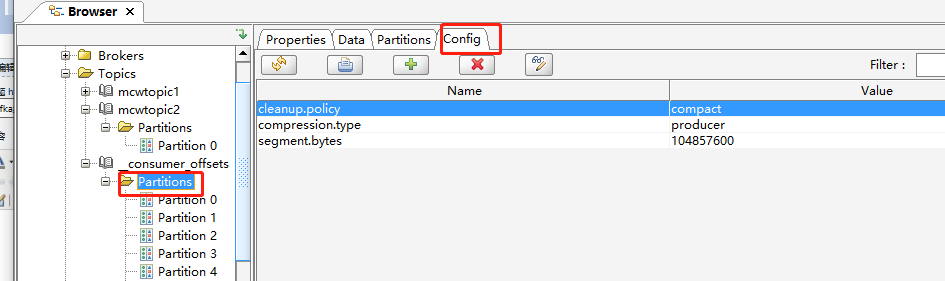

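Two new topics appear immediately: one has a single partition, and the other, the underscore-prefixed consumer offsets topic, has partitions 0-49.

Its configuration can be viewed

Produce a message to topic2

The consumer group then receives it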

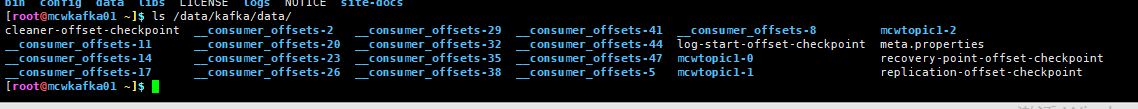

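10) Topics as seen from the data directory

1) Looking at it from the storage side

The offset data is stored on every Kafka node under the Kafka data directory (log.dirs, /data/kafka/data in this setup), in directories named __consumer_offsets-xxx

Partition 0 has a replica on host 1, so a directory for topic1 partition 0 appears there, meaning that node holds one replica of partition 0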
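Which of the 50 __consumer_offsets-xxx directories holds a given group's offsets can be worked out by hand. A minimal sketch, assuming the broker maps a group to a partition as the absolute value of the Java String.hashCode of the group id, modulo the partition count (50 matches the 0-49 range seen above); this formula is stated as an assumption about the broker's behaviour, the function names are hypothetical, and only ASCII group names are handled:

```shell
# Compute Java's String.hashCode for an ASCII string, as a signed 32-bit value.
java_hash() {
  local s=$1 h=0 i c
  for ((i = 0; i < ${#s}; i++)); do
    printf -v c '%d' "'${s:i:1}"          # numeric code of the character
    h=$(( (31 * h + c) & 0xFFFFFFFF ))     # keep only the low 32 bits
  done
  # Reinterpret the unsigned 32-bit value as a signed Java int.
  if (( h >= 0x80000000 )); then h=$(( h - 0x100000000 )); fi
  echo "$h"
}

# Assumed mapping: abs(hashCode(group)) % partition count (50 unless given).
offsets_partition() {
  local h n=${2:-50}
  h=$(java_hash "$1")
  if (( h == -2147483648 )); then h=0; fi  # minimum int has no positive counterpart
  if (( h < 0 )); then h=$(( -h )); fi
  echo $(( h % n ))
}

# Example use: offsets_partition testgroup
```

The printed number is the xxx suffix of the __consumer_offsets-xxx directory to look in, on whichever brokers hold a replica of that partition.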

ZooKeeper commands

[root@mcwkafka03 ~]$ /data/zk/bin/zkCli.sh -server 10.0.0.132:2181 # connect to ZooKeeper

Connecting to 10.0.0.132:2181

2022-02-17 02:10:11,476 [myid:] - INFO [main:Environment@109] - Client environment:zookeeper.version=3.5.9-83df9301aa5c2a5d284a9940177808c01bc35cef, built on 01/06/2021 19:49 GMT

2022-02-17 02:10:11,481 [myid:] - INFO [main:Environment@109] - Client environment:host.name=mcwkafka03

2022-02-17 02:10:11,481 [myid:] - INFO [main:Environment@109] - Client environment:java.version=1.8.0_202

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:java.vendor=Oracle Corporation

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:java.home=/opt/jdk/jre

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:java.class.path=/data/zk/bin/../zookeeper-server/target/classes:/data/zk/bin/../build/classes:/data/zk/bin/../zookeeper-server/target/lib/*.jar:/data/zk/bin/../build/lib/*.jar:/data/zk/bin/../lib/zookeeper-jute-3.5.9.jar:/data/zk/bin/../lib/zookeeper-3.5.9.jar:/data/zk/bin/../lib/slf4j-log4j12-1.7.25.jar:/data/zk/bin/../lib/slf4j-api-1.7.25.jar:/data/zk/bin/../lib/netty-transport-native-unix-common-4.1.50.Final.jar:/data/zk/bin/../lib/netty-transport-native-epoll-4.1.50.Final.jar:/data/zk/bin/../lib/netty-transport-4.1.50.Final.jar:/data/zk/bin/../lib/netty-resolver-4.1.50.Final.jar:/data/zk/bin/../lib/netty-handler-4.1.50.Final.jar:/data/zk/bin/../lib/netty-common-4.1.50.Final.jar:/data/zk/bin/../lib/netty-codec-4.1.50.Final.jar:/data/zk/bin/../lib/netty-buffer-4.1.50.Final.jar:/data/zk/bin/../lib/log4j-1.2.17.jar:/data/zk/bin/../lib/json-simple-1.1.1.jar:/data/zk/bin/../lib/jline-2.14.6.jar:/data/zk/bin/../lib/jetty-util-ajax-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-util-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-servlet-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-server-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-security-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-io-9.4.35.v20201120.jar:/data/zk/bin/../lib/jetty-http-9.4.35.v20201120.jar:/data/zk/bin/../lib/javax.servlet-api-3.1.0.jar:/data/zk/bin/../lib/jackson-databind-2.10.5.1.jar:/data/zk/bin/../lib/jackson-core-2.10.5.jar:/data/zk/bin/../lib/jackson-annotations-2.10.5.jar:/data/zk/bin/../lib/commons-cli-1.2.jar:/data/zk/bin/../lib/audience-annotations-0.5.0.jar:/data/zk/bin/../zookeeper-*.jar:/data/zk/bin/../zookeeper-server/src/main/resources/lib/*.jar:/data/zk/bin/../conf:.:/opt/jdk/lib:/opt/jdk/jre/lib:/opt/jdk/lib/tools.jar

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:java.io.tmpdir=/tmp

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:java.compiler=<NA>

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:os.name=Linux

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:os.arch=amd64

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:os.version=3.10.0-693.el7.x86_64

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:user.name=root

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:user.home=/root

2022-02-17 02:10:11,484 [myid:] - INFO [main:Environment@109] - Client environment:user.dir=/root

2022-02-17 02:10:11,485 [myid:] - INFO [main:Environment@109] - Client environment:os.memory.free=13MB

2022-02-17 02:10:11,486 [myid:] - INFO [main:Environment@109] - Client environment:os.memory.max=247MB

2022-02-17 02:10:11,486 [myid:] - INFO [main:Environment@109] - Client environment:os.memory.total=15MB

2022-02-17 02:10:11,503 [myid:] - INFO [main:ZooKeeper@868] - Initiating client connection, connectString=10.0.0.132:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@4c98385c

2022-02-17 02:10:11,523 [myid:] - INFO [main:X509Util@79] - Setting -D jdk.tls.rejectClientInitiatedRenegotiation=true to disable client-initiated TLS renegotiation

2022-02-17 02:10:11,539 [myid:] - INFO [main:ClientCnxnSocket@237] - jute.maxbuffer value is 4194304 Bytes

2022-02-17 02:10:11,573 [myid:] - INFO [main:ClientCnxn@1653] - zookeeper.request.timeout value is 0. feature enabled=

Welcome to ZooKeeper!

2022-02-17 02:10:11,623 [myid:10.0.0.132:2181] - INFO [main-SendThread(10.0.0.132:2181):ClientCnxn$SendThread@1112] - Opening socket connection to server mcwkafka02/10.0.0.132:2181. Will not attempt to authenticate using SASL (unknown error)

JLine support is enabled

2022-02-17 02:10:12,035 [myid:10.0.0.132:2181] - INFO [main-SendThread(10.0.0.132:2181):ClientCnxn$SendThread@959] - Socket connection established, initiating session, client: /10.0.0.133:37512, server: mcwkafka02/10.0.0.132:2181

2022-02-17 02:10:12,094 [myid:10.0.0.132:2181] - INFO [main-SendThread(10.0.0.132:2181):ClientCnxn$SendThread@1394] - Session establishment complete on server mcwkafka02/10.0.0.132:2181, sessionid = 0x200005b7a3d0005, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: 10.0.0.132:2181(CONNECTED) 0] ls / # list what ZooKeeper contains

[admin, brokers, cluster, config, consumers, controller, controller_epoch, isr_change_notification, latest_producer_id_block, log_dir_event_notification, zookeeper]

[zk: 10.0.0.132:2181(CONNECTED) 1] help

ZooKeeper -server host:port cmd args

addauth scheme auth

close

config [-c] [-w] [-s]

connect host:port

create [-s] [-e] [-c] [-t ttl] path [data] [acl]

delete [-v version] path

deleteall path

delquota [-n|-b] path

get [-s] [-w] path

getAcl [-s] path

history

listquota path

ls [-s] [-w] [-R] path

ls2 path [watch]

printwatches on|off

quit

reconfig [-s] [-v version] [[-file path] | [-members serverID=host:port1:port2;port3[,...]*]] | [-add serverId=host:port1:port2;port3[,...]]* [-remove serverId[,...]*]

redo cmdno

removewatches path [-c|-d|-a] [-l]

rmr path

set [-s] [-v version] path data

setAcl [-s] [-v version] [-R] path acl

setquota -n|-b val path

stat [-w] path

sync path

Command not found: Command not found help

[zk: 10.0.0.132:2181(CONNECTED) 2] ls /

[admin, brokers, cluster, config, consumers, controller, controller_epoch, isr_change_notification, latest_producer_id_block, log_dir_event_notification, zookeeper]

[zk: 10.0.0.132:2181(CONNECTED) 3] ls brokers

Path must start with / character

[zk: 10.0.0.132:2181(CONNECTED) 4] ls /brokers

[ids, seqid, topics]

[zk: 10.0.0.132:2181(CONNECTED) 5] ls /brokers/ids

[0, 1, 2]

[zk: 10.0.0.132:2181(CONNECTED) 6] ls /brokers/ids/0

[]

[zk: 10.0.0.132:2181(CONNECTED) 7] get /brokers/ids/0 # read the data stored in the znode

{"listener_security_protocol_map":{"PLAINTEXT":"PLAINTEXT"},"endpoints":["PLAINTEXT://10.0.0.131:9092"],"jmx_port":-1,"host":"10.0.0.131","timestamp":"1645063143117","port":9092,"version":4}

[zk: 10.0.0.132:2181(CONNECTED) 8] get /brokers/ids/1

{"listener_security_protocol_map":{"PLAINTEXT":"PLAINTEXT"},"endpoints":["PLAINTEXT://10.0.0.132:9092"],"jmx_port":-1,"host":"10.0.0.132","timestamp":"1645063166889","port":9092,"version":4}

[zk: 10.0.0.132:2181(CONNECTED) 9] get /brokers/ids/3

org.apache.zookeeper.KeeperException$NoNodeException: KeeperErrorCode = NoNode for /brokers/ids/3

[zk: 10.0.0.132:2181(CONNECTED) 10] get /brokers/ids/2

{"listener_security_protocol_map":{"PLAINTEXT":"PLAINTEXT"},"endpoints":["PLAINTEXT://10.0.0.133:9092"],"jmx_port":-1,"host":"10.0.0.133","timestamp":"1645034387402","port":9092,"version":4}

[zk: 10.0.0.132:2181(CONNECTED) 11] get /brokers/ids/

Path must not end with / character

[zk: 10.0.0.132:2181(CONNECTED) 12] ls cluster

Path must start with / character

[zk: 10.0.0.132:2181(CONNECTED) 13] ls /cluster

[id]

[zk: 10.0.0.132:2181(CONNECTED) 14] ls /cluster/id

[]

[zk: 10.0.0.132:2181(CONNECTED) 15] get /cluster/id

{"version":"1","id":"SoDXd6jIQCuEIfnSv_FQYg"}

[zk: 10.0.0.132:2181(CONNECTED) 16] ls /zookeeper

[config, quota]

[zk: 10.0.0.132:2181(CONNECTED) 17] ls /admin

[delete_topics]

[zk: 10.0.0.132:2181(CONNECTED) 18] ls /admin/delete_topics

[]

[zk: 10.0.0.132:2181(CONNECTED) 19] get /admin/delete_topics

null

[zk: 10.0.0.132:2181(CONNECTED) 20] ls /con

config consumers controller controller_epoch

[zk: 10.0.0.132:2181(CONNECTED) 20] ls /consumers

[console-consumer-23437]

To create a new znode, use create /zk myData. This creates a new znode named "zk" and stores the string "myData" in it:

[zk: 10.0.0.132:2181(CONNECTED) 22] create /zk myData

Created /zk

[zk: 10.0.0.132:2181(CONNECTED) 24] ls /

[admin, brokers, cluster, config, consumers, controller, controller_epoch, isr_change_notification, latest_producer_id_block, log_dir_event_notification, zk, zookeeper]

[zk: 10.0.0.132:2181(CONNECTED) 25] ls /zk

[]

[zk: 10.0.0.132:2181(CONNECTED) 26] get /zk

myData

[zk: 10.0.0.132:2181(CONNECTED) 27] ls /con

config consumers controller controller_epoch

[zk: 10.0.0.132:2181(CONNECTED) 27] ls /config

[changes, clients, topics]

[zk: 10.0.0.132:2181(CONNECTED) 28] ls /config/topics

[__consumer_offsets, mcwtopic1, mcwtopic2, test]

[zk: 10.0.0.132:2181(CONNECTED) 29] ls /config/topics/mcwtopic1

[]

[zk: 10.0.0.132:2181(CONNECTED) 30] get /config/topics/mcwtopic

mcwtopic1 mcwtopic2

[zk: 10.0.0.132:2181(CONNECTED) 30] get /config/topics/mcwtopic1

{"version":1,"config":{}}

[zk: 10.0.0.132:2181(CONNECTED) 31] get /zk

myData

[zk: 10.0.0.132:2181(CONNECTED) 32] set /zk mcw220217

[zk: 10.0.0.132:2181(CONNECTED) 33] get /zk

mcw220217

[zk: 10.0.0.132:2181(CONNECTED) 34] delete /zk # delete the znode created above

[zk: 10.0.0.132:2181(CONNECTED) 35] get /zk

org.apache.zookeeper.KeeperException$NoNodeException: KeeperErrorCode = NoNode for /zk

[zk: 10.0.0.132:2181(CONNECTED) 36] ls /

[admin, brokers, cluster, config, consumers, controller, controller_epoch, isr_change_notification, latest_producer_id_block, log_dir_event_notification, zookeeper]

[zk: 10.0.0.132:2181(CONNECTED) 37] quit # exit

WATCHER::

WatchedEvent state:Closed type:None path:null

2022-02-17 02:21:43,519 [myid:] - INFO [main:ZooKeeper@1422] - Session: 0x200005b7a3d0005 closed

2022-02-17 02:21:43,520 [myid:] - INFO [main-EventThread:ClientCnxn$EventThread@524] - EventThread shut down for session: 0x200005b7a3d0005

[root@mcwkafka03 ~]$
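The broker znodes read above with get /brokers/ids/N hold JSON, and the endpoints field is usually the part of interest. A minimal sketch that pulls the listener URLs out of that JSON with grep, assuming the compact single-line format shown in the session; broker_endpoints is a hypothetical helper name, and a real JSON parser would be more robust:

```shell
# Read broker registration JSON on stdin and print each listener endpoint,
# e.g. PLAINTEXT://host:port. broker_endpoints is a hypothetical helper.
broker_endpoints() {
  grep -o '[A-Z_][A-Z_]*://[^"]*'
}

# Example use; zkCli.sh accepts a command after the server argument,
# as its own help text ("ZooKeeper -server host:port cmd args") indicates:
#   /data/zk/bin/zkCli.sh -server 10.0.0.132:2181 get /brokers/ids/0 | broker_endpoints
```

Running it for ids 0, 1 and 2 gives a quick list of the addresses clients should use as brokers.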

References: https://www.cnblogs.com/kevingrace/p/9021508.html

https://www.cnblogs.com/traveller-hzq/p/14105128.html
