Error (unresolved): Opening socket connection to server master/192.168.52.26:2181. Will not attempt to authenticate using SASL (unknown error)

Background:

In a CDH cluster, Kafka is integrated with Flume so that Flume consumes the data published to Kafka.

Kafka starts normally, but the error appears when Flume is started.

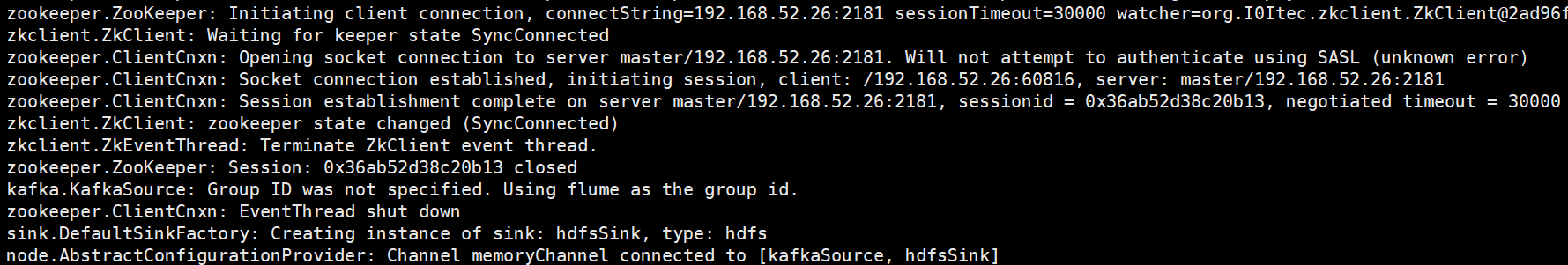

Error output:

DH-5.15.-.cdh5.15.1.p0./lib/hadoop/lib/native:/opt/cloudera/parcels/CDH-5.15.-.cdh5.15.1.p0./lib/hbase/bin/../lib/native/Linux-amd64-

// :: INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp

// :: INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

// :: INFO zookeeper.ZooKeeper: Client environment:os.name=Linux

// :: INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64

// :: INFO zookeeper.ZooKeeper: Client environment:os.version=3.10.-.el7.x86_64

// :: INFO zookeeper.ZooKeeper: Client environment:user.name=root

// :: INFO zookeeper.ZooKeeper: Client environment:user.home=/root

// :: INFO zookeeper.ZooKeeper: Client environment:user.dir=/opt/cloudera/parcels/CDH-5.15.-.cdh5.15.1.p0./etc/flume-ng/conf.empty

// :: INFO zookeeper.ZooKeeper: Initiating client connection, connectString=192.168.52.26: sessionTimeout= watcher=org.I0Itec.zkclient.ZkClient@2ad96f71

// :: INFO zkclient.ZkClient: Waiting for keeper state SyncConnected

// :: INFO zookeeper.ClientCnxn: Opening socket connection to server master/192.168.52.26:2181. Will not attempt to authenticate using SASL (unknown error)

// :: INFO zookeeper.ClientCnxn: Socket connection established, initiating session, client: /192.168.52.26:, server: master/192.168.52.26:

// :: INFO zookeeper.ClientCnxn: Session establishment complete on server master/192.168.52.26:, sessionid = 0x36ab52d38c20b13, negotiated timeout =

// :: INFO zkclient.ZkClient: zookeeper state changed (SyncConnected)

// :: INFO zkclient.ZkEventThread: Terminate ZkClient event thread.

// :: INFO zookeeper.ZooKeeper: Session: 0x36ab52d38c20b13 closed

// :: INFO kafka.KafkaSource: Group ID was not specified. Using flume as the group id.

// :: INFO zookeeper.ClientCnxn: EventThread shut down

// :: INFO sink.DefaultSinkFactory: Creating instance of sink: hdfsSink, type: hdfs

// :: INFO node.AbstractConfigurationProvider: Channel memoryChannel connected to [kafkaSource, hdfsSink]

// :: INFO node.Application: Starting new configuration:{ sourceRunners:{kafkaSource=PollableSourceRunner: { source:org.apache.flume.source.kafka.KafkaSource{name:kafkaSource,state:IDLE} counterGroup:{ name:null counters:{} } }} sinkRunners:{hdfsSink=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@414aa552 counterGroup:{ name:null counters:{} } }} channels:{memoryChannel=org.apache.flume.channel.MemoryChannel{name: memoryChannel}} }

// :: INFO node.Application: Starting Channel memoryChannel

// :: INFO node.Application: Waiting for channel: memoryChannel to start. Sleeping for ms

// :: INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: CHANNEL, name: memoryChannel: Successfully registered new MBean.

// :: INFO instrumentation.MonitoredCounterGroup: Component type: CHANNEL, name: memoryChannel started

// :: INFO node.Application: Starting Sink hdfsSink

// :: INFO node.Application: Starting Source kafkaSource

// :: INFO kafka.KafkaSource: Starting org.apache.flume.source.kafka.KafkaSource{name:kafkaSource,state:IDLE}...

// :: INFO zookeeper.ZooKeeper: Initiating client connection, connectString=192.168.52.26: sessionTimeout= watcher=org.I0Itec.zkclient.ZkClient@6bbdc6fd

// :: INFO zkclient.ZkEventThread: Starting ZkClient event thread.

// :: INFO zkclient.ZkClient: Waiting for keeper state SyncConnected

// :: INFO zookeeper.ClientCnxn: Opening socket connection to server master/192.168.52.26:. Will not attempt to authenticate using SASL (unknown error)

// :: INFO zookeeper.ClientCnxn: Socket connection established, initiating session, client: /192.168.52.26:, server: master/192.168.52.26:

// :: INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SINK, name: hdfsSink: Successfully registered new MBean.

// :: INFO instrumentation.MonitoredCounterGroup: Component type: SINK, name: hdfsSink started

// :: INFO zookeeper.ClientCnxn: Session establishment complete on server master/192.168.52.26:, sessionid = 0x36ab52d38c20b14, negotiated timeout =

// :: INFO zkclient.ZkClient: zookeeper state changed (SyncConnected)

// :: INFO consumer.ConsumerConfig: ConsumerConfig values:

auto.commit.interval.ms =

auto.offset.reset = latest

bootstrap.servers = [master:, worker1:, worker2:]

check.crcs = true

client.id =

connections.max.idle.ms =

enable.auto.commit = false

exclude.internal.topics = true

fetch.max.bytes =

fetch.max.wait.ms =

fetch.min.bytes =

group.id = flume

heartbeat.interval.ms =

interceptor.classes = null

internal.leave.group.on.close = true

key.deserializer = class org.apache.kafka.common.serialization.StringDeserializer

max.partition.fetch.bytes =

max.poll.interval.ms =

max.poll.records =

metadata.max.age.ms =

metric.reporters = []

metrics.num.samples =

metrics.recording.level = INFO

metrics.sample.window.ms =

partition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor]

receive.buffer.bytes =

reconnect.backoff.ms =

request.timeout.ms =

retry.backoff.ms =

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin =

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes =

session.timeout.ms =

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1., TLSv1., TLSv1]

ssl.endpoint.identification.algorithm = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

value.deserializer = class org.apache.kafka.common.serialization.ByteArrayDeserializer

// :: WARN consumer.ConsumerConfig: The configuration 'timeout.ms' was supplied but isn't a known config.

// :: INFO utils.AppInfoParser: Kafka version : 0.10.-kafka-2.2.

// :: INFO utils.AppInfoParser: Kafka commitId : unknown
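
Almost every line in the output above is logged at INFO level, so anything more severe is easy to miss. The following is a minimal sketch for filtering a saved log by level. It assumes log4j-style lines in which the level appears as a standalone token (as in the output above); the function name `lines_at_or_above` is made up for this illustration and is not part of Flume or Kafka.

```python
import re

# Log levels in increasing order of severity.
LEVELS = ["TRACE", "DEBUG", "INFO", "WARN", "ERROR", "FATAL"]

# Matches the first level name that appears as a standalone token in a line,
# e.g. "... WARN consumer.ConsumerConfig: ...".
LEVEL_RE = re.compile(r"\b(" + "|".join(LEVELS) + r")\b")


def lines_at_or_above(lines, min_level="WARN"):
    """Return the lines whose first level token is at least min_level.

    Hypothetical helper for illustration only. Lines without any level
    token (for example most ConsumerConfig key/value lines) are skipped.
    """
    threshold = LEVELS.index(min_level)
    hits = []
    for line in lines:
        match = LEVEL_RE.search(line)
        if match and LEVELS.index(match.group(1)) >= threshold:
            hits.append(line.rstrip("\n"))
    return hits


# Example usage, assuming the Flume output was saved to a file of your choice:
#
#     with open("flume.log", encoding="utf-8") as f:
#         for hit in lines_at_or_above(f):
#             print(hit)
```

Applied to the output above, the only line such a filter would keep is the `timeout.ms` warning from `consumer.ConsumerConfig`; the same filter can be run over the Kafka broker logs.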

Cause:

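The error does not originate in Flume; the fault lies with Kafka. Kafka fails for some reason, and Flume then reports an error when it connects to Kafka. The message in the title is itself logged at INFO level, and in the output above the ZooKeeper session is established right after it, so fixing the problem means locating and resolving the underlying Kafka error first.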
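
One quick first step when narrowing this down is to confirm that the ZooKeeper and Kafka ports are reachable from the Flume host at all. The sketch below uses only Python's standard `socket` module. The host names `master`, `worker1` and `worker2` and the ZooKeeper port 2181 come from the log above; the Kafka port 9092 is an assumption (it is Kafka's default, but the broker ports are cut off in the log), and the helper names are made up for this example.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False


def report(targets, timeout=3.0):
    """Return one human-readable status line per (host, port) pair."""
    results = []
    for host, port in targets:
        status = "reachable" if can_connect(host, port, timeout) else "NOT reachable"
        results.append(f"{host}:{port} {status}")
    return results


# Example usage. 2181 is the ZooKeeper port from the log; 9092 is an ASSUMED
# Kafka listener port, so replace it with the port your brokers actually use:
#
#     for line in report([("master", 2181), ("master", 9092),
#                         ("worker1", 9092), ("worker2", 9092)]):
#         print(line)
```

A successful TCP connection only shows that something is listening on the port. It does not prove that the broker is healthy, so the Kafka broker logs still need to be checked.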

Solution:

Not resolved yet.
