iOS: 并发编程的几个知识点

iOS 多线程问题

查阅的大部分资料都是英文的,整理完毕之后,想翻译成中文,却发现很多名字翻译成中文很难表述清楚。

所以直接把整理好的资料发出来,大家就当顺便学习学习英语。

1. Thread Safe Vs Main Thread Safe

Main Thread Safe means only safe execute on main thread;

Thread Safe means you can modify on any thread simultaneously;

2. ConditionLock Vs Condition

NSCondition

A condition variable whose semantics follow those used for POSIX-style conditions.

A condition is another type of semaphore that allows threads to signal each other when a certain condition is true. Conditions are typically used to indicate the availability of a resource or to ensure that tasks are performed in a specific order. When a thread tests a condition, it blocks unless that condition is already true. It remains blocked until some other thread explicitly changes and signals the condition. The difference between a condition and a mutex lock is that multiple threads may be permitted access to the condition at the same time. The condition is more of a gatekeeper that lets different threads through the gate depending on some specified criteria.

Due to the subtleties involved in implementing operating systems, condition locks are permitted to return with spurious success even if they were not actually signaled by your code. To avoid problems caused by these spurious signals, you should always use a predicate in conjunction with your condition lock.

When a thread waits on a condition, the condition object unlocks its lock and blocks the thread. When the condition is signaled, the system wakes up the thread. The condition object then reacquires its lock before returning from the wait or waitUntilDate: method. Thus, from the point of view of the thread, it is as if it always held the lock.

A boolean predicate is an important part of the semantics of using conditions because of the way signaling works. Signaling a condition does not guarantee that the condition itself is true. Using a predicate ensures that these spurious signals do not cause you to perform work before it is safe to do so. The predicate itself is simply a flag or other variable in your code that you test in order to acquire a Boolean result.

The semantics for using an NSCondition object is as follows:

- Lock the condition object.

- Test a boolean predicate. (This predicate is a boolean flag or other variable in your code that indicates whether it is safe to perform the task protected by the condition.)

- If the boolean predicate is false, call the condition object’s wait

or waitUntilDate: method to block the thread. Upon returning from these methods, go to step 2 to retest your boolean predicate. (Continue waiting and retesting the predicate until it is true.) - If the boolean predicate is true, perform the task.

- Optionally update any predicates (or signal any conditions) affected by your task.

- When your task is done, unlock the condition object.

lock the condition

while (!(boolean_predicate)) {

wait on condition

}

do protected work

(optionally, signal or broadcast the condition again or change a predicate value)

unlock the condition

NSCondition 的底层是通过pthread_mutex + pthread_cond_t 来实现的。

NSConditionLock

A lock that can be associated with specific, user-defined conditions.

Using an NSConditionLock object, you can ensure that a thread can acquire a lock only if a certain condition is met.

An NSConditionLock object defines a mutex lock that can be locked and unlocked with specific values.

NSConditionLock just support condition with a int, if you want support a custom condition value, you should use NSCondition.

用互斥所能不能实现生产者,消费者模型???

答案是: YES

参考资料:

https://web.stanford.edu/class/cs140/cgi-bin/lecture.php?topic=locks

http://blog.ibireme.com/2016/01/16/spinlock_is_unsafe_in_ios/

https://bestswifter.com/ios-lock/

3. @synchronized Directive

The object passed to the @synchronized directive is a unique identifier used to distinguish the protected block.

If you execute the preceding method in two different threads, passing a different object for the anObj parameter on each thread, each would take its lock and continue processing without being blocked by the other. If you pass the same object in both cases, however, one of the threads would acquire the lock first and the other would block until the first thread completed the critical section.

Several Common ways to use @synchronized wrong

- @synchronized(nil)

- @synchronized(][NSObject all] init])

Exceptions With @synchronized

As a precautionary measure, the @synchronized block implicitly adds an exception handler to the protected code. This handler automatically releases the mutex in the event that an exception is thrown. This means that in order to use the @synchronized directive, you must also enable Objective-C exception handling in your code.

If you do not want the additional overhead caused by the implicit exception handler, you should consider using the lock classes.

原理

OBJC_EXPORT int objc_sync_enter(id obj)

OBJC_AVAILABLE(10.3, 2.0, 9.0, 1.0); OBJC_EXPORT int objc_sync_exit(id obj)

OBJC_AVAILABLE(10.3, 2.0, 9.0, 1.0); @synchronized(obj) {

// do work

}

会被编译器转换为:

@try {

objc_sync_enter(obj);

// do work

} @finally {

objc_sync_exit(obj);

}

Example

结论:

- 你调用 sychronized 的每个对象,Objective-C runtime 都会为其分配一个递归锁并存储在哈希表中。

- 如果在 sychronized 内部对象被释放或被设为 nil 看起来都 OK。

- 注意不要向你的 sychronized block 传入 nil!这将会从代码中移走线程安全。

参考资料

http://rykap.com/objective-c/2015/05/09/synchronized/

http://yulingtianxia.com/blog/2015/11/01/More-than-you-want-to-know-about-synchronized/

https://opensource.apple.com/source/objc4/objc4-646/runtime/objc-sync.mm

4. Runloop

Perform selector on a thread

当目标线程runloop未启动时是没有效果的。

启动 Runloop

If no input sources or timers are attached to the run loop, this method exits immediately;

Manually removing all known input sources and timers from the run loop is not a guarantee that the run loop will exit.macOS can install and remove additional input sources as needed to process requests targeted at the receiver’s thread. Those sources could therefore prevent the run loop from exiting.

The Run Loop Sequence of Events

Each time you run it, your thread’s run loop processes pending events and generates notifications for any attached observers. The order in which it does this is very specific and is as follows:

- Notify observers that the run loop has been entered.

- Notify observers that any ready timers are about to fire.

- Notify observers that any input sources that are not port based are about to fire.

- Fire any non-port-based input sources that are ready to fire.

- If a port-based input source is ready and waiting to fire, process the event immediately. Go to step 9.

- Notify observers that the thread is about to sleep.

- Put the thread to sleep until one of the following events occurs:

- An event arrives for a port-based input source.

- A timer fires.

- The timeout value set for the run loop expires.

- The run loop is explicitly woken up.

- Notify observers that the thread just woke up.

- Process the pending event.

- If a user-defined timer fired, process the timer event and restart the loop. Go to step 2.

- If an input source fired, deliver the event.

- If the run loop was explicitly woken up but has not yet timed out, restart the loop. Go to step 2.

- Notify observers that the run loop has exited.

Example: Detect Main Runloop lag with RunloopObserver

参考资料:

http://www.tanhao.me/code/151113.html/

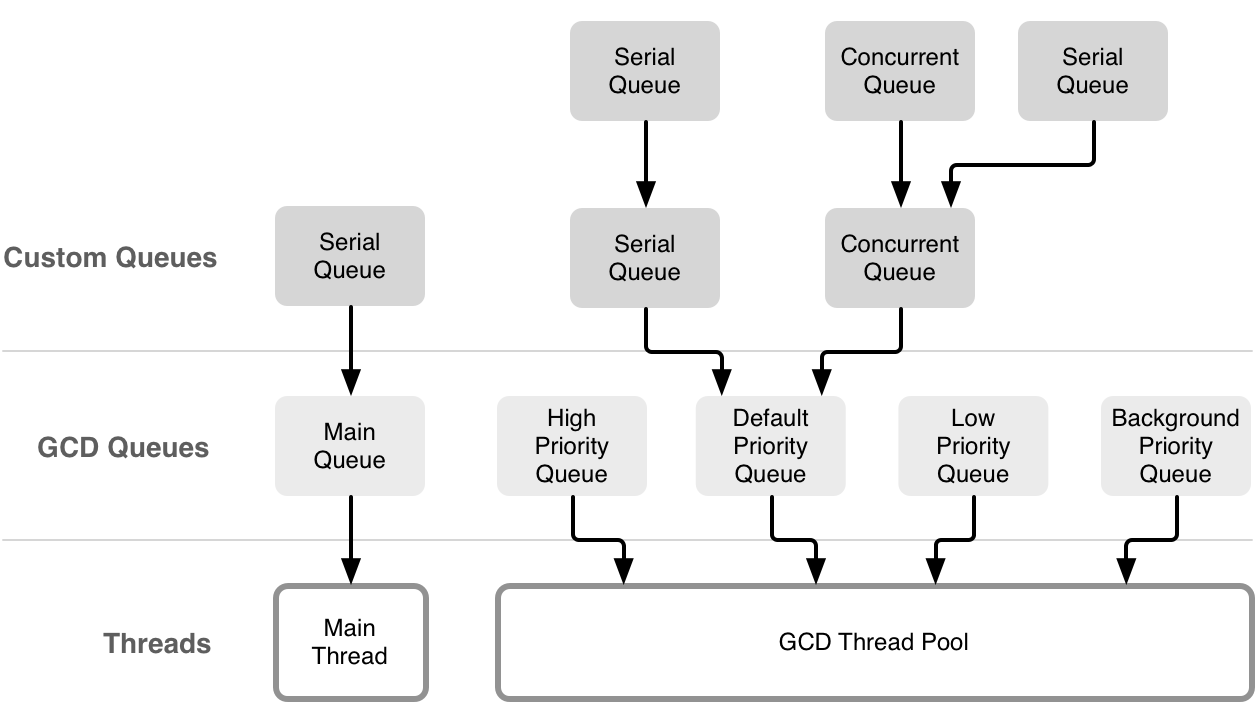

6. Queue Vs Thread

Thread != Queue

A queue doesn't own a thread and a thread is not bound to a queue. There are threads and there are queues. Whenever a queue wants to run a block, it needs a thread but that won't always be the same thread. It just needs any thread for it (this may be a different one each time) and when it's done running blocks (for the moment), the same thread can now be used by a different queue.

There's also no guarantee that a given serial queue will always use the same thread.

The only exception is the main queue:

dispatch_get_main_queue will must run on main thread.

While, main thread may run task at any more than one queue.

7. Dispatch Sync Vs Dispatch Async

dispatch_sync

dispatch_sync

└──dispatch_sync_f

└──_dispatch_sync_f2

└──_dispatch_sync_f_slow

static void _dispatch_sync_f_slow(dispatch_queue_t dq, void *ctxt, dispatch_function_t func) {

_dispatch_thread_semaphore_t sema = _dispatch_get_thread_semaphore();

struct dispatch_sync_slow_s {

DISPATCH_CONTINUATION_HEADER(sync_slow);

} dss = {

.do_vtable = (void*)DISPATCH_OBJ_SYNC_SLOW_BIT,

.dc_ctxt = (void*)sema,

};

_dispatch_queue_push(dq, (void *)&dss); _dispatch_thread_semaphore_wait(sema);

_dispatch_put_thread_semaphore(sema);

// ...

}

Submits a block to a dispatch queue for synchronous execution. Unlike

dispatch_async, this function does not return until the block has finished. Calling this function and targeting the current queue results in deadlock.

Unlike with dispatch_async, no retain is performed on the target queue. Because calls to this function are synchronous, it "borrows" the reference of the caller. Moreover, no Block_copy is performed on the block.

As an optimization, this function invokes the block on the current thread when possible.

dispatch_sync does two things:

- queue a block

- blocks the current thread until the block has finished running

dispatch_async

dispatch_async(dispatch_queue_t queue, dispatch_block_t block) {

dispatch_async_f(dq, _dispatch_Block_copy(work), _dispatch_call_block_and_release);

}

dispatch_async_f(dispatch_queue_t queue, void *context, dispatch_function_t work);

Dead Locks

dispatch_sync(queueA, ^{

dispatch_sync(queueB, ^{

dispatch_sync(queueA, ^{ // DEAD LOCK

// some task

});

});

});

Example:

dispatch_async(QueueA, ^{

someFunctionA(...);

dispatch_sync(QueueB, ^{

someFunctionB(...);

});

});

When QueueA runs the block, it will temporarily own a thread, any thread. someFunctionA(...)will execute on that thread. Now while doing the synchronous dispatch, QueueA cannot do anything else, it has to wait for the dispatch to finish. QueueB on the other hand, will also need a thread to run its block and execute someFunctionB(...). So either QueueA temporarily suspends its thread and QueueB uses some other thread to run the block or QueueA hands its thread over to QueueB (after all it won't need it anyway until the synchronous dispatch has finished) and QueueB directly uses the current thread of QueueA.

Needless to say that the last option is much faster as no thread switch is required. And this is the optimization the sentence talks about. So a dispatch_sync() to a different queue may not always cause a thread switch (different queue, maybe same thread).

But a dispatch_sync() still cannot happen to the same queue (same thread, yes, same queue, no). That's because a queue will execute block after block and when it currently executes a block, it won't execute another one until this one is done. So it executes BlockA and BlockA does a dispatch_sync() of BlockB on the same queue. The queue won't run BlockB as long as it still runs BlockA, but running BlockA won't continue until BlockB has run.

Important: You should never call the dispatch_sync or dispatch_sync_f function from a task that is executing in the same queue that you are planning to pass to the function. This is particularly important for serial queues, which are guaranteed to deadlock, but should also be avoided for concurrent queues.

8. Dispatch set target

The misunderstanding here is that dispatch_get_specific doesn't traverse the stack of nested queues, it traverses the queue targeting lineage.

Modifying the target queue of some objects changes their behavior:

- Dispatch queues:

A dispatch queue's priority is inherited from its target queue.

If you submit a block to a serial queue, and the serial queue’s target queue is a different serial queue, that block is not invoked concurrently with blocks submitted to the target queue or to any other queue with that same target queue.

- Dispatch sources:

A dispatch source's target queue specifies where its event handler and cancellation handler blocks are submitted.

- Dispatch I/O channels:

A dispatch I/O channel's target queue specifies where its I/O operations are executed.

By default, a newly created queue forwards into the default priority global queue.

参考资料:

https://bestswifter.com/deep-gcd/?spm=5176.100239.0.0.vCv2rL

https://stackoverflow.com/questions/23955948/why-did-apple-deprecate-dispatch-get-current-queue

libdispatch 源码:https://opensource.apple.com/tarballs/libdispatch/

9. Read-write Lock in GCD

Use dispatch_barrier_async().

When the barrier block reaches the front of a private concurrent queue, it is not executed immediately. Instead, the queue waits until its currently executing blocks finish executing. At that point, the barrier block executes by itself. Any blocks submitted after the barrier block are not executed until the barrier block completes.

The queue you specify should be a concurrent queue that you create yourself using the dispatch_queue_create function. If the queue you pass to this function is a serial queue or one of the global concurrent queues, this function behaves like the dispatch_async function.

附录:

测试Demo:http://files.cnblogs.com/files/smileEvday/iOSMultiThreadSample.zip

iOS: 并发编程的几个知识点的更多相关文章

- iOS并发编程笔记【转】

线程 使用Instruments的CPU strategy view查看代码如何在多核CPU中执行.创建线程可以使用POSIX 线程API,或者NSThread(封装POSIX 线程API).下面是并 ...

- iOS 并发编程指南

iOS Concurrency Programming Guide iOS 和 Mac OS 传统的并发编程模型是线程,不过线程模型伸缩性不强,而且编写正确的线程代码也不容易.Mac OS 和 iOS ...

- IOS并发编程GCD

iOS有三种多线程编程的技术 (一)NSThread (二)Cocoa NSOperation (三)GCD(全称:Grand Central Dispatch) 这三种编程方式从上到下,抽象度层次 ...

- python并发编程之多进程基础知识点

1.操作系统 位于硬件与应用软件之间,本质也是一种软件,由系统内核和系统接口组成 和进程之间的关系是: 进程只能由操作系统创建 和普通软件区别: 操作系统是真正的控制硬件 应用程序实际在调用操作系统提 ...

- iOS并发编程指南之同步

1.gcd fmdb使用了gcd,它是通过 建立系列化的G-C-D队列 从多线程同时调用调用方法,GCD也会按它接收的块的顺序来执行. fmdb使用的是dispatch_sync,多线程调用a ser ...

- python并发编程之协程知识点

由线程遗留下的问题:GIL导致多个线程不能真正的并行,CPython中多个线程不能并行 单线程实现并发:切换+保存状态 第一种方法:使用yield,yield可以保存状态.yield的状态保存与操作系 ...

- python并发编程之多线程基础知识点

1.线程理论知识 概念:指的是一条流水线的工作过程的总称,是一个抽象的概念,是CPU基本执行单位. 进程和线程之间的区别: 1. 进程仅仅是一个资源单位,其中包含程序运行所需的资源,而线程就相当于车间 ...

- 最强Java并发编程详解:知识点梳理,BAT面试题等

本文原创更多内容可以参考: Java 全栈知识体系.如需转载请说明原处. 知识体系系统性梳理 Java 并发之基础 A. Java进阶 - Java 并发之基础:首先全局的了解并发的知识体系,同时了解 ...

- 干货:Java并发编程必懂知识点解析

本文大纲 并发编程三要素 原子性 原子,即一个不可再被分割的颗粒.在Java中原子性指的是一个或多个操作要么全部执行成功要么全部执行失败. 有序性 程序执行的顺序按照代码的先后顺序执行.(处理器可能会 ...

随机推荐

- zookeeper部署

版本:zookeeper-3.4.5-cdh5.10.0.tar.gz 网址:http://archive-primary.cloudera.com/cdh5/cdh/5/ 1. 解压 $ tar - ...

- 20165223 week2测试补交与总结

测试题二 题目: 在Ubuntu或Windows命令行中 建如下目录结构 Hello.java的内容见附件package isxxxx; (xxxx替换为你的四位学号) 编译运行Hello.java ...

- Thinkphp5 captcha扩展包安装,验证码验证以及点击刷新

首先下载 captcha扩展包,↓ 下载附件,解压到vendor目录下: 然后进入application/config.php添加配置信息: //验证码 'captcha' => ...

- Codeforces1076D. Edge Deletion(最短路树+bfs)

题目链接:http://codeforces.com/contest/1076/problem/D 题目大意: 一个图N个点M条双向边.设各点到点1的距离为di,保证满足条件删除M-K条边之后使得到点 ...

- 三小时学会Kubernetes:容器编排详细指南

三小时学会Kubernetes:容器编排详细指南 如果谁都可以在三个小时内学会Kubernetes,银行为何要为这么简单的东西付一大笔钱? 如果你心存疑虑,我建议你不妨跟着我试一试!在完成本文的学习后 ...

- VS Code汉化

F1搜索 Configure Language { // Defines VS Code's display language. // See https://go.microsoft.com/fw ...

- JS原生 未来元素监听写法

绑定事件的另一种方法是用 addEventListener() 或 attachEvent() 来绑定事件监听函数. addEventListener()函数语法:elementObject.addE ...

- 【SDOI2008】仪仗队

//裸的欧拉函数//y=kx//求不同的k有多少#include<bits/stdc++.h> #define ll long long #define N 40010 using nam ...

- CMakeLists.txt使用

背景:C++代码在编译的过程中需要进行文件的包含,该文主要介绍CMakeLists.txt相关语法 CMake之CMakeLists.txt编写入门

- 收藏:win32 控件之 sysLink控件(超链接)

来源:https://blog.csdn.net/dai_jing/article/details/8683487 手动创建syslink(msdn): CreateWindowEx(, WC_LIN ...